Semantic Segmentation (U-Net) of Archaeological Features in Airborne Laser Scanning—Example of the Białowieża Forest

Abstract

1. Introduction

1.1. Deep Learning

1.2. Deep Learning and ALS Data in Archaeology

1.3. Celtic Fields and Burial Mounds

2. Materials and Methods

2.1. Characteristics of the Area—The Primeval Forest of Białowieża

2.2. Airborne Laser Scanning and Digital Terrain Model

2.3. Digital Terrain Model Visualizations

2.4. Research and Training Area

2.5. Research and Training Datasets

2.6. Classes of the Objects

2.7. Deep Learning—Method Description and Training

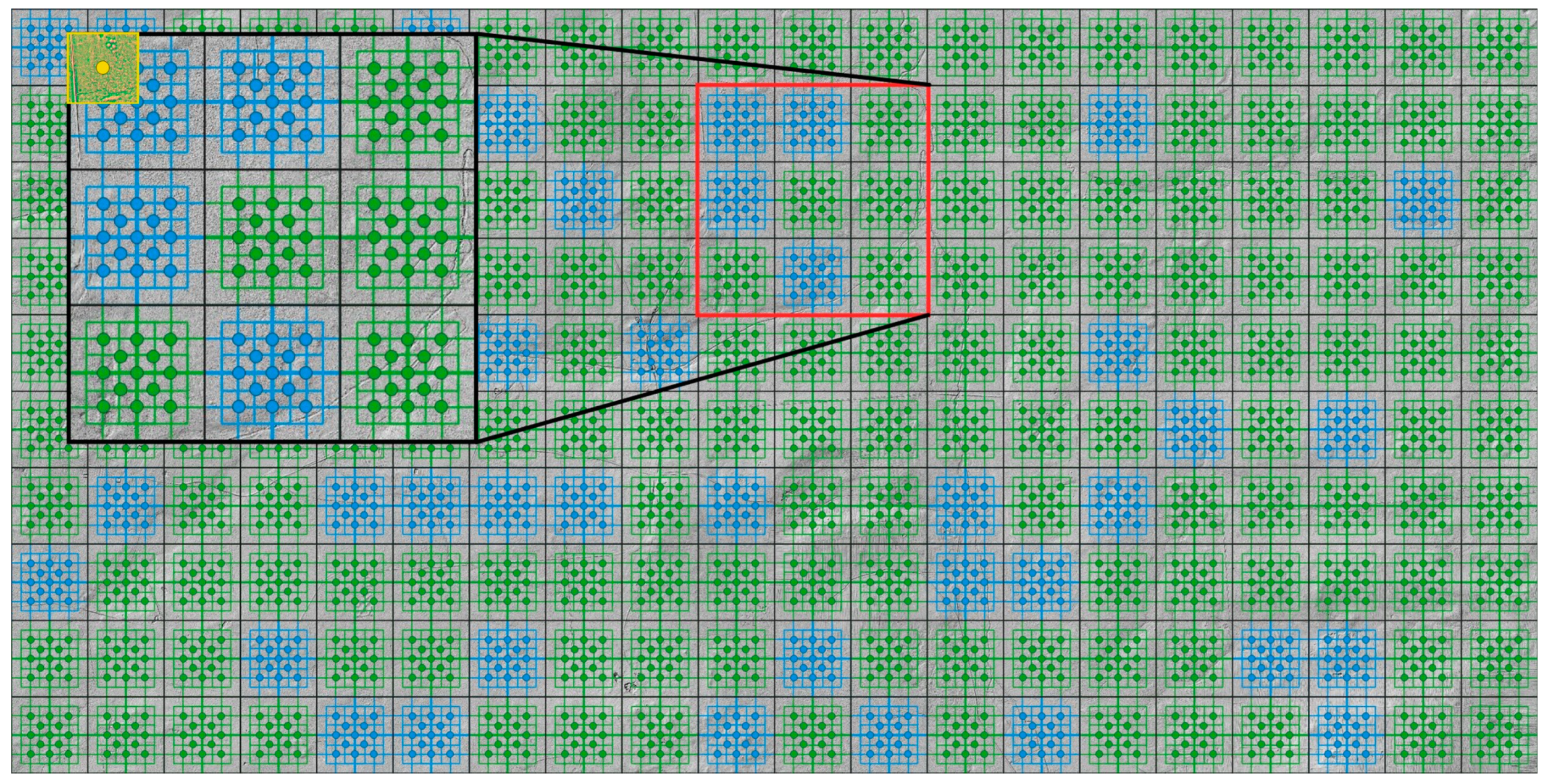

2.7.1. Data Description

2.7.2. Data Preparation

2.7.3. Problem Description

2.7.4. Model Architecture

2.7.5. Training

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| ID | Class |
|---|---|
| Archaeological features: | |
| 1 | field system banks |
| 2 | field system plots |
| 3 | burial mounds |
| Modern features: | |
| 4 | roads |
| 5 | forest paths and divisions |
| 6 | modern landscape (e.g., houses, farmlands) |
| Natural features: | |
| 7 | inland waterways |
| 8 | inland dunes |
| Remaining land/area: | |
| 9 | background |
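The nine class IDs above can serve directly as label values in a reference mask. Multi-class segmentation networks such as U-Net are commonly trained against one binary target channel per class, so the sketch below, assuming that each mask pixel stores exactly one ID from 1 to 9 as listed in the table, shows that conversion in plain Python. The `CLASSES` dictionary and the `one_hot` function are hypothetical helpers, not code from the study.

```python
# Class IDs as listed in the table above. This dictionary and one_hot()
# are hypothetical illustration helpers, not code from the paper.
CLASSES = {
    1: "field system banks",
    2: "field system plots",
    3: "burial mounds",
    4: "roads",
    5: "forest paths and divisions",
    6: "modern landscape",
    7: "inland waterways",
    8: "inland dunes",
    9: "background",
}


def one_hot(mask):
    """Turn a 2-D mask of class IDs (1-9) into 9 binary channel masks.

    Channel k-1 holds 1 where the pixel belongs to class k and 0 elsewhere,
    so every pixel is 1 in exactly one channel.
    """
    n = len(CLASSES)
    # Build independent row lists for every channel (no shared references).
    channels = [[[0] * len(row) for row in mask] for _ in range(n)]
    for r, row in enumerate(mask):
        for c, class_id in enumerate(row):
            if class_id not in CLASSES:
                raise ValueError(f"unknown class id {class_id} at ({r}, {c})")
            channels[class_id - 1][r][c] = 1
    return channels
```

For example, `one_hot([[9, 9, 3], [1, 2, 3]])[2]` yields `[[0, 0, 1], [0, 0, 1]]`, the burial-mound channel. In practice a NumPy or deep-learning-framework one-hot routine would replace the nested loops; plain lists keep the sketch dependency-free.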

| | F1-Score | IoU | Loss |
|---|---|---|---|
| Training set | 0.91 | 0.84 | 0.16 |
| Validation set | 0.79 | 0.68 | 0.33 |
| Test set | 0.58 | 0.50 | 0.53 |
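F1-score and IoU (intersection over union, also called the Jaccard index) both measure the pixel overlap between a predicted mask and the reference mask. With TP, FP and FN as the counts of true-positive, false-positive and false-negative pixels, F1 = 2TP / (2TP + FP + FN) and IoU = TP / (TP + FP + FN). The minimal sketch below, assuming binary masks given as nested lists of 0/1 values, computes both; the function name is illustrative only.

```python
def f1_and_iou(pred, truth):
    """Return (F1, IoU) for two equally sized binary masks (nested 0/1 lists)."""
    tp = fp = fn = 0
    for p_row, t_row in zip(pred, truth):
        for p, t in zip(p_row, t_row):
            if p and t:
                tp += 1  # predicted and present in the reference
            elif p:
                fp += 1  # predicted but absent in the reference
            elif t:
                fn += 1  # present in the reference but missed
    if tp + fp + fn == 0:
        # Assumed convention: two empty masks agree perfectly.
        return 1.0, 1.0
    f1 = 2 * tp / (2 * tp + fp + fn)
    iou = tp / (tp + fp + fn)
    return f1, iou
```

For instance, `f1_and_iou([[1, 1, 0], [0, 1, 0]], [[1, 0, 0], [0, 1, 1]])` counts 2 TP, 1 FP and 1 FN, giving IoU = 0.5 and F1 = 2/3. For a single mask the two measures are linked by F1 = 2·IoU / (1 + IoU); scores averaged over several classes or images need not satisfy this identity exactly, because averaging does not commute with that nonlinear mapping.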

| Class | IoU |
|---|---|
| field system banks | 0.408 |
| field system plots | 0.616 |
| burial mounds | 0.615 |
| roads | 0.673 |
| forest paths and divisions | 0.514 |
| modern landscape | 0.531 |
| inland waterways | 0.573 |
| inland dunes | 0.333 |
| background | 0.782 |
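Per-class IoU values like those in the table above are typically derived from a pixel-level confusion matrix: for class k, TP is the diagonal entry, FN is the rest of row k (reference pixels of class k labelled as something else) and FP is the rest of column k (pixels wrongly assigned to class k). The sketch below, assuming prediction and reference masks that hold the class IDs 1 to 9, builds that matrix and reads off the IoU per class and an unweighted mean (mIoU). The helper names are hypothetical, and the unweighted mean is shown as one common convention, not as the aggregation used in the study.

```python
import math


def confusion_matrix(pred, truth, n_classes=9):
    """Count pixels by (reference class, predicted class); IDs run 1..n_classes."""
    cm = [[0] * n_classes for _ in range(n_classes)]
    for p_row, t_row in zip(pred, truth):
        for p, t in zip(p_row, t_row):
            cm[t - 1][p - 1] += 1  # row = reference class, column = prediction
    return cm


def per_class_iou(cm):
    """IoU = TP / (TP + FP + FN) for each class; NaN if the class never occurs."""
    n = len(cm)
    ious = []
    for k in range(n):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                       # missed pixels of class k
        fp = sum(cm[r][k] for r in range(n)) - tp  # pixels wrongly given class k
        denom = tp + fp + fn
        ious.append(tp / denom if denom else math.nan)
    return ious


def mean_iou(ious):
    """Unweighted mean over classes that occur (NaN entries are skipped)."""
    valid = [v for v in ious if not math.isnan(v)]
    return sum(valid) / len(valid) if valid else math.nan
```

With `pred = [[9, 3, 3], [3, 1, 9]]` and `truth = [[9, 9, 3], [3, 1, 1]]`, the IoU is 0.5 for field system banks (ID 1), 2/3 for burial mounds (ID 3) and 1/3 for background (ID 9); the six classes absent from both masks yield NaN and are skipped, so the mean is 0.5.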

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Banasiak, P.Z.; Berezowski, P.L.; Zapłata, R.; Mielcarek, M.; Duraj, K.; Stereńczak, K. Semantic Segmentation (U-Net) of Archaeological Features in Airborne Laser Scanning—Example of the Białowieża Forest. Remote Sens. 2022, 14, 995. https://doi.org/10.3390/rs14040995
