Fusion of Drone-Based RGB and Multi-Spectral Imagery for Shallow Water Bathymetry Inversion

Abstract

1. Introduction

2. Methodology

2.1. Study Areas and Fieldwork

2.2. Pre-Processing of Drone-Based Imagery

2.3. Shallow Bathymetry Inversion in WASI

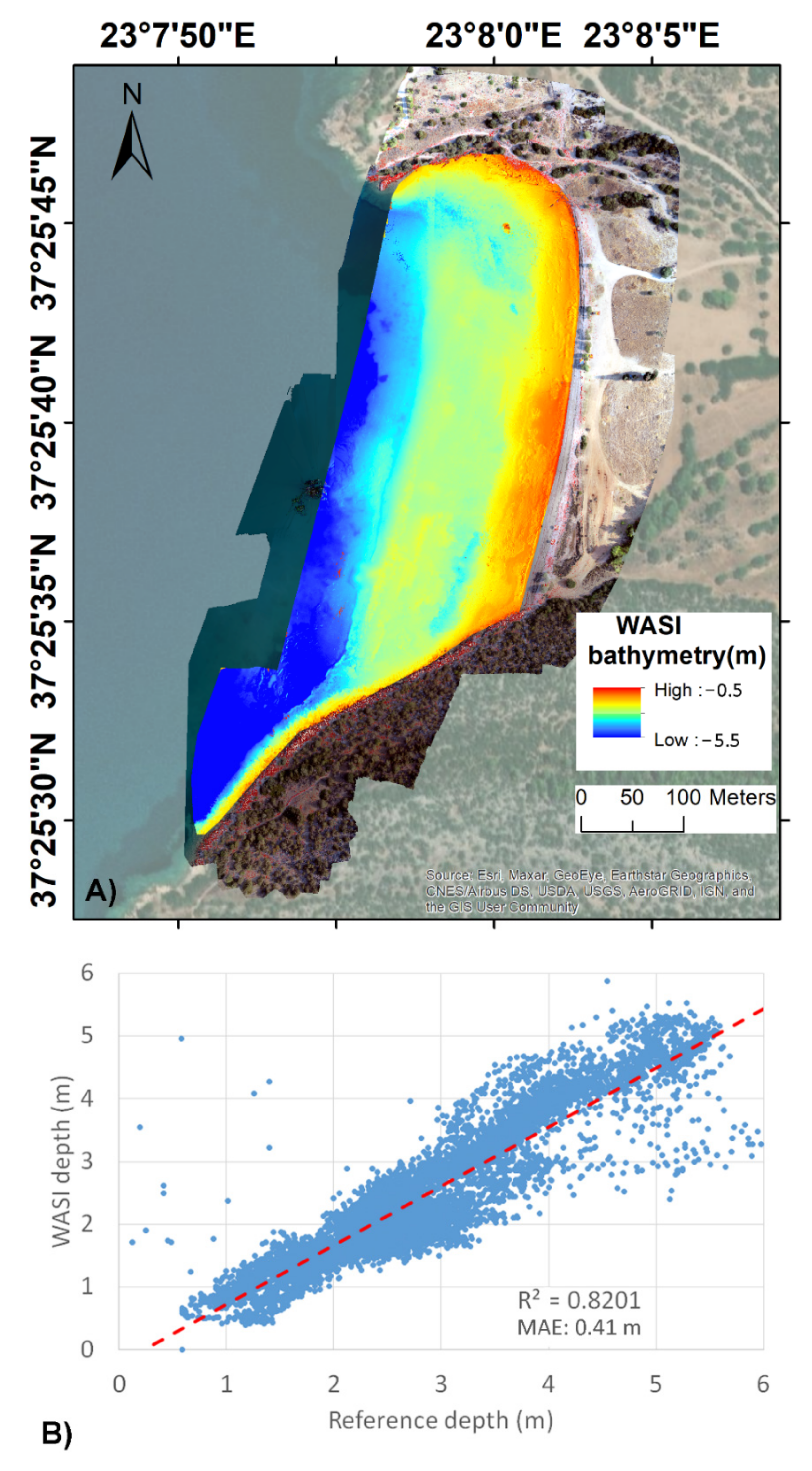

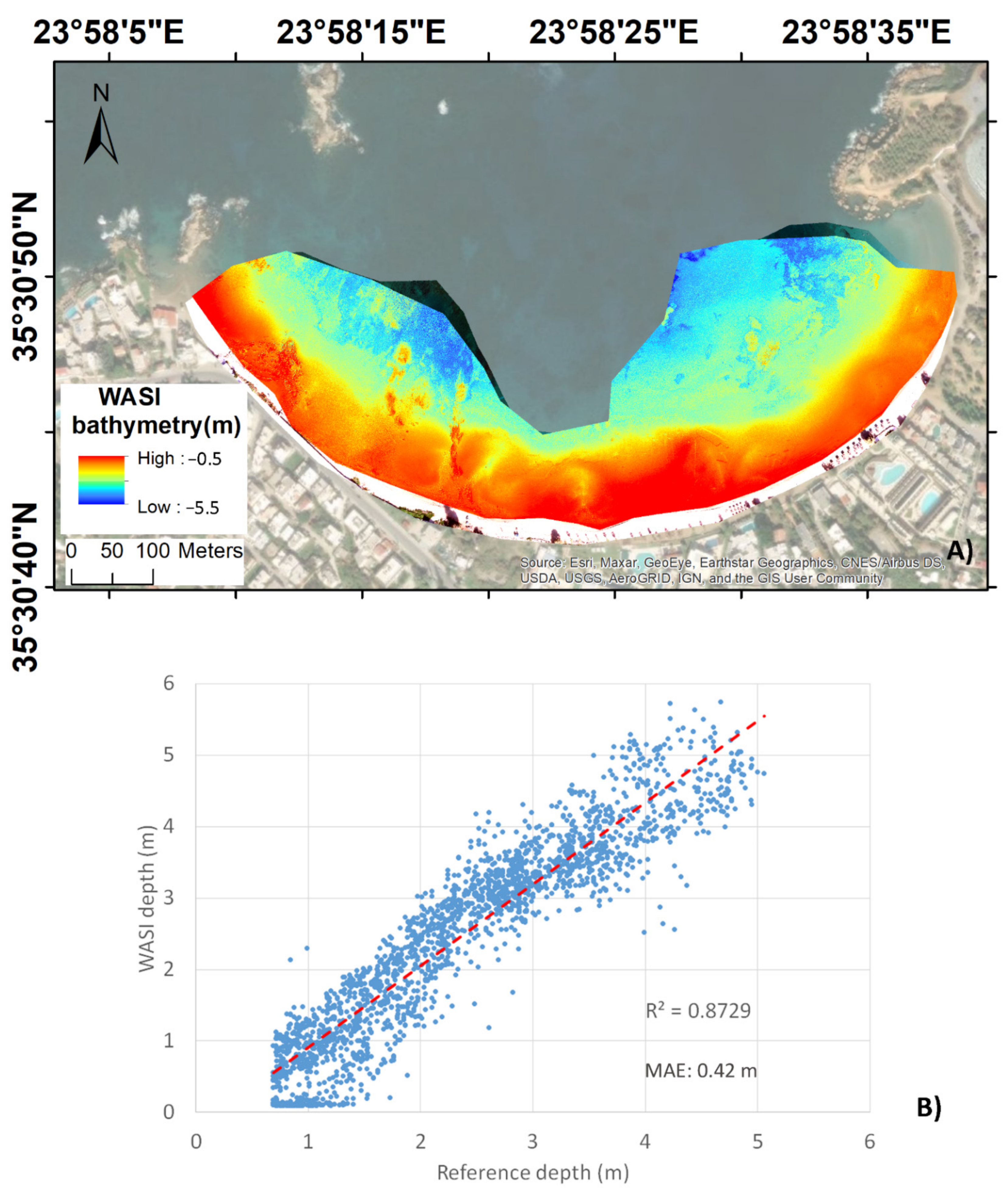

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Band Name | Central Wavelength (nm) | FWHM* (nm) |
|---|---|---|
| P4P-Blue | 462 | 40 |
| P4P-Green | 525 | 50 |
| P4P-Red | 592 | 25 |
| MS-Blue | 480 | 10 |
| MS-Green | 560 | 10 |
| MS-Red | 671 | 5 |
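As a rough illustration of how the band table above might be used, the sketch below converts each FWHM to a Gaussian standard deviation and orders the six bands by central wavelength. It assumes a Gaussian spectral response for every band, which the table does not state; the names `BANDS`, `fwhm_to_sigma`, `gaussian_response`, and `bands_by_wavelength` are hypothetical, and the wavelength ordering is only one plausible way to stack the RGB (P4P) and multi-spectral (MS) bands, not necessarily the authors' procedure.

```python
import math

# (central wavelength nm, FWHM nm), copied from the band table above.
BANDS = {
    "P4P-Blue": (462, 40),
    "P4P-Green": (525, 50),
    "P4P-Red": (592, 25),
    "MS-Blue": (480, 10),
    "MS-Green": (560, 10),
    "MS-Red": (671, 5),
}


def fwhm_to_sigma(fwhm_nm: float) -> float:
    """Standard deviation of a Gaussian with the given full width at half maximum."""
    return fwhm_nm / (2.0 * math.sqrt(2.0 * math.log(2.0)))


def gaussian_response(wavelength_nm: float, centre_nm: float, fwhm_nm: float) -> float:
    """Relative response (peak = 1) under the ASSUMED Gaussian band shape."""
    sigma = fwhm_to_sigma(fwhm_nm)
    return math.exp(-0.5 * ((wavelength_nm - centre_nm) / sigma) ** 2)


def bands_by_wavelength() -> list[str]:
    """Band names sorted by central wavelength (illustrative stacking order only)."""
    return sorted(BANDS, key=lambda name: BANDS[name][0])


if __name__ == "__main__":
    for name in bands_by_wavelength():
        centre, fwhm = BANDS[name]
        print(f"{name}: centre={centre} nm, sigma={fwhm_to_sigma(fwhm):.2f} nm")
```

By construction, the assumed response falls to half its peak at the central wavelength plus or minus half the FWHM, which is a quick sanity check on the conversion.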

| Study Area | CHL-a (mg/L) | SPM (mg/L) |
|---|---|---|
| Lambayanna | 0.3 | 0.3 |
| Kalamaki | 0.18 | 0.13 |
| Plakias | 0.18 | 0.10 |

| Study Area | Number of RGB Images | Number of MS Images | Altitude | Sun Zenith Angle (Degrees) | Acquisition Time (hh:mm) |
|---|---|---|---|---|---|
| Lambayanna beach | 500 | >1000 | 90 | 70 | 09:00 |
| Kalamaki bay | 400 | 400 | 150 | 52 | 11:30 |
| Plakias bay | 230 | 200 | 150 | 49 | 12:00 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Alevizos, E.; Oikonomou, D.; Argyriou, A.V.; Alexakis, D.D. Fusion of Drone-Based RGB and Multi-Spectral Imagery for Shallow Water Bathymetry Inversion. Remote Sens. 2022, 14, 1127. https://doi.org/10.3390/rs14051127
