1. Introduction

Range sensors are devices that capture the three-dimensional (3-D) structure of the world from the viewpoint of the sensor, usually measuring distances to closest targets [1,2,3,4,5]. These measurements could be across a scanning plane or a 3-D image with distance measurements at every point.

These range sensors have been used for many years in the fields of localization, obstacle detection and tracking for autonomous vehicles [6,7]. These can be employed as measurement devices to address the joint problem of online tracking and the detection of the current modality [8]. These can be helpful indicators for use in sustainability assessment by presenting the distribution characteristics of each sustainability index for vehicles [9]. Obstacle detection functions of autonomous vehicles require low failure rates [10]. Interference is inherent to all active sensors and wireless applications with the same or an overlapping frequency range [11,12,13,14]. In general, interference describes the coherent superposition of two or more different waves at one point in space. A wave is a rotating vector in the complex plane, where the addition of several vectors results in deterministic changes to magnitude and phase. Signal amplitude can be decreased (destructive interference) or increased (constructive interference). Single interference can be treated as one-shot noise or error and can be eliminated using the Kalman filter or the particle learning technique [15,16].
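The phasor picture of interference described above can be illustrated with a short numerical sketch. The function name `superpose` and the unit amplitudes are illustrative assumptions and do not come from the cited works; the sketch only adds complex vectors and reads off the resulting magnitude and phase.

```python
import cmath
import math


def superpose(waves):
    """Add phasors given as (amplitude, phase_in_radians) pairs.

    Returns the magnitude and phase of the resulting phasor.
    """
    total = sum(cmath.rect(amplitude, phase) for amplitude, phase in waves)
    return abs(total), cmath.phase(total)


# Two equal waves in phase: amplitudes add (constructive interference).
constructive, _ = superpose([(1.0, 0.0), (1.0, 0.0)])

# Two equal waves in anti-phase: amplitudes cancel (destructive interference).
destructive, _ = superpose([(1.0, 0.0), (1.0, math.pi)])

print(constructive, destructive)
```

The in-phase pair yields a magnitude of 2, while the anti-phase pair cancels to zero up to floating-point rounding.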

Within a few years, the adoption rate of vehicular radio detection and ranging (radar) systems will have drastically increased in this newly emerging market [17]. In terms of safety-related applications, interference risk will particularly threaten further proliferation if harmful mutual interference occurs [18,19,20]. It is too late to find efficient and pragmatic countermeasures to avoid apparent interference risk when severe interference problems cause malfunction or out-of-order situations in safety radar devices [14,21]. The only reasonable and valid approach is to counteract these issues before the problems are manifested. The EU-funded project More Safety for All by Radar Interference Mitigation (MOSARIM) started in January 2010, intending to investigate possible automotive radar interference mechanisms via both simulation and real-world road tests [18,19,20,22]. Interference from an identical pulsed radar sensor with the same pulse repetition frequency (PRF) generates a ghost target at a constant distance. If the radars are similar and have only slightly different PRFs, then a ghost target will appear as a large target moving slowly in range. The apparent velocity depends on the difference between the two PRFs and could be anything from a couple of millimeters per second up to a couple of hundred meters per second. A slightly different PRF results in a moving ghost target because the time difference between the transmitted pulse and the interfering pulse increases or decreases from one pulse repetition period to the next. If the PRFs are vastly different, then more than one ghost target could appear per cycle [11,23]. Appropriate countermeasures and mitigation techniques were studied and assessed, and general guidelines and recommendations were developed [11,23,24,25]. The ideas for countermeasures were extracted and structured into six basic categories. From the six basic categories, a list of 22 variants was compiled, evaluated, and ranked according to various criteria, such as implementation effort, required power and computational resources, cost, and the requirement for harmonization. The project selected the top nine countermeasures for further evaluation in order to derive guidelines. Their mitigation performance ranged from a few

up to (theoretically) infinite

. Their accurate and consistent application to all new automotive radar products will result in close to interference-free automotive radar operations.
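The ghost-target drift described earlier in this paragraph, for two pulsed radars with slightly different PRFs, can be approximated with a simple first-order model. The sketch below is not taken from the MOSARIM reports. It assumes that the interfering pulse arrives later (or earlier) by the difference of the two pulse repetition periods on every victim cycle, and that the victim converts this delay drift into range using the usual two-way factor of c/2. The function name `ghost_velocity` and the example PRF values are hypothetical.

```python
C = 299_792_458.0  # speed of light in m/s


def ghost_velocity(prf_victim_hz, prf_interferer_hz):
    """Apparent radial velocity (m/s) of a ghost target, first-order model.

    Positive values mean the ghost appears to move away from the victim radar.
    Assumes the per-period delay drift is small compared with the period.
    """
    period_victim = 1.0 / prf_victim_hz
    period_interferer = 1.0 / prf_interferer_hz
    # Delay of the interfering pulse shifts by this amount every victim period.
    delay_drift = period_interferer - period_victim
    # The victim interprets delay as two-way travel time, hence the factor 1/2.
    range_drift = C * delay_drift / 2.0
    return range_drift / period_victim


# Identical PRFs: the ghost stays at a constant distance.
print(ghost_velocity(100_000.0, 100_000.0))

# PRFs differing by 1 mHz at 100 kHz: a slowly moving ghost target.
print(ghost_velocity(100_000.0, 99_999.999))
```

Under these assumptions, a PRF offset of 1 mHz at 100 kHz corresponds to a ghost moving at roughly 1.5 m/s, and identical PRFs give zero apparent velocity.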

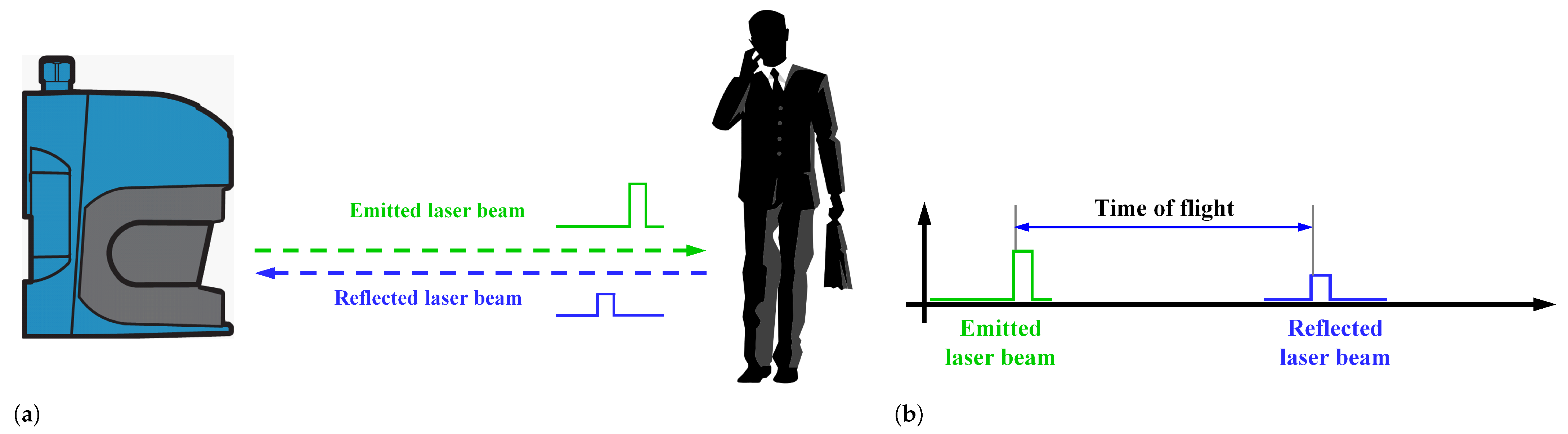

Time-of-flight (ToF) range cameras acquire a 3-D image of a scene simultaneously for all pixels from a single viewing location. Optical interference between a sensor and another sensor is a crucial matter [26]. It is not easy to separate the amplitude-modulated wave from the sensor itself and another sensor once they are mixed. This shows how the mixed pixel and multipath separation algorithm can be applied to range imaging measurements projected into Cartesian coordinates. Significant improvements in accuracy can be achieved in particular situations where the influence of multipath interference frequently causes distortions [27,28,29]. The multiple return separation algorithm requires a consistent and known relationship between the cameras’ amplitudes and phase responses at both modulation frequencies. This type of calibration was not available for these cameras, so it was carried out by comparing manually selected regions of the image judged to be minimally affected by multipath interference. If these regions did contain multipath, the calibration would be compromised, leading to poor performance. Another source of potential error is distance linearity measurement inaccuracies, which are common in uncalibrated range cameras. It is also possible that the optimization algorithm encountered local minima, producing an incorrect answer.

The light detection and ranging (LIDAR) sensor is one of the essential perception sensors for autonomous vehicles [1,2,3,4,5,6,7,10]. A LIDAR sensor installed on an autonomous vehicle lets the vehicle generate a detailed 3-D local map of its environment. Histogram analysis of the 3-D local map is used to select the obstacle candidates. The vehicle incorporates matched candidates into results from previous scans and updates the positions of all obstacles based on vehicle motion before the following scan. The car then takes these maps and combines them with high-resolution global maps of the world, producing different types of maps that allow it to drive itself. The reception of foreign laser pulses can lead to ghost targets or a reduced signal-to-noise ratio. The reception of unwanted laser pulses from other LIDAR sensors is called mutual interference between LIDAR sensors [30,31,32,33,34,35,36,37]. Concretely, interference effects in automotive LIDAR sensors are caused by the superposition of disturbances from other LIDAR sensors at the receiving antenna with the incoming coherent use signals reflected from objects within the LIDAR sensor’s detection zone. The simplest method for the prevention of such interference is to install each sensor tilted forward by 2° to 3°. However, that is not enough. Another measure is to emit as few laser pulses as possible: the laser should be off where sensor data are unnecessary. According to the analyzed result, the laser was automatically switched off in unnecessary areas and scans were curtailed. These functions prevent interference, extend the lifetime of laser devices, and reduce power consumption. Moreover, one more step is ready for use: a function that slightly changes the motor speed on command. A difference in motor speed that is more than 300 almost prevents interference [38].

Until now, interference has not been considered a problem because the number of vehicles equipped with LIDAR sensors was small and, as a result, interference was extremely unlikely. Despite the predicted higher number of LIDAR sensors, the possibility of interference-induced problems has to be reduced considerably. With the increase in the number of autonomous vehicles equipped with LIDAR sensors for obstacle detection and avoidance, the possibility of mutual interference becomes an issue. Imagine massive traffic jams occurring in the morning and evening. What if all of these vehicles were equipped with LIDAR sensors? With a growing number of autonomous vehicles equipped with LIDAR sensors operating close to each other at the same time, LIDAR sensors may receive laser pulses from other LIDAR sensors.

This article is structured as follows: in Section 2, the occurrence of mutual interference in radar and LIDAR sensors is discussed. The causes of mutual interference and the extensive study of radar sensor interference in the MOSARIM project are briefly described. In Section 3, a new multi-plane LIDAR sensor, which uses coded pulse streams to measure surrounding perimeters without mutual interference, is presented. In Section 4, three relevant mutual interference scenarios from real-world vehicle applications are presented for simulation. In Section 5, four different simulation phases are set up according to the operating mode of two LIDAR sensors, and their results are shown. In each scenario, LIDAR sensors operated in single-pulse mode suffered from mutual interference, but LIDAR sensors operated in coded pulse stream mode effectively rejected alien pulses. In Section 6, the conclusion is given, and future research paths are suggested.

3. Signal Processing of Concurrent Firing LIDAR Sensor without Mutual Interference

In this section, we propose a new multi-plane LIDAR sensor, which uses coded pulse streams encoded by carrier-hopping prime code (CHPC) technology to measure surrounding perimeters without mutual interference. These encoded pulses use a random azimuth identification and a checksum with a random spreading code. We modeled the entirety of the LIDAR sensor operation in Synopsys OptSim with MathWorks MATLAB and demonstrated the alien pulse elimination functionality via modeling and simulation. We chose parameters based on our previous prototype LIDAR sensor [40] for optical characteristics related to laser transmission and reception, pulse reflection, lens, etc. Three simulation scenarios and their results are shown, according to the arrangement of two LIDAR sensors. In each scenario, two LIDAR sensors were operated in single-pulse mode and/or coded pulse stream mode.

In the previous paper [41], we proposed a LIDAR sensor that changes the measurement strategy from a sequential to a concurrent firing and measuring method. The proposed LIDAR used a 3-D scanning method with 128 output channels arranged in one vertical line in the measurement direction and concurrently measured the distance for each of these 128 channels. The LIDAR sensor emitted 128 coded pulse streams encoded by CHPC technology with identification and checksum. The emission channel could be recognized when the reflected pulse stream was received and demodulated. This information could be used to estimate the time when the laser pulse stream was emitted and to calculate the distance to the object reflecting the laser. By identifying the received reflected wave, the measurement position could be recognized after reception even if several positions were measured simultaneously. In this paper, we give a more detailed description of the alien pulse rejection method of the CHPC encoder and decoder, as presented in Figure 3.

Three pieces of information are required to generate a coded pulse stream for transmission: an azimuth identification (ID) indicating the currently measured angle, a cyclic redundancy check (CRC) used to detect errors in the received data, and a spreading code used to generate the coded pulse stream. The proposed LIDAR sensor generates the azimuth ID and spreading code randomly, preventing collisions with other LIDAR sensors using the same method and interference from the outside. The three pieces of information are stored in the internal memory and used to decode the reflected signal. When reflected waves are received, they are converted into a pulse stream, decoded using the spreading code stored in the internal memory, and then demodulated. Only a pulse stream with the same spreading code as the one in the internal memory is deemed suitable and proceeds to the next step; a pulse stream with a different spreading code is judged inappropriate and discarded. Data determined to be suitable are checked for errors through the CRC inspection process, and only data without errors move to the next step. If the error-free data have the same azimuth ID used for encoding, the distance to the target is calculated using the difference between the time the azimuth ID was transmitted and the time it was received. Otherwise, the data are discarded. In other words, a received reflected wave is used for distance calculation only if all three pieces of information match those that were transmitted. Everything else is discarded, which removes all information that causes mutual interference from the outside and fundamentally blocks the occurrence of mutual interference.
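The three-stage acceptance check described above (spreading code, then CRC, then azimuth ID) can be sketched as follows. This is only an illustration under stated assumptions, not the CHPC encoder and decoder of the proposed sensor. A plain XOR-based direct-sequence spreading stands in for CHPC, CRC-32 from Python's `zlib` stands in for the unspecified CRC, and the 16-bit azimuth ID, the 16-chip code length, and the names `encode` and `decode` are all hypothetical.

```python
import zlib

C = 299_792_458.0  # speed of light in m/s


def to_bits(data):
    """Expand bytes into a list of bits, most significant bit first."""
    return [(byte >> shift) & 1 for byte in data for shift in range(7, -1, -1)]


def from_bits(bits):
    """Pack a list of bits (length a multiple of 8) back into bytes."""
    return bytes(
        sum(bit << (7 - i) for i, bit in enumerate(bits[k:k + 8]))
        for k in range(0, len(bits), 8)
    )


def encode(azimuth_id, code):
    """Build frame = azimuth ID + CRC, then spread every bit with the code."""
    payload = azimuth_id.to_bytes(2, "big")
    frame = payload + zlib.crc32(payload).to_bytes(4, "big")
    return [bit ^ chip for bit in to_bits(frame) for chip in code]


def decode(chips, code, expected_id, t_tx, t_rx):
    """Return the distance in meters, or None if any of the three checks fails."""
    bits = []
    for k in range(0, len(chips), len(code)):
        symbol = [c ^ chip for c, chip in zip(chips[k:k + len(code)], code)]
        # Stage 1: with the right code, every chip of a symbol despreads
        # to the same bit value; anything else is treated as an alien pulse.
        if len(symbol) != len(code) or len(set(symbol)) != 1:
            return None
        bits.append(symbol[0])
    frame = from_bits(bits)
    if len(frame) != 6:
        return None
    payload, received_crc = frame[:2], frame[2:]
    # Stage 2: CRC inspection for data errors.
    if zlib.crc32(payload).to_bytes(4, "big") != received_crc:
        return None
    # Stage 3: the azimuth ID must match the one used for encoding.
    if int.from_bytes(payload, "big") != expected_id:
        return None
    # All checks passed: two-way time of flight gives the distance.
    return C * (t_rx - t_tx) / 2.0
```

In a sensor following the description above, the azimuth ID and the spreading code would be drawn randomly for each emission, for example with Python's `secrets` module, and kept in memory until the echo is decoded. A stream spread with any other code fails the first stage and is dropped before the CRC is even evaluated.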

4. Simulation Scenarios in Real-World Vehicle Applications

We selected three relevant scenarios that can occur in indirect interference for analysis in practical measurements. The selection of scenarios took into account several different factors: indirect interference by LIDAR sensor reflection on other objects, the geometry of LIDAR sensors, and static objects such as jersey barriers. In this paper, we focus on running simulations for three scenarios. The first scenario is for indirect interference with two vehicles approaching each other and a jersey barrier (Figure 4a). Indirect interference with a following vehicle and a jersey barrier is the second (Figure 4b). The last is two vehicles driving parallel along a jersey barrier (Figure 4c).

In each scenario, two LIDAR sensors were positioned inside the scene. These two LIDAR sensors operated in two operating modes according to the simulation scenarios. One mode was the coded pulse stream mode based on the concurrent firing LIDAR sensor, and the other mode was the single-pulse mode based on Velodyne’s VLS-128. The two operating modes of the LIDAR sensor are summarized in Table 8. These LIDAR sensors were laser measurement sensors that scanned the surrounding perimeter on 128 planes. The light source was a near-infrared laser with a wavelength of 1550 nm. It corresponded to laser class 1 (eye-safe) as per EN 60825-1 (2007-3). It measured in the spherical coordinate system, in which each point was determined by the distance from a fixed point and an angle from a fixed direction. It emitted 1550 nm laser pulses using laser diodes. If a laser pulse was reflected from the target object, the distance to the object was calculated from the time required for the pulse to be reflected and received by the sensor. To keep the simulation simple, azimuthal scanning took place in a sector of 170°. To measure the distance and reflected intensity of the surrounding perimeter in the scenarios, the Synopsys OptSim optical simulation software was used for optical characteristics related to laser transmission/reception, and the MathWorks software MATLAB was used for encoding and decoding, signal processing, intensity calculation, and distance calculation tasks [40,41,42].
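Because the sensors measure in spherical coordinates, each return has to be converted to Cartesian coordinates before it can be placed in a 3-D map. The sketch below shows one common convention and is an assumption, since the paper does not state its axis definitions: azimuth is measured in the horizontal plane from the x axis, and elevation is measured upward from the horizontal plane. The function names are hypothetical.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def range_from_time_of_flight(round_trip_s):
    """Distance in meters from the two-way travel time of a laser pulse."""
    return C * round_trip_s / 2.0


def to_cartesian(distance_m, azimuth_deg, elevation_deg):
    """Convert one spherical measurement to (x, y, z) in meters."""
    azimuth = math.radians(azimuth_deg)
    elevation = math.radians(elevation_deg)
    horizontal = distance_m * math.cos(elevation)  # projection onto the x-y plane
    return (
        horizontal * math.cos(azimuth),
        horizontal * math.sin(azimuth),
        distance_m * math.sin(elevation),
    )


# A pulse that returns after 200 ns, at 30 degrees azimuth on a level plane.
distance = range_from_time_of_flight(200e-9)
point = to_cartesian(distance, 30.0, 0.0)
print(distance, point)
```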

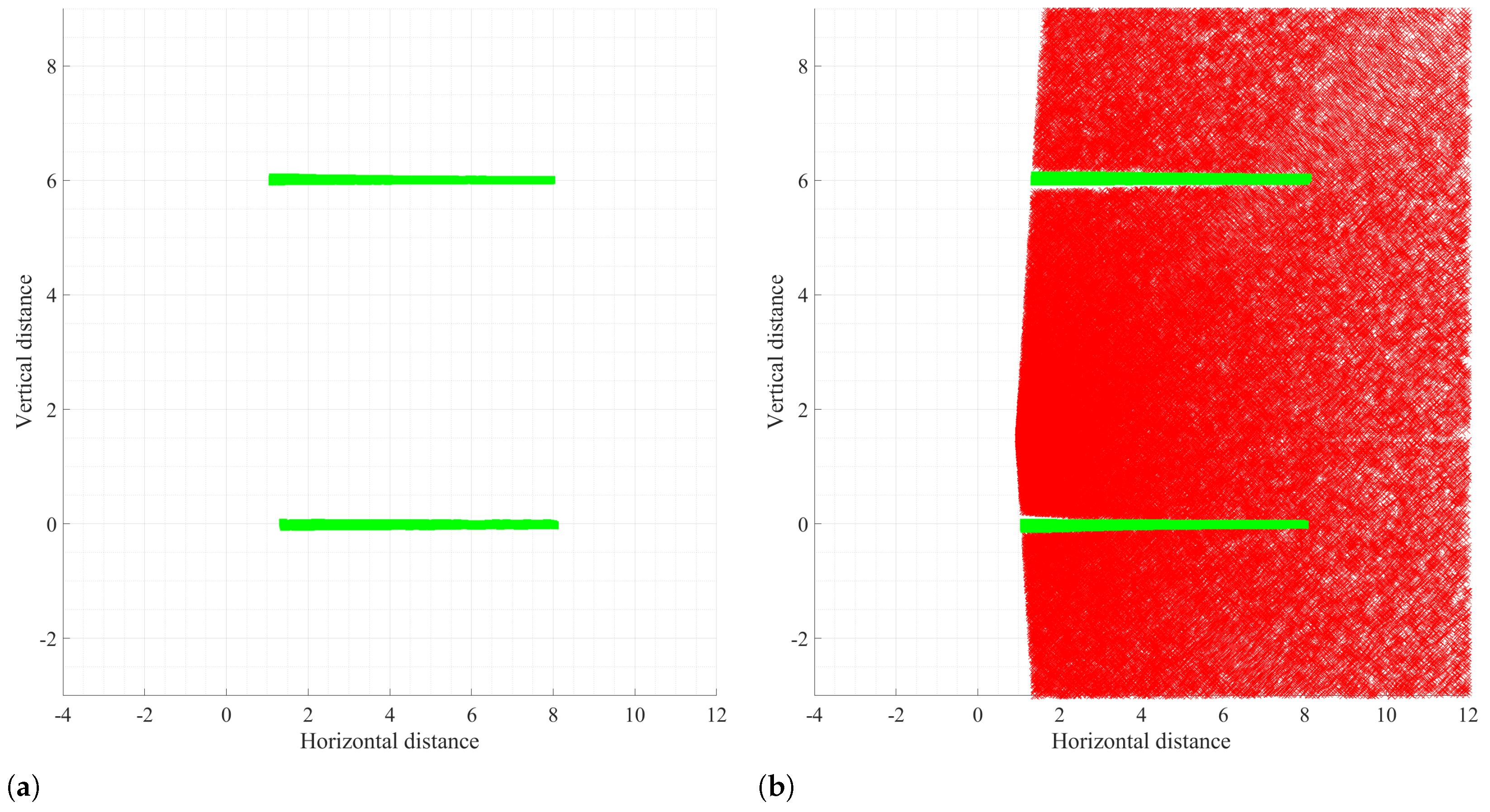

The scenarios consisted of four steps to identify the occurrence and elimination of mutual interference, and for each step, two LIDAR sensors operated in a predetermined mode. The training phase was carried out in each scenario first, and the mutual interference testing phases were performed later. LIDAR sensors ran and recorded measured data continuously. The analysis of recorded data shows the occurrence and influence of mutual interference.

The first step was the training phase, in which normal LIDAR sensor operation was demarcated in the simulation scenarios. To specify the normal range of the distance measured by the two LIDAR sensors, this step consisted of two independent substeps. In the first substep, LIDAR sensor ⓐ was in the normal operating state in single-pulse mode, while LIDAR sensor ⓑ was in an aborted state. In the second substep, the roles were reversed. In these two substeps, we recorded the distance data measured by the normally operating LIDAR sensor for four working hours. We calculated the average distance, the maximum distance, and the minimum distance at each angle. After that, we calculated the upper and lower tolerance, as shown in Equations (1)–(5). The LIDAR sensor had two kinds of error: a systematic error and a random statistical error. As a rule of thumb, adding a slight margin to the maximum and minimum values reduces the risk of misjudging a normal distance as interference.
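The training-phase computation can be sketched as below. Equations (1)–(5) are not reproduced here, so the margin rule in this sketch is purely an assumption: a fixed fraction of the mean distance is subtracted from the minimum and added to the maximum at each angle. The function name `tolerance_band` and the parameter `margin_ratio` are hypothetical.

```python
from statistics import mean


def tolerance_band(samples_by_angle, margin_ratio=0.01):
    """Compute (lower, upper) distance tolerances for every scan angle.

    samples_by_angle maps an angle to the distances recorded at that angle
    while only one sensor was running (interference-free training data).
    margin_ratio is an assumed safety margin relative to the mean distance.
    """
    band = {}
    for angle, distances in samples_by_angle.items():
        margin = margin_ratio * mean(distances)
        band[angle] = (min(distances) - margin, max(distances) + margin)
    return band


training = {
    0.0: [9.9, 10.0, 10.1],
    0.5: [20.0, 20.2, 19.8],
}
for angle, (lower, upper) in tolerance_band(training).items():
    print(angle, round(lower, 3), round(upper, 3))
```

Widening the band beyond the observed minimum and maximum trades a slightly lower sensitivity for fewer false alarms caused by ordinary measurement noise.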

The second to fourth steps formed the testing phase, which examined abnormal LIDAR sensor operation in the simulation scenarios. Both LIDAR sensors were in the normal operating state and ran simultaneously. We recorded the measured distance data from both LIDAR sensors ⓐ and ⓑ for 24 h. We analyzed the recorded data and classified each out-of-tolerance distance as an interfered measurement result, as shown in Equation (6). In the second step, both LIDAR sensors ⓐ and ⓑ were operated in the single-pulse mode. In the third step, LIDAR sensor ⓐ was in the coded pulse stream mode, but LIDAR sensor ⓑ was in the single-pulse mode. In the fourth step, both LIDAR sensors ⓐ and ⓑ were operated in the coded pulse stream mode.
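The testing-phase classification can be sketched as follows. Equation (6) is not reproduced here, so the sketch assumes the simplest reading of the text: a measurement counts as interfered when its distance lies outside the tolerance band recorded for its angle during training. The names `TEST_STEPS`, `classify`, and `interference_rate`, and the sample values, are hypothetical.

```python
# Operating modes of sensors (a, b) in the three testing steps described above.
TEST_STEPS = {
    2: ("single-pulse", "single-pulse"),
    3: ("coded pulse stream", "single-pulse"),
    4: ("coded pulse stream", "coded pulse stream"),
}


def classify(measurements, band):
    """Return the (angle, distance) pairs that fall outside the tolerance band."""
    interfered = []
    for angle, distance in measurements:
        lower, upper = band[angle]
        if not (lower <= distance <= upper):
            interfered.append((angle, distance))
    return interfered


def interference_rate(measurements, band):
    """Fraction of measurements classified as interfered."""
    return len(classify(measurements, band)) / len(measurements)


band = {0.0: (9.8, 10.2)}
recorded = [(0.0, 10.0), (0.0, 3.2), (0.0, 10.1), (0.0, 15.0)]
print(classify(recorded, band))
print(interference_rate(recorded, band))
```

Running the same classification on the data recorded in each step makes the steps directly comparable, since only the operating modes of the two sensors change between them.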

6. Conclusions

Active sensors using a wireless domain, such as a radar or a LIDAR sensor, are not free from mutual interference. In the EU, through the MOSARIM project, a study was performed on how mutual interference occurs in vehicle radar sensors. It confirmed that direct and indirect mutual interference could occur in various environments where a vehicle can drive. Unlike a radar sensor, a LIDAR sensor has a very small divergence angle, and direct mutual interference rarely occurs. Since a LIDAR sensor creates a significant intensity of reflected waves compared to a radar sensor, indirect mutual interference is likely to occur. Several preceding studies on the occurrence and resolution of mutual interference in LIDAR sensors were limited to using a single plane.

We performed simulations in which a LIDAR sensor operated in three scenarios in which indirect mutual interference is known to occur for radar sensors, and confirmed that mutual interference could occur in all of them. These mutual interferences have temporal and spatial locality, so they could be mistaken for real objects. In this study, the simulations showed that mutual interference occurs in multi-plane LIDAR sensors and can be effectively prevented by using a coded pulse stream. The coded pulse stream method proposed in this paper randomly generates the necessary information and performs the encoding and decoding processes based on it. The alien signals that caused mutual interference were shown to be removed very effectively. Further study of the coded pulse stream is needed so that it can be used when many LIDAR sensors share a dense space in the future. Furthermore, it is necessary to study side effects that may occur when using coded pulse streams. Because the simulations differ significantly from the actual environment, a signal reflected by irregular objects can destroy the encoded pulse stream in reality. In that case, LIDAR sensors cannot decode the received encoded pulse stream correctly.