Cotton Cultivated Area Extraction Based on Multi-Feature Combination and CSSDI under Spatial Constraint

Abstract

1. Introduction

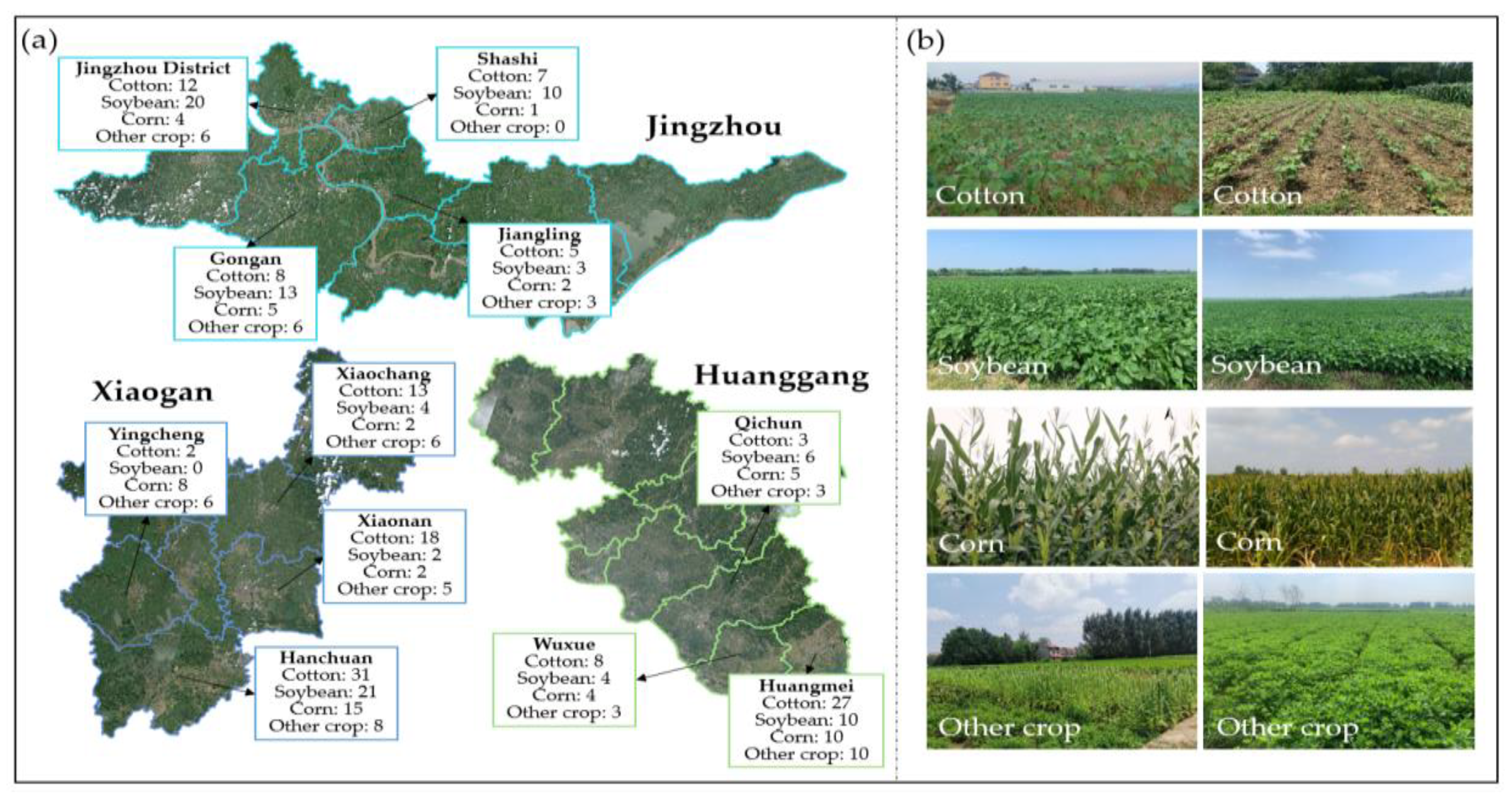

2. Study Area and Data

2.1. Study Area

2.2. Crop Phenology

2.3. Data

2.3.1. Satellite Data

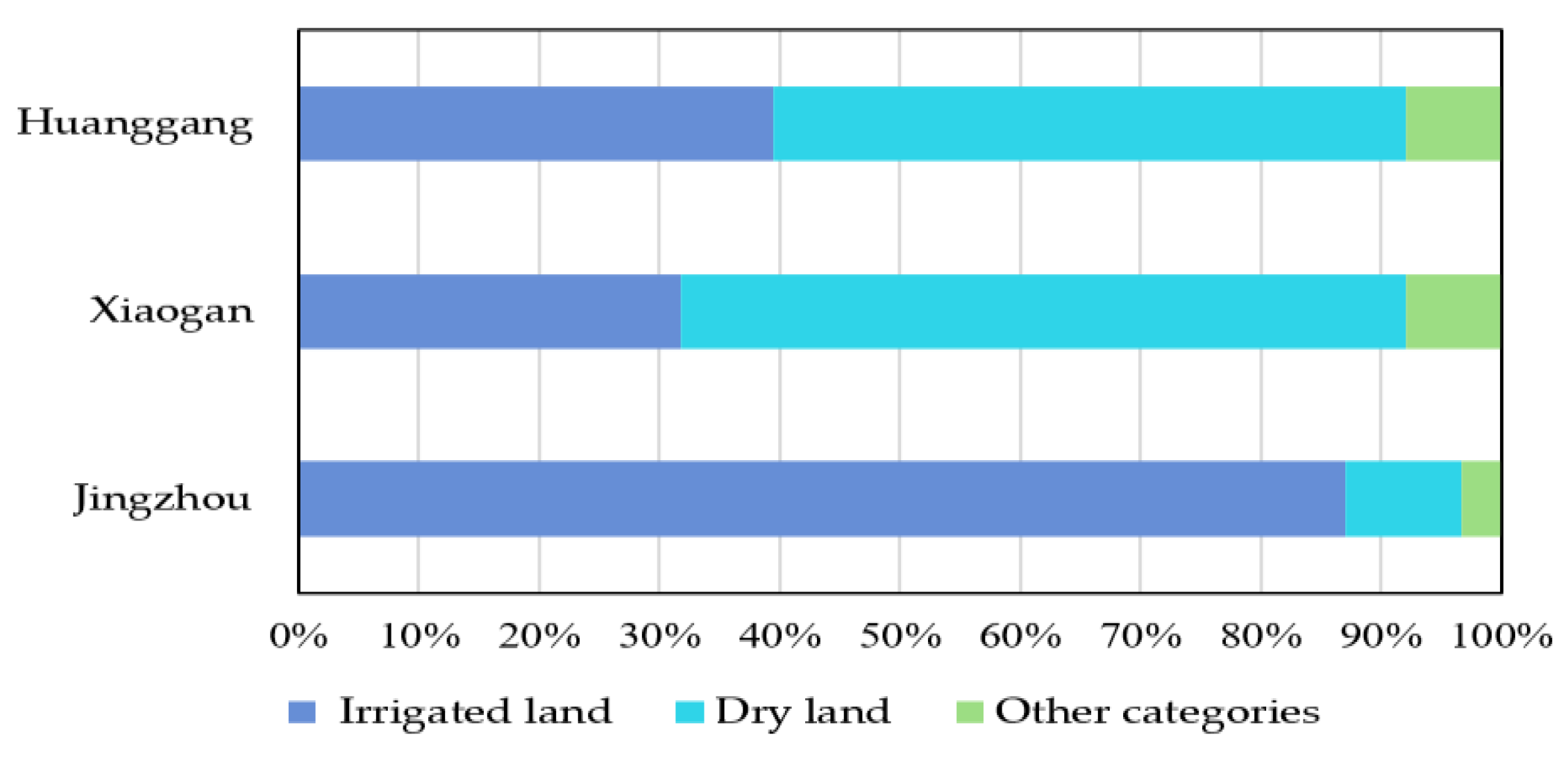

2.3.2. Land Use Status Data

2.3.3. Field Sampling Data

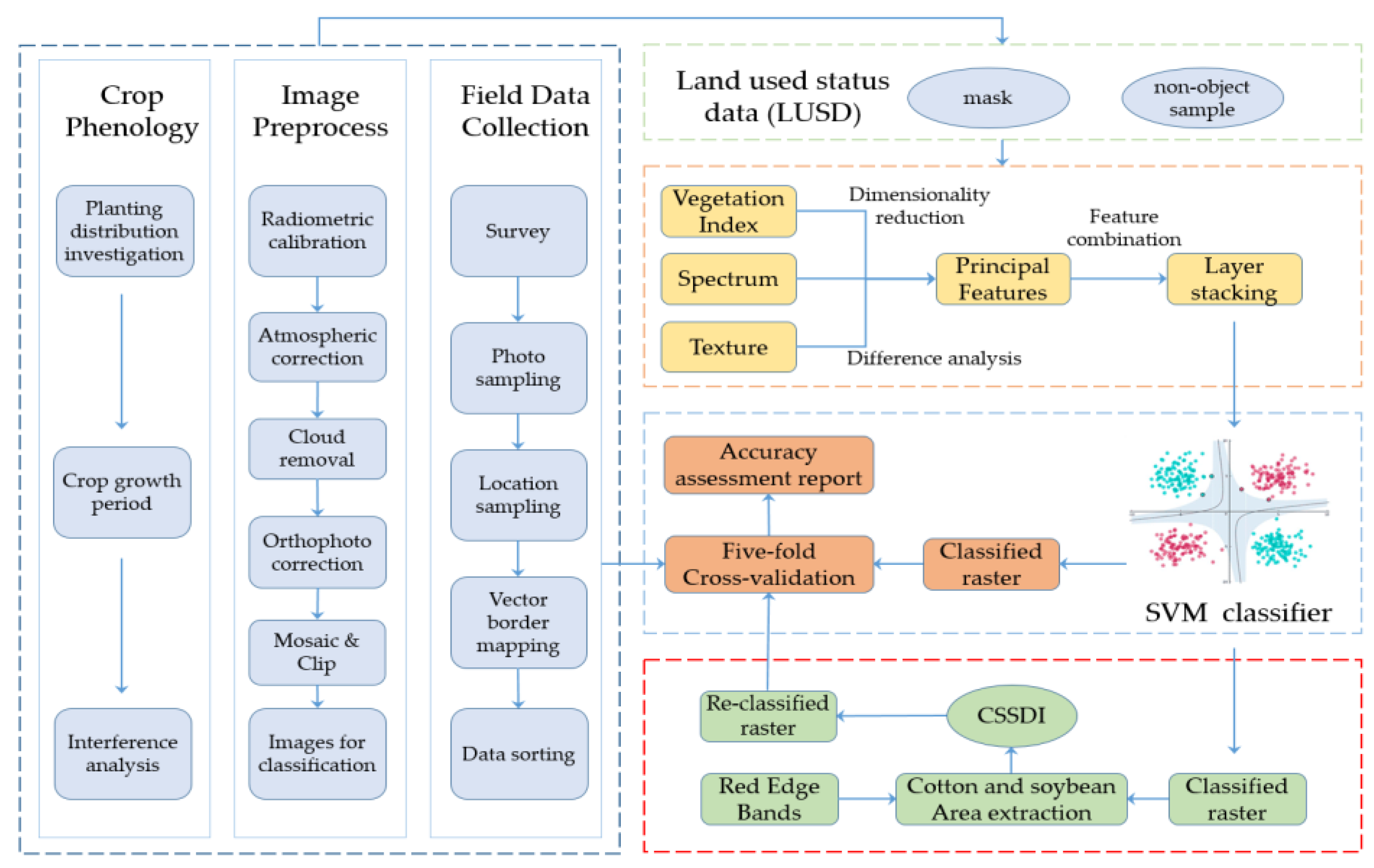

3. Methods

3.1. Spatial Constraint

3.2. Feature Selection and Combination

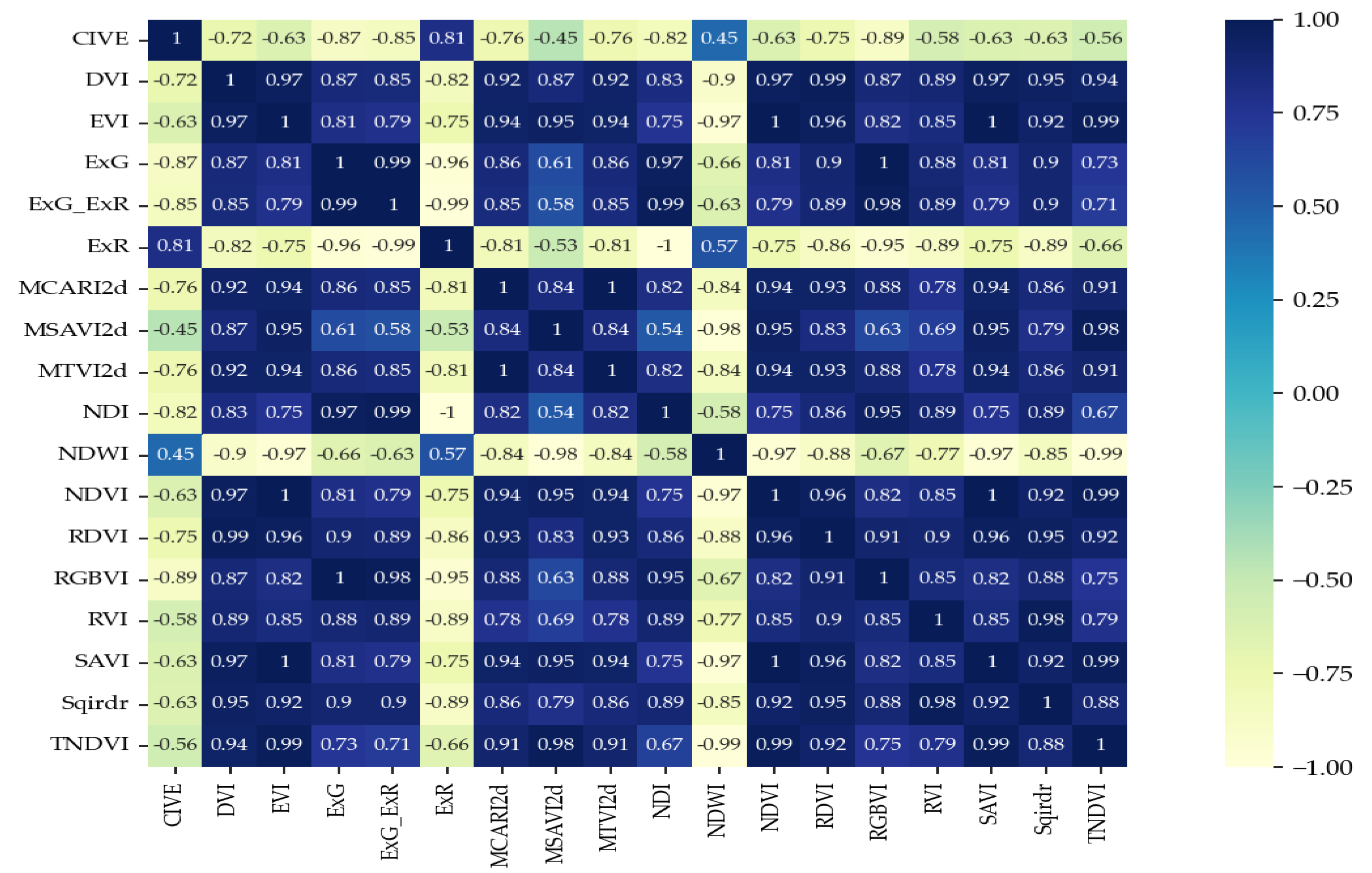

3.2.1. Feature Selection

3.2.2. Feature Combination

3.3. SVM Algorithm

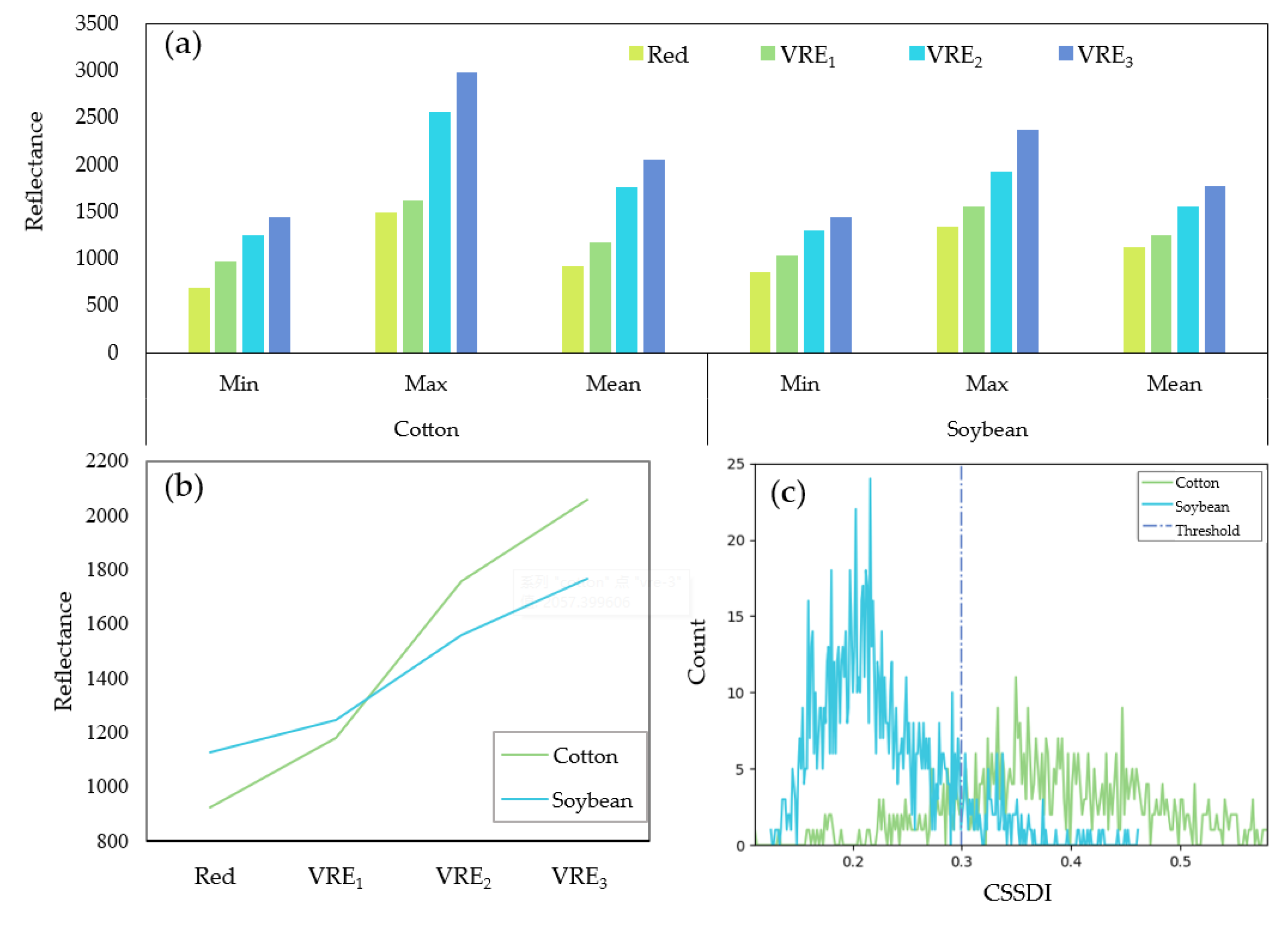

3.4. Cotton and Soybean Separation Difference Index

3.5. Evaluation Index

4. Results

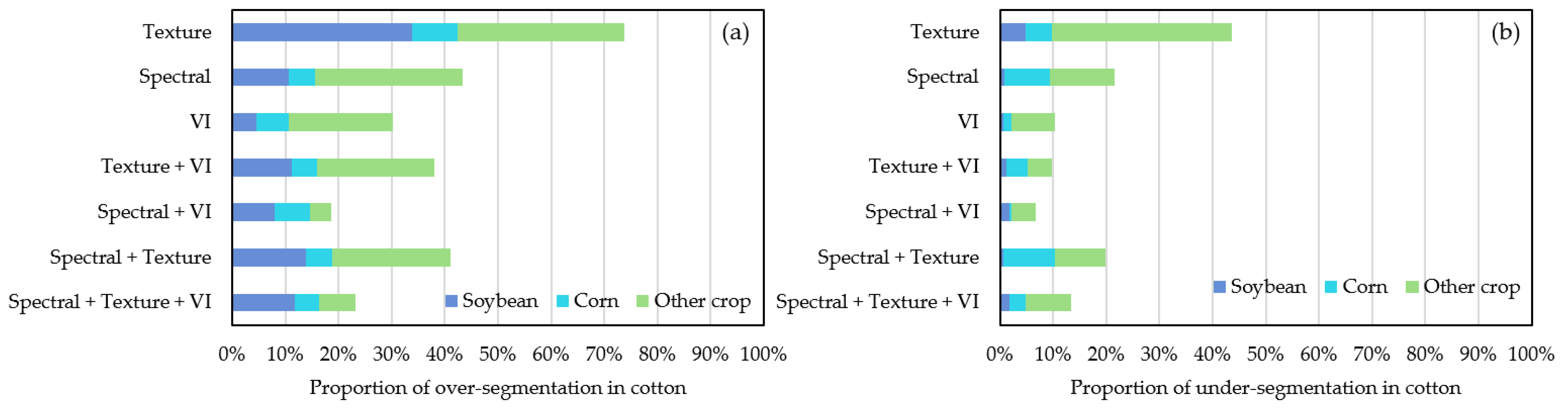

4.1. Classification Result of Feature Combination for Cotton Extraction

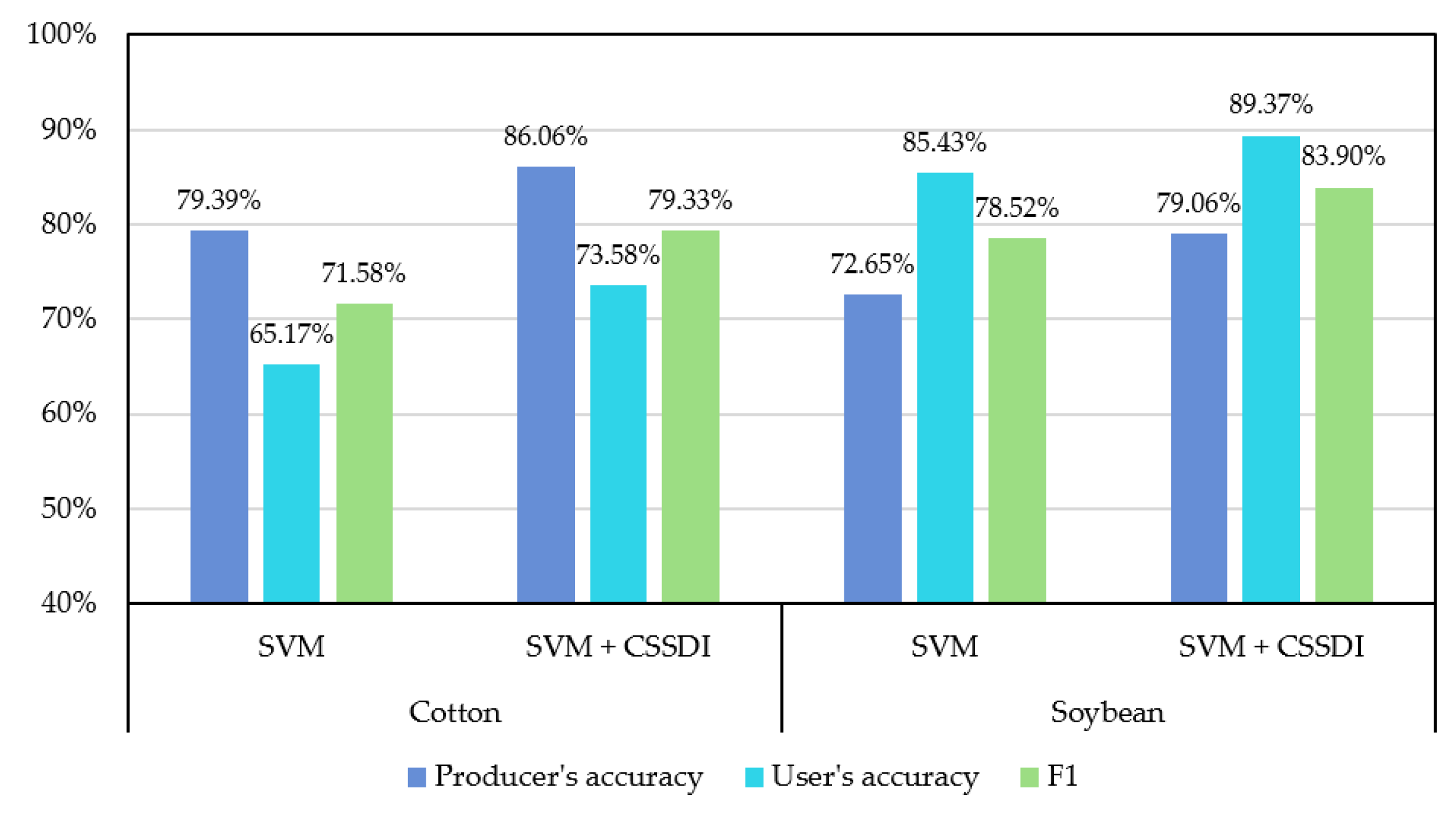

4.2. Improved Results of Cotton Extraction Based on CSSDI

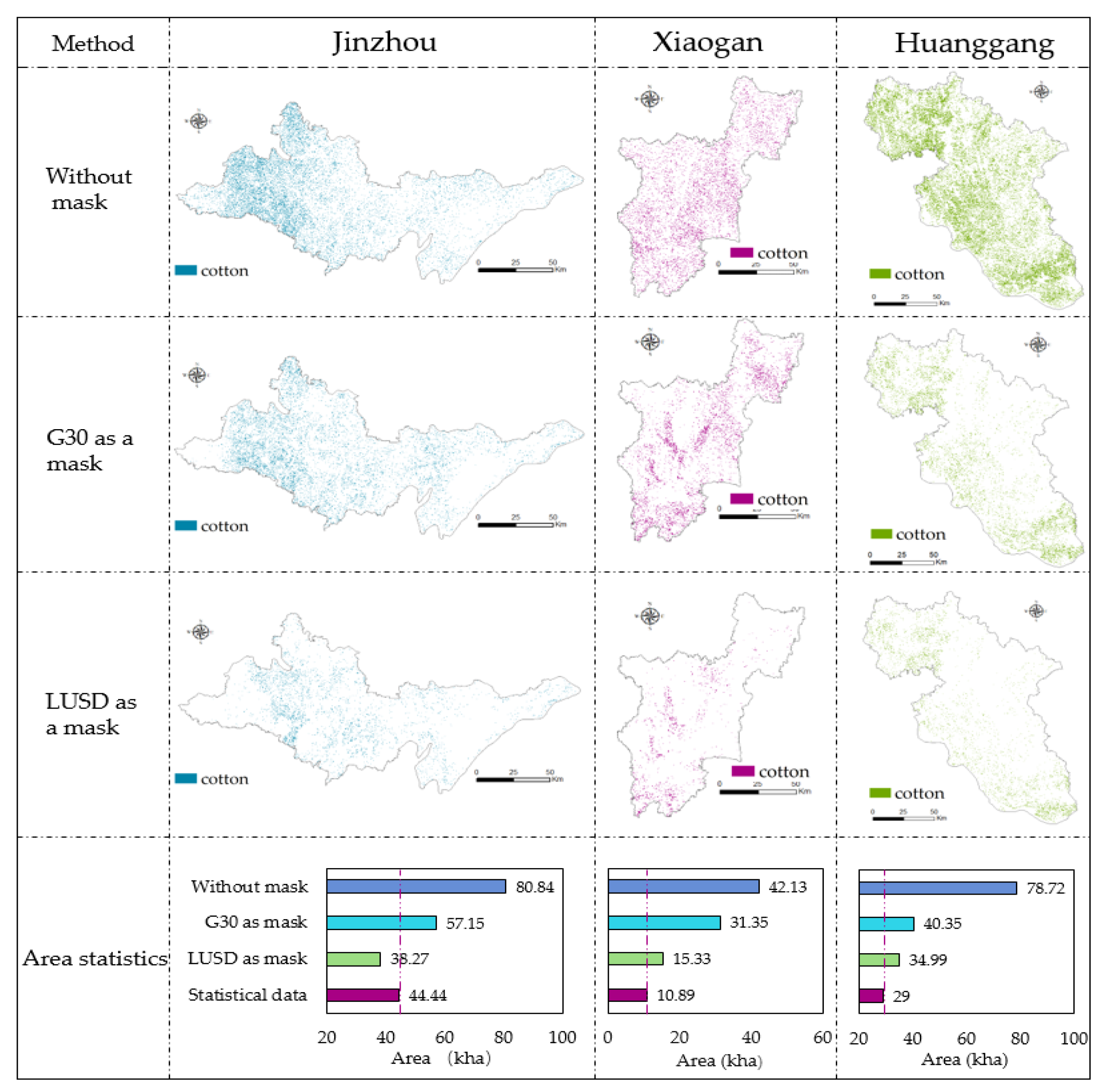

4.3. Comparison of Different Spatial Constraint Methods

5. Discussion

5.1. Necessity of Feature Selection and Combination

5.2. Advantages of CSSDI for Separating Cotton and Soybean

5.3. Balance between F1 Scores and Area Errors Using LUSD

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| No. | Vegetation Index | Abbreviation | Formula | Reference |
|---|---|---|---|---|
| 1 | Excess green index | ExG | 2 × g − r − b | [45] |
| 2 | Excess red index | ExR | 1.4 × r − g | [46] |
| 3 | Excess green–Excess red | ExG–ExR | ExG − ExR | [46] |
| 4 | Color index of vegetation | CIVE | r × 0.441 − g × 0.881 + b × 0.385 + 18.78745 | [47] |
| 5 | Normalized difference index | NDI | (g − r)/(g + r) | [48] |
| 6 | RGB vegetation index | RGBVI | (g² − b × r)/(g² + b × r) | [49] |
| 7 | Normalized difference vegetation index | NDVI | (nir − r)/(nir + r) | [50] |
| 8 | Difference vegetation index | DVI | nir − r | [51] |
| 9 | Renormalized difference vegetation index | RDVI | √(NDVI × DVI) | [52] |
| 10 | Ratio vegetation index | RVI | nir/r | [53] |
| 11 | Modified chlorophyll absorption in reflectance index | MCARI2d | (((nir − r) × 2.5 − (nir − g) × 1.3) × 1.5)/√((2 × nir + 1) × (2 × nir + 1) − (6 × nir − 5 × √(r)) − 0.5) | [54] |
| 12 | Modified soil adjusted vegetation index | MSAVI2d | (2 × nir + 1 − √((2 × nir + 1) × (2 × nir + 1) − 8 × (nir − r))) × 0.5 | [55] |
| 13 | Modified triangular vegetation index | MTVI2d | ((nir − g) × 1.2 − (r − g) × 2.5) × 1.5/√((2 × nir + 1) × (2 × nir + 1) − (6 × nir − 5 × √(r)) − 0.5) | [56] |
| 14 | Square root of (IR/R) | SQRT(IR/R) | √(nir/r) | [57] |
| 15 | Soil adjusted vegetation index | SAVI | ((nir − r) × 1.5)/(nir + r + 0.5) | [58] |
| 16 | Transformed normalized difference vegetation index | TNDVI | √((nir − r)/(nir + r) + 0.5) | [59] |
| 17 | Enhanced vegetation index | EVI | 2.5 × (nir − r)/(nir + 6 × r − 7.5 × b + 1) | [60] |
| 18 | Normalized difference water index | NDWI | (g − nir)/(g + nir) | [61] |
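As an illustration of how the formulas in this table translate into code, the following is a minimal sketch that evaluates a handful of the indices for a single pixel. It assumes that `b`, `g`, `r`, and `nir` are surface reflectance values on a 0 to 1 scale; the function names and the sample pixel are hypothetical and are not taken from the article.

```python
import math


def ndvi(nir, r):
    """Normalized difference vegetation index: (nir - r) / (nir + r)."""
    return (nir - r) / (nir + r)


def dvi(nir, r):
    """Difference vegetation index: nir - r."""
    return nir - r


def rdvi(nir, r):
    """Renormalized difference vegetation index: sqrt(NDVI * DVI)."""
    return math.sqrt(ndvi(nir, r) * dvi(nir, r))


def savi(nir, r):
    """Soil adjusted vegetation index with the 0.5 soil factor from the table."""
    return ((nir - r) * 1.5) / (nir + r + 0.5)


def evi(nir, r, b):
    """Enhanced vegetation index as written in the table."""
    return 2.5 * (nir - r) / (nir + 6 * r - 7.5 * b + 1)


def ndwi(g, nir):
    """Normalized difference water index: (g - nir) / (g + nir)."""
    return (g - nir) / (g + nir)


def exg(r, g, b):
    """Excess green index: 2 * g - r - b."""
    return 2 * g - r - b


# Hypothetical reflectance values for one vegetated pixel (assumption).
pixel = {"b": 0.04, "g": 0.08, "r": 0.05, "nir": 0.45}

indices = {
    "NDVI": ndvi(pixel["nir"], pixel["r"]),
    "RDVI": rdvi(pixel["nir"], pixel["r"]),
    "SAVI": savi(pixel["nir"], pixel["r"]),
    "EVI": evi(pixel["nir"], pixel["r"], pixel["b"]),
    "NDWI": ndwi(pixel["g"], pixel["nir"]),
    "ExG": exg(pixel["r"], pixel["g"], pixel["b"]),
}

for name, value in indices.items():
    print(f"{name}: {value:.4f}")
```

The ratio-based indices are undefined when their denominator is zero (for example, `nir + r = 0` for NDVI), so a production version would need to mask such pixels first.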

Appendix B

| No. | Jingzhou | Xiaogan | Huanggang (without CSSDI) | Huanggang (with CSSDI) |
|---|---|---|---|---|
| 1 | 87.15% | 78.76% | 70.97% | 78.69% |
| 2 | 87.43% | 79.46% | 71.13% | 75.46% |
| 3 | 85.07% | 84.99% | 71.18% | 79.12% |
| 4 | 87.04% | 75.21% | 77.57% | 81.26% |
| 5 | 87.96% | 82.13% | 66.03% | 82.11% |
| Average F1 | 86.93% | 80.11% | 71.58% | 79.33% |

| Classified data (rows) vs. reference data (columns), pixels | Cotton | Soybean | Corn | Other crop | Total |
|---|---|---|---|---|---|
| Cotton | 156 | 11 | 20 | 3 | 190 |
| Soybean | 3 | 173 | 8 | 41 | 225 |
| Corn | 1 | 36 | 81 | 7 | 125 |
| Other crop | 8 | 35 | 18 | 113 | 174 |
| Total | 168 | 255 | 127 | 164 | 714 |

| Classified data (rows) vs. reference data (columns), pixels | Cotton | Soybean | Corn | Other crop | Total |
|---|---|---|---|---|---|
| Cotton | 469 | 45 | 57 | 94 | 665 |
| Soybean | 19 | 108 | 9 | 16 | 152 |
| Corn | 27 | 34 | 182 | 27 | 270 |
| Other crop | 11 | 29 | 14 | 168 | 222 |
| Total | 526 | 216 | 262 | 305 | 1309 |

| Classified data (rows) vs. reference data (columns), pixels | Cotton | Soybean | Corn | Other crop | Total |
|---|---|---|---|---|---|
| Cotton | 88 | 46 | 1 | 2 | 137 |
| Soybean | 20 | 124 | 0 | 0 | 144 |
| Corn | 0 | 0 | 251 | 89 | 340 |
| Other crop | 3 | 0 | 22 | 83 | 108 |
| Total | 111 | 170 | 274 | 174 | 729 |

| Classified data (rows) vs. reference data (columns), pixels | Cotton | Soybean | Corn | Other crop | Total |
|---|---|---|---|---|---|
| Cotton | 96 | 36 | 1 | 0 | 133 |
| Soybean | 12 | 134 | 0 | 2 | 148 |
| Corn | 0 | 0 | 251 | 89 | 340 |
| Other crop | 3 | 0 | 22 | 83 | 108 |
| Total | 111 | 170 | 274 | 174 | 729 |
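To make the link between these confusion matrices and the accuracy figures concrete, here is a minimal sketch that derives producer's accuracy, user's accuracy, F1, overall accuracy, and kappa from the first matrix above. It assumes the standard definitions of these metrics (rows are classified labels, columns are reference labels); the function and variable names are illustrative and not taken from the article.

```python
def class_metrics(matrix, k):
    """Return (producer's accuracy, user's accuracy, F1) for class index k.

    Rows of `matrix` are classified labels, columns are reference labels.
    """
    correct = matrix[k][k]
    classified_total = sum(matrix[k])                # row total
    reference_total = sum(row[k] for row in matrix)  # column total
    pa = correct / reference_total
    ua = correct / classified_total
    f1 = 2 * pa * ua / (pa + ua)
    return pa, ua, f1


def overall_accuracy(matrix):
    """Share of pixels on the diagonal."""
    total = sum(sum(row) for row in matrix)
    diagonal = sum(matrix[i][i] for i in range(len(matrix)))
    return diagonal / total


def kappa(matrix):
    """Cohen's kappa: agreement corrected for chance agreement."""
    total = sum(sum(row) for row in matrix)
    observed = overall_accuracy(matrix)
    expected = sum(
        sum(matrix[i]) * sum(row[i] for row in matrix)
        for i in range(len(matrix))
    ) / total ** 2
    return (observed - expected) / (1 - expected)


# First confusion matrix; class order: cotton, soybean, corn, other crop.
first_matrix = [
    [156, 11, 20, 3],
    [3, 173, 8, 41],
    [1, 36, 81, 7],
    [8, 35, 18, 113],
]

pa, ua, f1 = class_metrics(first_matrix, 0)  # index 0 = cotton
print(f"PA={pa:.2%} UA={ua:.2%} F1={f1:.2%}")  # PA=92.86% UA=82.11% F1=87.15%
print(f"OA={overall_accuracy(first_matrix):.2%} kappa={kappa(first_matrix):.2f}")
```

The cotton F1 of 87.15% obtained this way coincides with the first Jingzhou entry in the F1 table of Appendix B.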

Appendix C

References

1. Ren, Y.; Meng, Y.; Huang, W.; Ye, H.; Han, Y.; Kong, W.; Zhou, X.; Cui, B.; Xing, N.; Guo, A.; et al. Novel vegetation indices for cotton boll opening status estimation using Sentinel-2 data. Remote Sens. 2020, 12, 1712.
2. Mao, H.; Meng, J.; Ji, F.; Zhang, Q.; Fang, H. Comparison of machine learning regression algorithms for cotton leaf area index retrieval using Sentinel-2 spectral bands. Appl. Sci. 2019, 9, 1459.
3. Lu, X.; Jia, X.; Niu, J. The present situation and prospects of cotton industry development in China. Sci. Agric. Sin. 2018, 51, 26–36.
4. Xun, L.; Zhang, J.; Cao, D.; Yang, S.; Yao, F. A novel cotton mapping index combining Sentinel-1 SAR and Sentinel-2 multispectral imagery. ISPRS J. Photogramm. Remote Sens. 2021, 181, 148–166.
5. Yohannes, H.; Soromessa, T.; Argaw, M.; Dewan, A. Impact of landscape pattern changes on hydrological ecosystem services in the Beressa watershed of the Blue Nile Basin in Ethiopia. Sci. Total Environ. 2021, 793, 148559.
6. Huang, Y.; Chen, Z.; Tao, Y.; Huang, X.; Gu, X. Agricultural remote sensing big data: Management and applications. J. Integr. Agric. 2018, 17, 1915–1931.
7. Chlingaryan, A.; Sukkarieh, S.; Whelan, B. Machine learning approaches for crop yield prediction and nitrogen status estimation in precision agriculture: A review. Comput. Electron. Agric. 2018, 151, 61–69.
8. Wang, N.; Zhai, Y.; Zhang, L. Automatic cotton mapping using time series of Sentinel-2 images. Remote Sens. 2021, 13, 1355.
9. Chaves, M.E.D.; de Carvalho Alves, M.; De Oliveira, M.S.; Sáfadi, T. A geostatistical approach for modeling soybean crop area and yield based on census and remote sensing data. Remote Sens. 2018, 10, 680.
10. Yang, L.; Wang, L.; Huang, J.; Mansaray, L.R.; Mijiti, R. Monitoring policy-driven crop area adjustments in northeast China using Landsat-8 imagery. Int. J. Appl. Earth Obs. Geoinf. 2019, 82, 101892.
11. Li, X.-Y.; Li, X.; Fan, Z.; Mi, L.; Kandakji, T.; Song, Z.; Li, D.; Song, X.-P. Civil war hinders crop production and threatens food security in Syria. Nat. Food 2022, 3, 38–46.
12. Ahmad, F.; Shafique, K.; Ahmad, S.R.; Ur-Rehman, S.; Rao, M. The utilization of MODIS and landsat TM/ETM+ for cotton fractional yield estimation in Burewala. Glob. J. Hum. Soc. Sci. 2013, 13, 7.
13. Stoian, A.; Poulain, V.; Inglada, J.; Poughon, V.; Derksen, D. Land cover maps production with high resolution satellite image time series and convolutional neural networks: Adaptations and limits for operational systems. Remote Sens. 2019, 11, 1986.
14. Jia, X.; Wang, M.; Khandelwal, A.; Karpatne, A.; Kumar, V. Recurrent Generative Networks for Multi-Resolution Satellite Data: An Application in Cropland Monitoring. In Proceedings of the 28th International Joint Conference on Artificial Intelligence, Macao, China, 10–16 August 2019.
15. Yi, Q. Remote estimation of cotton LAI using Sentinel-2 multispectral data. Trans. CSAE 2019, 35, 189–197.
16. Xu, L.; Ming, D.; Zhou, W.; Bao, H.; Chen, Y.; Ling, X. Farmland extraction from high spatial resolution remote sensing images based on stratified scale pre-estimation. Remote Sens. 2019, 11, 108.
17. Liu, H.; Whiting, M.L.; Ustin, S.L.; Zarco-Tejada, P.J.; Huffman, T.; Zhang, X. Maximizing the relationship of yield to site-specific management zones with object-oriented segmentation of hyperspectral images. Precis. Agric. 2018, 19, 348–364.
18. Hao, P.; Di, L.; Zhang, C.; Guo, L. Transfer learning for crop classification with Cropland Data Layer data (CDL) as training samples. Sci. Total Environ. 2020, 733, 138869.
19. Mazzia, V.; Khaliq, A.; Chiaberge, M. Improvement in land cover and crop classification based on temporal features learning from Sentinel-2 data using recurrent-convolutional neural network (R-CNN). Appl. Sci. 2020, 10, 238.
20. Zhu, Y.; Zhang, Y.; Zhang, X.; Lin, Y.; Geng, J.; Ying, Y.; Rao, X. Real-time road recognition between cotton ridges based on semantic segmentation. J. Zhejiang Agric. Sci. 2021, 62, 1721–1725.
21. Chen, K.; Zhu, L.; Song, P.; Tian, X.; Huang, C.; Nie, X.; Xiao, A.; He, L. Recognition of cotton terminal bud in field using improved Faster R-CNN by integrating dynamic mechanism. Trans. CSAE 2021, 37, 161–168.
22. Crane-Droesch, A. Machine learning methods for crop yield prediction and climate change impact assessment in agriculture. Environ. Res. Lett. 2018, 13, 114003.
23. Junquera, V.; Meyfroidt, P.; Sun, Z.; Latthachack, P.; Grêt-Regamey, A. From global drivers to local land-use change: Understanding the northern Laos rubber boom. Environ. Sci. Policy 2020, 109, 103–115.
24. Yao, Y.; Yan, X.; Luo, P.; Liang, Y.; Ren, S.; Hu, Y.; Han, J.; Guan, Q. Classifying land-use patterns by integrating time-series electricity data and high-spatial resolution remote sensing imagery. Int. J. Appl. Earth Obs. Geoinf. 2022, 106, 102664.
25. Lu, J.; Huang, J.; Wang, L.; Pei, Y. Paddy rice planting information extraction based on spatial and temporal data fusion approach in jianghan plain. Resour. Environ. Yangtze Basin 2017, 26, 874–881.
26. Zhang, J.; Wu, H. Research on cotton area identification of Changji city based on high revolution remote sensing data. Tianjin Agric. Sci. 2017, 23, 55–60.
27. Zhang, C.; Zhang, H.; Du, J.; Zhang, L. Automated paddy rice extent extraction with time stacks of Sentinel data: A case study in Jianghan plain, Hubei, China. In Proceedings of the 7th International Conference on Agro-Geoinformatics, Hangzhou, China, 6–9 August 2018.
28. Son, N.-T.; Chen, C.-F.; Chen, C.-R.; Minh, V.-Q. Assessment of Sentinel-1A data for rice crop classification using random forests and support vector machines. Geocarto Int. 2018, 33, 587–601.
29. Htitiou, A.; Boudhar, A.; Lebrini, Y.; Hadria, R.; Lionboui, H.; Benabdelouahab, T. A comparative analysis of different phenological information retrieved from Sentinel-2 time series images to improve crop classification: A machine learning approach. Geocarto Int. 2020, 1–24.
30. Zhang, J.; He, Y.; Yuan, L.; Liu, P.; Zhou, X.; Huang, Y. Machine learning-based spectral library for crop classification and status monitoring. Agronomy 2019, 9, 496.
31. Li, X.; Li, H.; Chen, D.; Liu, Y.; Liu, S.; Liu, C.; Hu, G. Multiple classifiers combination method for tree species identification based on GF-5 and GF-6. Sci. Silvae Sin. 2020, 56, 93–104.
32. Chen, Y.; Xin, M.; Liu, J. Cotton growth monitoring and yield estimation based on assimilation of remote sensing data and crop growth model. In Proceedings of the International Conference on Geoinformatics, Wuhan, China, 19–21 June 2015.
33. Shen, Y.; Jiang, C.; Chan, K.L.; Hu, C.; Yao, L. Estimation of field-level NOx emissions from crop residue burning using remote sensing data: A case study in Hubei, China. Remote Sens. 2021, 13, 404.
34. She, B.; Yang, Y.; Zhao, Z.; Huang, L.; Liang, D.; Zhang, D. Identification and mapping of soybean and maize crops based on Sentinel-2 data. Int. J. Agric. Biol. Eng. 2020, 13, 171–182.
35. Yang, K.; Gong, Y.; Fang, S.; Duan, B.; Yuan, N.; Peng, Y.; Wu, X.; Zhu, R. Combining spectral and texture features of UAV images for the remote estimation of rice LAI throughout the entire growing season. Remote Sens. 2021, 13, 3001.
36. Kim, H.-O.; Yeom, J.-M. Effect of red-edge and texture features for object-based paddy rice crop classification using RapidEye multi-spectral satellite image data. Int. J. Remote Sens. 2014, 35, 7046–7068.
37. Kwak, G.-H.; Park, N.-W. Impact of texture information on crop classification with machine learning and UAV images. Appl. Sci. 2019, 9, 643.
38. Mantero, P.; Moser, G.; Serpico, S.B. Partially supervised classification of remote sensing images through SVM-based probability density estimation. IEEE Trans. Geosci. Remote Sens. 2005, 43, 559–570.
39. Wan, S.; Chang, S.-H. Crop classification with WorldView-2 imagery using Support Vector Machine comparing texture analysis approaches and grey relational analysis in Jianan Plain, Taiwan. Int. J. Remote Sens. 2019, 40, 8076–8092.
40. Chakhar, A.; Ortega-Terol, D.; Hernández-López, D.; Ballesteros, R.; Ortega, J.F.; Moreno, M.A. Assessing the accuracy of multiple classification algorithms for crop classification using Landsat-8 and Sentinel-2 data. Remote Sens. 2020, 12, 1735.
41. Immitzer, M.; Vuolo, F.; Atzberger, C. First experience with Sentinel-2 data for crop and tree species classifications in central Europe. Remote Sens. 2016, 8, 166.
42. Xiao, C.; Li, P.; Feng, Z.; Liu, Y.; Zhang, X. Sentinel-2 red-edge spectral indices (RESI) suitability for mapping rubber boom in Luang Namtha Province, northern Lao PDR. Int. J. Appl. Earth Obs. Geoinf. 2020, 93, 102176.
43. Kang, Y.; Hu, X.; Meng, Q.; Zou, Y.; Zhang, L.; Liu, M.; Zhao, M. Land cover and crop classification based on red edge indices features of GF-6 WFV time series data. Remote Sens. 2021, 13, 4522.
44. Kang, L.; Ye, P.; Li, Y.; Doermann, D. Convolutional neural networks for no-reference image quality assessment. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014.
45. Woebbecke, D.M.; Meyer, G.E.; Von Bargen, K.; Mortensen, D.A. Color indices for weed identification under various soil, residue, and lighting conditions. Trans. ASAE 1995, 38, 259–269.
46. Meyer, G.E.; Hindman, T.W.; Laksmi, K. Machine vision detection parameters for plant species identification. In Proceedings of the SPIE on Precision Agriculture and Biological Quality, Boston, MA, USA, 14 January 1999.
47. Kataoka, T.; Kaneko, T.; Okamoto, H.; Hata, S. Crop growth estimation system using machine vision. In Proceedings of the IEEE/ASME International Conference on Advanced Intelligent Mechatronics, Kobe, Japan, 20–24 July 2003.
48. Gitelson, A.A.; Kaufman, Y.J.; Stark, R.; Rundquist, D. Novel algorithms for remote estimation of vegetation fraction. Remote Sens. Environ. 2002, 80, 76–87.
49. Bendig, J.; Yu, K.; Aasen, H.; Bolten, A.; Bennertz, S.; Broscheit, J.; Gnyp, M.L.; Bareth, G. Combining UAV-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley. Int. J. Appl. Earth Obs. Geoinf. 2015, 39, 79–87.
50. Rouse, J.W.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring vegetation systems in the Great Plains with ERTS. In Proceedings of the Third Symposium on Significant Results Obtained from the First Earth, Washington, DC, USA, 10–14 December 1973.
51. Richardson, A.J.; Everitt, J.H. Using spectral vegetation indices to estimate rangeland productivity. Geocarto Int. 1992, 7, 63–69.
52. Roujean, J.-L.; Breon, F.-M. Estimating PAR absorbed by vegetation from bidirectional reflectance measurements. Remote Sens. Environ. 1995, 51, 375–384.
53. Jordan, C.F. Derivation of leaf-area index from quality of light on the forest floor. Ecology 1969, 50, 663–666.
54. Daughtry, C.S.; Walthall, C.; Kim, M.; De Colstoun, E.B.; McMurtrey, J., III. Estimating corn leaf chlorophyll concentration from leaf and canopy reflectance. Remote Sens. Environ. 2000, 74, 229–239.
55. Richardson, A.J.; Wiegand, C. Distinguishing vegetation from soil background information. Photogramm. Eng. Remote Sens. 1977, 43, 1541–1552.
56. Haboudane, D.; Miller, J.R.; Pattey, E.; Zarco-Tejada, P.J.; Strachan, I.B. Hyperspectral vegetation indices and novel algorithms for predicting green LAI of crop canopies: Modeling and validation in the context of precision agriculture. Remote Sens. Environ. 2004, 90, 337–352.
57. Marzukhi, F.; Said, M.A.M.; Ahmad, A.A. Coconut tree stress detection as an indicator of Red Palm Weevil (RPW) attack using Sentinel data. Int. J. Built Environ. Sust. 2020, 7, 1–9.
58. Huete, A.R. A soil-adjusted vegetation index (SAVI). Remote Sens. Environ. 1988, 25, 295–309.
59. Gowri, L.; Manjula, K. Evaluation of various vegetation indices for multispectral satellite images. Int. J. Innov. Technol. Explor. Eng. 2019, 8, 3494–3500.
60. Liu, H.Q.; Huete, A. A feedback based modification of the NDVI to minimize canopy background and atmospheric noise. IEEE Trans. Geosci. Remote Sens. 1995, 33, 457–465.
61. McFeeters, S.K. The use of the Normalized Difference Water Index (NDWI) in the delineation of open water features. Int. J. Remote Sens. 1996, 17, 1425–1432.

| Region | Cotton | Soybean | Rice | Corn |
|---|---|---|---|---|
| Jingzhou | 4.19% | 3.84% | 42.92% | 3.12% |
| Xiaogan | 3.16% | 1.54% | 72.13% | 3.48% |
| Huanggang | 7.42% | 3.86% | 80.20% | 2.72% |
| Hubei | 2.08% | 2.71% | 29.26% | 9.31% |

| Month | Period | Cotton [32,33] | Soybean [34] | Rice [33,35] | Corn [33] |

|---|---|---|---|---|---|

| April | Early | ||||

| Middle | Sowing | ||||

| Late | Seedling | Sowing | |||

| May | Early | ||||

| Middle | Seedling | ||||

| Late | Budding | ||||

| June | Early | Sowing | Planting | Sowing | |

| Middle | Tillering | ||||

| Late | Maturity | Seedling | |||

| July | Early | Blooming | Jointing | Jointing | |

| Middle | |||||

| Late | Heading/ Booting | ||||

| August | Early | Booting | |||

| Middle | Blooming | ||||

| Late | Filling | Filling | |||

| September | Early | Harvest | |||

| Middle | Harvest | Harvest | |||

| Late | Harvest | ||||

| October | Early | ||||

| Middle | |||||

| Late |

| Category | Jingzhou: No. | Jingzhou: Average Area (m²) | Jingzhou: Total Area (m²) | Xiaogan: No. | Xiaogan: Average Area (m²) | Xiaogan: Total Area (m²) | Huanggang: No. | Huanggang: Average Area (m²) | Huanggang: Total Area (m²) |
|---|---|---|---|---|---|---|---|---|---|
| Cotton | 32 | 2603 | 83,310 | 62 | 4242 | 263,028 | 38 | 1460 | 55,496 |
| Soybean | 46 | 2670 | 122,839 | 27 | 4019 | 108,520 | 20 | 4261 | 85,216 |
| Corn | 12 | 5116 | 61,392 | 27 | 4845 | 130,812 | 19 | 7220 | 137,176 |
| Other crop | 15 | 5453 | 81,790 | 25 | 6087 | 152,185 | 16 | 5429 | 86,861 |

| No. | Classification Scheme |
|---|---|
| 1 | Spectral + Texture + Vegetation index |
| 2 | Spectral + Texture |
| 3 | Spectral + Vegetation index |
| 4 | Texture + Vegetation index |
| 5 | Vegetation index |
| 6 | Spectral |
| 7 | Texture |
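The seven schemes in this table are exactly the non-empty subsets of the three feature groups (2³ − 1 = 7). The sketch below enumerates them in Python; the variable names are illustrative, and the order of the single-group schemes differs slightly from the numbering in the table.

```python
from itertools import combinations

# The three feature groups named in the classification schemes.
feature_groups = ["Spectral", "Texture", "Vegetation index"]

# Every non-empty subset of the groups, largest combination first.
schemes = [
    " + ".join(combo)
    for size in range(len(feature_groups), 0, -1)
    for combo in combinations(feature_groups, size)
]

for number, scheme in enumerate(schemes, start=1):
    print(number, scheme)
```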

| Method | Evaluation Index | Jingzhou | Xiaogan | Huanggang |
|---|---|---|---|---|
| Without mask | Producer’s accuracy | 93.29% | 81.22% | 85.45% |
| | User’s accuracy | 75.18% | 75.68% | 76.50% |
| | F1 | 83.26% | 78.35% | 80.73% |
| | OA | 85.31% | 86.03% | 95.34% |
| | kappa | 0.82 | 0.85 | 0.94 |
| | Area error | 81.91% | 286.87% | 171.45% |
| G30 as a mask | Producer’s accuracy | 85.98% | 80.38% | 67.67% |
| | User’s accuracy | 74.80% | 41.72% | 69.12% |
| | F1 | 80.00% | 54.93% | 68.39% |
| | OA | 80.14% | 51.65% | 74.16% |
| | kappa | 0.75 | 0.37 | 0.63 |
| | Area error | 28.60% | 187.88% | 39.14% |
| LUSD as a mask | Producer’s accuracy | 93.29% | 85.87% | 86.06% |
| | User’s accuracy | 81.38% | 76.35% | 73.58% |
| | F1 | 86.93% | 80.83% | 79.33% |
| | OA | 72.05% | 73.02% | 75.25% |
| | kappa | 0.60 | 0.61 | 0.65 |
| | Area error | 13.89% | 40.77% | 20.66% |
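The F1 rows of this table can be cross-checked against the producer's accuracy (PA) and user's accuracy (UA) rows. The worked example below assumes the usual harmonic-mean definition of F1, which is not reproduced in this excerpt, and uses the Jingzhou values for the LUSD mask:

$$
\mathrm{F1} = \frac{2 \times \mathrm{PA} \times \mathrm{UA}}{\mathrm{PA} + \mathrm{UA}}
= \frac{2 \times 0.9329 \times 0.8138}{0.9329 + 0.8138}
\approx 0.8693
$$

This agrees with the 86.93% reported for Jingzhou. The same calculation with the unmasked Jingzhou values (PA = 93.29%, UA = 75.18%) gives approximately 83.26%, again matching the table.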

| Evaluation Index | LUSD for Mask | LUSD for Sample Augmentation |
|---|---|---|
| Producer’s accuracy | 86.43% | 80.80% |
| User’s accuracy | 73.19% | 76.68% |
| F1 | 79.26% | 78.69% |
| OA | 75.58% | 83.80% |
| kappa | 0.65 | 0.80 |
| Area error | 58.51% | 7.01% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hong, Y.; Li, D.; Wang, M.; Jiang, H.; Luo, L.; Wu, Y.; Liu, C.; Xie, T.; Zhang, Q.; Jahangir, Z. Cotton Cultivated Area Extraction Based on Multi-Feature Combination and CSSDI under Spatial Constraint. Remote Sens. 2022, 14, 1392. https://doi.org/10.3390/rs14061392
