Robust Filter-Based Visual Navigation Solution with Miscalibrated Bi-Monocular or Stereo Cameras

Abstract

1. Introduction

- A loss of depth estimation coverage: a wrong calibration degrades feature matching accuracy, which makes the 3D reconstruction of the environment sparse and can cause obstacles to be missed;

- A loss of accuracy that is almost impossible to detect during the actual mission: the depth estimation is wrong, and so are the resulting localization and 3D reconstruction.
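To give a sense of scale for the second point, the minimal sketch below uses the standard rectified-stereo relation Z = f·B/d, together with the small-angle assumption that a relative yaw error between the two cameras shifts the disparity by roughly f·tan(Δθ) pixels near the image center. The function names and all numerical values (focal length, baseline, depth, yaw error) are illustrative assumptions, not values taken from this paper.

```python
import math


def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Depth of a point seen by a rectified stereo pair: Z = f * B / d."""
    return focal_px * baseline_m / disparity_px


def biased_depth(true_depth_m, focal_px, baseline_m, yaw_error_rad):
    """Depth recovered when one camera has an unmodeled relative yaw error.

    Assumption: near the image center, the yaw error adds a disparity
    bias of about f * tan(yaw_error) pixels (the sign depends on the
    rotation convention; a positive bias is used here).
    """
    true_disparity = focal_px * baseline_m / true_depth_m
    disparity_bias = focal_px * math.tan(yaw_error_rad)
    return depth_from_disparity(focal_px, baseline_m,
                                true_disparity + disparity_bias)


if __name__ == "__main__":
    # Hypothetical rig: 700 px focal length, 12 cm baseline, point at 10 m.
    estimate = biased_depth(10.0, 700.0, 0.12, math.radians(0.1))
    print(f"estimated depth: {estimate:.2f} m")
```

With these hypothetical numbers, a yaw error of only 0.1° pulls the estimate for a point at 10 m down to roughly 8.7 m, and nothing in the stereo measurement alone signals that the result is wrong.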

2. Related Works

3. Mitigation of Stereo Calibration Errors

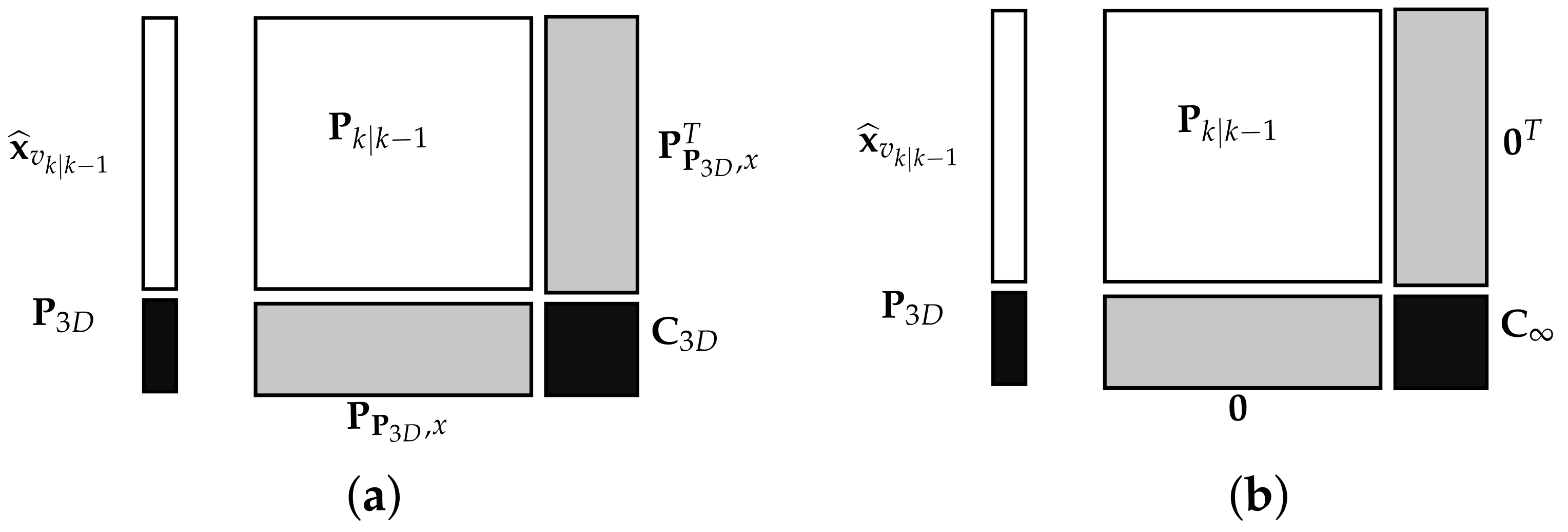

3.1. Background on Linearly Constrained EKF

3.2. Stereo-Based Visual Navigation

3.3. LCEKF Customization for Stereo-Based Visual Navigation

4. Experiments

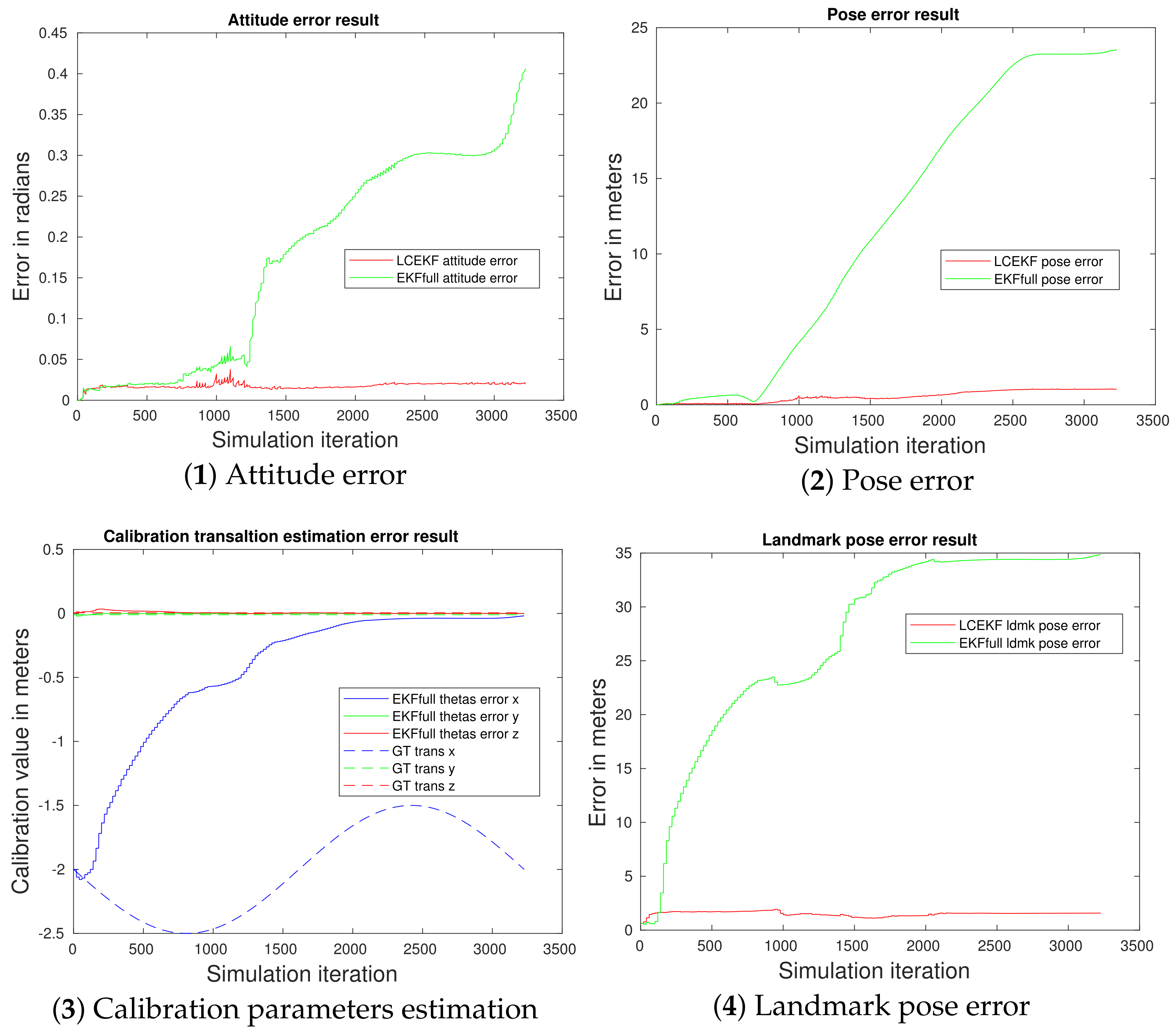

- A standard EKF-based navigation that assumes the given calibration parameters are correct (denoted EKF);

- A standard EKF-based navigation that includes the 6 calibration parameters in its state vector, so that recalibration is performed online (denoted EKFfull).

4.1. State/Measurement Models

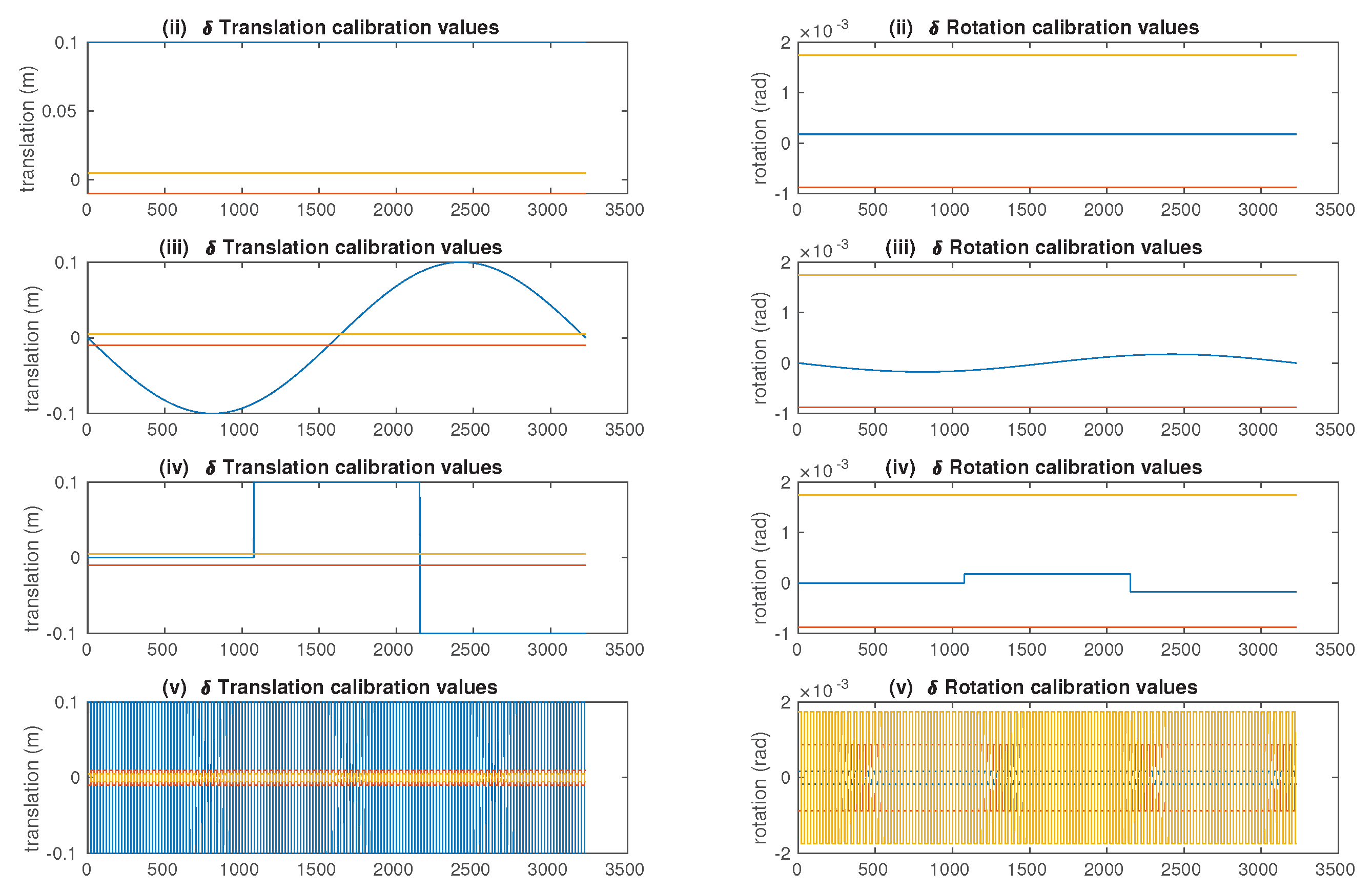

4.2. Noises and Miscalibration/Decalibration Types

- (i) Reference case;

- (ii) Constant noise. This represents the use of a wrong initial calibration, but with no stress during the experiment;

- (iii) Sinusoidal noise. This case represents, for example, the day/night thermal constraint encountered by planetary exploration rovers;

- (iv) Discontinuous noise. This case represents a shock or accident encountered while navigating (the perturbation can be a combination of Heaviside functions);

- (v) Periodic noise. This case simulates a strong vibration of the system for a non-perfectly rigid stereo rig (the perturbation is a periodic square wave).
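The five perturbation shapes listed above can be sketched as simple functions of time. This is only an illustration of the signal shapes: the function names, amplitudes, periods, and step times are hypothetical, the reference case is assumed here to mean no perturbation, and the actual expressions used in the experiments are not reproduced.

```python
import math


def reference(t):
    """Case (i): assumed here to be a zero perturbation."""
    return 0.0


def constant_offset(t, amplitude):
    """Case (ii): wrong initial calibration, constant over time."""
    return amplitude


def sinusoidal_drift(t, amplitude, period_s):
    """Case (iii): slow periodic drift, e.g. a day/night thermal cycle."""
    return amplitude * math.sin(2.0 * math.pi * t / period_s)


def heaviside(t):
    """Unit step: 0 before t = 0, 1 from t = 0 onward."""
    return 1.0 if t >= 0.0 else 0.0


def shock_steps(t, steps):
    """Case (iv): sum of Heaviside steps, given as (time, jump) pairs."""
    return sum(jump * heaviside(t - t0) for t0, jump in steps)


def square_wave(t, amplitude, period_s):
    """Case (v): periodic square wave alternating between +/- amplitude."""
    phase = (t % period_s) / period_s
    return amplitude if phase < 0.5 else -amplitude


if __name__ == "__main__":
    # Hypothetical shocks: +0.01 rad at t = 2 s, then -0.02 rad at t = 8 s.
    shocks = [(2.0, 0.01), (8.0, -0.02)]
    for t in (0.0, 5.0, 9.0):
        print(t, shock_steps(t, shocks), square_wave(t, 0.005, 1.0))
```

Each function returns a scalar perturbation that could be added to one calibration parameter at time `t`; perturbing several parameters at once would simply use one such profile per parameter.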

- For the IMU: ; ;

- For the 2D detections: .

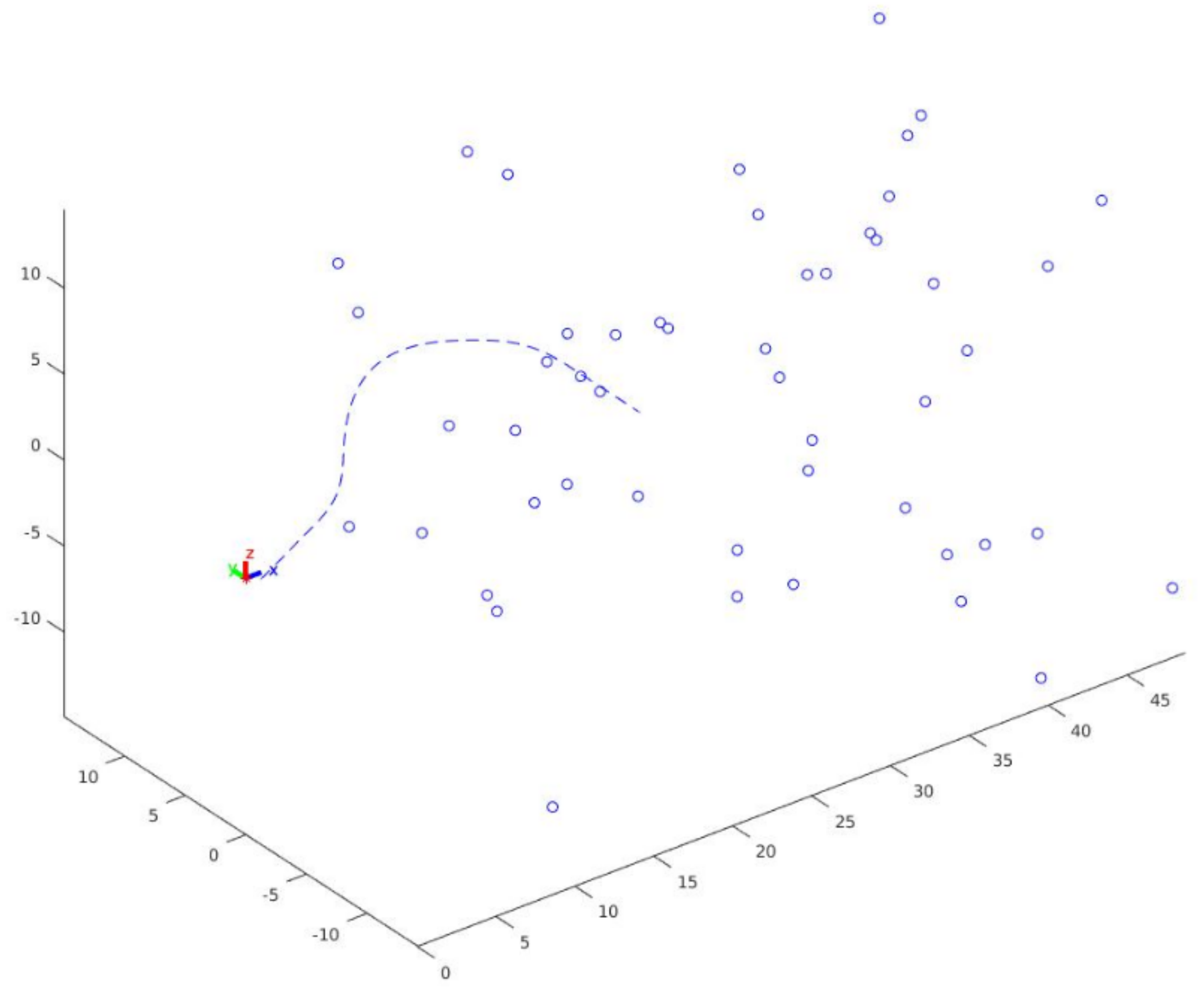

4.3. SLAM Experiment with IMU

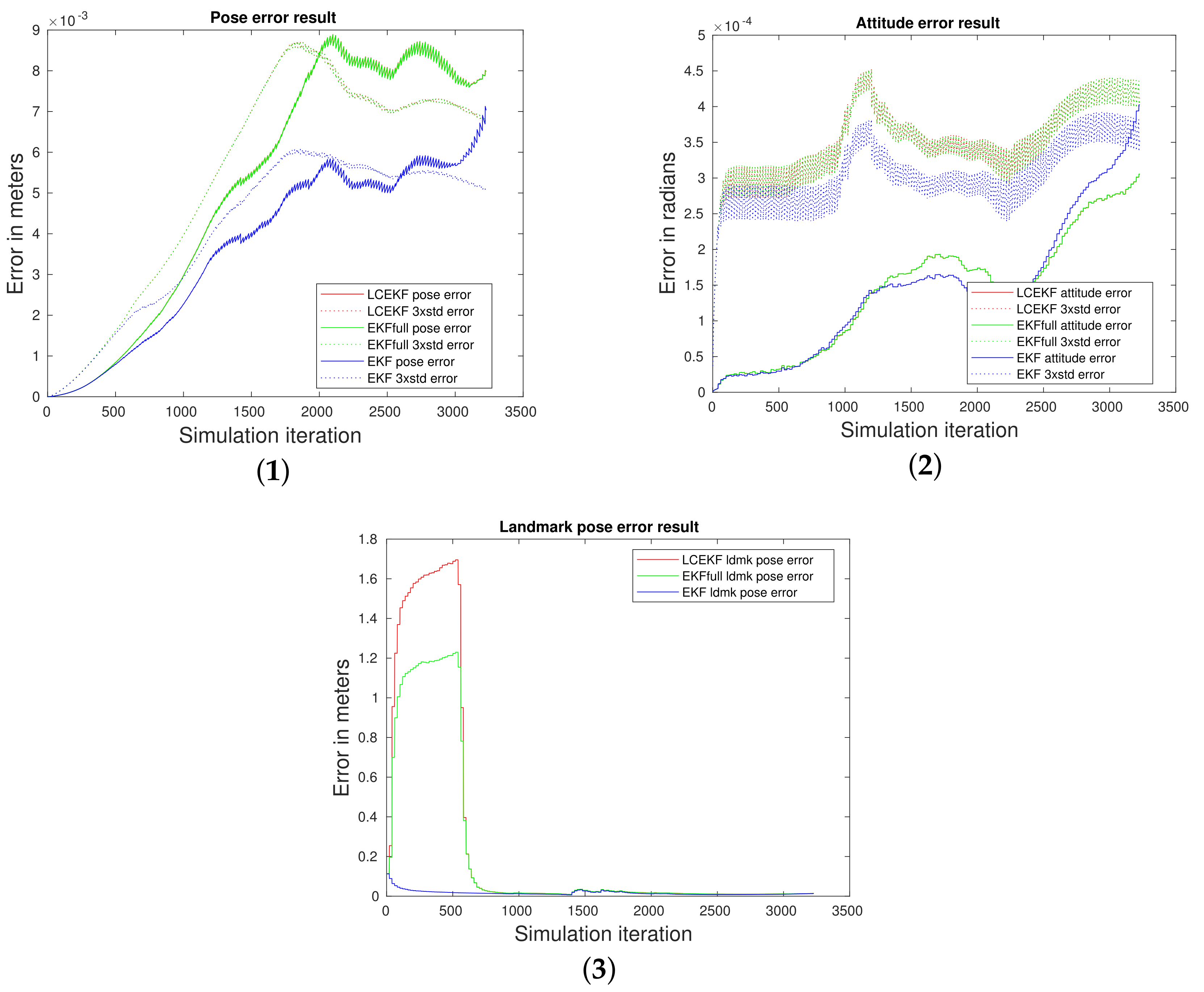

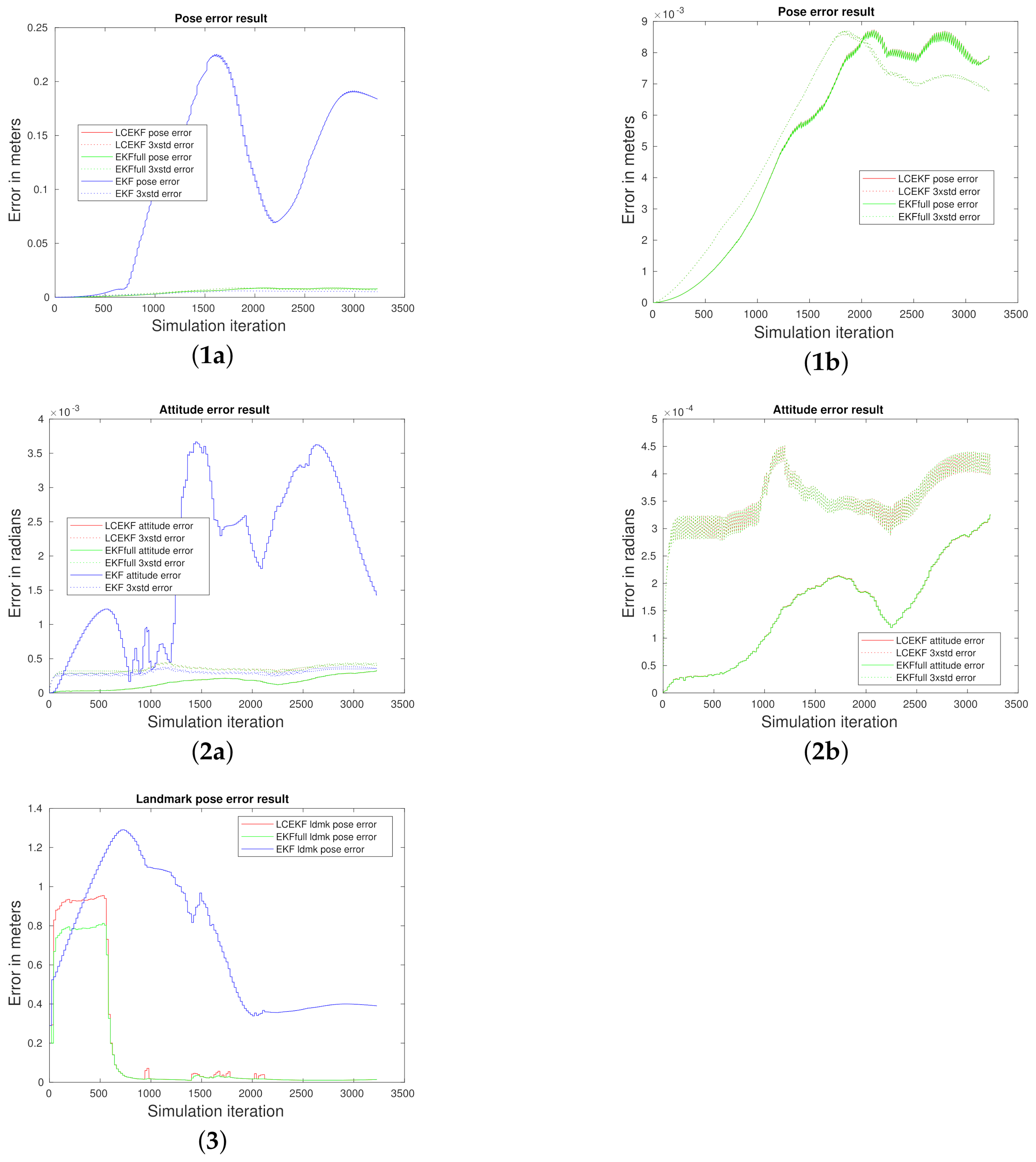

4.3.1. Reference Case Comparison (i)

4.3.2. Noisy Cases (ii), (iii), and (iv)

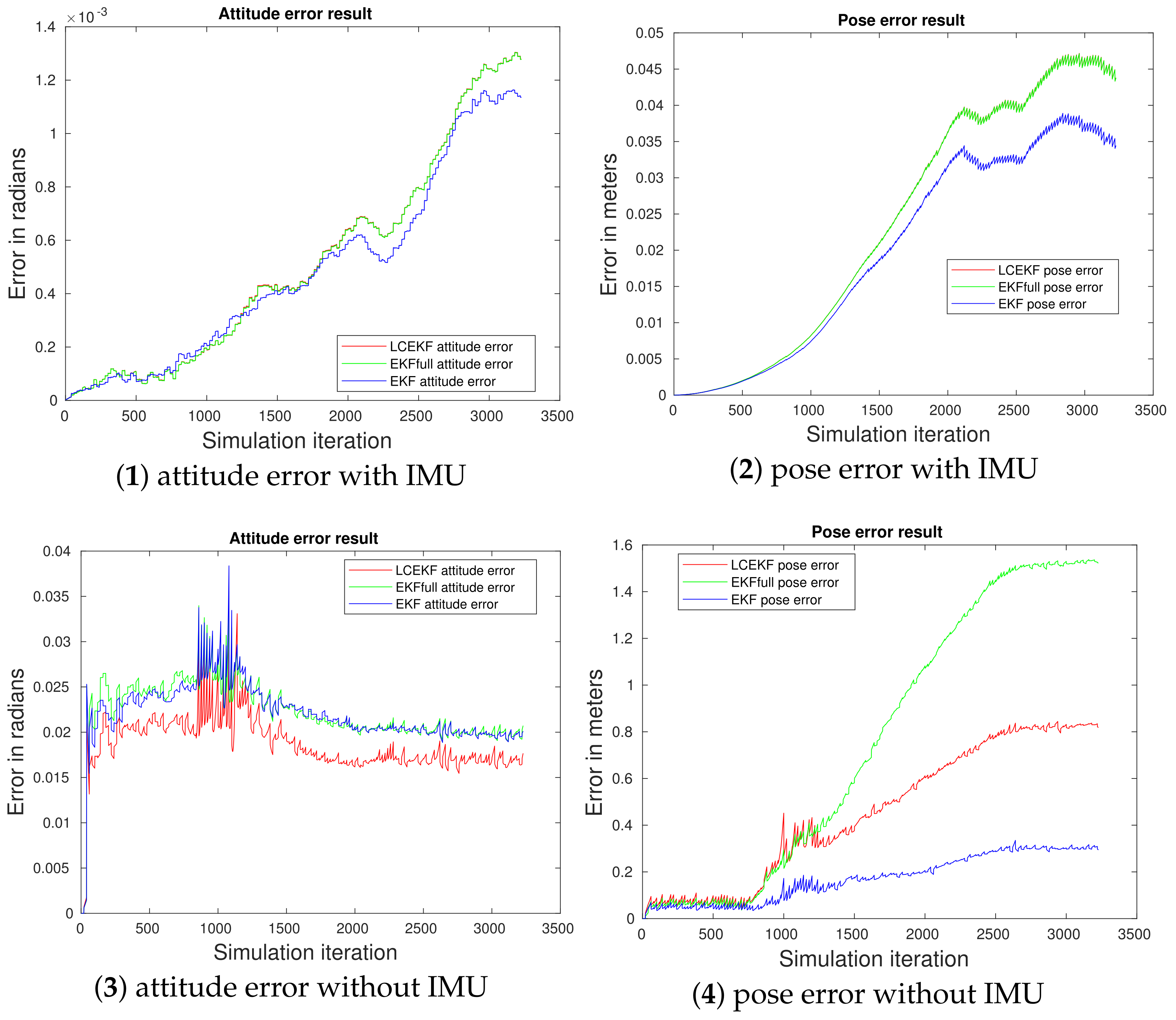

4.3.3. Periodic Noise Case (v)

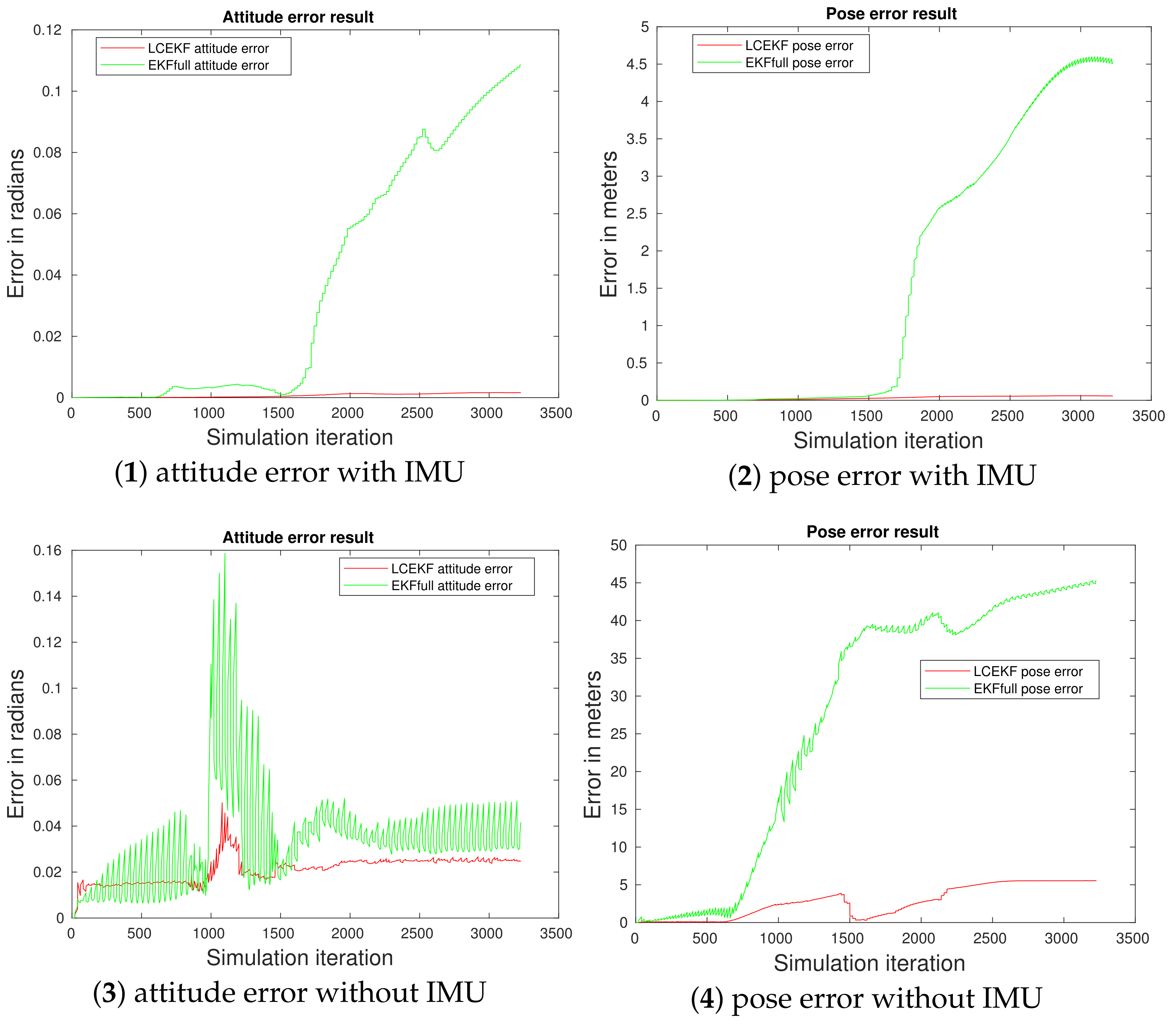

4.4. SLAM Experiment Comparison with and without the IMU

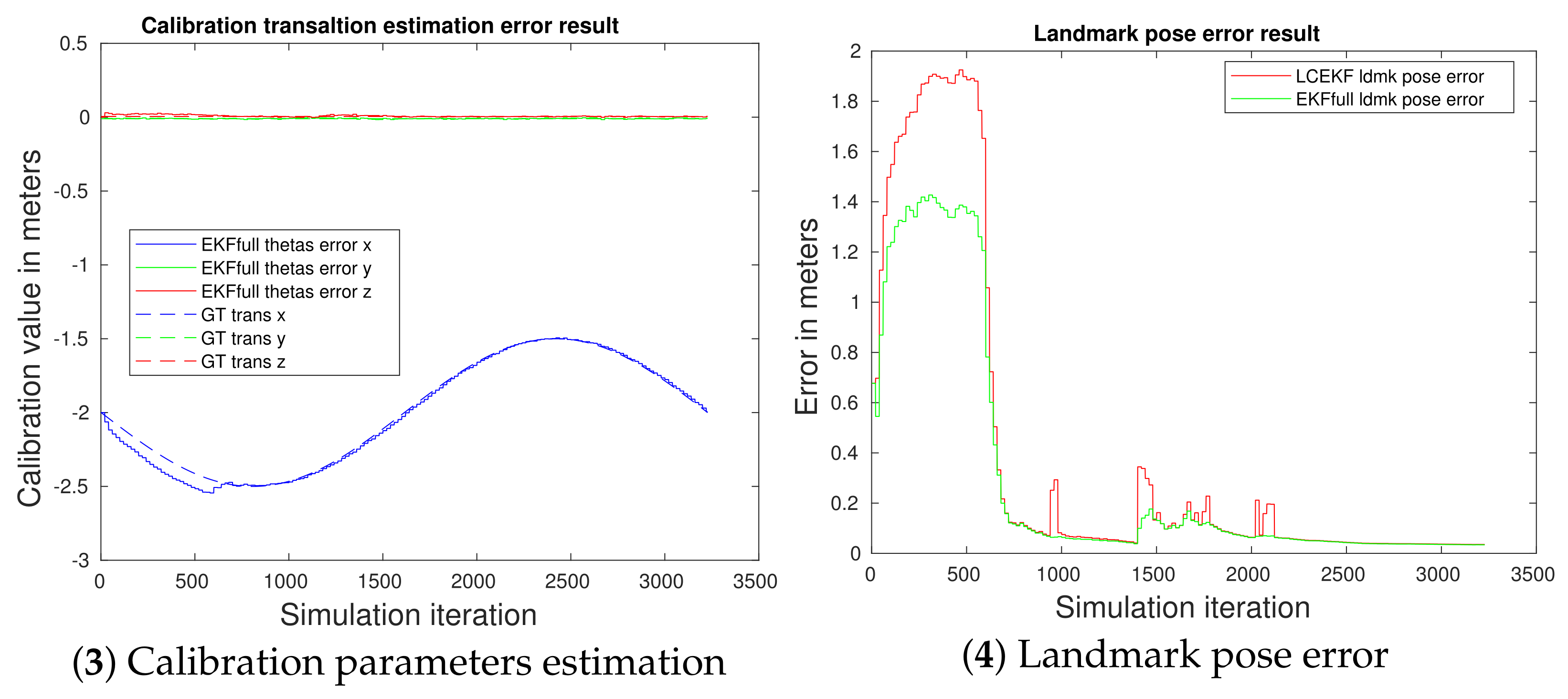

4.5. On the Influence of Kalman Covariance Initialization for Calibration Parameters

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Vivet, D.; Vilà-Valls, J.; Pages, G.; Chaumette, E. Robust Filter-Based Visual Navigation Solution with Miscalibrated Bi-Monocular or Stereo Cameras. Remote Sens. 2022, 14, 1470. https://doi.org/10.3390/rs14061470
