Prediction of Field-Scale Wheat Yield Using Machine Learning Method and Multi-Spectral UAV Data

Abstract

1. Introduction

2. Materials and Methods

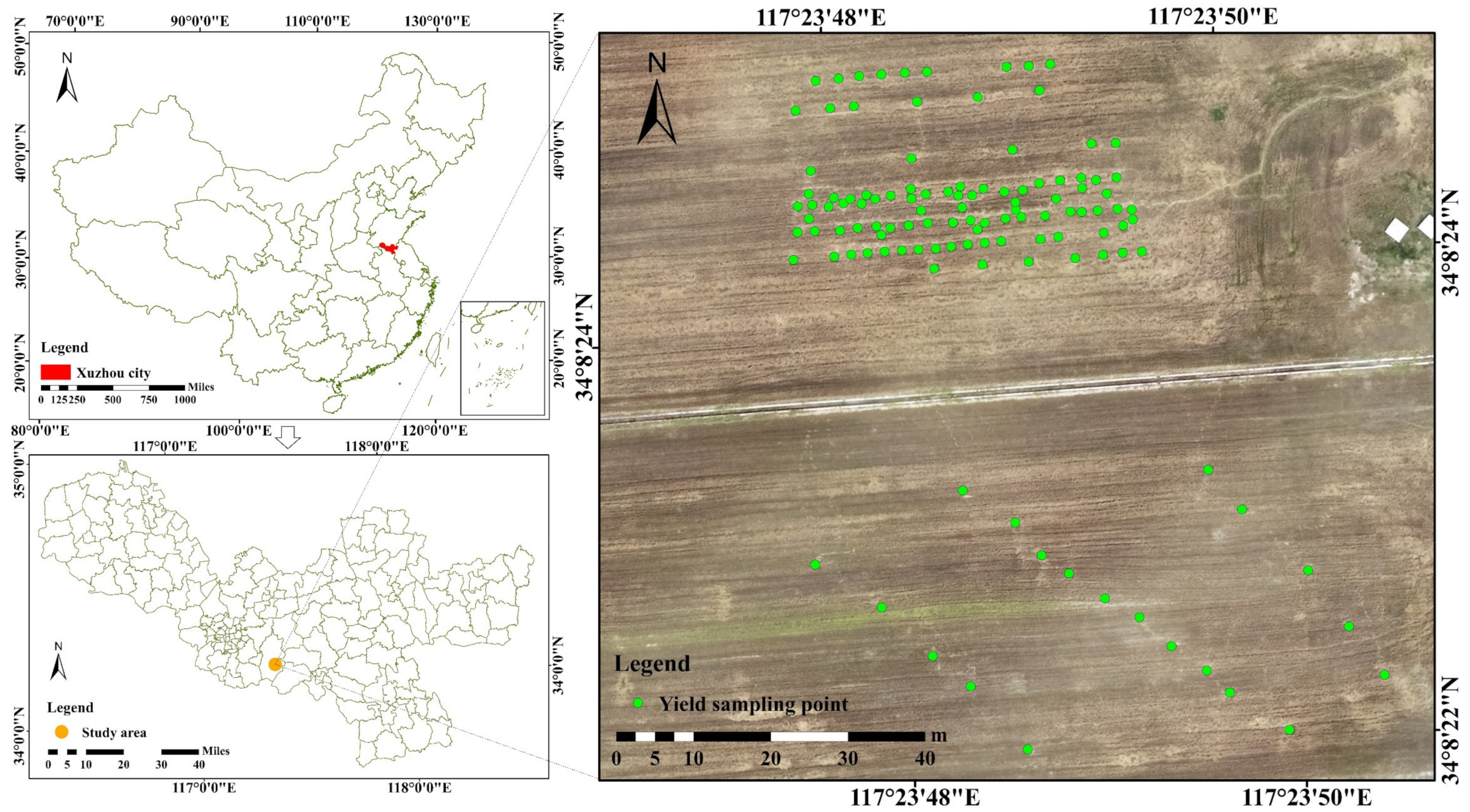

2.1. Study Area

2.2. Field Data Acquisition

2.2.1. UAV Image Acquisition and Processing

2.2.2. Yield Data Acquisition

2.3. Selection of VIs

2.4. Machine Learning Methods for Yield Prediction

2.5. Evaluation Indexes of Models

3. Results

3.1. Comparison of Model Accuracies at Different Stages

3.2. Yield Prediction for Multiple Stages

3.2.1. Correlation Analysis

3.2.2. Yield Prediction for Multiple Stages

3.3. Yield Prediction in Large Plots

3.4. Spatial Distribution of Predicted Yield

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Mueller, N.D.; Gerber, J.S.; Johnston, M.; Ray, D.K.; Ramankutty, N.; Foley, J.A. Closing yield gaps through nutrient and water management. Nature 2012, 490, 254–257.

- Samberg, L.H.; Gerber, J.S.; Ramankutty, N.; Herrero, M.; West, P.C. Subnational distribution of average farm size and smallholder contributions to global food production. Environ. Res. Lett. 2016, 11, 124010.

- Bongiovanni, R.; Lowenberg-Deboer, J. Precision Agriculture and Sustainability. Precis. Agric. 2004, 5, 359–387.

- Fu, Y.; Yang, G.; Wang, J.; Song, X.; Feng, H. Winter wheat biomass estimation based on spectral indices, band depth analysis and partial least squares regression using hyperspectral measurements. Comput. Electron. Agric. 2014, 100, 51–59.

- Wang, Y.; Chang, K.; Chen, R.; Lo, J.; Shen, Y. Large-area rice yield forecasting using satellite imageries. Int. J. Appl. Earth Obs. 2010, 12, 27–35.

- Du, M.; Noguchi, N. Multi-temporal monitoring of wheat growth through correlation analysis of satellite images, unmanned aerial vehicle images with ground variable. IFAC-PapersOnLine 2016, 49, 5–9.

- Berni, J.A.J.; Zarco-Tejada, P.J.; Suarez, L.; Fereres, E. Thermal and narrowband multispectral remote sensing for vegetation monitoring from an unmanned aerial vehicle. IEEE Trans. Geosci. Remote 2009, 47, 722–738.

- Yu, N.; Li, L.; Schmitz, N.; Tian, L.F.; Greenberg, J.A.; Diers, B.W. Development of methods to improve soybean yield estimation and predict plant maturity with an unmanned aerial vehicle based platform. Remote Sens. Environ. 2016, 187, 91–101.

- Zhang, C.; Kovacs, J.M. The application of small unmanned aerial systems for precision agriculture: A review. Precis. Agric. 2012, 13, 693–712.

- Verger, A.; Vigneau, N.; Cheron, C.; Gilliot, J.; Comar, A.; Baret, F. Green area index from an unmanned aerial system over wheat and rapeseed crops. Remote Sens. Environ. 2014, 152, 654–664.

- Candiago, S.; Remondino, F.; De Giglio, M.; Dubbini, M.; Gattelli, M. Evaluating multispectral images and vegetation indices for precision farming applications from UAV images. Remote Sens. 2015, 7, 4026–4047.

- Bendig, J.; Yu, K.; Aasen, H.; Bolten, A.; Bennertz, S.; Broscheit, J.; Gnyp, M.L.; Bareth, G. Combining UAV-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley. Int. J. Appl. Earth Obs. 2015, 39, 79–87.

- Tao, H.; Feng, H.; Xu, L.; Miao, M.; Yang, G.; Yang, X.; Fan, L. Estimation of the yield and plant height of winter wheat using UAV-based hyperspectral images. Sensors 2020, 20, 1231.

- Qi, H.; Zhu, B.; Wu, Z.; Liang, Y.; Li, J.; Wang, L.; Chen, T.; Lan, Y.; Zhang, L. Estimation of peanut leaf area index from unmanned aerial vehicle multispectral images. Sensors 2020, 20, 6732.

- Han, L.; Yang, G.; Dai, H.; Xu, B.; Yang, H.; Feng, H.; Li, Z.; Yang, X. Modeling maize above-ground biomass based on machine learning approaches using UAV remote-sensing data. Plant Methods 2019, 15, 10.

- Zheng, H.; Li, W.; Jiang, J.; Liu, Y.; Cheng, T.; Tian, Y.; Zhu, Y.; Cao, W.; Zhang, Y.; Yao, X. A comparative assessment of different modeling algorithms for estimating leaf nitrogen content in winter wheat using multispectral images from an unmanned aerial vehicle. Remote Sens. 2018, 10, 2026.

- Zhou, X.; Zheng, H.B.; Xu, X.Q.; He, J.Y.; Ge, X.K.; Yao, X.; Cheng, T.; Zhu, Y.; Cao, W.X.; Tian, Y.C. Predicting grain yield in rice using multi-temporal vegetation indices from UAV-based multispectral and digital imagery. ISPRS J. Photogramm. 2017, 130, 246–255.

- Lobell, D.B.; Burke, M.B. On the use of statistical models to predict crop yield responses to climate change. Agric. For. Meteorol. 2010, 150, 1443–1452.

- Tao, F.; Yokozawa, M.; Zhang, Z. Modelling the impacts of weather and climate variability on crop productivity over a large area: A new process-based model development, optimization, and uncertainties analysis. Agric. For. Meteorol. 2009, 149, 831–850.

- Van Diepen, C.A.; Wolf, J.; van Keulen, H.; Rappoldt, C. WOFOST: A simulation model of crop production. Soil Use Manag. 1989, 5, 16–24.

- Jones, J.W.; Hoogenboom, G.; Porter, C.H.; Boote, K.J.; Batchelor, W.D.; Hunt, L.A.; Wilkens, P.W.; Singh, U.; Gijsman, A.J.; Ritchie, J.T. The DSSAT cropping system model. Eur. J. Agron. 2003, 18, 235–265.

- Keating, B.A.; Carberry, P.S.; Hammer, G.L.; Probert, M.E.; Robertson, M.J.; Holzworth, D.; Huth, N.I.; Hargreaves, J.; Meinke, H.; Hochman, Z.; et al. An overview of APSIM, a model designed for farming systems simulation. Eur. J. Agron. 2003, 18, 267–288.

- Filippi, P.; Jones, E.J.; Wimalathunge, N.S.; Somarathna, P.D.S.N.; Pozza, L.E.; Ugbaje, S.U.; Jephcott, T.G.; Paterson, S.E.; Whelan, B.M.; Bishop, T.F.A. An approach to forecast grain crop yield using multi-layered, multi-farm data sets and machine learning. Precis. Agric. 2019, 20, 1015–1029.

- Aghighi, H.; Azadbakht, M.; Ashourloo, D.; Shahrabi, H.S.; Radiom, S. Machine learning regression techniques for the silage maize yield prediction using time-series images of Landsat 8 OLI. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 4563–4577.

- Cai, Y.; Guan, K.; Lobell, D.; Potgieter, A.B.; Wang, S.; Peng, J.; Xu, T.; Asseng, S.; Zhang, Y.; You, L.; et al. Integrating satellite and climate data to predict wheat yield in Australia using machine learning approaches. Agric. For. Meteorol. 2019, 274, 144–159.

- Zhang, Z.; Song, X.; Tao, F.; Zhang, S.; Shi, W. Climate trends and crop production in China at county scale, 1980 to 2008. Theor. Appl. Climatol. 2016, 123, 291–302.

- Zhang, L.; Zhang, Z.; Luo, Y.; Cao, J.; Xie, R.; Li, S. Integrating satellite-derived climatic and vegetation indices to predict smallholder maize yield using deep learning. Agric. For. Meteorol. 2021, 311, 108666.

- Cai, Y.; Guan, K.; Peng, J.; Wang, S.; Seifert, C.; Wardlow, B.; Li, Z. A high-performance and in-season classification system of field-level crop types using time-series Landsat data and a machine learning approach. Remote Sens. Environ. 2018, 210, 35–47.

- Fang, P.; Zhang, X.; Wei, P.; Wang, Y.; Zhang, H.; Liu, F.; Zhao, J. The classification performance and mechanism of machine learning algorithms in winter wheat mapping using Sentinel-2 10 m resolution imagery. Appl. Sci. 2020, 10, 5075.

- Fu, Z.; Jiang, J.; Gao, Y.; Krienke, B.; Wang, M.; Zhong, K.; Cao, Q.; Tian, Y.; Zhu, Y.; Cao, W.; et al. Wheat growth monitoring and yield estimation based on multi-rotor unmanned aerial vehicle. Remote Sens. 2020, 12, 508.

- Maimaitijiang, M.; Sagan, V.; Sidike, P.; Hartling, S.; Esposito, F.; Fritschi, F.B. Soybean yield prediction from UAV using multimodal data fusion and deep learning. Remote Sens. Environ. 2020, 237, 111599.

- Kefauver, S.C.; Vicente, R.; Vergara-Diaz, O.; Fernandez-Gallego, J.A.; Kerfal, S.; Lopez, A.; Melichar, J.P.E.; Serret Molins, M.D.; Araus, J.L. Comparative UAV and field phenotyping to assess yield and nitrogen use efficiency in hybrid and conventional barley. Front. Plant Sci. 2017, 8, 1733.

- Yue, J.; Yang, G.; Li, C.; Li, Z.; Wang, Y.; Feng, H.; Xu, B. Estimation of winter wheat above-ground biomass using unmanned aerial vehicle-based snapshot hyperspectral sensor and crop height improved models. Remote Sens. 2017, 9, 708.

- Mkhabela, M.S.; Bullock, P.; Raj, S.; Wang, S.; Yang, Y. Crop yield forecasting on the Canadian Prairies using MODIS NDVI data. Agric. For. Meteorol. 2011, 151, 385–393.

- Rudorff, B.F.T.; Batista, G.T. Spectral response of wheat and its relationship to agronomic variables in the tropical region. Remote Sens. Environ. 1990, 31, 53–63.

- Cui, Z.; Zhang, H.; Chen, X.; Zhang, C.; Ma, W.; Huang, C.; Zhang, W.; Mi, G.; Miao, Y.; Li, X.; et al. Pursuing sustainable productivity with millions of smallholder farmers. Nature 2018, 555, 363.

- Curtis, T.; Halford, N.G. Food security: The challenge of increasing wheat yield and the importance of not compromising food safety. Ann. Appl. Biol. 2014, 164, 354–372.

- Zhang, X.; Zhang, K.; Sun, Y.; Zhao, Y.; Zhuang, H.; Ban, W.; Chen, Y.; Fu, E.; Chen, S.; Liu, J.; et al. Combining spectral and texture features of UAS-based multispectral images for maize leaf area index estimation. Remote Sens. 2022, 14, 331.

- Baugh, W.M.; Groeneveld, D.P. Empirical proof of the empirical line. Int. J. Remote Sens. 2008, 29, 665–672.

- Saeed, U.; Dempewolf, J.; Becker-Reshef, I.; Khan, A.; Ahmad, A.; Wajid, S.A. Forecasting wheat yield from weather data and MODIS NDVI using Random Forests for Punjab province, Pakistan. Int. J. Remote Sens. 2017, 38, 4831–4854.

- Johnson, M.D.; Hsieh, W.W.; Cannon, A.J.; Davidson, A.; Bedard, F. Crop yield forecasting on the Canadian Prairies by remotely sensed vegetation indices and machine learning methods. Agric. For. Meteorol. 2016, 218, 74–84.

- Bolton, D.K.; Friedl, M.A. Forecasting crop yield using remotely sensed vegetation indices and crop phenology metrics. Agric. For. Meteorol. 2013, 173, 74–84.

- Xue, J.; Su, B. Significant remote sensing vegetation indices: A review of developments and applications. J. Sens. 2017, 2017, 1353691.

- Verrelst, J.; Schaepman, M.E.; Koetz, B.; Kneubuehler, M. Angular sensitivity analysis of vegetation indices derived from CHRIS/PROBA data. Remote Sens. Environ. 2008, 112, 2341–2353.

- Sellaro, R.; Crepy, M.; Ariel Trupkin, S.; Karayekov, E.; Sabrina Buchovsky, A.; Rossi, C.; Jose Casal, J. Cryptochrome as a sensor of the blue/green ratio of natural radiation in Arabidopsis. Plant Physiol. 2010, 154, 401–409.

- Sulik, J.J.; Long, D.S. Spectral considerations for modeling yield of canola. Remote Sens. Environ. 2016, 184, 161–174.

- Zhou, L.; Chen, N.; Chen, Z.; Xing, C. ROSCC: An efficient remote sensing observation-sharing method based on cloud computing for soil moisture mapping in precision agriculture. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 5588–5598.

- Qi, J.; Kerr, Y.H.; Moran, M.S.; Weltz, M.; Huete, A.R.; Sorooshian, S.; Bryant, R. Leaf area index estimates using remotely sensed data and BRDF models in a semiarid region. Remote Sens. Environ. 2000, 73, 18–30.

- Haboudane, D.; Miller, J.R.; Pattey, E.; Zarco-Tejada, P.J.; Strachan, I.B. Hyperspectral vegetation indices and novel algorithms for predicting green LAI of crop canopies: Modeling and validation in the context of precision agriculture. Remote Sens. Environ. 2004, 90, 337–352.

- Jiang, Z.; Huete, A.R.; Didan, K.; Miura, T. Development of a two-band enhanced vegetation index without a blue band. Remote Sens. Environ. 2008, 112, 3833–3845.

- Miller, G.J.; Morris, J.T.; Wang, C. Estimating aboveground biomass and its spatial distribution in coastal wetlands utilizing planet multispectral imagery. Remote Sens. 2019, 11, 2020.

- Haboudane, D.; Miller, J.R.; Tremblay, N.; Zarco-Tejada, P.J.; Dextraze, L. Integrated narrow-band vegetation indices for prediction of crop chlorophyll content for application to precision agriculture. Remote Sens. Environ. 2002, 81, 416–426.

- Rasmussen, C.E. Gaussian processes in machine learning. In Lecture Notes in Artificial Intelligence; Bousquet, O., VonLuxburg, U., Ratsch, G., Eds.; Advanced Lectures On Machine Learning; Springer: Berlin, Germany, 2004; Volume 3176, pp. 63–71.

- Verrelst, J.; Pablo Rivera, J.; Gitelson, A.; Delegido, J.; Moreno, J.; Camps-Valls, G. Spectral band selection for vegetation properties retrieval using Gaussian processes regression. Int. J. Appl. Earth Obs. 2016, 52, 554–567.

- Dong, L.; Du, H.; Han, N.; Li, X.; Zhu, D.; Mao, F.; Zhang, M.; Zheng, J.; Liu, H.; Huang, Z.; et al. Application of convolutional neural network on lei bamboo above-ground-biomass (AGB) estimation using Worldview-2. Remote Sens. 2020, 12, 958.

- Rhee, J.; Im, J. Meteorological drought forecasting for ungauged areas based on machine learning: Using long-range climate forecast and remote sensing data. Agric. For. Meteorol. 2017, 237, 105–122.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Rafique, R.; Islam, S.M.R.; Kazi, J.U. Machine learning in the prediction of cancer therapy. Comput. Struct. Biotechnol. J. 2021, 19, 4003–4017.

- Podgorelec, V.; Kokol, P.; Stiglic, B.; Rozman, I. Decision trees: An overview and their use in medicine. J. Med. Syst. 2002, 26, 445–463.

- Tibshirani, R. Regression shrinkage and selection via the lasso: A retrospective. J. R. Stat. Soc. B 2011, 73, 273–282.

- Friedman, J.H. Greedy function approximation: A gradient boosting machine. Ann. Stat. 2001, 29, 1189–1232.

- Liu, L.; Ji, M.; Buchroithner, M. Combining partial least squares and the gradient-boosting method for soil property retrieval using visible near-infrared shortwave infrared spectra. Remote Sens. 2017, 9, 1299.

- Hong, T.; Pinson, P.; Fan, S.; Zareipour, H.; Troccoli, A.; Hyndman, R.J. Probabilistic energy forecasting: Global Energy Forecasting Competition 2014 and beyond. Int. J. Forecast. 2016, 32, 896–913.

- Yadav, S.; Shukla, S. Analysis of k-fold cross-validation over hold-out validation on colossal datasets for quality classification. In Proceedings of the 2016 IEEE 6th International Conference On Advanced Computing (IACC), Bhimavaram, India, 27–28 February 2016; pp. 78–83.

- Pearson, E.S.; Snow, B.A.S. Tests for rank correlation coefficients. Biometrika 1962, 49, 185–191.

- Labus, M.P.; Nielsen, G.A.; Lawrence, R.L.; Engel, R.; Long, D.S. Wheat yield estimates using multi-temporal NDVI satellite imagery. Int. J. Remote Sens. 2002, 23, 4169–4180.

- Shanahan, J.F.; Schepers, J.S.; Francis, D.D.; Varvel, G.E.; Wilhelm, W.W.; Tringe, J.M.; Schlemmer, M.R.; Major, D.J. Use of remote-sensing imagery to estimate corn grain yield. Agron. J. 2001, 93, 583–589.

- Lai, Y.R.; Pringle, M.J.; Kopittke, P.M.; Menzies, N.W.; Orton, T.G.; Dang, Y.P. An empirical model for prediction of wheat yield, using time-integrated Landsat NDVI. Int. J. Appl. Earth Obs. 2018, 72, 99–108.

- Son, N.T.; Chen, C.F.; Chen, C.R.; Minh, V.Q.; Trung, N.H. A comparative analysis of multitemporal MODIS EVI and NDVI data for large-scale rice yield estimation. Agric. For. Meteorol. 2014, 197, 52–64.

- Bendig, J.; Bolten, A.; Bennertz, S.; Broscheit, J.; Eichfuss, S.; Bareth, G. Estimating biomass of barley using crop surface models (CSMs) derived from UAV-based RGB imaging. Remote Sens. 2014, 6, 10395–10412.

- Guan, K.; Wu, J.; Kimball, J.S.; Anderson, M.C.; Frolking, S.; Li, B.; Hain, C.R.; Lobell, D.B. The shared and unique values of optical, fluorescence, thermal and microwave satellite data for estimating large-scale crop yields. Remote Sens. Environ. 2017, 199, 333–349.

- Royo, C.; Aparicio, N.; Villegas, D.; Casadesus, J.; Monneveux, P.; Araus, J.L. Usefulness of spectral reflectance indices as durum wheat yield predictors under contrasting Mediterranean conditions. Int. J. Remote Sens. 2003, 24, 4403–4419.

- Wenliang, Z.; Zhen, H.; Junping, H. Remote sensing estimation for winter wheat yield in Henan based on the MODIS-NDVI data. Geogr. Res. 2012, 31, 2310–2320.

- Gallego, F.J. Remote sensing and land cover area estimation. Int. J. Remote Sens. 2004, 25, 3019–3047.

- Dordas, C. Variation in dry matter and nitrogen accumulation and remobilization in barley as affected by fertilization, cultivar, and source-sink relations. Eur. J. Agron. 2012, 37, 31–42.

- Zhang, Y.; Sun, N.; Hong, J.; Zhang, Q.; Wang, C.; Xue, Q.; Zhou, S.; Huang, Q.; Wang, Z. Effect of source-sink manipulation on photosynthetic characteristics of flag leaf and the remobilization of dry mass and nitrogen in vegetative organs of wheat. J. Integr. Agric. 2014, 13, 1680–1690.

- Taylor, J.A.; McBratney, A.B.; Whelan, B.M. Establishing management classes for broadacre agricultural production. Agron. J. 2007, 99, 1366–1376.

- Han, J.; Zhang, Z.; Cao, J.; Luo, Y.; Zhang, L.; Li, Z.; Zhang, J. Prediction of winter wheat yield based on multi-source data and machine learning in China. Remote Sens. 2020, 12, 236.

- Wang, X.; Huang, J.; Feng, Q.; Yin, D. Winter wheat yield prediction at county level and uncertainty analysis in main wheat-producing regions of China with deep learning approaches. Remote Sens. 2020, 12, 1744.

| VI | Formulation | Reference |
|---|---|---|
| GRRI | G/R | [44] |
| GBRI | G/B | [45] |
| RBRI | R/B | [45] |
| NDYI | (G − B)/(G + B) | [46] |
| RVI | NIR/R | [47] |
| NDVI | (NIR − R)/(NIR + R) | [48] |
| MTVI | 1.2*(1.2*(NIR − G) − 2.5*(R − G)) | [49] |
| EVI2 | 2.5*(NIR − R)/(NIR + 2.4*R + 1) | [50] |
| MSAVI2 | 0.5*((2*NIR + 1) − sqrt((2*NIR + 1)^2 − 8*(NIR − R))) | [51] |
| TCARI | 3*((RE − R) − 0.2*(RE − G)*(RE/R)) | [52] |
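
The formulations in the table can be evaluated directly from band reflectances. The following is a minimal Python sketch, not the authors' processing code: the function name `vegetation_indices`, its argument names, and the sample reflectance values are hypothetical, and it assumes B, G, R, RE and NIR denote blue, green, red, red-edge and near-infrared reflectance. MSAVI2 is written in its commonly published form, 0.5*((2*NIR + 1) − sqrt((2*NIR + 1)^2 − 8*(NIR − R))).

```python
import math


def vegetation_indices(blue, green, red, red_edge, nir):
    """Compute the ten VIs of the table from reflectances of one pixel or plot mean.

    All inputs are assumed to be surface reflectances (unitless, > 0).
    """
    return {
        "GRRI": green / red,
        "GBRI": green / blue,
        "RBRI": red / blue,
        "NDYI": (green - blue) / (green + blue),
        "RVI": nir / red,
        "NDVI": (nir - red) / (nir + red),
        "MTVI": 1.2 * (1.2 * (nir - green) - 2.5 * (red - green)),
        "EVI2": 2.5 * (nir - red) / (nir + 2.4 * red + 1),
        # Commonly published MSAVI2 form (assumption, see lead-in).
        "MSAVI2": 0.5 * ((2 * nir + 1)
                         - math.sqrt((2 * nir + 1) ** 2 - 8 * (nir - red))),
        "TCARI": 3 * ((red_edge - red)
                      - 0.2 * (red_edge - green) * (red_edge / red)),
    }


# Invented reflectances for a dense green canopy, for illustration only.
sample = vegetation_indices(blue=0.04, green=0.08, red=0.05,
                            red_edge=0.20, nir=0.45)
for name, value in sample.items():
    print(f"{name}: {value:.3f}")
```

In practice the same expressions would be applied per pixel to the calibrated band rasters and then averaged over each sampling plot; the scalar version above only illustrates the arithmetic.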

| Stage | All VIs: GPR | All VIs: SVR | All VIs: RFR | ESCVIs: GPR | ESCVIs: SVR | ESCVIs: RFR |
|---|---|---|---|---|---|---|
| Flowering | 0.79 a | 0.77 a | 0.75 a | 0.80 a | 0.82 a | 0.77 a |
| | 62.60 b | 64.34 b | 67.66 b | 63.46 b | 59.26 b | 67.07 b |
| | 49.37 c | 52.32 c | 52.81 c | 52.47 c | 49.65 c | 49.52 c |
| Filling | 0.87 a | 0.86 a | 0.83 a | 0.87 a | 0.87 a | 0.80 a |
| | 49.41 b | 50.55 b | 55.51 b | 49.22 b | 49.33 b | 63.12 b |
| | 42.82 c | 43.44 c | 44.29 c | 42.74 c | 42.83 c | 53.08 c |
| Flowering & Filling | 0.83 a | 0.79 a | 0.83 a | 0.88 a | 0.87 a | 0.86 a |
| | 58.24 b | 63.86 b | 56.68 b | 49.18 b | 50.83 b | 52.88 b |
| | 49.77 c | 52.56 c | 46.36 c | 42.57 c | 43.34 c | 43.42 c |

| Plot size | Mean of Measurements (g/m²) | Mean of Predictions (g/m²) | RMSE (g/m²) | MAE (g/m²) |
|---|---|---|---|---|
| 1 m × 1 m | 529.58 | 532.58 | 63.44 | 50.97 |
| 3 m × 3 m | 554.05 | 529.72 | 55.95 | 38.73 |
| 5 m × 5 m | 515.83 | 534.10 | 39.76 | 35.48 |
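
The RMSE and MAE columns above compare measured and predicted yield plot by plot. The sketch below shows how such scores are typically computed, using the standard definitions rather than code from the study. The function names are hypothetical, the yield values are invented for illustration (they are not the study's data), and the coefficient of determination R² is included only because it is commonly reported alongside RMSE and MAE.

```python
import math


def rmse(measured, predicted):
    """Root mean square error, in the units of the inputs (e.g. g/m^2)."""
    n = len(measured)
    return math.sqrt(sum((m - p) ** 2 for m, p in zip(measured, predicted)) / n)


def mae(measured, predicted):
    """Mean absolute error, in the units of the inputs."""
    n = len(measured)
    return sum(abs(m - p) for m, p in zip(measured, predicted)) / n


def r_squared(measured, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_measured = sum(measured) / len(measured)
    ss_res = sum((m - p) ** 2 for m, p in zip(measured, predicted))
    ss_tot = sum((m - mean_measured) ** 2 for m in measured)
    return 1.0 - ss_res / ss_tot


# Invented plot yields in g/m^2, for illustration only.
measured_yield = [500.0, 550.0, 600.0]
predicted_yield = [510.0, 540.0, 610.0]

print(f"RMSE = {rmse(measured_yield, predicted_yield):.2f} g/m^2")
print(f"MAE  = {mae(measured_yield, predicted_yield):.2f} g/m^2")
print(f"R^2  = {r_squared(measured_yield, predicted_yield):.2f}")
```

Because RMSE squares each error before averaging, it is never smaller than MAE on the same data, which matches the ordering of the two columns in every row of the table.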

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bian, C.; Shi, H.; Wu, S.; Zhang, K.; Wei, M.; Zhao, Y.; Sun, Y.; Zhuang, H.; Zhang, X.; Chen, S. Prediction of Field-Scale Wheat Yield Using Machine Learning Method and Multi-Spectral UAV Data. Remote Sens. 2022, 14, 1474. https://doi.org/10.3390/rs14061474
