Effects of UAV-LiDAR and Photogrammetric Point Density on Tea Plucking Area Identification

Abstract

1. Introduction

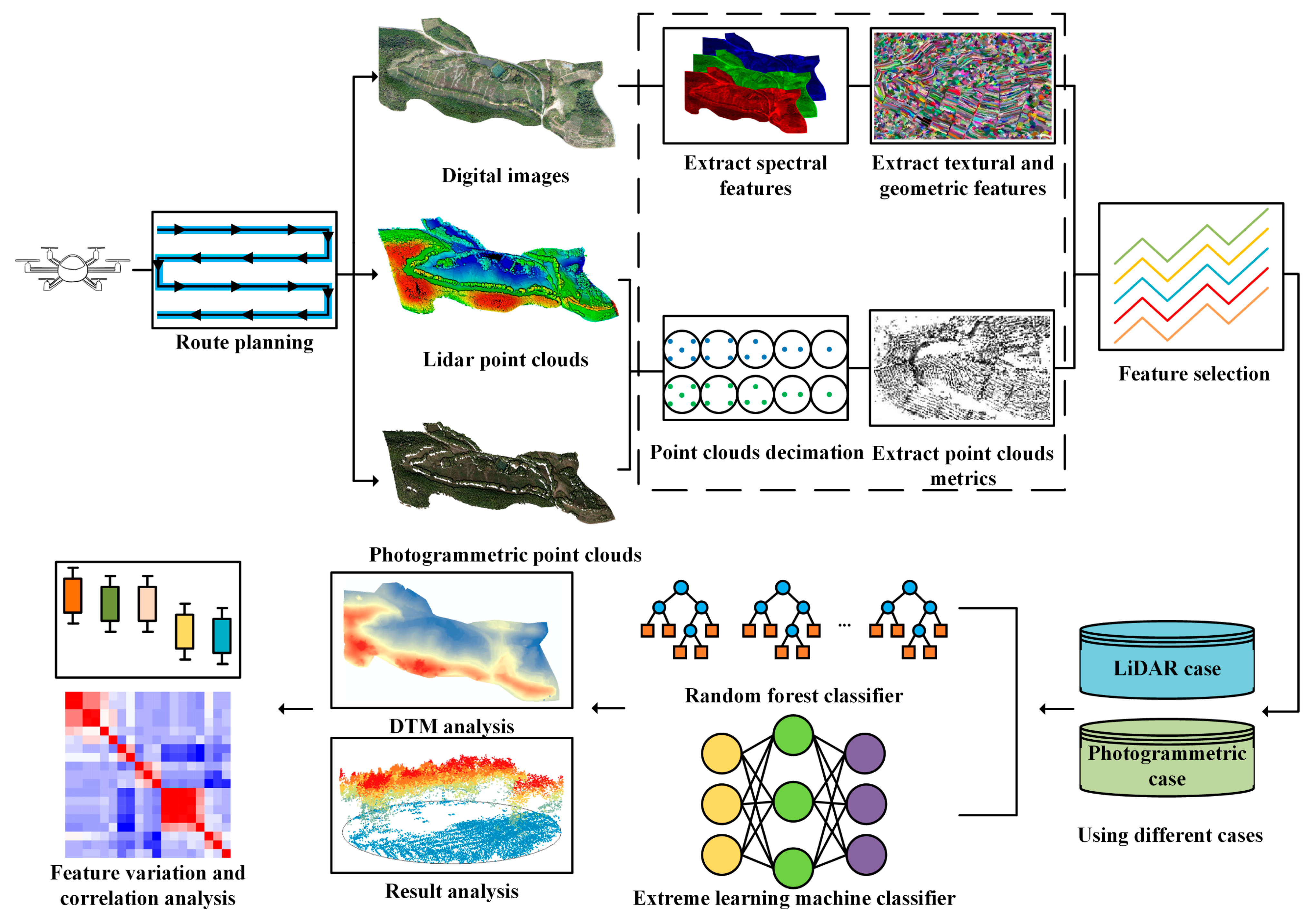

2. Materials and Methods

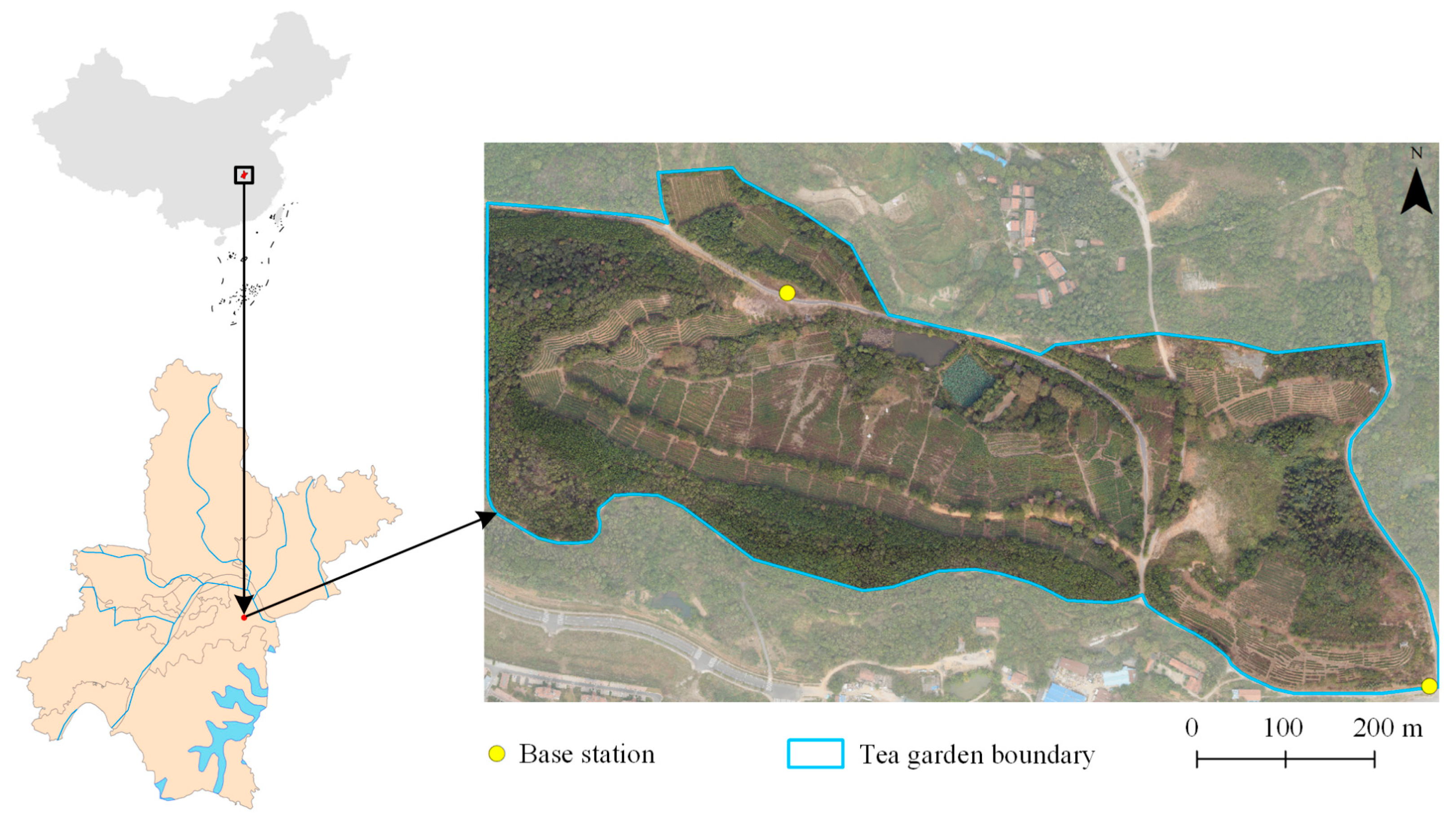

2.1. Study Area

2.2. UAV Data and Processing

2.2.1. LiDAR Data

2.2.2. Photogrammetric Data

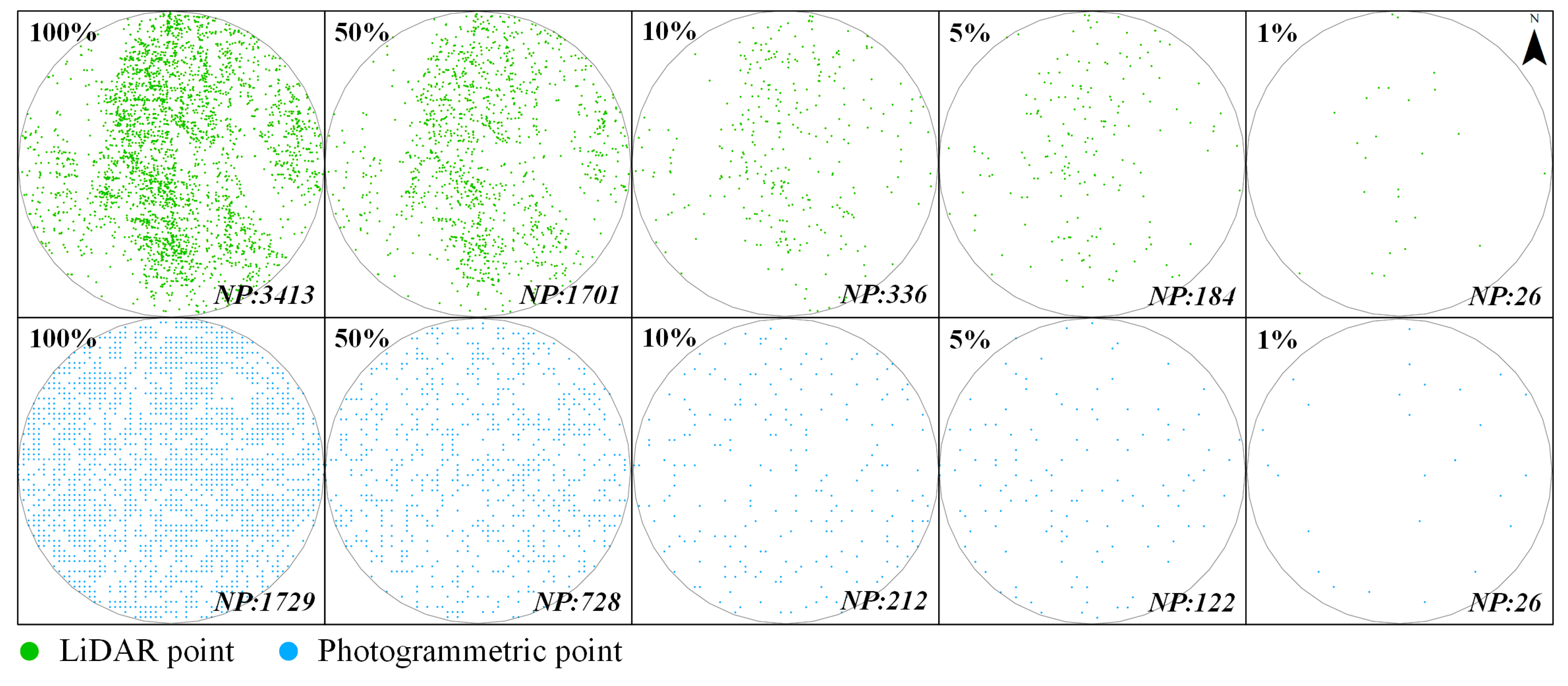

2.3. Point Cloud Data Decimation and Feature Extraction

2.4. Classification Algorithms and Feature Selection

2.4.1. Classification Algorithms

2.4.2. Feature Selection

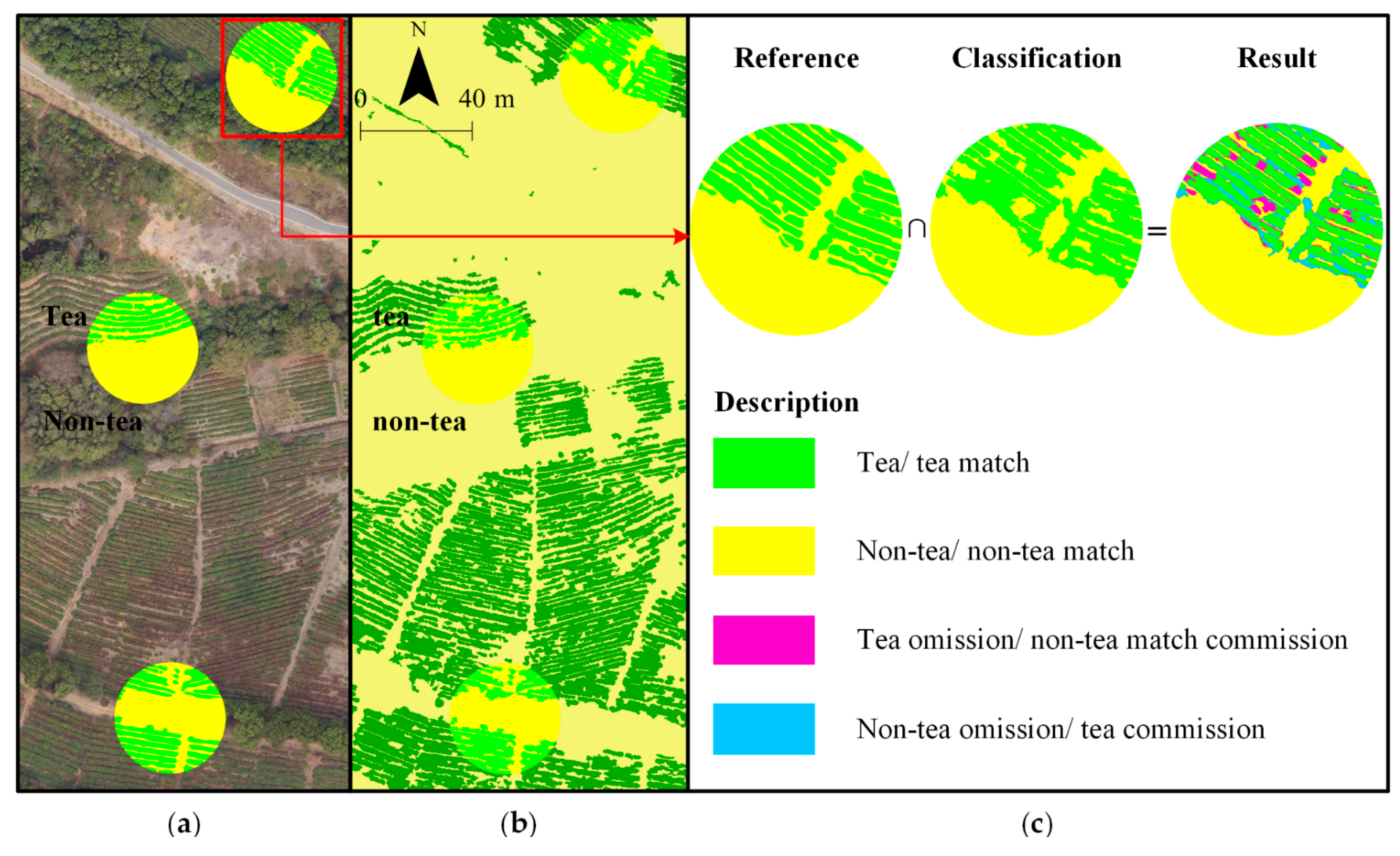

2.5. Accuracy Assessment

3. Results

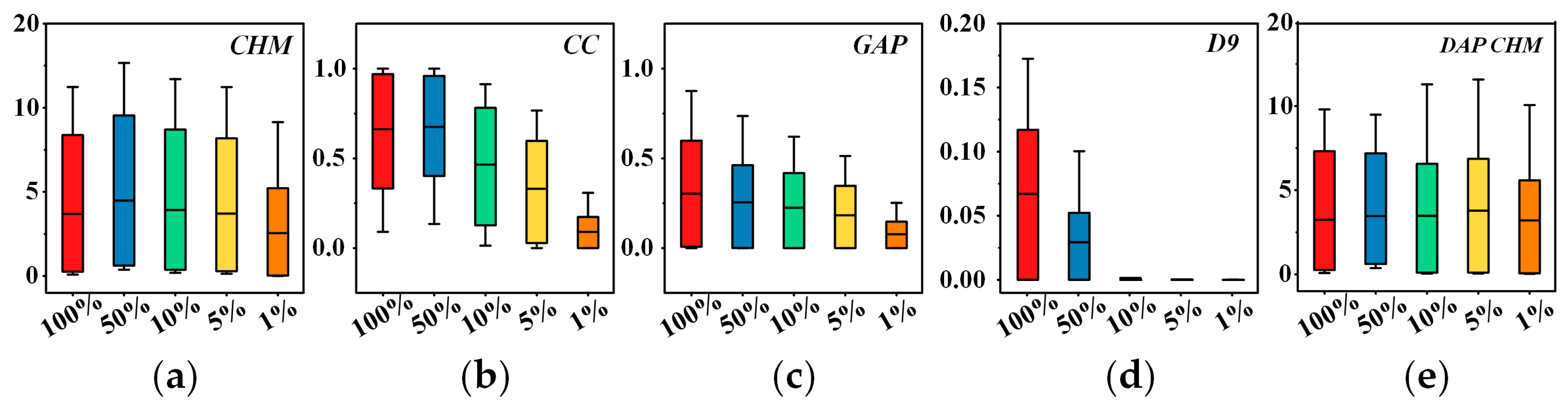

3.1. Point Density Effect on the DTM

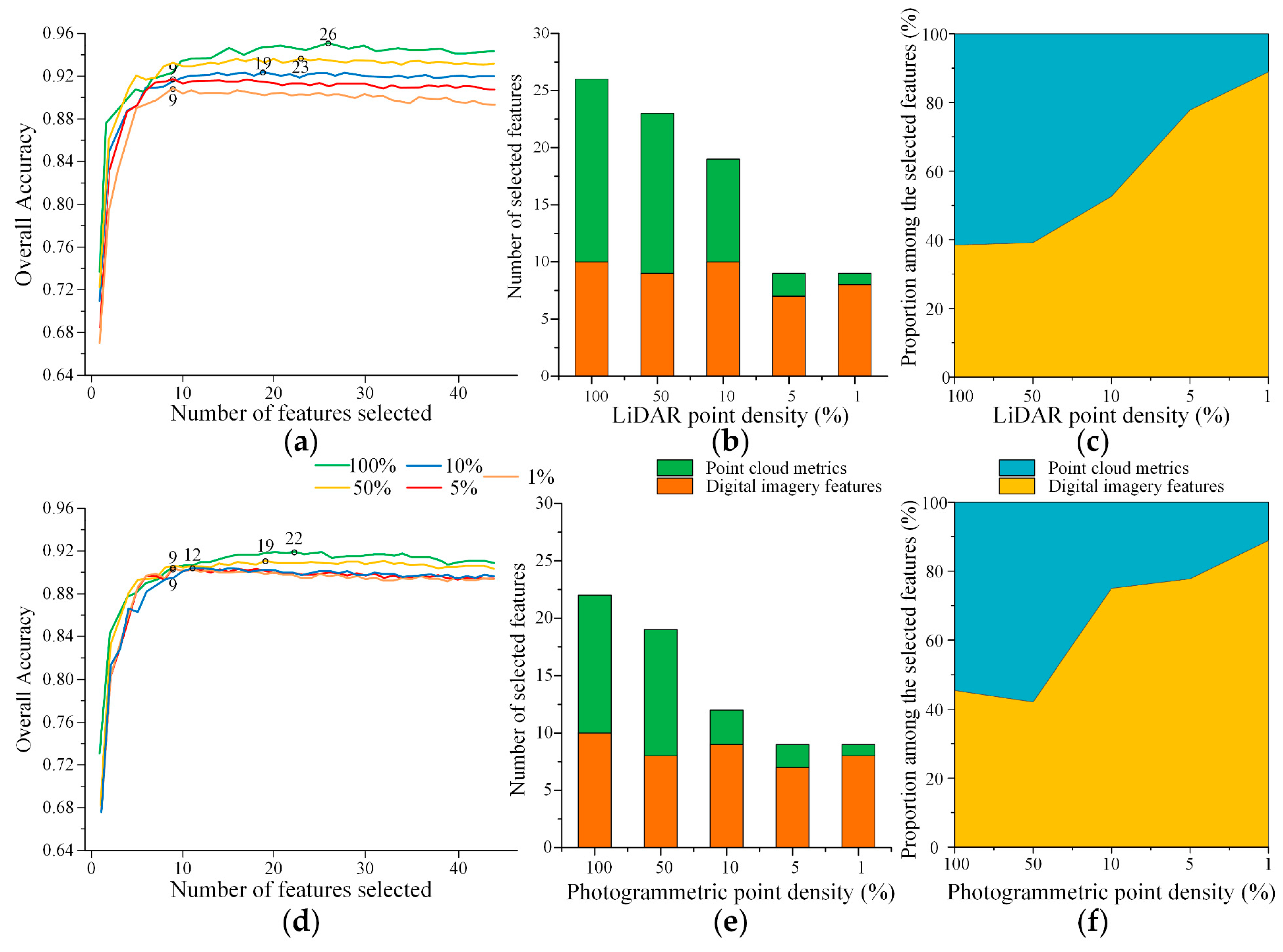

3.2. Point Density Effect on Feature Selection

3.3. Point Density Effect on Classification Accuracy

3.3.1. Algorithm Accuracy Assessment

3.3.2. Area-Based Accuracy Assessment

3.4. Cost–Benefit Comparison

4. Discussion

4.1. Performance of LiDAR and Photogrammetric Data

4.2. Point Cloud Density Effect on Mapping Tea Plucking Areas

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

References

- Wang, B.; Li, J.; Jin, X.; Xiao, H. Mapping tea plantations from multi-seasonal Landsat-8 OLI imageries using a random forest classifier. J. Indian Soc. Remote Sens. 2019, 47, 1315–1329.
- Wambu, E.W.; Fu, H.Z.; Ho, Y.S. Characteristics and trends in global tea research: A Science Citation Index Expanded-based analysis. Int. J. Food Sci. Technol. 2017, 52, 644–651.
- Zhang, M.; Yonggen, C.; Dongmei, F.; Qing, Z.; Zhiqiang, P.; Kai, F.; Xiaochang, W. Temporal evolution of carbon storage in Chinese tea plantations from 1950 to 2010. Pedosphere 2017, 27, 121–128.
- Zhu, J.; Pan, Z.; Wang, H.; Huang, P.; Sun, J.; Qin, F.; Liu, Z. An improved multi-temporal and multi-feature tea plantation identification method using Sentinel-2 imagery. Sensors 2019, 19, 2087.
- Teke, M.; Deveci, H.S.; Haliloglu, O.; Gurbuz, S.Z.; Sakarya, U. A Short Survey of Hyperspectral Remote Sensing Applications in Agriculture; IEEE: New York, NY, USA, 2013; pp. 171–176.
- Peña, J.M.; Gutiérrez, P.A.; Hervás-Martínez, C.; Six, J.; Plant, R.E.; López-Granados, F. Object-based image classification of summer crops with machine learning methods. Remote Sens. 2014, 6, 5019–5041.
- Csillik, O.; Kumar, P.; Mascaro, J.; O’Shea, T.; Asner, G.P. Monitoring tropical forest carbon stocks and emissions using Planet satellite data. Sci. Rep. 2019, 9, 17831.
- Lama, G.F.C.; Sadeghifar, T.; Azad, M.T.; Sihag, P.; Kisi, O. On the Indirect Estimation of Wind Wave Heights over the Southern Coasts of Caspian Sea: A Comparative Analysis. Water 2022, 14, 843.
- Sadeghifar, T.; Lama, G.; Sihag, P.; Bayram, A.; Kisi, O. Wave height predictions in complex sea flows through soft-computing models: Case study of Persian Gulf. Ocean Eng. 2022, 245, 110467.
- Imai, M.; Kurihara, J.; Kouyama, T.; Kuwahara, T.; Fujita, S.; Sakamoto, Y.; Sato, Y.; Saitoh, S.I.; Hirata, T.; Yamamoto, H.; et al. Radiometric calibration for a multispectral sensor onboard RISESAT microsatellite based on lunar observations. Sensors 2021, 21, 15.
- Maponya, M.G.; Van Niekerk, A.; Mashimbye, Z.E. Pre-harvest classification of crop types using a Sentinel-2 time-series and machine learning. Comput. Electron. Agric. 2020, 169, 105164.
- Rao, N.; Kapoor, M.; Sharma, N.; Venkateswarlu, K. Yield prediction and waterlogging assessment for tea plantation land using satellite image-based techniques. Int. J. Remote Sens. 2007, 28, 1561–1576.
- Bian, M.; Skidmore, A.K.; Schlerf, M.; Wang, T.; Liu, Y.; Zeng, R.; Fei, T. Predicting foliar biochemistry of tea (Camellia sinensis) using reflectance spectra measured at powder, leaf and canopy levels. ISPRS J. Photogramm. Remote Sens. 2013, 78, 148–156.
- Chen, Y.-T.; Chen, S.-F. Localizing plucking points of tea leaves using deep convolutional neural networks. Comput. Electron. Agric. 2020, 171, 105298.
- Næsset, E.; Gobakken, T. Estimation of above- and below-ground biomass across regions of the boreal forest zone using airborne laser. Remote Sens. Environ. 2008, 112, 3079–3090.
- Puliti, S.; Ene, L.T.; Gobakken, T.; Næsset, E. Use of partial-coverage UAV data in sampling for large scale forest inventories. Remote Sens. Environ. 2017, 194, 115–126.
- Saponaro, M.; Agapiou, A.; Hadjimitsis, D.G.; Tarantino, E. Influence of Spatial Resolution for Vegetation Indices’ Extraction Using Visible Bands from Unmanned Aerial Vehicles’ Orthomosaics Datasets. Remote Sens. 2021, 13, 3238.
- Torresan, C.; Berton, A.; Carotenuto, F.; Di Gennaro, S.F.; Gioli, B.; Matese, A.; Miglietta, F.; Vagnoli, C.; Zaldei, A.; Wallace, L. Forestry applications of UAVs in Europe: A review. Int. J. Remote Sens. 2017, 38, 2427–2447.
- Lama, G.F.C.; Crimaldi, M.; Pasquino, V.; Padulano, R.; Chirico, G.B. Bulk Drag Predictions of Riparian Arundo donax Stands through UAV-Acquired Multispectral Images. Water 2021, 13, 1333.
- Song, K.; Brewer, A.; Ahmadian, S.; Shankar, A.; Detweiler, C.; Burgin, A.J. Using unmanned aerial vehicles to sample aquatic ecosystems. Limnol. Oceanogr. Meth. 2017, 15, 1021–1030.
- Jin, S.; Su, Y.; Song, S.; Xu, K.; Hu, T.; Yang, Q.; Wu, F.; Xu, G.; Ma, Q.; Guan, H. Non-destructive estimation of field maize biomass using terrestrial lidar: An evaluation from plot level to individual leaf level. Plant Methods 2020, 16, 69.
- Wang, D.; Wan, B.; Liu, J.; Su, Y.; Guo, Q.; Qiu, P.; Wu, X. Estimating aboveground biomass of the mangrove forests on northeast Hainan Island in China using an upscaling method from field plots, UAV-LiDAR data and Sentinel-2 imagery. Int. J. Appl. Earth Obs. Geoinf. 2020, 85, 101986.
- Scheller, J.H.; Mastepanov, M.; Christensen, T.R. Toward UAV-based methane emission mapping of Arctic terrestrial ecosystems. Sci. Total Environ. 2022, 819, 153161.
- Zhang, Q.; Wan, B.; Cao, Z.; Zhang, Q.; Wang, D. Exploring the potential of unmanned aerial vehicle (UAV) remote sensing for mapping plucking area of tea plantations. Forests 2021, 12, 1214.
- Næsset, E. Effects of different sensors, flying altitudes, and pulse repetition frequencies on forest canopy metrics and biophysical stand properties derived from small-footprint airborne laser data. Remote Sens. Environ. 2009, 113, 148–159.
- Jakubowski, M.K.; Guo, Q.; Kelly, M. Tradeoffs between lidar pulse density and forest measurement accuracy. Remote Sens. Environ. 2013, 130, 245–253.
- Singh, K.K.; Chen, G.; McCarter, J.B.; Meentemeyer, R.K. Effects of LiDAR point density and landscape context on estimates of urban forest biomass. ISPRS J. Photogramm. Remote Sens. 2015, 101, 310–322.
- Liu, K.; Shen, X.; Cao, L.; Wang, G.; Cao, F. Estimating forest structural attributes using UAV-LiDAR data in Ginkgo plantations. ISPRS J. Photogramm. Remote Sens. 2018, 146, 465–482.
- Dubayah, R.O.; Sheldon, S.; Clark, D.B.; Hofton, M.A.; Blair, J.B.; Hurtt, G.C.; Chazdon, R.L. Estimation of tropical forest height and biomass dynamics using lidar remote sensing at La Selva, Costa Rica. J. Geophys. Res. Biogeosci. 2010, 115, G00E09.
- Iglhaut, J.; Cabo, C.; Puliti, S.; Piermattei, L.; O’Connor, J.; Rosette, J. Structure from motion photogrammetry in forestry: A review. Curr. For. Rep. 2019, 5, 155–168.
- Filippelli, S.K.; Lefsky, M.A.; Rocca, M.E. Comparison and integration of lidar and photogrammetric point clouds for mapping pre-fire forest structure. Remote Sens. Environ. 2019, 224, 154–166.
- Lin, J.; Wang, M.; Ma, M.; Lin, Y. Aboveground tree biomass estimation of sparse subalpine coniferous forest with UAV oblique photography. Remote Sens. 2018, 10, 1849.
- Jurjević, L.; Liang, X.; Gašparović, M.; Balenović, I. Is field-measured tree height as reliable as believed–Part II, A comparison study of tree height estimates from conventional field measurement and low-cost close-range remote sensing in a deciduous forest. ISPRS J. Photogramm. Remote Sens. 2020, 169, 227–241.
- Cao, L.; Coops, N.C.; Sun, Y.; Ruan, H.; Wang, G.; Dai, J.; She, G. Estimating canopy structure and biomass in bamboo forests using airborne LiDAR data. ISPRS J. Photogramm. Remote Sens. 2019, 148, 114–129.
- Zhao, X.; Guo, Q.; Su, Y.; Xue, B. Improved progressive TIN densification filtering algorithm for airborne LiDAR data in forested areas. ISPRS J. Photogramm. Remote Sens. 2016, 117, 79–91.
- White, J.C.; Tompalski, P.; Coops, N.C.; Wulder, M.A. Comparison of airborne laser scanning and digital stereo imagery for characterizing forest canopy gaps in coastal temperate rainforests. Remote Sens. Environ. 2018, 208, 1–14.
- Leberl, F.; Irschara, A.; Pock, T.; Meixner, P.; Gruber, M.; Scholz, S.; Wiechert, A. Point clouds. Photogramm. Eng. Remote Sens. 2010, 76, 1123–1134.
- Kim, S.; McGaughey, R.J.; Andersen, H.-E.; Schreuder, G. Tree species differentiation using intensity data derived from leaf-on and leaf-off airborne laser scanner data. Remote Sens. Environ. 2009, 113, 1575–1586.
- Ritchie, C.J.; Evans, L.D.; Jacobs, D.; Everitt, H.J.; Weltz, A.M. Measuring Canopy Structure with an Airborne Laser Altimeter. Trans. ASAE 1993, 36, 1235–1238.
- Qiu, P.; Wang, D.; Zou, X.; Yang, X.; Xie, G.; Xu, S.; Zhong, Z. Finer resolution estimation and mapping of mangrove biomass using UAV LiDAR and worldview-2 data. Forests 2019, 10, 871.
- Haralick, R.M. Statistical and structural approaches to texture. Proc. IEEE 1979, 67, 786–804.
- Wang, D.; Wan, B.; Qiu, P.; Su, Y.; Guo, Q.; Wang, R.; Sun, F.; Wu, X. Evaluating the performance of Sentinel-2, Landsat 8 and Pléiades-1 in mapping mangrove extent and species. Remote Sens. 2018, 10, 1468.
- Huang, G.-B.; Zhu, Q.-Y.; Siew, C.-K. Extreme learning machine: Theory and applications. Neurocomputing 2006, 70, 489–501.
- Huang, G.-B.; Zhu, Q.-Y.; Siew, C.-K. Extreme learning machine: A new learning scheme of feedforward neural networks. In Proceedings of the 2004 IEEE International Joint Conference on Neural Networks (IEEE Cat. No. 04CH37541), Budapest, Hungary, 25–29 July 2004; pp. 985–990.
- Han, M.; Liu, B. Ensemble of extreme learning machine for remote sensing image classification. Neurocomputing 2015, 149, 65–70.
- Moreno, R.; Corona, F.; Lendasse, A.; Graña, M.; Galvão, L.S. Extreme learning machines for soybean classification in remote sensing hyperspectral images. Neurocomputing 2014, 128, 207–216.
- Shang, X.; Chisholm, L.A. Classification of Australian native forest species using hyperspectral remote sensing and machine-learning classification algorithms. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2013, 7, 2481–2489.
- Hariharan, S.; Mandal, D.; Tirodkar, S.; Kumar, V.; Bhattacharya, A.; Lopez-Sanchez, J.M. A novel phenology based feature subset selection technique using random forest for multitemporal PolSAR crop classification. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2018, 11, 4244–4258.
- Pham, L.T.H.; Brabyn, L. Monitoring mangrove biomass change in Vietnam using SPOT images and an object-based approach combined with machine learning algorithms. ISPRS J. Photogramm. Remote Sens. 2017, 128, 86–97.
- Granitto, P.M.; Furlanello, C.; Biasioli, F.; Gasperi, F. Recursive feature elimination with random forest for PTR-MS analysis of agroindustrial products. Chemom. Intell. Lab. Syst. 2006, 83, 83–90.
- Ghosh, A.; Joshi, P.K. A comparison of selected classification algorithms for mapping bamboo patches in lower Gangetic plains using very high resolution WorldView 2 imagery. Int. J. Appl. Earth Obs. Geoinf. 2014, 26, 298–311.
- Kamal, M.; Phinn, S.; Johansen, K. Object-based approach for multi-scale mangrove composition mapping using multi-resolution image datasets. Remote Sens. 2015, 7, 4753–4783.
- Congalton, R.G. A review of assessing the accuracy of classifications of remotely sensed data. Remote Sens. Environ. 1991, 37, 35–46.
- Whiteside, T.; Boggs, G.; Maier, S. Area-based validity assessment of single- and multi-class object-based image analysis. In Proceedings of the 15th Australasian Remote Sensing and Photogrammetry Conference, Alice Springs, Australia, 13–17 September 2010.
- Wallace, L.; Lucieer, A.; Watson, C.; Turner, D. Development of a UAV-LiDAR system with application to forest inventory. Remote Sens. 2012, 4, 1519–1543.
- Zhang, Z.; Gerke, M.; Vosselman, G.; Yang, M.Y. Filtering photogrammetric point clouds using standard LiDAR filters towards dtm generation. ISPRS Ann. Photogramm. Remote Sens. Spatial Inf. Sci. 2018, IV-2, 319–326.
- Barbasiewicz, A.; Widerski, T.; Daliga, K. The analysis of the accuracy of spatial models using photogrammetric software: Agisoft Photoscan and Pix4D. In Proceedings of the E3S Web of Conferences, Avignon, France, 4–5 June 2018; p. 00012.
- Ma, Q.; Su, Y.; Guo, Q. Comparison of canopy cover estimations from airborne LiDAR, aerial imagery, and satellite imagery. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2017, 10, 4225–4236.
- Liu, L.; Coops, N.C.; Aven, N.W.; Pang, Y. Mapping urban tree species using integrated airborne hyperspectral and LiDAR remote sensing data. Remote Sens. Environ. 2017, 200, 170–182.
- Næsset, E. Effects of different flying altitudes on biophysical stand properties estimated from canopy height and density measured with a small-footprint airborne scanning laser. Remote Sens. Environ. 2004, 91, 243–255.
- Cățeanu, M.; Ciubotaru, A. The effect of lidar sampling density on DTM accuracy for areas with heavy forest cover. Forests 2021, 12, 265.
- Weinmann, M.; Jutzi, B.; Hinz, S.; Mallet, C. Semantic point cloud interpretation based on optimal neighborhoods, relevant features and efficient classifiers. ISPRS J. Photogramm. Remote Sens. 2015, 105, 286–304.
- Kučerová, D.; Vivodová, Z.; Kollárová, K. Silicon alleviates the negative effects of arsenic in poplar callus in relation to its nutrient concentrations. Plant Cell Tissue Organ Cult. 2021, 145, 275–289.
- Mandlburger, G.; Pfennigbauer, M.; Schwarz, R.; Flöry, S.; Nussbaumer, L. Concept and performance evaluation of a novel UAV-borne topo-bathymetric LiDAR sensor. Remote Sens. 2020, 12, 986.
- Hu, T.; Sun, X.; Su, Y.; Guan, H.; Sun, Q.; Kelly, M.; Guo, Q. Development and performance evaluation of a very low-cost UAV-LiDAR system for forestry applications. Remote Sens. 2021, 13, 77.
- Jurjević, L.; Gašparović, M.; Liang, X.; Balenović, I. Assessment of close-range remote sensing methods for DTM estimation in a lowland deciduous Forest. Remote Sens. 2021, 13, 2063.

| Category | LiDAR Metrics | Implication |
|---|---|---|
| Height metrics | HMean | Mean of heights |
| | HSD, HVAR | Standard deviation of heights and variance of heights |
| | HAAD | Average absolute deviation of heights |
| | HIQ | Interquartile distance of percentile height, H75th–H25th |
| | Percentile heights (H1, H5, H10, H20, H25, H30, H40, H50, H60, H70, H75, H80, H90, H95, and H99) | Height percentiles. Point clouds are sorted according to the elevation. Fifteen height percentile metrics ranging from 1% to 99% height |
| | Canopy height model value | Value of CHM |
| Canopy volume metrics | CC0.2m | Canopy cover above 0.2 m |
| | Gap | Canopy volume-related metric |
| | Leaf area index | Dimensionless quantity that characterizes plant canopies |
| Density metrics | Canopy return density (D0, D1, D2, D3, D4, D5, D6, D7, D8, and D9) | The proportion of points above the quantiles to the total number of points |
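
As an illustration of how the height metrics listed in this table can be derived, the following is a minimal sketch and not the authors' implementation. It assumes the point heights of one image object have already been normalized to height above ground; the function name `height_metrics`, the use of population (not sample) statistics, the linear-interpolation percentiles of `np.percentile`, and the reading of CC0.2m as the fraction of points above 0.2 m are all assumptions made for the example.

```python
import numpy as np

PERCENTILES = (1, 5, 10, 20, 25, 30, 40, 50, 60, 70, 75, 80, 90, 95, 99)

def height_metrics(z, cover_threshold=0.2):
    """Summarise normalised point heights (m above ground) for one object.

    Hypothetical helper: the statistic definitions are assumptions,
    chosen to match the verbal descriptions in the metrics table.
    """
    z = np.asarray(z, dtype=float)
    h25, h75 = np.percentile(z, [25, 75])
    metrics = {
        "HMean": z.mean(),                       # mean of heights
        "HSD": z.std(),                          # population standard deviation
        "HVAR": z.var(),                         # population variance
        "HAAD": np.mean(np.abs(z - z.mean())),   # average absolute deviation
        "HIQ": h75 - h25,                        # H75 - H25
        "CC0.2m": np.mean(z > cover_threshold),  # share of points above 0.2 m
    }
    # Fifteen percentile heights, H1 ... H99.
    for p in PERCENTILES:
        metrics[f"H{p}"] = np.percentile(z, p)
    return metrics

# Toy heights in metres, invented for the example.
heights = np.array([0.0, 0.1, 0.4, 0.6, 0.8, 0.9, 1.0, 1.2])
m = height_metrics(heights)
print({k: round(float(v), 3) for k, v in m.items() if not k.startswith("H") or k in ("HMean", "HIQ")})
```

Production tools often compute canopy cover from first returns only and may use other percentile conventions, so values can differ slightly from this sketch.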

| Feature | Implication |
|---|---|
| Spectral mean values (RGB) | The average spectral luminance of all pixels in a wavelength band within an image object |
| Brightness | Reflects the total spectral luminance difference among image objects |
| Length/width | Length-to-width ratio of the image object, measured on its minimum bounding rectangle |
| Shape index | Reflects the smoothness of image object boundaries |
| Textural feature | Entropy, contrast, homogeneity, and correlation calculated through the gray-level co-occurrence matrix (GLCM) with a distance of 1 [42]. The GLCM counts how often pairs of gray levels co-occur at a given pixel spacing and orientation in an image |
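
The GLCM texture features named in this table can be sketched in plain NumPy as follows. This is a minimal illustration under stated assumptions, not the software used in the study: the function name `glcm_features`, the symmetric normalization, the natural-log entropy, and the single horizontal offset are choices made for the example, and the input is assumed to be already quantized to integer gray levels `0 .. levels-1`.

```python
import numpy as np

def glcm_features(gray, levels, offset=(0, 1)):
    """Entropy, contrast, homogeneity and correlation from a symmetric GLCM.

    Hypothetical helper. `offset` is a non-negative (row, column) step;
    the default (0, 1) pairs each pixel with its right-hand neighbour,
    i.e. a distance of 1.
    """
    gray = np.asarray(gray, dtype=int)
    dr, dc = offset
    rows, cols = gray.shape
    ref = gray[:rows - dr, :cols - dc]   # reference pixels
    nbr = gray[dr:, dc:]                 # neighbours at the chosen offset
    counts = np.zeros((levels, levels), dtype=float)
    np.add.at(counts, (ref.ravel(), nbr.ravel()), 1.0)
    counts = counts + counts.T           # count every pair in both directions
    p = counts / counts.sum()            # joint probabilities
    i, j = np.indices(p.shape)
    mu_i, mu_j = (i * p).sum(), (j * p).sum()
    sd_i = np.sqrt(((i - mu_i) ** 2 * p).sum())
    sd_j = np.sqrt(((j - mu_j) ** 2 * p).sum())
    nonzero = p[p > 0]
    return {
        "entropy": float(-(nonzero * np.log(nonzero)).sum()),
        "contrast": float(((i - j) ** 2 * p).sum()),
        "homogeneity": float((p / (1.0 + (i - j) ** 2)).sum()),
        # Undefined (division by zero) for a perfectly uniform image.
        "correlation": float(((i - mu_i) * (j - mu_j) * p).sum() / (sd_i * sd_j)),
    }

# Small 4-level test image, invented for the example.
image = np.array([[0, 0, 1, 1],
                  [0, 0, 1, 1],
                  [0, 2, 2, 2],
                  [2, 2, 3, 3]])
texture = glcm_features(image, levels=4)
print(texture)
```

In object-based workflows the features are usually averaged over several orientations and computed per image object; the single-offset, whole-image form here is only meant to show the arithmetic.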

| Indicator | Equations |
|---|---|
| ABUA | \|C∩R\| / \|C\| |
| ABPA | \|C∩R\| / \|R\| |
| ABOA | …R\|) |
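
The two complete ratios in this table, ABUA and ABPA, can be evaluated on rasterized masks as in the sketch below. It is a hedged illustration only: it assumes that C is the area classified as the target class and R is the reference area of that class, which is the usual convention but is not spelled out here, and the function name `area_based_accuracy` and the `cell_area` parameter are hypothetical. ABOA is left out because its formula is not recoverable from the table.

```python
import numpy as np

def area_based_accuracy(classified, reference, cell_area=1.0):
    """Return (ABUA, ABPA) for one class from two boolean masks.

    Hypothetical helper: `classified` plays the role of C, `reference`
    the role of R, and areas are approximated by counting raster cells.
    """
    c = np.asarray(classified, dtype=bool)
    r = np.asarray(reference, dtype=bool)
    intersection = np.logical_and(c, r).sum() * cell_area  # |C ∩ R|
    abua = intersection / (c.sum() * cell_area)            # |C ∩ R| / |C|
    abpa = intersection / (r.sum() * cell_area)            # |C ∩ R| / |R|
    return float(abua), float(abpa)

# Toy masks, invented for the example (1 = tea plucking area).
classified_mask = [[1, 1, 0],
                   [1, 0, 0]]
reference_mask = [[1, 1, 1],
                  [0, 0, 1]]
abua, abpa = area_based_accuracy(classified_mask, reference_mask)
print(f"ABUA = {abua:.3f}, ABPA = {abpa:.3f}")
```

Because both ratios share the numerator, a classification that over-predicts the class lowers ABUA while leaving ABPA high, and under-prediction does the reverse.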

| Type | Percentage of Point Density (%) | Density of All Returns (pts/m²) | Density of Ground Returns (pts/m²) |
|---|---|---|---|
| LiDAR ¹ | 100 | 25.44 | 0.84 |
| | 50 | 12.71 | 0.65 |
| | 10 | 2.55 | 0.35 |
| | 5 | 1.27 | 0.24 |
| | 1 | 0.25 | 0.08 |
| Photogrammetric ² | 100 | 22.27 | 1.16 |
| | 50 | 9.72 | 1.13 |
| | 10 | 2.46 | 0.71 |
| | 5 | 1.65 | 0.55 |
| | 1 | 0.36 | 0.17 |

| Type | Percentage of Point Density (%) | Density of Ground Returns (pts/m²) | MAE (m) | RMSE (m) |
|---|---|---|---|---|
| LiDAR ¹ | 100 | 0.84 | 0.038 | 0.060 |
| | 50 | 0.65 | 0.051 | 0.072 |
| | 10 | 0.35 | 0.059 | 0.087 |
| | 5 | 0.24 | 0.337 | 1.884 |
| | 1 | 0.08 | 0.735 | 2.253 |
| Photogrammetric ² | 100 | 1.16 | 0.23 | 0.256 |
| | 50 | 1.13 | 0.241 | 0.266 |
| | 10 | 0.71 | 0.244 | 0.268 |
| | 5 | 0.55 | 0.236 | 0.261 |
| | 1 | 0.17 | 0.274 | 0.477 |
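
For readers who want to reproduce the two error measures reported in this table, the sketch below shows the standard definitions of MAE and RMSE. The function name `dtm_errors` and the sample elevations are hypothetical, and the sketch assumes the DTM and the reference elevations are sampled at the same locations; which reference surface the study used is not stated in this section.

```python
import numpy as np

def dtm_errors(dtm_elev, ref_elev):
    """Return (MAE, RMSE) in the units of the inputs (metres here)."""
    diff = np.asarray(dtm_elev, dtype=float) - np.asarray(ref_elev, dtype=float)
    mae = np.mean(np.abs(diff))         # mean absolute error
    rmse = np.sqrt(np.mean(diff ** 2))  # root mean square error
    return float(mae), float(rmse)

# Invented elevations (m) at four check locations.
reference = [10.0, 12.0, 15.0, 11.0]
estimated = [10.1, 11.9, 15.2, 10.8]
mae, rmse = dtm_errors(estimated, reference)
print(f"MAE = {mae:.3f} m, RMSE = {rmse:.3f} m")
```

RMSE can never be smaller than MAE, and it grows faster when a few errors are large. A wide gap between the two, as in the LiDAR rows at 5% and 1% density, is therefore consistent with a small number of large local errors in the DTM.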

| LiDAR ¹: Percentage of Point Density (%) | Point Density (pts/m²) | Number of Selected Features | Description of Selected Features |
|---|---|---|---|
| 100 | 25.44 | 26 (16) | Red band, green band, blue band, brightness, length/width, shape index, entropy, contrast, homogeneity, correlation, D0, D9, LAI (leaf area index), Gap, CC0.2m, H10, H80, H90, H95, H99, HVAR, HIQ, HAAD, HMean, HSD, and CHM |
| 50 | 12.71 | 23 (14) | Red band, green band, blue band, brightness, length/width, shape index, entropy, contrast, homogeneity, D8, LAI, Gap, CC0.2m, H20, H25, H40, H50, H60, H70, H75, HVAR, HMean, and CHM |
| 10 | 2.55 | 19 (9) | Red band, green band, blue band, brightness, length/width, shape index, entropy, contrast, homogeneity, correlation, D1, D3, LAI, Gap, CC0.2m, H30, H40, HVAR, and CHM |
| 5 | 1.27 | 9 (2) | Red band, green band, blue band, brightness, length/width, shape index, entropy, Gap, and CHM |
| 1 | 0.25 | 9 (1) | Red band, green band, blue band, brightness, length/width, shape index, entropy, contrast, and CHM |

| Photogrammetric ¹: Percentage of Point Density (%) | Point Density (pts/m²) | Number of Selected Features | Description of Selected Features |
|---|---|---|---|
| 100 | 22.27 | 22 (12) | Red band, green band, blue band, brightness, length/width, shape index, entropy, contrast, homogeneity, correlation, Gap, CC0.2m, H10, H80, H90, H95, H99, HIQ, HAAD, HMean, HSD, and CHM |
| 50 | 9.72 | 19 (11) | Red band, green band, blue band, brightness, length/width, shape index, entropy, contrast, LAI, Gap, CC0.2m, H20, H25, H50, H60, H70, HVAR, HMean, and CHM |
| 10 | 2.46 | 12 (3) | Red band, green band, blue band, brightness, length/width, shape index, entropy, contrast, homogeneity, Gap, CC0.2m, and CHM |
| 5 | 1.65 | 9 (2) | Red band, green band, blue band, brightness, length/width, shape index, entropy, Gap, and CHM |
| 1 | 0.36 | 9 (1) | Red band, green band, blue band, brightness, length/width, shape index, entropy, contrast, and CHM |

| Data | Percentage of Point Density (%) | RF OA (Kappa) | ELM OA (Kappa) |
|---|---|---|---|
| LiDAR | 100 | 94.39% (0.91) | 93.44% (0.91) |
| | 50 | 93.80% (0.90) | 92.98% (0.89) |
| | 10 | 93.01% (0.88) | 92.27% (0.87) |
| | 5 | 91.93% (0.86) | 90.89% (0.85) |
| | 1 | 90.65% (0.85) | 89.78% (0.85) |
| Photogrammetric | 100 | 91.58% (0.86) | 90.07% (0.86) |
| | 50 | 91.04% (0.85) | 89.74% (0.84) |
| | 10 | 90.55% (0.84) | 89.25% (0.83) |
| | 5 | 90.64% (0.84) | 88.57% (0.83) |
| | 1 | 90.55% (0.84) | 88.32% (0.83) |
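
The OA and Kappa values in this table are standard confusion-matrix statistics. The sketch below shows how both follow from a confusion matrix; the function name `overall_accuracy_and_kappa` and the 2 × 2 matrix are hypothetical and do not come from the study.

```python
import numpy as np

def overall_accuracy_and_kappa(confusion):
    """Return (overall accuracy, Cohen's kappa) for a square confusion matrix.

    Rows and columns must list the classes in the same order.
    """
    cm = np.asarray(confusion, dtype=float)
    n = cm.sum()
    observed = np.trace(cm) / n                                # overall accuracy
    expected = (cm.sum(axis=0) * cm.sum(axis=1)).sum() / n**2  # chance agreement
    kappa = (observed - expected) / (1.0 - expected)
    return float(observed), float(kappa)

# Invented counts for a tea / non-tea validation sample.
example_matrix = [[45, 5],
                  [10, 40]]
oa, kappa = overall_accuracy_and_kappa(example_matrix)
print(f"OA = {oa:.2%}, Kappa = {kappa:.2f}")
```

Kappa discounts the agreement expected by chance, which is why it sits below OA in every row of the table.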

| Density ¹ | Class | LiDAR ABUA | LiDAR ABPA | LiDAR ABOA | Photogrammetric ABUA | Photogrammetric ABPA | Photogrammetric ABOA |
|---|---|---|---|---|---|---|---|
| 100 | tea | 78.46 | 85.07 | 87.63 | 76.42 | 74.34 | 84.32 |
| | non-tea | 92.57 | 88.81 | | 87.91 | 89.05 | |
| 50 | tea | 76.56 | 86.22 | 87.01 | 74.41 | 80.12 | 83.58 |
| | non-tea | 92.99 | 87.36 | | 90.15 | 86.85 | |
| 10 | tea | 75.86 | 79.87 | 85.49 | 73.84 | 73.60 | 83.04 |
| | non-tea | 90.14 | 87.87 | | 87.42 | 87.55 | |
| 5 | tea | 72.79 | 72.61 | 82.64 | 72.52 | 71.86 | 82.11 |
| | non-tea | 87.29 | 87.40 | | 86.59 | 86.96 | |
| 1 | tea | 72.05 | 71.04 | 81.47 | 71.56 | 70.79 | 81.57 |
| | non-tea | 86.41 | 85.75 | | 86.25 | 86.69 | |

| Density ¹ | Class | LiDAR ABUA | LiDAR ABPA | LiDAR ABOA | Photogrammetric ABUA | Photogrammetric ABPA | Photogrammetric ABOA |
|---|---|---|---|---|---|---|---|
| 100 | tea | 76.54 | 82.92 | 86.64 | 76.93 | 74.83 | 84.54 |
| | non-tea | 92.61 | 88.42 | | 88.02 | 89.17 | |
| 50 | tea | 74.74 | 84.24 | 86.43 | 72.87 | 78.47 | 83.04 |
| | non-tea | 93.12 | 89.74 | | 88.47 | 85.23 | |
| 10 | tea | 75.83 | 79.46 | 85.42 | 73.74 | 73.43 | 83.12 |
| | non-tea | 90.11 | 90.55 | | 87.61 | 87.75 | |
| 5 | tea | 72.98 | 72.85 | 82.59 | 72.86 | 72.19 | 82.48 |
| | non-tea | 87.17 | 87.24 | | 86.98 | 87.36 | |
| 1 | tea | 72.28 | 71.20 | 81.58 | 70.98 | 70.21 | 81.28 |
| | non-tea | 86.02 | 86.53 | | 87.11 | 86.53 | |

| Case | Flight height (m) | Point cloud density (pts/m²) | Scanning width (m) | Time consumed (hour) | Cost (USD) | Cost (USD per ha) |
|---|---|---|---|---|---|---|
| Photogrammetric | 60 | 22.27 | 100 | 12.80 | 2560 | 25.60 |
| | 90 | 9.72 | 150 | 8.53 | 1706 | 17.06 |
| | 120 | 2.46 | 200 | 6.40 | 1280 | 12.80 |
| | 200 | 1.65 | 334 | 3.83 | 766 | 7.66 |
| | 300 | 0.36 | 500 | 2.56 | 512 | 5.12 |
| LiDAR | 60 | 25.44 | 100 | 44.80 | 44,800 | 448 |
| | 90 | 12.71 | 150 | 29.86 | 29,860 | 299 |
| | 120 | 2.55 | 200 | 22.40 | 22,400 | 224 |
| | 200 | 1.27 | 334 | 13.41 | 13,410 | 134 |
| | 300 | 0.25 | 500 | 8.96 | 8960 | 90 |
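
The cost figures in this table are internally consistent with a simple model: cost equals an hourly rate times flight time, and cost per hectare divides that by the surveyed area. The sketch below recomputes the rows under that reading. The hourly rates (200 USD/h for photogrammetry, 1000 USD/h for LiDAR) and the 100 ha area are not stated in this section; they are inferred here by dividing the tabulated costs by the tabulated hours and per-hectare costs, and the names `AREA_HA`, `CASES`, and `cost_rows` are hypothetical.

```python
# Inferred, not stated in the source: 2560 USD / 25.60 USD per ha = 100 ha.
AREA_HA = 100.0

# Hours are copied from the table; rates are inferred as cost / hours.
CASES = {
    "Photogrammetric": {"rate_usd_per_h": 200.0,
                        "hours": [12.80, 8.53, 6.40, 3.83, 2.56]},
    "LiDAR": {"rate_usd_per_h": 1000.0,
              "hours": [44.80, 29.86, 22.40, 13.41, 8.96]},
}

def cost_rows(rate_usd_per_h, hours, area_ha=AREA_HA):
    """Return a list of (total cost, cost per hectare) pairs, one per flight."""
    rows = []
    for h in hours:
        total = rate_usd_per_h * h
        rows.append((round(total, 2), round(total / area_ha, 2)))
    return rows

photo_costs = cost_rows(**{"rate_usd_per_h": CASES["Photogrammetric"]["rate_usd_per_h"],
                           "hours": CASES["Photogrammetric"]["hours"]})
lidar_costs = cost_rows(CASES["LiDAR"]["rate_usd_per_h"], CASES["LiDAR"]["hours"])

for name, rows in (("Photogrammetric", photo_costs), ("LiDAR", lidar_costs)):
    for total, per_ha in rows:
        print(f"{name:16s} {total:10.2f} USD  {per_ha:8.2f} USD/ha")
```

Under these assumptions the LiDAR per-hectare values come out as 448.0, 298.6, 224.0, 134.1, and 89.6 USD, which match the tabulated 448, 299, 224, 134, and 90 after rounding to whole dollars. The tabulated flight times also scale almost exactly with the inverse of the scanning width (for example, 12.80 h × 100 m ≈ 6.40 h × 200 m).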

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, Q.; Hu, M.; Zhou, Y.; Wan, B.; Jiang, L.; Zhang, Q.; Wang, D. Effects of UAV-LiDAR and Photogrammetric Point Density on Tea Plucking Area Identification. Remote Sens. 2022, 14, 1505. https://doi.org/10.3390/rs14061505