A Survey on Deep Learning-Based Change Detection from High-Resolution Remote Sensing Images

Abstract

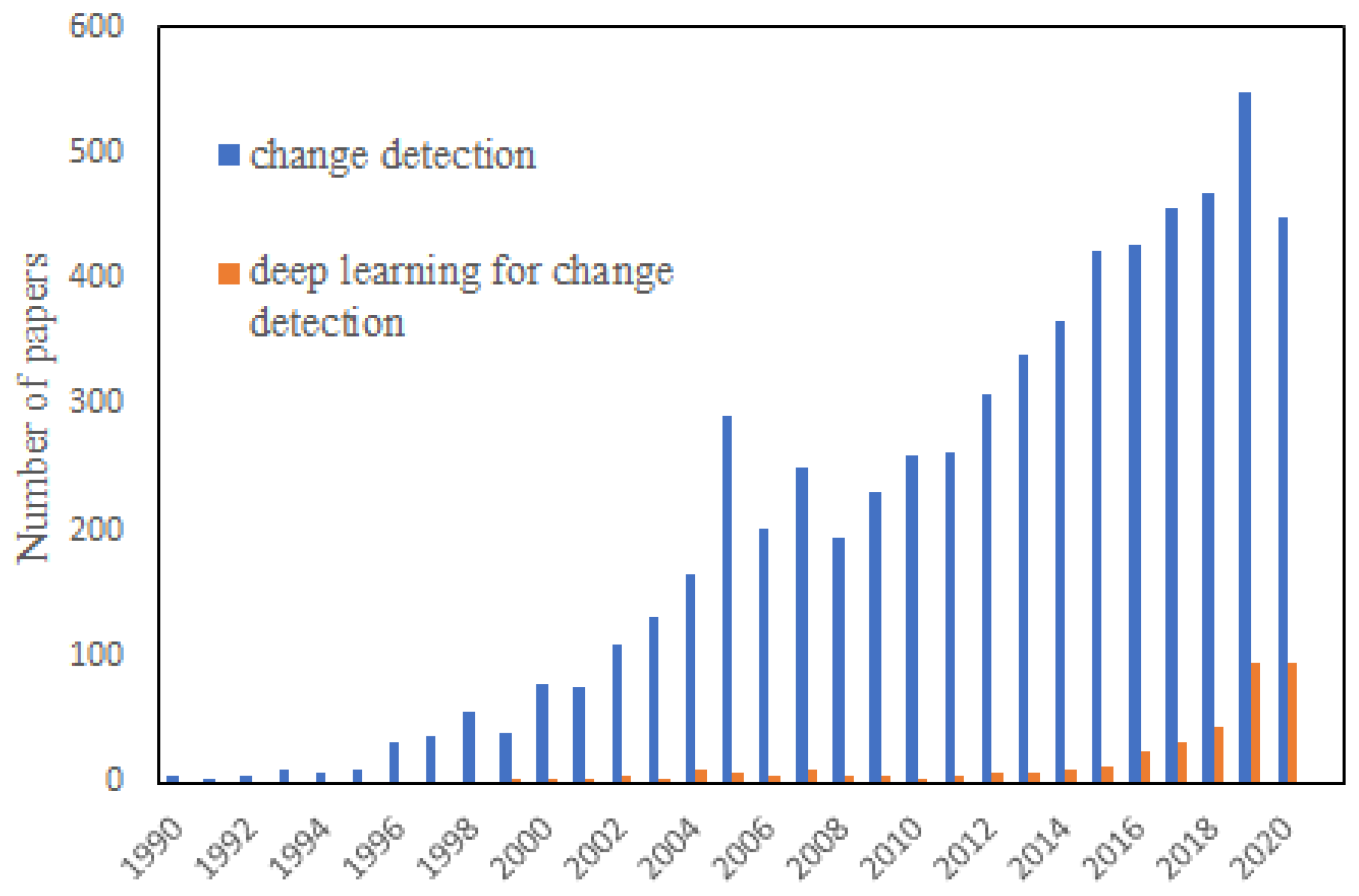

1. Introduction

- (1) We provide a systematic review of deep learning-based change detection from HR remote sensing images, covering the most popular feature extraction networks and the construction mechanisms of each part of the change detection framework.

- (2) We analyze the granularity of change detection algorithms according to the detection unit, which makes it possible to choose a suitable method independently of the particular application.

- (3) We present the popular datasets used for change detection from HR remote sensing images in detail and examine the representative evaluation metrics for this task.

- (4) We offer several suggestions for future research on change detection using remote sensing data.

2. Deep Learning-Based Change Detection Algorithms

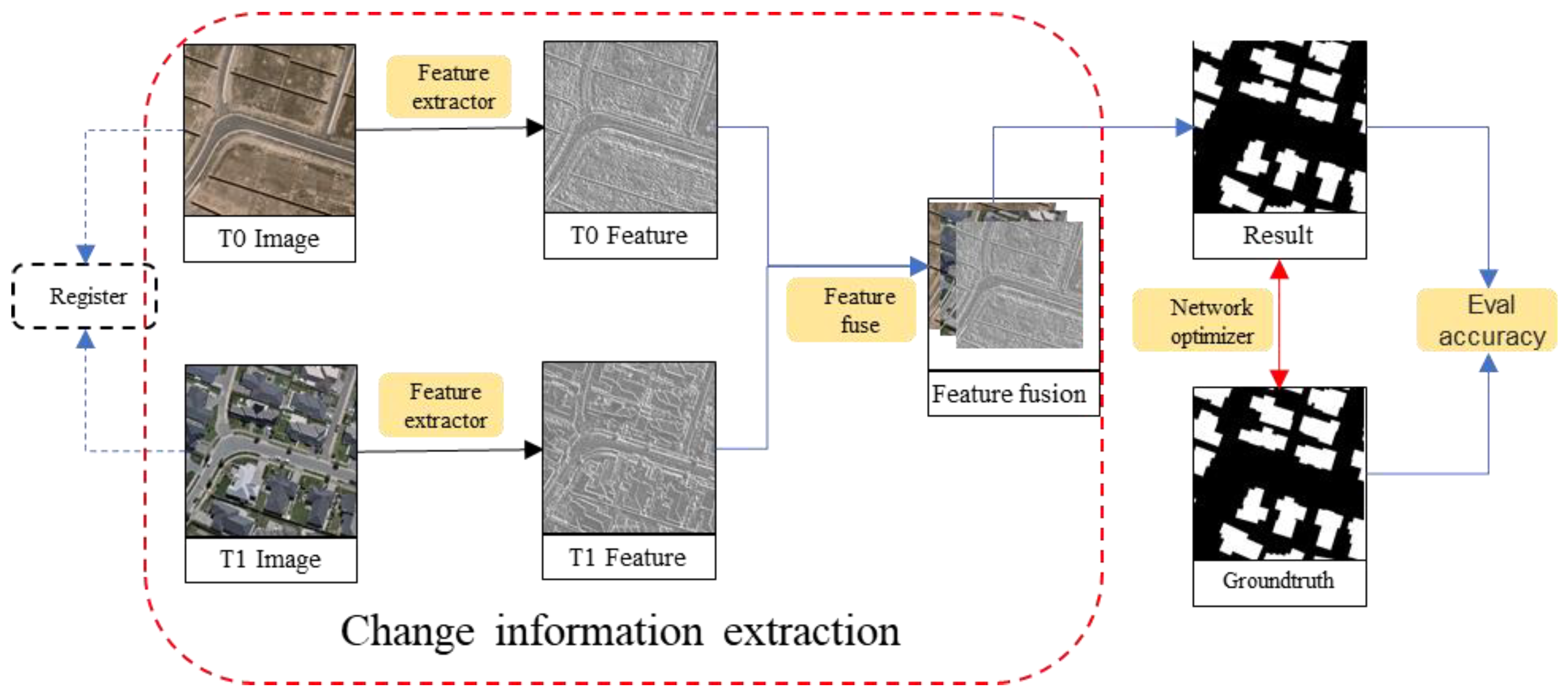

2.1. Change Detection Framework

2.2. Mainstream Feature Extraction Network

2.2.1. Encoder–Decoder and Autoencoder (AE) Models

2.2.2. Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM)

2.2.3. Convolutional Neural Networks (CNNs)

2.2.4. Generative Adversarial Networks (GANs)

2.2.5. Transformer-Based Networks

2.3. Change Information Extraction

2.3.1. Feature Extraction Strategy

2.3.2. Feature Similarity Strategy

2.3.3. Feature Fusion Strategy

2.4. Optimization Strategy

3. The Analysis of Granularity for Change Detection

3.1. Scene-Level Change Detection

3.2. Region-Level Change Detection

3.2.1. Patch/Super-Pixel-Based Change Detection

3.2.2. Pixel-Based Change Detection

3.2.3. Object-Based Change Detection

4. Related Datasets and Evaluation Metrics

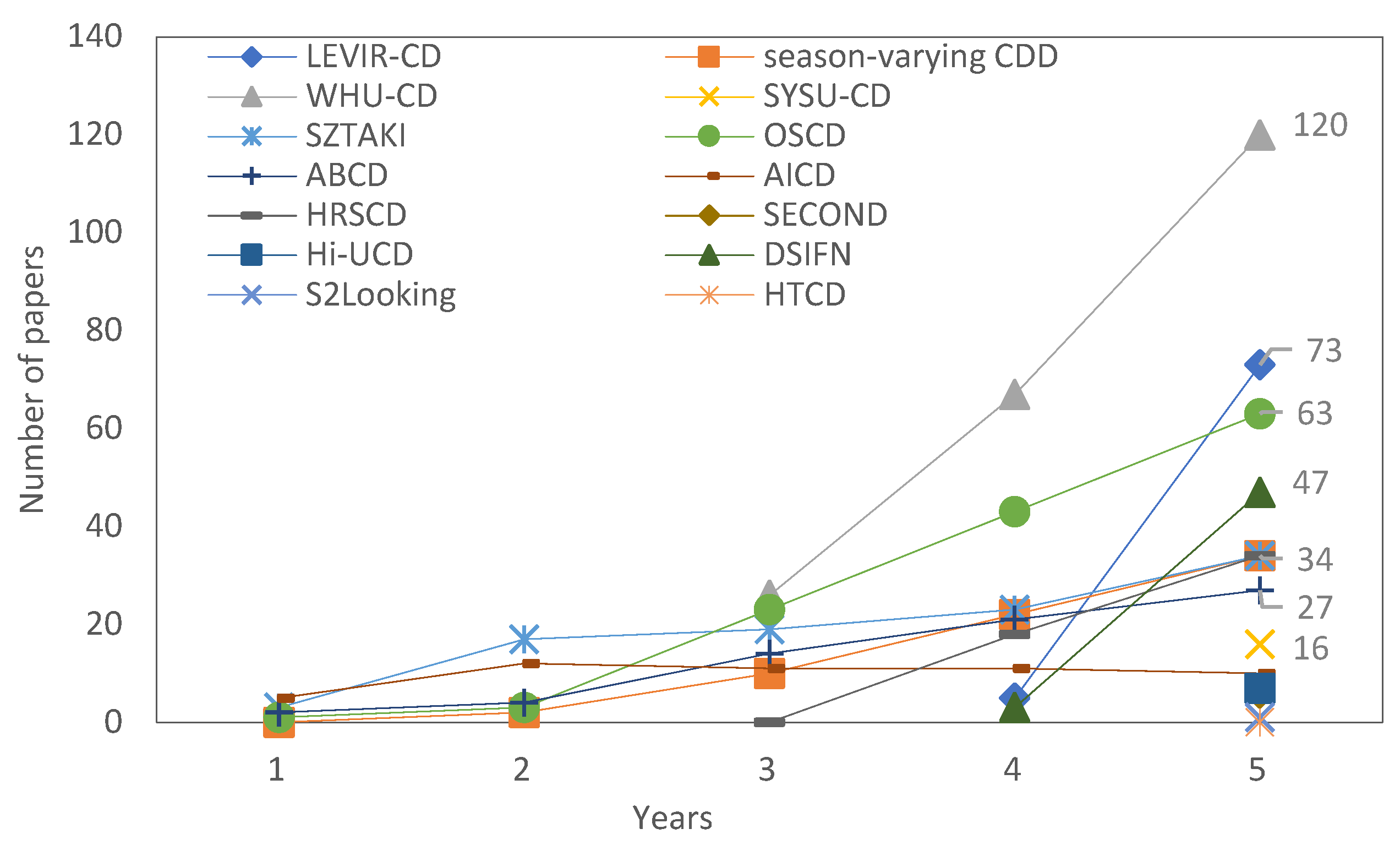

4.1. Popular Datasets for Change Detection

4.1.1. Binary Change Detection Dataset

4.1.2. Semantic Change Detection Dataset

4.1.3. Buildings Change Detection Dataset

4.2. Evaluation Metrics

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Singh, A. Digital change detection techniques using remotely-sensed data. Int. J. Remote Sens. 1989, 10, 989–1003. [Google Scholar] [CrossRef] [Green Version]

- Zheng, Z.; Zhong, Y.; Wang, J.; Ma, A.; Zhang, L. Building damage assessment for rapid disaster response with a deep object-based semantic change detection framework: From natural disasters to man-made disasters. Remote Sens. Environ. 2021, 265, 112636. [Google Scholar] [CrossRef]

- Moya, L.; Muhari, A.; Adriano, B.; Koshimura, S.; Mas, E.; Marval-Perez, L.R.; Yokoya, N. Detecting urban changes using phase correlation and ℓ1-based sparse model for early disaster response: A case study of the 2018 Sulawesi Indonesia earthquake-tsunami. Remote Sens. Environ. 2020, 242, 111743–111756. [Google Scholar] [CrossRef]

- Liu, R.; Kuffer, M.; Persello, C. The Temporal Dynamics of Slums Employing a CNN-Based Change Detection Approach. Remote Sens. 2019, 11, 2844. [Google Scholar] [CrossRef] [Green Version]

- Bruzzone, L.; Serpico, S.B. An iterative technique for the detection of land-cover transitions in multitemporal remote-sensing images. IEEE Trans. Geosci. Remote Sens. 1997, 35, 858–867. [Google Scholar] [CrossRef] [Green Version]

- De Bem, P.P.; De Carvalho Junior, O.A.; Fontes Guimarães, R.; Trancoso Gomes, R.A. Change Detection of Deforestation in the Brazilian Amazon Using Landsat Data and Convolutional Neural Networks. Remote Sens. 2020, 12, 901. [Google Scholar] [CrossRef] [Green Version]

- Zhang, Z.; Vosselman, G.; Gerke, M.; Tuia, D.; Yang, M.Y. Change Detection between Multimodal Remote Sensing Data Using Siamese CNN. arXiv 2018, arXiv:1807.09562. [Google Scholar]

- Chen, J.; Liu, H.; Hou, J.; Yang, M.; Deng, M. Improving Building Change Detection in VHR Remote Sensing Imagery by Combining Coarse Location and Co-Segmentation. ISPRS Int. J. Geo-Inf. 2018, 7, 213. [Google Scholar] [CrossRef] [Green Version]

- Qin, R.; Tian, J.; Reinartz, P. 3D change detection-Approaches and applications. ISPRS J. Photogramm. Remote Sens. 2016, 122, 41–56. [Google Scholar] [CrossRef] [Green Version]

- Ban, Y.; Yousif, O. Change Detection Techniques: A Review. In Multitemporal Remote Sensing. Remote Sensing and Digital Image Processing; Ban, Y., Yousif, O., Eds.; Remote Sensing and Digital Image Processing; Springer: Cham, Switzerland, 2016; Volume 20, pp. 19–43. [Google Scholar]

- Liu, T.; Yang, L.; Lunga, D. Change detection using deep learning approach with object-based image analysis. Remote Sens. Environ. 2021, 256, 112308. [Google Scholar] [CrossRef]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2012, 60, 84–90. [Google Scholar] [CrossRef]

- Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. In Proceedings of the Conference On Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 8–10 June 2015; pp. 3431–3440. [Google Scholar]

- Chen, L.-C.; Yang, Y.; Wang, J.; Xu, W.; Yuille, A.L. Attention to Scale: Scale-Aware Semantic Image Segmentation. In Proceedings of the Conference on Computer Vision and Pattern Recognition(CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 3640–3649. [Google Scholar]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. arXiv 2017, arXiv:1706.03762. [Google Scholar]

- Chen, L.-C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 833–851. [Google Scholar]

- Peng, D.; Zhang, M.; Wanbing, G. End-to-End Change Detection for High Resolution Satellite Images Using Improved UNet++. Remote Sens. 2019, 11, 1382. [Google Scholar] [CrossRef] [Green Version]

- Xu, X.; Li, W.; Ran, Q.; Du, Q.; Gao, L.; Zhang, B. Multisource Remote Sensing Data Classification Based on Convolutional Neural Network. IEEE Trans. Geosci. Remote Sens. 2018, 56, 937–949. [Google Scholar] [CrossRef]

- Li, Y.; Zhang, H.; Xue, X.; Jiang, Y.; Shen, Q. Deep learning for remote sensing image classification: A survey. Wiley Interdiscip Rev. Data Min. Knowl. Discov. 2018, 8, e1264. [Google Scholar] [CrossRef] [Green Version]

- Deng, Z.; Sun, H.; Zhou, S.; Zhao, J.; Lei, L.; Zou, H. Multi-scale object detection in remote sensing imagery with convolutional neural networks. ISPRS J. Photogramm. Remote Sens. 2018, 145, 3–22. [Google Scholar] [CrossRef]

- Zhang, Z.; Jiang, R.; Mei, S.; Zhang, S.; Zhang, Y. Rotation-Invariant Feature Learning for Object Detection in VHR Optical Remote Sensing Images by Double-Net. IEEE Access 2020, 8, 20818–20827. [Google Scholar] [CrossRef]

- Newell, A.; Yang, K.; Deng, J. Stacked Hourglass Networks for Human Pose Estimation. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; pp. 483–499. [Google Scholar]

- Sun, K.; Xiao, B.; Liu, D.; Wang, J. Deep High-Resolution Representation Learning for Human Pose Estimation. In Proceedings of the Conference on Computer Vision And Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019. [Google Scholar]

- Zhu, Q.; Sun, X.; Zhong, Y.; Zhang, L. High-Resolution Remote Sensing Image Scene Understanding: A Review. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium(IGARSS), Yokohama, Japan, 28 July–2 August 2019; pp. 3061–3064. [Google Scholar]

- Michael, K.; Arnt-Børre, S.; Robert, J. Semantic Segmentation of Small Objects and Modeling of Uncertainty in Urban Remote Sensing Images Using Deep Convolutional Neural Networks. In Proceedings of the Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 680–688. [Google Scholar]

- Gong, M.; Zhao, J.; Liu, J.; Miao, Q.; Jiao, L. Change Detection in Synthetic Aperture Radar Images Based on Deep Neural Networks. IEEE Trans. Neural Netw. Learn. Syst. 2016, 27, 125–138. [Google Scholar] [CrossRef]

- Gao, F.; Dong, J.; Li, B.; Xu, Q. Automatic Change Detection in Synthetic Aperture Radar Images Based on PCANet. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1792–1796. [Google Scholar] [CrossRef]

- Yao, S.; Shahzad, M.; Zhu, X.X. Building height estimation in single SAR image using OSM building footprints. In Proceedings of the 2017 Joint Urban Remote Sensing Event (JURSE), Dubai, United Arab Emirates, 6–8 March 2017; pp. 1–4. [Google Scholar]

- Peng, D.; Bruzzone, L.; Zhang, Y.; Guan, H.; Ding, H.; Huang, X. SemiCDNet: A Semisupervised Convolutional Neural Network for Change Detection in High Resolution Remote-Sensing Images. IEEE Trans. Geosci. Remote Sens. 2021, 59, 5891–5906. [Google Scholar] [CrossRef]

- Jacobsen, K. Characteristics of very high resolution optical satellites for Topographic mapping. In ISPRS-International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences; Copernicus GmbH: Göttingen, Germany, 2011; Volume XXXVIII-4/W19, pp. 137–142. [Google Scholar] [CrossRef] [Green Version]

- Wang, M.; Tan, K.; Jia, X.; Wang, X.; Chen, Y. A Deep Siamese Network with Hybrid Convolutional Feature Extraction Module for Change Detection Based on Multi-sensor Remote Sensing Images. Remote Sens. 2020, 12, 205. [Google Scholar] [CrossRef] [Green Version]

- Bao, T.; Fu, C.; Fang, T.; Huo, H. PPCNET: A Combined Patch-Level and Pixel-Level End-to-End Deep Network for High-Resolution Remote Sensing Image Change Detection. IEEE Geosci. Remote Sens. Lett. 2020, 17, 1797–1801. [Google Scholar] [CrossRef]

- Zhang, M.; Shi, W. A Feature Difference Convolutional Neural Network-Based Change Detection Method. IEEE Trans. Geosci. Remote Sens. 2020, 58, 7232–7246. [Google Scholar] [CrossRef]

- Hussain, M.; Chen, D.; Cheng, A.; Wei, H.; Stanley, D. Change detection from remotely sensed images: From pixel-based to object-based approaches. ISPRS J. Photogramm. Remote Sens. 2013, 80, 91–106. [Google Scholar] [CrossRef]

- Bovolo, F.; Bruzzone, L. The Time Variable in Data Fusion: A Change Detection Perspective. IEEE Geosci. Remote Sens. Mag. 2015, 3, 8–26. [Google Scholar] [CrossRef]

- Tewkesbury, A.P.; Comber, A.J.; Tate, N.J.; Lamb, A.; Fisher, P.F. A critical synthesis of remotely sensed optical image change detection techniques. Remote Sens. Environ. 2015, 160, 1–14. [Google Scholar] [CrossRef] [Green Version]

- You, Y.; Cao, J.; Zhou, W. A Survey of Change Detection Methods Based on Remote Sensing Images for Multi-Source and Multi-Objective Scenarios. Remote Sens. 2020, 12, 2460. [Google Scholar] [CrossRef]

- Shi, W.; Zhang, M.; Zhang, R.; Chen, S.; Zhan, Z. Change Detection Based on Artificial Intelligence: State-of-the-Art and Challenges. Remote Sens. 2020, 12, 1688. [Google Scholar] [CrossRef]

- Khelifi, L.; Mignotte, M. Deep Learning for Change Detection in Remote Sensing Images: Comprehensive Review and Meta-Analysis. IEEE Access 2020, 8, 126385–126400. [Google Scholar] [CrossRef]

- Lan, G.; Yoshua, B.; Aaron, C. Deep Learning; MIT Press: Cambridge, MA, USA, 2016. [Google Scholar]

- Fischer, A.; Igel, C. An Introduction to Restricted Boltzmann Machines. In Proceedings of the Progress in Pattern Recognition, Image Analysis, Computer Vision, and Applications(CIARP), Buenos Aires, Argentina, 3–6 September 2012; pp. 14–36. [Google Scholar]

- Liu, M.-Y.; Breuel, T.; Kautz, J. Unsupervised Image-to-Image Translation Networks. In Proceedings of the International Conference on Neural Information Processing Systems (NIPS), Long Beach, CA, USA, 4–9 December 2017. [Google Scholar]

- Baziotis, C.; Androutsopoulos, I.; Konstas, I.; Potamianos, A. SEQ^3: Differentiable Sequence-to-Sequence-to-Sequence Autoencoder for Unsupervised Abstractive Sentence Compression. In Proceedings of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies(NAACL), Minneapolis, MN, USA, 6–7 June 2019. [Google Scholar]

- Zabalza, J.; Ren, J.; Zheng, J.; Zhao, H.; Qing, C.; Yang, Z.; Du, P.; Marshall, S. Novel segmented stacked autoencoder for effective dimensionality reduction and feature extraction in hyperspectral imaging. Neurocomputing 2016, 185, 1–10. [Google Scholar] [CrossRef] [Green Version]

- Nurmaini, S.; Darmawahyuni, A.; Sakti Mukti, A.N.; Rachmatullah, M.N.; Firdaus, F.; Tutuko, B. Deep Learning-Based Stacked Denoising and Autoencoder for ECG Heartbeat Classification. Electronics 2020, 9, 135. [Google Scholar] [CrossRef] [Green Version]

- Liu, G.; Li, L.; Jiao, L.; Dong, Y.; Li, X. Stacked Fisher autoencoder for SAR change detection. Pattern Recogn. 2019, 96, 106971. [Google Scholar] [CrossRef]

- Gong, M.; Yang, H.; Zhang, P. Feature learning and change feature classification based on deep learning for ternary change detection in SAR images. ISPRS J. Photogramm. Remote Sens. 2017, 129, 212–225. [Google Scholar] [CrossRef]

- Ye, X.; Wang, L.; Xing, H.; Huang, L. Denoising hybrid noises in image with stacked autoencoder. In Proceedings of the 2015 IEEE International Conference on Information and Automation(ICIA), Lijiang, China, 8–10 August 2015; pp. 2720–2724. [Google Scholar]

- Shao, Z.; Deng, J.; Wang, L.; Fan, Y.; Sumari, N.S.; Cheng, Q. Fuzzy autoencode based cloud detection for remote sensing imagery. Remote Sens. 2017, 9, 311. [Google Scholar] [CrossRef] [Green Version]

- Iyer, V.; Aved, A.; Howlett, T.B.; Carlo, J.T.; Abayowa, B. Autoencoder versus pre-trained CNN networks: Deep-features applied to accelerate computationally expensive object detection in real-time video streams. In Proceedings of the Target and Background Signatures IV, Berlin, Germany, 10–11 September 2018; p. 107940Y. [Google Scholar]

- Amberkar, A.; Awasarmol, P.; Deshmukh, G.; Dave, P. Speech Recognition using Recurrent Neural Networks. In Proceedings of the International Conference on Current Trends towards Converging Technologies(ICCTCT), Coimbatore, India, 1–3 March 2018; pp. 1–4. [Google Scholar]

- Liu, P.; Qiu, X.; Huang, X. Recurrent neural network for text classification with multi-task learning. In Proceedings of the Proceedings of the Twenty-Fifth International Joint Conference on Artificial Intelligence(IJCAI), New York, NY, USA, 9–15 July 2016; pp. 2873–2879. [Google Scholar]

- Zhong, Y.; Li, H.; Dai, Y. Open-World Stereo Video Matching with Deep RNN. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018. [Google Scholar]

- Hewamalage, H.; Bergmeir, C.; Bandara, K. Recurrent Neural Networks for Time Series Forecasting: Current status and future directions. Int. J. Forecast. 2021, 37, 388–427. [Google Scholar] [CrossRef]

- Shi, W.; Zhang, M.; Ke, H.; Fang, X.; Zhan, Z.; Chen, S. Landslide recognition by deep convolutional neural network and change detection. IEEE Trans. Geosci. Remote Sens. 2020, 59, 4654–4672. [Google Scholar] [CrossRef]

- Mou, L.; Bruzzone, L.; Zhu, X. Learning Spectral-Spatial-Temporal Features via a Recurrent Convolutional Neural Network for Change Detection in Multispectral Imagery. IEEE Trans. Geosci. Remote Sens. 2019, 57, 924–935. [Google Scholar] [CrossRef] [Green Version]

- Liu, R.; Cheng, Z.; Zhang, L.; Li, J. Remote Sensing Image Change Detection Based on Information Transmission and Attention Mechanism. IEEE Access 2019, 7, 156349–156359. [Google Scholar] [CrossRef]

- Lyu, H.; Lu, H.; Mou, L. Learning a Transferable Change Rule from a Recurrent Neural Network for Land Cover Change Detection. Remote Sens. 2016, 8, 506. [Google Scholar] [CrossRef] [Green Version]

- Chen, H.; Wu, C.; Du, B.; Zhang, L.; Wang, L. Change Detection in Multisource VHR Images via Deep Siamese Convolutional Multiple-Layers Recurrent Neural Network. IEEE Trans. Geosci. Remote Sens. 2020, 58, 2848–2864. [Google Scholar] [CrossRef]

- Ordóñez, F.J.; Roggen, D. Deep Convolutional and LSTM Recurrent Neural Networks for Multimodal Wearable Activity Recognition. Sensors 2016, 16, 115. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Song, A.; Choi, J.; Han, Y.; Kim, Y. Change Detection in Hyperspectral Images Using Recurrent 3D Fully Convolutional Networks. Remote Sens. 2018, 10, 1827. [Google Scholar] [CrossRef] [Green Version]

- Lyu, H.; Lu, H. Learning a transferable change detection method by recurrent neural network. In Proceedings of the 2016 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; pp. 5157–5160. [Google Scholar]

- Sarigul, M.; Ozyildirim, B.M.; Avci, M. Differential convolutional neural network. Neural Netw. 2019, 116, 279–287. [Google Scholar] [CrossRef] [PubMed]

- Minaee, S.; Boykov, Y.Y.; Porikli, F.; Plaza, A.J.; Kehtarnavaz, N.; Terzopoulos, D. Image Segmentation Using Deep Learning: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021, Early Access. [CrossRef] [PubMed]

- Han, Y.; Tang, B.P.; Deng, L. An enhanced convolutional neural network with enlarged receptive fields for fault diagnosis of planetary gearboxes. Comput. Ind. 2019, 107, 50–58. [Google Scholar] [CrossRef]

- Lee, H.; Kwon, H. Contextual deep cnn based hyperspectral classification. In Proceedings of the International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; pp. 3322–3325. [Google Scholar]

- Mazzini, D.; Buzzelli, M.; Pauy, D.P.; Schettini, R. A CNN Architecture for Efficient Semantic Segmentation of Street Scenes. In Proceedings of the International Conference on Consumer Electronics(ICCE), Berlin, Germany, 2–5 September 2018. [Google Scholar]

- Sharifzadeh, F.; Akbarizadeh, G.; Kavian, Y.S. Ship Classification in SAR Images Using a New Hybrid CNN-MLP Classifier. J. Indian Soc. Remote Sens. 2019, 47, 551–562. [Google Scholar] [CrossRef]

- Pires De Lima, R.; Marfurt, K. Convolutional Neural Network for Remote-Sensing Scene Classification: Transfer Learning Analysis. Remote Sens. 2020, 12, 86. [Google Scholar] [CrossRef] [Green Version]

- Lei, J.; Luo, X.; Fang, L.; Wang, M.; Gu, Y. Region-Enhanced Convolutional Neural Network for Object Detection in Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2020, 58, 5693–5702. [Google Scholar] [CrossRef]

- Cao, C.; Dragićević, S.; Li, S. Land-Use Change Detection with Convolutional Neural Network Methods. Environments 2019, 6, 25. [Google Scholar] [CrossRef] [Green Version]

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. In Proceedings of the International Conference on Learning Representations(ICLR), San Diego, CA, USA, 7–9 May 2014. [Google Scholar]

- He, K.; Zhang, J.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention(MICCAI), Munich, Germany, 5–9 October 2015; pp. 234–241. [Google Scholar]

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K. Densely Connected Convolutional Networks. In Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708. [Google Scholar]

- Liu, X.; Chi, M.; Zhang, Y.; Qin, Y. Classifying high resolution remote sensing images by fine-tuned VGG deep networks. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium(IGARSS), Valencia, Spain, 22–27 July 2018; pp. 7137–7140. [Google Scholar]

- Guo, Y.; Liao, J.; Shen, G. A Deep Learning Model With Capsules Embedded for High-Resolution Image Classification. IEEE J. Sel. Topics Appl. Earth Observ. Remote Sens. 2020, 14, 214–223. [Google Scholar] [CrossRef]

- Wang, A.; Wang, M.; Wu, H.; Jiang, K.; Iwahori, Y. A novel LiDAR data classification algorithm combined capsnet with resnet. Sensors 2020, 20, 1151. [Google Scholar] [CrossRef] [Green Version]

- Wang, Q.; Yuan, Z.; Du, Q.; Li, X. GETNET: A General End-to-End 2-D CNN Framework for Hyperspectral Image Change Detection. IEEE Trans. Geosci. Remote Sens. 2018, 57, 3–13. [Google Scholar] [CrossRef] [Green Version]

- Zhang, C.; Yue, P.; Tapete, D.; Jiang, L.; Shangguan, B.; Huang, L.; Liu, G. A deeply supervised image fusion network for change detection in high resolution bi-temporal remote sensing images. ISPRS J. Photogramm. Remote Sens. 2020, 166, 183–200. [Google Scholar] [CrossRef]

- Li, K.; Li, Z.; Fang, S. Siamese NestedUNet Networks for Change Detection of High Resolution Satellite Image. In Proceedings of the International Conference on Control, Robotics and Intelligent System(CCRIS), Xiamen, China, 27 October 2020; pp. 42–48. [Google Scholar]

- Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Networks. Commun. ACM 2014, 63, 139–144. [Google Scholar] [CrossRef]

- Li, X.; Luo, M.; Ji, S.; Zhang, L.; Lu, M. Evaluating generative adversarial networks based image-level domain transfer for multi-source remote sensing image segmentation and object detection. Int. J. Remote Sens. 2020, 41, 7343–7367. [Google Scholar] [CrossRef]

- Radford, A.; Metz, L.; Chintala, S. Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks. arXiv 2015, arXiv:1511.06434. [Google Scholar]

- Chen, X.; Duan, Y.; Houthooft, R.; Schulman, J.; Sutskever, I.; Abbeel, P. InfoGAN: Interpretable Representation Learning by Information Maximizing Generative Adversarial Nets. arXiv 2016, arXiv:1606.03657. [Google Scholar]

- Zhu, J.-Y.; Park, T.; Isola, P.; Efros, A. Unpaired Image-to-Image Translation Using Cycle-Consistent Adversarial Networks. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2242–2251. [Google Scholar]

- Arjovsky, M.; Chintala, S.; Bottou, L. Wasserstein GAN. arXiv 2017, arXiv:1701.07875. [Google Scholar]

- Zhang, H.; Goodfellow, I.; Metaxas, D.; Odena, A. Self-Attention Generative Adversarial Networks. arXiv 2018, arXiv:1805.08318. [Google Scholar]

- Brock, A.; Donahue, J.; Simonyan, K. Large Scale GAN Training for High Fidelity Natural Image Synthesis. In Proceedings of the 7th International Conference on Learning Representations(ICLR), New Orleans, LA, USA, 6–9 May 2018. [Google Scholar]

- GAN_Zoo. Available online: https://github.com/hindupuravinash/the-gan-zoo (accessed on 20 March 2022).

- Jiang, F.; Gong, M.; Zhan, T.; Fan, X. A semisupervised GAN-based multiple change detection framework in multi-spectral images. IEEE Geosci. Remote Sens. Lett. 2019, 17, 1223–1227. [Google Scholar] [CrossRef]

- Zhao, W.; Mou, L.; Chen, J.; Bo, Y.; Emery, W.J. Incorporating metric learning and adversarial network for seasonal invariant change detection. IEEE Trans. Geosci. Remote Sens. 2019, 58, 2720–2731. [Google Scholar] [CrossRef]

- Li, X.; Du, Z.; Huang, Y.; Tan, Z. A deep translation (GAN) based change detection network for optical and SAR remote sensing images. ISPRS J. Photogramm. Remote Sens. 2021, 179, 14–34. [Google Scholar] [CrossRef]

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. In Proceedings of the European Conference on Computer Vision(ECCV), Online, 23–28 August 2020; pp. 213–229. [Google Scholar]

- Zhang, Y.; Liu, H.; Hu, Q. TransFuse: Fusing Transformers and CNNs for Medical Image Segmentation. arXiv 2021, arXiv:2102.08005. [Google Scholar]

- Chen, H.; Qi, Z.; Shi, Z. Remote Sensing Image Change Detection with Transformers. arXiv 2021, arXiv:2103.00208. [Google Scholar] [CrossRef]

- Ke, L.; Lin, Y.; Zeng, Z.; Zhang, L.; Meng, L. Adaptive Change Detection With Significance Test. IEEE Access 2018, 6, 27442–27450. [Google Scholar] [CrossRef]

- Ridd, M.K.; Liu, J. A Comparison of Four Algorithms for Change Detection in an Urban Environment. Remote Sens. Environ. 1998, 63, 95–100. [Google Scholar] [CrossRef]

- Liu, T.; Li, Y.; Cao, Y.; Shen, Q. Change detection in multitemporal synthetic aperture radar images using dual-channel convolutional neural network. J. Appl. Remote Sens. 2017, 11, 042615. [Google Scholar] [CrossRef] [Green Version]

- Chen, P.; Guo, L.; Zhang, X.; Qin, K.; Ma, W.; Jiao, L. Attention-Guided Siamese Fusion Network for Change Detection of Remote Sensing Images. Remote Sens. 2021, 13, 4597. [Google Scholar] [CrossRef]

- Adam, W.H.; Konstantinos, G.D.; Iasonas, K. Segmentation-Aware Convolutional Networks Using Local Attention Masks. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 5048–5057. [Google Scholar]

- Wen, Y.; Zhang, K.; Li, Z.; Qiao, Y. A Discriminative Feature Learning Approach for Deep Face Recognition. In Proceedings of the European Conference on Computer Vision(ECCV), Amsterdam, The Netherlands, 8–16 October 2016; pp. 499–515. [Google Scholar]

- Larabi, M.; Liu, Q.; Wang, Y. Convolutional neural network features based change detection in satellite images. In Proceedings of the First International Workshop on Pattern Recognition(IWPR), Tokyo, Japan, 11–13 July 2016. [Google Scholar]

- Zhan, Y.; Fu, K.; Yan, M.; Sun, X.; Wang, H.; Qiu, X. Change Detection Based on Deep Siamese Convolutional Network for Optical Aerial Images. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1845–1849. [Google Scholar] [CrossRef]

- Faiz, R.; Bhavan, V.; Jared, V.C.; John, K.; Andreas, S. Siamese Network with Multi-Level Features for Patch-Based Change Detection in Satellite Imagery. In Proceedings of the IEEE Global Conference on Signal and Information Processing (GlobalSIP), Anaheim, CA, USA, 26–29 November 2018; pp. 958–962. [Google Scholar]

- Sun, Y.; Chen, Y.; Wang, X.; Tang, X. Deep learning face representation by joint identification-verification. In Proceedings of the 27th International Conference on Neural Information Processing Systems(NIPS), Montreal, QC, Canada, 8–13 December 2014; pp. 1988–1996. [Google Scholar]

- Mueller, J.; Thyagarajan, A. Siamese recurrent architectures for learning sentence similarity. In Proceedings of the Proceedings of the Thirtieth AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 5 March 2016; pp. 2786–2792. [Google Scholar]

- Guo, E.; Fu, X.; Zhu, J.; Deng, M.; Liu, Y.; Zhu, Q.; Li, H. Learning to Measure Change: Fully Convolutional Siamese Metric Networks for Scene Change Detection. arXiv 2018, arXiv:1810.09111. [Google Scholar]

- Ren, C.; Wang, X.; Gao, J.; Zhou, X.; Chen, H. Unsupervised Change Detection in Satellite Images With Generative Adversarial Network. IEEE Trans. Geosci. Remote Sens. 2021, 59, 10047–10061. [Google Scholar] [CrossRef]

- Sakurada, K.; Okatani, T. Change Detection from a Street Image Pair using CNN Features and Superpixel Segmentation. In Proceedings of the British Machine Vision Conference (BMVC), Swansea, UK, 7–10 September 2015; pp. 1–12. [Google Scholar]

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. 2015, 115, 211–252. [Google Scholar] [CrossRef] [Green Version]

- Otsu, N. A Threshold Selection Method from Gray-Level Histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66. [Google Scholar] [CrossRef] [Green Version]

- Liu, J.; Gong, M.; Qin, K.; Zhang, P. A Deep Convolutional Coupling Network for Change Detection Based on Heterogeneous Optical and Radar Images. IEEE Trans. Neural Netw. Learn. Syst. 2018, 29, 545–559. [Google Scholar] [CrossRef]

- Zhang, M.; Xu, G.; Chen, K.; Yan, M.; Sun, X. Triplet-Based Semantic Relation Learning for Aerial Remote Sensing Image Change Detection. IEEE Geosci. Remote Sens. Lett. 2019, 16, 266–270. [Google Scholar] [CrossRef]

- Chen, H.; Shi, Z. A Spatial-Temporal Attention-Based Method and a New Dataset for Remote Sensing Image Change Detection. Remote Sens. 2020, 12, 1162. [Google Scholar] [CrossRef]

- Xufeng, H.; Leung, T.; Jia, Y.; Sukthankar, R.; Berg, A.C. MatchNet: Unifying feature and metric learning for patch-based matching. In Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3279–3286. [Google Scholar]

- Chen, J.; Yuan, Z.; Peng, J.; Chen, L.; Huang, H.; Zhu, J.; Lin, T.; Li, H. DASNet: Dual attentive fully convolutional siamese networks for change detection of high resolution satellite images. arXiv 2020, arXiv:2003.03608. [Google Scholar] [CrossRef]

- Mesquita, D.B.; Santos, R.F.D.; Macharet, D.G.; Campos, M.F.M.; Nascimento, E.R. Fully Convolutional Siamese Autoencoder for Change Detection in UAV Aerial Images. IEEE Geosci. Remote Sens. Lett. 2019, 17, 1455–1459. [Google Scholar] [CrossRef]

- Liu, J.; Chen, K.; Xu, G.; Sun, X.; Yan, M.; Diao, W.; Han, H. Convolutional Neural Network-Based Transfer Learning for Optical Aerial Images Change Detection. IEEE Geosci. Remote Sens. Lett. 2020, 17, 127–131. [Google Scholar] [CrossRef]

- Xiang, S.; Wang, M.; Jiang, X.; Xie, G.; Zhang, Z.; Tang, P. Dual-Task Semantic Change Detection for Remote Sensing Images Using the Generative Change Field Module. Remote Sens. 2021, 13, 3336. [Google Scholar] [CrossRef]

- Chen, H.; Wu, C.; Du, B.; Zhang, L. Deep Siamese Multi-scale Convolutional Network for Change Detection in Multi-temporal VHR Images. In Proceedings of the 10th International Workshop on the Analysis of Multitemporal Remote Sensing Images (MultiTemp), Shanghai, China, 5–7 August 2019. [Google Scholar]

- Zheng, Z.; Wan, Y.; Zhang, Y.; Xiang, S.; Peng, D.; Zhang, B. CLNet: Cross-layer convolutional neural network for change detection in optical remote sensing imagery. ISPRS J. Photogramm. Remote Sens. 2021, 175, 247–267. [Google Scholar] [CrossRef]

- Song, L.; Xia, M.; Jin, J.; Qian, M.; Zhang, Y. SUACDNet: Attentional change detection network based on siamese U-shaped structure. Int. J. Appl. Earth Obs. Geoinf. 2021, 105, 102597. [Google Scholar] [CrossRef]

- Guo, H.; Shi, Q.; Marinoni, A.; Du, B.; Zhang, L. Deep building footprint update network: A semi-supervised method for updating existing building footprint from bi-temporal remote sensing images. Remote Sens. Environ. 2021, 264, 112589. [Google Scholar] [CrossRef]

- Zhou, Z.; Rahman Siddiquee, M.M.; Tajbakhsh, N.; Liang, J. UNet++: A Nested U-Net Architecture for Medical Image Segmentation. In Proceedings of the Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support(DLMIA), Granada, Spain, 20 September 2018; pp. 3–11. [Google Scholar]

- Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid Scene Parsing Network. In Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6230–6239. [Google Scholar]

- Chen, L.-C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. arXiv 2016, arXiv:1606.00915. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Yu, F.; Koltun, V. Multi-Scale Context Aggregation by Dilated Convolutions. In Proceedings of the International Conference on Learning Representations(ICLR), San Juan, Puerto Rico, 2–4 May 2016; pp. 1–13. [Google Scholar]

- Szegedy, C.; Wei, L.; Yangqing, J.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9. [Google Scholar]

- Buda, M.; Maki, A.; Mazurowski, M.A. A systematic study of the class imbalance problem in convolutional neural networks. Neural Netw. 2018, 106, 249–259. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Nguyen, T.L.; Han, D. Detection of Road Surface Changes from Multi-Temporal Unmanned Aerial Vehicle Images Using a Convolutional Siamese Network. Sustainability 2020, 12, 2482. [Google Scholar] [CrossRef] [Green Version]

- Li, X.; Duan, H.; Hui, Z.; Wang, F.-Y. Data Augmentation Using Image Generation for Change Detection. In Proceedings of the 2021 IEEE 1st International Conference on Digital Twins and Parallel Intelligence (DTPI), Beijing, China, 15 July–15 August 2021; pp. 188–191. [Google Scholar]

- Chen, H.; Li, W.; Shi, Z. Adversarial Instance Augmentation for Building Change Detection in Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–16. [Google Scholar] [CrossRef]

- Shorten, C.; Khoshgoftaar, T.M. A survey on Image Data Augmentation for Deep Learning. J. Big Data 2019, 6, 60. [Google Scholar] [CrossRef]

- Li, X.; Yuan, Z.; Wang, Q. Unsupervised Deep Noise Modeling for Hyperspectral Image Change Detection. Remote Sens. 2019, 11, 258. [Google Scholar] [CrossRef] [Green Version]

- Li, Y.F.; Guo, L.Z.; Zhou, Z.H. Towards Safe Weakly Supervised Learning. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 334–346. [Google Scholar] [CrossRef] [Green Version]

- Ibrahim, M.S.; Vahdat, A.; Ranjbar, M.; Macready, W.G. Semi-Supervised Semantic Image Segmentation with Self-correcting Networks. In Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 12712–12722. [Google Scholar]

- Kerdegari, H.; Razaak, M.; Argyriou, V.; Remagnino, P. Urban scene segmentation using semi-supervised GAN. In Proceedings of the Image and Signal Processing for Remote Sensing XXV, Strasbourg, France, 9–11 September 2019. [Google Scholar]

- Jiang, X.; Tang, H. Dense High-Resolution Siamese Network for Weakly-Supervised Change Detection. In Proceedings of the 2019 6th International Conference on Systems and Informatics (ICSAI), Shanghai, China, 2–4 November 2019; pp. 547–552. [Google Scholar]

- Khan, S.H.; He, X.; Porikli, F.; Bennamoun, M.; Sohel, F.; Togneri, R. Learning deep structured network for weakly supervised change detection. arXiv 2016, arXiv:1606.02009. [Google Scholar]

- Andermatt, P.; Timofte, R. A Weakly Supervised Convolutional Network for Change Segmentation and Classification. In Proceedings of the Asian Conference on Computer Vision (ACCV), Kyoto, Japan, 30 November–4 December 2020; pp. 103–119. [Google Scholar]

- Song, H.; Kim, M.; Park, D.; Shin, Y.; Lee, J.-G. Learning from Noisy Labels with Deep Neural Networks: A Survey. arXiv 2020, arXiv:2007.08199. [Google Scholar] [CrossRef] [PubMed]

- Cheng, G.; Han, J.; Lu, X. Remote Sensing Image Scene Classification: Benchmark and State of the Art. Proc. IEEE 2017, 105, 1865–1883. [Google Scholar] [CrossRef] [Green Version]

- Wang, S.; Guan, Y.; Shao, L. Multi-Granularity Canonical Appearance Pooling for Remote Sensing Scene Classification. IEEE Trans. Image Process. 2020, 29, 5396–5407. [Google Scholar] [CrossRef] [Green Version]

- Cheng, G.; Xie, X.; Han, J.; Guo, L.; Xia, G.-S. Remote Sensing Image Scene Classification Meets Deep Learning: Challenges, Methods, Benchmarks, and Opportunities. IEEE J. Sel. Topics Appl. Earth Observ. Remote Sens. 2020, 13, 3735. [Google Scholar] [CrossRef]

- Hazel, G.G. Multivariate Gaussian MRF for multispectral scene segmentation and anomaly detection. IEEE Trans. Geosci. Remote Sens. 2000, 38, 1199–1211. [Google Scholar] [CrossRef]

- Chen, C.; Fan, L. Scene segmentation of remotely sensed images with data augmentation using U-net++. In Proceedings of the 2021 International Conference on Computer Engineering and Artificial Intelligence (ICCEAI), Shanghai, China, 27–29 August 2021; pp. 201–205. [Google Scholar]

- Wu, C.; Zhang, L.; Zhang, L. A scene change detection framework for multi-temporal very high resolution remote sensing images. Signal Processing 2016, 124, 184–197. [Google Scholar] [CrossRef]

- Wang, Y.; Du, B.; Ru, L.; Wu, C.; Luo, H. Scene Change Detection via Deep Convolution Canonical Correlation Analysis Neural Network. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Yokohama, Japan, 28 July–2 August 2019; pp. 198–201. [Google Scholar]

- Wu, C.; Zhang, L.; Du, B. Kernel Slow Feature Analysis for Scene Change Detection. IEEE Trans. Geosci. Remote Sens. 2017, 55, 2367–2384. [Google Scholar] [CrossRef]

- Huang, X.; Liu, H.; Zhang, L. Spatiotemporal Detection and Analysis of Urban Villages in Mega City Regions of China Using High-Resolution Remotely Sensed Imagery. IEEE Trans. Geosci. Remote Sens. 2015, 53, 3639–3657. [Google Scholar] [CrossRef]

- Daudt, R.C.; Saux, B.L.; Boulch, A.; Gousseau, Y. Urban Change Detection for Multispectral Earth Observation Using Convolutional Neural Networks. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Valencia, Spain, 22–27 July 2018; pp. 2115–2118. [Google Scholar]

- Lowe, D.G. Distinctive Image Features from Scale-Invariant Keypoints. Int. J. Comput. Vis. 2004, 60, 91–110. [Google Scholar] [CrossRef]

- Yang, J.; Jiang, Y.-G.; Hauptmann, A.G.; Ngo, C.-W. Evaluating bag-of-visual-words representations in scene classification. In Proceedings of the International Workshop on Multimedia Information Retrieval, Augsburg, Bavaria, Germany, 24–29 September 2007; pp. 197–206. [Google Scholar]

- Lee, H.; Battle, A.; Raina, R.; Ng, A.Y. Efficient sparse coding algorithms. In Proceedings of the Conference on Neural Information Processing Systems (NIPS), Vancouver, BC, Canada, 4–7 December 2007; pp. 801–808. [Google Scholar]

- Burges, C.J.C. A Tutorial on Support Vector Machines for Pattern Recognition. Data Min. Knowl. Discov. 1998, 2, 121–167. [Google Scholar] [CrossRef]

- Zhang, L.; Zhang, L.; Tao, D.; Huang, X. On Combining Multiple Features for Hyperspectral Remote Sensing Image Classification. IEEE Trans. Geosci. Remote Sens. 2012, 50, 879–893. [Google Scholar] [CrossRef]

- Chang, X.; Xiang, T.; Hospedales, T.M. Scalable and Effective Deep CCA via Soft Decorrelation. arXiv 2017, arXiv:1707.09669. [Google Scholar]

- Ru, L.; Du, B.; Wu, C. Multi-Temporal Scene Classification and Scene Change Detection With Correlation Based Fusion. IEEE Trans. Image Process. 2021, 30, 1382–1394. [Google Scholar] [CrossRef] [PubMed]

- Gong, M.; Zhan, T.; Zhang, P.; Miao, Q. Superpixel-Based Difference Representation Learning for Change Detection in Multispectral Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2017, 55, 2658–2673. [Google Scholar] [CrossRef]

- Lei, Y.; Liu, X.; Shi, J.; Lei, C.; Wang, J. Multiscale Superpixel Segmentation With Deep Features for Change Detection. IEEE Access 2019, 7, 36600–36616. [Google Scholar] [CrossRef]

- Daudt, R.C.; Saux, B.L.; Boulch, A. Fully Convolutional Siamese Networks for Change Detection. In Proceedings of the 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; pp. 4063–4067. [Google Scholar]

- Hou, B.; Liu, Q.; Wang, H.; Wang, Y. From W-Net to CDGAN: Bitemporal Change Detection via Deep Learning Techniques. IEEE Trans. Geosci. Remote Sens. 2020, 58, 1790–1802. [Google Scholar] [CrossRef] [Green Version]

- Ding, Q.; Shao, Z.; Huang, X.; Altan, O. DSA-Net: A novel deeply supervised attention-guided network for building change detection in high-resolution remote sensing images. Int. J. Appl. Earth Obs. Geoinf. 2021, 105, 102591. [Google Scholar] [CrossRef]

- Jiang, H.; Hu, X.; Li, K.; Zhang, J.; Gong, J.; Zhang, M. PGA-SiamNet: Pyramid Feature-Based Attention-Guided Siamese Network for Remote Sensing Orthoimagery Building Change Detection. Remote Sens. 2020, 12, 484. [Google Scholar] [CrossRef] [Green Version]

- Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19. [Google Scholar]

- Shi, Q.; Liu, M.; Li, S.; Liu, X.; Wang, F.; Zhang, L. A Deeply Supervised Attention Metric-Based Network and an Open Aerial Image Dataset for Remote Sensing Change Detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–16. [Google Scholar] [CrossRef]

- Fisher, P. The pixel: A snare and a delusion. Int. J. Remote Sens. 1997, 18, 679–685. [Google Scholar] [CrossRef]

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 8–16 October 2016; pp. 21–37. [Google Scholar]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. arXiv 2015, arXiv:1506.01497. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. arXiv 2015, arXiv:1506.02640. [Google Scholar]

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. arXiv 2016, arXiv:1612.08242. [Google Scholar]

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767. [Google Scholar]

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934. [Google Scholar]

- Jocher, G. Yolov5. Available online: https://github.com/ultralytics/yolov5 (accessed on 10 March 2022).

- Wang, Q.; Zhang, X.; Chen, G.; Dai, F.; Gong, Y.; Zhu, K. Change detection based on Faster R-CNN for high-resolution remote sensing images. Remote Sens. Lett. 2018, 9, 923–932. [Google Scholar] [CrossRef]

- Zhang, L.; Hu, X.; Zhang, M.; Shu, Z.; Zhou, H. Object-level change detection with a dual correlation attention-guided detector. ISPRS J. Photogramm. Remote Sens. 2021, 177, 147–160. [Google Scholar] [CrossRef]

- Han, P.; Ma, C.; Li, Q.; Leng, P.; Bu, S.; Li, K. Aerial image change detection using dual regions of interest networks. Neurocomputing 2019, 349, 190–201. [Google Scholar] [CrossRef]

- Ji, S.; Shen, Y.; Lu, M.; Zhang, Y. Building Instance Change Detection from Large-Scale Aerial Images using Convolutional Neural Networks and Simulated Samples. Remote Sens. 2019, 11, 1343. [Google Scholar] [CrossRef] [Green Version]

- Benedek, C.; Sziranyi, T. Change Detection in Optical Aerial Images by a Multilayer Conditional Mixed Markov Model. IEEE Trans. Geosci. Remote Sens. 2009, 47, 3416–3430. [Google Scholar] [CrossRef] [Green Version]

- Bourdis, N.; Marraud, D.; Sahbi, H. Constrained optical flow for aerial image change detection. In Proceedings of the 2011 IEEE International Geoscience and Remote Sensing Symposium, Vancouver, BC, Canada, 24–29 July 2011; pp. 4176–4179. [Google Scholar]

- Lebedev, M.A.; Vizilter, Y.V.; Vygolov, O.V.; Knyaz, V.A.; Rubis, A.Y. Change Detection in Remote Sensing Images Using Conditional Adversarial Networks. ISPRS—Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, 42, 565–571. [Google Scholar] [CrossRef] [Green Version]

- Shao, R.; Du, C.; Chen, H.; Li, J. SUNet: Change Detection for Heterogeneous Remote Sensing Images from Satellite and UAV Using a Dual-Channel Fully Convolution Network. Remote Sens. 2021, 13, 3750. [Google Scholar] [CrossRef]

- Daudt, R.C.; Saux, B.L.; Boulch, A.; Gousseau, Y. Multitask Learning for Large-scale Semantic Change Detection. arXiv 2018, arXiv:1810.08452. [Google Scholar]

- Yang, K.; Xia, G.-S.; Liu, Z.; Du, B.; Yang, W.; Pelillo, M.; Zhang, L. Asymmetric Siamese Networks for Semantic Change Detection in Aerial Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–18. [Google Scholar] [CrossRef]

- Tian, S.; Ma, A.; Zheng, Z.; Zhong, Y. Hi-UCD: A Large-scale Dataset for Urban Semantic Change Detection in Remote Sensing Imagery. arXiv 2020, arXiv:2011.03247. [Google Scholar]

- Ji, S.; Wei, S.; Lu, M. Fully Convolutional Networks for Multisource Building Extraction From an Open Aerial and Satellite Imagery Data Set. IEEE Trans. Geosci. Remote Sens. 2019, 57, 574–586. [Google Scholar] [CrossRef]

- Fujita, A.; Sakurada, K.; Imaizumi, T.; Ito, R.; Hikosaka, S.; Nakamura, R. Damage detection from aerial images via convolutional neural networks. In Proceedings of the 2017 Fifteenth IAPR International Conference on Machine Vision Applications (MVA), Nagoya, Japan, 8–12 May 2017; pp. 5–8. [Google Scholar]

- Shen, L.; Lu, Y.; Hao, C.; Wei, H.; Xie, D.; Yue, J.; Chen, R.; Zhang, Y.; Zhang, A.; Lv, S.; et al. S2Looking: A Satellite Side-Looking Dataset for Building Change Detection. arXiv 2021, arXiv:2107.09244. [Google Scholar] [CrossRef]

- Gupta, R.; Hosfelt, R.; Sajeev, S.; Patel, N.; Goodman, B.; Doshi, J.; Heim, E.; Choset, H.; Gaston, M. Creating xBD: A Dataset for Assessing Building Damage from Satellite Imagery. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Long Beach, CA, USA, 16–21 June 2019; pp. 10–17. [Google Scholar]

- Olofsson, P.; Foody, G.M.; Herold, M.; Stehman, S.V.; Woodcock, C.E.; Wulder, M.A. Good practices for estimating area and assessing accuracy of land change. Remote Sens. Environ. 2014, 148, 42–57. [Google Scholar] [CrossRef]

- Olofsson, P.; Foody, G.M.; Stehman, S.V.; Woodcock, C.E. Making better use of accuracy data in land change studies: Estimating accuracy and area and quantifying uncertainty using stratified estimation. Remote Sens. Environ. 2013, 129, 122–131. [Google Scholar] [CrossRef]

- Li, B.; Zhou, Q. Accuracy assessment on multi-temporal land-cover change detection using a trajectory error matrix. Int. J. Remote Sens. 2009, 30, 1283–1296. [Google Scholar] [CrossRef]

- Pratomo, J.; Kuffer, M.; Kohli, D.; Martinez, J. Application of the trajectory error matrix for assessing the temporal transferability of OBIA for slum detection. Eur. J. Remote Sens. 2018, 51, 838–849. [Google Scholar] [CrossRef] [Green Version]

- Gong, J.; Hu, X.; Pang, S.; Wei, Y. Roof-Cut Guided Localization for Building Change Detection from Imagery and Footprint Map. Photogramm. Eng. Remote Sens. 2019, 85, 543–558. [Google Scholar] [CrossRef]

| Datasets | Classes | Sensor | Image Pairs | Image Size | Resolution (m) | Real | Period (Years) |
|---|---|---|---|---|---|---|---|
| SZTAKI | 2 | aerial | 13 | 952 × 640 | 1.5 | √ | - |
| CDD | 2 | satellite | 16,000 | 256 × 256 | 0.03–1 | √ | - |
| OSCD | 2 | satellite | 24 | 600 × 600 | 10 | √ | 2 |
| ABCD | 2 | aerial | 8506/8444 | 160 × 160/120 × 120 | 0.4 | √ | 11 |
| AICD | 2 | aerial | 500 | 800 × 600 | 0.5 | ✗ | - |
| LEVIR-CD | 2 | satellite | 637 | 1024 × 1024 | 0.5 | √ | 6 |
| WHU-CD | 2 | aerial | 1 | 32,507 × 15,354 | 0.075 | √ | 4 |
| SYSU-CD | 2 | aerial | 20,000 | 256 × 256 | 0.5 | √ | 7 |
| HRSCD | 1 + 5 | aerial | 291 | 10,000 × 10,000 | 0.5 | √ | 6, 7 |
| SECOND | 1 + 6 | aerial | 4662 | 512 × 512 | - | √ | - |
| Hi-UCD | 1 + 9 | aerial | 1293 | 1024 × 1024 | 0.1 | √ | 1, 2 |
| DSIFN | 2 | satellite | 3940 | 512 × 512 | 2 | √ | 5, 8, 10, 15, 17 |
| S2Looking | 2 | satellite | 5000 | 1024 × 1024 | 0.5–0.8 | √ | 1–3 |
| HTCD | 2 | satellite, aerial | 1 | 11 K × 15 K | 0.5971/0.007465 | √ | 12 |

| Detected | Reference: Changed | Reference: Not Changed |
|---|---|---|
| Changed | TP | FP |
| Not Changed | FN | TN |
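
The four cells of the confusion matrix above are enough to derive the accuracy measures most often reported for binary change detection. The following is a minimal sketch using the standard definitions of overall accuracy, precision, recall, F1 and IoU for the changed class. The names `ConfusionCounts` and `change_metrics` and the example counts are hypothetical and are used only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Pixel counts for the binary changed / not-changed confusion matrix."""
    tp: int  # detected changed, reference changed
    fp: int  # detected changed, reference not changed
    fn: int  # detected not changed, reference changed
    tn: int  # detected not changed, reference not changed


def _ratio(num: float, den: float) -> float:
    # Return 0.0 instead of raising when the denominator is zero.
    return num / den if den else 0.0


def change_metrics(c: ConfusionCounts) -> dict:
    """Standard accuracy measures computed for the changed class."""
    precision = _ratio(c.tp, c.tp + c.fp)
    recall = _ratio(c.tp, c.tp + c.fn)
    return {
        "overall_accuracy": _ratio(c.tp + c.tn, c.tp + c.fp + c.fn + c.tn),
        "precision": precision,
        "recall": recall,
        "f1": _ratio(2 * precision * recall, precision + recall),
        "iou": _ratio(c.tp, c.tp + c.fp + c.fn),
    }


# Hypothetical counts, chosen only to keep the arithmetic easy to follow.
example = change_metrics(ConfusionCounts(tp=80, fp=20, fn=20, tn=880))
```

Because unchanged pixels usually dominate, overall accuracy can stay high even when many changes are missed, so F1 and IoU for the changed class are generally the more informative values in this sketch.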

| Groups | Classification Result: Reference | Classification Result: Detected |
|---|---|---|
| Correct | non-changed | non-changed |
| Correct | changed | changed |
| Incorrect | non-changed | non-changed |
| Incorrect | non-changed | changed |
| Incorrect | changed | non-changed |
| Incorrect | changed | changed with incorrect trajectory |
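
The grouping in the trajectory table above can be sketched as a small function that compares a reference label trajectory with a detected one. This is an illustrative sketch, not the procedure of the cited works. It assumes each trajectory is a sequence of class labels, one per acquisition date, and it assumes that the "Incorrect, non-changed, non-changed" row refers to a stable pixel assigned the wrong stable class. The function names and class labels are hypothetical.

```python
from typing import Sequence, Tuple


def is_changed(trajectory: Sequence[str]) -> bool:
    # A trajectory counts as changed if its label differs between any two dates.
    return len(set(trajectory)) > 1


def trajectory_group(
    reference: Sequence[str], detected: Sequence[str]
) -> Tuple[str, str, str]:
    """Return (group, reference status, detected status) as in the table rows."""
    if len(reference) != len(detected):
        raise ValueError("trajectories must cover the same dates")
    ref = "changed" if is_changed(reference) else "non-changed"
    det = "changed" if is_changed(detected) else "non-changed"
    # Only an exact match of the full label sequence is counted as correct.
    if tuple(reference) == tuple(detected):
        return ("Correct", ref, det)
    if ref == "changed" and det == "changed":
        det = "changed with incorrect trajectory"
    return ("Incorrect", ref, det)


# Hypothetical two-date example: a real change detected with the wrong end class.
row = trajectory_group(["forest", "urban"], ["forest", "water"])
```

Counting pixels per returned tuple gives the six cells of the table, from which the share of correct trajectories can be read off.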

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Jiang, H.; Peng, M.; Zhong, Y.; Xie, H.; Hao, Z.; Lin, J.; Ma, X.; Hu, X. A Survey on Deep Learning-Based Change Detection from High-Resolution Remote Sensing Images. Remote Sens. 2022, 14, 1552. https://doi.org/10.3390/rs14071552
