Detecting Moving Trucks on Roads Using Sentinel-2 Data

Abstract

1. Introduction

Background

2. Materials and Methods

2.1. Theoretical Basis

2.2. Sentinel-2 Data

2.3. Open Street Map Road Data

2.4. Training and Validation Boxes

- (1) a noticeably higher reflectance in one of the VIS bands compared to the other VIS bands and the surroundings,

- (2) the presence of condition (1) for each VIS band,

- (3) condition (2) fulfilled in the direct neighbourhood and in the correct spatial order.
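The three labelling conditions above can be read as a simple rule check. The following is a minimal sketch under stated assumptions, not the procedure actually used for drawing the boxes: the `(red, green, blue)` tuple layout, the `min_ratio` threshold of 1.2, the single `background` spectrum and the `expected_order` argument are all hypothetical and chosen only for illustration.

```python
from typing import Optional, Sequence, Tuple

Spectrum = Tuple[float, float, float]  # (red, green, blue) reflectance, assumed layout
BANDS = ("red", "green", "blue")


def dominant_band(pixel: Spectrum, background: Spectrum,
                  min_ratio: float = 1.2) -> Optional[str]:
    """First condition: one VIS band clearly exceeds the other two VIS bands
    of the same pixel and the same band of the surrounding background.
    min_ratio is a hypothetical threshold, not a value from the text."""
    for i, name in enumerate(BANDS):
        strongest_other = max(pixel[j] for j in range(3) if j != i)
        if (pixel[i] > 0
                and pixel[i] >= min_ratio * strongest_other
                and pixel[i] >= min_ratio * background[i]):
            return name
    return None


def matches_truck_pattern(pixels: Sequence[Spectrum], background: Spectrum,
                          expected_order: Sequence[str],
                          min_ratio: float = 1.2) -> bool:
    """Second and third conditions: every band in expected_order is dominant
    in exactly one of the directly neighbouring pixels, in that order.
    The caller supplies the order relative to the pixel sequence."""
    if len(pixels) != len(expected_order):
        return False
    found = [dominant_band(p, background, min_ratio) for p in pixels]
    return found == list(expected_order)


# Illustrative values only: a grey road surface and three neighbouring pixels.
road = (0.10, 0.10, 0.10)
candidate = [(0.10, 0.10, 0.30), (0.10, 0.30, 0.10), (0.30, 0.10, 0.10)]
print(matches_truck_pattern(candidate, road, ("blue", "green", "red")))
```

Passing the band order as an argument keeps the sketch independent of any particular sensor geometry or driving direction; reversing the pixel sequence simply requires reversing `expected_order`.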

VIS Spectra

2.5. Machine Learning and Object Extraction Method

2.5.1. Random Forest Model

2.5.2. RF Feature Selection

2.5.3. Feature Statistics

2.5.4. Hyper Parameter Optimization

- 1.

- 2. Minimum samples per split (min_samples_split): the minimum number of samples a node must contain to be split further. A node with fewer samples becomes a leaf, so this parameter drives the size of the trees [119]. Range: 2–7. Selected: 5.

- 3.
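To illustrate how min_samples_split drives tree size, the following toy sketch counts the leaves of an idealised tree that halves every node it is allowed to split. The halving rule, the function name `count_leaves` and the sample count of 100 are illustrative assumptions, not part of the method described here; a real RF tree chooses data-driven splits and also stops at pure nodes.

```python
def count_leaves(n_samples: int, min_samples_split: int) -> int:
    """Count leaves of a toy tree that splits every eligible node in half.

    A node is split only if it holds at least min_samples_split samples;
    otherwise it becomes a leaf.
    """
    if min_samples_split < 2:
        raise ValueError("min_samples_split must be at least 2")
    if n_samples < min_samples_split:
        return 1  # too few samples: the node becomes a leaf
    left = n_samples // 2
    right = n_samples - left
    return (count_leaves(left, min_samples_split)
            + count_leaves(right, min_samples_split))


# Sweep the tuning range quoted above (2 to 7) for a hypothetical node of 100 samples.
for value in range(2, 8):
    print(value, count_leaves(100, value))
```

In this toy setting, larger values of min_samples_split never increase the number of leaves, which is the sense in which the parameter limits tree size.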

2.5.5. Feature Importances

2.5.6. Prediction

2.5.7. Object Extraction

2.5.8. Object Characterization

Heading

Speed

2.6. Validation

- the ML classifier was evaluated on the pixel level based on the spectra extracted from the validation boxes,

- the detected boxes were evaluated with the box geometries of the validation boxes,

- the detection counts were validated with German station traffic counts in a set of validation areas as done e.g., by [43].
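The pixel-level and box-level evaluations listed above are typically expressed through counts of TP, FP and FN and through the IOU of detected and reference boxes, all of which appear in the abbreviation list. The sketch below is a minimal illustration under stated assumptions: the `Box` type, the axis-aligned box representation and the function names are hypothetical, and the text here does not state which IOU threshold, if any, was applied.

```python
from typing import NamedTuple, Tuple


class Box(NamedTuple):
    """Axis-aligned box in map coordinates (hypothetical representation)."""
    xmin: float
    ymin: float
    xmax: float
    ymax: float


def area(box: Box) -> float:
    return max(0.0, box.xmax - box.xmin) * max(0.0, box.ymax - box.ymin)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two boxes; 0.0 if they do not overlap."""
    width = min(a.xmax, b.xmax) - max(a.xmin, b.xmin)
    height = min(a.ymax, b.ymax) - max(a.ymin, b.ymin)
    if width <= 0 or height <= 0:
        return 0.0
    intersection = width * height
    return intersection / (area(a) + area(b) - intersection)


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, recall and F1 score from TP, FP and FN counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


# Illustrative values only: a detected box shifted by one unit against its reference.
print(iou(Box(0, 0, 2, 2), Box(1, 1, 3, 3)))
print(precision_recall_f1(tp=8, fp=2, fn=2))
```

A detection would usually be counted as TP when its IOU with a validation box exceeds a chosen threshold; that threshold is left to the caller here.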

2.6.1. Metrics

2.6.2. Classifier Validation

2.6.3. Detection Box Validation

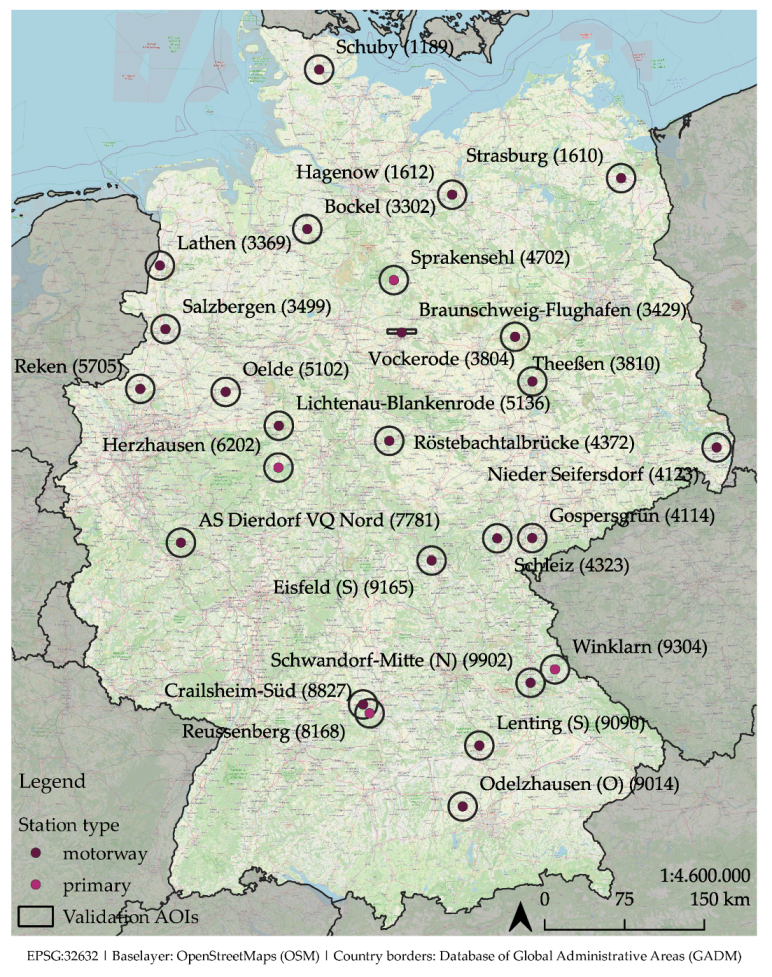

2.6.4. Traffic Count Station Validation

3. Results

3.1. Classifier Validation

3.2. Detection Box Validation

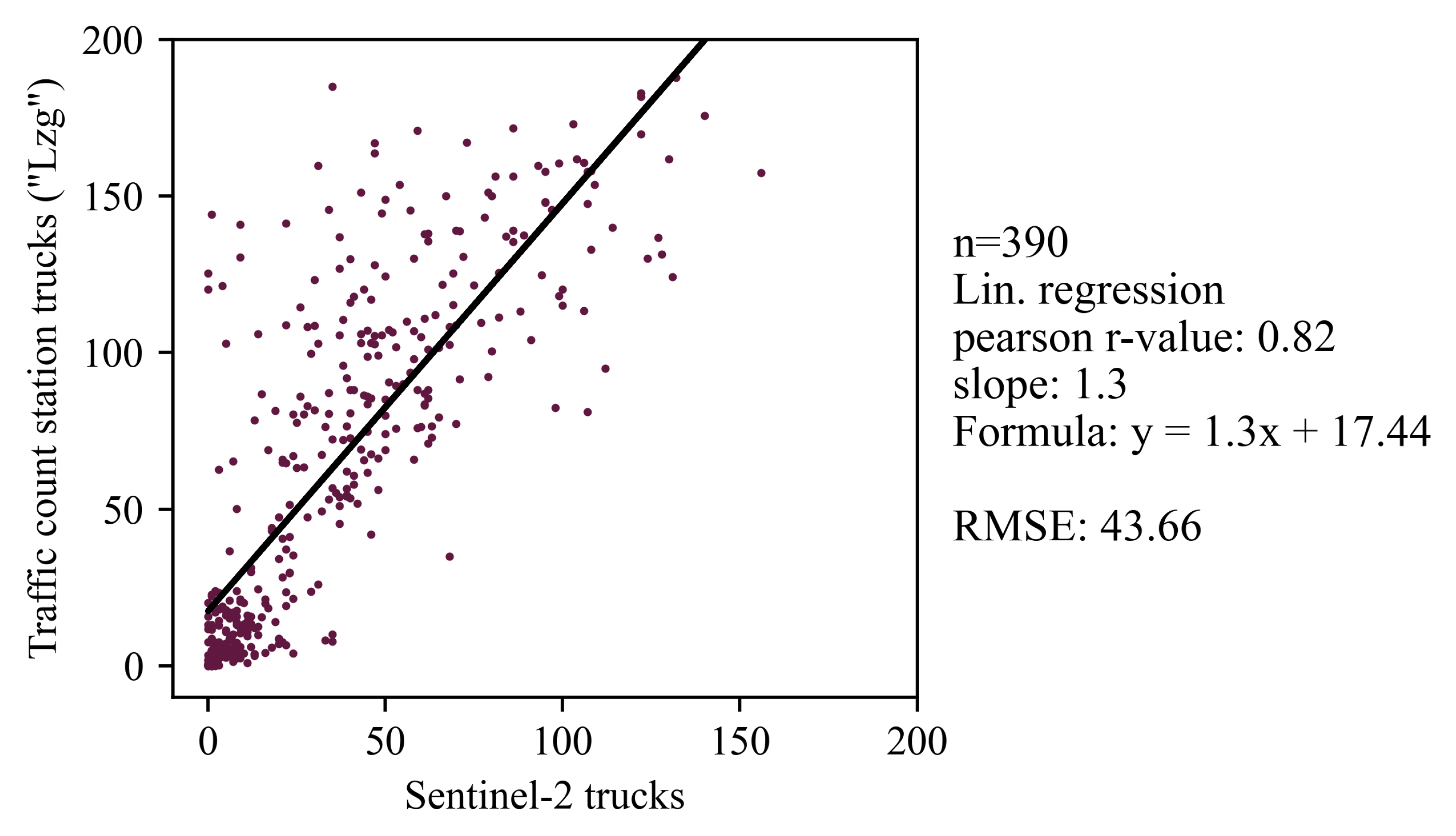

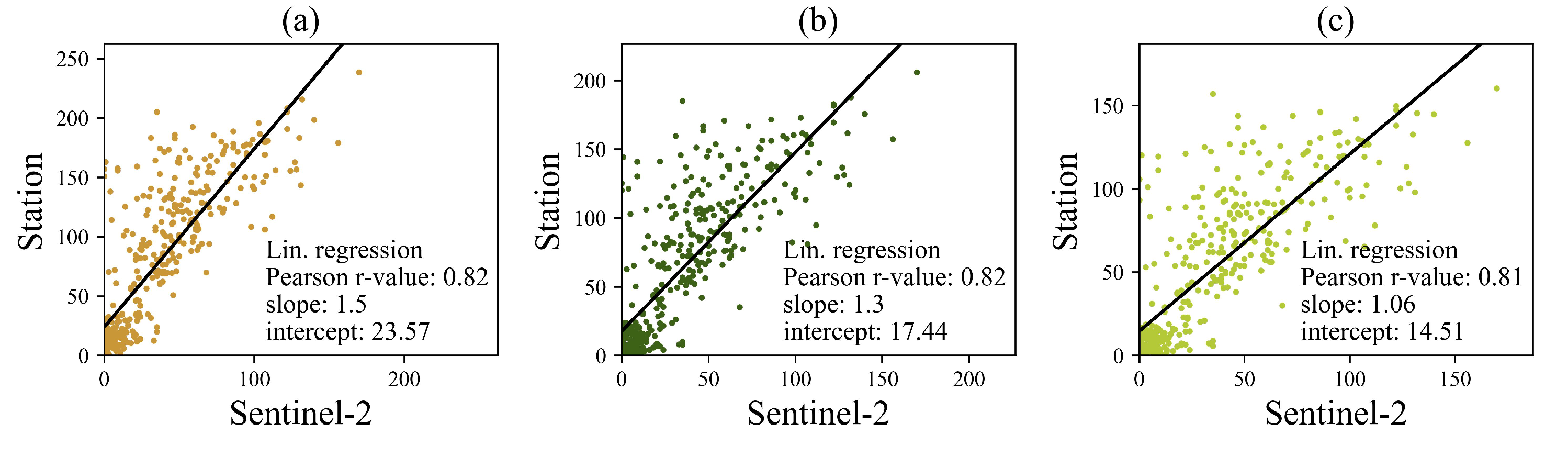

3.3. Traffic Count Station Validation

3.3.1. Relationship between Sentinel-2 Truck Counts and Station Truck Types

3.3.2. Relationship between Sentinel-2 Truck Counts and Station Car Counts

4. Discussion

4.1. Detection Performance and Validation

4.2. Applications

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| ALS | Airborne laser scanning |
| AOI | Area of interest |
| API | Application Programming Interface |
| BAST | German Federal Highway Research Institute |
| CART | Classification and regression trees |
| CNN | Convolutional Neural Network |
| DEM | Digital elevation model |
| DLR | German Aerospace Center |
| EC | European Commission |
| ESA | European Space Agency |
| EU | European Union |
| FN | False negative |
| FP | False positive |
| FPR | False positive rate |
| ID | Identifier |
| IOU | Intersection over union |
| L2 | Level 2 |
| L2A | Level 2A |
| LiDAR | Light detection and ranging |
| ML | Machine learning |
| MSI | Multispectral Instrument |
| NIR | Near infrared |
| OSM | OpenStreetMap |
| PM | Particulate matter |
| PR | Precision-recall |
| RACE | Rapid Action on Coronavirus and EO |
| RF | Random forest |
| RMSE | Root mean square error |
| SWIR | Shortwave infrared |
| TN | True negative |
| TP | True positive |
| TPR | True positive rate |
| UAV | Unmanned aerial vehicle |
| VHR | Very high resolution |
| VIS | Visual |

Appendix A

| | Sentinel-2 Tile ID | Country | N Training | N Validation |
|---|---|---|---|---|
| 1 | T31UEQ | France | 250 | - |
| 2 | T32UNA | Germany | 250 | - |
| 3 | T35JPM | Russia | 250 | - |
| 4 | T36VUM | Spain | 250 | - |
| 5 | T49QHF | Ukraine | 250 | - |
| 6 | T35UQR | South Africa | 250 | - |
| 7 | T30TVK | China | 250 | - |
| 8 | T49QCE | USA | 250 | - |
| 9 | T23KKQ | Brazil | 250 | - |
| 10 | T18TWK | Australia | 250 | - |
| 11 | T33TUN | Austria | - | 35 |
| 12 | T31UFS | Belgium | - | 35 |
| 13 | T34UDC | Poland | - | 35 |
| 14 | T29SND | Portugal | - | 35 |
| 15 | T35TMK | Romania | - | 35 |
| 16 | T37MCT | Kenya | - | 35 |
| 17 | T52SDE | South Korea | - | 35 |
| 18 | T12SUC | USA | - | 35 |
| 19 | T21HUB | Argentina | - | 35 |
| 20 | T60HUD | New Zealand | - | 35 |
| Sum | | | 2500 | 350 |
| Share | | | 85% | 15% |

| | Station Name | Road Type | Used Acquisitions |
|---|---|---|---|
| 1 | AS Dierdof VQ Nord (7781) | A | 14 |
| 2 | Bockel (3302) | A | 16 |
| 3 | Braunschweig-Flughafen (3429) | A | 24 |
| 4 | Crailsheim-Süd (8827) | B | 14 |
| 5 | Eisfeld (S) (9165) | A | 18 |
| 6 | Gospersgrün (4114) | A | 13 |
| 7 | Hagenow (1612) | A | 14 |
| 8 | Herzhausen (6202) | B | 9 |
| 9 | Lathen (3369) | A | 7 |
| 10 | Lenting (S) (9090) | A | 18 |
| 11 | Lichtenau-Blankenrode (5136) | A | 23 |
| 12 | Nieder Seifersdorf (4123) | A | 20 |
| 13 | Oelde (5102) | A | 16 |
| 14 | Odelzhausen (O) (9014) | A | 14 |
| 15 | Reken (5705) | A | 7 |
| 16 | Reussenberg (8168) | A | 14 |
| 17 | Röstebachtalbrücke (4372) | A | 8 |
| 18 | Salzbergen (3499) | A | 10 |
| 19 | Schleiz (4323) | A | 12 |
| 20 | Schuby (1189) | A | 17 |
| 21 | Schwandorf-Mitte (N) (9902) | A | 14 |
| 22 | Sprakensehl (4702) | B | 28 |
| 23 | Strasburg (1610) | A | 17 |
| 24 | Theeßen (3810) | A | 10 |
| 25 | Vockerode (3804) | A | 19 |
| 26 | Winklarn (9304) | B | 14 |
| Sum | | | 390 |

References

- Novotny, E.V.; Bechle, M.J.; Millet, D.B.; Marshall, J.D. National satellite-based land-use regression: NO2 in the United States. Environ. Sci. Technol. 2011, 45, 4407–4414.

- Beevers, S.D.; Westmoreland, E.; de Jong, M.C.; Williams, M.L.; Carslaw, D.C. Trends in NOx and NO2 emissions from road traffic in Great Britain. Atmos. Environ. 2012, 54, 107–116.

- Saucy, A.; Röösli, M.; Künzli, N.; Tsai, M.Y.; Sieber, C.; Olaniyan, T.; Baatjies, R.; Jeebhay, M.; Davey, M.; Flückiger, B.; et al. Land Use Regression Modelling of Outdoor NO2 and PM2.5 Concentrations in Three Low Income Areas in the Western Cape Province, South Africa. Int. J. Environ. Res. Public Health 2018, 15, 1452.

- European Environment Agency. Air Quality in Europe—2019 Report; European Environment Agency: Copenhagen, Denmark, 2019.

- Harrison, R.M.; Vu, T.V.; Jafar, H.; Shi, Z. More mileage in reducing urban air pollution from road traffic. Environ. Int. 2021, 149, 106329.

- European Union Eurostat. Freight Transport Statistics; European Union Eurostat: Luxembourg, 2020.

- Organization for Economic Cooperation. OECD Data—Freight Transport; Organization for Economic Cooperation: Paris, France, 2021.

- Bureau of Transportation Statistics. Freight Shipments by Mode; Bureau of Transportation Statistics: Washington, DC, USA, 2020.

- Huo, H.; Yao, Z.; Zhang, Y.; Shen, X.; Zhang, Q.; He, K. On-board measurements of emissions from diesel trucks in five cities in China. Atmos. Environ. 2012, 54, 159–167.

- Liimatainen, H.; van Vliet, O.; Aplyn, D. The potential of electric trucks—An international commodity-level analysis. Appl. Energy 2019, 236, 804–814.

- Boarnet, M.G.; Hong, A.; Santiago-Bartolomei, R. Urban spatial structure, employment subcenters, and freight travel. J. Transport Geogr. 2017, 60, 267–276.

- Deutsches Statistisches Bundesamt (DESTATIS). Truck Toll Mileage Index; Deutsches Statistisches Bundesamt: Wiesbaden, Germany, 2020.

- Li, B.; Gao, S.; Liang, Y.; Kang, Y.; Prestby, T.; Gao, Y.; Xiao, R. Estimation of Regional Economic Development Indicator from Transportation Network Analytics. Sci. Rep. 2020, 10, 2647.

- Berman, J.D.; Ebisu, K. Changes in U.S. air pollution during the COVID-19 pandemic. Sci. Total Environ. 2020, 739, 139864.

- Venter, Z.S.; Aunan, K.; Chowdhury, S.; Lelieveld, J. COVID-19 lockdowns cause global air pollution declines. Proc. Natl. Acad. Sci. USA 2020, 117, 18984–18990.

- Chan, K.L.; Khorsandi, E.; Liu, S.; Baier, F.; Valks, P. Estimation of Surface NO2 Concentrations over Germany from TROPOMI Satellite Observations Using a Machine Learning Method. Remote Sens. 2021, 13, 969.

- Bernas, M.; Płaczek, B.; Korski, W.; Loska, P.; Smyła, J.; Szymała, P. A Survey and Comparison of Low-Cost Sensing Technologies for Road Traffic Monitoring. Sensors 2018, 18, 3243.

- Rosenbaum, D.; Kurz, F.; Thomas, U.; Suri, S.; Reinartz, P. Towards automatic near real-time traffic monitoring with an airborne wide angle camera system. Eur. Transp. Res. Rev. 2009, 1, 11–21.

- Bundesanstalt für Straßenwesen. Automatische Zählstellen auf Autobahnen und Bundesstraßen; Bundesanstalt für Straßenwesen: Bergisch Gladbach, Germany, 2021.

- Federal Highway Administration. U.S. Traffic Monitoring Location Data; Federal Highway Administration: Washington, DC, USA, 2020.

- Autobahnen- und Schnellstraßen-Finanzierungs-Aktiengesellschaft. Verkehrsentwicklung; Autobahnen- und Schnellstraßen-Finanzierungs-Aktiengesellschaft: Vienna, Austria, 2021.

- Bottero, M.; Dalla Chiara, B.; Deflorio, F. Wireless sensor networks for traffic monitoring in a logistic centre. Transp. Res. Part C Emerg. Technol. 2013, 26, 99–124.

- Gerhardinger, A.; Ehrlich, D.; Pesaresi, M. Vehicles detection from very high resolution satellite imagery. Int. Arch. Photogramm. Remote Sens. 2005, 36, W24.

- Datondji, S.R.E.; Dupuis, Y.; Subirats, P.; Vasseur, P. A Survey of Vision-Based Traffic Monitoring of Road Intersections. IEEE Trans. Intell. Transport. Syst. 2016, 17, 2681–2698.

- Janecek, A.; Valerio, D.; Hummel, K.A.; Ricciato, F.; Hlavacs, H. The Cellular Network as a Sensor: From Mobile Phone Data to Real-Time Road Traffic Monitoring. IEEE Trans. Intell. Transport. Syst. 2015, 16, 2551–2572.

- Wang, D.; Al-Rubaie, A.; Davies, J.; Clarke, S.S. Real time road traffic monitoring alert based on incremental learning from tweets. In Proceedings of the 2014 IEEE Symposium on Evolving and Autonomous Learning Systems (EALS), Orlando, FL, USA, 9–12 December 2014; pp. 50–57.

- Nellore, K.; Hancke, G. A Survey on Urban Traffic Management System Using Wireless Sensor Networks. Sensors 2016, 16, 157.

- Chen, Y.; Qin, R.; Zhang, G.; Albanwan, H. Spatial Temporal Analysis of Traffic Patterns during the COVID-19 Epidemic by Vehicle Detection Using Planet Remote-Sensing Satellite Images. Remote Sens. 2021, 13, 208.

- Bouguettaya, A.; Zarzour, H.; Kechida, A.; Taberkit, A.M. Vehicle Detection From UAV Imagery with Deep Learning: A Review. IEEE Trans. Neural Netw. Learn. Syst. 2021, 1–21.

- Charouh, Z.; Ezzouhri, A.; Ghogho, M.; Guennoun, Z. A Resource-Efficient CNN-Based Method for Moving Vehicle Detection. Sensors 2022, 22, 1193.

- Ghasemi Darehnaei, Z.; Rastegar Fatemi, S.M.J.; Mirhassani, S.M.; Fouladian, M. Ensemble Deep Learning Using Faster R-CNN and Genetic Algorithm for Vehicle Detection in UAV Images. IETE J. Res. 2021, 1–10.

- Maity, M.; Banerjee, S.; Sinha Chaudhuri, S. Faster R-CNN and YOLO based Vehicle detection: A Survey. In Proceedings of the 2021 5th International Conference on Computing Methodologies and Communication (ICCMC), Erode, India, 8–10 April 2021; pp. 1442–1447.

- Luo, X.; Tian, X.; Zhang, H.; Hou, W.; Leng, G.; Xu, W.; Jia, H.; He, X.; Wang, M.; Zhang, J. Fast Automatic Vehicle Detection in UAV Images Using Convolutional Neural Networks. Remote Sens. 2020, 12, 1994.

- Koga, Y.; Miyazaki, H.; Shibasaki, R. A Method for Vehicle Detection in High-Resolution Satellite Images that Uses a Region-Based Object Detector and Unsupervised Domain Adaptation. Remote Sens. 2020, 12, 575.

- Zhang, Z.; Liu, Y.; Liu, T.; Lin, Z.; Wang, S. DAGN: A Real-Time UAV Remote Sensing Image Vehicle Detection Framework. IEEE Geosci. Remote Sens. Lett. 2020, 17, 1884–1888.

- Tan, Q.; Ling, J.; Hu, J.; Qin, X.; Hu, J. Vehicle Detection in High Resolution Satellite Remote Sensing Images Based on Deep Learning. IEEE Access 2020, 8, 153394–153402.

- Yao, H.; Qin, R.; Chen, X. Unmanned Aerial Vehicle for Remote Sensing Applications—A Review. Remote Sens. 2019, 11, 1443.

- Ji, H.; Gao, Z.; Mei, T.; Li, Y. Improved Faster R-CNN with Multiscale Feature Fusion and Homography Augmentation for Vehicle Detection in Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2019, 16, 1761–1765.

- Wang, L.; Liao, J.; Xu, C. Vehicle Detection Based on Drone Images with the Improved Faster R-CNN. In Proceedings of the 2019 11th International Conference on Machine Learning and Computing—ICMLC ’19, Zhuhai, China, 22–24 February 2019; pp. 466–471.

- Yu, Y.; Gu, T.; Guan, H.; Li, D.; Jin, S. Vehicle Detection From High-Resolution Remote Sensing Imagery Using Convolutional Capsule Networks. IEEE Geosci. Remote Sens. Lett. 2019, 16, 1894–1898.

- Tao, C.; Mi, L.; Li, Y.; Qi, J.; Xiao, Y.; Zhang, J. Scene Context-Driven Vehicle Detection in High-Resolution Aerial Images. IEEE Trans. Geosci. Remote Sens. 2019, 57, 7339–7351.

- Zheng, K.; Wei, M.; Sun, G.; Anas, B.; Li, Y. Using Vehicle Synthesis Generative Adversarial Networks to Improve Vehicle Detection in Remote Sensing Images. ISPRS Int. J. Geo-Inf. 2019, 8, 390.

- Kaack, L.H.; Chen, G.H.; Morgan, M.G. Truck traffic monitoring with satellite images. In Proceedings of the Conference on Computing & Sustainable Societies—COMPASS 19, Accra, Ghana, 3–5 July 2019; pp. 155–164.

- Yang, M.Y.; Liao, W.; Li, X.; Cao, Y.; Rosenhahn, B. Vehicle Detection in Aerial Images. Photogramm. Eng. Remote Sens. 2019, 85, 297–304.

- Audebert, N.; Le Saux, B.; Lefèvre, S. Segment-before-Detect: Vehicle Detection and Classification through Semantic Segmentation of Aerial Images. Remote Sens. 2017, 9, 368.

- Deng, Z.; Sun, H.; Zhou, S.; Zhao, J.; Zou, H. Toward Fast and Accurate Vehicle Detection in Aerial Images Using Coupled Region-Based Convolutional Neural Networks. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 3652–3664.

- Sakai, K.; Seo, T.; Fuse, T. Traffic density estimation method from small satellite imagery: Towards frequent remote sensing of car traffic. In Proceedings of the 2019 IEEE Intelligent Transportation Systems Conference (ITSC), Auckland, New Zealand, 27–30 October 2019; pp. 1776–1781.

- Koga, Y.; Miyazaki, H.; Shibasaki, R. A CNN-Based Method of Vehicle Detection from Aerial Images Using Hard Example Mining. Remote Sens. 2018, 10, 124.

- Tayara, H.; Gil Soo, K.; Chong, K.T. Vehicle Detection and Counting in High-Resolution Aerial Images Using Convolutional Regression Neural Network. IEEE Access 2018, 6, 2220–2230.

- Yang, T.; Wang, X.; Yao, B.; Li, J.; Zhang, Y.; He, Z.; Duan, W. Small Moving Vehicle Detection in a Satellite Video of an Urban Area. Sensors 2016, 16, 1528.

- Heiselberg, P.; Heiselberg, H. Aircraft Detection above Clouds by Sentinel-2 MSI Parallax. Remote Sens. 2021, 13, 3016.

- Heiselberg, H. Aircraft and Ship Velocity Determination in Sentinel-2 Multispectral Images. Sensors 2019, 19, 2873.

- Frantz, D.; Haß, E.; Uhl, A.; Stoffels, J.; Hill, J. Improvement of the Fmask algorithm for Sentinel-2 images: Separating clouds from bright surfaces based on parallax effects. Remote Sens. Environ. 2018, 215, 471–481.

- Gascon, F.; Bouzinac, C.; Thépaut, O.; Jung, M.; Francesconi, B.; Louis, J.; Lonjou, V.; Lafrance, B.; Massera, S.; Gaudel-Vacaresse, A.; et al. Copernicus Sentinel-2A Calibration and Products Validation Status. Remote Sens. 2017, 9, 584.

- Skakun, S.; Vermote, E.; Roger, J.C.; Justice, C. Multispectral Misregistration of Sentinel-2A Images: Analysis and Implications for Potential Applications. IEEE Geosci. Remote Sens. Lett. 2017, 14, 2408–2412.

- Gatti, A.; Bertolini, A. Sentinel-2 Products Specification Document; European Space Agency: Paris, France, 2015.

- Börner, A.; Ernst, I.; Ruhé, M.; Zujew, S. Airborne Camera Experiments for Traffic Monitoring. In Proceedings of the ISPRS—10th Congress International Society for Photogrammetry and Remote Sensing, Istanbul, Turkey, 12–23 July 2004.

- Reinartz, P.; Lachaise, M.; Schmeer, E.; Krauss, T.; Runge, H. Traffic monitoring with serial images from airborne cameras. ISPRS J. Photogramm. Remote Sens. 2006, 61, 149–158.

- Palubinskas, G.; Kurz, F.; Reinartz, P. Detection of Traffic Congestion in Optical Remote Sensing Imagery. In Proceedings of the IGARSS 2008, 2008 IEEE International Geoscience and Remote Sensing Symposium, Boston, MA, USA, 7–11 July 2008; pp. II-426–II-429.

- Leitloff, J.; Rosenbaum, D.; Kurz, F.; Meynberg, O.; Reinartz, P. An Operational System for Estimating Road Traffic Information from Aerial Images. Remote Sens. 2014, 6, 11315–11341.

- Yao, W.; Zhang, M.; Hinz, S.; Stilla, U. Airborne traffic monitoring in large areas using LiDAR data—Theory and experiments. Int. J. Remote Sens. 2012, 33, 3930–3945.

- Suchandt, S.; Runge, H.; Breit, H.; Steinbrecher, U.; Kotenkov, A.; Balss, U. Automatic Extraction of Traffic Flows Using TerraSAR-X Along-Track Interferometry. IEEE Trans. Geosci. Remote Sens. 2010, 48, 807–819.

- Meyer, F.; Hinz, S.; Laika, A.; Weihing, D.; Bamler, R. Performance analysis of the TerraSAR-X Traffic monitoring concept. ISPRS J. Photogramm. Remote Sens. 2006, 61, 225–242.

- Hinz, S.; Leitloff, J.; Stilla, U. Context-supported vehicle detection in optical satellite images of urban areas. In Proceedings of the 2005 IEEE International Geoscience and Remote Sensing Symposium, Seoul, Korea, 25–29 July 2005; Volume 4, pp. 2937–2941.

- Larsen, S.Ø.; Koren, H.; Solberg, R. Traffic Monitoring using Very High Resolution Satellite Imagery. Photogramm. Eng. Remote Sens. 2009, 75, 859–869.

- Blaschke, T. Object based image analysis for remote sensing. ISPRS J. Photogramm. Remote Sens. 2010, 65, 2–16.

- Gao, F.; Li, B.; Xu, Q.; Zhong, C. Moving Vehicle Information Extraction from Single-Pass WorldView-2 Imagery Based on ERGAS-SNS Analysis. Remote Sens. 2014, 6, 6500–6523.

- Bar, D.E.; Raboy, S. Moving Car Detection and Spectral Restoration in a Single Satellite WorldView-2 Imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2013, 6, 2077–2087.

- Pesaresi, M.; Gutjahr, K.H.; Pagot, E. Estimating the velocity and direction of moving targets using a single optical VHR satellite sensor image. Int. J. Remote Sens. 2008, 29, 1221–1228.

- Du, B.; Cai, S.; Wu, C. Object Tracking in Satellite Videos Based on a Multiframe Optical Flow Tracker. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 3043–3055.

- Ahmadi, S.A.; Ghorbanian, A.; Mohammadzadeh, A. Moving vehicle detection, tracking and traffic parameter estimation from a satellite video: A perspective on a smarter city. Int. J. Remote Sens. 2019, 40, 8379–8394.

- Toth, C.; Jóźków, G. Remote sensing platforms and sensors: A survey. ISPRS J. Photogramm. Remote Sens. 2016, 115, 22–36.

- Kopsiaftis, G.; Karantzalos, K. Vehicle detection and traffic density monitoring from very high resolution satellite video data. In Proceedings of the 2015 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Milan, Italy, 26–31 July 2015; pp. 1881–1884.

- Chang, Y.; Wang, S.; Zhou, Y.; Wang, L.; Wang, F. A Novel Method of Evaluating Highway Traffic Prosperity Based on Nighttime Light Remote Sensing. Remote Sens. 2019, 12, 102.

- Tang, T.; Zhou, S.; Deng, Z.; Lei, L.; Zou, H. Arbitrary-Oriented Vehicle Detection in Aerial Imagery with Single Convolutional Neural Networks. Remote Sens. 2017, 9, 1170.

- Drusch, M.; Del Bello, U.; Carlier, S.; Colin, O.; Fernandez, V.; Gascon, F.; Hoersch, B.; Isola, C.; Laberinti, P.; Martimort, P.; et al. Sentinel-2: ESA’s Optical High-Resolution Mission for GMES Operational Services. Remote Sens. Environ. 2012, 120, 25–36.

- European Space Agency. Multi Spectral Instrument (MSI) Overview; European Space Agency: Paris, France, 2020.

- Kääb, A.; Leprince, S. Motion detection using near-simultaneous satellite acquisitions. Remote Sens. Environ. 2014, 154, 164–179.

- Meng, L.; Kerekes, J.P. Object Tracking Using High Resolution Satellite Imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 5, 146–152.

- Easson, G.; DeLozier, S.; Momm, H.G. Estimating Speed and Direction of Small Dynamic Targets through Optical Satellite Imaging. Remote Sens. 2010, 2, 1331–1347.

- Liu, Y.; Xu, B.; Zhi, W.; Hu, C.; Dong, Y.; Jin, S.; Lu, Y.; Chen, T.; Xu, W.; Liu, Y.; et al. Space eye on flying aircraft: From Sentinel-2 MSI parallax to hybrid computing. Remote Sens. Environ. 2020, 246, 111867.

- Council of the European Union. COUNCIL DIRECTIVE 96/53/EC; European Union: Brussels, Belgium, 1997.

- National Heavy Vehicle Regulator. Common Heavy Freight Vehicle Configurations; National Heavy Vehicle Regulator: Newstead, Australia, 2017.

- Strahler, A.H.; Woodcock, C.E.; Smith, J.A. On the nature of models in remote sensing. Remote Sens. Environ. 1986, 20, 121–139.

- European Space Agency. Euro Data Cube Custom Script Contest: The Winner Is…; European Space Agency: Paris, France, 2020.

- Fisser, H. Truck Detection Using Sentinel-2 Data; University of Wuerzburg: Wuerzburg, Germany, 2020.

- Verschae, R.; Ruiz-del Solar, J. Object Detection: Current and Future Directions. Front. Robot. AI 2015, 2, 29.

- Jain, A.K.; Ratha, N.K.; Lakshmanan, S. Object detection using gabor filters. Patt. Recognit. 1997, 30, 295–309.

- Trivedi, M.M.; Harlow, C.A.; Conners, R.W.; Goh, S. Object detection based on gray level cooccurrence. Comput. Vis. Graph. Image Process. 1984, 28, 199–219.

- Shaikh, S.H.; Saeed, K.; Chaki, N. Moving Object Detection Using Background Subtraction. In Moving Object Detection Using Background Subtraction; Series Title: SpringerBriefs in Computer Science; Springer International Publishing: Cham, Switzerland, 2014; pp. 15–23.

- Piccardi, M. Background subtraction techniques: A review. In Proceedings of the 2004 IEEE International Conference on Systems, Man and Cybernetics (IEEE Cat. No.04CH37583), The Hague, The Netherlands, 10–13 October 2004; pp. 3099–3104.

- Hoeser, T.; Kuenzer, C. Object Detection and Image Segmentation with Deep Learning on Earth Observation Data: A Review-Part I: Evolution and Recent Trends. Remote Sens. 2020, 12, 1667.

- Li, K.; Wan, G.; Cheng, G.; Meng, L.; Han, J. Object detection in optical remote sensing images: A survey and a new benchmark. ISPRS J. Photogramm. Remote Sens. 2020, 159, 296–307.

- Ma, L.; Liu, Y.; Zhang, X.; Ye, Y.; Yin, G.; Johnson, B.A. Deep learning in remote sensing applications: A meta-analysis and review. ISPRS J. Photogramm. Remote Sens. 2019, 152, 166–177.

- Zhao, Z.Q.; Zheng, P.; Xu, S.T.; Wu, X. Object Detection With Deep Learning: A Review. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 3212–3232.

- Zhu, X.X.; Tuia, D.; Mou, L.; Xia, G.S.; Zhang, L.; Xu, F.; Fraundorfer, F. Deep Learning in Remote Sensing: A Comprehensive Review and List of Resources. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–36.

- Long, Y.; Gong, Y.; Xiao, Z.; Liu, Q. Accurate Object Localization in Remote Sensing Images Based on Convolutional Neural Networks. IEEE Trans. Geosci. Remote Sens. 2017, 55, 2486–2498.

- Cheng, G.; Han, J. A survey on object detection in optical remote sensing images. ISPRS J. Photogramm. Remote Sens. 2016, 117, 11–28.

- Cheng, G.; Han, J.; Guo, L.; Qian, X.; Zhou, P.; Yao, X.; Hu, X. Object detection in remote sensing imagery using a discriminatively trained mixture model. ISPRS J. Photogramm. Remote Sens. 2013, 85, 32–43.

- Tong, K.; Wu, Y.; Zhou, F. Recent advances in small object detection based on deep learning: A review. Image Vis. Comput. 2020, 97, 103910.

- Pang, J.; Li, C.; Shi, J.; Xu, Z.; Feng, H. R2-CNN: Fast Tiny Object Detection in Large-scale Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2019, 57, 5512–5524.

- Sarwar, F.; Griffin, A.; Periasamy, P.; Portas, K.; Law, J. Detecting and Counting Sheep with a Convolutional Neural Network. In Proceedings of the 2018 15th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Auckland, New Zealand, 27–30 November 2018; pp. 1–6.

- European Space Agency. Products and Algorithms; European Space Agency: Paris, France, 2021.

- Open Street Map Foundation. Open Street Map; Open Street Map Foundation: Cambridge, UK, 2021.

- Open Street Map Foundation. Overpass API; Open Street Map Foundation: Cambridge, UK, 2021.

- Li, W.; Fu, H.; Yu, L.; Cracknell, A. Deep Learning Based Oil Palm Tree Detection and Counting for High-Resolution Remote Sensing Images. Remote Sens. 2016, 9, 22.

- Yang, B.; Dai, W.; Dong, Z.; Liu, Y. Automatic Forest Mapping at Individual Tree Levels from Terrestrial Laser Scanning Point Clouds with a Hierarchical Minimum Cut Method. Remote Sens. 2016, 8, 372.

- Han, X.; Zhong, Y.; Zhang, L. An Efficient and Robust Integrated Geospatial Object Detection Framework for High Spatial Resolution Remote Sensing Imagery. Remote Sens. 2017, 9, 666.

- Zhu, L.; Wen, G. Hyperspectral Anomaly Detection via Background Estimation and Adaptive Weighted Sparse Representation. Remote Sens. 2018, 10, 272. [Google Scholar] [CrossRef] [Green Version]

- Krawczyk, B. Learning from imbalanced data: Open challenges and future directions. Prog. Artif. Intell. 2016, 5, 221–232. [Google Scholar] [CrossRef] [Green Version]

- Li, W.; Guo, Q. A maximum entropy approach to one-class classification of remote sensing imagery. Int. J. Remote Sens. 2010, 31, 2227–2235. [Google Scholar] [CrossRef]

- Barbet-Massin, M.; Jiguet, F.; Albert, C.H.; Thuiller, W. Selecting pseudo-absences for species distribution models: How, where and how many?: How to use pseudo-absences in niche modelling? Methods Ecol. Evol. 2012, 3, 327–338. [Google Scholar] [CrossRef]

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32. [Google Scholar] [CrossRef] [Green Version]

- Casey, R.; Nagy, G. Decision tree design using a probabilistic model (Corresp). IEEE Trans. Inf. Theory 1984, 30, 93–99. [Google Scholar] [CrossRef]

- Ho, T.K. Random decision forests. In Proceedings of the 3rd International Conference on Document Analysis and Recognition, Montreal, QC, Canada, 14–16 August 1995; Volume 1, pp. 278–282. [Google Scholar] [CrossRef]

- Amit, Y.; Geman, D. Shape Quantization and Recognition with Randomized Trees. Neural Comput. 1997, 9, 1545–1588. [Google Scholar] [CrossRef] [Green Version]

- Fawagreh, K.; Gaber, M.M.; Elyan, E. Random forests: From early developments to recent advancements. Syst. Sci. Control Eng. 2014, 2, 602–609. [Google Scholar] [CrossRef] [Green Version]

- Belgiu, M.; Drăguţ, L. Random forest in remote sensing: A review of applications and future directions. ISPRS J. Photogramm. Remote Sens. 2016, 114, 24–31. [Google Scholar] [CrossRef]

- Cutler, A.; Cutler, D.R.; Stevens, J.R. Random Forests. In Ensemble Machine Learning; Zhang, C., Ma, Y., Eds.; Springer: Boston, MA, USA, 2012; pp. 157–175. [Google Scholar] [CrossRef]

- Ho, T.K. The random subspace method for constructing decision forests. IEEE Trans. Pattern Anal. Mach. Intell. 1998, 20, 832–844. [Google Scholar] [CrossRef] [Green Version]

- Guyon, I.; Elisseeff, A.; Kaelbling, L.P. An introduction to variable and feature selection. J. Mach. Learn. Res. 2003, 3, 1157–1182. [Google Scholar] [CrossRef] [Green Version]

- Probst, P.; Wright, M.N.; Boulesteix, A. Hyperparameters and tuning strategies for random forest. WIREs Data Min. Knowl. Discov. 2019, 9, e1301. [Google Scholar] [CrossRef] [Green Version]

- Bergstra, J.; Bengio, Y. Random Search for Hyper-Parameter Optimization. J. Mach. Learn. Res. 2012, 13, 281–305. [Google Scholar]

- Van Essen, B.; Macaraeg, C.; Gokhale, M.; Prenger, R. Accelerating a random forest classifier: Multi-core, GPGPU, or FPGA? In Proceedings of the 2012 IEEE 20th International Symposium on Field-Programmable Custom Computing Machines, Toronto, ON, Canada, 29 April–1 May 2012. [Google Scholar] [CrossRef] [Green Version]

- Story, M.; Congalton, R. Accuracy Assessment: A User’s Perspective. Photogramm. Eng. Remote Sens. 1986, 52, 397–399. [Google Scholar]

- Gordon, M.; Kochen, M. Recall-precision trade-off: A derivation. J. Am. Soc. Inf. Sci. 1989, 40, 145–151. [Google Scholar] [CrossRef]

- Davis, J.; Goadrich, M. The relationship between Precision-Recall and ROC curves. In Proceedings of the 23rd International Conference on Machine Learning—ICML ’06, Pittsburgh, PA, USA, 25–29 June 2006; pp. 233–240. [Google Scholar] [CrossRef] [Green Version]

- Sokolova, M.; Japkowicz, N.; Szpakowicz, S. Beyond Accuracy, F-Score and ROC: A Family of Discriminant Measures for Performance Evaluation. In AI 2006: Advances in Artificial Intelligence; Hutchison, D., Kanade, T., Kittler, J., Kleinberg, J.M., Mattern, F., Mitchell, J.C., Naor, M., Nierstrasz, O., Pandu Rangan, C., Steffen, B., et al., Eds.; Series Title: Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2006; Volume 4304, pp. 1015–1021. [Google Scholar] [CrossRef] [Green Version]

- Provost, F.J.; Fawcett, T.; Kohavi, R. The Case against Accuracy Estimation for Comparing Induction Algorithms. In Proceedings of the ICML ’98: Proceedings of the Fifteenth International Conference on Machine Learning, Madison, WI, USA, 24–27 July 1998; pp. 445–453. [Google Scholar]

- Manning, C.D.; Schütze, H. Foundations of Statistical Natural Language Processing; MIT Press: Cambridge, MA, USA, 1999.

- Mohri, M.; Rostamizadeh, A.; Talwalkar, A. Foundations of Machine Learning; MIT Press: Cambridge, MA, USA; London, UK, 2018.

- Chicco, D.; Jurman, G. The advantages of the Matthews correlation coefficient (MCC) over F1 score and accuracy in binary classification evaluation. BMC Genom. 2020, 21, 6.

- Landgrebe, T.; Duin, R. Efficient Multiclass ROC Approximation by Decomposition via Confusion Matrix Perturbation Analysis. IEEE Trans. Patt. Anal. Mach. Intell. 2008, 30, 810–822.

- Campbell, J.B.; Wynne, R.H. Introduction to Remote Sensing; The Guilford Press: New York, NY, USA, 2011.

- Everingham, M.; Eslami, S.M.A.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes Challenge: A Retrospective. Int. J. Comput. Vis. 2015, 111, 98–136.

- Bundesministerium für Verkehr, Bau und Stadtentwicklung. Technische Lieferbedingungen für Streckenstationen; Bundesministerium für Verkehr, Bau und Stadtentwicklung: Berlin, Germany, 2012.

- Bundesrepublik Deutschland. Straßenverkehrs-Ordnung; Bundesrepublik Deutschland: Berlin, Germany, 2013.

- Bundesanstalt für Straßenwesen. Automatische Dauerzählstellen—Beschreibung der CSV-Ergebnistabelle; Bundesanstalt für Straßenwesen: Bergisch Gladbach, Germany, 2020.

- ECMWF Copernicus Climate Change Service; European Centre for Medium-Range Weather Forecasts: Reading, UK, 2021.

- Ghosh, A.; Sabuj, M.S.; Sonet, H.H.; Shatabda, S.; Farid, D.M. An Adaptive Video-based Vehicle Detection, Classification, Counting, and Speed-measurement System for Real-time Traffic Data Collection. In Proceedings of the 2019 IEEE Region 10 Symposium (TENSYMP), Kolkata, India, 7–9 June 2019; pp. 541–546.

- Lippitt, C.D.; Zhang, S. The impact of small unmanned airborne platforms on passive optical remote sensing: A conceptual perspective. Int. J. Remote Sens. 2018, 39, 4852–4868.

- Tabor, K.M.; Holland, M.B. Opportunities for improving conservation early warning and alert systems. Remote Sens. Ecol. Conserv. 2021, 7, 7–17.

| Index | Denotation | Explanation |
|---|---|---|
| 0 | B02_centered | Difference B02 from B02 mean |
| 1 | B03_centered | Difference B03 from B03 mean |
| 2 | B04_centered | Difference B04 from B04 mean |
| 3 | B08_centered | Difference B08 from B08 mean |
| 4 | B03_B02_ratio | Difference B03 vs. B02 |
| 5 | B04_B02_ratio | Difference B04 vs. B02 |
| 6 | reflectance_variance | Variation among B02, B03, B04 |
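The feature table above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function name `build_feature_stack` is hypothetical, the mean is assumed to be taken over whatever array is passed in, the two "ratio" features are assumed to be normalized differences of the form (a − b) / (a + b), and the variance is assumed to be computed per pixel across the three VIS bands.

```python
import numpy as np


def build_feature_stack(b02, b03, b04, b08):
    """Return a (7, H, W) array ordered like the feature table (indices 0-6).

    Hypothetical helper; the exact definitions are assumptions, see comments.
    """
    bands = [np.asarray(b, dtype=np.float64) for b in (b02, b03, b04, b08)]
    b02, b03, b04, b08 = bands

    # Indices 0-3: each band minus its own mean.
    # ASSUMPTION: the mean is taken over the supplied array, ignoring NaNs.
    centered = [band - np.nanmean(band) for band in bands]

    def normalized_difference(a, b):
        # ASSUMPTION: "ratio" is read as (a - b) / (a + b).
        total = a + b
        out = np.full(total.shape, np.nan)
        # Pixels with a zero denominator stay NaN.
        np.divide(a - b, total, out=out, where=total != 0)
        return out

    b03_b02_ratio = normalized_difference(b03, b02)  # index 4
    b04_b02_ratio = normalized_difference(b04, b02)  # index 5

    # Index 6: per-pixel variance across the three VIS bands (assumption).
    reflectance_variance = np.var(np.stack([b02, b03, b04]), axis=0)

    return np.stack(
        centered + [b03_b02_ratio, b04_b02_ratio, reflectance_variance]
    )
```

Each row of the returned stack can then be flattened into one feature column per pixel for a classifier that expects a samples-by-features matrix.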

| | Precision | Recall | F1 Score | Support | Overall Accuracy |
|---|---|---|---|---|---|
| blue | 0.91 | 0.87 | 0.89 | 350 | |
| green | 0.90 | 0.86 | 0.88 | 350 | |
| red | 0.87 | 0.79 | 0.83 | 350 | |
| background | 0.73 | 0.86 | 0.79 | 350 | |
| Average | 0.85 | 0.84 | 0.85 | | 0.84 |
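The third metric column of the classification table above is consistent with the F1 score, the harmonic mean of precision and recall. The short check below recomputes it from the rounded table values. The variable names are illustrative, and reading that column as F1 is an inference from the arithmetic rather than something stated in the table itself.

```python
# Rounded per-class values copied from the classification table.
precision = {"blue": 0.91, "green": 0.90, "red": 0.87, "background": 0.73}
recall = {"blue": 0.87, "green": 0.86, "red": 0.79, "background": 0.86}
support = {"blue": 350, "green": 350, "red": 350, "background": 350}


def f1_score(p, r):
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    return 2 * p * r / (p + r) if (p + r) > 0 else 0.0


# Per-class F1, rounded to two decimals like the table.
f1 = {name: round(f1_score(precision[name], recall[name]), 2) for name in precision}

# Overall accuracy equals the support-weighted mean recall.
overall_accuracy = sum(recall[n] * support[n] for n in recall) / sum(support.values())

print(f1)
print(overall_accuracy)
```

Because the inputs are already rounded to two decimals, the recomputed overall accuracy (about 0.845) only approximates the reported 0.84.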

| | Mean Count | Standard Deviation | Max. Count | Min. Count |
|---|---|---|---|---|
| Stations | 63.18 | 54.98 | 206 | 0 |
| Sentinel-2 | 35.14 | 34.45 | 170 | 0 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Fisser, H.; Khorsandi, E.; Wegmann, M.; Baier, F. Detecting Moving Trucks on Roads Using Sentinel-2 Data. Remote Sens. 2022, 14, 1595. https://doi.org/10.3390/rs14071595