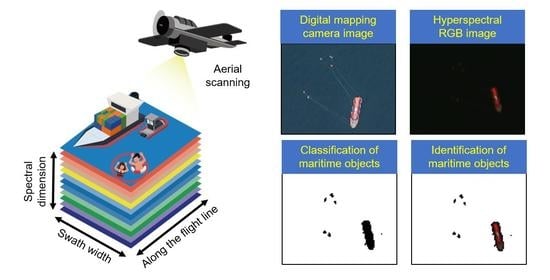

Classification and Identification of Spectral Pixels with Low Maritime Occupancy Using Unsupervised Machine Learning

Abstract

1. Introduction

2. Materials and Methods

2.1. Analysis Procedure

2.2. Clustering Techniques

The K-means algorithm proceeds as follows:

- (1) Randomly place K centroids.

- (2) Allocate each data point to the nearest centroid (clustering).

- (3) Update the centroid of each cluster based on the data assigned to that cluster.

- (4) When a centroid is updated, the new centroid is set to the average value of its assigned data.

- (5) Repeat steps 2 and 3 until the centroids no longer change.
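The centroid-based steps above can be sketched in a few lines of NumPy. This is a minimal illustration, not the implementation used in the study: the function name `kmeans`, the choice of initial centroids drawn from the data points, the `max_iter` cap, and the toy two-dimensional data are all assumptions made for the example.

```python
import numpy as np

def kmeans(points, k, max_iter=100, seed=0):
    """Minimal K-means sketch following the listed steps (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    # Step 1: place K centroids; here they are K distinct data points (an assumption).
    start = rng.choice(len(points), size=k, replace=False)
    centroids = points[start].astype(float)
    for _ in range(max_iter):
        # Step 2: allocate each point to its nearest centroid.
        distances = np.linalg.norm(points[:, None, :] - centroids[None, :, :], axis=2)
        labels = distances.argmin(axis=1)
        # Steps 3-4: move each centroid to the mean of its assigned points.
        # An empty cluster keeps its previous centroid.
        new_centroids = centroids.copy()
        for j in range(k):
            members = points[labels == j]
            if len(members) > 0:
                new_centroids[j] = members.mean(axis=0)
        # Step 5: stop once the centroids no longer change.
        if np.allclose(new_centroids, centroids):
            break
        centroids = new_centroids
    return labels, centroids

# Toy data: two well-separated groups of three points each.
data = np.array([[0.0, 0.0], [0.1, 0.0], [0.0, 0.1],
                 [5.0, 5.0], [5.1, 5.0], [5.0, 5.1]])
labels, centroids = kmeans(data, k=2)
print(labels, centroids)
```

Which group receives label 0 depends on the random initialization, so only the grouping itself is meaningful, not the label values.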

The DBSCAN algorithm proceeds as follows:

- (1) Select an arbitrary point that satisfies the core-point condition as the initial value (seed) in the spatial dataset.

- (2) Extract the density-reachable points from the seed to separate core points from border points, and classify points that belong to neither group as noise.

- (3) Connect the core points that lie within a circle of radius EPS of one another.

- (4) The connected core points are defined as one cluster.

- (5) Allocate each border point to one cluster (if a border point spans multiple clusters, it is allocated to the first cluster that reaches it in the iterative process).

- (6) Repeat the above steps; the algorithm terminates when no more points can be allocated to clusters.
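The density-based steps above can be sketched as follows. This is a minimal NumPy illustration under stated assumptions, not the implementation used in the study: the function name `dbscan`, the `min_samples` threshold (the list above does not state one), the brute-force distance matrix, and the toy data are all hypothetical. The radius `eps` plays the role of EPS.

```python
import numpy as np

NOISE = -1

def dbscan(points, eps, min_samples):
    """Minimal DBSCAN sketch; returns one label per point (-1 marks noise)."""
    n = len(points)
    # Brute-force pairwise distances: fine for small inputs, O(n^2) memory.
    dist = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=2)
    neighbors = [np.flatnonzero(dist[i] <= eps) for i in range(n)]
    # A core point has at least min_samples points (itself included) within eps.
    is_core = np.array([len(nb) >= min_samples for nb in neighbors])

    labels = np.full(n, NOISE)
    cluster = 0
    for seed in range(n):
        # Step 1: start only from a core point that is not yet in a cluster.
        if labels[seed] != NOISE or not is_core[seed]:
            continue
        # Steps 2-4: grow the cluster through density-reachable points.
        labels[seed] = cluster
        frontier = [seed]
        while frontier:
            current = frontier.pop()
            for nb in neighbors[current]:
                if labels[nb] == NOISE:
                    # Step 5: a border point joins the first cluster that reaches it.
                    labels[nb] = cluster
                    # Only core points keep expanding the cluster.
                    if is_core[nb]:
                        frontier.append(nb)
        cluster += 1
    # Step 6: points never reached by any cluster keep the noise label.
    return labels

# Toy data: two tight groups and one isolated point.
data = np.array([[0.0, 0.0], [0.01, 0.0], [0.0, 0.01],
                 [1.0, 1.0], [1.01, 1.0], [1.0, 1.01],
                 [5.0, 5.0]])
labels = dbscan(data, eps=0.025, min_samples=3)
print(labels.tolist())  # [0, 0, 0, 1, 1, 1, -1]
```

Unlike K-means, the number of clusters is not supplied in advance; it follows from `eps` and `min_samples`, and the isolated point is reported as noise rather than forced into a cluster.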

2.3. Color Mapping Techniques

2.4. Airborne Hyperspectral Data

2.5. Field Spectrum Data

3. Results and Discussion

4. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Metric | K-Means, K = 2 | K-Means, K = 3 | K-Means, K = 4 | K-Means, K = 5 | Gaussian Mixture, n = 2 | Gaussian Mixture, n = 3 | Gaussian Mixture, n = 4 | Gaussian Mixture, n = 5 | DBSCAN, EPS 0.015 | DBSCAN, EPS 0.025 | DBSCAN, EPS 0.035 | DBSCAN, EPS 0.045 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Precision | 0.2886 | 0.0703 | 0.0395 | 0.0391 | 0.1562 | 0.0547 | 0.0392 | 0.0354 | 0.5348 | 0.9322 | 0.9480 | 0.9599 |
| Recall | 0.6852 | 0.9155 | 0.9067 | 0.9433 | 0.9739 | 0.9996 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 0.6587 | 0.4733 |
| F1 Score | 0.4061 | 0.1306 | 0.0757 | 0.0751 | 0.2692 | 0.1307 | 0.0754 | 0.0684 | 0.6969 | 0.9649 | 0.7773 | 0.6340 |
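The F1 scores in the table agree with the usual definition of F1 as the harmonic mean of precision and recall. The short check below assumes that standard definition, which this section does not spell out, and uses a hypothetical helper `f1_score` to recompute three entries from the tabulated precision and recall values.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (standard F1 definition, assumed here)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# (precision, recall) pairs copied from the table.
kmeans_k2 = f1_score(0.2886, 0.6852)
dbscan_eps_025 = f1_score(0.9322, 1.0000)
dbscan_eps_035 = f1_score(0.9480, 0.6587)

print(round(kmeans_k2, 4))       # 0.4061
print(round(dbscan_eps_025, 4))  # 0.9649
print(round(dbscan_eps_035, 4))  # 0.7773
```

The harmonic mean penalizes imbalance between the two metrics: the K = 5 and n = 5 settings reach recall near 1 but score below 0.08 because their precision is below 0.04.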

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Seo, D.; Oh, S.; Lee, D. Classification and Identification of Spectral Pixels with Low Maritime Occupancy Using Unsupervised Machine Learning. Remote Sens. 2022, 14, 1828. https://doi.org/10.3390/rs14081828
