Developing a More Reliable Aerial Photography-Based Method for Acquiring Freeway Traffic Data

Abstract

1. Introduction

2. Literature Review

3. Collection of Real Traffic Flow

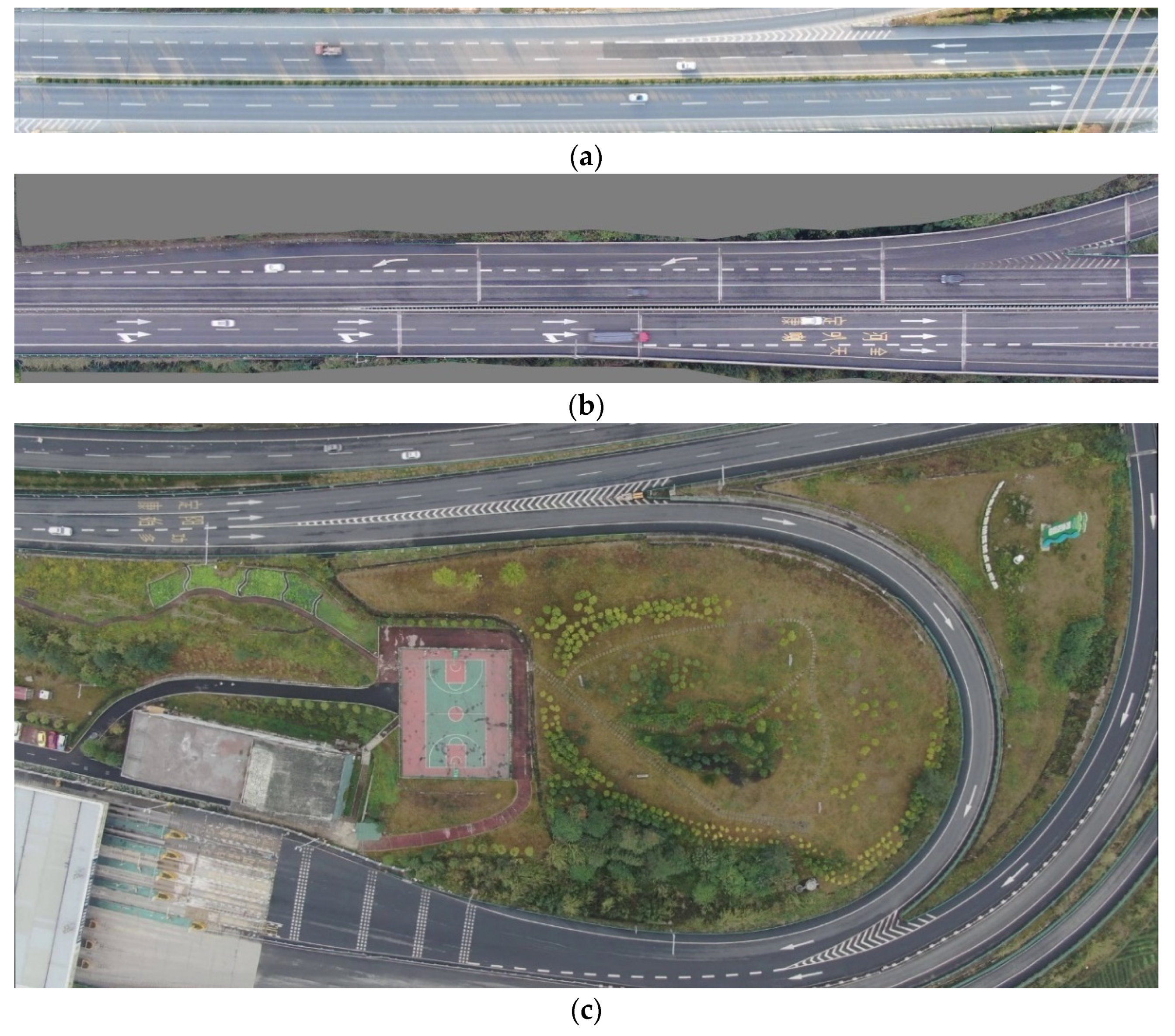

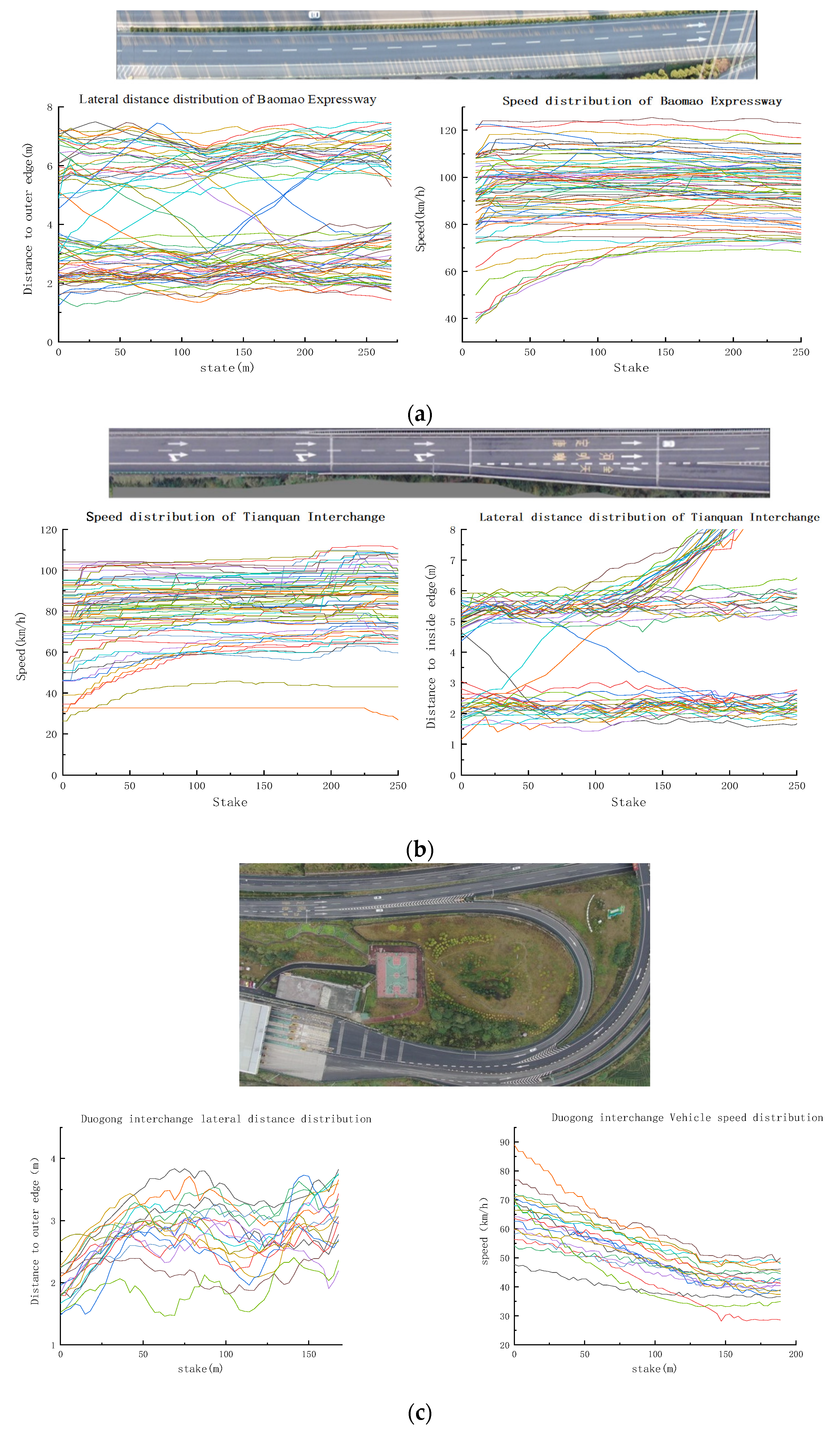

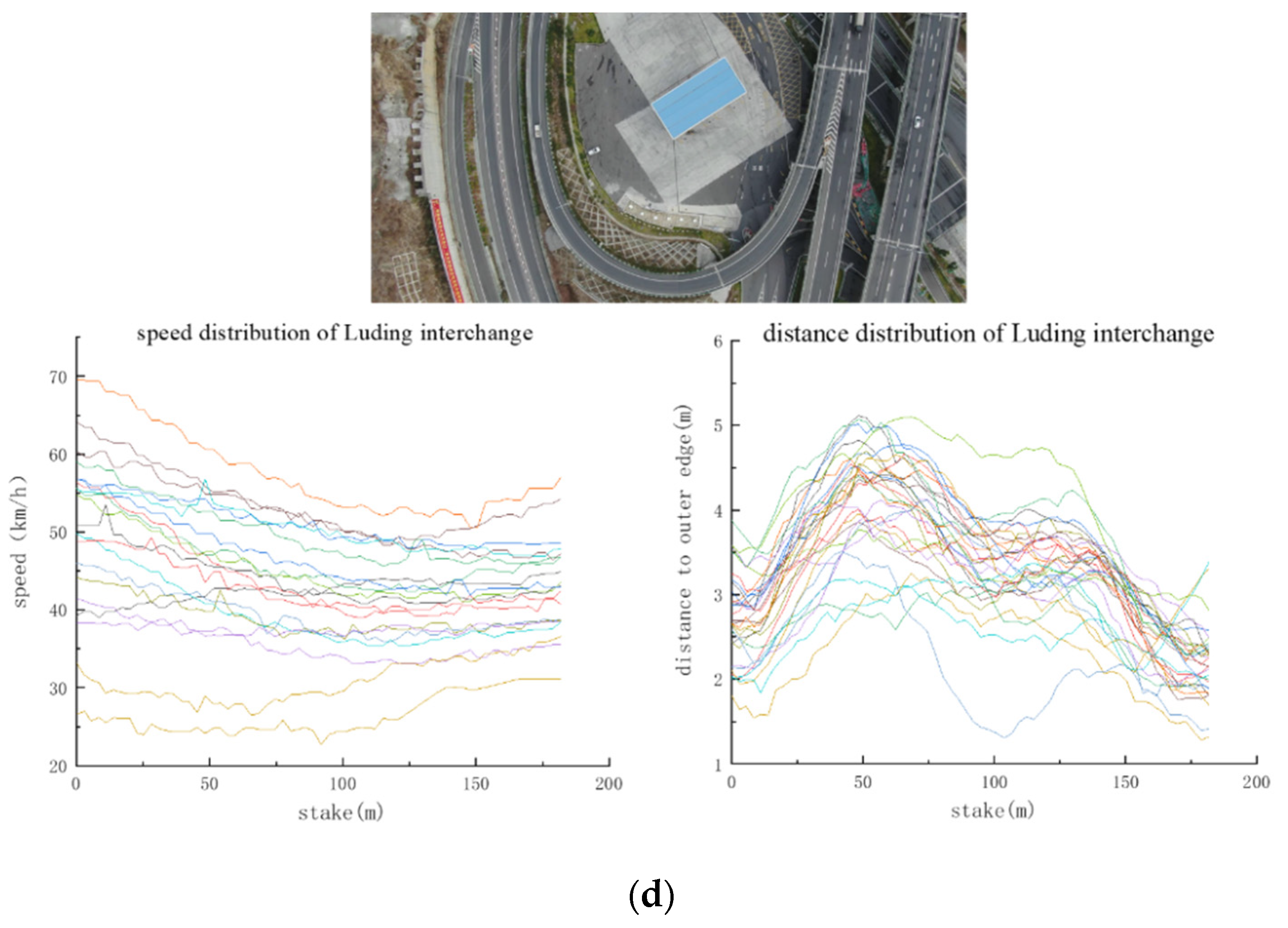

3.1. Research Location

3.2. Research Equipment

3.3. Research Process and Data Acquisition

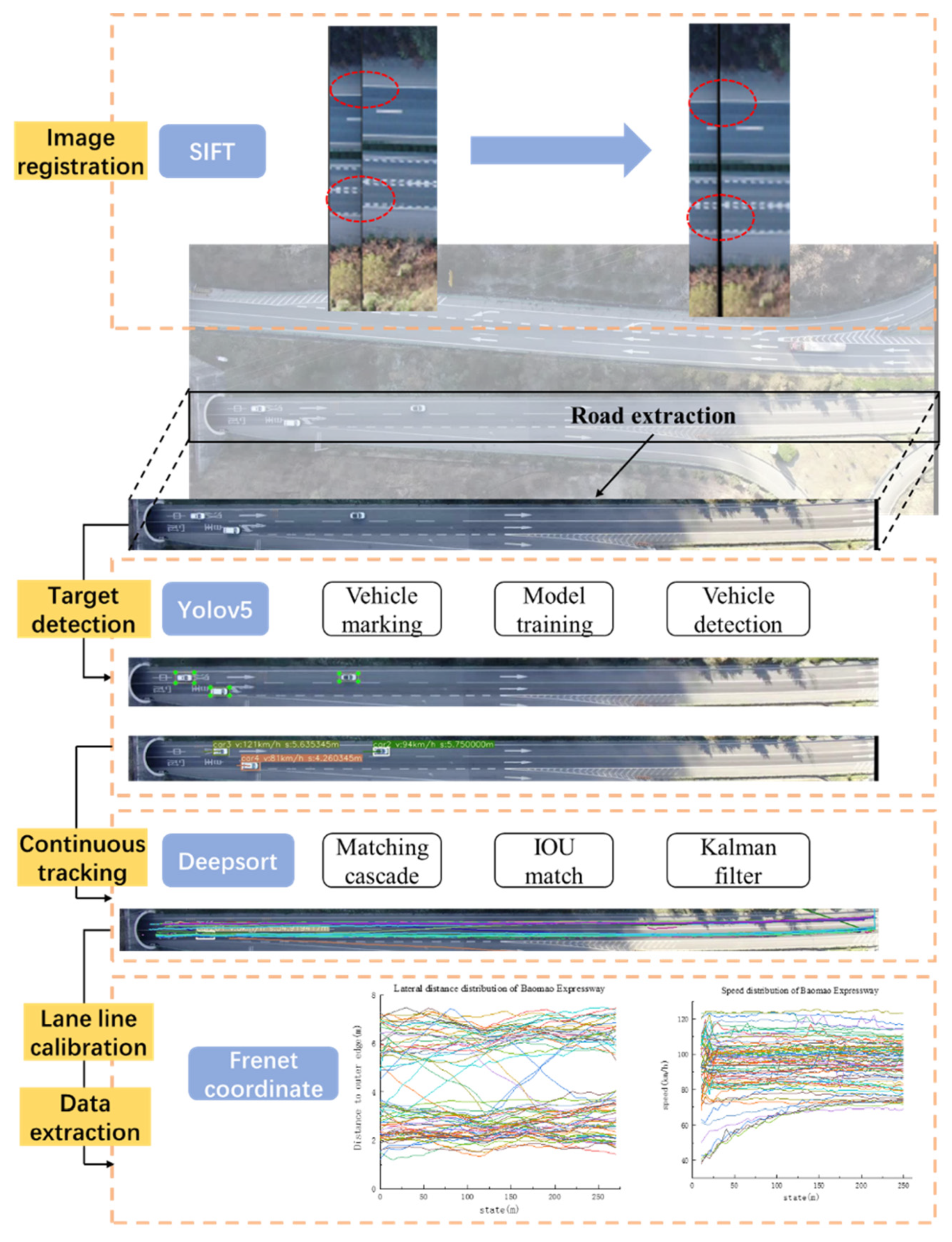

4. Materials and Methods

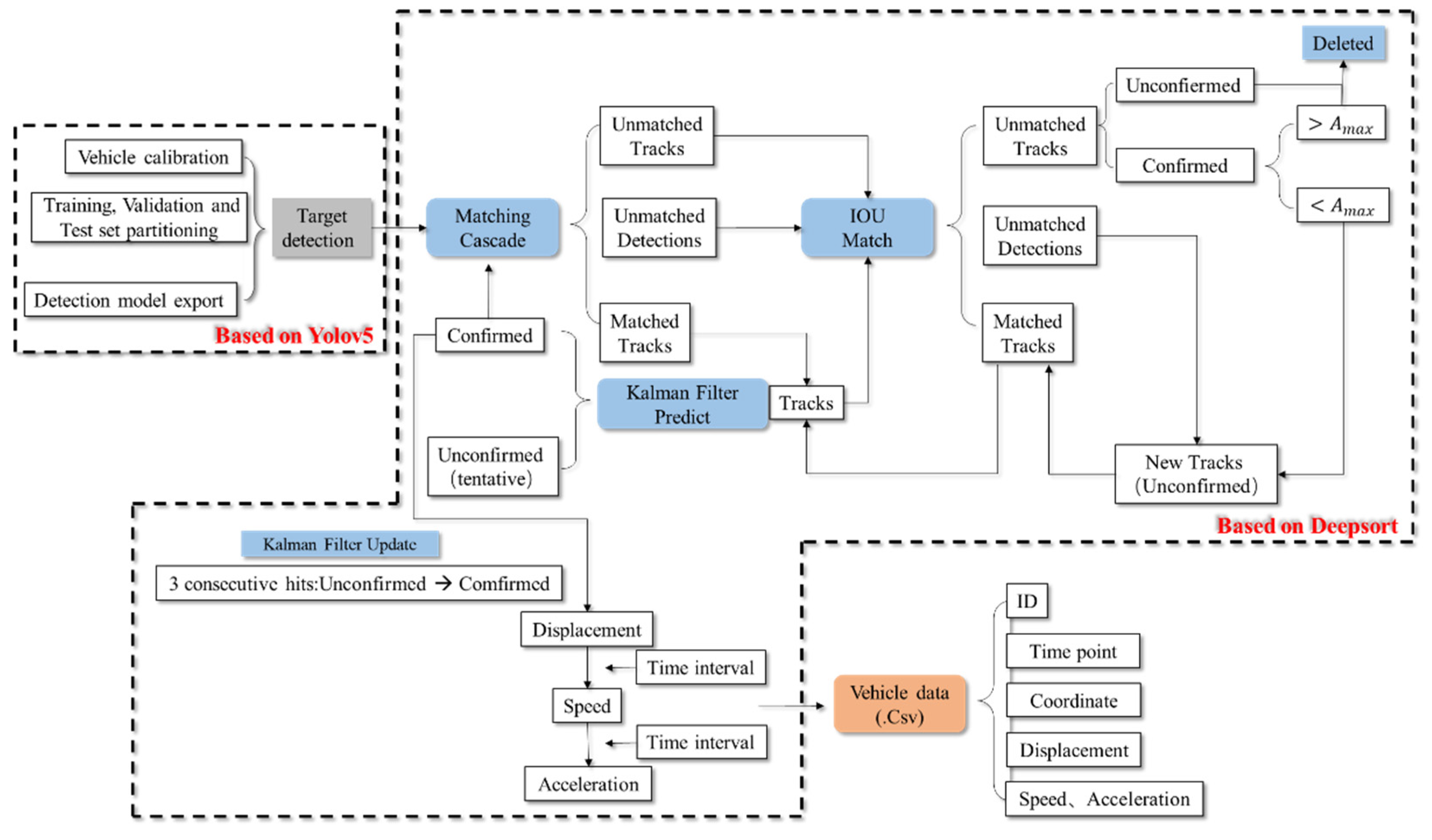

- (1)

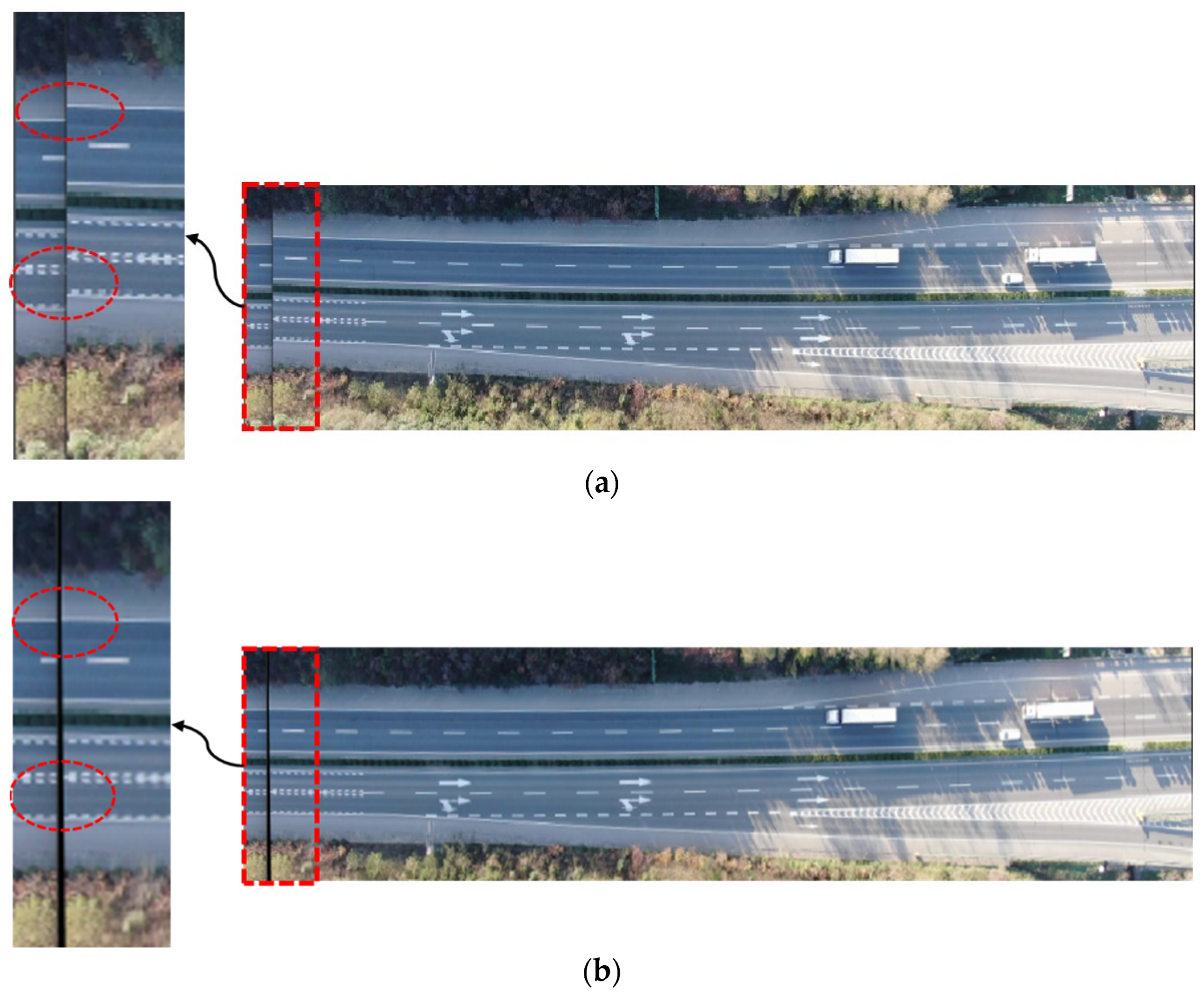

- Image registration: In drone aerial photography, camera shake is unavoidable and will result in significant mistakes. To solve this problem, we applied the SIFT algorithm to register the video.

- (2) Target detection: After a comparative analysis, we adopted the deep learning-based YOLOv5 detector for vehicle detection, annotating more than 6000 images of cars of various types and obtaining the best recognition model after 100 training epochs.

- (3)

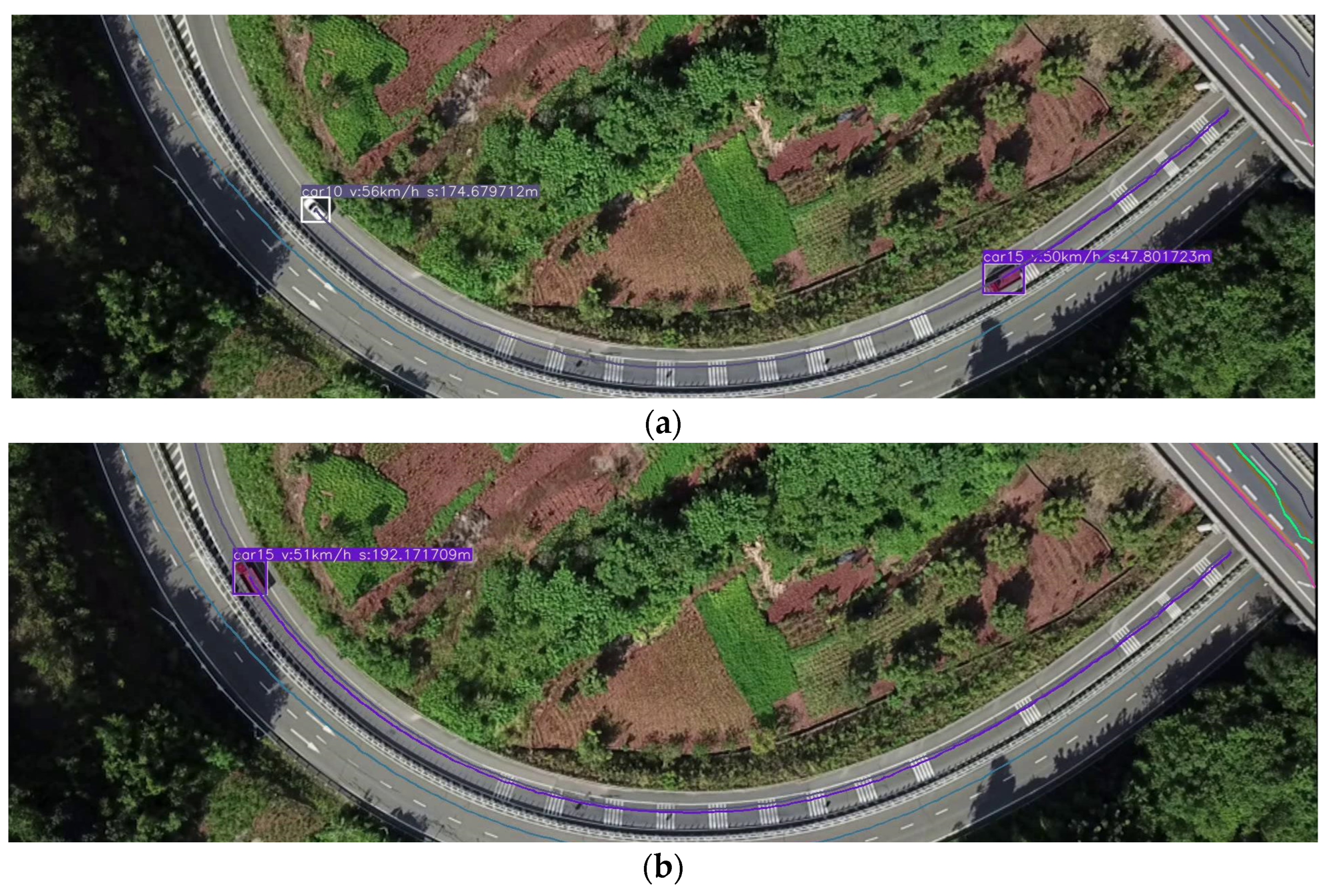

- Continuous vehicle tracking: After achieving high-precision vehicle identification, the DeepSORT algorithm was used for continuous vehicle tracking and trajectory extraction, and the vehicle speed was recovered using the distance calibration value and time interval.

- (4)

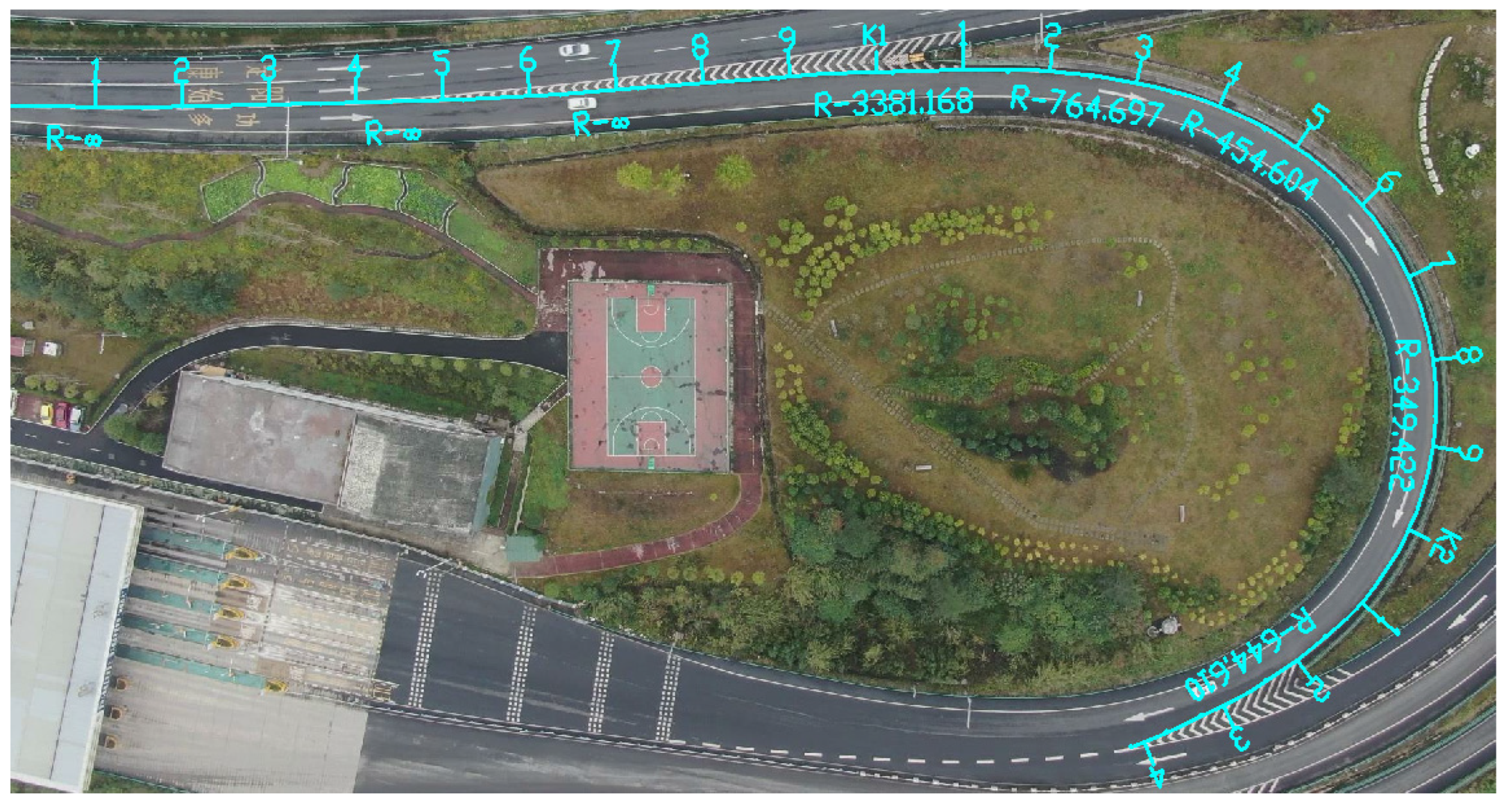

- Lane line calibration: To ensure a solid linkage of vehicle data with lane lines, we first calibrated lane lines and then computed the relationship with the vehicle.

- (5)

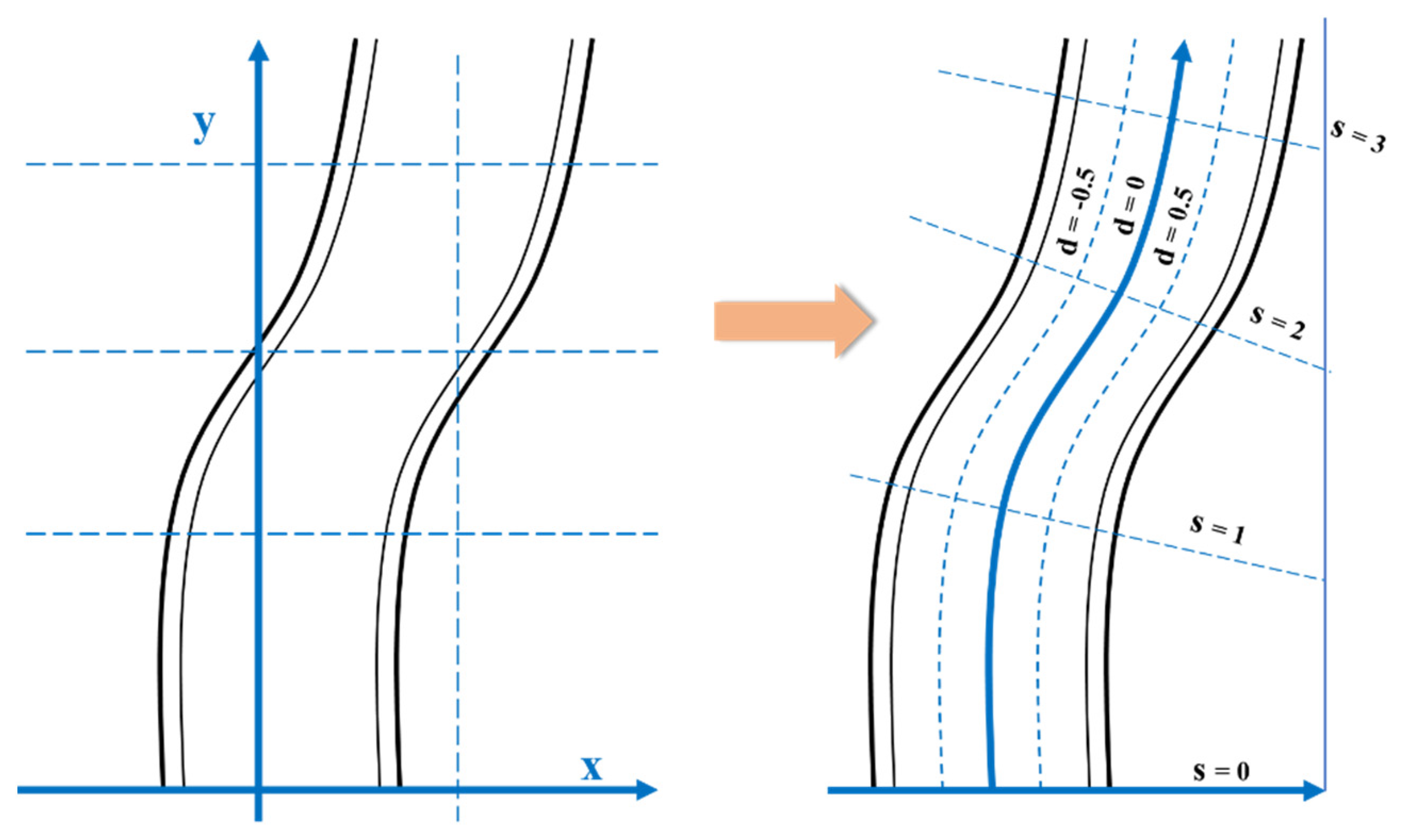

- Data extraction: We used Python, based on the Helen formula, to extract vehicle and lane line data, and established a Frenet coordinate system for data display.

4.1. Image Registration Based on SIFT Algorithm
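SIFT-based registration ends by fitting a geometric transform to keypoints matched between each frame and a reference frame. The sketch below covers only that fitting step, under two assumptions not stated in the paper: the SIFT matches are already available (for example from OpenCV's SIFT detector and a descriptor matcher), and a 2D similarity model (rotation, uniform scale, translation) is adequate for a hovering drone. The function names are hypothetical, and a practical pipeline would normally add outlier rejection such as RANSAC before the fit.

```python
import cmath
import math


def estimate_similarity(src, dst):
    """Least-squares 2D similarity transform with dst ≈ a * src + b.

    Points are handled as complex numbers z = x + iy, so |a| is the scale,
    arg(a) the rotation, and b the translation. src holds matched keypoints in
    the current (shaken) frame; dst holds the same keypoints in the reference frame.
    """
    if len(src) != len(dst) or len(src) < 2:
        raise ValueError("need at least two matched point pairs")
    z = [complex(x, y) for x, y in src]
    w = [complex(x, y) for x, y in dst]
    z_mean = sum(z) / len(z)
    w_mean = sum(w) / len(w)
    # Closed-form minimizer of sum |(w - w_mean) - a * (z - z_mean)|^2 over complex a
    num = sum((wi - w_mean) * (zi - z_mean).conjugate() for zi, wi in zip(z, w))
    den = sum(abs(zi - z_mean) ** 2 for zi in z)
    if den == 0:
        raise ValueError("source points must not all coincide")
    a = num / den
    b = w_mean - a * z_mean
    return a, b


def register_point(point, a, b):
    """Map an (x, y) pixel position from the current frame into the reference frame."""
    w = a * complex(*point) + b
    return w.real, w.imag


def describe(a):
    """Scale factor and rotation angle (degrees) encoded in a."""
    return abs(a), math.degrees(cmath.phase(a))
```

Vehicle positions detected in a shaken frame can then be mapped into the reference frame with `register_point`, so that measured displacements reflect vehicle motion rather than camera motion. Treating points as complex numbers turns the least-squares fit into a two-line closed form.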

4.2. Deep Learning-Based Vehicle Data Extraction

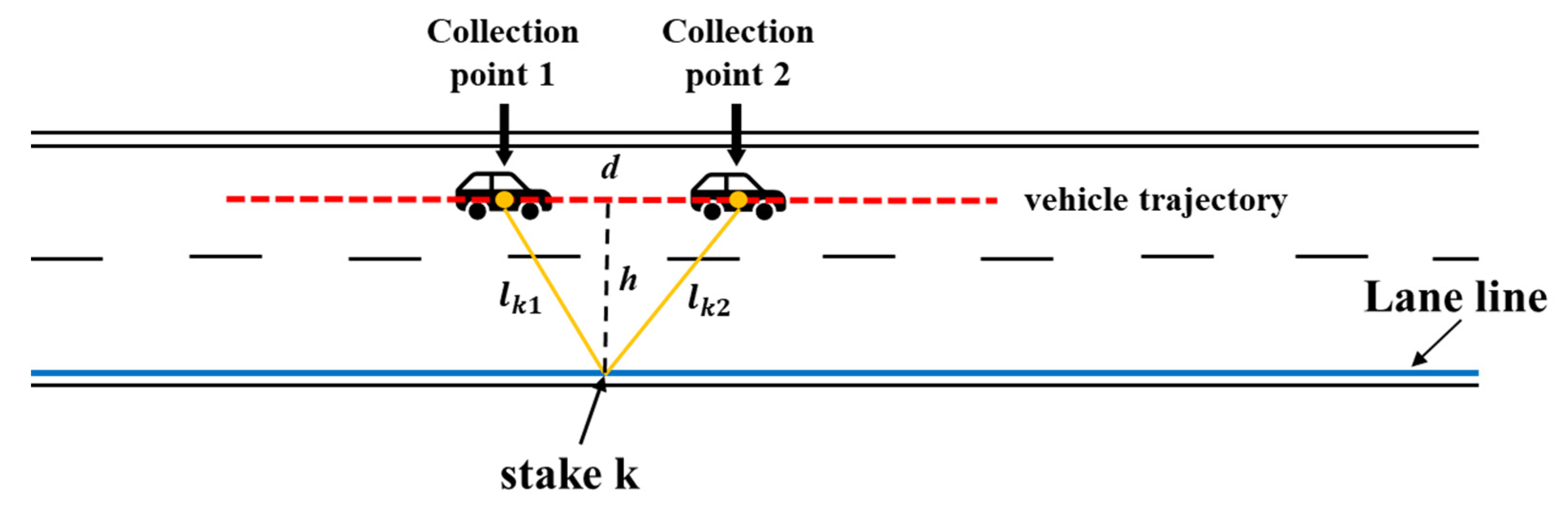

4.2.1. Calibration of the Pixel Distance Parameter
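The pixel distance parameter converts pixel displacements into metres, and speed then follows from displacement over the time interval. A minimal sketch, assuming the parameter is derived from a road feature of known length and that tracked positions are already registered; the helper names and the `(t, x, y)` track layout are hypothetical. The example ratio of 5 m to 40 px matches the real/pixel stake pairs tabulated in this article.

```python
import math


def metres_per_pixel(known_length_m, pixel_length):
    """Pixel distance parameter (m/px) from a feature of known real length."""
    if pixel_length <= 0:
        raise ValueError("pixel_length must be positive")
    return known_length_m / pixel_length


def speeds_kmh(track, m_per_px):
    """Speed between consecutive positions of one tracked vehicle.

    track: time-ordered list of (t_seconds, x_px, y_px) for a single vehicle ID,
    in registered (stabilized) frame coordinates.
    Returns a list of (t_midpoint_seconds, speed_kmh).
    """
    result = []
    for (t0, x0, y0), (t1, x1, y1) in zip(track, track[1:]):
        dt = t1 - t0
        if dt <= 0:
            raise ValueError("timestamps must be strictly increasing")
        distance_m = math.hypot(x1 - x0, y1 - y0) * m_per_px
        result.append(((t0 + t1) / 2, distance_m / dt * 3.6))  # m/s -> km/h
    return result
```

For example, with 0.125 m/px a vehicle that moves 80 px in 0.5 s covers 10 m, i.e. 20 m/s or 72 km/h.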

4.2.2. Vehicle Detection Based on YOLOv5
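Annotating training images for YOLOv5 means writing one label file per image in the YOLO text format: one object per line as `class x_center y_center width height`, with all four coordinates normalized by the image size. A sketch of that conversion from pixel boxes, assuming a hypothetical two-class map (`small_car`, `large_car`); the authors' actual classes and annotation tool are not given here.

```python
# Hypothetical class map; the paper's actual vehicle classes are not listed here.
CLASS_IDS = {"small_car": 0, "large_car": 1}


def to_yolo_label(class_id, box, img_w, img_h):
    """Convert a pixel box (x_min, y_min, x_max, y_max) to one YOLO label line."""
    x_min, y_min, x_max, y_max = box
    if not (0 <= x_min < x_max <= img_w and 0 <= y_min < y_max <= img_h):
        raise ValueError(f"box {box} lies outside the {img_w}x{img_h} image")
    x_c = (x_min + x_max) / 2 / img_w
    y_c = (y_min + y_max) / 2 / img_h
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return f"{class_id} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"


def label_file_text(annotations, img_w, img_h):
    """Build the text of one label file from (class_name, box) pairs."""
    lines = [to_yolo_label(CLASS_IDS[name], box, img_w, img_h) for name, box in annotations]
    return "\n".join(lines) + "\n"
```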

4.2.3. Continuous Vehicle Tracking Using the DeepSORT Algorithm
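DeepSORT associates detections across frames using Kalman-filter motion prediction plus a learned appearance descriptor. As a much simpler stand-in that shows the same frame-to-frame association idea, the sketch below greedily matches boxes by intersection over union (IoU). It is not DeepSORT, and its class name and thresholds are hypothetical. Because it has no motion model, a vehicle that reappears after a long occlusion will usually receive a new ID, which is the kind of ID switch DeepSORT's prediction and appearance matching are designed to reduce.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


class GreedyIoUTracker:
    """Hypothetical minimal tracker: greedy IoU matching, no motion or appearance model."""

    def __init__(self, iou_threshold=0.3, max_missed=30):
        self.iou_threshold = iou_threshold
        self.max_missed = max_missed  # frames a track may go unmatched before deletion
        self.tracks = {}              # track id -> {"box": box, "missed": count}
        self.next_id = 1

    def update(self, detections):
        """Assign track IDs to one frame's detections; returns a list of (id, box)."""
        candidates = sorted(
            ((iou(t["box"], d), tid, j)
             for tid, t in self.tracks.items()
             for j, d in enumerate(detections)),
            reverse=True,
        )
        used_tracks, used_dets, assigned = set(), set(), []
        for score, tid, j in candidates:
            if score < self.iou_threshold:
                break  # all remaining pairs overlap even less
            if tid in used_tracks or j in used_dets:
                continue
            used_tracks.add(tid)
            used_dets.add(j)
            self.tracks[tid] = {"box": detections[j], "missed": 0}
            assigned.append((tid, detections[j]))
        # Age unmatched existing tracks and drop those missing for too long.
        for tid in list(self.tracks):
            if tid not in used_tracks:
                self.tracks[tid]["missed"] += 1
                if self.tracks[tid]["missed"] > self.max_missed:
                    del self.tracks[tid]
        # Unmatched detections start new tracks.
        for j, d in enumerate(detections):
            if j not in used_dets:
                self.tracks[self.next_id] = {"box": d, "missed": 0}
                assigned.append((self.next_id, d))
                self.next_id += 1
        return assigned
```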

4.2.4. Validation of Data

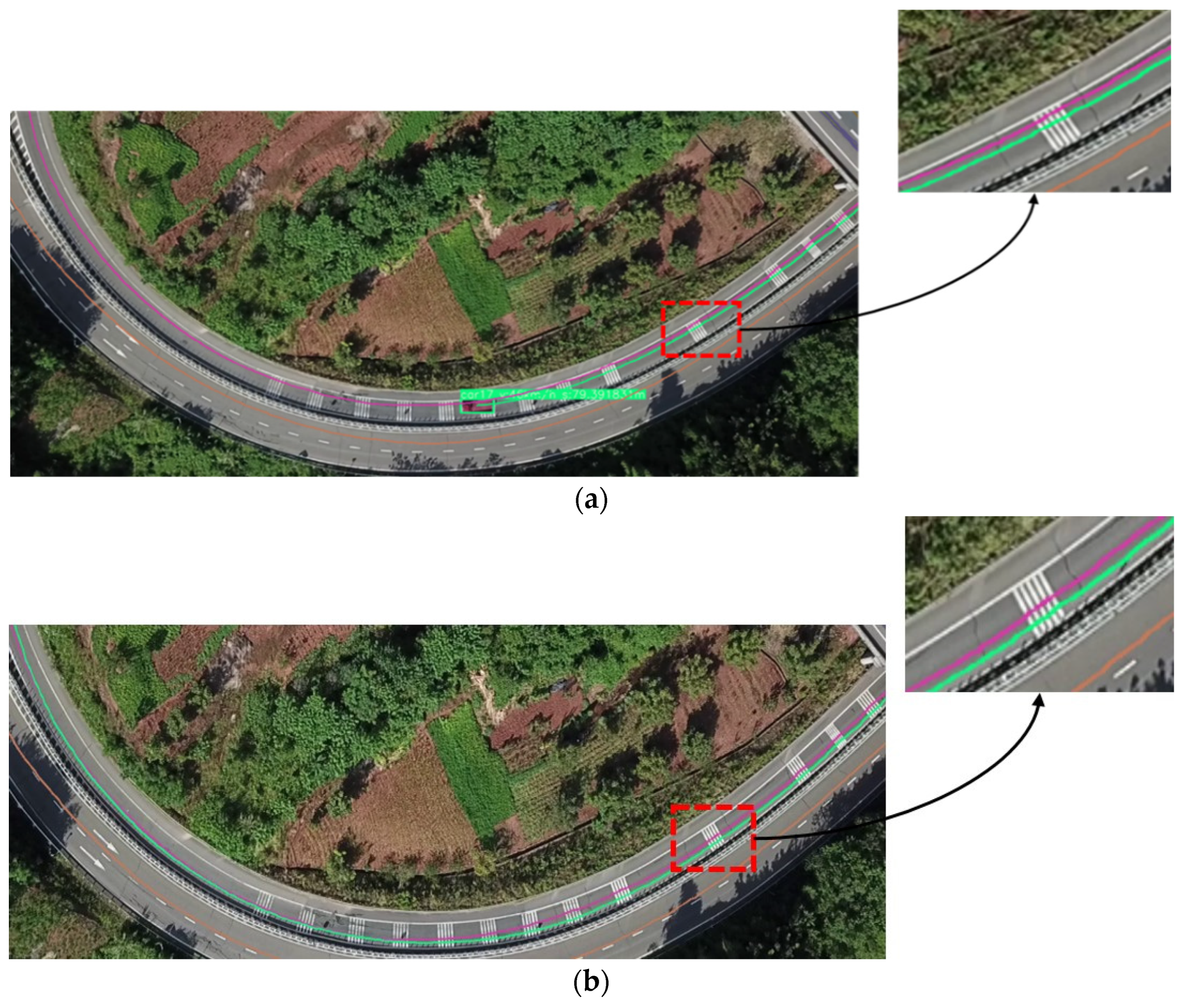

4.3. Vehicle Data Associated with Lane Lines

4.3.1. Lane Line Calibration

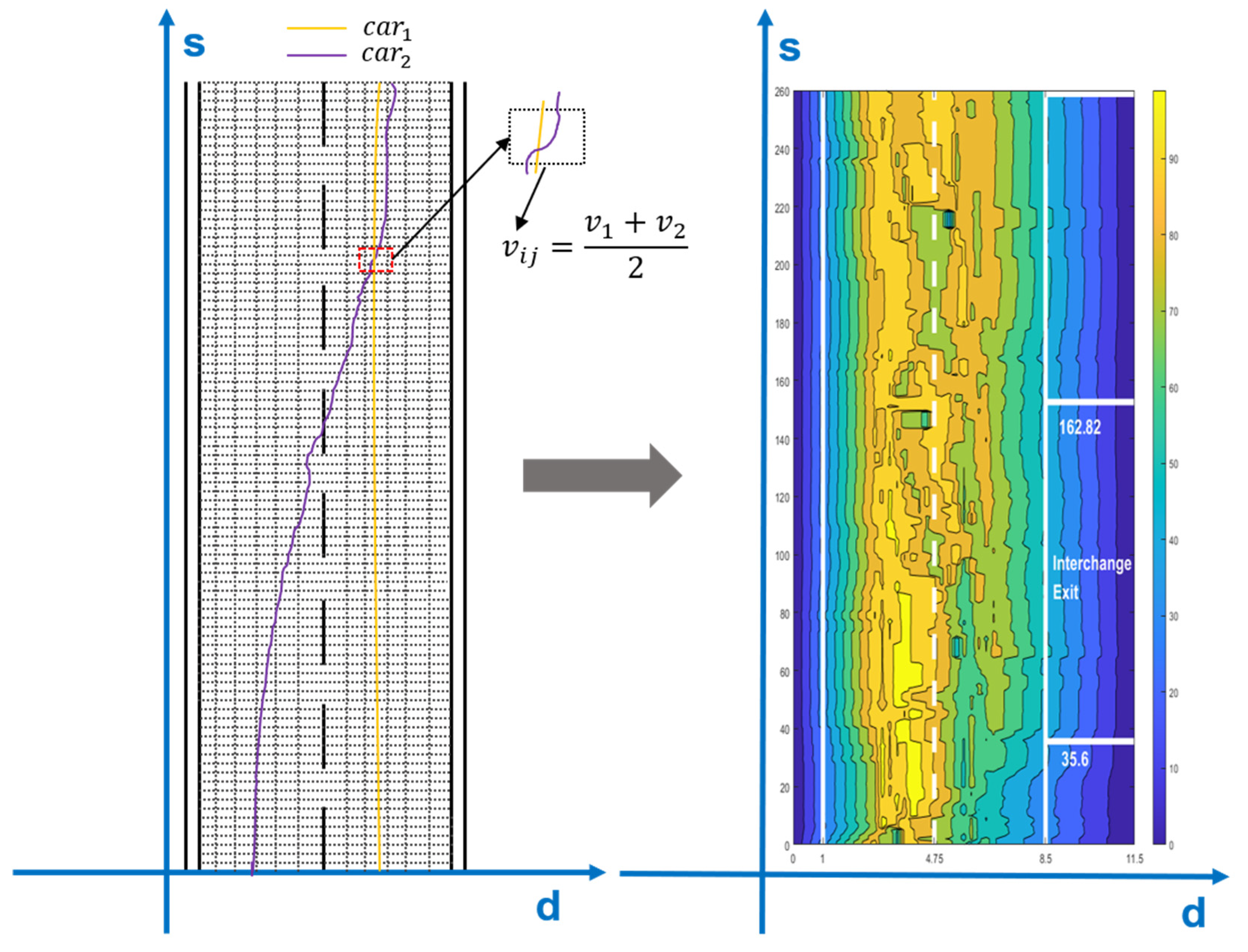

4.3.2. Section Speed Extraction
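The stake tables in this article report each vehicle's speed at fixed stakes (every 5 m of real distance, i.e. every 40 px), and adjacent stakes often repeat the same value, which is consistent with each stake taking the latest available observation. A sketch under that zero-order-hold assumption; the function name and inputs are hypothetical, and linear interpolation between observations would be an equally reasonable choice.

```python
from bisect import bisect_right


def sample_at_stakes(positions_m, values, stake_step_m, n_stakes, px_per_m):
    """Sample one vehicle's series at fixed stakes along the study section.

    positions_m: strictly increasing longitudinal positions of the observations (m).
    values: the observed quantity (e.g. speed) at each position.
    Assumption: each stake takes the latest observation at or before it (zero-order
    hold); stakes before the first observation take the first value.
    Returns rows of (real_stake_m, pixel_stake_px, value).
    """
    if not positions_m or len(positions_m) != len(values):
        raise ValueError("need non-empty positions and values of equal length")
    rows = []
    for k in range(n_stakes):
        stake = k * stake_step_m
        i = bisect_right(positions_m, stake) - 1  # index of latest observation <= stake
        rows.append((stake, stake * px_per_m, values[max(i, 0)]))
    return rows
```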

4.3.3. Extraction of Lane Line and Lateral Vehicle Distance
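Heron's formula (cited in step (5) as the "Helen formula") yields the lateral distance as follows: the vehicle point and two calibration points on the lane line form a triangle, Heron's formula gives its area from the three side lengths, and the perpendicular distance is twice that area divided by the length of the lane-line segment. A sketch with hypothetical function names, working in pixels (multiply by the pixel distance parameter to obtain metres).

```python
import math


def heron_area(a, b, c):
    """Triangle area from its three side lengths (Heron's formula)."""
    s = (a + b + c) / 2
    # Clamp at 0: rounding can make the product slightly negative for collinear points.
    return math.sqrt(max(s * (s - a) * (s - b) * (s - c), 0.0))


def lateral_distance(p, m, n):
    """Distance from vehicle point p to the lane line through calibration points m and n.

    The triangle p-m-n has area 0.5 * |mn| * h, so h = 2 * area / |mn|.
    """
    mn = math.dist(m, n)
    if mn == 0:
        raise ValueError("calibration points must be distinct")
    area = heron_area(math.dist(p, m), math.dist(p, n), mn)
    return 2 * area / mn
```

Heron's formula loses precision for very flat triangles (a vehicle far along the line relative to its offset); the cross-product form |(n - m) × (p - m)| / |mn| gives the same distance and is numerically safer.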

4.3.4. Data Cleaning

Five problems can affect the extracted data:

- (1) Misidentification: non-vehicle objects are occasionally detected as vehicles, although this is uncommon.

- (2) Occlusion: a vehicle may be temporarily hidden by a gantry or sign, so that it is recognized only intermittently.

- (3) Adjacent lanes: data are also collected from vehicles outside the lane under study.

- (4) Entry transients: when a vehicle first appears in the frame, it is recognized gradually. Because the vehicle's center is used as the detection point, and that center shifts as more of the vehicle comes into view, the speeds computed during entry may be erroneous.

- (5) ID switching: a few vehicles may have their identification numbers switched.

These problems are handled as follows:

- (1) Because the misidentified items are all stationary objects in the frame, the software exports every record with a detected speed below 5 km/h as 0 km/h, and these records can then be deleted directly.

- (2) As long as the vehicle ID does not change during a recognition interruption of about one second (caused by occlusion by a gantry, sign, etc.), the data remain usable.

- (3) The distance between each vehicle and the calibrated lane line is calculated; a vehicle whose distance exceeds the lane width belongs to another lane, and all data under its ID are deleted.

- (4) Gradual recognition of vehicles entering the frame is unavoidable. The collection range can therefore be made somewhat larger than the study section, and the data from the first 10 m after each vehicle enters can be deleted.

- (5) When only a few vehicles switch IDs, their IDs can be unified; when many vehicles switch, the problem can be addressed by adding more training data.
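The rules for stationary misdetections, adjacent-lane vehicles, and entry transients lend themselves to automatic filtering. A sketch assuming each record is a dict with hypothetical keys `id`, `speed_kmh`, `s_m` (longitudinal position in metres, increasing in the direction of travel), and `d_m` (distance to the calibrated lane line in metres); the 3.75 m lane-width default is an assumption, not a value from the paper. Occlusion gaps and ID unification are left to manual checking, as in the text, and records below 5 km/h are dropped directly rather than first exported as 0 km/h.

```python
MIN_SPEED_KMH = 5.0   # records below this are treated as stationary misdetections
ENTRY_TRIM_M = 10.0   # data within this distance of a vehicle's entry point are dropped
LANE_WIDTH_M = 3.75   # hypothetical lane width; use the width of the studied lane


def clean_records(records, lane_width_m=LANE_WIDTH_M):
    """Filter time-ordered per-frame vehicle records (dicts with hypothetical keys
    'id', 'speed_kmh', 's_m', 'd_m') and return the records that survive."""
    # Longitudinal position at which each ID first appears.
    entry_s = {}
    for r in records:
        entry_s.setdefault(r["id"], r["s_m"])
    # IDs ever farther from the lane line than one lane width belong to another lane.
    other_lane = {r["id"] for r in records if abs(r["d_m"]) > lane_width_m}
    kept = []
    for r in records:
        if r["speed_kmh"] < MIN_SPEED_KMH:
            continue  # stationary object mistaken for a vehicle
        if r["id"] in other_lane:
            continue  # vehicle in an adjacent lane: drop all of its data
        if r["s_m"] - entry_s[r["id"]] < ENTRY_TRIM_M:
            continue  # entry transient while the vehicle is only partly in view
        kept.append(r)
    return kept
```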

4.3.5. Frenet Coordinate System
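In a Frenet frame, each vehicle position is expressed as a longitudinal coordinate s (arc length along a reference line) and a signed lateral offset d from it. A sketch assuming the calibrated lane line is stored as a pixel polyline; the function name is hypothetical, and both coordinates are in pixels until multiplied by the pixel distance parameter.

```python
import math


def to_frenet(p, lane_line):
    """Project point p onto a polyline lane line and return (s, d).

    s: arc length along the lane line to the closest point on it.
    d: signed lateral offset; positive when p lies to the left of the line's
       direction in a y-up frame (the sign flips in y-down image coordinates).
    """
    best = None  # (distance, s, signed d) of the closest segment so far
    s_start = 0.0
    for a, b in zip(lane_line, lane_line[1:]):
        dx, dy = b[0] - a[0], b[1] - a[1]
        seg = math.hypot(dx, dy)
        if seg == 0:
            continue  # skip duplicate calibration points
        # Projection parameter of p on segment ab, clamped to the segment
        t = ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy) / seg ** 2
        t = min(max(t, 0.0), 1.0)
        foot = (a[0] + t * dx, a[1] + t * dy)
        dist = math.dist(p, foot)
        cross = dx * (p[1] - a[1]) - dy * (p[0] - a[0])  # sign gives the side of the line
        if best is None or dist < best[0]:
            best = (dist, s_start + t * seg, math.copysign(dist, cross))
        s_start += seg
    if best is None:
        raise ValueError("lane line needs at least two distinct points")
    return best[1], best[2]
```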

5. Results

6. Discussion and Conclusions

- (1) Accurate recognition of small target vehicles remains a challenge when the aerial video covers a larger area.

- (2) Training deep learning-based target recognition models is still laborious, and simplifying this process is worth investigating.

- (3) Not all data obtained through video-based target recognition are usable. Data filtering was carried out in this work, but a more effective way to evaluate and optimize speed and trajectory data remains a key research focus.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- [1] WHO. Global Plan for the Decade of Action for Road Safety 2021–2030; WHO: Geneva, Switzerland, 2021.

- [2] Qaid, H.; Widyanti, A.; Salma, S.A.; Trapsilawati, F.; Wijayanto, T.; Syafitri, U.D.; Chamidah, N. Speed choice and speeding behavior on Indonesian highways: Extending the theory of planned behavior. IATSS Res. 2021.

- [3] Vos, J.; Farah, H.; Hagenzieker, M. Speed behaviour upon approaching freeway curves. Accid. Anal. Prev. 2021, 159, 106276.

- [4] Yang, Q.; Overton, R.; Han, L.D.; Yan, X.; Richards, S.H. The influence of curbs on driver behaviors in four-lane rural highways—A driving simulator based study. Accid. Anal. Prev. 2013, 50, 1289–1297.

- [5] Pajares, G. Overview and current status of remote sensing applications based on unmanned aerial vehicles (UAVs). Photogramm. Eng. Remote Sens. 2015, 81, 281–330.

- [6] Kim, E.-J.; Park, H.-C.; Ham, S.-W.; Kho, S.-Y.; Kim, D.-K. Extracting vehicle trajectories using unmanned aerial vehicles in congested traffic conditions. J. Adv. Transp. 2019, 2019, 9060797.

- [7] NGSIM: Next Generation Simulation; FHWA, U.S. Department of Transportation: Washington, DC, USA, 2007.

- [8] Krajewski, R.; Bock, J.; Kloeker, L.; Eckstein, L. The highD dataset: A drone dataset of naturalistic vehicle trajectories on German highways for validation of highly automated driving systems. In Proceedings of the 2018 21st International Conference on Intelligent Transportation Systems (ITSC), Maui, HI, USA, 4–7 November 2018; pp. 2118–2125.

- [9] Moranduzzo, T.; Melgani, F. Detecting cars in UAV images with a catalog-based approach. IEEE Trans. Geosci. Remote Sens. 2014, 52, 6356–6367.

- [10] Rodríguez-Canosa, G.R.; Thomas, S.; Del Cerro, J.; Barrientos, A.; MacDonald, B. A real-time method to detect and track moving objects (DATMO) from unmanned aerial vehicles (UAVs) using a single camera. Remote Sens. 2012, 4, 1090–1111.

- [11] Yao, H.; Qin, R.; Chen, X. Unmanned aerial vehicle for remote sensing applications—A review. Remote Sens. 2019, 11, 1443.

- [12] Xu, Y.; Yu, G.; Wu, X.; Wang, Y.; Ma, Y. An enhanced Viola-Jones vehicle detection method from unmanned aerial vehicles imagery. IEEE Trans. Intell. Transp. Syst. 2016, 18, 1845–1856.

- [13] Ke, R.; Li, Z.; Kim, S.; Ash, J.; Cui, Z.; Wang, Y. Real-time bidirectional traffic flow parameter estimation from aerial videos. IEEE Trans. Intell. Transp. Syst. 2016, 18, 890–901.

- [14] Liu, K.; Mattyus, G. Fast multiclass vehicle detection on aerial images. IEEE Geosci. Remote Sens. Lett. 2015, 12, 1938–1942.

- [15] Pomoni, M.; Plati, C.; Kane, M.; Loizos, A. Polishing behaviour of asphalt surface course containing recycled materials. Int. J. Transp. Sci. Technol. 2021.

- [16] Kogbara, R.B.; Masad, E.A.; Kassem, E.; Scarpas, A.T.; Anupam, K. A state-of-the-art review of parameters influencing measurement and modeling of skid resistance of asphalt pavements. Constr. Build. Mater. 2016, 114, 602–617.

- [17] He, Y.; Sun, X.; Zhang, J.; Hou, S. A comparative study on the correlation between highway speed and traffic safety in China and the United States. China J. Highw. Transp. 2010, 23, 73–78.

- [18] Singh, H.; Kathuria, A. Analyzing driver behavior under naturalistic driving conditions: A review. Accid. Anal. Prev. 2021, 150, 105908.

- [19] Chang, K.-H. Motion Analysis. In e-Design; Academic Press: Cambridge, MA, USA, 2015; pp. 391–462.

- [20] Bruck, L.; Haycock, B.; Emadi, A. A review of driving simulation technology and applications. IEEE Open J. Veh. Technol. 2020, 2, 1–16.

- [21] Ali, Y.; Bliemer, M.C.; Zheng, Z.; Haque, M.M. Comparing the usefulness of real-time driving aids in a connected environment during mandatory and discretionary lane-changing manoeuvres. Transp. Res. Part C Emerg. Technol. 2020, 121, 102871.

- [22] Deng, W.; Ren, B.; Wang, W.; Ding, J. A Survey of Automatic Generation Methods for Simulation Scenarios for Autonomous Driving. China J. Highw. Transp. 2022, 35, 316–333.

- [23] Sharma, A.; Zheng, Z.; Kim, J.; Bhaskar, A.; Haque, M.M. Is an informed driver a better decision maker? A grouped random parameters with heterogeneity-in-means approach to investigate the impact of the connected environment on driving behaviour in safety-critical situations. Anal. Methods Accid. Res. 2020, 27, 100127.

- [24] Stipancic, J.; Miranda-Moreno, L.; Saunier, N. Vehicle manoeuvers as surrogate safety measures: Extracting data from the GPS-enabled smartphones of regular drivers. Accid. Anal. Prev. 2018, 115, 160–169.

- [25] Yang, G.; Xu, H.; Tian, Z.; Wang, Z. Vehicle speed and acceleration profile study for metered on-ramps in California. J. Transp. Eng. 2016, 142, 04015046.

- [26] Zhang, Z.; Hao, X.; Wu, W. Research on the running speed prediction model of interchange ramp. Procedia-Soc. Behav. Sci. 2014, 138, 340–349.

- [27] Nguyen, T.V.; Krajzewicz, D.; Fullerton, M.; Nicolay, E. DFROUTER—Estimation of vehicle routes from cross-section measurements. In Modeling Mobility with Open Data; Springer: Berlin/Heidelberg, Germany, 2015; pp. 3–23.

- [28] Zhang, C.; Yan, X.; Li, X.; Pan, B.; Wang, H.; Ma, X. Running speed model of passenger cars at the exit of a single lane of an interchange. China J. Highw. Transp. 2017, 6, 279–286.

- [29] Kurtc, V. Studying car-following dynamics on the basis of the HighD dataset. Transp. Res. Rec. 2020, 2674, 813–822.

- [30] Lu, X.-Y.; Skabardonis, A. Freeway traffic shockwave analysis: Exploring the NGSIM trajectory data. In Proceedings of the 86th Annual Meeting of the Transportation Research Board, Washington, DC, USA, 21–25 January 2007.

- [31] Li, Y.; Wu, D.; Lee, J.; Yang, M.; Shi, Y. Analysis of the transition condition of rear-end collisions using time-to-collision index and vehicle trajectory data. Accid. Anal. Prev. 2020, 144, 105676.

- [32] Li, L.; Jiang, R.; He, Z.; Chen, X.M.; Zhou, X. Trajectory data-based traffic flow studies: A revisit. Transp. Res. Part C Emerg. Technol. 2020, 114, 225–240.

- [33] Feng, Z.; Zhu, Y. A survey on trajectory data mining: Techniques and applications. IEEE Access 2016, 4, 2056–2067.

- [34] Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- [35] Chen, Q.; Gu, R.; Huang, H.; Lee, J.; Zhai, X.; Li, Y. Using vehicular trajectory data to explore risky factors and unobserved heterogeneity during lane-changing. Accid. Anal. Prev. 2021, 151, 105871.

- [36] Hu, Y.; Li, Y.; Huang, H.; Lee, J.; Yuan, C.; Zou, G. A high-resolution trajectory data driven method for real-time evaluation of traffic safety. Accid. Anal. Prev. 2022, 165, 106503.

- [37] Raju, N.; Kumar, P.; Arkatkar, S.; Joshi, G. Determining risk-based safety thresholds through naturalistic driving patterns using trajectory data on expressways. Saf. Sci. 2019, 119, 117–125.

- [38] Wang, L.; Abdel-Aty, M.; Ma, W.; Hu, J.; Zhong, H. Quasi-vehicle-trajectory-based real-time safety analysis for expressways. Transp. Res. Part C Emerg. Technol. 2019, 103, 30–38.

- [39] Ali, G.; McLaughlin, S.; Ahmadian, M. Quantifying the effect of roadway, driver, vehicle, and location characteristics on the frequency of longitudinal and lateral accelerations. Accid. Anal. Prev. 2021, 161, 106356.

- [40] Liu, T.; Li, Z.; Liu, P.; Xu, C.; Noyce, D.A. Using empirical traffic trajectory data for crash risk evaluation under three-phase traffic theory framework. Accid. Anal. Prev. 2021, 157, 106191.

- [41] Reinolsmann, N.; Alhajyaseen, W.; Brijs, T.; Pirdavani, A.; Hussain, Q.; Brijs, K. Investigating the impact of dynamic merge control strategies on driving behavior on rural and urban expressways—A driving simulator study. Transp. Res. Part F Traffic Psychol. Behav. 2019, 65, 469–484.

- [42] Yu, R.; Han, L.; Zhang, H. Trajectory data based freeway high-risk events prediction and its influencing factors analyses. Accid. Anal. Prev. 2021, 154, 106085.

- [43] Hu, X.; Yuan, Y.; Zhu, X.; Yang, H.; Xie, K. Behavioral responses to pre-planned road capacity reduction based on smartphone GPS trajectory data: A functional data analysis approach. J. Intell. Transp. Syst. 2019, 23, 133–143.

- [44] Singla, N. Motion detection based on frame difference method. Int. J. Inf. Comput. Technol. 2014, 4, 1559–1565.

- [45] Xue, L.-X.; Luo, Y.-L.; Wang, Z.-C. Detection algorithm of adaptive moving objects based on frame difference method. Appl. Res. Comput. 2011, 28, 1551–1552.

- [46] Piccardi, M. Background subtraction techniques: A review. In Proceedings of the 2004 IEEE International Conference on Systems, Man and Cybernetics (IEEE Cat. No. 04CH37583), The Hague, The Netherlands, 10–13 October 2004; pp. 3099–3104.

- [47] Barnich, O.; Van Droogenbroeck, M. ViBe: A universal background subtraction algorithm for video sequences. IEEE Trans. Image Process. 2010, 20, 1709–1724.

- [48] Zhou, Y.; Zhang, L.; Liu, T.; Gong, S. Structural System Identification Based on Computer Vision. China Civ. Eng. J. 2018, 51, 21–27.

- [49] Horn, B.K.; Schunck, B.G. Determining optical flow. Artif. Intell. 1981, 17, 185–203.

- [50] Chen, X.; Li, Z.; Yang, Y.; Qi, L.; Ke, R. High-resolution vehicle trajectory extraction and denoising from aerial videos. IEEE Trans. Intell. Transp. Syst. 2020, 22, 3190–3202.

- [51] Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

| Literature | Data Source | Conclusions and Results |
|---|---|---|
| [3] | Speed profiles at 153 Dutch expressway curves | Deflection angle and curve length are related to curve speed. Regardless of horizontal radius or speed, vehicles finish decelerating roughly 135 m into the curve. |
| [35] | NGSIM dataset | Examines factors that influence the likelihood of collision during lane changes from the perspective of vehicle groups, as well as the unobserved heterogeneity of individual lane-change maneuvers. |
| [36] | HighD dataset | Develops an active safety management strategy by combining traffic conditions and conflicts. |
| [32] | Review | Reviews traffic flow research based on trajectory data from microscopic, mesoscopic, and macroscopic perspectives, identifying the current scarcity of data as a major issue. |
| [37] | Road-collected data combined with driving simulation data | Uses trajectory data to quantify rear-end risk and proposes thresholds. |
| [38] | Less accurate quasi-vehicle trajectory data | Establishes a Bayesian matched case-control logistic regression model to explore the impact of traffic parameters along vehicles' quasi-trajectories on real-time collision risk. |
| [39] | Second Strategic Highway Research Program Naturalistic Driving Study (SHRP2 NDS) | Quantifies the effects of factors such as road speed, driver age and gender, vehicle class, and location on longitudinal and lateral acceleration cycle rates. |
| [40] | Empirical vehicle trajectory data collected from Interstate 80 in California, USA, and the Yingtian Expressway in Nanjing, China | Based on three-phase traffic theory, builds three regression models that quantify the impact of traffic flow factors and traffic states on collision risk, allowing collision risk to be evaluated for distinct traffic phases and phase transitions. |
| [41] | Driving simulator | Investigates the effectiveness of static and dynamic merge control in managing urban and rural expressway traffic. |
| [42] | HighD dataset | Predicts collisions using a random-parameters logistic regression model that accounts for data heterogeneity. |
| [43] | Smartphone GPS trajectory data | Investigates vehicle behavior in response to traffic congestion and road closures. |

| Province | Highway | Road Section | Features |
|---|---|---|---|
| Shaanxi | Baomao expressway | General main-line segment | Free-flow straight section with stable, high speeds and little lane changing. |
| Sichuan | Yakang expressway | Tianquan interchange exit | Frequent lane changes on the main line, which follows a large-radius curve to the right. |
| Sichuan | Yakang expressway | Duogong interchange ring ramp | Vehicle heading and speed fluctuate sharply along the small-radius circular curve. |
| Shaanxi | Jingkun expressway | Huangguan tunnel exit (small net distance) | Straight main line with lane changes and clear acceleration and deceleration characteristics. |
| Sichuan | Yakang expressway | Luding interchange ring ramp | Heavy traffic, low speeds, and many interfering vehicles. |

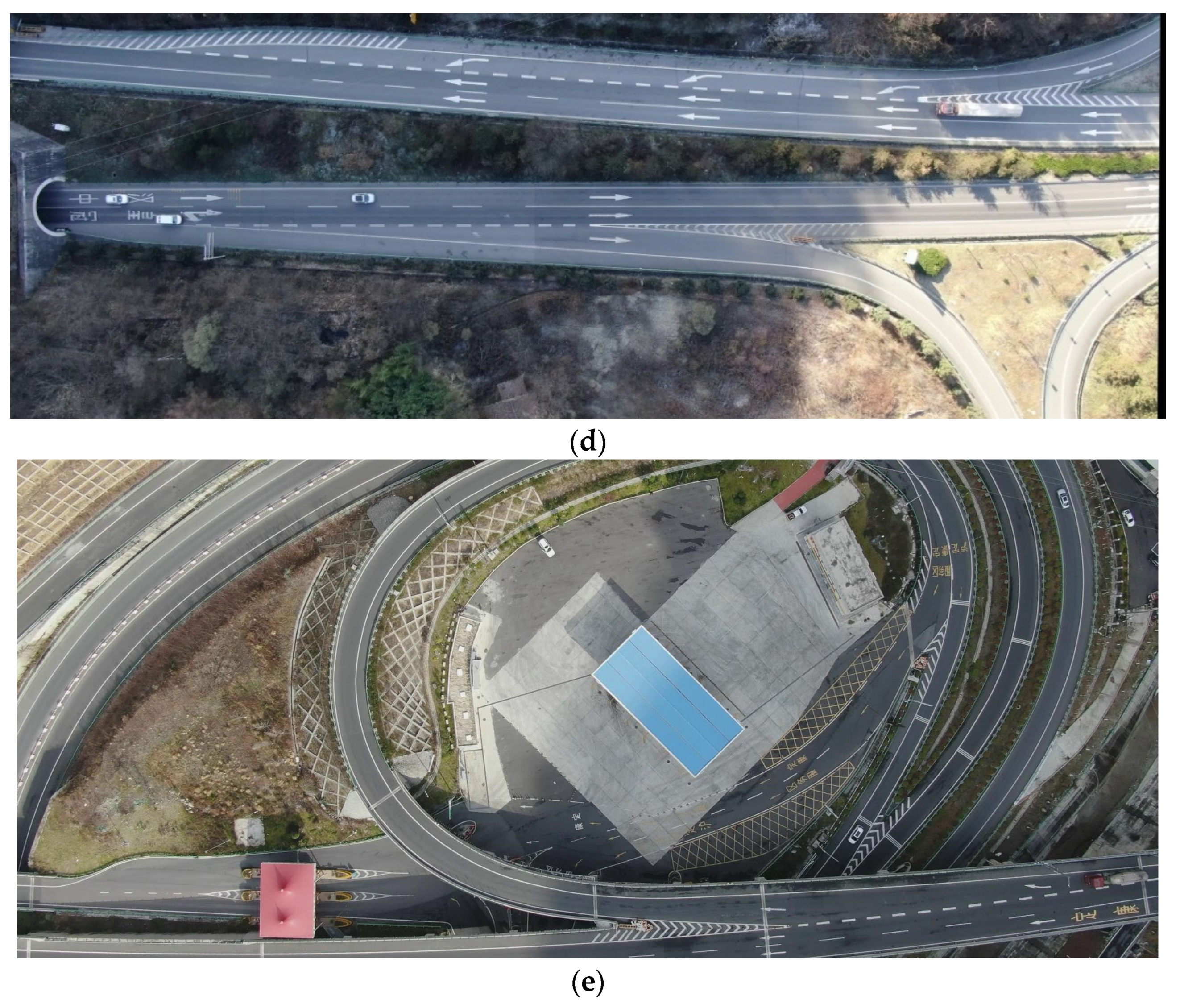

| Height (h) | Vehicle Recognition Performance | Error Analysis |
|---|---|---|
| <200 m | 95% recognition, continuous tracking | The shooting range of the road section is narrow, and vehicles take a long time to enter and leave the frame; speeds measured during these transitions have considerable error, producing more erroneous data during entry and exit. |
| >250 m | 93% recognition; some vehicles undergo identification (ID) switching | In more complex road environments, vehicles appear too small at this height, and ID switches occur easily when a white car overlaps a white marking line. At greater heights, wind speeds are higher and drone stability is poorer; each pixel also represents a larger real distance, so the systematic error increases. |
| 200–250 m | 98% recognition, continuous tracking | Within this range, the height can be adjusted to the road environment and wind speed to achieve continuous, stable vehicle tracking with small errors in vehicle speed and trajectory. |
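The height trade-off in the table follows from camera geometry: for a nadir-pointing camera over flat ground, both the ground width covered by the frame and the real distance represented by one pixel grow linearly with flight height. A sketch of that relationship; the camera parameters below are hypothetical placeholders, not the equipment used in this study.

```python
def ground_sample_distance(sensor_width_mm, focal_length_mm, image_width_px, height_m):
    """Ground distance covered by one pixel (m/px), nadir view over flat ground."""
    return sensor_width_mm * height_m / (focal_length_mm * image_width_px)


def ground_swath_width(sensor_width_mm, focal_length_mm, height_m):
    """Ground width covered by the full frame (m), same assumptions."""
    return sensor_width_mm * height_m / focal_length_mm


# Hypothetical camera parameters, for illustration only.
SENSOR_WIDTH_MM = 13.2
FOCAL_LENGTH_MM = 8.8
IMAGE_WIDTH_PX = 3840

for h in (150, 200, 250, 300):
    gsd = ground_sample_distance(SENSOR_WIDTH_MM, FOCAL_LENGTH_MM, IMAGE_WIDTH_PX, h)
    swath = ground_swath_width(SENSOR_WIDTH_MM, FOCAL_LENGTH_MM, h)
    print(f"{h} m: {swath:.0f} m across the frame, {gsd * 100:.1f} cm per pixel")
```

With these placeholder values, raising the drone from 200 m to 250 m widens the frame from 300 m to 375 m of road but increases each pixel's footprint by the same 25%, so a fixed pixel-level localization error becomes a larger distance error.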

| Road | Precision (%) | Recall (%) | True Negative | ID Switch (%) |
|---|---|---|---|---|
| Main section of Baomao expressway (road 1) | 95.00 | 98.53 | 3 | 5 |
| Tianquan interchange exit of Yakang expressway (road 2) | 92.35 | 97.60 | 7 | 12 |
| Duogong interchange ring ramp of Yakang expressway (road 3) | 95.00 | 96.00 | 4 | 5 |
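Precision and recall in the table above follow the usual detection definitions: precision = TP / (TP + FP) and recall = TP / (TP + FN). A sketch of those ratios plus an ID-switch rate; expressing the ID-switch rate per tracked vehicle is an assumption, since the table does not define its denominator, and the "True Negative" column is not reproduced here.

```python
def detection_metrics(tp, fp, fn, id_switches, n_tracked):
    """Precision, recall and ID-switch rate, all in percent.

    tp/fp/fn: true positives, false positives and false negatives (detections).
    id_switches / n_tracked: assumed definition of the ID-switch rate.
    """
    if tp + fp == 0 or tp + fn == 0 or n_tracked == 0:
        raise ValueError("counts do not allow the ratios to be computed")
    return {
        "precision_pct": 100 * tp / (tp + fp),
        "recall_pct": 100 * tp / (tp + fn),
        "id_switch_pct": 100 * id_switches / n_tracked,
    }
```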

| Type | Small Car | Large Car | | | | | | | | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Real speed (km/h) | 79 | 79 | 64.8 | 86 | 86 | 74.05 | 86.4 | 69 | 64.8 | 47.12 | 45 | 45 | 64.2 | 39.8 | 43.2 | 39 | 57.6 | 61.5 |
| Detected speed (km/h) | 77 | 79 | 67 | 80 | 82 | 79 | 91 | 66 | 68 | 45 | 41 | 40.5 | 65 | 36 | 39 | 39 | 59 | 60 |

| Type | Maximum Error (km/h) | Maximum Error Rate (%) | Mean Error (km/h) | Mean Error Rate (%) | T (%) | RMSE (km/h) |
|---|---|---|---|---|---|---|
| Large car | 5 | 10 | 2.98 | 6.06 | 95.7 | 2.94 |
| Small car | 6 | 6.98 | 3.89 | 5.08 | 98.5 | 3.74 |
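Error statistics of this kind can be computed from paired real and detected speeds for one vehicle class. This sketch uses the standard definitions of absolute error, relative error (relative to the real speed), and RMSE; the paper does not state its formulas or define the "T (%)" column here, so T is omitted and the sketch is not guaranteed to reproduce the tabulated figures.

```python
import math


def speed_error_stats(real_kmh, detected_kmh):
    """Error statistics between paired real and detected speeds (km/h)."""
    if not real_kmh or len(real_kmh) != len(detected_kmh):
        raise ValueError("need two non-empty lists of equal length")
    errors = [abs(d - r) for r, d in zip(real_kmh, detected_kmh)]
    rates = [100 * e / r for e, r in zip(errors, real_kmh)]  # error relative to real speed, %
    n = len(errors)
    return {
        "max_error_kmh": max(errors),
        "max_error_rate_pct": max(rates),
        "mean_error_kmh": sum(errors) / n,
        "mean_error_rate_pct": sum(rates) / n,
        "rmse_kmh": math.sqrt(sum(e * e for e in errors) / n),
    }
```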

| Time (s) | Vehicle ID | Speed (km/h) | Displacement | X | Y | Acceleration (m/s²) |
|---|---|---|---|---|---|---|
| 91.09 | 10 | 45.00 | 2.72 | 1518.00 | 39.00 | 2.00 |
| 91.42 | 10 | 47.42 | 7.22 | 1511.00 | 75.00 | 2.01 |
| 91.76 | 10 | 48.29 | 11.55 | 1505.00 | 110.00 | 0.72 |
| 92.09 | 10 | 48.30 | 15.52 | 1499.00 | 142.00 | 0.01 |
| 92.43 | 10 | 47.10 | 19.50 | 1493.00 | 174.00 | −1.00 |
| …… | | | | | | |
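The acceleration column can be approximated from consecutive rows as Δv/Δt after converting km/h to m/s. Applied to vehicle 10 above, this backward difference gives about 2.04, 0.71, 0.01, and −0.98 m/s², within about 0.03 m/s² of the tabulated 2.01, 0.72, 0.01, and −1.00; the paper's exact method (for example any smoothing) is not stated, so this is a sketch rather than the authors' computation, and the function name is hypothetical.

```python
def backward_accelerations(times_s, speeds_kmh):
    """Acceleration (m/s^2) at each sample after the first: (v_i - v_{i-1}) / (t_i - t_{i-1})."""
    if len(times_s) != len(speeds_kmh):
        raise ValueError("times and speeds must have equal length")
    acc = []
    for i in range(1, len(times_s)):
        dt = times_s[i] - times_s[i - 1]
        if dt <= 0:
            raise ValueError("timestamps must be strictly increasing")
        acc.append((speeds_kmh[i] - speeds_kmh[i - 1]) / 3.6 / dt)  # km/h -> m/s, then / s
    return acc


# Rows for vehicle 10 from the trajectory table
times = [91.09, 91.42, 91.76, 92.09, 92.43]
speeds = [45.00, 47.42, 48.29, 48.30, 47.10]
print([round(a, 2) for a in backward_accelerations(times, speeds)])
```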

| Real Stake | Pixel Stake | Car 2 Speed | Car 2 Acceleration | Car 3 Speed | Car 3 Acceleration | Car 9 Speed | Car 9 Acceleration |
|---|---|---|---|---|---|---|---|
| 0 | 0 | 102.535 | 6.696 | 99.271 | −0.641 | 91.397 | 0.081 |
| 5 | 40 | 102.535 | 6.696 | 100.281 | 0.842 | 92.816 | 1.183 |
| 10 | 80 | 102.444 | −0.0759 | 100.281 | 0.842 | 92.816 | 1.183 |
| 15 | 120 | 102.444 | −0.0759 | 100.435 | 0.128 | 92.860 | 0.037 |
| 20 | 160 | 102.911 | 0.389 | 100.435 | 0.128 | 92.860 | 0.037 |
| …… | | | | | | | |

| Real Stake | Pixel Stake | Car 2 Speed | Car 2 Acceleration | Car 2 Lateral Distance | Car 2 Lateral Speed | Car 2 Lateral Acceleration | Car 3 Speed |
|---|---|---|---|---|---|---|---|
| 0 | 0 | 102.535 | 6.696 | 1.962 | −0.274 | −0.175 | …… |
| 5 | 40 | 102.535 | 6.696 | 1.908 | −0.274 | −0.175 | …… |
| 10 | 80 | 102.444 | −0.0759 | 1.854 | −0.332 | −0.175 | …… |
| 15 | 120 | 102.444 | −0.0759 | 1.814 | −0.332 | −0.175 | …… |
| 20 | 160 | 102.911 | 0.389 | 1.826 | −0.021 | 0.933 | …… |
| …… | | | | | | | |
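The last table adds lateral distance to the lane line, lateral speed, and lateral acceleration at each stake. Because these data are sampled by distance rather than time, one way to obtain lateral rates is the chain rule dd/dt = (dd/ds)·v, with v the longitudinal speed. A sketch of that approach, assuming backward differences and the mean speed over each interval; the paper does not state its exact method, so this is not expected to reproduce the tabulated values, and the function name is hypothetical.

```python
def lateral_rates(stakes_m, lateral_m, speeds_kmh):
    """Lateral speed (m/s) and lateral acceleration (m/s^2) at each stake.

    Uses dd/dt = (dd/ds) * v with backward differences; entries that cannot be
    computed (the first stake for speed, the first two for acceleration) are None.
    """
    n = len(stakes_m)
    if not (n == len(lateral_m) == len(speeds_kmh)):
        raise ValueError("inputs must have equal length")
    lat_v = [None] * n
    lat_a = [None] * n
    for i in range(1, n):
        ds = stakes_m[i] - stakes_m[i - 1]
        if ds <= 0:
            raise ValueError("stakes must be strictly increasing")
        v = (speeds_kmh[i - 1] + speeds_kmh[i]) / 2 / 3.6  # mean longitudinal speed, m/s
        lat_v[i] = (lateral_m[i] - lateral_m[i - 1]) / ds * v
        if i >= 2:
            lat_a[i] = (lat_v[i] - lat_v[i - 1]) / ds * v
    return lat_v, lat_a
```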

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, C.; Tang, Z.; Zhang, M.; Wang, B.; Hou, L. Developing a More Reliable Aerial Photography-Based Method for Acquiring Freeway Traffic Data. Remote Sens. 2022, 14, 2202. https://doi.org/10.3390/rs14092202

Zhang C, Tang Z, Zhang M, Wang B, Hou L. Developing a More Reliable Aerial Photography-Based Method for Acquiring Freeway Traffic Data. Remote Sensing. 2022; 14(9):2202. https://doi.org/10.3390/rs14092202

Chicago/Turabian Style: Zhang, Chi, Zhongze Tang, Min Zhang, Bo Wang, and Lei Hou. 2022. "Developing a More Reliable Aerial Photography-Based Method for Acquiring Freeway Traffic Data" Remote Sensing 14, no. 9: 2202. https://doi.org/10.3390/rs14092202

APA Style: Zhang, C., Tang, Z., Zhang, M., Wang, B., & Hou, L. (2022). Developing a More Reliable Aerial Photography-Based Method for Acquiring Freeway Traffic Data. Remote Sensing, 14(9), 2202. https://doi.org/10.3390/rs14092202