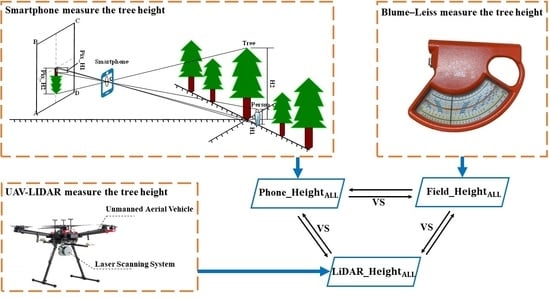

Intelligent Estimating the Tree Height in Urban Forests Based on Deep Learning Combined with a Smartphone and a Comparison with UAV-LiDAR

Abstract

1. Introduction

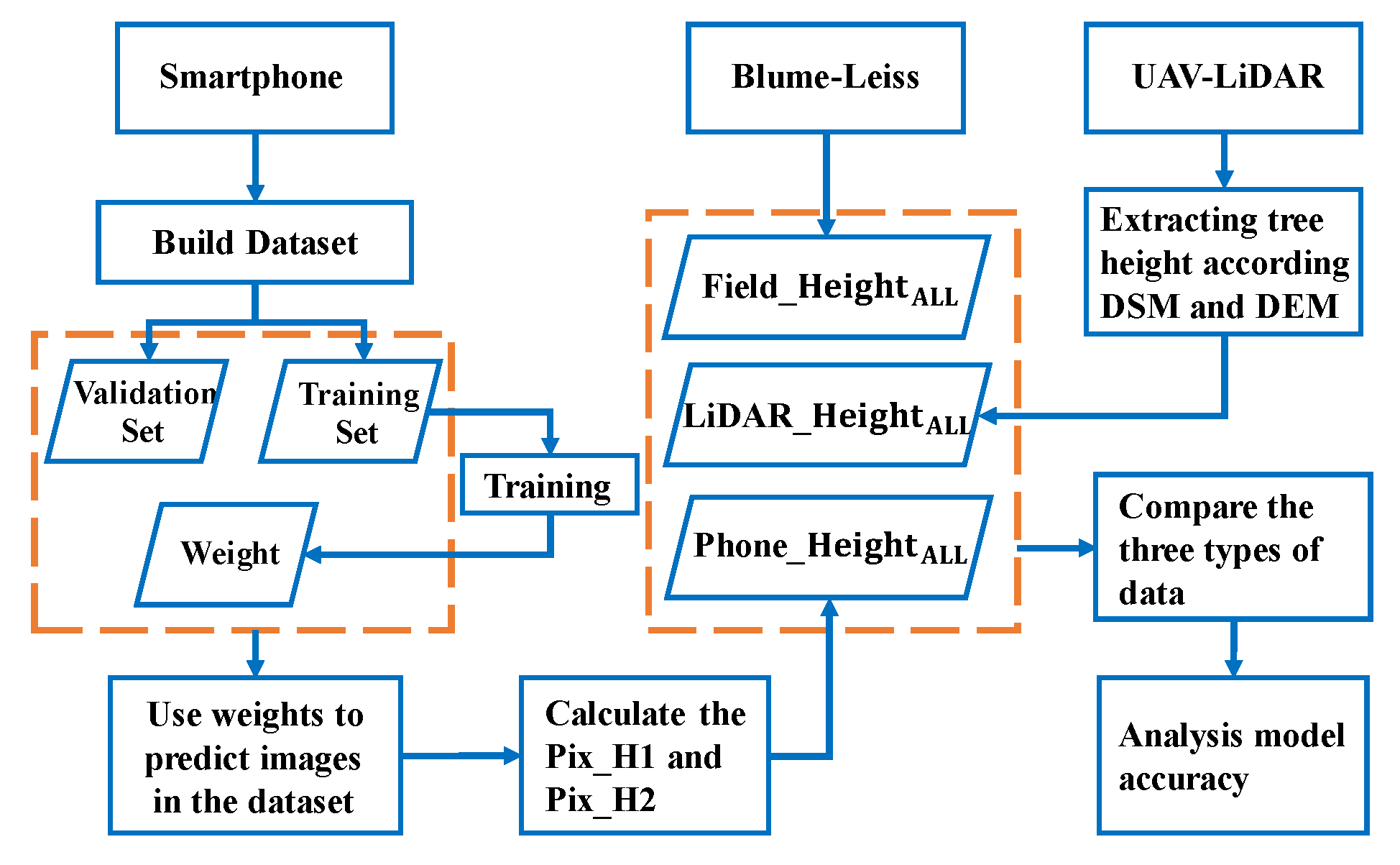

2. Study Area and Methodology

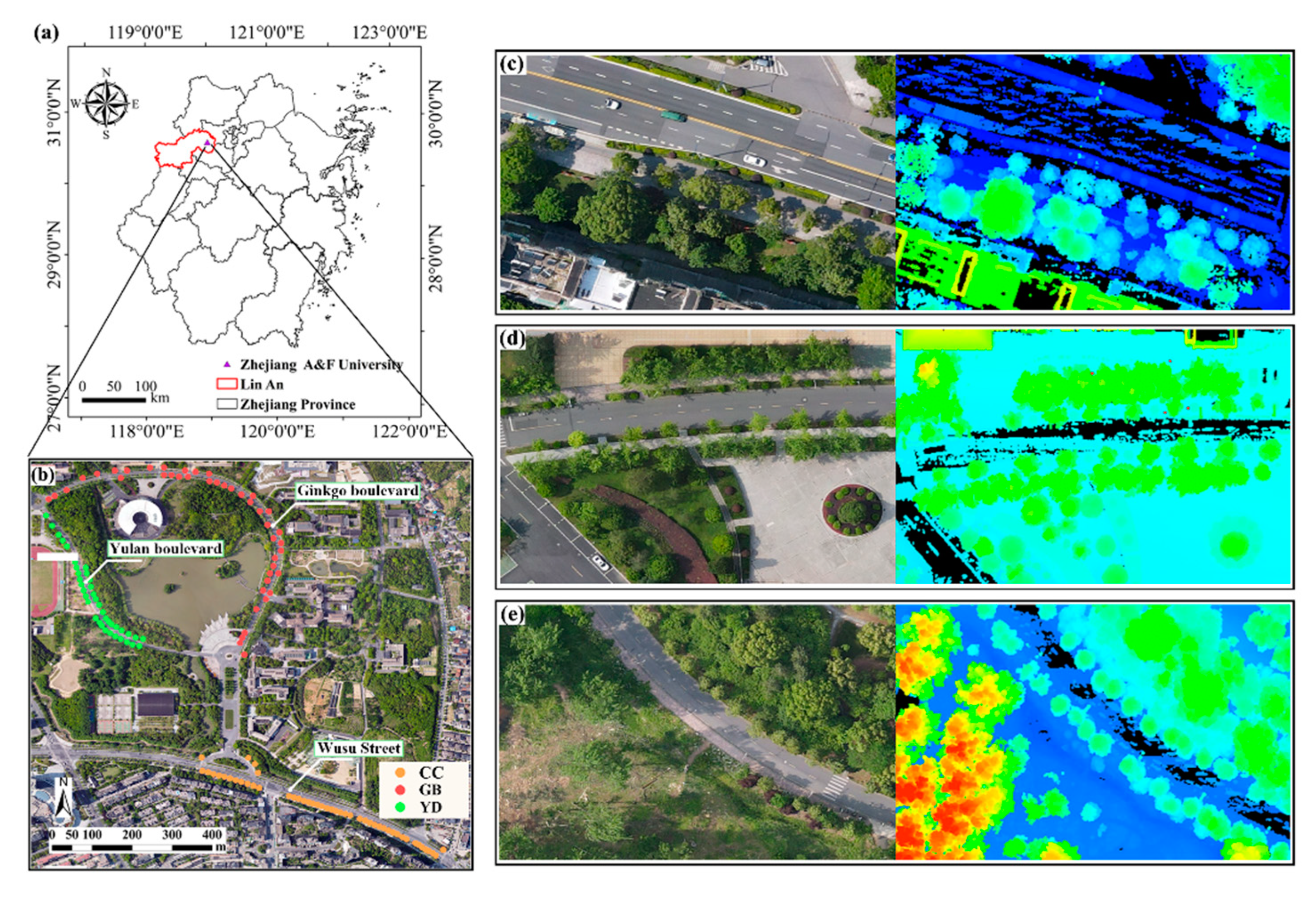

2.1. Study Area and Data

2.1.1. Study Area

2.1.2. Person–Tree Image Acquisition and Labeling

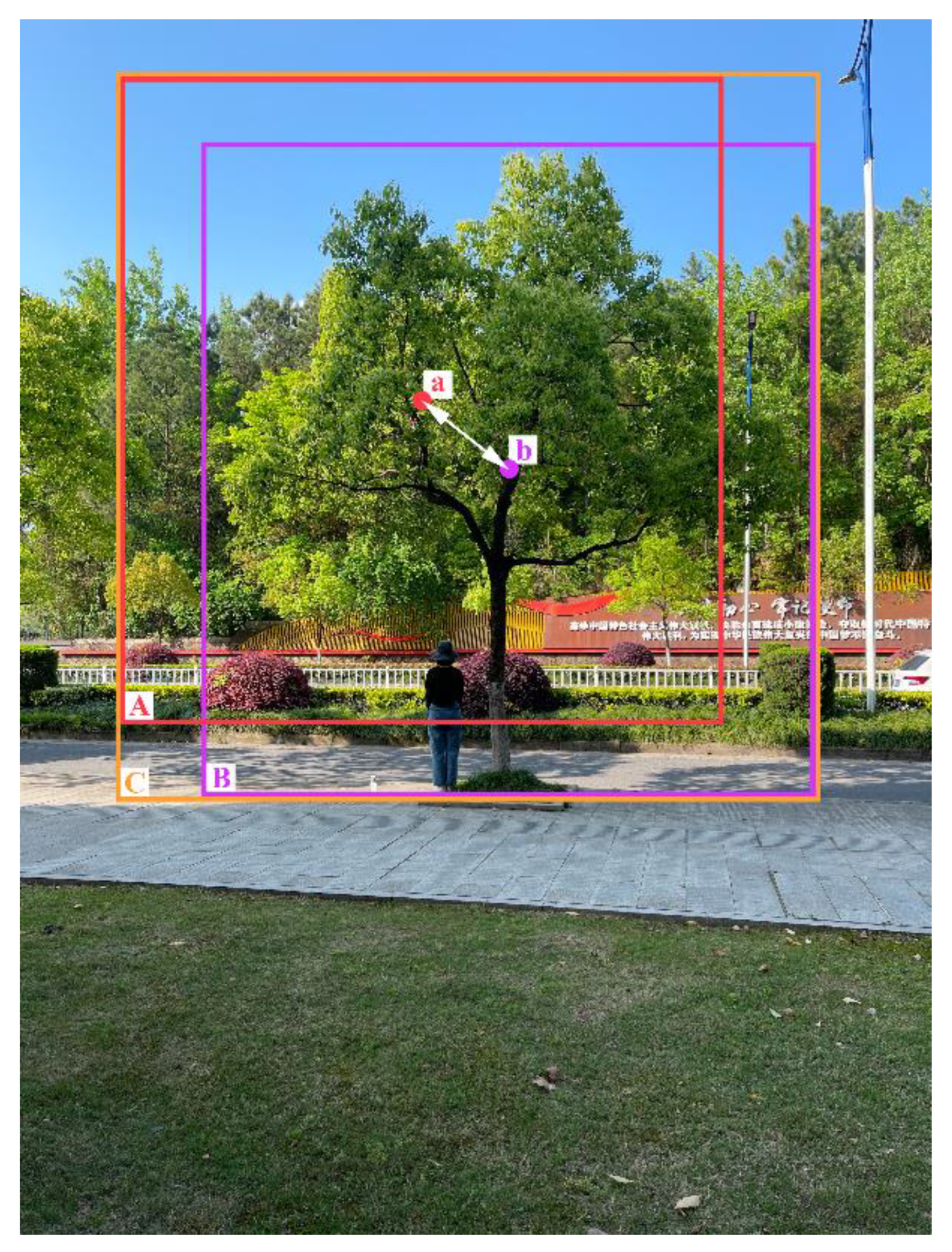

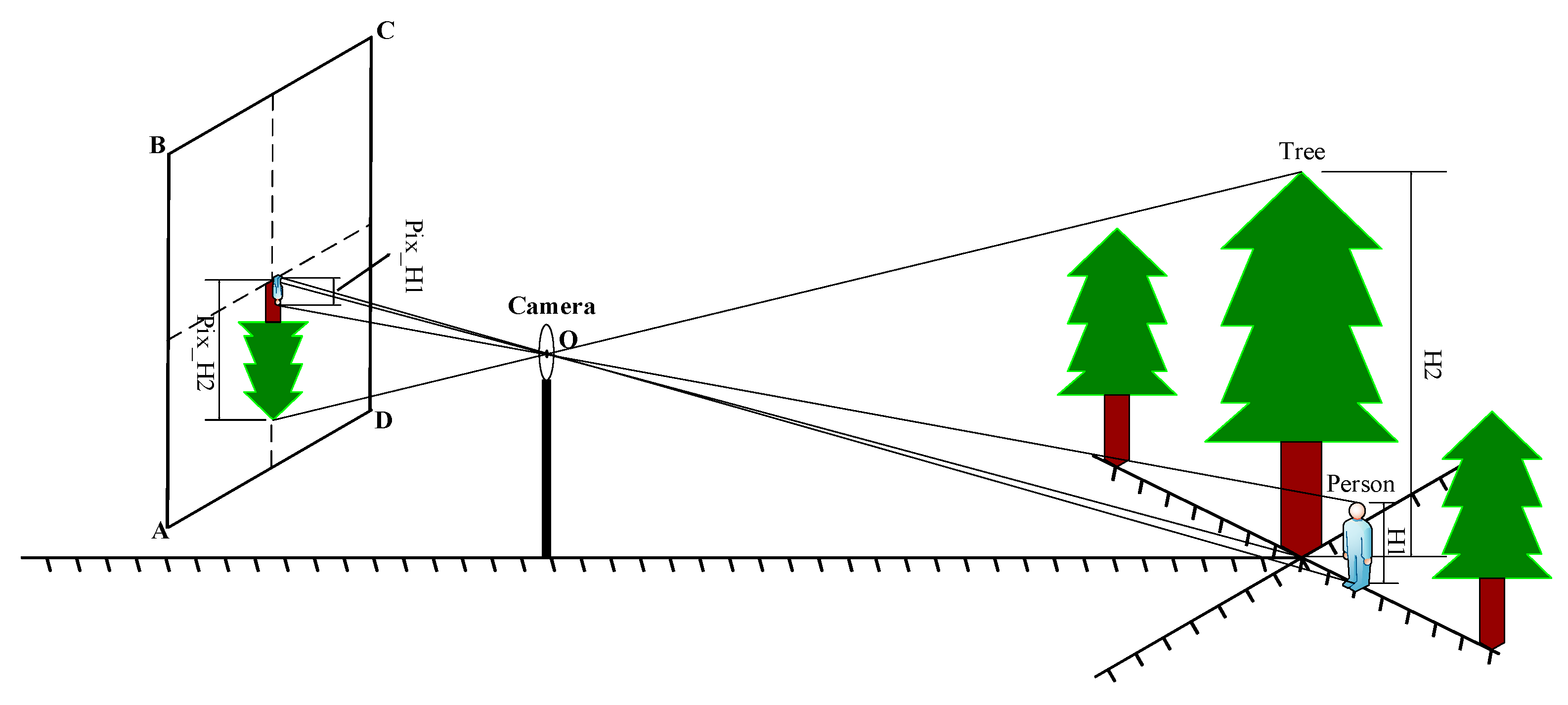

2.2. Person–Tree Scale Height Measurement Model

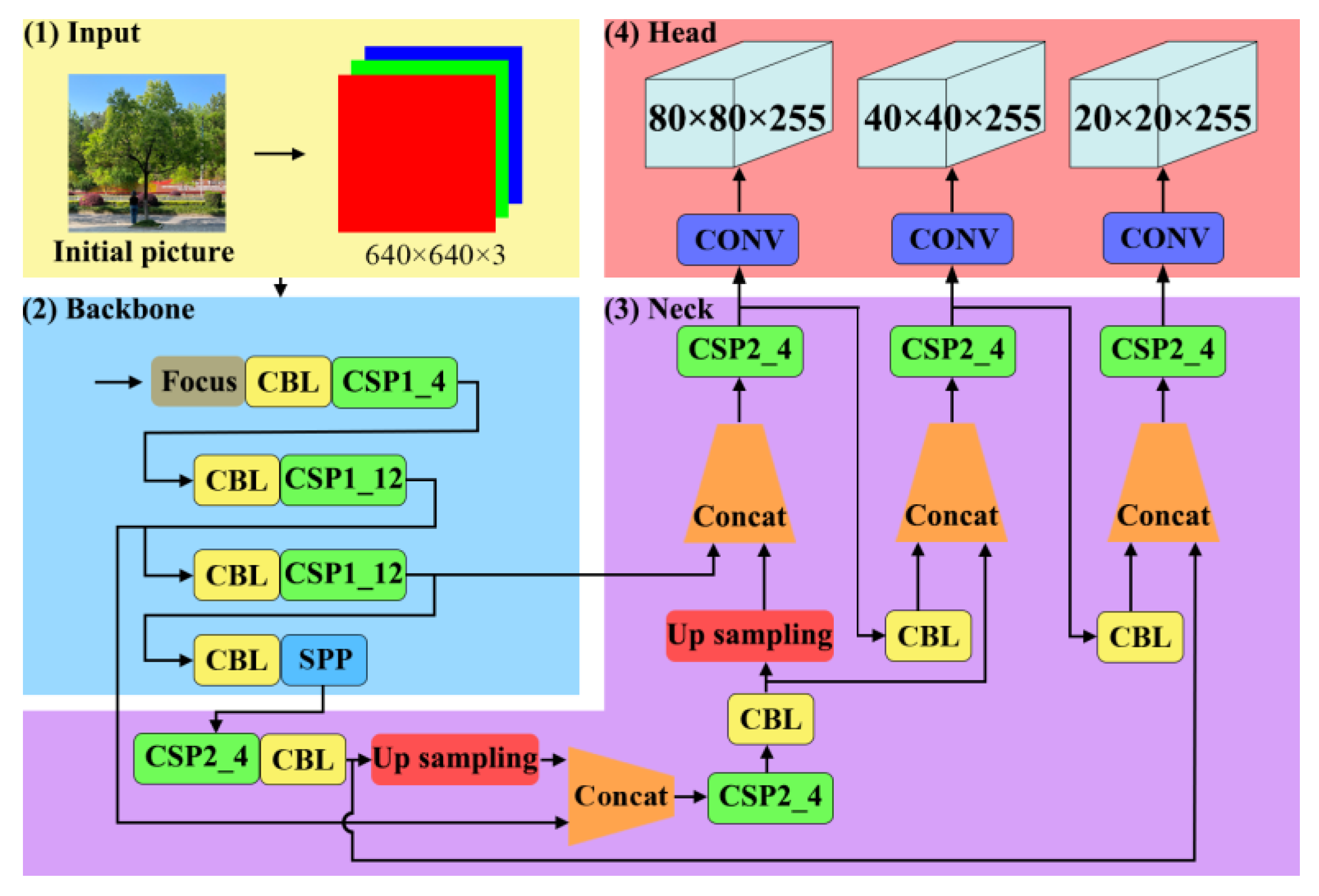

2.2.1. The Construction of Person–Tree Scale Height Measurement Model

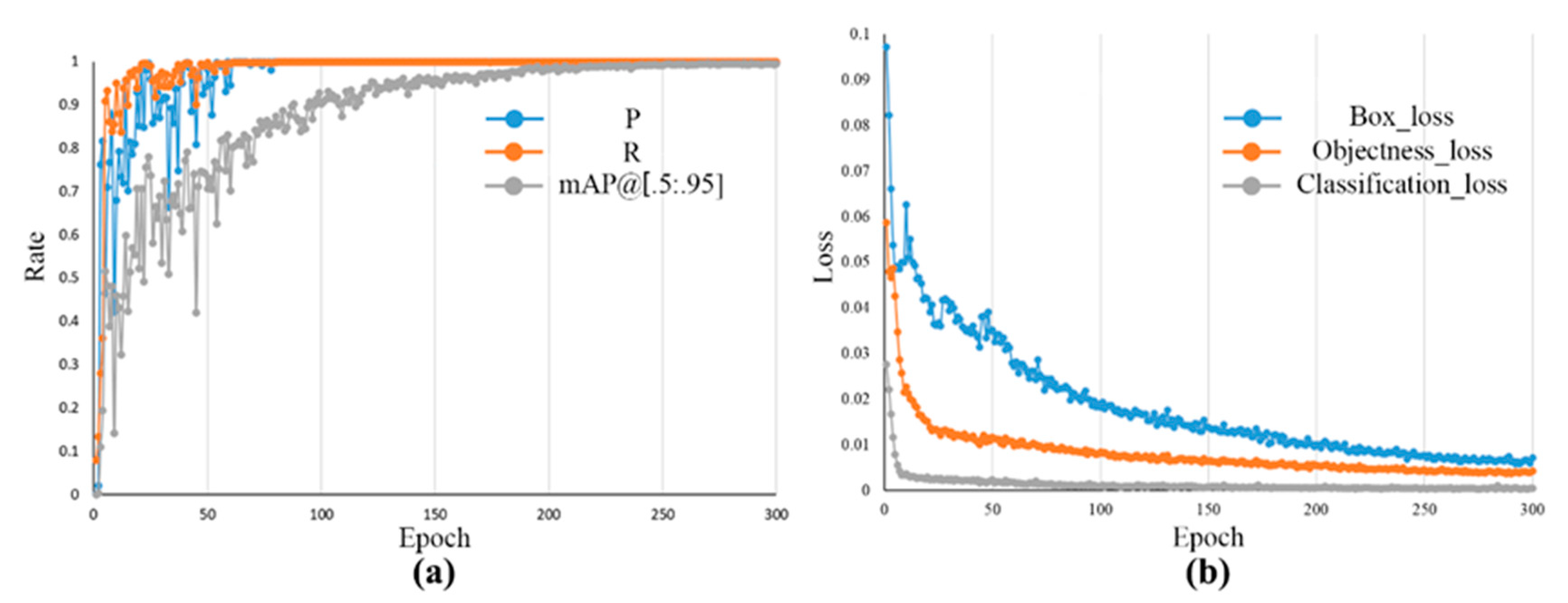
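The model's name suggests proportional scaling against a person of known height photographed beside the tree. The following is a minimal sketch of that idea under stated assumptions, not the authors' implementation. `tree_height_from_boxes` is a hypothetical helper. Boxes are assumed to be `(x_min, y_min, x_max, y_max)` pixel coordinates returned by a person/tree detector. The person and the tree are also assumed to stand at about the same distance from a roughly level camera, so that pixel heights are proportional to real heights.

```python
def tree_height_from_boxes(person_height_m, person_box, tree_box):
    """Estimate tree height (m) by scaling against a reference person.

    Hypothetical helper. person_box and tree_box are (x_min, y_min, x_max, y_max)
    in pixels, with y increasing downwards as in image coordinates.
    Assumes person and tree are at a similar camera distance and the camera is
    roughly level, so pixel height is proportional to real height.
    """
    person_px = person_box[3] - person_box[1]  # person's height in pixels
    tree_px = tree_box[3] - tree_box[1]        # tree's height in pixels
    if person_px <= 0 or tree_px <= 0:
        raise ValueError("bounding boxes must have positive height")
    # Same metres-per-pixel scale for both objects under the stated assumptions.
    return person_height_m * tree_px / person_px


if __name__ == "__main__":
    # Illustrative numbers only: a 1.70 m person spanning 340 px beside a tree spanning 2040 px.
    print(tree_height_from_boxes(1.70, (100, 500, 180, 840), (50, 0, 400, 2040)))
```

With these illustrative numbers the estimate is 1.70 × 2040 / 340 ≈ 10.2 m. Camera tilt or a depth offset between the person and the tree breaks the proportionality, and correcting for it would need extra geometry that this sketch omits.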

2.2.2. Training of the Person–Tree Scale Height Measurement Model

2.2.3. Evaluation Indexes for the Accuracy of the Person–Tree Scale Height Measurement Model

2.3. Evaluation of the Tree Height Extraction Accuracy

3. Results and Analysis

3.1. Person–Tree Scale Height Measurement Model

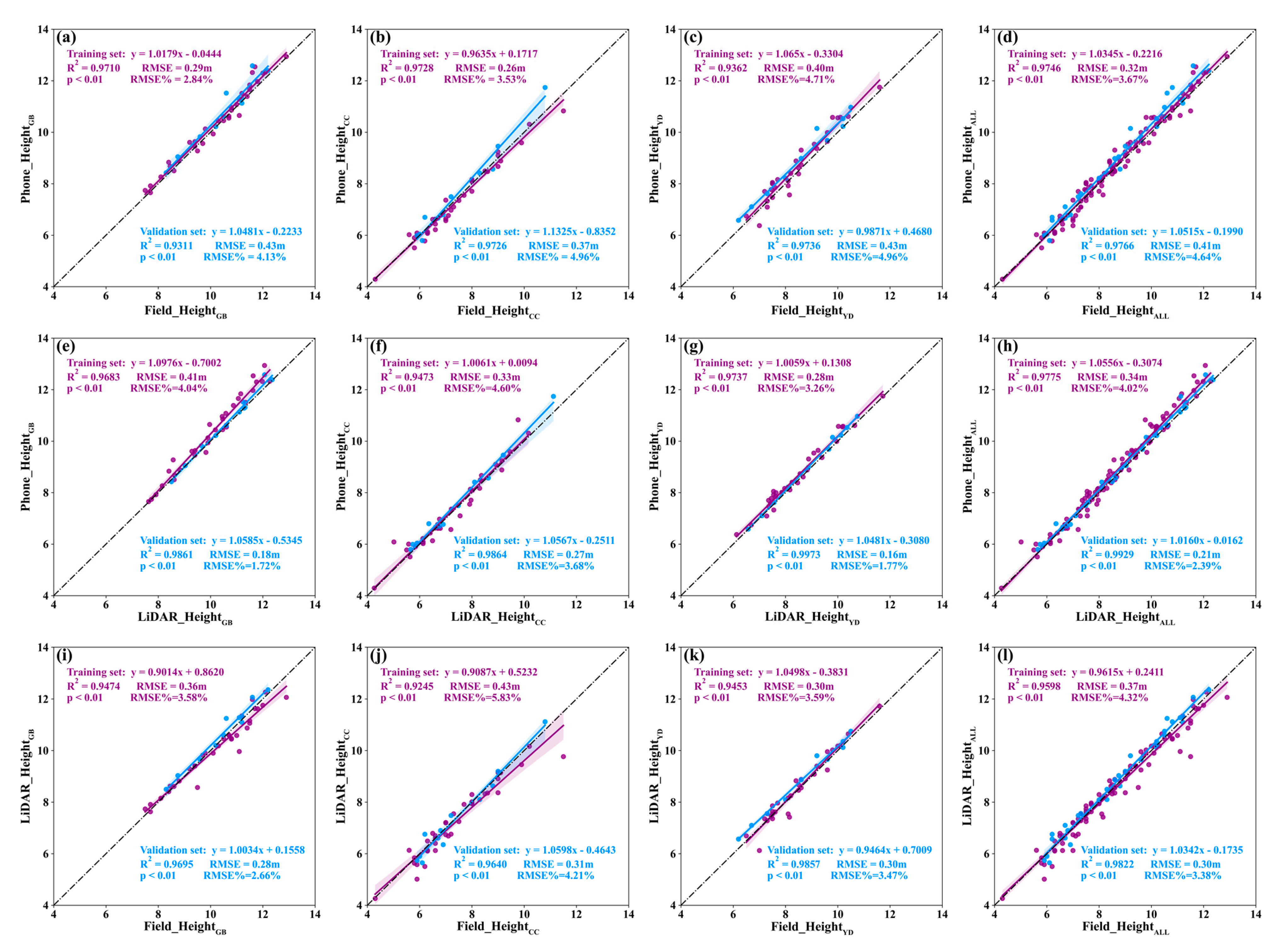

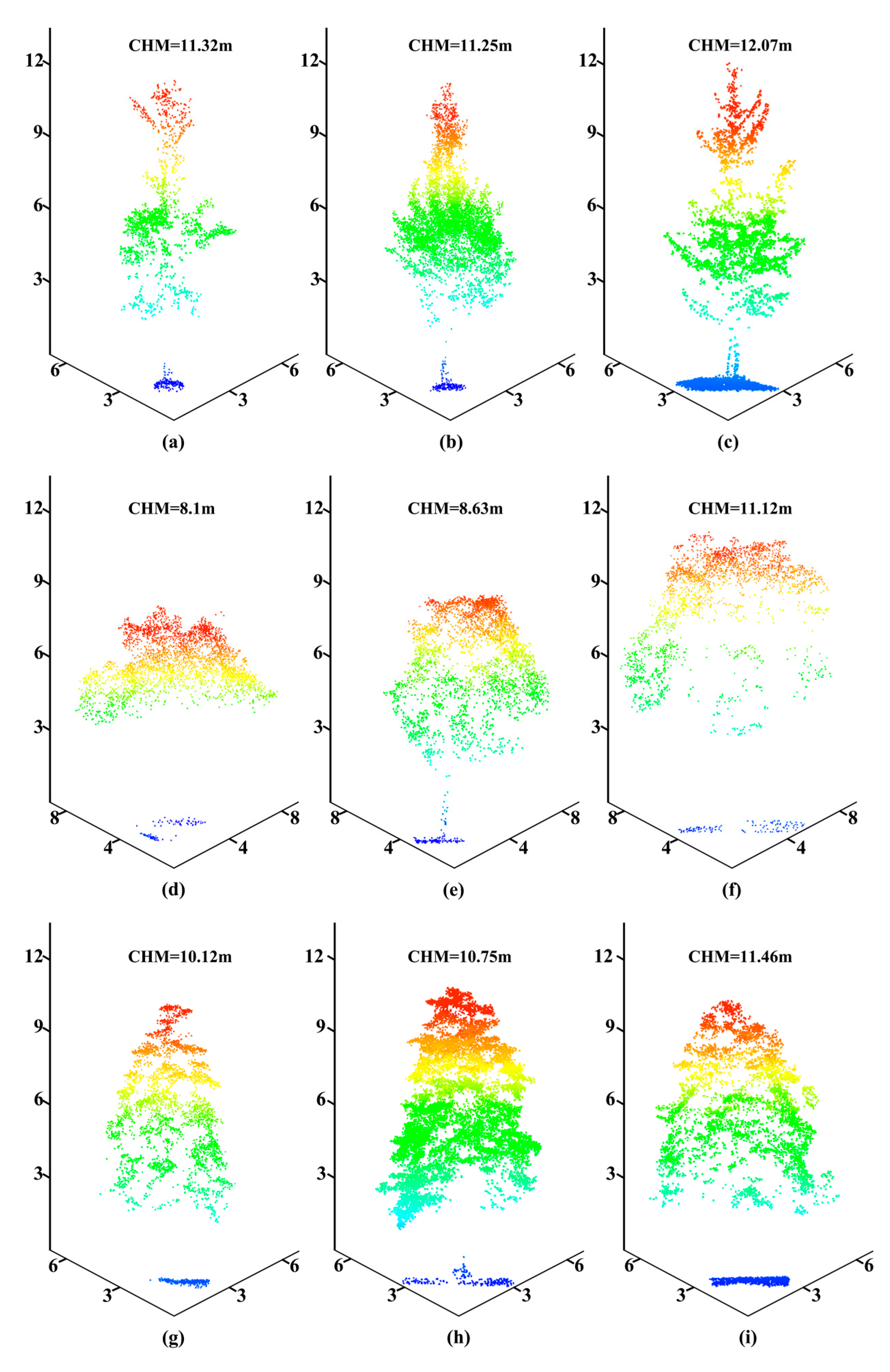

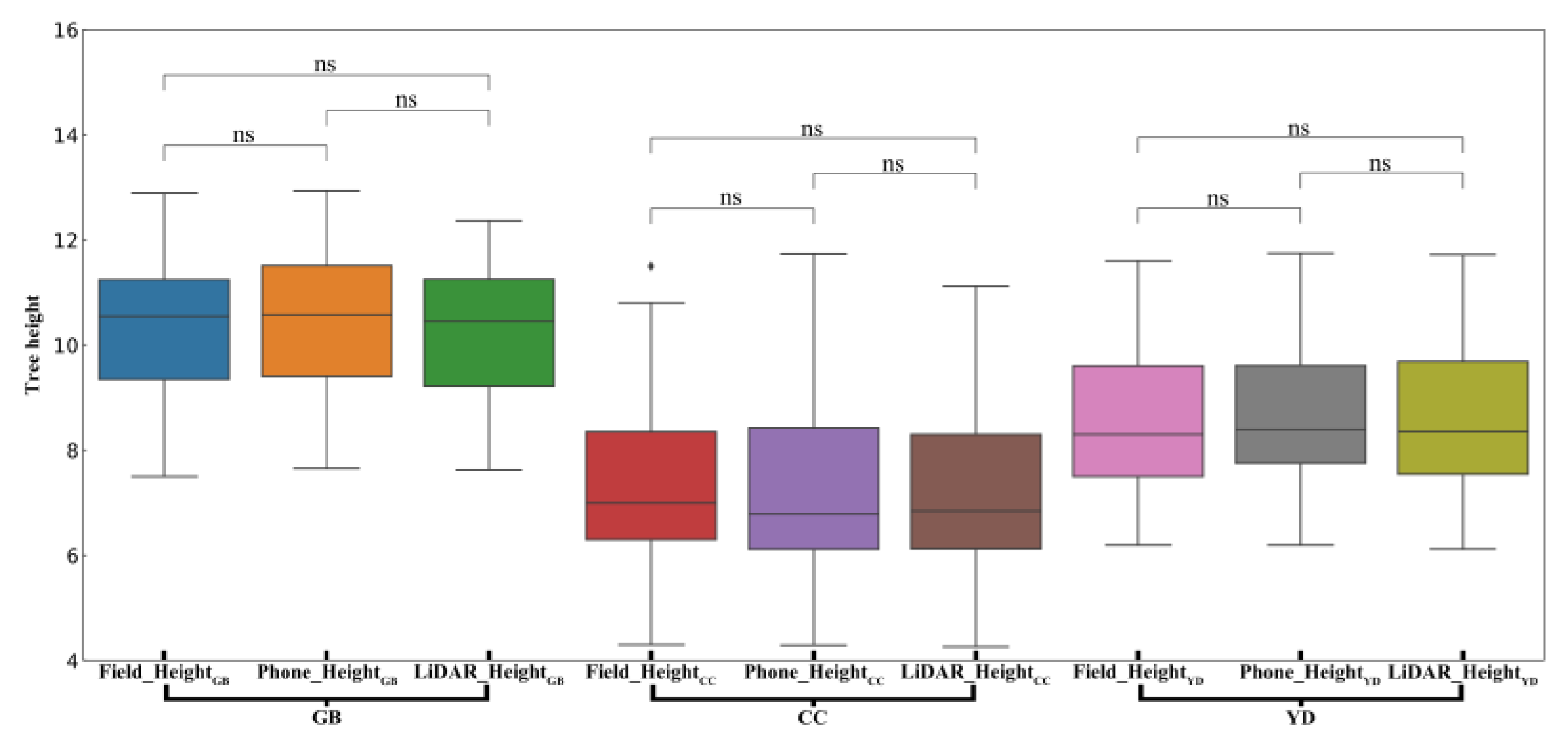

3.2. Tree-Height Extraction Results

4. Conclusions and Discussion

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Tree No. | Field_Height_GB (m) | Phone_Height_GB (m) | Absolute Error (m) | Relative Error |
|---|---|---|---|---|
| GB 6 | 8.30 | 8.43 | 0.13 | 1.54% |
| GB 8 | 11.20 | 11.31 | 0.11 | 0.98% |
| GB 13 | 10.60 | 11.52 | 0.92 | 8.68% |
| GB 16 | 11.10 | 11.28 | 0.18 | 1.62% |
| GB 24 | 9.60 | 9.83 | 0.23 | 2.34% |
| GB 27 | 11.60 | 12.58 | 0.98 | 8.45% |
| GB 30 | 12.20 | 12.39 | 0.19 | 1.56% |
| GB 36 | 10.50 | 10.61 | 0.11 | 1.05% |
| GB 38 | 11.20 | 11.13 | 0.07 | 0.63% |
| GB 42 | 10.20 | 10.22 | 0.02 | 0.20% |
| GB 44 | 8.75 | 9.05 | 0.30 | 3.42% |

| Tree No. | Field_Height_CC (m) | Phone_Height_CC (m) | Absolute Error (m) | Relative Error |
|---|---|---|---|---|
| CC 3 | 7.20 | 7.50 | 0.30 | 4.14% |
| CC 5 | 6.20 | 6.70 | 0.50 | 8.03% |
| CC 6 | 6.70 | 6.66 | 0.04 | 0.58% |
| CC 13 | 5.90 | 5.99 | 0.09 | 1.58% |
| CC 18 | 6.80 | 6.77 | 0.03 | 0.44% |
| CC 26 | 9.00 | 9.46 | 0.46 | 5.09% |
| CC 28 | 6.10 | 5.80 | 0.30 | 4.98% |
| CC 30 | 6.00 | 6.04 | 0.04 | 0.63% |
| CC 32 | 6.90 | 6.80 | 0.10 | 1.49% |
| CC 45 | 8.30 | 8.41 | 0.11 | 1.33% |
| CC 46 | 8.80 | 8.57 | 0.23 | 2.63% |
| CC 47 | 10.80 | 11.74 | 0.94 | 8.70% |

| Tree No. | Field_Height_YD (m) | Phone_Height_YD (m) | Absolute Error (m) | Relative Error |
|---|---|---|---|---|
| YD 3 | 10.20 | 10.23 | 0.03 | 0.29% |
| YD 4 | 9.20 | 10.15 | 0.95 | 10.33% |
| YD 8 | 8.00 | 8.22 | 0.22 | 2.69% |
| YD 11 | 7.30 | 7.62 | 0.32 | 4.32% |
| YD 21 | 6.20 | 6.58 | 0.38 | 6.13% |
| YD 28 | 8.60 | 8.98 | 0.38 | 4.44% |
| YD 31 | 6.70 | 7.11 | 0.41 | 6.07% |
| YD 34 | 9.60 | 9.69 | 0.09 | 0.90% |
| YD 36 | 10.50 | 10.97 | 0.47 | 4.48% |
| YD 37 | 10.20 | 10.53 | 0.33 | 3.24% |
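The error columns in the three height tables can be recomputed from the two height columns. The sketch below assumes that absolute error = |Phone_Height - Field_Height| and that relative error = absolute error / Field_Height × 100%. Recomputing from the two-decimal values reproduces every printed absolute error. Some relative errors, however, differ by a few hundredths of a percentage point (for example CC 3 and CC 5), presumably because the printed ones were derived from unrounded heights. MAE and RMSE appear here only as common summary statistics. They are an illustrative choice, not the accuracy indices reported in the paper.

```python
import math

# (tree no., field height m, phone height m), copied from the tables above.
# CC 26: the extracted table printed the field height as "90"; 9.00 m is implied
# by the phone height (9.46 m) minus the absolute error (0.46 m).
HEIGHTS = {
    "GB": [("GB 6", 8.30, 8.43), ("GB 8", 11.20, 11.31), ("GB 13", 10.60, 11.52),
           ("GB 16", 11.10, 11.28), ("GB 24", 9.60, 9.83), ("GB 27", 11.60, 12.58),
           ("GB 30", 12.20, 12.39), ("GB 36", 10.50, 10.61), ("GB 38", 11.20, 11.13),
           ("GB 42", 10.20, 10.22), ("GB 44", 8.75, 9.05)],
    "CC": [("CC 3", 7.20, 7.50), ("CC 5", 6.20, 6.70), ("CC 6", 6.70, 6.66),
           ("CC 13", 5.90, 5.99), ("CC 18", 6.80, 6.77), ("CC 26", 9.00, 9.46),
           ("CC 28", 6.10, 5.80), ("CC 30", 6.00, 6.04), ("CC 32", 6.90, 6.80),
           ("CC 45", 8.30, 8.41), ("CC 46", 8.80, 8.57), ("CC 47", 10.80, 11.74)],
    "YD": [("YD 3", 10.20, 10.23), ("YD 4", 9.20, 10.15), ("YD 8", 8.00, 8.22),
           ("YD 11", 7.30, 7.62), ("YD 21", 6.20, 6.58), ("YD 28", 8.60, 8.98),
           ("YD 31", 6.70, 7.11), ("YD 34", 9.60, 9.69), ("YD 36", 10.50, 10.97),
           ("YD 37", 10.20, 10.53)],
}


def errors(field, phone):
    """Return (absolute error in m, relative error in %) for one tree.

    Assumes relative error is taken with respect to the field-measured height.
    """
    abs_err = abs(phone - field)
    return abs_err, abs_err / field * 100.0


def summarize(rows):
    """Illustrative per-species summary: sample size, MAE (m), RMSE (m), mean relative error (%)."""
    pairs = [errors(field, phone) for _, field, phone in rows]
    n = len(pairs)
    mae = sum(a for a, _ in pairs) / n
    rmse = math.sqrt(sum(a * a for a, _ in pairs) / n)
    mean_rel = sum(r for _, r in pairs) / n
    return {"n": n, "mae": mae, "rmse": rmse, "mean_rel_pct": mean_rel}


if __name__ == "__main__":
    for species, rows in HEIGHTS.items():
        s = summarize(rows)
        print(f"{species}: n={s['n']}, MAE={s['mae']:.2f} m, "
              f"RMSE={s['rmse']:.2f} m, mean relative error={s['mean_rel_pct']:.2f}%")
```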

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xuan, J.; Li, X.; Du, H.; Zhou, G.; Mao, F.; Wang, J.; Zhang, B.; Gong, Y.; Zhu, D.; Zhou, L.; et al. Intelligent Estimating the Tree Height in Urban Forests Based on Deep Learning Combined with a Smartphone and a Comparison with UAV-LiDAR. Remote Sens. 2023, 15, 97. https://doi.org/10.3390/rs15010097
