MT-FANet: A Morphology and Topology-Based Feature Alignment Network for SAR Ship Rotation Detection

Abstract

1. Introduction

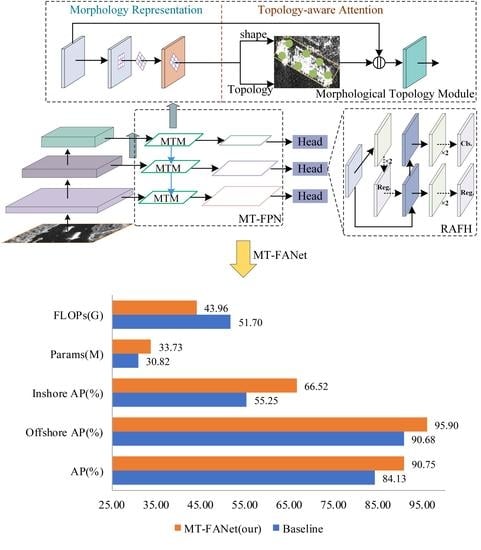

- We adopted deformable convolutions to improve the network’s feature representation of irregularly shaped ship targets. The network thus focuses on the features of the target itself rather than the background, which mitigates the impact of complex background interference.

- The topological structure of a ship target carries important feature information. We therefore developed a novel morphology and topology feature pyramid network (MT-FPN) that exploits the inherent topological structure of SAR ship targets to extract effective features for subsequent ship target detection.

- To balance the speed and accuracy of the proposed detection model, a rotation alignment feature head (RAFH) was designed to fine-tune predictions and differentiate features. This addresses the feature misalignment issue and enables rotated bounding box prediction, thus improving the model’s detection performance.
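The rotated bounding boxes mentioned in the last contribution can be illustrated with a minimal sketch. The parameterization used here, `(cx, cy, w, h, theta)` with `theta` in radians, and the helper names `rotated_box_corners` and `polygon_area` are assumptions made for illustration; the text above does not state which box encoding or angle convention the RAFH uses.

```python
import math


def rotated_box_corners(cx, cy, w, h, theta):
    """Corners of a w x h box centred at (cx, cy) and rotated by theta radians.

    Assumed convention (not taken from the paper): theta rotates the box's
    width axis away from the x axis.
    """
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    # Corner offsets in the box's own frame, before rotation.
    local = [(-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)]
    # Rotate each offset, then translate it to the box centre.
    return [
        (cx + dx * cos_t - dy * sin_t, cy + dx * sin_t + dy * cos_t)
        for dx, dy in local
    ]


def polygon_area(points):
    """Shoelace formula; rotating a box must leave its area unchanged."""
    total = 0.0
    for (x1, y1), (x2, y2) in zip(points, points[1:] + points[:1]):
        total += x1 * y2 - x2 * y1
    return abs(total) / 2


corners = rotated_box_corners(cx=10.0, cy=5.0, w=8.0, h=2.0, theta=math.radians(30))
print(corners)
print(polygon_area(corners))  # approximately w * h
```

A corner-based form like this is convenient for drawing a predicted box or computing polygon overlap, whereas the five-parameter form is the more compact regression target.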

2. Related Work

2.1. Deep Learning Detection Method for SAR Ship Targets

2.2. Feature Pyramid Structure

3. Proposed Method Description

3.1. Overview of the Proposed MT-FANet

3.2. Morphology and Topology Feature Pyramid Network

3.2.1. Feature Fusion

3.2.2. Morphology and Topology Module

3.3. Rotation Alignment Feature Head

3.3.1. Rotation Offset Prediction

3.3.2. Decoupled Feature Prediction

3.4. Loss Function

4. Experimental Results

4.1. Experimental Datasets and Details

4.1.1. Datasets

4.1.2. Experimental Details

4.2. Evaluation Metrics

4.3. Comparison with State-of-the-Art Methods

4.4. Ablation Studies

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Zhang, B.; Xu, G.; Zhou, R.; Zhang, H.; Hong, W. Multi-channel back-projection algorithm for mmWave automotive MIMO SAR imaging with Doppler-division multiplexing. IEEE J. Sel. Top. Signal Process. 2022, 1–13.

- Deng, Z.; Sun, H.; Zhou, S.; Zhao, J. Learning deep ship detector in SAR images from scratch. IEEE Trans. Geosci. Remote Sens. 2019, 57, 4021–4039.

- Xu, L.; Zhang, H.; Wang, C.; Zhang, B.; Liu, M. Crop classification based on temporal information using Sentinel-1 SAR time-series data. Remote Sens. 2018, 11, 53.

- Brusch, S.; Lehner, S.; Fritz, T.; Soccorsi, M.; Soloviev, A.; van Schie, B. Ship surveillance with TerraSAR-X. IEEE Trans. Geosci. Remote Sens. 2011, 49, 1092–1103.

- Liao, M.; Wang, C.; Wang, Y.; Jiang, L. Using SAR images to detect ships from sea clutter. IEEE Geosci. Remote Sens. Lett. 2008, 5, 194–198.

- Robey, F.; Fuhrmann, D.; Kelly, E. A CFAR adaptive matched filter detector. IEEE Trans. Aerosp. Electron. Syst. 1992, 28, 208–216.

- Sugimoto, M.; Ouchi, K.; Nakamura, Y. On the novel use of model-based decomposition in SAR polarimetry for target detection on the sea. Remote Sens. Lett. 2013, 4, 843–852.

- Charalampidis, D.; Kasparis, T. Wavelet-based rotational invariant roughness features for texture classification and segmentation. IEEE Trans. Image Process. 2002, 11, 825–837.

- Wang, C.; Bi, F.; Chen, L.; Chen, J. A novel threshold template algorithm for ship detection in high-resolution SAR images. In Proceedings of the IEEE International Geoscience Remote Sensing Symposium, Beijing, China, 10–15 July 2016; pp. 100–103.

- Xu, G.; Zhang, B.; Yu, H.; Chen, J.; Xing, M.; Hong, W. Sparse synthetic aperture radar imaging from compressed sensing and machine learning: Theories, applications, and trends. IEEE Trans. Geosci. Remote Sens. 2022, 10, 32–69.

- Zhang, T.; Zhang, X.; Ke, X. Quad-FPN: A Novel Quad Feature Pyramid Network for SAR Ship Detection. Remote Sens. 2021, 13, 2771.

- Sun, K.; Liang, Y.; Ma, X.; Huai, Y.; Xing, M. DSDet: A Lightweight Densely Connected Sparsely Activated Detector for Ship Target Detection in High-Resolution SAR Images. Remote Sens. 2021, 13, 2743.

- Kang, M.; Ji, K.; Leng, X.; Lin, Z. Contextual region-based convolutional neural network with multilayer fusion for SAR ship detection. Remote Sens. 2017, 9, 860–872.

- Li, D.; Liang, Q.; Liu, H.; Liu, Q.; Liu, H.; Liao, G. A novel multidimensional domain deep learning network for SAR ship detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5203213.

- Zhang, T.; Zhang, X.; Li, J.; Xu, X.; Wang, B.; Zhan, X. SAR ship detection dataset (SSDD): Official release and comprehensive data analysis. Remote Sens. 2021, 13, 3690.

- Xu, C.; Su, H.; Li, J. RSDD-SAR: Rotated ship detection dataset in SAR images. J. Radars 2022, 11, 581–599.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Cai, Z.; Vasconcelos, N. Cascade R-CNN: Delving into high quality object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6154–6162.

- Xie, X.; Cheng, G.; Wang, J.; Yao, X.; Han, J. Oriented R-CNN for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 3520–3529.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 27–30 June 2016; pp. 779–788.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.; Berg, A. SSD: Single shot MultiBox detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; pp. 21–37.

- Shao, Z.; Zhang, X.; Zhang, T.; Xu, X.; Zeng, T. RBFA-Net: A Rotated Balanced Feature-Aligned Network for Rotated SAR Ship Detection and Classification. Remote Sens. 2022, 14, 3345.

- Lin, T.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2999–3007.

- Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. YOLOX: Exceeding YOLO series in 2021. arXiv 2021, arXiv:2107.08430.

- Law, H.; Deng, J. CornerNet: Detecting objects as paired keypoints. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 734–750.

- Tian, Z.; Shen, C.; Chen, H.; He, T. FCOS: Fully convolutional one-stage object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9627–9636.

- Liu, L.; Pan, Z.; Lei, B. Learning a rotation invariant detector with rotatable bounding box. arXiv 2017, arXiv:1711.09405.

- Han, J.; Ding, J.; Xue, N.; Xia, G. ReDet: A rotation-equivariant detector for aerial object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 2786–2795.

- Xu, Z.; Gao, R.; Huang, K.; Xu, Q. Triangle Distance IoU Loss, Attention-Weighted Feature Pyramid Network, and Rotated-SARShip Dataset for Arbitrary-Oriented SAR Ship Detection. Remote Sens. 2022, 14, 4676.

- Zhang, Y.; Lu, D.; Qiu, X.; Li, F. Scattering-Point-Guided RPN for Oriented Ship Detection in SAR Images. Remote Sens. 2023, 15, 1411.

- Dai, J.; Qi, H.; Xiong, Y.; Li, Y.; Zhang, G.; Hu, H.; Wei, Y. Deformable convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 764–773.

- Ding, J.; Xue, N.; Long, Y.; Xia, G.; Lu, Q. Learning RoI Transformer for oriented object detection in aerial images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2849–2858.

- Guo, Z.; Liu, C.; Zhang, X.; Jiao, J.; Ji, X.; Ye, Q. Beyond bounding-box: Convex-hull feature adaptation for oriented and densely packed object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 8792–8801.

- Han, J.; Ding, J.; Li, J.; Xia, G. Align deep features for oriented object detection. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5602511.

- Li, Y.; Ding, Z.; Zhang, C.; Wang, Y.; Chen, J. SAR ship detection based on ResNet and transfer learning. In Proceedings of the IGARSS 2019–2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019.

- Rostami, M.; Kolouri, S.; Eaton, E.; Kim, K. Deep Transfer Learning for Few-Shot SAR Image Classification. Remote Sens. 2019, 11, 1374.

- Lang, H.; Li, C.; Xu, J. Multisource heterogeneous transfer learning via feature augmentation for ship classification in SAR imagery. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5228814.

- Lou, X.; Liu, Y.; Xiong, Z.; Wang, H. Generative knowledge transfer for ship detection in SAR images. Comput. Electr. Eng. 2022, 101, 108041.

- Lu, C.; Li, W. Ship Classification in High-Resolution SAR Images via Transfer Learning with Small Training Dataset. Sensors 2019, 19, 63.

- Guo, H.; Yang, X.; Wang, N.; Song, B.; Gao, X. A rotational Libra R-CNN method for ship detection. IEEE Trans. Geosci. Remote Sens. 2020, 58, 5772–5781.

- Fu, K.; Fu, J.; Wang, Z.; Sun, X. Scattering-keypoint-guided network for oriented ship detection in high-resolution and large-scale SAR images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 11162–11178.

- Kang, Y.; Wang, Z.; Fu, J.; Sun, X.; Fu, K. SFR-Net: Scattering feature relation network for aircraft detection in complex SAR images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5218317.

- Yang, X.; Yan, J.; Feng, Z.; He, T. R3Det: Refined single-stage detector with feature refinement for rotating object. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtually, 2–9 February 2021; pp. 3163–3171.

- Lin, T.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.

- Fu, J.; Sun, X.; Wang, Z.; Fu, K. An anchor-free method based on feature balancing and refinement network for multiscale ship detection in SAR images. IEEE Trans. Geosci. Remote Sens. 2021, 59, 1331–1344.

- Li, X.; Li, D.; Liu, H.; Wan, J.; Chen, Z.; Liu, Q. A-BFPN: An Attention-Guided Balanced Feature Pyramid Network for SAR Ship Detection. Remote Sens. 2022, 14, 3829.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Wu, J.; Pan, Z.; Lei, B.; Hu, Y. FSANet: Feature-and-Spatial-Aligned Network for Tiny Object Detection in Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5630717.

- Shorten, C.; Khoshgoftaar, T. A survey on image data augmentation for deep learning. J. Big Data 2019, 6, 60.

- Hu, H.; Gu, J.; Zhang, Z.; Dai, J.; Wei, Y. Relation networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3588–3597.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J. Attention is all you need. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Red Hook, NY, USA, 4–9 December 2017; pp. 6000–6010.

- Zhu, Z.; Xu, M.; Bai, S.; Huang, T.; Bai, X. Asymmetric non-local neural networks for semantic segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 593–602.

- Zhou, Y.; Ye, Q.; Qiu, Q.; Jiao, J. Oriented response networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4961–4970.

| Method | Obtained Results | Related References |
|---|---|---|
| Pre-training and transfer learning | Mitigating limitations of fewer samples | [35,36,37], etc. |
| Data augmentation | Mitigating limitations of fewer samples | [38,39], etc. |
| Feature selection | Enhanced model architecture | [22,40,41,42], etc. |

| Parameter | Value |
|---|---|
| Number of images | 7000 |
| Image size | 512 × 512 |
| Number of training images | 5000 |
| Number of test images | 2000 |
| Polarization | HH, HV, VH, DH, DV, VV |
| Imaging mode | SM, FSII, FSI, QPSI, UFS, SS |
| Resolution | 2~20 m |

| Method | AP50 (%) | Recall (%) | F1 | O. AP50 (%) | I. AP50 (%) | Params (M) | FLOPs (G) |
|---|---|---|---|---|---|---|---|
| R-Faster R-CNN [17] | 83.44 ± 0.34 | 86.93 ± 0.19 | 85.15 ± 0.26 | 90.47 ± 0.40 | 49.44 ± 0.51 | 41.41 | 50.38 |
| RoI Transformer [32] | 88.39 ± 0.02 | 89.95 ± 0.02 | 89.17 ± 0.01 | 94.53 ± 0.17 | 60.19 ± 0.56 | 55.32 | 51.48 |
| Oriented R-CNN [19] | 88.69 ± 0.29 | 90.50 ± 0.23 | 89.59 ± 0.26 | 90.56 ± 0.30 | 65.73 ± 0.28 | 41.35 | 50.41 |
| R-FCOS [26] | 85.35 ± 0.13 | 87.60 ± 0.13 | 86.46 ± 0.12 | 92.94 ± 0.13 | 50.12 ± 0.45 | 32.17 | 51.73 |
| CFA [33] | 89.36 ± 0.09 | 91.50 ± 0.39 | 90.41 ± 0.23 | 90.80 ± 0.32 | 66.51 ± 0.17 | 36.83 | 48.58 |
| R3Det [43] | 80.58 ± 0.34 | 82.88 ± 0.14 | 81.77 ± 0.25 | 89.76 ± 0.46 | 56.47 ± 0.39 | 41.81 | 83.91 |
| S2ANet [34] | 87.84 ± 0.14 | 89.17 ± 0.19 | 88.50 ± 0.16 | 93.31 ± 0.16 | 63.32 ± 0.17 | 36.45 | 49.40 |
| Proposed method | 90.84 ± 0.18 | 92.21 ± 0.21 | 91.52 ± 0.22 | 95.72 ± 0.19 | 66.87 ± 0.39 | 33.73 | 43.96 |
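The surviving text does not define how the F1 column is computed. As a sanity check only, the sketch below compares each reported mean F1 with the harmonic mean of the AP50 and Recall columns of the same row. The numbers are copied from the comparison table above; the helper name `harmonic_mean` and the idea that F1 relates to these two columns in this way are assumptions, not a statement of the authors' definition.

```python
def harmonic_mean(a, b):
    """Harmonic mean of two positive numbers (the usual form of an F1 score)."""
    return 2 * a * b / (a + b)


# (AP50, Recall, F1) mean values copied from the comparison table.
reported = {
    "R-Faster R-CNN": (83.44, 86.93, 85.15),
    "RoI Transformer": (88.39, 89.95, 89.17),
    "Oriented R-CNN": (88.69, 90.50, 89.59),
    "R-FCOS": (85.35, 87.60, 86.46),
    "CFA": (89.36, 91.50, 90.41),
    "R3Det": (80.58, 82.88, 81.77),
    "S2ANet": (87.84, 89.17, 88.50),
    "Proposed method": (90.84, 92.21, 91.52),
}

# Absolute gap between the reported F1 and the harmonic mean of the two columns.
gaps = {
    name: abs(harmonic_mean(ap50, recall) - f1)
    for name, (ap50, recall, f1) in reported.items()
}

for name, gap in gaps.items():
    print(f"{name}: gap = {gap:.3f}")
```

Small gaps would be expected even if this relationship holds, because the table reports means over repeated runs and the mean of per-run F1 values need not equal the F1 of the mean values.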

| Method | Feature pyramid levels | AP50 (%) | Recall (%) | F1 | O. AP50 (%) | I. AP50 (%) | Params (M) | FLOPs (G) |
|---|---|---|---|---|---|---|---|---|
| Baseline | P3~P7 | 83.97 ± 0.10 | 88.34 ± 0.10 | 86.10 ± 0.15 | 90.64 ± 0.15 | 54.62 ± 0.18 | 36.13 | 52.39 |
| Modified-Baseline | P3~P5 | 84.28 ± 0.13 | 88.82 ± 0.17 | 86.49 ± 0.18 | 90.83 ± 0.14 | 55.55 ± 0.20 | 30.82 | 51.70 |
| Proposed method | P3~P5 | 90.84 ± 0.18 | 92.21 ± 0.21 | 91.52 ± 0.22 | 95.72 ± 0.19 | 66.87 ± 0.39 | 33.73 | 43.96 |

| MT-FPN | RAFH | AP50 (%) | Recall (%) | F1 | O. AP50 (%) | I. AP50 (%) | Params (M) | FLOPs (G) |
|---|---|---|---|---|---|---|---|---|
| × | × | 84.28 ± 0.13 | 88.82 ± 0.17 | 86.49 ± 0.18 | 90.83 ± 0.14 | 55.55 ± 0.20 | 30.82 | 51.70 |
| √ | × | 87.32 ± 0.20 | 89.13 ± 0.18 | 88.22 ± 0.14 | 92.90 ± 0.21 | 59.20 ± 0.33 | 33.68 | 53.62 |
| √ | √ | 90.84 ± 0.18 | 92.21 ± 0.21 | 91.52 ± 0.22 | 95.72 ± 0.19 | 66.87 ± 0.39 | 33.73 | 43.96 |
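The ablation rows above can be read as two successive steps: adding MT-FPN, then adding RAFH. The short sketch below computes the change in mean AP50 and FLOPs at each step from the values in the table. The row labels and the helper name `step_changes` are illustrative assumptions, and the differences are between reported means only, so they ignore the ± spread.

```python
# (label, AP50 %, FLOPs G) mean values copied from the ablation table.
configs = [
    ("No MT-FPN, no RAFH", 84.28, 51.70),
    ("+ MT-FPN", 87.32, 53.62),
    ("+ MT-FPN + RAFH", 90.84, 43.96),
]


def step_changes(rows):
    """Return (label, delta AP50, delta FLOPs) for each row relative to the previous one."""
    changes = []
    for (_, ap_prev, flops_prev), (label, ap, flops) in zip(rows, rows[1:]):
        changes.append((label, round(ap - ap_prev, 2), round(flops - flops_prev, 2)))
    return changes


for label, d_ap, d_flops in step_changes(configs):
    print(f"{label}: AP50 {d_ap:+.2f} points, FLOPs {d_flops:+.2f} G")
```

Read this way, both steps raise the mean AP50, while only the step that adds RAFH lowers the reported FLOPs.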

| Method | AP50 (%) | Recall (%) | F1 | O. AP50 (%) | I. AP50 (%) | Params (M) | FLOPs (G) |
|---|---|---|---|---|---|---|---|
| FPN | 88.64 ± 0.21 | 90.34 ± 0.25 | 89.47 ± 0.22 | 92.66 ± 0.28 | 58.42 ± 0.24 | 30.86 | 42.03 |
| Proposed method | 90.84 ± 0.18 | 92.21 ± 0.21 | 91.52 ± 0.22 | 95.72 ± 0.19 | 66.87 ± 0.39 | 33.73 | 43.96 |

| Hyperparameter λ setting | AP50 (%) | Recall (%) | F1 | O. AP50 (%) | I. AP50 (%) |
|---|---|---|---|---|---|
| λ = 1 | 90.19 ± 0.26 | 91.71 ± 0.24 | 90.94 ± 0.24 | 95.52 ± 0.32 | 63.54 ± 0.18 |
| Proposed method (λ = 0.5) | 90.84 ± 0.18 | 92.21 ± 0.21 | 91.52 ± 0.22 | 95.72 ± 0.19 | 66.87 ± 0.39 |

| Backbone | Method | AP50 (%) | Recall (%) | F1 | O. AP50 (%) | I. AP50 (%) | Params (M) | FLOPs (G) |
|---|---|---|---|---|---|---|---|---|
| ResNet101 | Baseline | 85.09 ± 0.23 | 88.99 ± 0.14 | 86.99 ± 0.18 | 91.17 ± 0.21 | 58.15 ± 0.31 | 49.81 | 71.17 |
| ResNet101 | Proposed method | 90.72 ± 0.24 | 91.93 ± 0.28 | 91.31 ± 0.25 | 95.93 ± 0.23 | 67.47 ± 0.28 | 52.72 | 63.43 |
| ResNet50 | Baseline | 84.28 ± 0.13 | 88.82 ± 0.17 | 86.49 ± 0.18 | 90.83 ± 0.14 | 55.55 ± 0.20 | 30.82 | 51.70 |
| ResNet50 | Proposed method | 90.84 ± 0.18 | 92.21 ± 0.21 | 91.52 ± 0.22 | 95.72 ± 0.19 | 66.87 ± 0.39 | 33.73 | 43.96 |
| ResNet18 | Baseline | 83.25 ± 0.20 | 87.68 ± 0.17 | 85.40 ± 0.18 | 90.39 ± 0.38 | 51.22 ± 0.30 | 17.86 | 38.98 |
| ResNet18 | Proposed method | 89.21 ± 0.14 | 90.76 ± 0.29 | 89.98 ± 0.20 | 94.83 ± 0.12 | 61.83 ± 0.54 | 21.12 | 31.60 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, Q.; Li, D.; Jiang, R.; Liu, S.; Liu, H.; Li, S. MT-FANet: A Morphology and Topology-Based Feature Alignment Network for SAR Ship Rotation Detection. Remote Sens. 2023, 15, 3001. https://doi.org/10.3390/rs15123001
