Combining Multi-Source Data and Feature Optimization for Plastic-Covered Greenhouse Extraction and Mapping Using the Google Earth Engine: A Case in Central Yunnan Province, China

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area

2.2. Data Sources and Processing

2.2.1. Sentinel-1 SAR Image

2.2.2. Sentinel-2 Optical Image

2.2.3. Shuttle Radar Topography Mission (SRTM) Terrain Data

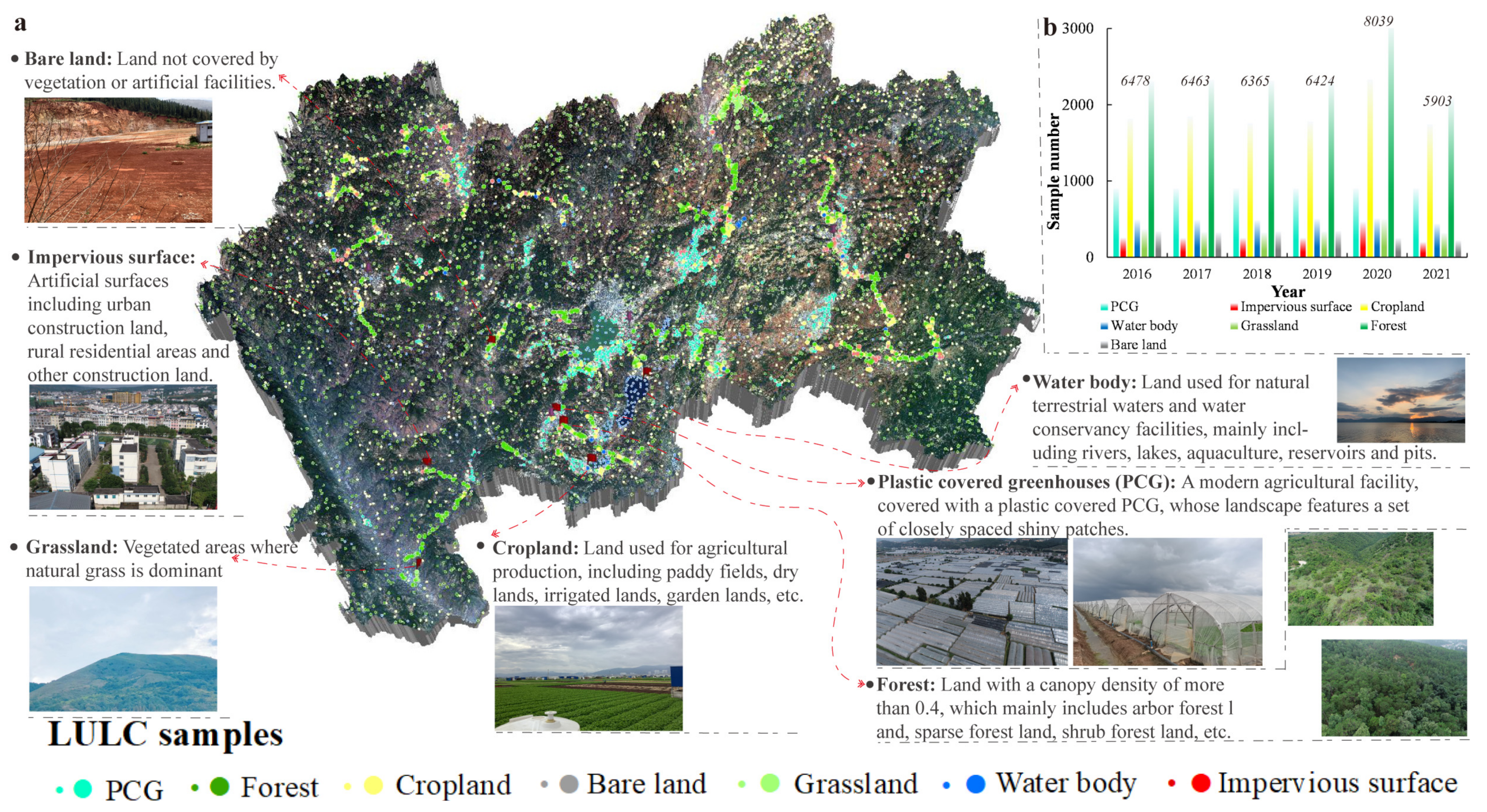

2.2.4. Reference Data for Supervised Classification

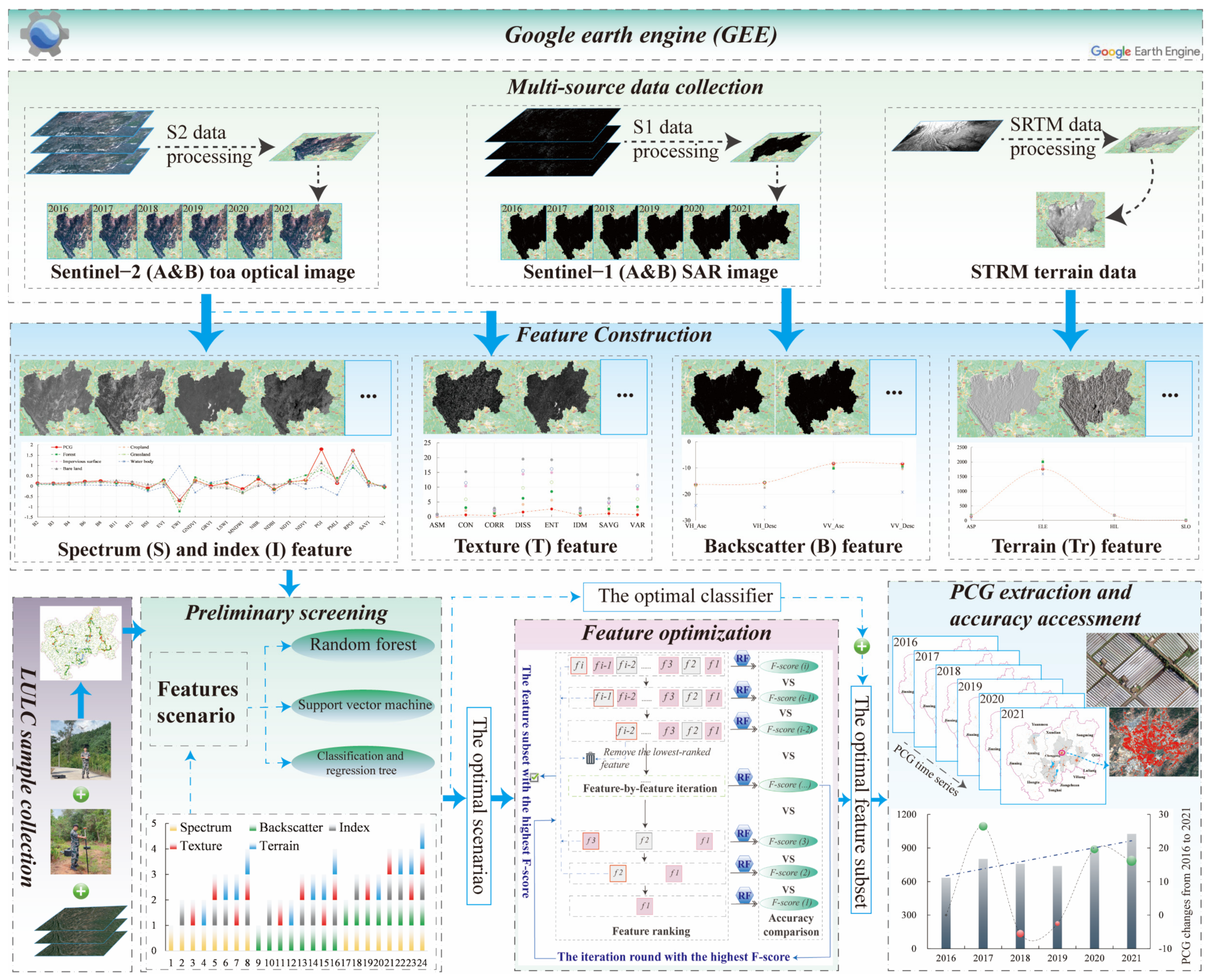

2.3. Methods

2.3.1. Overview of the Methodology

2.3.2. Feature Construction

- (1) Spectrum (S) and index (I) features
- (2) Texture (T) features
- (3) Backscatter (B) features
- (4) Terrain (Tr) features
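The texture (T) features are built by passing an 8-bit grey-level image, formed as 0.3 × NIR + 0.59 × R + 0.11 × G, to GEE's `glcmTexture` function. The NumPy sketch below illustrates the underlying grey-level co-occurrence matrix (GLCM) measures. It rests on several assumptions that are not from the paper: a linear min-max quantization, a single horizontal offset of one pixel, and one GLCM computed over a whole image patch. GEE instead computes the measures per pixel within a neighbourhood and can average over directions. The helper names (`grey_level`, `glcm`, `texture_features`) are hypothetical.

```python
import numpy as np


def grey_level(nir, red, green, levels=32):
    """Weighted grey image (0.3*NIR + 0.59*R + 0.11*G) quantized to `levels` bins.

    The linear min-max quantization is an illustrative assumption; the paper
    uses an 8-bit (256-level) grey image as input to glcmTexture in GEE.
    """
    grey = 0.3 * nir + 0.59 * red + 0.11 * green
    lo, hi = grey.min(), grey.max()
    if hi == lo:
        return np.zeros(grey.shape, dtype=int)
    scaled = (grey - lo) / (hi - lo)  # now in [0, 1]
    return np.minimum((scaled * levels).astype(int), levels - 1)


def glcm(img, levels, dx=1, dy=0):
    """Symmetric, normalized GLCM for one non-negative pixel offset (dy, dx)."""
    h, w = img.shape
    ref = img[: h - dy, : w - dx]  # reference pixels
    nbr = img[dy:, dx:]            # neighbours at (+dy, +dx)
    counts = np.zeros((levels, levels), dtype=float)
    np.add.at(counts, (ref.ravel(), nbr.ravel()), 1.0)
    counts += counts.T             # count each pair in both directions
    return counts / counts.sum()


def texture_features(p):
    """Haralick-style measures from a normalized GLCM (0-based grey levels)."""
    n = p.shape[0]
    i, j = np.indices((n, n))
    mu_i, mu_j = (i * p).sum(), (j * p).sum()
    sd_i = np.sqrt(((i - mu_i) ** 2 * p).sum())
    sd_j = np.sqrt(((j - mu_j) ** 2 * p).sum())
    nz = p > 0  # skip empty cells so log(0) never occurs
    corr = 0.0
    if sd_i > 0 and sd_j > 0:
        corr = ((i - mu_i) * (j - mu_j) * p).sum() / (sd_i * sd_j)
    return {
        "ASM": (p ** 2).sum(),
        "CON": ((i - j) ** 2 * p).sum(),
        "CORR": corr,
        "DISS": (np.abs(i - j) * p).sum(),
        "ENT": -(p[nz] * np.log(p[nz])).sum(),
        "IDM": (p / (1.0 + (i - j) ** 2)).sum(),
        "SAVG": ((i + j) * p).sum(),
        "VAR": ((i - mu_i) ** 2 * p).sum(),
    }


if __name__ == "__main__":
    # A 4x4 checkerboard: every horizontal neighbour pair has different grey levels.
    board = np.indices((4, 4)).sum(axis=0) % 2
    print(texture_features(glcm(board, levels=2)))
```

On the checkerboard all co-occurrences fall off the diagonal, so contrast is maximal and correlation is −1. A smooth plastic roof would instead concentrate mass near the diagonal, which raises ASM and IDM.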

| Feature Types | Feature Factors | Calculation Methods | References |
|---|---|---|---|
| Spectrum features (S) | B2 (Blue, B) | Band extracted from the Sentinel-2 (S2) annual composite image | Nemmaoui et al. [21] |
| | B3 (Green, G) | | |
| | B4 (Red, R) | | |
| | B6 (Vegetation Red Edge-2) | | |
| | B8 (Near infrared, NIR) | | |
| | B11 (Shortwave Infrared-1, SWIR1) | | |
| | B12 (Shortwave Infrared-2, SWIR2) | | |
| Index features (I) | BSI (Bare Soil Index) | ((SWIR1 + R) − (NIR + B))/((SWIR1 + R) + (NIR + B)) | Roy et al. [55] |
| | VI (Vegetation Index) | ((SWIR1 − NIR)/(SWIR1 + NIR)) × ((NIR − R)/(NIR + R)) | He et al. [56] |
| | EVI (Enhanced Vegetation Index) | 2.5 × (NIR − R)/(NIR + 6 × R − 7.5 × B + 1) | Jiang et al. [57] |
| | EWI (Enhanced Water Index) | (G − SWIR1)/(G + SWIR1) + (G − NIR)/(G + NIR) − (NIR − R)/(NIR + R) | Wang et al. [58] |
| | NDVI (Normalized Difference Vegetation Index) | (NIR − R)/(NIR + R) | Rouse et al. [59] |
| | GNDVI (Green NDVI) | (NIR − G)/(NIR + G) | Phadikar and Goswami [60] |
| | GRVI (Green-Red Vegetation Index) | (G − R)/(G + R) | Khadanga and Jain [61] |
| | LSWI (Land Surface Water Index) | (NIR − SWIR1)/(NIR + SWIR1) | Chandrasekar et al. [62] |
| | MNDWI (Modified Normalized Difference Water Index) | (G − SWIR1)/(G + SWIR1) | Xu [63] |
| | NBR (Normalized Burn Ratio) | (NIR − SWIR2)/(NIR + SWIR2) | Picotte et al. [64] |
| | NDBI (Normalized Difference Built-up Index) | (SWIR1 − NIR)/(SWIR1 + NIR) | Aziz [65] |
| | NDTI (Normalized Difference Tillage Index) | (SWIR1 − SWIR2)/(SWIR1 + SWIR2) | Fernández-Buces et al. [51] |
| | SAVI (Soil-Adjusted Vegetation Index) | (1.5 × (NIR − R))/(NIR + R + 0.5) | Huete [66] |
| | PGI (Plastic Greenhouse Index) | (100 × R × (NIR − R))/(1 − (NIR + B + G)/3) | Yang et al. [9] |
| | PMLI (Plastic-Mulched Landcover Index) | (SWIR1 − R)/(SWIR1 + R) | Lu et al. [25] |
| | RPGI (Retrogressive Plastic Greenhouse Index) | (100 × B)/(1 − (NIR + B + G)/3) | Yang et al. [9] |
| Texture features (T) | ASM (Angular Second Moment) | An 8-bit grey-level image computed as (0.3 × NIR) + (0.59 × R) + (0.11 × G) was used as input to the `ee.Image.glcmTexture(size, kernel, average)` function of the GEE platform to construct the texture features. | Tassi and Vizzari [52] |
| | CON (Contrast) | | |
| | CORR (Correlation) | | |
| | DISS (Dissimilarity) | | |
| | ENT (Entropy) | | |
| | IDM (Inverse Difference Moment) | | |
| | SAVG (Sum Average) | | |
| | VAR (Variance) | | |
| Backscatter features (B) | VH_Asc | Median of all VH cross-polarized observations in ascending (Asc) orbit | This study |
| | VH_Desc | Median of all VH cross-polarized observations in descending (Desc) orbit | |
| | VV_Asc | Median of all VV co-polarized observations in ascending (Asc) orbit | |
| | VV_Desc | Median of all VV co-polarized observations in descending (Desc) orbit | |
| Terrain features (Tr) | ASP (Aspect) | Computed from the SRTMGL1_003 terrain data with the `ee.Algorithms.Terrain(input)` function of the GEE platform. | Lin et al. [1] |
| | ELE (Elevation) | | |
| | HIL (Hillshade) | | |
| | SLO (Slope) | | |
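To make the index definitions concrete, here is a minimal NumPy sketch that evaluates a subset of the index formulas listed for the index (I) features. It assumes the bands are reflectances scaled to 0–1 (for example, Sentinel-2 digital numbers divided by 10000). This scaling matters because the constants in EVI, SAVI, and RPGI presuppose it. The function names `nd` and `spectral_indices` are hypothetical, and PGI is omitted.

```python
import numpy as np


def nd(a, b):
    """Generic normalized difference (a - b) / (a + b)."""
    return (a - b) / (a + b)


def spectral_indices(B, G, R, NIR, SWIR1, SWIR2):
    """Subset of the index features, written term by term from the feature table.

    Inputs are arrays (or floats) of reflectance scaled to 0-1; this scaling
    is an assumption of the sketch, not something stated in the table.
    """
    ndvi = nd(NIR, R)
    return {
        "NDVI": ndvi,
        "GNDVI": nd(NIR, G),
        "GRVI": nd(G, R),
        "LSWI": nd(NIR, SWIR1),
        "MNDWI": nd(G, SWIR1),
        "NBR": nd(NIR, SWIR2),
        "NDBI": nd(SWIR1, NIR),
        "NDTI": nd(SWIR1, SWIR2),
        "PMLI": nd(SWIR1, R),
        "BSI": nd(SWIR1 + R, NIR + B),
        "VI": nd(SWIR1, NIR) * ndvi,
        "EWI": nd(G, SWIR1) + nd(G, NIR) - ndvi,
        "EVI": 2.5 * (NIR - R) / (NIR + 6 * R - 7.5 * B + 1),
        "SAVI": 1.5 * (NIR - R) / (NIR + R + 0.5),
        "RPGI": 100 * B / (1 - (NIR + B + G) / 3),
    }


if __name__ == "__main__":
    # Made-up reflectances for a single pixel, for illustration only.
    pixel = {k: np.array([v]) for k, v in
             dict(B=0.05, G=0.08, R=0.06, NIR=0.30, SWIR1=0.20, SWIR2=0.10).items()}
    for name, value in spectral_indices(**pixel).items():
        print(f"{name}: {value[0]:.3f}")
```

In GEE itself, the two-band ratios map naturally onto `ee.Image.normalizedDifference`. The multi-term indices (EVI, EWI, RPGI) map more readily onto `ee.Image.expression`.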

2.3.3. Machine Learning-Based Supervised Classifier

- (1) CART classifier
- (2) RF classifier
- (3) SVM classifier

2.3.4. Feature Optimization Strategy
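Section 3.2 and Conclusion (2) describe feature optimization by recursive feature elimination driven by random forest importance (RF-RFE). This text does not give the settings used, such as step size, stopping rule, or how importance was obtained. The loop below is therefore only a generic RFE sketch in the spirit of Guyon et al. [70]. It retrains on each shrinking subset and drops the least important features. `importance_fn` and `score_fn` are hypothetical callbacks. In practice they would wrap a random forest's variable importance and a validation F-score. The toy weights in the usage lines are invented and are not results from this study.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Subset = List[str]


def recursive_feature_elimination(
    features: Sequence[str],
    importance_fn: Callable[[Subset], Dict[str, float]],  # hypothetical: RF importance on a subset
    score_fn: Callable[[Subset], float],                  # hypothetical: validation F-score on a subset
    step: int = 1,
    min_features: int = 1,
) -> Tuple[Subset, List[Tuple[Subset, float]]]:
    """Generic RFE: score, re-rank, drop the weakest `step` features, repeat."""
    if step < 1 or min_features < 1:
        raise ValueError("step and min_features must be >= 1")
    current = list(features)
    history: List[Tuple[Subset, float]] = []
    while True:
        history.append((list(current), score_fn(current)))
        if len(current) <= min_features:
            break
        # Importances are recomputed on the current subset at every iteration.
        importance = importance_fn(current)
        n_drop = min(step, len(current) - min_features)
        weakest = set(sorted(current, key=lambda f: importance[f])[:n_drop])
        current = [f for f in current if f not in weakest]
    # max() returns the first maximum, i.e. the largest subset among ties.
    best_subset, _ = max(history, key=lambda item: item[1])
    return best_subset, history


if __name__ == "__main__":
    # Toy, invented importances; score rewards importance but penalizes subset size.
    weights = {"f1": 5.0, "f2": 4.0, "f3": 3.0, "f4": 1.0, "f5": 0.5}
    best, hist = recursive_feature_elimination(
        list(weights),
        importance_fn=lambda s: {f: weights[f] for f in s},
        score_fn=lambda s: sum(weights[f] for f in s) - 1.5 * len(s),
    )
    print(best)  # ['f1', 'f2', 'f3']
```

Recomputing importance after each elimination, rather than ranking once, is what distinguishes RFE from a single importance-threshold cut. Darst et al. [71] argue that this matters when features are correlated, as spectral bands and band-ratio indices are.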

2.3.5. Accuracy Assessment

3. Results

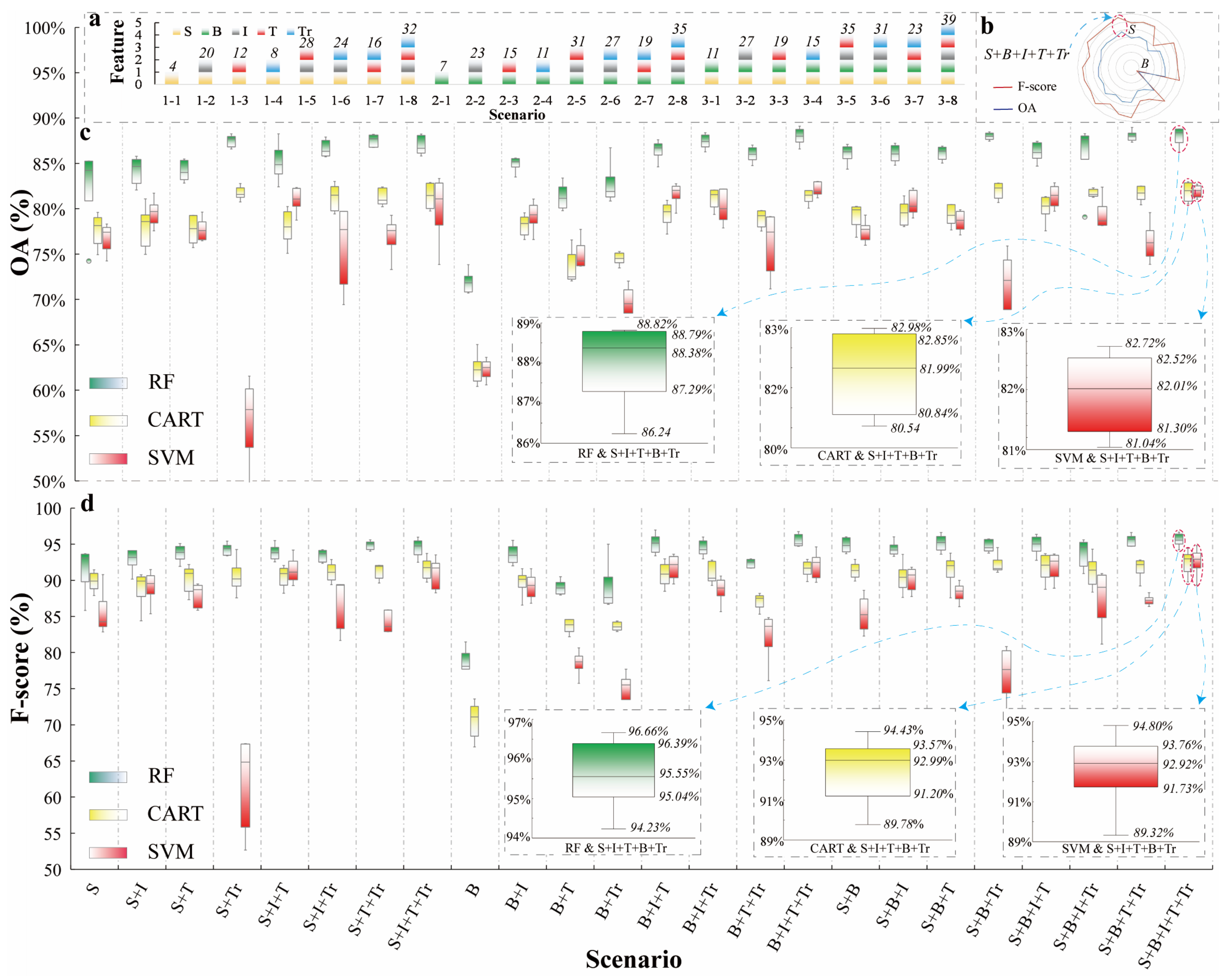

3.1. Preliminary Screening of Feature Scenarios and Classifiers

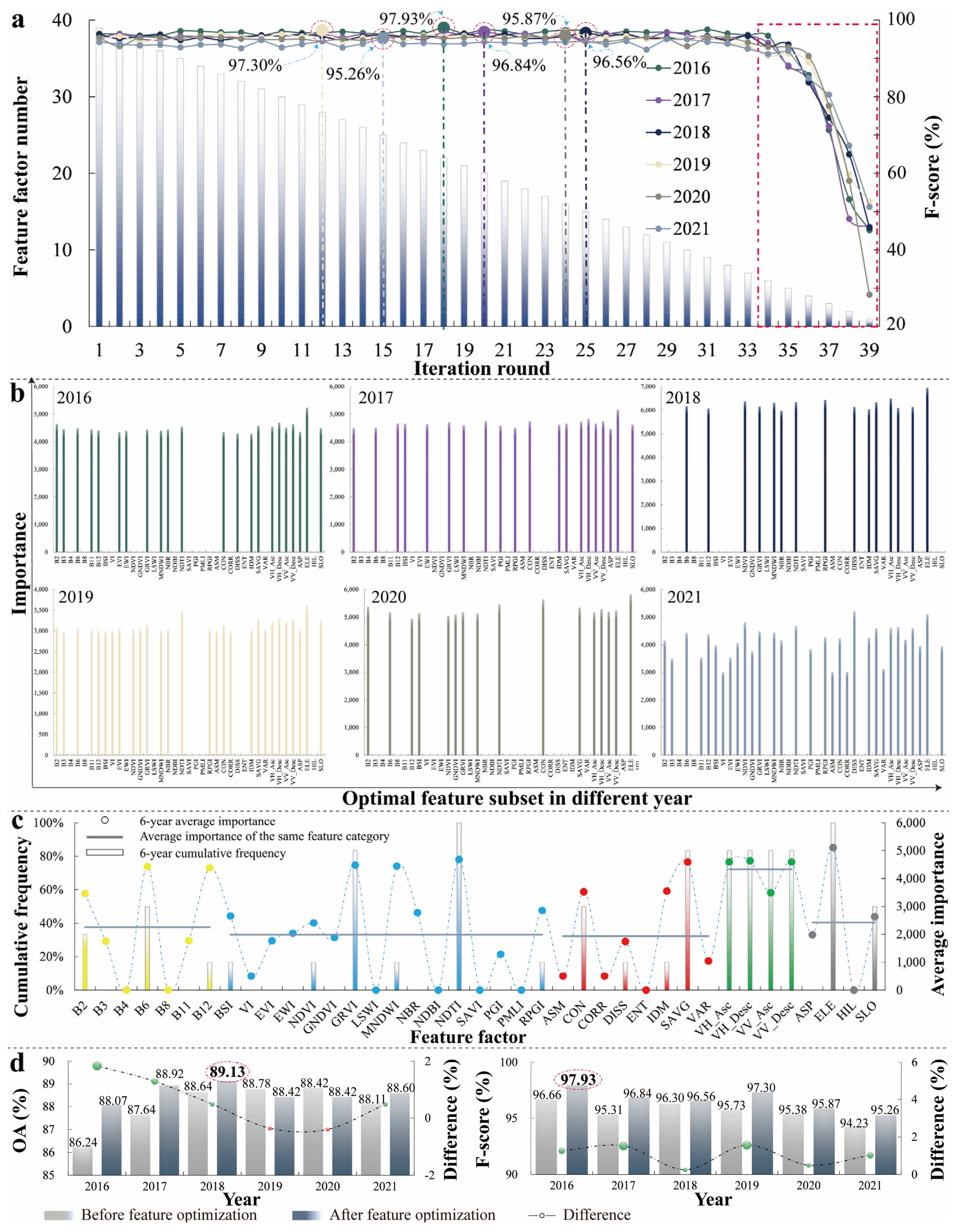

3.2. Feature Optimization Based on RF-RFE Method

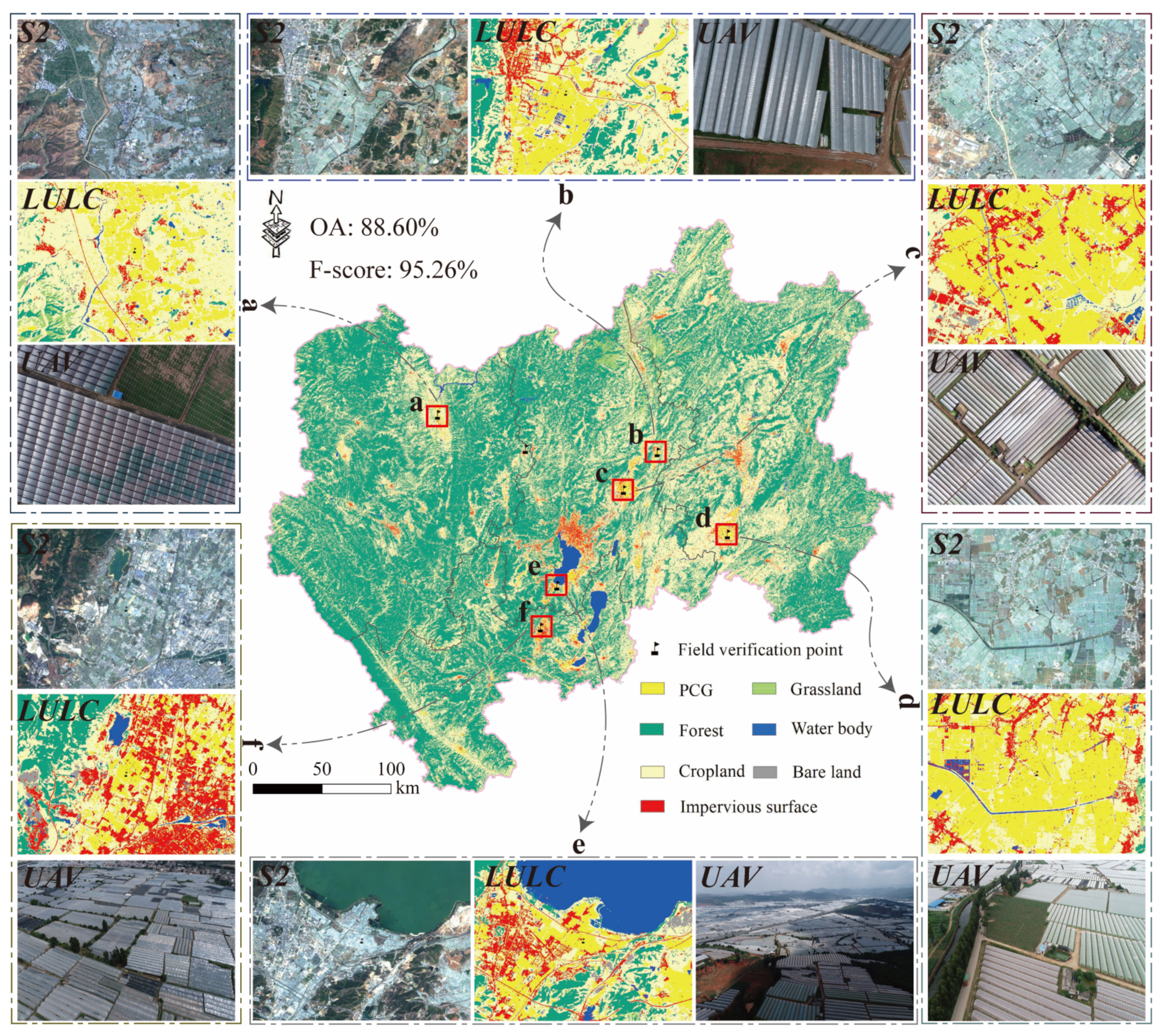

3.3. Accuracy Assessment

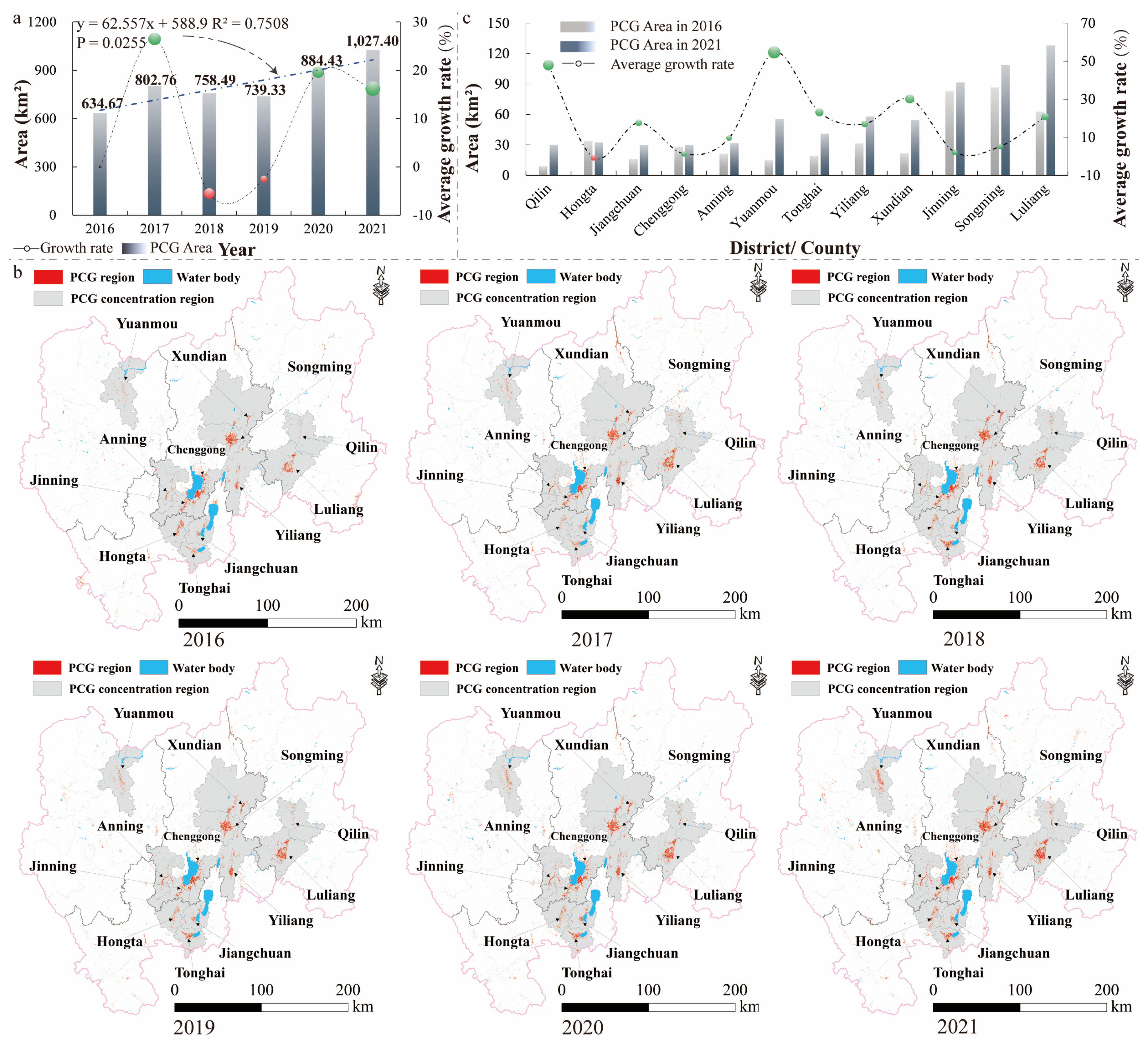

3.4. Spatiotemporal Pattern of PCG in the CYP

4. Discussion

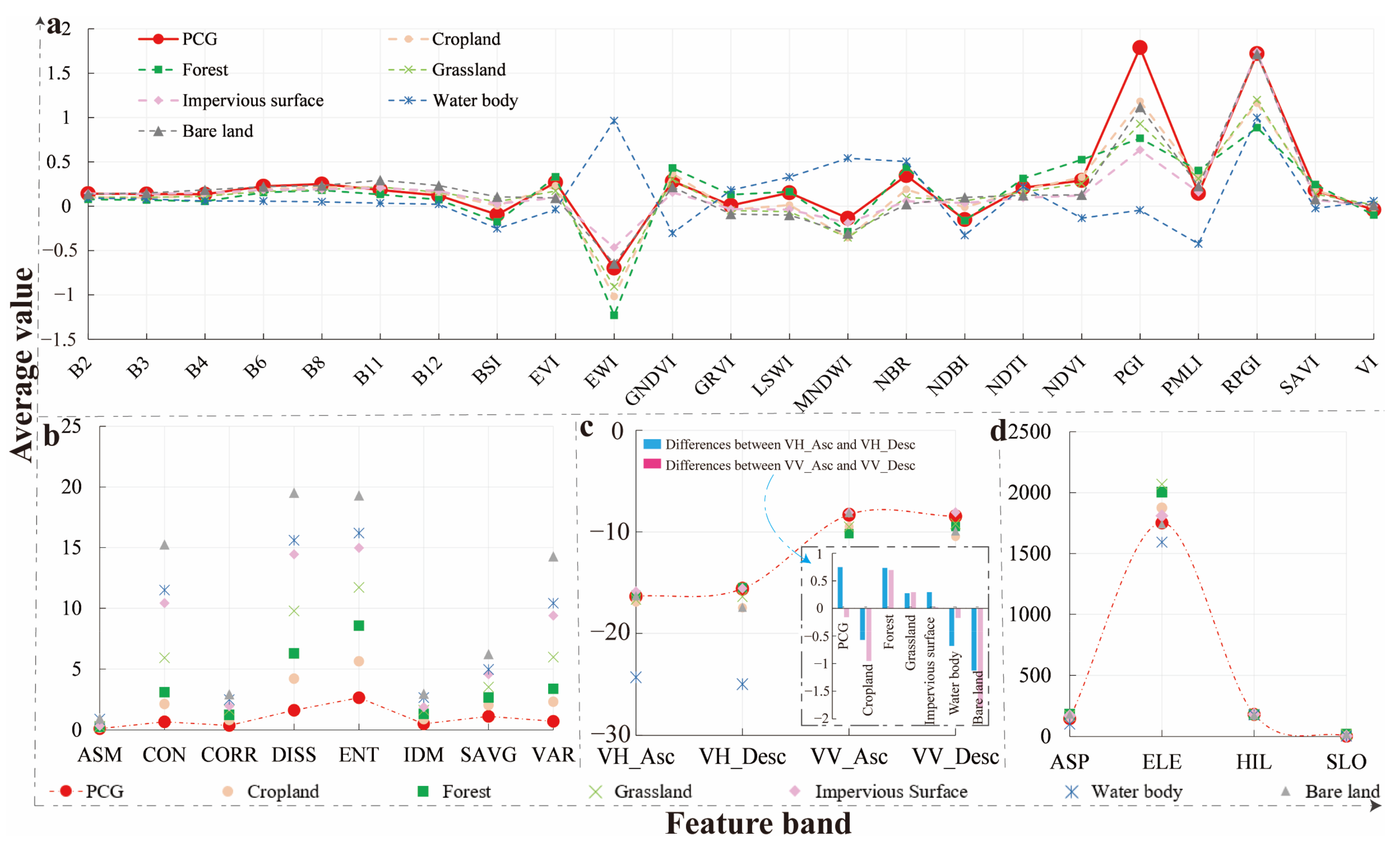

4.1. Influence of Feature Variables on Classification Accuracy

4.2. Feature Optimization Strategy

4.3. Comparative Analysis of Classification Results and Published Products

4.4. Limitations and Future Work

5. Conclusions

- (1) The 6-year average F-score of the RF classifier across all feature scenarios was 3.77 percentage points (93.16% vs. 89.39%) and 9.26 percentage points (93.16% vs. 83.90%) higher than those of SVM and CART, respectively. In addition, the F-scores of all three classifiers tended to increase as more feature types were added, and the S + I + T + B + Tr scenario had the highest average F-score (93.59%). The combination of the RF classifier and the S + I + T + B + Tr scenario yielded the highest F-score for PCGs (95.60%).

- (2) After feature optimization, the F-score improved to varying degrees in every year, and the 6-year average F-score increased by 1.03 percentage points (96.63% vs. 95.60%), showing that feature optimization had a positive effect on PCG recognition. In addition, the five feature factors with the highest 6-year average importance were ELE, NDTI, VH_Desc, VH_Asc, and SAVG. These span four of the five feature types (terrain, index, backscatter, and texture), indicating that a reasonable combination of multiple feature types can effectively improve the recognition accuracy of PCGs.

- (3) The average F-score of PCGs extracted by combining the RF algorithm with the optimal feature subset exceeded 95.00%, and the results were confirmed by visual inspection against satellite and UAV images, indicating that the PCG spatiotemporal maps are reliable. From 2016 to 2021, PCGs in the CYP were concentrated mainly in the central region, and the PCG area increased steadily, mainly by spreading outward from the croplands in the PCG-concentrated region.
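The accuracy comparisons above are absolute differences between percentages, that is, percentage points rather than relative gains. The short sketch below makes that arithmetic explicit. It assumes that "F-score" means the balanced F1 score for the PCG class, the harmonic mean of precision and recall; the helper names are hypothetical. The input values are the 6-year averages reported in Conclusion (1).

```python
def f1_from_counts(tp: int, fp: int, fn: int) -> float:
    """Balanced F-score (F1) for one class from confusion-matrix counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def point_difference(a: float, b: float) -> float:
    """Absolute difference between two percentages, in percentage points."""
    return round(a - b, 2)


def relative_change(a: float, b: float) -> float:
    """Relative change of a with respect to b, in percent."""
    return round(100 * (a - b) / b, 2)


if __name__ == "__main__":
    # 6-year average F-scores (%) reported in Conclusion (1).
    rf, svm, cart = 93.16, 89.39, 83.90
    print(point_difference(rf, svm), point_difference(rf, cart))  # 3.77 9.26
    print(relative_change(rf, svm))  # roughly 4.2 % relative, not 3.77
```

Reporting percentage points keeps the numbers directly comparable with the F-scores themselves. A relative change of 4.2% would describe the same RF-versus-SVM gap but could be misread as an accuracy level.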

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Lin, J.; Jin, X.; Ren, J.; Liu, J.; Liang, X.; Zhou, Y. Rapid Mapping of Large-Scale Greenhouse Based on Integrated Learning Algorithm and Google Earth Engine. Remote Sens. 2021, 13, 1245.
2. Briassoulis, D.; Babou, E.; Hiskakis, M.; Scarascia, G.; Picuno, P.; Guarde, D.; Dejean, C. Review, Mapping and Analysis of the Agricultural Plastic Waste Generation and Consolidation in Europe. Waste Manag. Res. 2013, 31, 1262–1278.
3. Novelli, A.; Aguilar, M.A.; Nemmaoui, A.; Aguilar, F.J.; Tarantino, E. Performance Evaluation of Object Based Greenhouse Detection from Sentinel-2 MSI and Landsat 8 OLI Data: A Case Study from Almería (Spain). Int. J. Appl. Earth Obs. Geoinf. 2016, 52, 403–411.
4. Briassoulis, D.; Dougka, G.; Dimakogianni, D.; Vayas, I. Analysis of the Collapse of a Greenhouse with Vaulted Roof. Biosyst. Eng. 2016, 151, 495–509.
5. Hasituya, H.; Chen, Z.; Wang, L.; Wu, W.; Jiang, Z.; Li, H. Monitoring Plastic-Mulched Farmland by Landsat-8 OLI Imagery Using Spectral and Textural Features. Remote Sens. 2016, 8, 353.
6. Aguilar, M.Á.; Jiménez-Lao, R.; Nemmaoui, A.; Aguilar, F.J.; Koc-San, D.; Tarantino, E.; Chourak, M. Evaluation of the Consistency of Simultaneously Acquired Sentinel-2 and Landsat 8 Imagery on Plastic Covered Greenhouses. Remote Sens. 2020, 12, 2015.
7. Katan, J. Solar Heating (Solarization) of Soil for Control of Soilborne Pests. Annu. Rev. Phytopathol. 1981, 19, 211–236.
8. Picuno, P.; Sica, C.; Laviano, R.; Dimitrijević, A.; Scarascia-Mugnozza, G. Experimental Tests and Technical Characteristics of Regenerated Films from Agricultural Plastics. Polym. Degrad. Stab. 2012, 97, 1654–1661.
9. Yang, D.; Chen, J.; Zhou, Y.; Chen, X.; Chen, X.; Cao, X. Mapping Plastic Greenhouse with Medium Spatial Resolution Satellite Data: Development of a New Spectral Index. ISPRS J. Photogramm. Remote Sens. 2017, 128, 47–60.
10. Ou, C.; Yang, J.; Du, Z.; Liu, Y.; Feng, Q.; Zhu, D. Long-Term Mapping of a Greenhouse in a Typical Protected Agricultural Region Using Landsat Imagery and the Google Earth Engine. Remote Sens. 2020, 12, 55.
11. Aguilar, M.A.; Bianconi, F.; Aguilar, F.J.; Fernández, I. Object-Based Greenhouse Classification from GeoEye-1 and WorldView-2 Stereo Imagery. Remote Sens. 2014, 6, 3554–3582.
12. Wu, C.F.; Deng, J.S.; Wang, K.; Ma, L.G.; Tahmassebi, A.R.S. Object-Based Classification Approach for Greenhouse Mapping Using Landsat-8 Imagery. Int. J. Agric. Biol. Eng. 2016, 9, 79–88.
13. Xiong, Y.; Zhang, Q.; Chen, X.; Bao, A.; Zhang, J.; Wang, Y. Large Scale Agricultural Plastic Mulch Detecting and Monitoring with Multi-Source Remote Sensing Data: A Case Study in Xinjiang, China. Remote Sens. 2019, 11, 2088.
14. Zhang, P.; Du, P.; Guo, S.; Zhang, W.; Tang, P.; Chen, J.; Zheng, H. A Novel Index for Robust and Large-Scale Mapping of Plastic Greenhouse from Sentinel-2 Images. Remote Sens. Environ. 2022, 276, 113042.
15. Agüera, F.; Aguilar, F.J.; Aguilar, M.A. Using Texture Analysis to Improve Per-Pixel Classification of Very High Resolution Images for Mapping Plastic Greenhouses. ISPRS J. Photogramm. Remote Sens. 2008, 63, 635–646.
16. Picuno, P.; Tortora, A.; Capobianco, R.L. Analysis of Plasticulture Landscapes in Southern Italy through Remote Sensing and Solid Modelling Techniques. Landsc. Urban Plan. 2011, 100, 45–56.
17. Zhang, Y.; Wang, P.; Wang, L.; Sun, G.; Zhao, J.; Zhang, H.; Du, N. The Influence of Facility Agriculture Production on Phthalate Esters Distribution in Black Soils of Northeast China. Sci. Total Environ. 2015, 506–507, 118–125.
18. Ge, D.; Wang, Z.; Tu, S.; Long, H.; Yan, H.; Sun, D.; Qiao, W. Coupling Analysis of Greenhouse-Led Farmland Transition and Rural Transformation Development in China’s Traditional Farming Area: A Case of Qingzhou City. Land Use Policy 2019, 86, 113–125.
19. He, F.; Ma, C. Development and Strategy of Facility Agriculture in China. Chin. Agric. Sci. Bull. 2007, 23, 462–465.
20. Ou, C.; Yang, J.; Du, Z.; Zhang, T.; Niu, B.; Feng, Q.; Liu, Y.; Zhu, D. Landsat-Derived Annual Maps of Agricultural Greenhouse in Shandong Province, China from 1989 to 2018. Remote Sens. 2021, 13, 4830.
21. Nemmaoui, A.; Aguilar, M.A.; Aguilar, F.J.; Novelli, A.; Lorca, A.G. Greenhouse Crop Identification from Multi-Temporal Multi-Sensor Satellite Imagery Using Object-Based Approach: A Case Study from Almería (Spain). Remote Sens. 2018, 10, 1751.
22. Matton, N.; Canto, G.S.; Waldner, F.; Valero, S.; Morin, D.; Inglada, J.; Arias, M.; Bontemps, S.; Koetz, B.; Defourny, P. An Automated Method for Annual Cropland Mapping along the Season for Various Globally-Distributed Agrosystems Using High Spatial and Temporal Resolution Time Series. Remote Sens. 2015, 7, 13208–13232.
23. Aguilar, M.A.; Vallario, A.; Aguilar, F.J.; Lorca, A.G.; Parente, C. Object-Based Greenhouse Horticultural Crop Identification from Multi-Temporal Satellite Imagery: A Case Study in Almeria, Spain. Remote Sens. 2015, 7, 7378–7401.
24. González-Yebra, Ó.; Aguilar, M.A.; Nemmaoui, A.; Aguilar, F.J. Methodological Proposal to Assess Plastic Greenhouses Land Cover Change from the Combination of Archival Aerial Orthoimages and Landsat Data. Biosyst. Eng. 2018, 175, 36–51.
25. Lu, L.; Di, L.; Ye, Y. A Decision-Tree Classifier for Extracting Transparent Plastic-Mulched Landcover from Landsat-5 TM Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 4548–4558.
26. Novelli, A.; Tarantino, E. Combining Ad Hoc Spectral Indices Based on LANDSAT-8 OLI/TIRS Sensor Data for the Detection of Plastic Cover Vineyard. Remote Sens. Lett. 2015, 6, 933–941.
27. Hasituya; Chen, Z.; Wang, L.; Liu, J. Selecting Appropriate Spatial Scale for Mapping Plastic-Mulched Farmland with Satellite Remote Sensing Imagery. Remote Sens. 2017, 9, 265.
28. Levin, N.; Lugassi, R.; Ramon, U.; Braun, O.; Ben-Dor, E. Remote Sensing as a Tool for Monitoring Plasticulture in Agricultural Landscapes. Int. J. Remote Sens. 2007, 28, 183–202.
29. Carvajal, F.; Agüera, F.; Aguilar, F.J.; Aguilar, M.A. Relationship between Atmospheric Corrections and Training-Site Strategy with Respect to Accuracy of Greenhouse Detection Process from Very High Resolution Imagery. Int. J. Remote Sens. 2011, 31, 2977–2994.
30. Koc-San, D. Evaluation of Different Classification Techniques for the Detection of Glass and Plastic Greenhouses from WorldView-2 Satellite Imagery. J. Appl. Remote Sens. 2013, 7, 073553.
31. Balcik, F.B.; Senel, G.; Goksel, C. Greenhouse Mapping Using Object Based Classification and Sentinel-2 Satellite Imagery. In Proceedings of the 2019 8th International Conference on Agro-Geoinformatics (Agro-Geoinformatics), Istanbul, Turkey, 16–19 July 2019.
32. Jiménez-Lao, R.; Aguilar, F.J.; Nemmaoui, A.; Aguilar, M.A. Remote Sensing of Agricultural Greenhouses and Plastic-Mulched Farmland: An Analysis of Worldwide Research. Remote Sens. 2020, 12, 2649.
33. Lu, L.; Tao, Y.; Di, L. Object-Based Plastic-Mulched Landcover Extraction Using Integrated Sentinel-1 and Sentinel-2 Data. Remote Sens. 2018, 10, 1820.
34. Qi, Z.; Yeh, A.G.O.; Li, X.; Lin, Z. A Novel Algorithm for Land Use and Land Cover Classification Using RADARSAT-2 Polarimetric SAR Data. Remote Sens. Environ. 2012, 118, 21–39.
35. Veloso, A.; Mermoz, S.; Bouvet, A.; Le Toan, T.; Planells, M.; Dejoux, J.F.; Ceschia, E. Understanding the Temporal Behavior of Crops Using Sentinel-1 and Sentinel-2-like Data for Agricultural Applications. Remote Sens. Environ. 2017, 199, 415–426.
36. Ma, L.; Li, M.; Ma, X.; Cheng, L.; Du, P.; Liu, Y. A Review of Supervised Object-Based Land-Cover Image Classification. ISPRS J. Photogramm. Remote Sens. 2017, 130, 277–293.
37. He, Z.; Zhang, M.; Wu, B.; Xing, Q. Extraction of Summer Crop in Jiangsu Based on Google Earth Engine. J. Geo-Inf. Sci. 2019, 21, 752–766.
38. Ma, A.; Chen, D.; Zhong, Y.; Zheng, Z.; Zhang, L. National-Scale Greenhouse Mapping for High Spatial Resolution Remote Sensing Imagery Using a Dense Object Dual-Task Deep Learning Framework: A Case Study of China. ISPRS J. Photogramm. Remote Sens. 2021, 181, 279–294.
39. Aguilar, M.A.; Nemmaoui, A.; Novelli, A.; Aguilar, F.J.; Lorca, A.G. Object-Based Greenhouse Mapping Using Very High Resolution Satellite Data and Landsat 8 Time Series. Remote Sens. 2016, 8, 513.
40. Li, J.; Wang, J.; Zhang, J.; Liu, C.; He, S.; Liu, L. Growing-Season Vegetation Coverage Patterns and Driving Factors in the China-Myanmar Economic Corridor Based on Google Earth Engine and Geographic Detector. Ecol. Indic. 2022, 136, 108620.
41. Gorelick, N.; Hancher, M.; Dixon, M.; Ilyushchenko, S.; Thau, D.; Moore, R. Google Earth Engine: Planetary-Scale Geospatial Analysis for Everyone. Remote Sens. Environ. 2017, 202, 18–27.
42. Shelestov, A.; Lavreniuk, M.; Kussul, N.; Novikov, A.; Skakun, S. Exploring Google Earth Engine Platform for Big Data Processing: Classification of Multi-Temporal Satellite Imagery for Crop Mapping. Front. Earth Sci. 2017, 5, 17.
43. Yu, Y.; Shen, Y.; Wang, J.; Wei, Y.; Liu, Z. Simulation and Mapping of Drought and Soil Erosion in Central Yunnan Province, China. Adv. Sp. Res. 2021, 68, 4556–4572.
44. Nong, L.; Wang, J.; Yu, Y. Research on Ecological Environment Quality in Central Yunnan Based on MRSEI Model. J. Ecol. Rural Environ. 2021, 37, 972–982.
45. Xiao, W.; Xu, S.; He, T. Mapping Paddy Rice with Sentinel-1/2 and Phenology-, Object-Based Algorithm—A Implementation in Hangjiahu Plain in China Using GEE Platform. Remote Sens. 2021, 13, 990.
46. Ibrahim, E.; Gobin, A. Sentinel-2 Recognition of Uncovered and Plastic Covered Agricultural Soil. Remote Sens. 2021, 13, 4195.
47. Liu, C.; Li, W.; Zhu, G.; Zhou, H.; Yan, H.; Xue, P. Land Use/Land Cover Changes and Their Driving Factors in the Northeastern Tibetan Plateau Based on Geographical Detectors and Google Earth Engine: A Case Study in Gannan Prefecture. Remote Sens. 2020, 12, 3139.
48. Song, C.; Woodcock, C.E.; Seto, K.C.; Lenney, M.P.; Macomber, S.A. Classification and Change Detection Using Landsat TM Data. Remote Sens. Environ. 2001, 75, 230–244.
49. Farr, T.G.; Rosen, P.A.; Caro, E.; Crippen, R.; Duren, R. The Shuttle Radar Topography Mission. Rev. Geophys. 2007, 45, 65–77.
50. Chen, Y.; Wang, J. Ecological Security Early-Warning in Central Yunnan Province, China, Based on the Gray Model. Ecol. Indic. 2020, 111, 106000.
51. Fernández-Buces, N.; Siebe, C.; Cram, S.; Palacio, J.L. Mapping Soil Salinity Using a Combined Spectral Response Index for Bare Soil and Vegetation: A Case Study in the Former Lake Texcoco, Mexico. J. Arid Environ. 2006, 65, 644–667.
52. Tassi, A.; Vizzari, M. Object-Oriented LULC Classification in Google Earth Engine Combining SNIC, GLCM, and Machine Learning Algorithms. Remote Sens. 2020, 12, 3776.
53. Chen, X.; Yang, K.; Wang, J. Extraction of Impervious Surface in Mountainous City Combined with Sentinel Images and Feature Optimization. Softw. Guid. 2022, 21, 214–219.
54. Zhang, H.; Lin, H.; Li, Y.; Zhang, Y.; Fang, C. Mapping Urban Impervious Surface with Dual-Polarimetric SAR Data: An Improved Method. Landsc. Urban Plan. 2016, 151, 55–63.
55. Roy, P.S.; Sharma, K.P.; Jain, A. Stratification of Density in Dry Deciduous Forest Using Satellite Remote Sensing Digital Data—An Approach Based on Spectral Indices. J. Biosci. 1996, 21, 723–734.
56. He, Y.; Zhang, B.; Ma, C. The Impact of Dynamic Change of Cropland on Grain Production in Jilin. J. Geogr. Sci. 2004, 14, 56–62.
57. Jiang, Z.; Huete, A.R.; Didan, K.; Miura, T. Development of a Two-Band Enhanced Vegetation Index without a Blue Band. Remote Sens. Environ. 2008, 112, 3833–3845.
58. Wang, Z.; Zhang, Q.; Qian, J.; Xiao, X. Greenhouse Extraction Based on the Enhanced Water Index—A Case Study in Jiangmen of Guangdong. J. Integr. Technol. 2017, 6, 11–21.
59. Rouse, J.; Haas, R.; Schell, J.; Deering, D. Monitoring Vegetation Systems in the Great Plains with ERTS. NASA Spec. Publ. 1974, 351, 309.
60. Phadikar, S.; Goswami, J. Vegetation Indices Based Segmentation for Automatic Classification of Brown Spot and Blast Diseases of Rice. In Proceedings of the 2016 3rd International Conference on Recent Advances in Information Technology (RAIT), Dhanbad, India, 3–5 March 2016; pp. 284–289.
61. Khadanga, G.; Jain, K. Tree Census Using Circular Hough Transform and GRVI. Procedia Comput. Sci. 2020, 171, 389–394.
62. Chandrasekar, K.; Sesha Sai, M.V.R.; Roy, P.S.; Dwevedi, R.S. Land Surface Water Index (LSWI) Response to Rainfall and NDVI Using the MODIS Vegetation Index Product. Int. J. Remote Sens. 2010, 31, 3987–4005.
63. Xu, H. A Study on Information Extraction of Water Body with the Modified Normalized Difference Water Index (MNDWI). J. Remote Sens. 2005, 9, 589–595.
64. Picotte, J.J.; Peterson, B.; Meier, G.; Howard, S.M. 1984–2010 Trends in Fire Burn Severity and Area for the Conterminous US. Int. J. Wildl. Fire 2016, 25, 413–420.
65. Aziz, M.A. Applying the Normalized Difference Built-Up Index to the Fayoum Oasis, Egypt (1984–2013). Remote Sens. GIS Appl. Nat. Resour. Popul. 2014, 2, 53–66.
66. Huete, A.R. A Soil-Adjusted Vegetation Index (SAVI). Remote Sens. Environ. 1988, 25, 295–309.
67. Breiman, L.; Friedman, J.H.; Olshen, R.A.; Stone, C.J. Classification and Regression Trees. Biometrics 1984, 40, 874.
68. Breiman, L.; Last, M.; Rice, J. Random Forests: Finding Quasars. In Statistical Challenges in Astronomy; Springer: New York, NY, USA, 2003; pp. 243–254.
69. Cortes, C.; Vapnik, V. Support-Vector Networks. Mach. Learn. 1995, 20, 273–297.
70. Guyon, I.; Weston, J.; Barnhill, S.; Vapnik, V. Gene Selection for Cancer Classification Using Support Vector Machines. Mach. Learn. 2002, 46, 389–422.
71. Darst, B.F.; Malecki, K.C.; Engelman, C.D. Using Recursive Feature Elimination in Random Forest to Account for Correlated Variables in High Dimensional Data. BMC Genet. 2018, 19, 65.
72. Grabska, E.; Frantz, D.; Ostapowicz, K. Evaluation of Machine Learning Algorithms for Forest Stand Species Mapping Using Sentinel-2 Imagery and Environmental Data in the Polish Carpathians. Remote Sens. Environ. 2020, 251, 112103.
73. Gregorutti, B.; Michel, B.; Saint-Pierre, P. Correlation and Variable Importance in Random Forests. Stat. Comput. 2017, 27, 659–678.
74. Chen, J.; Shen, R.; Li, B.; Ti, C.; Yan, X.; Zhou, M.; Wang, S. The Development of Plastic Greenhouse Index Based on Logistic Regression Analysis. Remote Sens. Nat. Resour. 2019, 31, 43–50.
75. Zanaga, D.; Van De Kerchove, R.; De Keersmaecker, W.; Souverijns, N.; Brockmann, C.; Quast, R.; Wevers, J.; Grosu, A.; Paccini, A.; Vergnaud, S. ESA WorldCover 10 m 2020 V100. 2021. Available online: https://zenodo.org/record/5571936 (accessed on 28 September 2021).
76. Li, J.; Wang, J.; Zhang, J.; Zhang, J.; Kong, H. Dynamic Changes of Vegetation Coverage in China-Myanmar Economic Corridor over the Past 20 Years. Int. J. Appl. Earth Obs. Geoinf. 2021, 102, 102378.
77. Bontemps, S.; Boettcher, M.; Brockmann, C.; Kirches, G.; Lamarche, C.; Radoux, J.; Santoro, M.; Van Bogaert, E.; Wegmüller, U.; Herold, M.; et al. Multi-Year Global Land Cover Mapping at 300 m and Characterization for Climate Modelling: Achievements of the Land Cover Component of the ESA Climate Change Initiative. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci.-ISPRS Arch. 2015, 40, 323–328.
78. Friedl, M.A.; Sulla-Menashe, D.; Tan, B.; Schneider, A.; Ramankutty, N.; Sibley, A.; Huang, X. MODIS Collection 5 Global Land Cover: Algorithm Refinements and Characterization of New Datasets. Remote Sens. Environ. 2010, 114, 168–182.
79. Feng, Q.; Niu, B.; Zhu, D.; Yao, X.; Liu, Y. A Dataset of Remote Sensing-Based Classification for Agricultural Plastic Greenhouses in China in 2019. China Sci. Data 2021, 6, 153–170.

| Parameter | Sentinel-1 (A and B) | Sentinel-2 (A and B) | | |
|---|---|---|---|---|
| Mode/Format | GRD, IW mode | Level-1C | | |
| Frequency/Wavelength | 5.405 GHz/5.5 cm | – | | |
| Orbit Direction | Ascending/Descending | – | | |
| Temporal Resolution (d) | 6 | 5 | | |
| Spatial Resolution (m) | 10 | 10 | 20 | 60 |
| Polarization/Band | VV | B2 (Blue) | B5 (Vegetation Red Edge-1) | B1 (Coastal aerosol) |
| | HH | B3 (Green) | B6 (Vegetation Red Edge-2) | B9 (Water vapor) |
| | VV + VH | B4 (Red) | B7 (Vegetation Red Edge-3) | B10 (Shortwave Infrared-Cirrus) |
| | HH + HV | B8 (NIR) | B8a (Vegetation Red Edge-4) | – |
| | – | – | B11 (Shortwave Infrared-1) | – |
| | – | – | B12 (Shortwave Infrared-2) | – |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, J.; Wang, H.; Wang, J.; Zhang, J.; Lan, Y.; Deng, Y. Combining Multi-Source Data and Feature Optimization for Plastic-Covered Greenhouse Extraction and Mapping Using the Google Earth Engine: A Case in Central Yunnan Province, China. Remote Sens. 2023, 15, 3287. https://doi.org/10.3390/rs15133287