Inversion of Leaf Area Index in Citrus Trees Based on Multi-Modal Data Fusion from UAV Platform

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area Overview

2.2. Data Acquisition

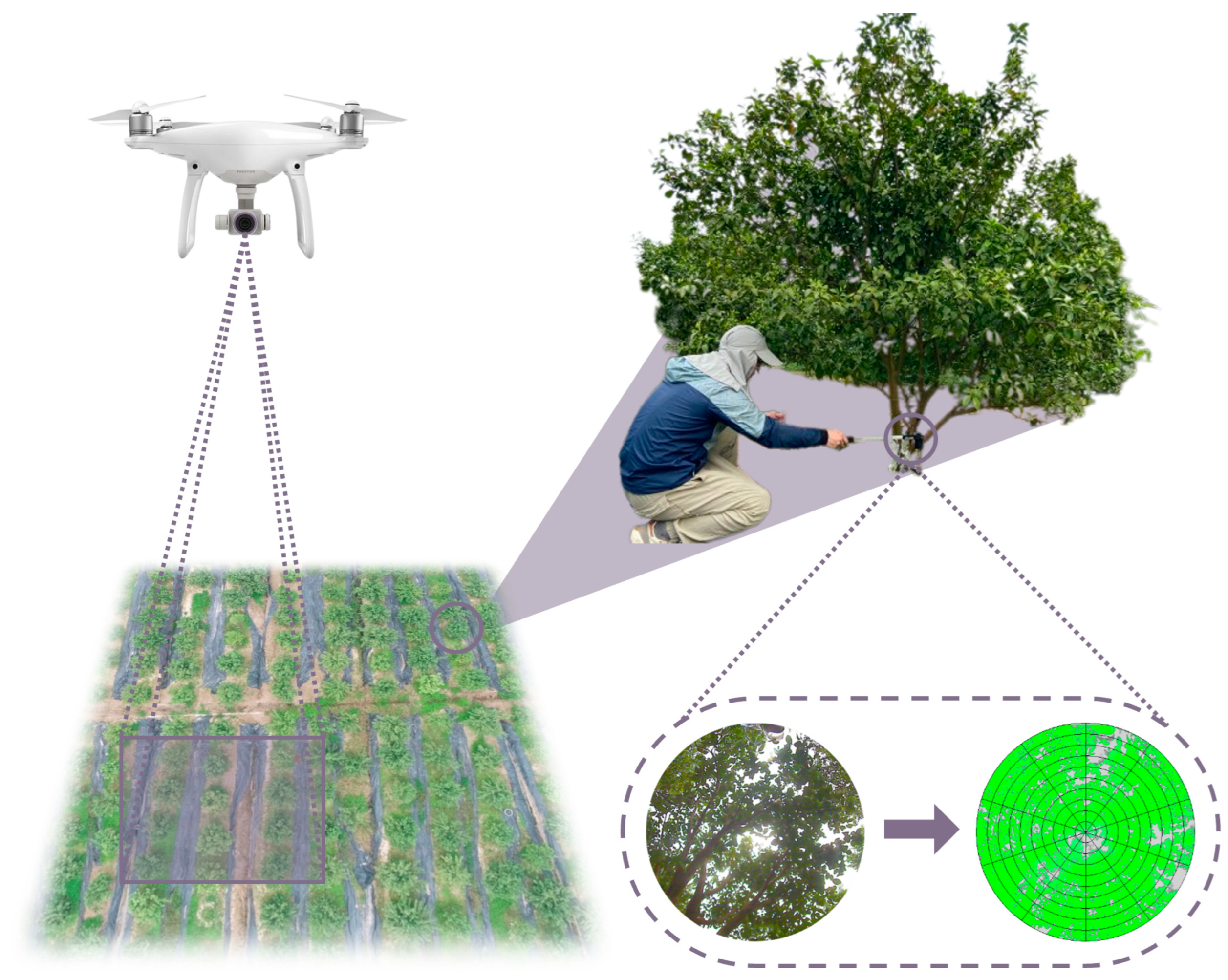

2.2.1. Measuring LAI of Citrus Trees

2.2.2. UAV RGB Image Acquisition

2.3. Data Pre-Processing

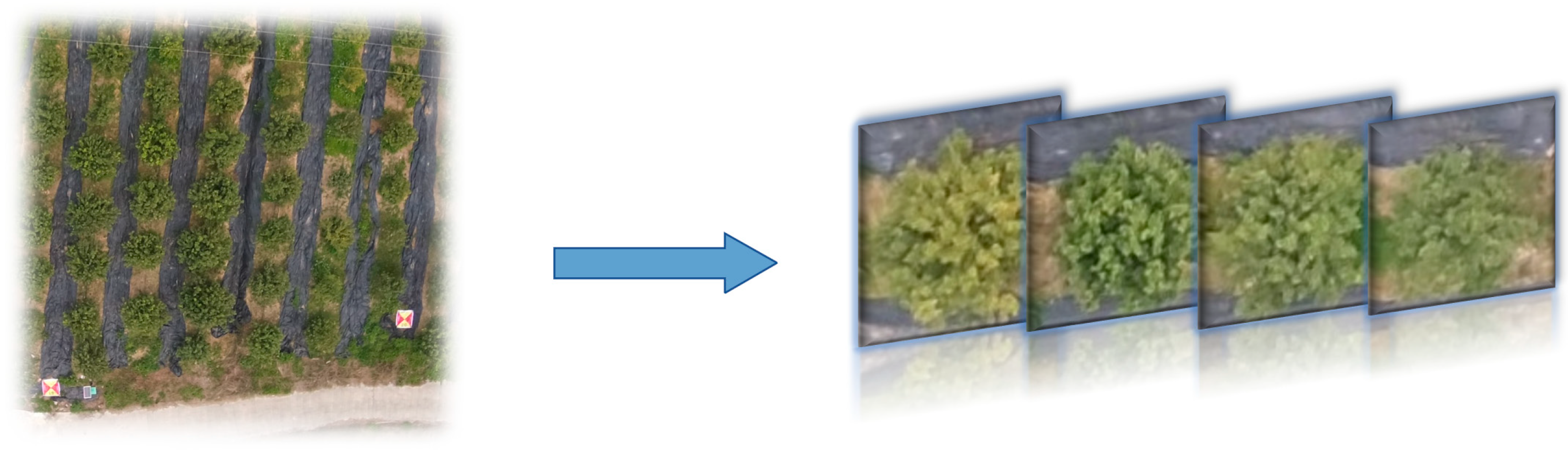

2.3.1. Acquisition of RGB Images of Citrus Trees

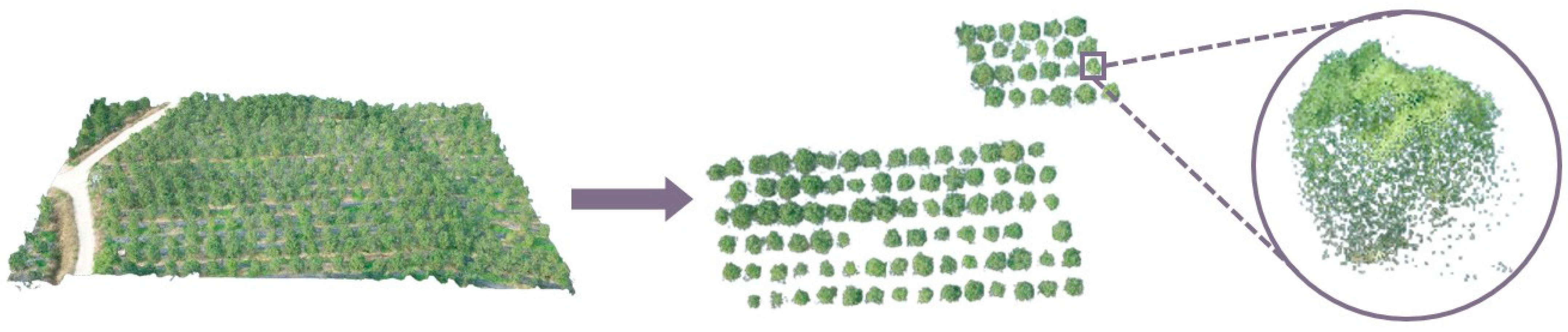

2.3.2. Acquisition of Point Cloud Data of Citrus Trees

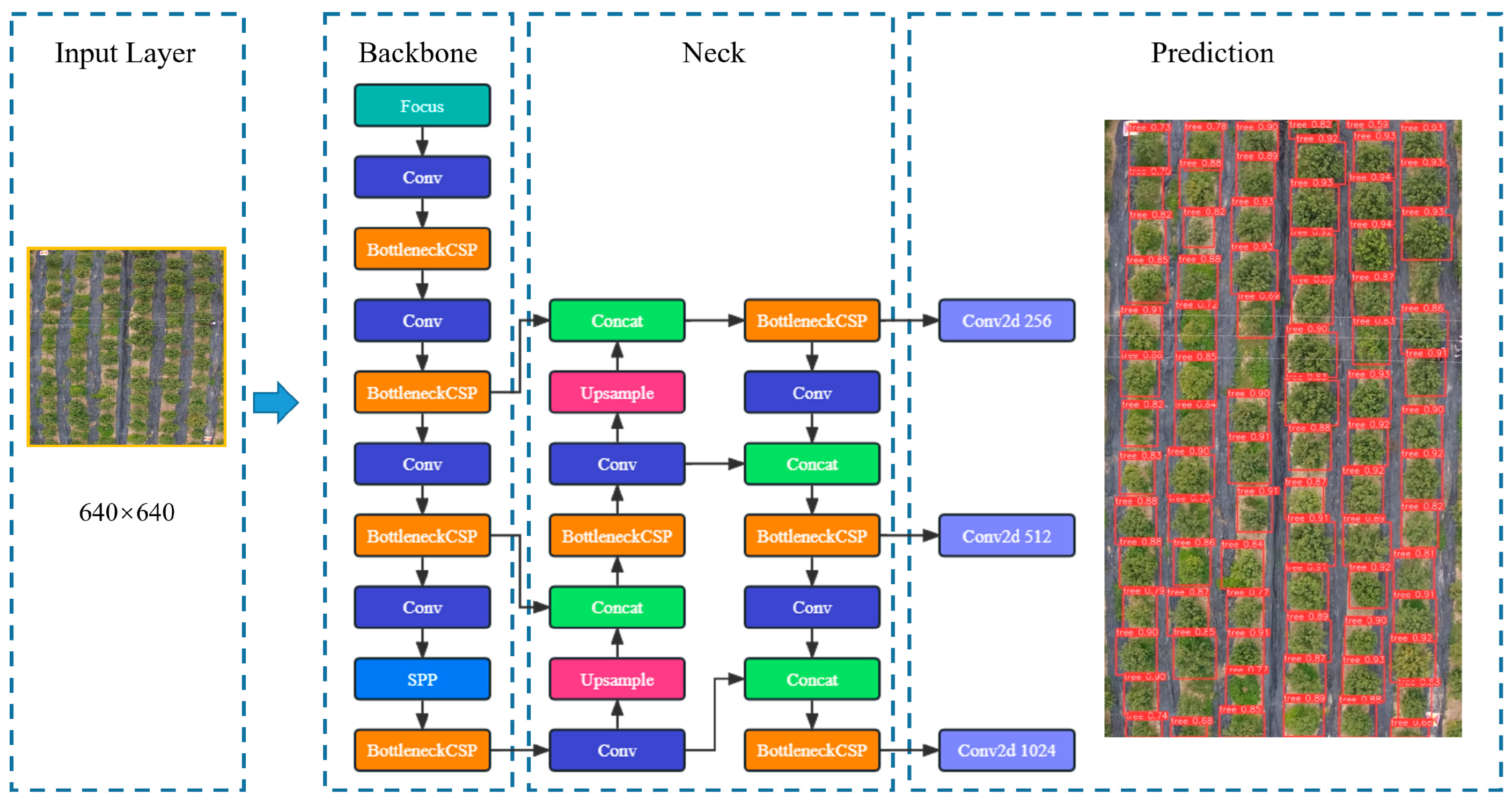

2.3.3. RGB Data Model

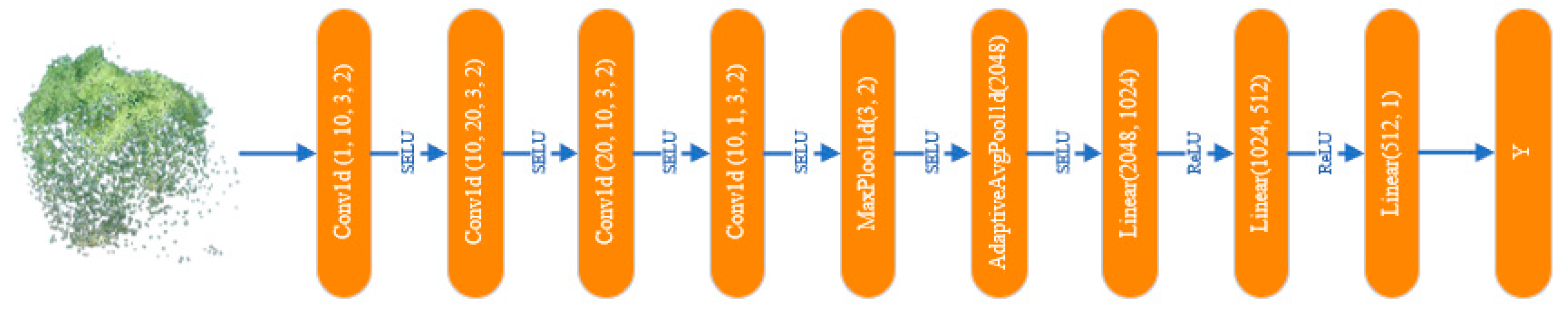

2.3.4. Point Cloud Data Model

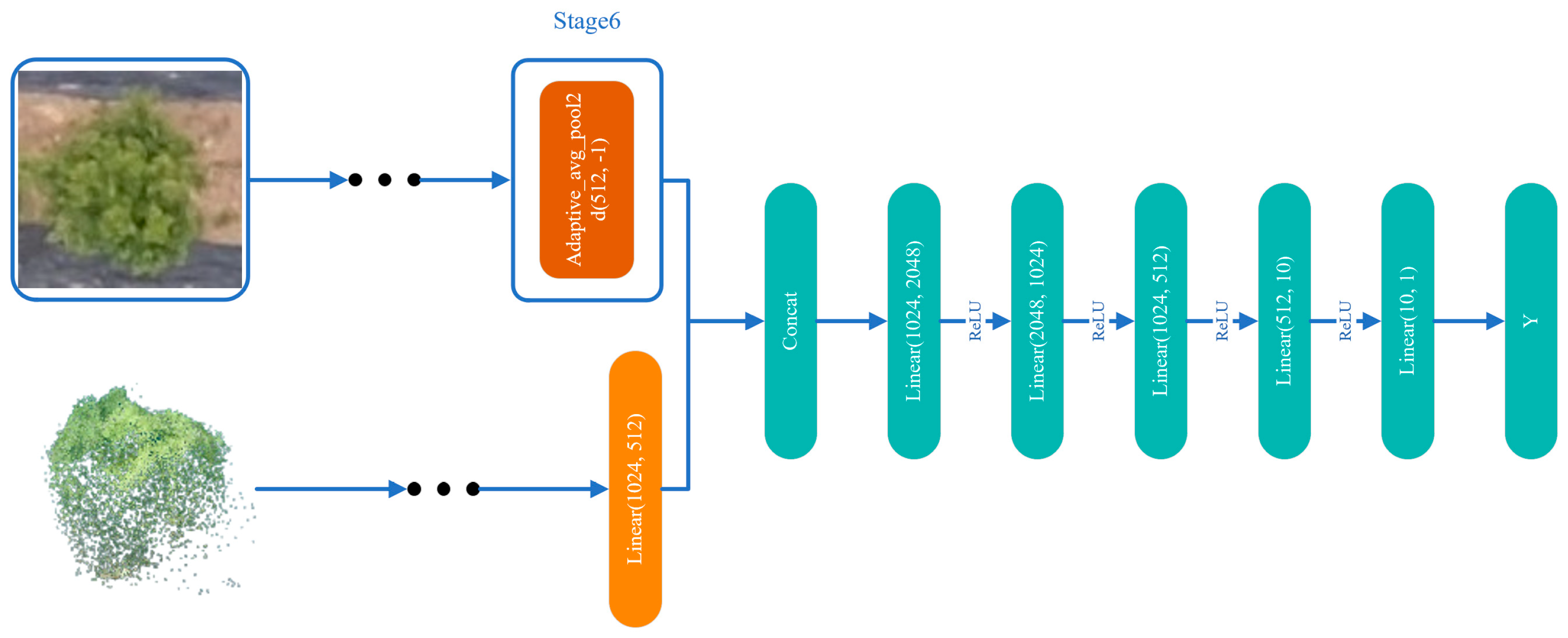

2.3.5. Multi-Modal Data Model

2.4. Model Evaluation

3. Results

3.1. Single-Modal Data for LAI Estimation of Citrus Trees

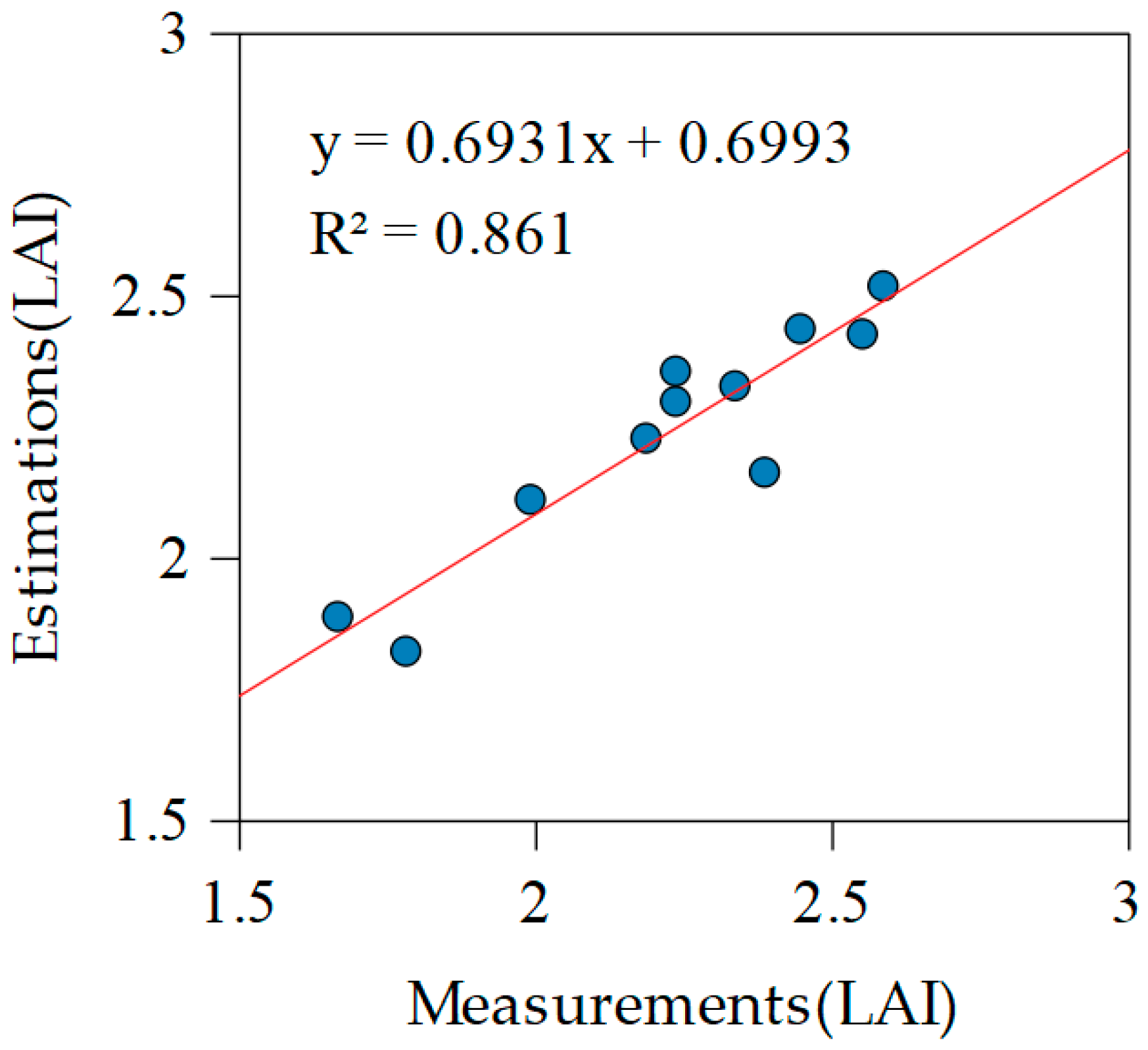

3.1.1. RGB Data for LAI Estimation of Citrus Trees

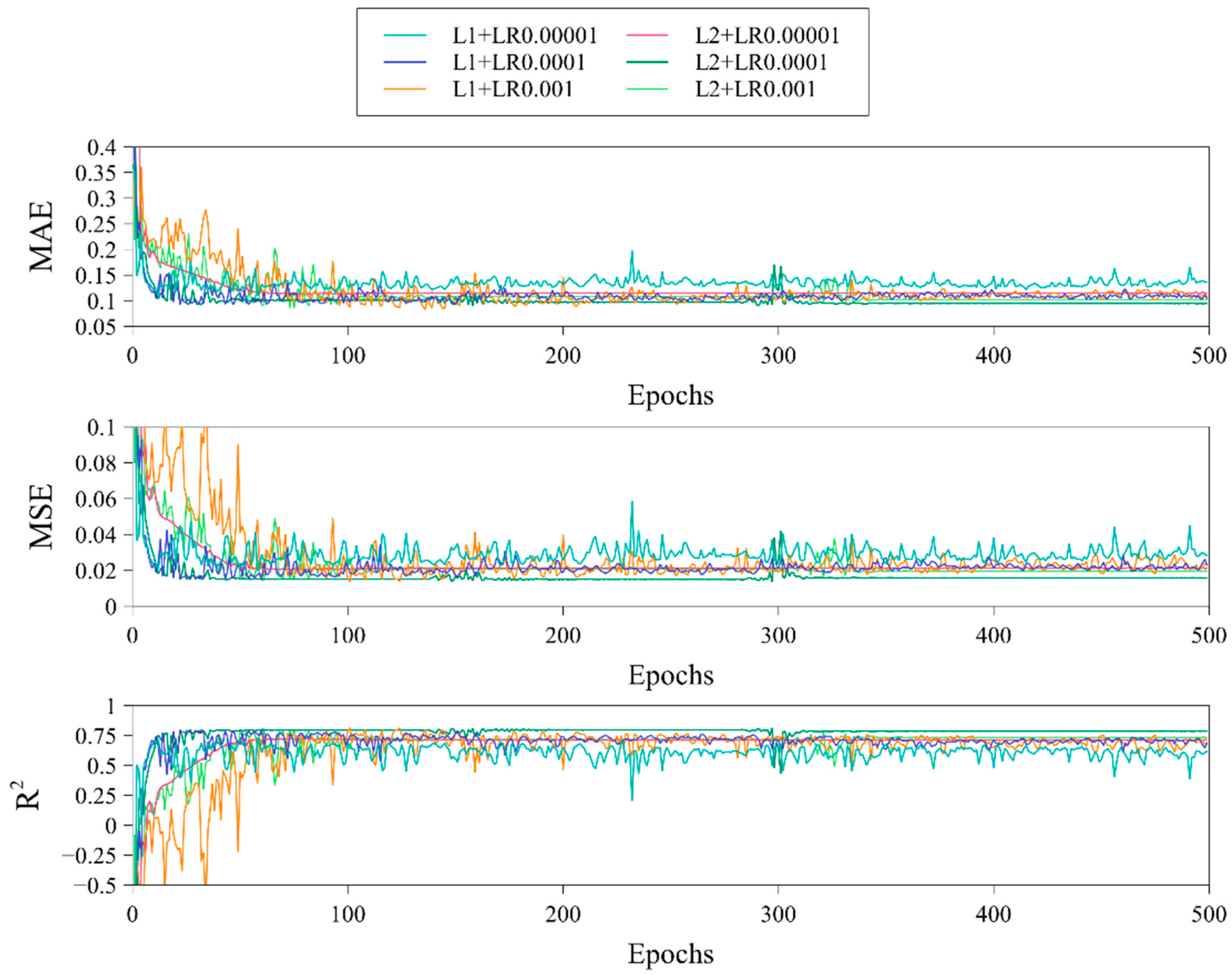

3.1.2. Point Cloud Data for LAI Estimation of Citrus Trees

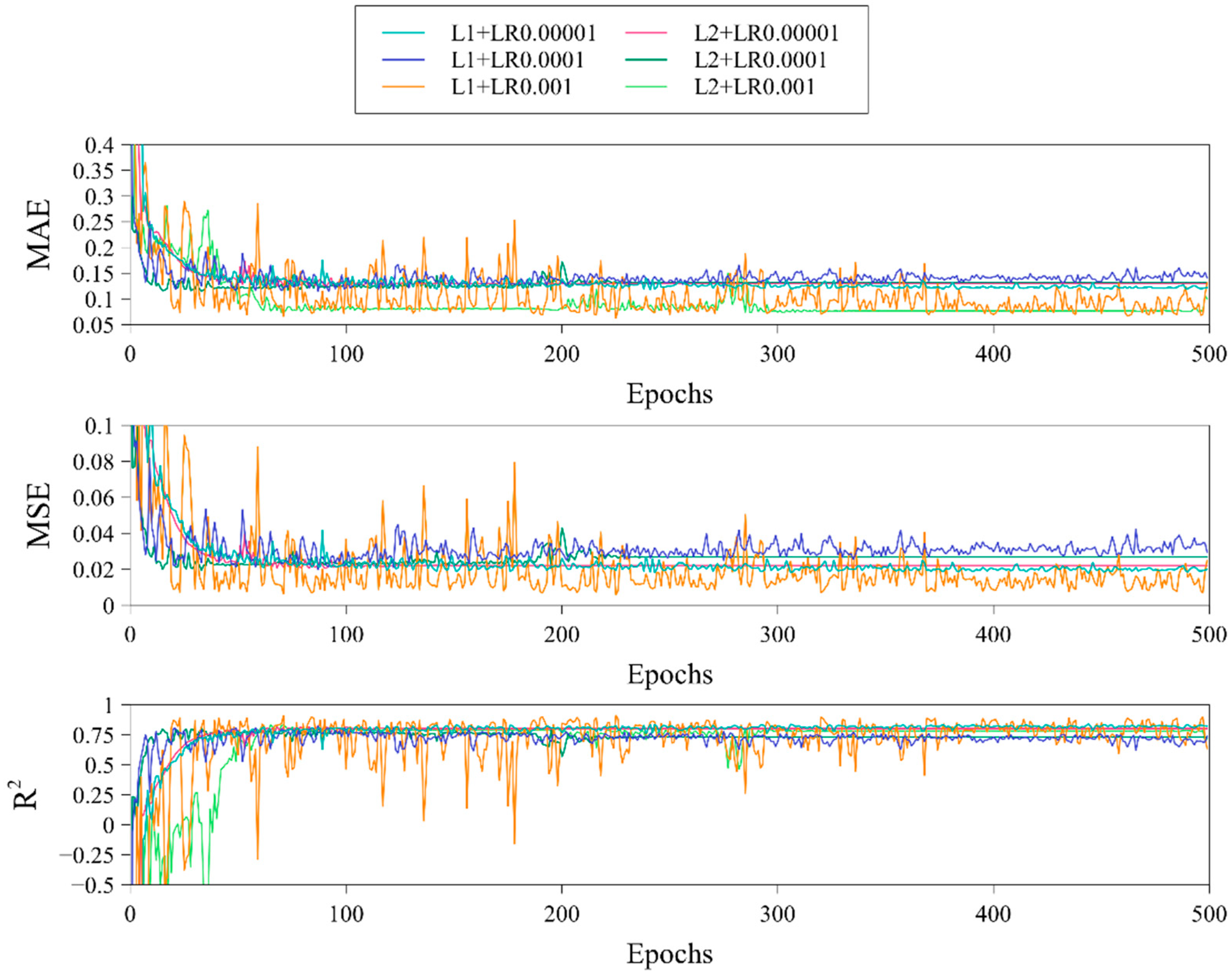

3.2. Multi-Modal Data for LAI Estimation of Citrus Trees

3.3. Exploding and Vanishing Gradient Problem with Multi-Modal Data

4. Discussion

4.1. Feasibility of Estimating LAI from RGB Data and Point Cloud Data

4.2. Setting of Loss Function and Learning Rate Hyperparameters

4.3. The Role of Multi-Modal Data in Estimation Result Improvement

4.4. Estimation Model Optimization for Multi-Modal Data

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Parameter | Value/Method |
|---|---|
| Flight altitude | 50 m |
| Flight speed | 3 m/s |
| Shooting mode | Timed shooting |
| Gimbal pitch | −90°, −60°, −45° |
| Side overlap rate | 80% |
| Forward overlap rate | 80% |

| Point Cloud | X-Axis | Y-Axis | Z-Axis | Red | Green | Blue |
|---|---|---|---|---|---|---|
| 1 | 655,215.95899963 | 2,592,974.80603027 | 6.21799994 | 113 | 138 | 92 |
| 2 | 655,215.96000671 | 2,592,974.75402832 | 6.15500021 | 146 | 178 | 123 |
| 3 | 655,215.96400452 | 2,592,974.74298096 | 6.14599991 | 149 | 178 | 123 |

| Parameter | Value/Method |
|---|---|
| Epochs | 500 |
| Batch Size | 16 |
| Optimizer | Adam |
| Loss Function | L1, L2 |
| Learning Rate | 0.001, 0.0001, 0.00001 |
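
The table above lists two loss functions and three learning rates, and the result tables label their rows in the form "L1 + LR0.001", which suggests that each pairing was trained as a separate experiment. A minimal sketch of how such a grid could be enumerated is given below. The function name `build_experiment_grid` and the dictionary layout are hypothetical and are not taken from the paper.

```python
from itertools import product

# Values copied from the hyperparameter table.
EPOCHS = 500
BATCH_SIZE = 16
OPTIMIZER = "Adam"
LOSS_FUNCTIONS = ["L1", "L2"]
# Kept as strings so the labels match the result tables exactly.
LEARNING_RATES = ["0.001", "0.0001", "0.00001"]


def build_experiment_grid():
    """Return one config per (loss, learning rate) pair (hypothetical helper)."""
    grid = []
    for loss, lr in product(LOSS_FUNCTIONS, LEARNING_RATES):
        grid.append({
            "label": f"{loss} + LR{lr}",
            "loss": loss,
            "learning_rate": float(lr),
            "epochs": EPOCHS,
            "batch_size": BATCH_SIZE,
            "optimizer": OPTIMIZER,
        })
    return grid


if __name__ == "__main__":
    for config in build_experiment_grid():
        print(config["label"], config["learning_rate"])
```

Keeping the learning rates as strings avoids labels such as "LR1e-05", which is how Python would otherwise print the smallest value.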

| Experimental Parameters | Validation MAE | Validation MSE | Validation R² | Test MAE | Test MSE | Test R² |
|---|---|---|---|---|---|---|
| L1 + LR0.001 | 0.087 | 0.013 | 0.814 | 0.149 | 0.037 | 0.535 |
| L1 + LR0.0001 | 0.092 | 0.015 | 0.795 | 0.15 | 0.034 | 0.575 |
| L1 + LR0.00001 | 0.11 | 0.019 | 0.731 | 0.129 | 0.028 | 0.647 |
| L2 + LR0.001 | 0.09 | 0.014 | 0.802 | 0.174 | 0.047 | 0.407 |
| L2 + LR0.0001 | 0.092 | 0.013 | 0.813 | 0.149 | 0.035 | 0.561 |
| L2 + LR0.00001 | 0.112 | 0.02 | 0.727 | 0.159 | 0.04 | 0.494 |
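
The result tables report MAE, MSE and R² on the validation and test sets. As a reminder of what these columns measure, the sketch below computes the three metrics using their common textbook definitions; the exact formulas used in the paper are not shown here, so this is an assumption. The LAI values in the example are hypothetical and are not measurements from the study.

```python
def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mse(y_true, y_pred):
    """Mean squared error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Hypothetical measured and predicted LAI values, for illustration only.
measured = [1.0, 2.0, 3.0, 4.0]
predicted = [1.5, 2.0, 2.5, 4.0]

if __name__ == "__main__":
    print("MAE:", mae(measured, predicted))
    print("MSE:", mse(measured, predicted))
    print("R2:", r2(measured, predicted))
```

Lower MAE and MSE and a higher R² indicate a closer fit, which is how the rows of the result tables can be compared.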

| Experimental Parameters | Validation MAE | Validation MSE | Validation R² | Test MAE | Test MSE | Test R² |
|---|---|---|---|---|---|---|
| L1 + LR0.001 | 0.079 | 0.01 | 0.864 | 0.123 | 0.021 | 0.74 |
| L1 + LR0.0001 | 0.096 | 0.014 | 0.797 | 0.11 | 0.017 | 0.783 |
| L1 + LR0.00001 | 0.085 | 0.01 | 0.863 | 0.078 | 0.014 | 0.815 |
| L2 + LR0.001 | 0.133 | 0.026 | 0.644 | 0.194 | 0.058 | 0.279 |
| L2 + LR0.0001 | 0.082 | 0.01 | 0.86 | 0.096 | 0.016 | 0.796 |
| L2 + LR0.00001 | 0.093 | 0.015 | 0.791 | 0.126 | 0.026 | 0.671 |

| Experimental Parameters | Validation MAE | Validation MSE | Validation R² | Test MAE | Test MSE | Test R² |
|---|---|---|---|---|---|---|
| L1 + LR0.001 | 0.062 | 0.005 | 0.914 | 0.078 | 0.008 | 0.861 |
| L1 + LR0.0001 | 0.114 | 0.019 | 0.825 | 0.08 | 0.009 | 0.829 |
| L1 + LR0.00001 | 0.117 | 0.017 | 0.841 | 0.066 | 0.010 | 0.805 |
| L2 + LR0.001 | 0.076 | 0.009 | 0.834 | 0.079 | 0.009 | 0.849 |
| L2 + LR0.0001 | 0.115 | 0.019 | 0.804 | 0.101 | 0.017 | 0.813 |
| L2 + LR0.00001 | 0.124 | 0.02 | 0.816 | 0.081 | 0.012 | 0.765 |

| Method | Point Cloud | X-Axis | Y-Axis | Z-Axis | Red | Green | Blue |
|---|---|---|---|---|---|---|---|
| Original Data | 1 | 655,215.95899963 | 2,592,974.80603027 | 6.21799994 | 113 | 138 | 92 |
| Original Data | 2 | 655,215.96000671 | 2,592,974.75402832 | 6.15500021 | 146 | 178 | 123 |
| Original Data | 3 | 655,215.96400452 | 2,592,974.74298096 | 6.14599991 | 149 | 178 | 123 |
| Min-Max Normalization | 1 | 0.010779881646158174 | 0.8936204728670418 | 0.049631731939932866 | 0.2924528301886793 | 0.3368983957219251 | 0.2431192660550459 |
| Min-Max Normalization | 2 | 0.00040218885987997055 | 0.8812293692026287 | 0.018224273530279778 | 0.48584905660377353 | 0.588235294117647 | 0.408256880733945 |
| Min-Max Normalization | 3 | 0.0019987597479484975 | 0.8763324494939297 | 0.014734433142619796 | 0.5 | 0.588235294117647 | 0.408256880733945 |
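
The table above contrasts raw point cloud attributes with their min-max normalized counterparts. A minimal sketch of per-column min-max scaling, x' = (x − min) / (max − min), follows. It assumes that each of the six columns is scaled independently. The column minima and maxima behind the tabulated values are not given here, so running the sketch on only the three sample rows is not expected to reproduce them. The function name `min_max_normalize` is hypothetical.

```python
def min_max_normalize(points):
    """Scale each column of a list of equal-length rows to the range [0, 1]."""
    columns = list(zip(*points))
    mins = [min(column) for column in columns]
    maxs = [max(column) for column in columns]
    normalized = []
    for row in points:
        normalized.append([
            # A constant column has no spread, so map it to 0.0.
            (value - lo) / (hi - lo) if hi > lo else 0.0
            for value, lo, hi in zip(row, mins, maxs)
        ])
    return normalized


# The three sample points from the table: x, y, z, red, green, blue.
SAMPLE_POINTS = [
    [655215.95899963, 2592974.80603027, 6.21799994, 113, 138, 92],
    [655215.96000671, 2592974.75402832, 6.15500021, 146, 178, 123],
    [655215.96400452, 2592974.74298096, 6.14599991, 149, 178, 123],
]

normalized_sample = min_max_normalize(SAMPLE_POINTS)

if __name__ == "__main__":
    for row in normalized_sample:
        print(row)
```

Scaling coordinates on the order of 10⁵ to 10⁶ and colour values in the range 0 to 255 onto a common [0, 1] range keeps any single attribute from dominating the network input purely because of its magnitude.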

| Group | Activation Function | Batch Normalization |
|---|---|---|
| Group A | ReLU | YES |
| Group B | SELU | YES |
| Group C | SELU | NO |
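
The three groups above differ only in the activation function and in whether batch normalization is applied. The sketch below illustrates these two ingredients on a plain list of numbers. The SELU constants are the standard published values. Applying batch normalization before the activation, and the helper names `batch_normalize` and `apply_group`, are assumptions made for illustration and are not taken from the paper.

```python
import math

# Standard SELU constants from the self-normalizing networks formulation.
SELU_ALPHA = 1.6732632423543772
SELU_SCALE = 1.0507009873554805


def relu(x):
    """Rectified linear unit: negative inputs map to zero."""
    return max(0.0, x)


def selu(x):
    """Scaled exponential linear unit."""
    if x > 0:
        return SELU_SCALE * x
    return SELU_SCALE * SELU_ALPHA * (math.exp(x) - 1.0)


def batch_normalize(values, eps=1e-5):
    """Normalize a batch to zero mean and (approximately) unit variance."""
    mean = sum(values) / len(values)
    variance = sum((v - mean) ** 2 for v in values) / len(values)
    return [(v - mean) / math.sqrt(variance + eps) for v in values]


# Group settings copied from the table.
GROUPS = {
    "Group A": {"activation": relu, "batch_norm": True},
    "Group B": {"activation": selu, "batch_norm": True},
    "Group C": {"activation": selu, "batch_norm": False},
}


def apply_group(values, group_name):
    """Apply one group's settings; batch norm first is an assumed ordering."""
    config = GROUPS[group_name]
    if config["batch_norm"]:
        values = batch_normalize(values)
    return [config["activation"](v) for v in values]


if __name__ == "__main__":
    batch = [-2.0, -0.5, 0.0, 1.5]
    for name in GROUPS:
        print(name, apply_group(batch, name))
```

Unlike ReLU, SELU keeps a nonzero output and gradient for negative inputs, which is the property usually cited when it is used without a separate normalization layer, as in Group C.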

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lu, X.; Li, W.; Xiao, J.; Zhu, H.; Yang, D.; Yang, J.; Xu, X.; Lan, Y.; Zhang, Y. Inversion of Leaf Area Index in Citrus Trees Based on Multi-Modal Data Fusion from UAV Platform. Remote Sens. 2023, 15, 3523. https://doi.org/10.3390/rs15143523
