A Hybrid Approach Based on GAN and CNN-LSTM for Aerial Activity Recognition

Abstract

1. Introduction

2. Related Works

2.1. Handcrafted Methods

2.2. Deep Learning Methods

2.3. Data Augmentation

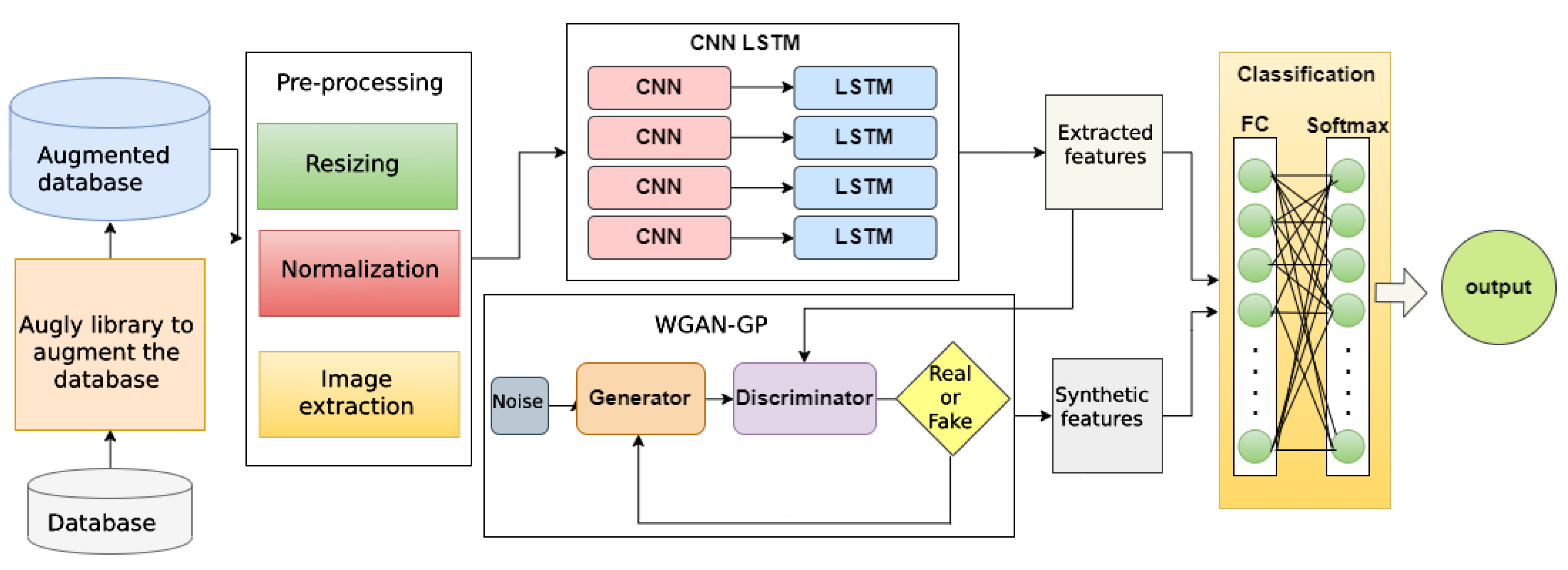

3. Proposed Approach

3.1. Phase 1: Data Augmentation

3.2. Phase 2: Preprocessing

3.3. Phase 3: Feature Extraction

3.3.1. Spatial Feature Extraction

3.3.2. Temporal Feature Extraction

3.4. Phase 4: WGAN for Generating Synthetic Features

3.5. Phase 5: Action Classification

4. Experiments and Results

4.1. Dataset

4.2. Implementation Setup

4.3. Results

5. Conclusions

- We propose a new hybrid GAN-based CNN-LSTM approach for aerial activity recognition that addresses the small size and class imbalance of aerial video datasets.

- We propose a new data augmentation approach that combines a data transformation approach and a GAN-based technique.

- To the best of our knowledge, we are the first to propose a GAN-based technique to synthesize discriminative spatio-temporal features to augment softmax classifier training.

- We show that our proposed aerial action recognition model outperforms state-of-the-art results while reducing computational complexity.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Parameter | Value |
|---|---|
| Input frame size | 224 × 224 × 3 |
| Number of CNN layers | 4 |
| Number of filters per layer | 16, 32, 64, and 128 |
| Kernel size | 3 × 3 |
| Max pooling | Yes |
| Number of LSTM units | 32 |
| Epochs | 100 |
| Batch size | 30 |
| Optimizer | Adam |
| Loss function | Categorical cross-entropy |
| Dropout rate | 0.25 |
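
The convolutional settings listed above (224 × 224 × 3 input, four layers with 16, 32, 64, and 128 filters, 3 × 3 kernels, max pooling) can be traced layer by layer to see how the feature map shrinks and how many weights each layer holds. The sketch below is only an illustration: the `same` padding, stride of 1, 2 × 2 pooling after every convolution, and one bias per filter are assumptions that the table does not state, and `conv_stack_summary` is a hypothetical helper name.

```python
# Sketch: trace feature-map shapes and parameter counts for a 4-layer CNN.
# Assumptions (not given in the table): 'same' padding, stride 1,
# 2x2 max pooling after every convolution, one bias per filter.

def conv_stack_summary(input_hw=224, in_channels=3,
                       filters=(16, 32, 64, 128), kernel=3, pool=2):
    """Return one (height, width, channels, params) tuple per conv block."""
    summary = []
    size, channels = input_hw, in_channels
    for out_channels in filters:
        # Weights: kernel * kernel * in_channels per filter, plus one bias each.
        params = (kernel * kernel * channels + 1) * out_channels
        # A 'same' convolution keeps the spatial size; pooling divides it.
        size //= pool
        summary.append((size, size, out_channels, params))
        channels = out_channels
    return summary


summary = conv_stack_summary()
total_params = sum(row[3] for row in summary)

for height, width, channels, params in summary:
    print(f"{height}x{width}x{channels}  params={params}")
print("total convolutional params:", total_params)
```

Under these assumptions the last block yields a 14 × 14 × 128 feature map, which would then be flattened or pooled before reaching the 32-unit LSTM.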

| Parameter | Value |
|---|---|
| Noise vector dimension z | 100 |
| Number of layers | 4 |
| Activation functions | LeakyReLU; output layer: hyperbolic tangent |
| Number of neurons in each layer | (128, 256, 512, 32) |

| Parameter | Value |
|---|---|
| Number of layers | 4 |
| Activation functions | LeakyReLU; output layer: linear |
| Number of neurons in each layer | (512, 256, 128, 1) |
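
The two four-layer networks tabulated above fit together dimensionally: a 100-dimensional noise vector passes through layers of 128, 256, 512, and 32 neurons with a tanh output, and a network of 512, 256, 128, and 1 neurons with a linear output can score the result. The following pure-Python sketch checks only that wiring. It assumes the second network takes the 32-dimensional output of the first as its input, uses a LeakyReLU slope of 0.2, and initialises weights randomly; none of these details come from the tables, and `init_mlp` and `forward` are hypothetical helper names.

```python
import math
import random


def leaky_relu(value, slope=0.2):
    """LeakyReLU; the slope of 0.2 is an assumed value."""
    return value if value > 0 else slope * value


def init_mlp(sizes, rng):
    """Create random (weights, biases) for each pair of consecutive sizes."""
    layers = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        scale = 1.0 / math.sqrt(n_in)
        weights = [[rng.uniform(-scale, scale) for _ in range(n_in)]
                   for _ in range(n_out)]
        layers.append((weights, [0.0] * n_out))
    return layers


def forward(layers, inputs, output_activation):
    """Dense forward pass: LeakyReLU on hidden layers, custom output layer."""
    activations = inputs
    for index, (weights, biases) in enumerate(layers):
        pre = [sum(w * a for w, a in zip(row, activations)) + b
               for row, b in zip(weights, biases)]
        is_last = index == len(layers) - 1
        activations = [output_activation(v) if is_last else leaky_relu(v)
                       for v in pre]
    return activations


rng = random.Random(0)
generator = init_mlp([100, 128, 256, 512, 32], rng)  # noise -> 32-d feature
critic = init_mlp([32, 512, 256, 128, 1], rng)       # 32-d feature -> score

noise = [rng.gauss(0.0, 1.0) for _ in range(100)]
fake_feature = forward(generator, noise, math.tanh)
score = forward(critic, fake_feature, lambda v: v)   # linear output

print(len(fake_feature), len(score))
```

The tanh output keeps every generated feature in [-1, 1], so real features would need to be scaled to the same range before the two are compared.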

| Parameter | Value |
|---|---|
| Optimizer | RMSprop (lr = 0.00001) |
| Batch size | 128 |
| Number of epochs | 50,000 |
| Loss function | Wasserstein loss |
| GP regularization parameter | 10 |
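
The Wasserstein loss with a gradient-penalty (GP) weight of 10 listed above corresponds to the usual WGAN-GP critic objective: the mean score on generated samples minus the mean score on real samples, plus the weighted penalty on how far the critic's input-gradient norm deviates from 1. The sketch below illustrates that formula with a deliberately simplified linear critic, whose input gradient is just its weight vector; the linear critic, the toy data, and the function names are assumptions made for illustration, not the networks used in the paper.

```python
import math
import random

GP_WEIGHT = 10.0  # gradient-penalty coefficient from the table


def critic_score(weights, bias, x):
    """Toy linear critic D(x) = w . x + b (a simplifying assumption)."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def critic_input_gradient(weights, x):
    """Gradient of D with respect to x; constant for a linear critic."""
    return list(weights)


def wgan_gp_critic_loss(weights, bias, real_batch, fake_batch, rng,
                        gp_weight=GP_WEIGHT):
    """Critic loss: E[D(fake)] - E[D(real)] + gp_weight * E[(|grad| - 1)^2]."""
    mean_real = sum(critic_score(weights, bias, x)
                    for x in real_batch) / len(real_batch)
    mean_fake = sum(critic_score(weights, bias, x)
                    for x in fake_batch) / len(fake_batch)

    penalty = 0.0
    for real, fake in zip(real_batch, fake_batch):
        # Evaluate the gradient at a random point between real and fake.
        eps = rng.random()
        x_hat = [eps * r + (1.0 - eps) * f for r, f in zip(real, fake)]
        grad = critic_input_gradient(weights, x_hat)
        grad_norm = math.sqrt(sum(g * g for g in grad))
        penalty += (grad_norm - 1.0) ** 2
    penalty /= len(real_batch)

    return mean_fake - mean_real + gp_weight * penalty


rng = random.Random(0)
real = [[2.0, 0.0], [4.0, 0.0]]
fake = [[1.0, 0.0], [1.0, 0.0]]

loss_unit = wgan_gp_critic_loss([1.0, 0.0], 0.0, real, fake, rng)
loss_steep = wgan_gp_critic_loss([2.0, 0.0], 0.0, real, fake, rng)
print(loss_unit, loss_steep)
```

With a unit-norm weight vector the penalty vanishes and only the score gap remains; doubling the weights widens the gap but incurs a penalty of 10 × (2 − 1)², which is how the GP term discourages steep critics. In a real implementation the gradient would come from automatic differentiation rather than a closed form.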


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bousmina, A.; Selmi, M.; Ben Rhaiem, M.A.; Farah, I.R. A Hybrid Approach Based on GAN and CNN-LSTM for Aerial Activity Recognition. Remote Sens. 2023, 15, 3626. https://doi.org/10.3390/rs15143626
