Abstract

The lakes of the Qinghai-Tibet Plateau are important carriers of water resources in Asia, so their dynamic changes directly reflect variations in the plateau's climate and water resources. Convolutional Neural Networks (CNNs) learn spatial relationships between distant, continuous pixels poorly. To address this, this study proposes ViTenc-UNet, a U-Net-based water recognition model for lakes on the Qinghai-Tibet Plateau. The method replaces the consecutive convolutional layers in the U-Net encoder with a Vision Transformer (ViT), which identifies and extracts the continuous spatial relationships of lake water bodies more accurately. A Convolutional Block Attention Module (CBAM) is added to the decoder so that the spatial and spectral characteristics of the water bodies are preserved more completely. The experimental results show that ViTenc-UNet performs lake water recognition on the Qinghai-Tibet Plateau more efficiently. Its Overall Accuracy, Intersection over Union, Recall, Precision, and F1 score for lake water classification reached 99.04%, 98.68%, 99.08%, 98.59%, and 98.75%, respectively. These values are 4.16%, 6.20%, 5.34%, 4.80%, and 5.34% higher than those of the original U-Net model. The model also shows varying degrees of advantage over the FCN, DeepLabv3+, TransUNet, and Swin-Unet models. By introducing ViT and CBAM into the extraction of lake water on the Qinghai-Tibet Plateau, the model achieves excellent classification performance on these lakes. The method offers classification advantages and provides an important scientific reference for accurate, real-time monitoring of important water resources on the Qinghai-Tibet Plateau.

1. Introduction

Water is indispensable for the survival of human beings and all living things. It is also an extremely valuable natural resource for industrial and agricultural production, economic development, and environmental improvement [1,2]. The lakes of the Qinghai-Tibet Plateau are important carriers of water resources. They number more than 1500 [3] and account for 57.6% of China's total lake area, which makes the plateau the country's main lake distribution area [4]. The dynamic changes in lake area on the Qinghai-Tibet Plateau are closely related to global climate change. Monitoring and evaluating these changes is therefore of great significance for studying climate change on the Qinghai-Tibet Plateau in the context of global warming [5].

Early research on the lakes of the Qinghai-Tibet Plateau relied mainly on field surveys. The harsh natural conditions made such surveys time-consuming and labor-intensive, and experimental equipment was poor. As a result, the scope and results of this research were highly limited and could not meet researchers' requirements for multi-temporal, high-precision monitoring [6]. With the development and application of remote sensing technology, the accuracy of remote sensing data has steadily improved, and obtaining ground-object information from remote sensing imagery has become common. Water absorbs electromagnetic radiation in the 0.4–2.5 μm range much more strongly than other ground features do. This gives optical remote sensing a unique advantage in monitoring changes in the area of large lakes.

The Normalized Difference Water Index (NDWI) [7] threshold method is a simple water extraction method. It exploits differences in the spectral response of water across bands to distinguish water bodies from other features. Researchers have also proposed improved water indices (WIs) [8,9] for different application scenarios. The key disadvantage of WIs is that the threshold separating lake water from other features depends heavily on the researchers' prior knowledge [10]. Classification accuracy is therefore neither stable nor universally applicable across water classification tasks in different natural environments. Nevertheless, the lake water bodies predicted by a WI overlap considerably with the true extent, so WIs can greatly reduce the manual work of delineating water bodies. Researchers need only correct the erroneous lake boundaries to obtain the true values and thus an accurate dataset [11,12].

Machine Learning (ML) is another common approach for extracting lake water bodies from remote sensing imagery. It includes supervised classification [13] and unsupervised classification [14]. Supervised classification establishes decision criteria from training samples and then assigns categories across the study area. However, labeling a large number of samples costs considerable time and labor, a burden that is especially pronounced in large-scale lake extraction tasks [15]. In addition, image interpreters choose the samples manually, so the method is strongly subjective. Unsupervised classification, also known as cluster analysis or point-group analysis, requires no prior data and minimal initial human input. However, it ignores the spatial information of the image. The computer automatically groups pixels with similar characteristics into one category according to rules based on differences in the data themselves. This can produce the phenomenon of “different objects have the same spectrum” [16], which leads to misclassification between water bodies and other features.

As a branch of ML, Deep Learning (DL) has several advantages. It requires no prior rules, maps input data directly to output results, and can fully exploit the deep features of the data. With the development of DL technology, many studies have applied DL methods to extract different objects [17,18,19]. A Convolutional Neural Network (CNN) [20] is a neural network designed specifically for data with a grid-like structure. When a CNN is applied to large lake extraction tasks, its limited receptive field means that it extracts only local features and ignores the spatial correlation of continuous water bodies. In addition, many noise points appear in the extraction results, which degrades classification. The Fully Convolutional Network (FCN) [21] is a semantic segmentation model that replaces the fully connected layers of a CNN with convolutional layers, advancing from image-level classification to pixel-level classification [22]. However, the FCN gives insufficient consideration to the global spatial relationships between pixels [23], so its extraction results lack spatial consistency. The U-Net network [24] builds on the FCN with a decoder that has as many stages as the encoder. Skip connections concatenate the features of corresponding encoder and decoder stages. Although U-Net is more accurate than the FCN, it relies only on convolutional operations and still does not adequately capture the global spatial relationships between pixels. Moreover, redundancy in the cropped image patches lengthens model training.
To adapt to the extraction of different lake water bodies, researchers have modified classical deep learning models in several ways. They have replaced the encoder or decoder with another model [25,26,27,28,29,30,31], increased or decreased the number of model layers [32,33,34,35,36], and added attention mechanisms [37,38,39,40,41,42]. Many automated mapping methods for extracting lake water bodies have also emerged to meet the requirements of rapid mapping while maintaining accuracy [43,44,45,46].

Previous deep learning studies on lake water extraction often used convolution kernels of different sizes as the core of the encoder for extracting water features. Because their receptive field was limited, these models often failed to learn the spatial relationships among multiple pixels, which led to omission and misclassification of water bodies [47,48]. These errors grow further as the amount of data in the classification task increases. In this paper, we propose the ViTenc-UNet model. It replaces the convolutional layers at the core of the original U-Net encoder with a Vision Transformer (ViT), which enhances the model's ability to capture the spatial relationships of continuous water. To reduce the loss of water information across image bands, we also add the Convolutional Block Attention Module (CBAM) to the decoder. CBAM is a mixed attention mechanism consisting of a channel attention module and a spatial attention module, and it increases the confidence weights of the spectral and spatial information of water in the model. The model applies the ViT layer and the CBAM hybrid attention mechanism to identifying and extracting lake water bodies on the Qinghai-Tibet Plateau without adding network layers. This improves the efficiency of large-scale lake water classification, strengthens the extraction of lake water bodies on the plateau, and supports monitoring of their dynamic changes on the Qinghai-Tibet Plateau.

2. Data

2.1. Study Regions

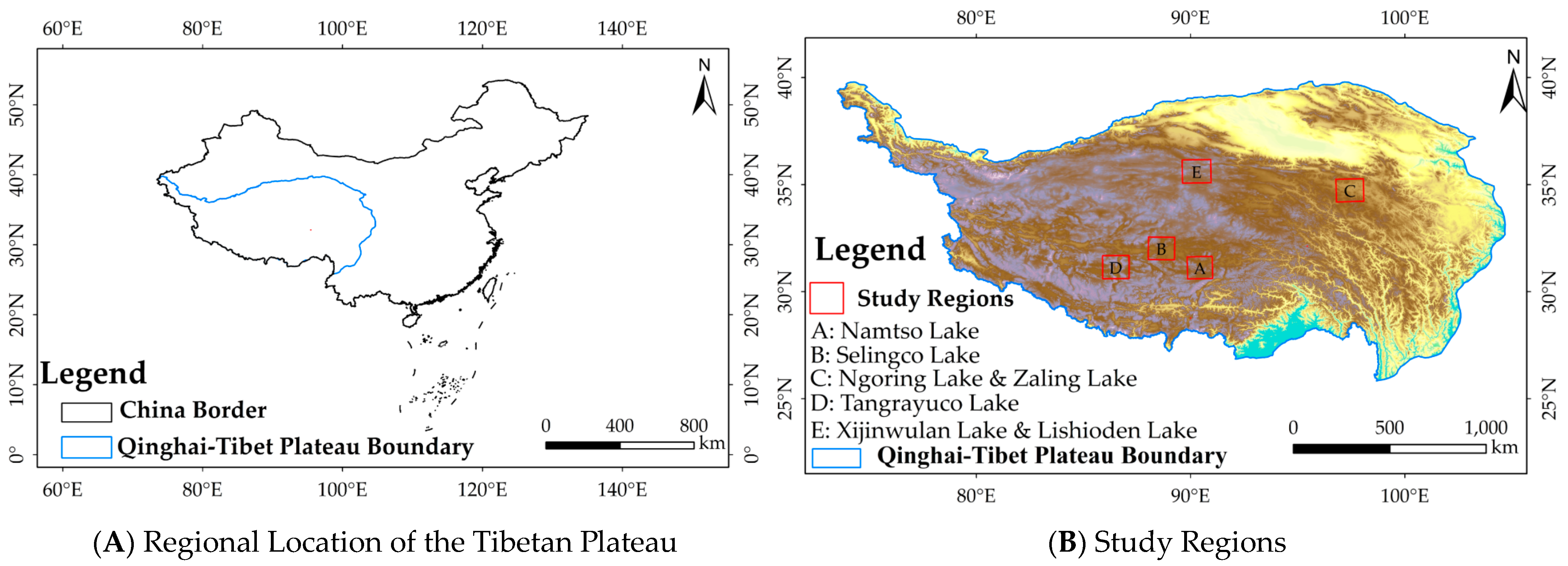

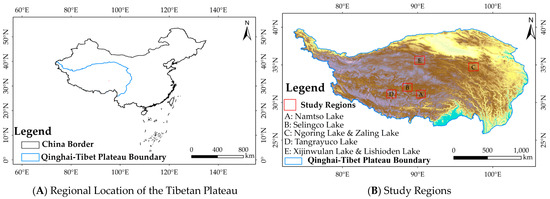

The study area, the Qinghai-Tibet Plateau, spans 25°59′30″N–40°1′0″N and 67°40′37″E–104°40′57″E. It has a total area of 3.0834 million km² and an average altitude of about 4320 m. Administratively, the plateau covers parts of China, Kyrgyzstan, India, Myanmar, Bhutan, Nepal, Tajikistan, Afghanistan, Pakistan, and the Kashmir region. Most of it lies within China, including the Tibet Autonomous Region, northwestern Yunnan Province, western Sichuan Province, southern Xinjiang Uygur Autonomous Region, Qinghai Province, and northwestern and southwestern Gansu Province. The region lies at the junction of the Eurasian Plate and the Indian Plate, where intense geological movement has produced many world-famous mountains [49]. Frequent geological movements also formed topographic depressions and lake basins, so the plateau offers lakes of many sizes for objective analysis. Precipitation varies greatly across the plateau and has distinct regional characteristics [50]. The inflow (endorheic) area has a typical plateau continental climate with scarce precipitation. The outflow (exorheic) area is affected by the Indian monsoon and the East Asian monsoon, shows obvious monsoon characteristics, and receives significantly more precipitation than the inflow area. The eastern and southern parts of the plateau form the transition zone of the first step of China's topographic staircase. They are affected by monsoons from the Pacific and Indian Oceans, which produce a continental monsoon climate with both continental and oceanic characteristics: rainy summers and cold, dry winters. The hinterland of the plateau shows typical continental characteristics, such as hot summers, cold winters, and scarce precipitation.
Differences in precipitation also give lakes different recharge sources, which in turn drives regional variation in lake types. Freshwater lakes are located in the east and south, whereas most lakes in the northwest are saltwater lakes.

The lakes selected for this study are distributed in both the inflow and outflow areas of the Qinghai-Tibet Plateau. They are Ngoring Lake, Zaling Lake, Selingco Lake, Tangrayuco Lake, Namtso Lake, Xijinwulan Lake, and Lishioden Lake. Ngoring Lake and Zaling Lake lie in the outflow area of the northeastern plateau and are important freshwater lakes in the upper reaches of the Yellow River. Selingco Lake is located in the inland-flow area of the plateau hinterland. It has continued to grow in recent years and has surpassed Namtso Lake to become the largest lake in the Tibet Autonomous Region. It is also the second largest saltwater lake in China after Qinghai Lake and is surrounded by numerous satellite lakes. Namtso Lake, adjacent to Selingco Lake, extends southwest–northeast and is a typical endorheic saltwater lake. Tangrayuco Lake is located in the Ali no man's land in the plateau hinterland. It is wide at its northern and southern ends and narrow in the middle, and it is a typical saltwater lake of the endorheic area. Xijinwulan Lake and Lishioden Lake lie in the inland-flow area of the northern foothills of the plateau and are surrounded by many satellite lakes formed by lake degradation. Figure 1A shows the location of the Tibetan Plateau in China, and Figure 1B shows the study regions.

Figure 1.

The location of the Study Regions.

2.2. Data and Processing

The Sentinel-2 mission consists of two polar-orbiting satellites, Sentinel-2A and Sentinel-2B. The two satellites have identical parameters, and each carries a Multispectral Instrument (MSI). They operate at an altitude of 786 km with a swath width of 290 km and a revisit period of 5 days (up to 3 days at high latitudes). The MSI acquires 13 spectral bands from the visible to the shortwave infrared at resolutions of 10 m, 20 m, and 60 m. Five Sentinel-2 scenes were selected in total, and their row and column numbers are listed in Table 1.

Table 1.

The detailed information of selected images.

ESA's Sentinels Scientific Data Hub (https://scihub.copernicus.eu/dhus/#/home (accessed on 2 February 2023)) provides two types of Sentinel-2 images: Sentinel-2 L1C and Sentinel-2 L2A. L1C images are orthorectified and geometrically corrected top-of-atmosphere (apparent) reflectance products that have not been atmospherically corrected. L2A images are atmospherically corrected bottom-of-atmosphere reflectance products. L1C images therefore require radiometric calibration and atmospheric correction to obtain L2A-level images. These images are then resampled to obtain single-band images at 10 m resolution. Finally, a three-channel image is composited from band2 (blue), band3 (green), and band4 (red).

We then selected the resampled single-band images band3 and band11 (shortwave infrared 1). Because the lakes may contain floating objects such as lake ice, the Modified Normalized Difference Water Index (MNDWI) [8] is more applicable than the NDWI [7]. It is calculated as follows:

$$\mathrm{MNDWI} = \frac{\rho_{\mathrm{Green}} - \rho_{\mathrm{SWIR1}}}{\rho_{\mathrm{Green}} + \rho_{\mathrm{SWIR1}}}$$

where $\rho_{\mathrm{Green}}$ is the band3 image and $\rho_{\mathrm{SWIR1}}$ is the band11 image. The MNDWI result gives relatively rough water-body boundaries. Regions of interest (ROIs) of water bodies were drawn on this result and analyzed statistically to obtain a threshold. The threshold was then used to reclassify water bodies and other ground objects, which produced more detailed water-body boundaries. These boundaries were converted from their original raster format to shapefile format. Finally, the reclassified boundaries were edited by visual interpretation to refine the lake-water label images.
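The MNDWI and threshold steps can be sketched in plain Python. This minimal sketch is not the authors' workflow. The function names are hypothetical, the reflectance inputs are assumed to be 2-D lists of already-resampled band3 and band11 values, and the ROI statistic is an assumed rule: the midpoint between the mean MNDWI of water and non-water ROI samples. The text does not state which statistic was used.

```python
from statistics import mean


def mndwi(green, swir1):
    """Per-pixel MNDWI = (Green - SWIR1) / (Green + SWIR1) for 2-D lists of reflectance."""
    out = []
    for g_row, s_row in zip(green, swir1):
        # Guard against division by zero where both bands are 0.
        out.append([(g - s) / (g + s) if (g + s) != 0 else 0.0 for g, s in zip(g_row, s_row)])
    return out


def roi_threshold(water_roi, land_roi):
    """Hypothetical rule: midpoint between the mean MNDWI of water and non-water ROI samples."""
    return (mean(water_roi) + mean(land_roi)) / 2


def reclassify(index_img, threshold):
    """1 = water (index above threshold), 0 = other ground objects."""
    return [[1 if v > threshold else 0 for v in row] for row in index_img]


# Toy 2 x 2 scene: band3 (green) and band11 (SWIR1) reflectance values.
green = [[0.10, 0.08], [0.05, 0.12]]
swir1 = [[0.02, 0.20], [0.25, 0.03]]
img = mndwi(green, swir1)
# Pretend the analyst drew water ROIs on pixels (0,0), (1,1) and land ROIs on the others.
thr = roi_threshold([img[0][0], img[1][1]], [img[0][1], img[1][0]])
labels = reclassify(img, thr)
```

In practice the thresholded mask is only a starting point. As described above, it still has to be vectorized and corrected by visual interpretation.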

The image and label collections were cropped into 256 × 256 images and labels. We cleaned the samples to keep negative and positive samples relatively balanced. The resulting dataset of 11,530 samples was randomly divided into training, validation, and prediction sets at a ratio of 7:2:1, giving 8071 training samples, 2306 validation samples, and 1153 prediction samples. Finally, before being fed into the model, the training and validation sets were augmented by image flipping.
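The tiling, 7:2:1 split, and flip augmentation can be illustrated as follows. The sketch rests on several assumptions the text does not specify: tiles are cut without overlap and a partial edge tile is dropped, the shuffle uses a fixed seed, and only horizontal and vertical flips are shown. All function names are hypothetical.

```python
import random


def tile_starts(length, size=256):
    """Start offsets of non-overlapping tiles along one axis; a partial edge tile is dropped (assumption)."""
    return list(range(0, length - size + 1, size))


def split_7_2_1(samples, seed=0):
    """Shuffle and split samples into training, validation and prediction sets at 7:2:1."""
    items = list(samples)
    random.Random(seed).shuffle(items)
    n = len(items)
    n_train, n_val = n * 7 // 10, n * 2 // 10  # integer arithmetic avoids float rounding
    return items[:n_train], items[n_train:n_train + n_val], items[n_train + n_val:]


def hflip(tile):
    """Mirror a 2-D tile left-right; the same flip must be applied to the image and its label."""
    return [row[::-1] for row in tile]


def vflip(tile):
    """Mirror a 2-D tile top-bottom."""
    return tile[::-1]


train, val, pred = split_7_2_1(range(11530))
print(len(train), len(val), len(pred))  # 8071 2306 1153
```

With 11,530 samples, the integer split reproduces the reported set sizes exactly.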

3. Methods

3.1. Modification of Original UNet

How the lake water surface appears in remote sensing images depends on the terrain of the lake basin, lake composition, cloud cover, recharge source, human activities, and other factors, and the lake surface contains rich spatial and spectral details [53]. Convolutional layers can identify and extract features for each pixel. However, their limited receptive field prevents the convolutional layers in the original U-Net encoder from effectively mining the contextual details among the complex, continuous water pixels of the plateau lakes. As a result, the extracted lake water lacks continuity, which is particularly obvious along complex lake shores, coastal tidal flats, and bare ground within lakes. In these three settings, land and water are intertwined, so water bodies appear as fragmented shapes in remote sensing images. This makes it difficult to interpret the water pixels accurately and to preserve their spatial continuity. To restore the spatial continuity of water pixels and interpret them accurately, we applied the ViT structure to the encoder of the ViTenc-UNet model. The self-attention mechanism of ViT attends to the global spatial relationships between distant, continuous water pixels and preserves this information. ViT borrows the way the Transformer processes words and sentences in Natural Language Processing (NLP) and divides the input image into a sequence of “word embeddings”. The spatial relationships and spectral features of the image are then processed in parallel, based on the semantic information in these embeddings, to extract the water body information of the plateau lakes completely. A convolutional layer added after the ViT output maps the features to the required dimensions.
In addition, the CBAM mechanism helps the decoder retain key high-confidence information on lake water as it restores the image features. This enables accurate prediction and extraction of the lake water surface and improves recognition across different lakes.

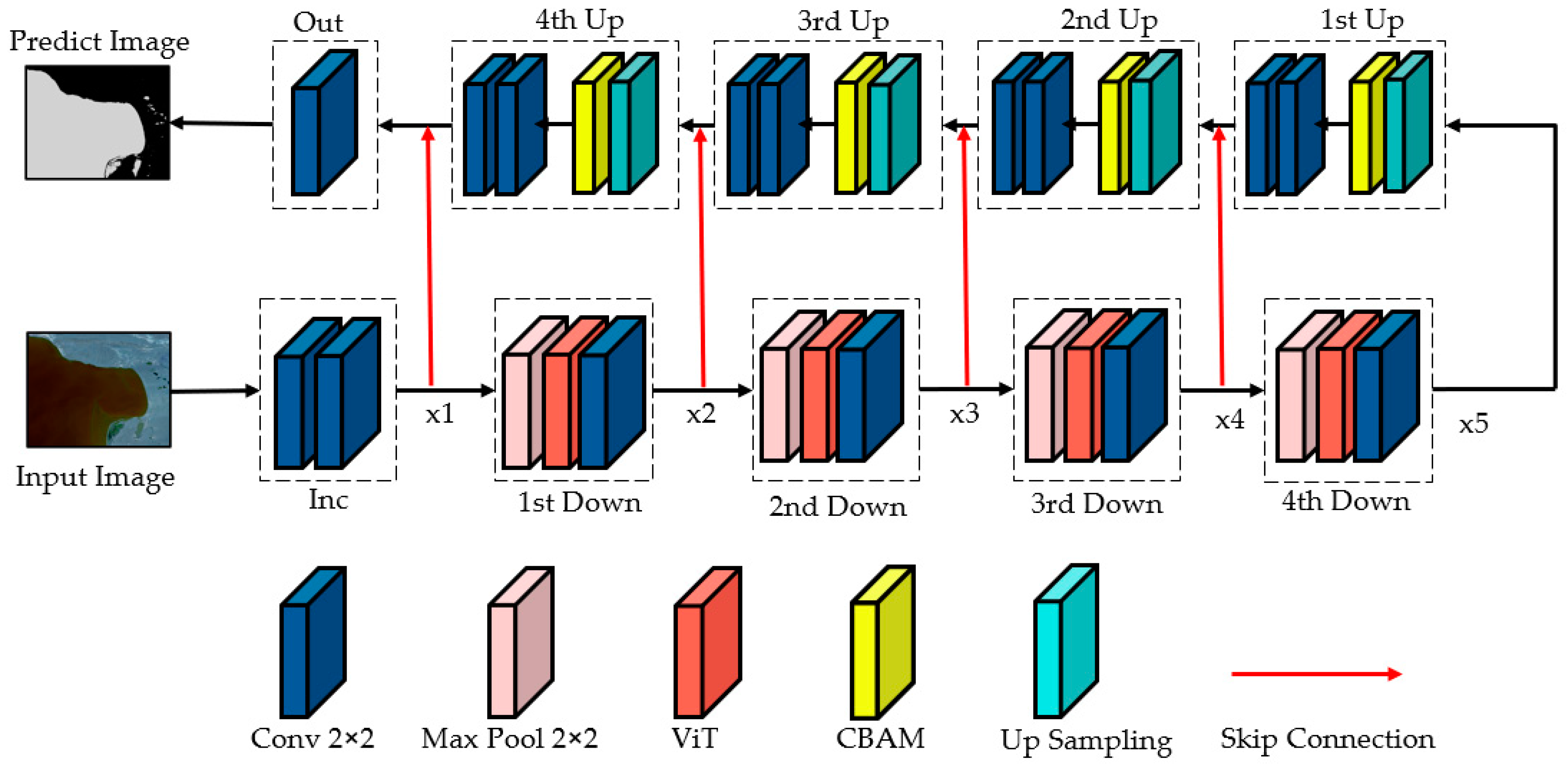

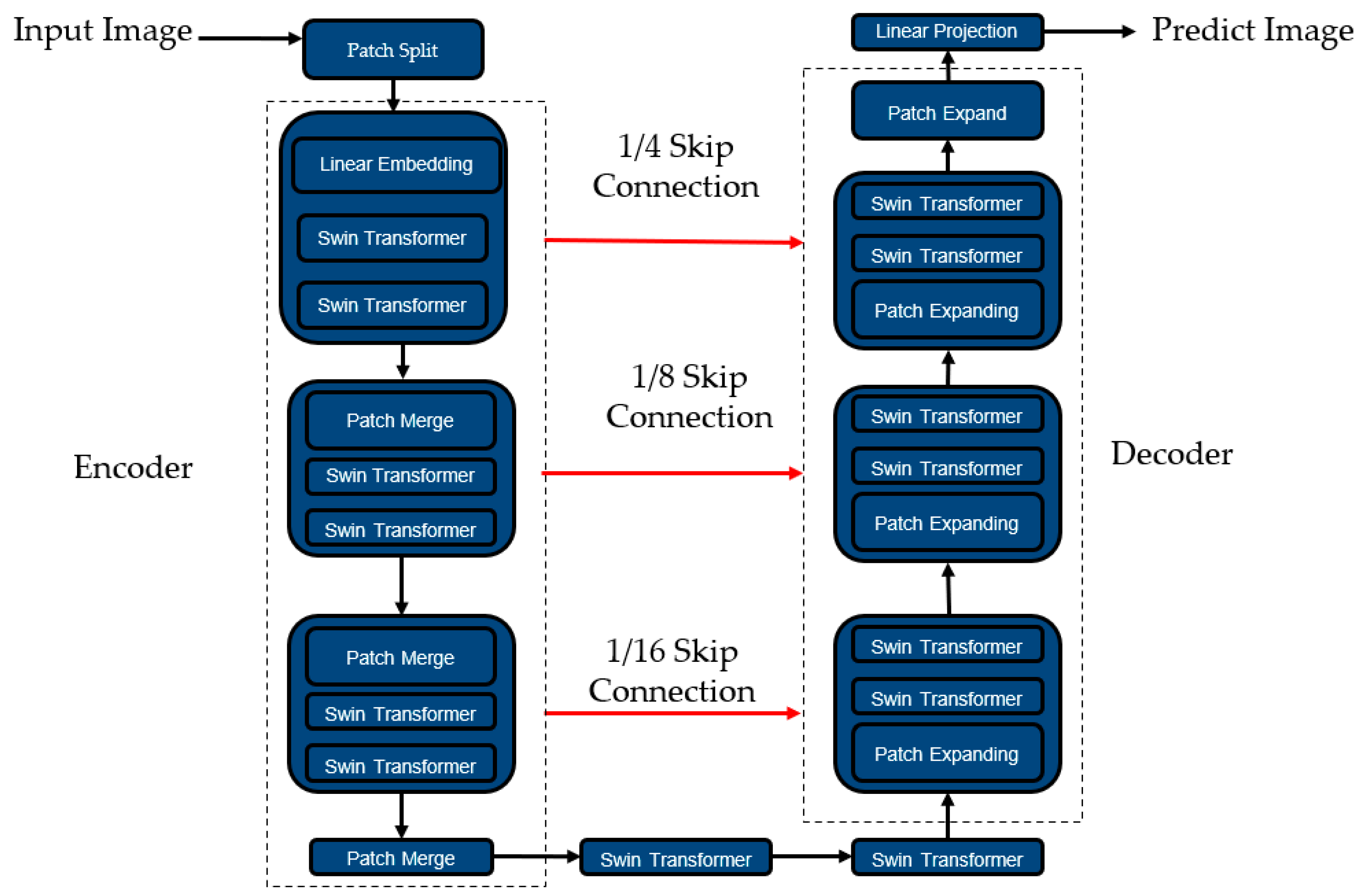

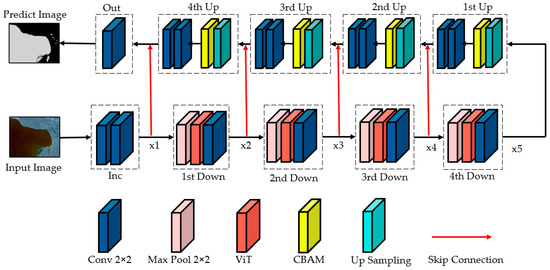

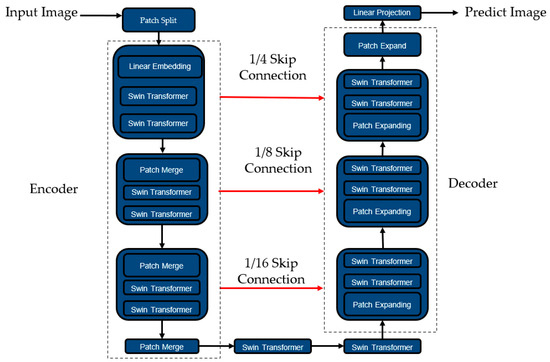

Figure 2 shows the structure of ViTenc-UNet, an improved model based on U-Net. The model includes an Inc layer, an Out layer, four downsampling layers, four upsampling layers, and four skip connections. The Inc layer initializes the input image to be predicted into a multidimensional matrix. The downsampling layers extract deep semantic features of the image, and the number of channels increases from 64 to 128, 256, 512, and finally 1024. The upsampling layers restore the image features. Each skip connection concatenates the output of an upsampling layer with the downsampling output of the corresponding dimension, which reduces the loss of feature information during downsampling. The Out layer maps the output to the predicted image feature dimension and outputs the prediction results.
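To make the channel progression concrete, the following sketch traces feature-map shapes through the encoder and decoder for a 256 × 256 input tile. It assumes that each downsampling stage halves the spatial size (2 × 2 max pooling) and doubles the channels, that each upsampling stage does the reverse, and it omits the Out layer. These per-stage sizes are inferred for illustration and are not reported in the text.

```python
def trace_shapes(size=256, base=64, depth=4):
    """Return (name, channels, spatial size) for the encoder and decoder stages (assumed sizes)."""
    encoder = [("inc", base, size)]
    channels, side = base, size
    for i in range(1, depth + 1):
        channels, side = channels * 2, side // 2  # 2x2 max pooling halves H and W
        encoder.append((f"down{i}", channels, side))
    decoder = []
    for i in range(1, depth + 1):
        channels, side = channels // 2, side * 2  # upsampling doubles H and W
        decoder.append((f"up{i}", channels, side))
    return encoder, decoder


enc, dec = trace_shapes()
for (up_name, up_c, up_s), (skip_name, skip_c, skip_s) in zip(dec, reversed(enc[:-1])):
    # Each skip connection pairs an upsampling stage with the encoder stage of equal size.
    assert up_s == skip_s
    print(f"{up_name}: {up_c} ch @ {up_s}x{up_s}  <- skip from {skip_name} ({skip_c} ch)")
```

The printout pairs `up1` with `down3`, `up2` with `down2`, and so on down to `up4` with `inc`, which matches the four skip connections described above.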

Figure 2.

The ViTenc-UNet structure.

3.2. Encoder

The ViTenc-UNet encoder includes an initialization layer and four successive downsampling layers. The downsampling layers share the same structure but differ in their numbers of input and output channels, and each contains a pooling layer, a ViT layer, and a convolutional layer. The initialization layer consists of two stacked 3 × 3 convolutional layers, each followed by a ReLU non-linear activation.

The pooling layer in each downsampling layer is a 2 × 2 max-pooling layer. It divides the input into windows of the pooling size and keeps the maximum of each window, which reduces the spatial dimensions of the feature map. The ViT that extracts deep semantic features in the downsampling layer is a Transformer-based vision model [54]. It converts image data into fixed-size sequence data structured like text (words, sentences, paragraphs) and feeds the sequence into Transformer layers to learn its characteristics. For an input image, this can be expressed as:

$$\mathbf{x}_p = \operatorname{Split}(\mathbf{x}), \quad \mathbf{x} \in \mathbb{R}^{H \times W \times C}$$

where $\mathbf{x}_p$ denotes the split patches, $\mathbf{x}$ is the original input image, $H$ and $W$ are its height and width, and $C$ is its number of channels. To embed the image into the encoder, we split it into image patches of equal size, each with the same number of channels as the original input image:

$$\mathbf{x}_p \in \mathbb{R}^{N \times (P^2 \cdot C)}, \quad N = \frac{HW}{P^2}$$

where $N$ is the number of image patches, $P$ is the side length of each patch, and $C$ is the number of channels of each patch (equal to that of the original input image). Each patch has size $(P, P, C)$ and is flattened into a vector of length $P^2 \cdot C$. A linear transformation layer of dimension $D$ then maps each flattened vector to a $D$-dimensional patch embedding, analogous to a “word embedding”. The mapped patches carry no positional information, so a numerical position-encoding vector is embedded into each patch to represent its position:

$$PE_{(pos,\,2i)} = \sin\!\left(\frac{pos}{10000^{2i/D}}\right), \qquad PE_{(pos,\,2i+1)} = \cos\!\left(\frac{pos}{10000^{2i/D}}\right)$$

where $PE_{(pos,\,2i)}$ is the position encoding of the even columns, $PE_{(pos,\,2i+1)}$ is that of the odd columns, and $pos$ is the index of the patch in the sequence. The position encoding and the patch embedding are then fused to form position-aware image patches. Before the patches enter the encoder's Multihead Self-Attention (MSA) mechanism, Layer Normalization is applied to reduce internal covariate shift:

$$\mathbf{z}'_{\ell} = \mathrm{LN}(\mathbf{z}_{\ell-1}) = \gamma \odot \frac{\mathbf{z}_{\ell-1} - \mu}{\sqrt{\sigma^2 + \epsilon}} + \beta$$

where $\mathbf{z}_{\ell-1}$ is the image patch input from the previous layer and $\mathbf{z}'_{\ell}$ is the normalized patch. Here, $\mu$ and $\sigma^2$ are the mean and variance over the feature dimension of $\mathbf{z}_{\ell-1}$, $\epsilon$ is a small constant, and $\gamma$ and $\beta$ are learnable scale and shift parameters.
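The patch splitting, position encoding, and layer normalization above can be sketched in dependency-free Python. The sketch assumes an H × W × C image stored as nested lists, a patch size P that divides both H and W, and a caller-supplied projection matrix standing in for the trained linear layer. It uses the fixed sinusoidal encoding given by the even/odd formula and fuses the position encoding with the patch embedding by element-wise addition. The text does not state the fusion operation, so the addition is an assumption. All function names are hypothetical.

```python
import math


def patchify(img, p):
    """Split an H x W x C image (nested lists) into N = HW / P^2 flattened patches of length P*P*C."""
    h, w = len(img), len(img[0])
    if h % p or w % p:
        raise ValueError("H and W must be divisible by the patch size P")
    patches = []
    for top in range(0, h, p):            # patches in row-major order
        for left in range(0, w, p):
            vec = []
            for r in range(top, top + p):
                for col in range(left, left + p):
                    vec.extend(img[r][col])  # all C channel values of one pixel
            patches.append(vec)
    return patches


def linear(vec, weight, bias):
    """Project a flattened patch to D dimensions; weight is D x (P*P*C) (hypothetical values)."""
    return [sum(a * b for a, b in zip(row, vec)) + b0 for row, b0 in zip(weight, bias)]


def sinusoidal_pe(num_pos, d):
    """PE(pos, 2i) = sin(pos / 10000^(2i/D)), PE(pos, 2i+1) = cos(pos / 10000^(2i/D))."""
    pe = [[0.0] * d for _ in range(num_pos)]
    for pos in range(num_pos):
        for even in range(0, d, 2):  # even = 2i
            angle = pos / (10000 ** (even / d))
            pe[pos][even] = math.sin(angle)
            if even + 1 < d:
                pe[pos][even + 1] = math.cos(angle)
    return pe


def layer_norm(z, gamma=None, beta=None, eps=1e-5):
    """Normalize one token over its feature dimension, then scale by gamma and shift by beta."""
    d = len(z)
    mu = sum(z) / d
    var = sum((x - mu) ** 2 for x in z) / d
    gamma = gamma if gamma is not None else [1.0] * d
    beta = beta if beta is not None else [0.0] * d
    return [g * (x - mu) / math.sqrt(var + eps) + b for x, g, b in zip(z, gamma, beta)]


def embed(img, p, weight, bias):
    """Patch embedding plus position encoding (fused by addition, an assumption)."""
    tokens = [linear(v, weight, bias) for v in patchify(img, p)]
    pe = sinusoidal_pe(len(tokens), len(bias))
    return [[t + e for t, e in zip(tok, pos)] for tok, pos in zip(tokens, pe)]


img = [[[float(4 * r + c)] for c in range(4)] for r in range(4)]  # toy 4 x 4 x 1 image
tokens = embed(img, 2, [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]], [0.0, 0.0])
normed = [layer_norm(t) for t in tokens]  # what the MSA block would receive
```

With P = 2 and a 4 × 4 image, N = 16 / 4 = 4 patches of length 2 · 2 · 1 = 4, each projected to D = 2.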

In the MSA mechanism, each image patch needs to compute the Query (hereafter referred to as Q), Key (hereafter referred to as K), and Value (hereafter referred to as V). By calculating the dot product of the transpose matrix of Q and K and applying the softmax function to map these vector products to the (0, 1) interval, the attention weight between each image patch and other image patches is obtained, and these weights are multiplied by the corresponding V and added to obtain a new image patch. The principle is as follows:

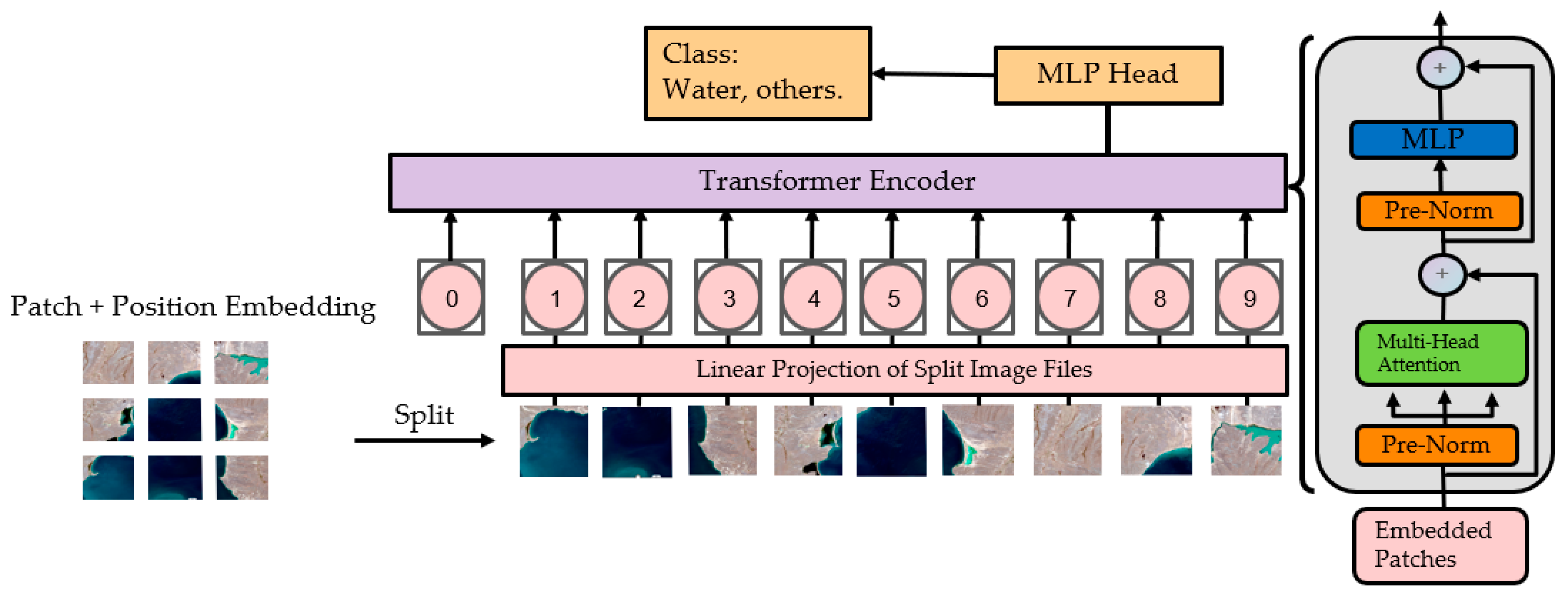

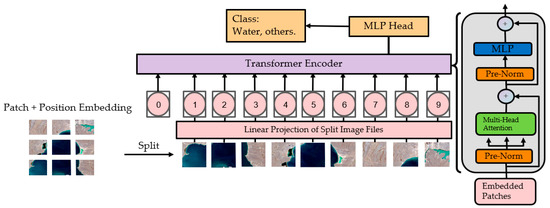

where is the vector information that needs to be queried (Query), is the queried vector index (Key), is the value obtained by the query, and dk is the degree of scaling. ViTenc-UNet models use learnable scaling coefficients, which are continuously adjusted using the training situation during the model training process. The ViT structure is shown in Figure 3 [54].

Figure 3.

The ViT structure (“0” to “9” denote image patches with linear embedding and position embedding).
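A single attention head from the equation above can be written out directly with plain lists. In this minimal sketch, `scale` defaults to 1/sqrt(d_k). Passing another number stands in for the learnable scaling coefficient mentioned in the text, although here it is a fixed value and is not trained. A full MSA layer would run several such heads on learned projections of the patches and concatenate their outputs, which is omitted. Names are hypothetical.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q, k, v, scale=None):
    """Single-head scaled dot-product attention on lists of row vectors.

    q: N x d_k, k: N x d_k, v: N x d_v. The default scale is 1 / sqrt(d_k); passing
    another number mimics a (here fixed) scaling coefficient.
    """
    d_k = len(k[0])
    if scale is None:
        scale = 1.0 / math.sqrt(d_k)
    # scores[i][j] = (q_i . k_j) * scale, i.e. one row of Q K^T per query patch
    scores = [[sum(a * b for a, b in zip(qi, kj)) * scale for kj in k] for qi in q]
    weights = [softmax(row) for row in scores]  # each row lies in (0, 1) and sums to 1
    # new patch i = sum_j weights[i][j] * v_j
    out = [[sum(wt * vj[c] for wt, vj in zip(row, v)) for c in range(len(v[0]))] for row in weights]
    return out, weights


q = [[1.0, 0.0], [0.0, 1.0]]
k = [[1.0, 0.0], [0.0, 1.0]]
v = [[10.0, 0.0], [0.0, 10.0]]
out, w = attention(q, k, v)
```

Each query attends most strongly to the key it is most similar to. When all keys are identical, the weights become uniform and the output is the plain average of the values.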

Image patches weighted by the MSA are passed to the Feedforward Neural Network (FNN) for further feature extraction. The Transformer layer then outputs a new sequence containing a high-level feature representation of the input image. For the water body classification task, we used the first image patch in the sequence to obtain the final classification probabilities of water bodies and other features. Finally, each downsampling layer ends with a 3 × 3 convolutional layer that maps the output to the image feature matrix.

3.3. Decoder

The ViTenc-UNet decoder consists of four upsampling layers, which successively reduce the feature dimensions, followed by the Out layer. Each upsampling layer includes a transposed convolutional layer, a bilinear interpolation layer, a CBAM attention layer, and two consecutive 3 × 3 convolutional layers. Skip connections link the feature maps of each downsampling layer to the corresponding upsampling layer. The core of each upsampling layer is two parallel upsampling methods: transposed convolution and bilinear interpolation.

The ViTenc-UNet model upsamples with Bilinear Interpolation [55] and Transposed Convolution [56], which restore the input feature map to its original size. Bilinear interpolation estimates the value of an unknown point from the values of its four adjacent known points by performing linear interpolation in two directions:

$$f(x, y) = w_{11} f(Q_{11}) + w_{21} f(Q_{21}) + w_{12} f(Q_{12}) + w_{22} f(Q_{22})$$

where $f(x, y)$ is the pixel value of the unknown point, $f(Q_{11})$, $f(Q_{21})$, $f(Q_{12})$, and $f(Q_{22})$ are the pixel values of the four known adjacent points, and $w_{11}$, $w_{21}$, $w_{12}$, and $w_{22}$ are their respective weights. Each weight depends on the distance between the unknown point and the corresponding adjacent point.
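The bilinear formula becomes concrete once the standard distance-based weights are written out: $w_{11} = (x_2 - x)(y_2 - y)/A$, $w_{21} = (x - x_1)(y_2 - y)/A$, $w_{12} = (x_2 - x)(y - y_1)/A$, and $w_{22} = (x - x_1)(y - y_1)/A$, with $A = (x_2 - x_1)(y_2 - y_1)$. The 2× upsampling helper below assumes corner-aligned sampling and inputs of at least 2 × 2. The text does not say which alignment the model uses. Names are hypothetical.

```python
def bilinear(x, y, x1, y1, x2, y2, q11, q21, q12, q22):
    """f(x, y) from f(Q11) at (x1, y1), f(Q21) at (x2, y1), f(Q12) at (x1, y2), f(Q22) at (x2, y2)."""
    area = (x2 - x1) * (y2 - y1)
    w11 = (x2 - x) * (y2 - y) / area  # weight grows as (x, y) approaches Q11
    w21 = (x - x1) * (y2 - y) / area
    w12 = (x2 - x) * (y - y1) / area
    w22 = (x - x1) * (y - y1) / area
    return w11 * q11 + w21 * q21 + w12 * q12 + w22 * q22


def upsample2x(grid):
    """Double the height and width of a 2-D grid (at least 2 x 2), assuming corner-aligned sampling."""
    h, w = len(grid), len(grid[0])
    oh, ow = 2 * h, 2 * w
    out = []
    for i in range(oh):
        y = i * (h - 1) / (oh - 1)  # map output row to input coordinates
        y1 = min(int(y), h - 2)     # top neighbor row (keeps y1 + 1 in range)
        row = []
        for j in range(ow):
            x = j * (w - 1) / (ow - 1)
            x1 = min(int(x), w - 2)
            row.append(bilinear(x, y, x1, y1, x1 + 1, y1 + 1,
                                grid[y1][x1], grid[y1][x1 + 1],
                                grid[y1 + 1][x1], grid[y1 + 1][x1 + 1]))
        out.append(row)
    return out


print(bilinear(0.5, 0.5, 0, 0, 1, 1, 0.0, 1.0, 2.0, 3.0))  # 1.5: center of the four points
up = upsample2x([[0.0, 1.0], [2.0, 3.0]])                  # 4 x 4 output
```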

In addition, we select the transposed convolution method according to the bilinear-interpolation setting. Transposed convolution differs from ordinary convolution. Ordinary convolution reduces the spatial size of the input feature map or leaves it unchanged, whereas transposed convolution enlarges it. However, transposed convolution does not restore the original cell values. After upsampling, the feature map passes through two consecutive convolution operations that extract image features.
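The different size behavior of ordinary and transposed convolution can be checked with their output-length formulas. The sketch below applies, per axis, the output-size formula that PyTorch documents for `ConvTranspose2d`, together with a toy 1-D transposed convolution that uses a kernel of ones. The kernel size, stride, and padding values are illustrative assumptions, not the model's settings. The last line shows that upsampling a max-pooled signal restores its length but not its original values.

```python
def conv_transpose_out_size(n_in, kernel, stride=1, padding=0, output_padding=0, dilation=1):
    """Output length of a transposed convolution along one axis."""
    return (n_in - 1) * stride - 2 * padding + dilation * (kernel - 1) + output_padding + 1


def conv_out_size(n_in, kernel, stride=1, padding=0):
    """Output length of an ordinary convolution along one axis (no dilation)."""
    return (n_in + 2 * padding - kernel) // stride + 1


def conv_transpose_1d(x, kernel, stride=2):
    """Scatter-add each input value times the kernel into a longer output (no padding)."""
    out = [0.0] * conv_transpose_out_size(len(x), len(kernel), stride)
    for i, xi in enumerate(x):
        for t, wt in enumerate(kernel):
            out[i * stride + t] += xi * wt
    return out


print(conv_out_size(256, 3, padding=1))           # 256: ordinary conv keeps the size
print(conv_transpose_out_size(128, 2, stride=2))  # 256: transposed conv doubles it
pooled = [2.0, 4.0]                               # window-2 max pooling of [1, 2, 3, 4]
print(conv_transpose_1d(pooled, [1.0, 1.0]))      # [2.0, 2.0, 4.0, 4.0], not [1, 2, 3, 4]
```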

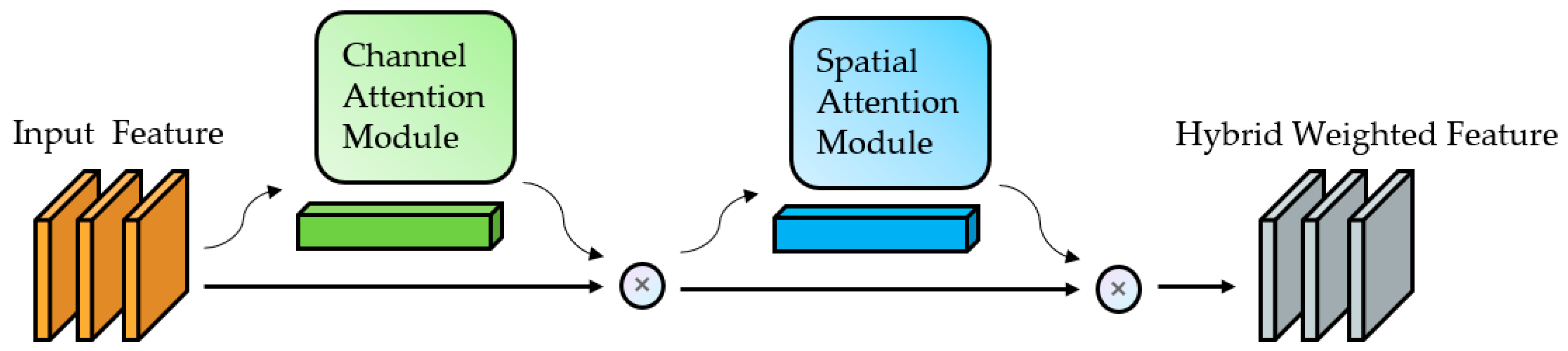

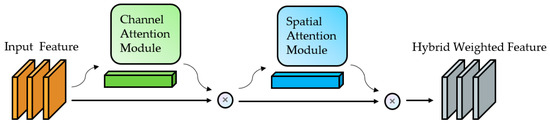

To better preserve the spatial and channel features, we need to weight both of them. Therefore, we next introduce the CBAM hybrid attention mechanism [57], which contains the Channel Attention Module and Spatial Attention Module. The structure of this mechanism is shown in Figure 4 [57].

Figure 4.

The CBAM structure.

The channel attention module applies max pooling and average pooling to the input feature map and passes both results through a shared Multi-Layer Perceptron (MLP) built from two convolutional layers. The MLP outputs for the max-pooled and average-pooled features are then summed, and a sigmoid activation is applied to the sum to obtain the channel attention weighting coefficients:

$$M_c(F) = \sigma\big(\mathrm{MLP}(\mathrm{AvgPool}(F)) + \mathrm{MLP}(\mathrm{MaxPool}(F))\big), \qquad F' = M_c(F) \otimes F$$

where $F$ is the input feature map, $M_c(F)$ is the channel attention weighting coefficient, $\sigma$ is the sigmoid function, $\otimes$ denotes element-wise multiplication, and $F'$ is the channel-attention-weighted feature map.

The spatial attention module takes the channel-attention-weighted features as input, performs max pooling and average pooling along the channel dimension, and concatenates the two results. A 2 × 2 convolutional layer then reduces them to a single channel, and a sigmoid function produces the spatial attention weights:

$$M_s(F') = \sigma\big(f^{2\times 2}([\mathrm{AvgPool}(F'); \mathrm{MaxPool}(F')])\big), \qquad F'' = M_s(F') \otimes F'$$

where $F'$ is the channel-attention-weighted feature map that serves as the input of this module, $f^{2\times 2}$ denotes the 2 × 2 convolution, and $M_s(F')$ is the spatial attention weight.

Multiplying this weight by the module's input features yields the channel- and spatially weighted feature map $F''$. Finally, two consecutive 3 × 3 convolutional layers map the feature dimensions of the output image. The output layer consists of a 1 × 1 convolutional layer.
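The two CBAM steps can be sketched without a deep learning framework. In this illustrative sketch, features are nested C × H × W lists, and the caller supplies the shared MLP weights `w1` and `w2` and the convolution kernel instead of learning them. The spatial kernel is assumed to be odd-sized so that symmetric zero padding keeps H × W unchanged. The original CBAM paper uses 7 × 7, and the 2 × 2 kernel stated in the text would need asymmetric padding. Names are hypothetical.

```python
import math


def sigmoid(x):
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def channel_attention(f, w1, w2):
    """F' = Mc(F) * F. f: C x H x W lists; w1: (C/r) x C and w2: C x (C/r) shared-MLP weights."""
    avg = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in f]
    mx = [max(map(max, ch)) for ch in f]

    def mlp(vec):  # two layers with ReLU in between, shared by both pooled vectors
        hidden = [max(0.0, sum(a * b for a, b in zip(row, vec))) for row in w1]
        return [sum(a * b for a, b in zip(row, hidden)) for row in w2]

    mc = [sigmoid(a + m) for a, m in zip(mlp(avg), mlp(mx))]
    return [[[mc[c] * x for x in row] for row in ch] for c, ch in enumerate(f)]


def spatial_attention(f, kernel):
    """F'' = Ms(F') * F'. kernel: 2 x k x k weights (odd k, zero padding) over the [avg; max] maps."""
    n_c, h, w = len(f), len(f[0]), len(f[0][0])
    avg = [[sum(f[c][i][j] for c in range(n_c)) / n_c for j in range(w)] for i in range(h)]
    mx = [[max(f[c][i][j] for c in range(n_c)) for j in range(w)] for i in range(h)]
    maps, k = (avg, mx), len(kernel[0])
    pad = k // 2
    ms = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            s = 0.0
            for m in range(2):  # two input maps, one output channel
                for di in range(k):
                    for dj in range(k):
                        y, x = i + di - pad, j + dj - pad
                        if 0 <= y < h and 0 <= x < w:
                            s += kernel[m][di][dj] * maps[m][y][x]
            ms[i][j] = sigmoid(s)
    return [[[ms[i][j] * f[c][i][j] for j in range(w)] for i in range(h)] for c in range(n_c)]


def cbam(f, w1, w2, kernel):
    """Channel attention followed by spatial attention, as in CBAM."""
    return spatial_attention(channel_attention(f, w1, w2), kernel)


feat = [[[1.0, 2.0], [3.0, 4.0]], [[4.0, 3.0], [2.0, 1.0]]]  # C=2, H=W=2
zero_kernel = [[[0.0] * 3 for _ in range(3)] for _ in range(2)]
out = cbam(feat, [[0.0, 0.0]], [[0.0], [0.0]], zero_kernel)
```

With all weights set to zero, both attention maps equal sigmoid(0) = 0.5, so every feature is scaled by 0.25. This makes the multiplicative effect of the two modules easy to check.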

3.4. Comparison

Five models, FCN, U-Net, DeepLabv3+ [58], TransUNet [59], and Swin-Unet [60], are included in the comparative experiments.

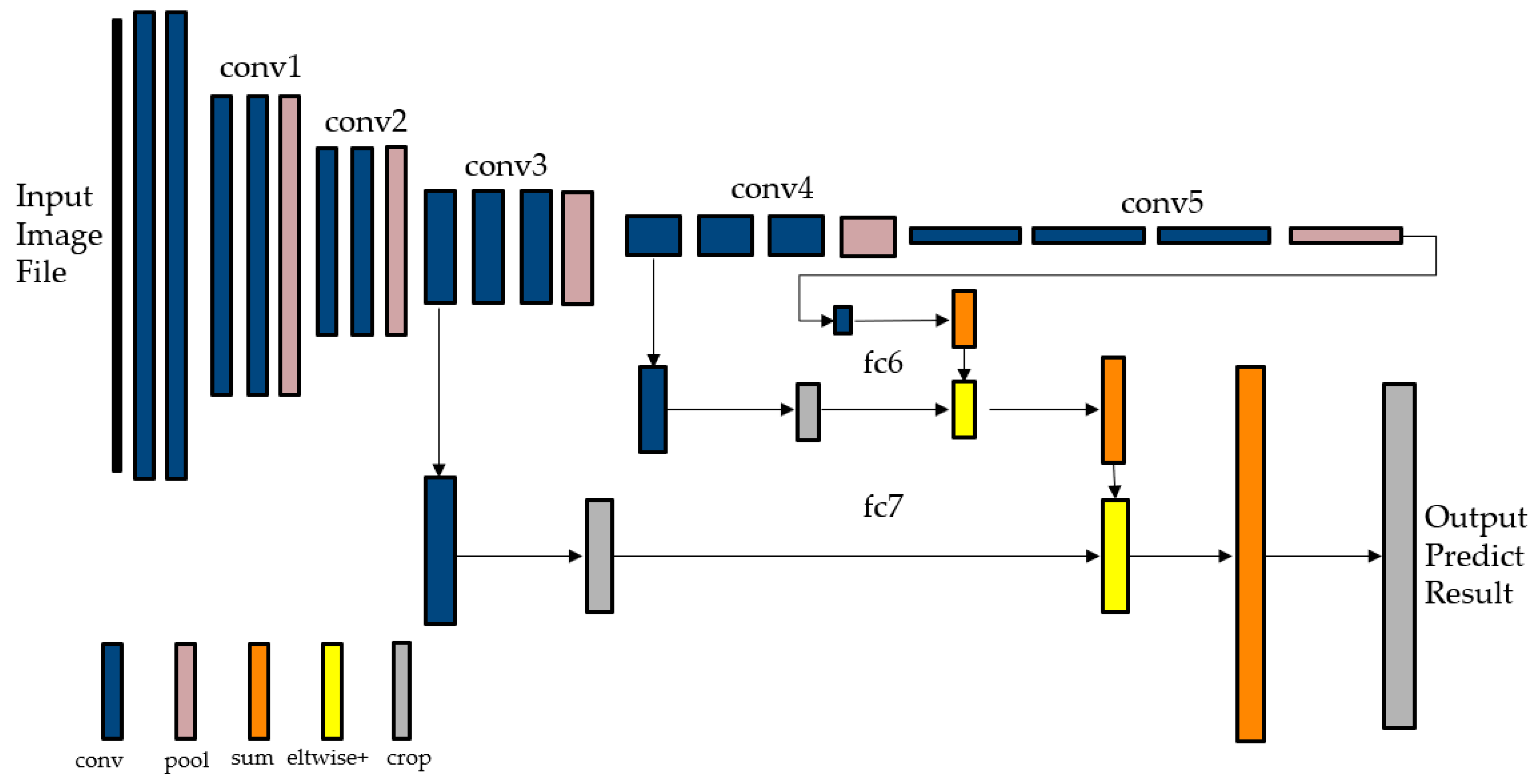

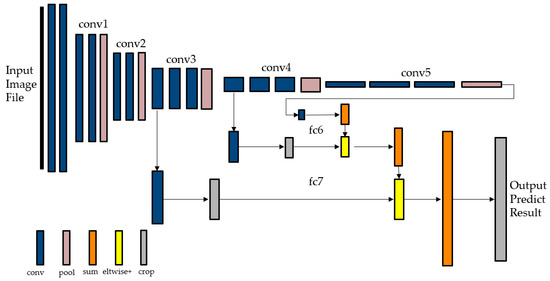

The FCN model consists of five convolution stages and two fully connected operations. Each of the five convolution stages downsamples the image, and the feature maps of the third and fourth stages are also retained. After these five stages, the fully connected operations of the sixth and seventh layers transform the features. The resulting features are then upsampled and supplemented with details from the fourth and then the third convolutional stage to restore the image. Figure 5 [21] shows the structure.

Figure 5.

The FCN structure.

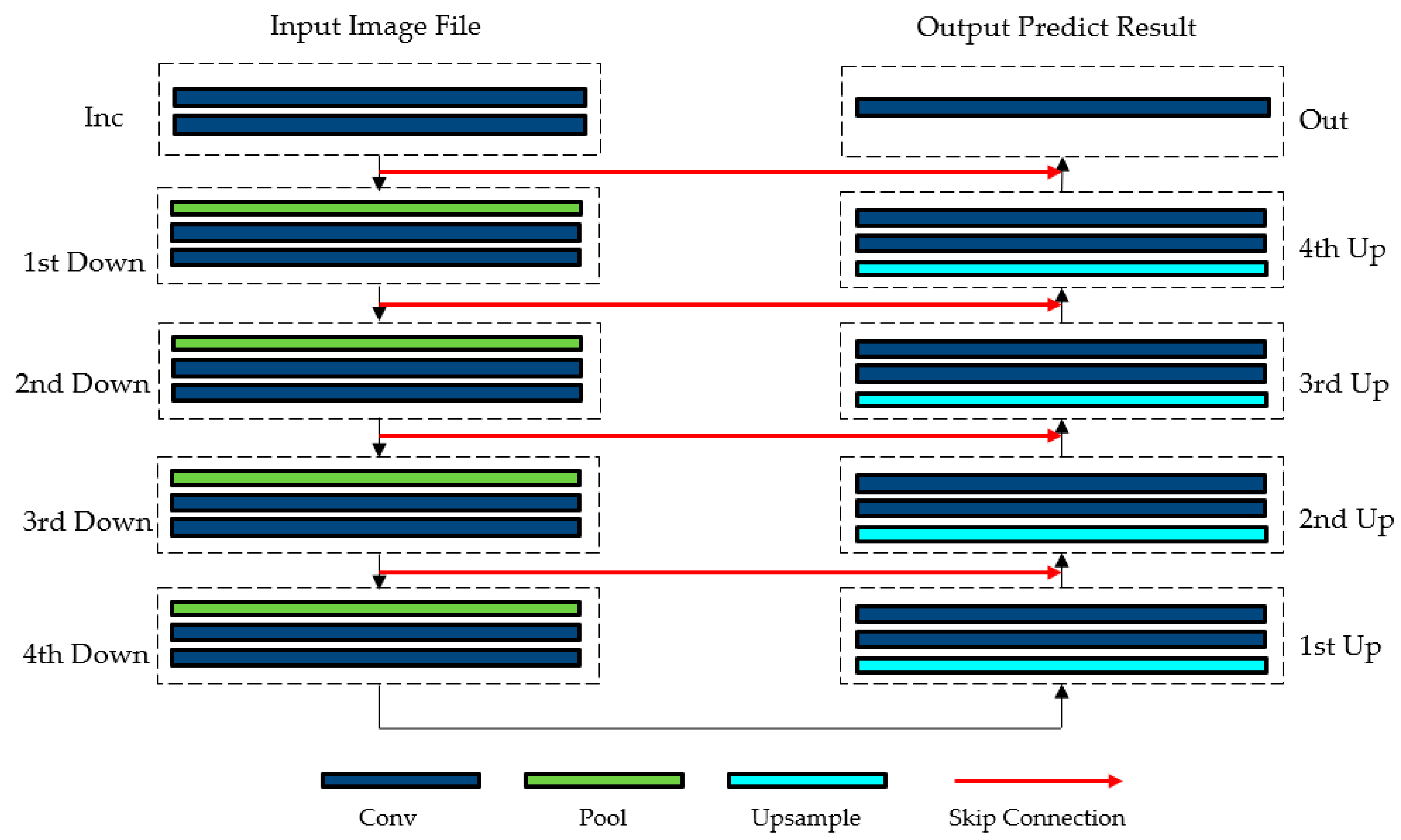

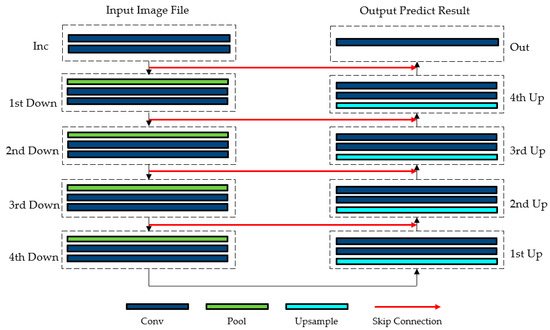

The U-Net model includes four downsampling layers and four upsampling layers. Convolutional layers before the first downsampling layer and after the last upsampling layer initialize the input matrix and map the output results. Each downsampling layer consists of a max-pooling layer followed by two consecutive convolutional layers that extract image features. Each upsampling layer includes Bilinear Interpolation and Transposed Convolution, and a skip connection fuses the output features of the symmetric downsampling layer. Figure 6 [24] shows the structure.

Figure 6.

The U-Net structure.

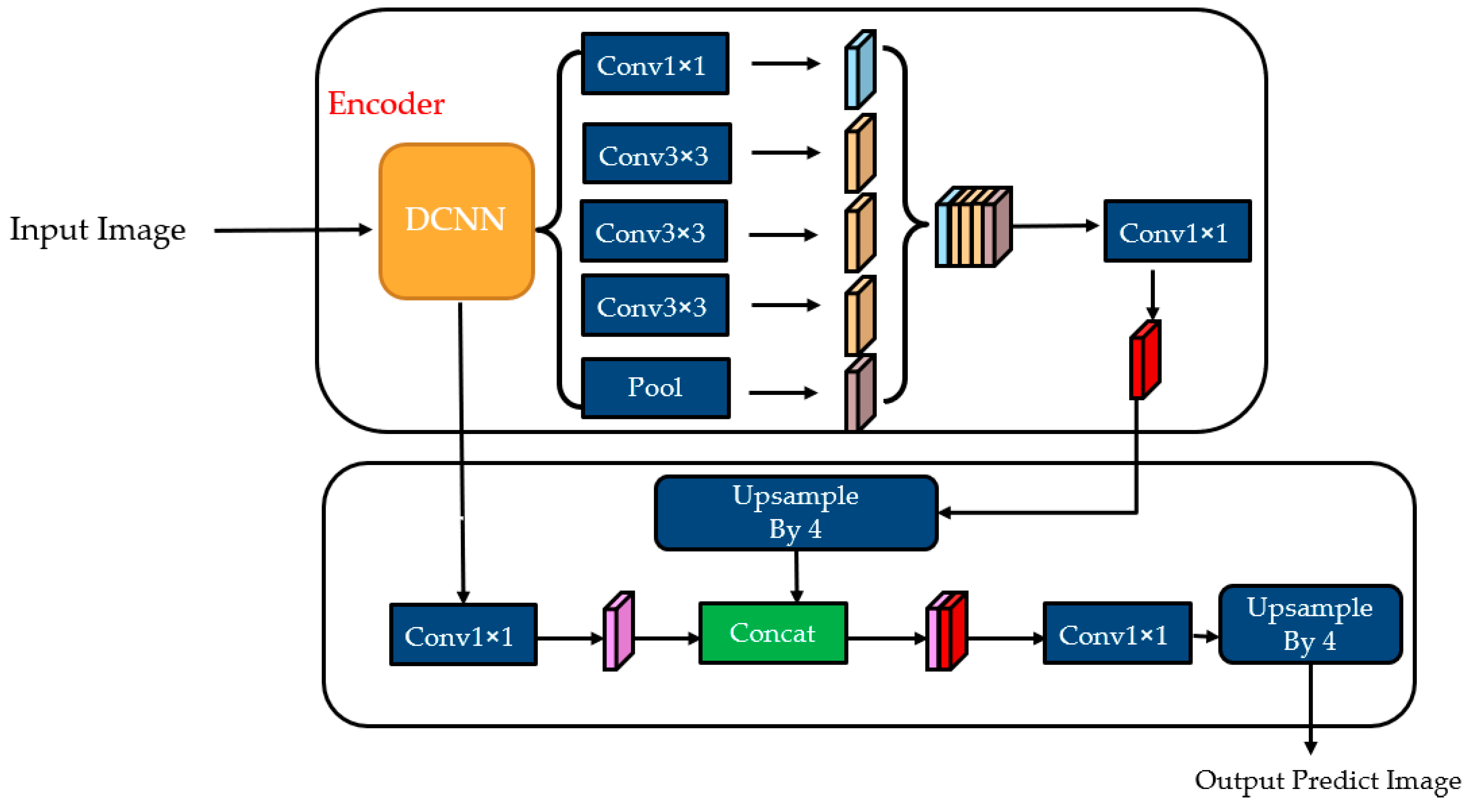

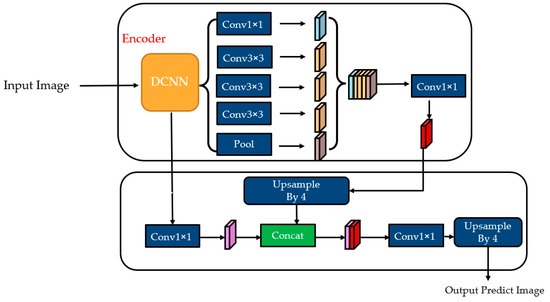

DeepLabv3+ first selects a low-level feature from the encoder and compresses its channels with a 1 × 1 convolutional layer to reduce its proportion in the fused features. It then obtains the segmentation result progressively through 3 × 3 convolutional layers. The decoder upsamples the encoder output by a factor of 4 so that its resolution matches that of the low-level feature. The two features are fused by concatenation and passed through a 3 × 3 convolution, and a final upsampling produces the predicted output. Figure 7 [58] shows the structure.

Figure 7.

The DeepLabv3+ structure.

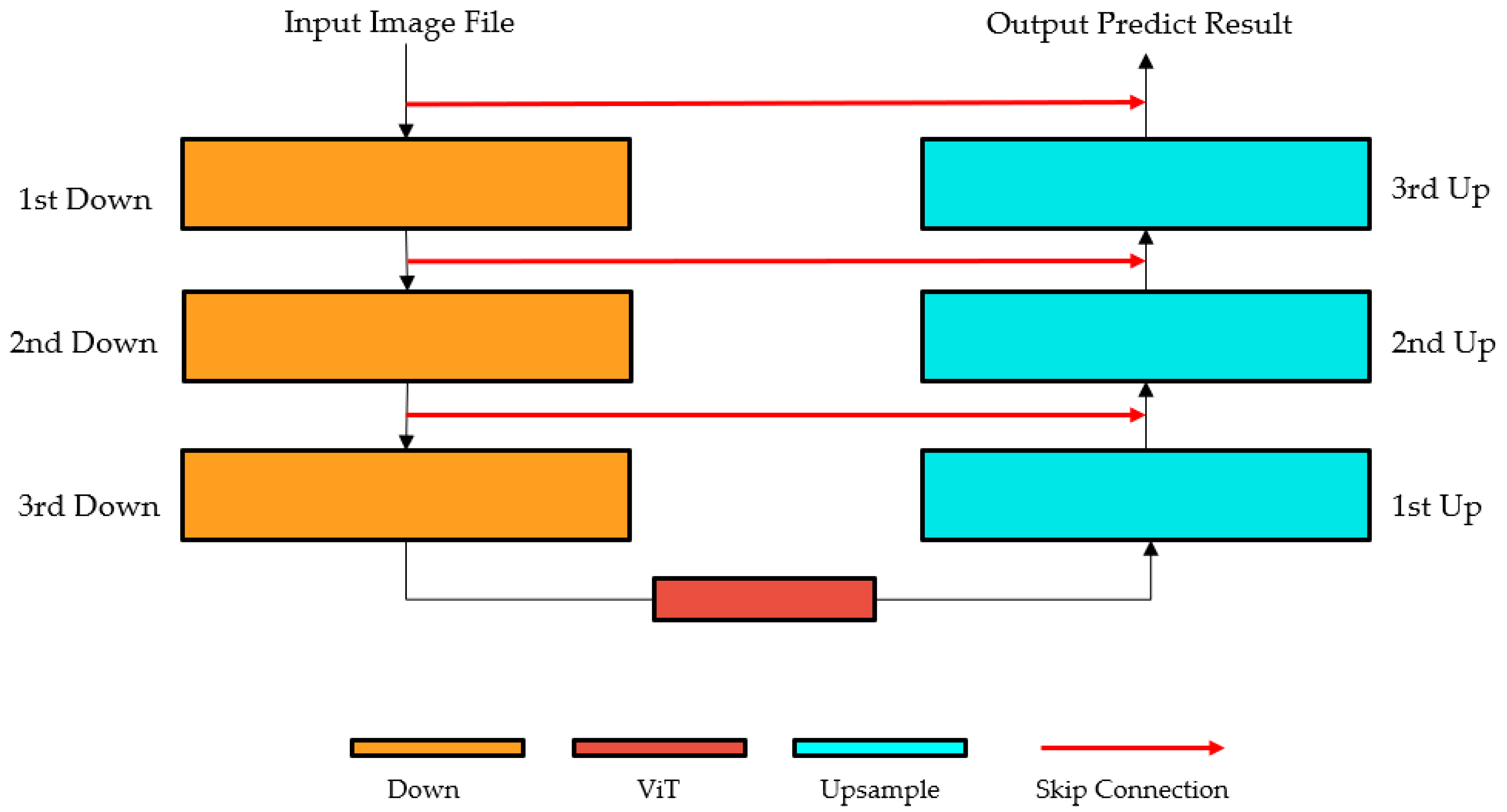

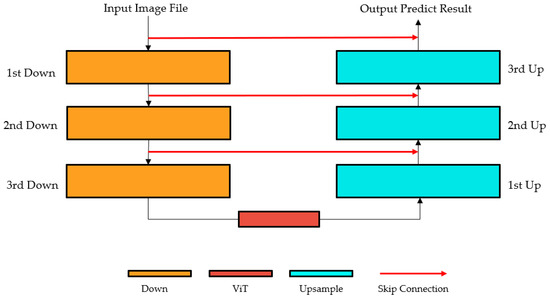

TransUNet is a modification of U-Net. It reduces the number of downsampling and upsampling layers to three and adds a ViT structure between the third downsampling layer and the first upsampling layer, so its encoder combines a convolutional neural network with a Transformer. The decoder is a cascaded upsampler, with skip connections between the encoder and the decoder. Figure 8 [59] shows the structure.

Figure 8.

The TransUNet structure.

The overall structure of Swin-Unet is similar to that of U-Net. It consists of an encoder, a bottleneck block, and a decoder, with skip connections between the encoder and the decoder. The whole structure is U-shaped. The Swin Transformer (Shifted window Transformer) replaces all convolutional layers in the encoder and decoder, and the bottleneck block consists of two consecutive Swin Transformer blocks. Figure 9 [60] shows the structure.

Figure 9.

The Swin-Unet structure.

4. Experiments

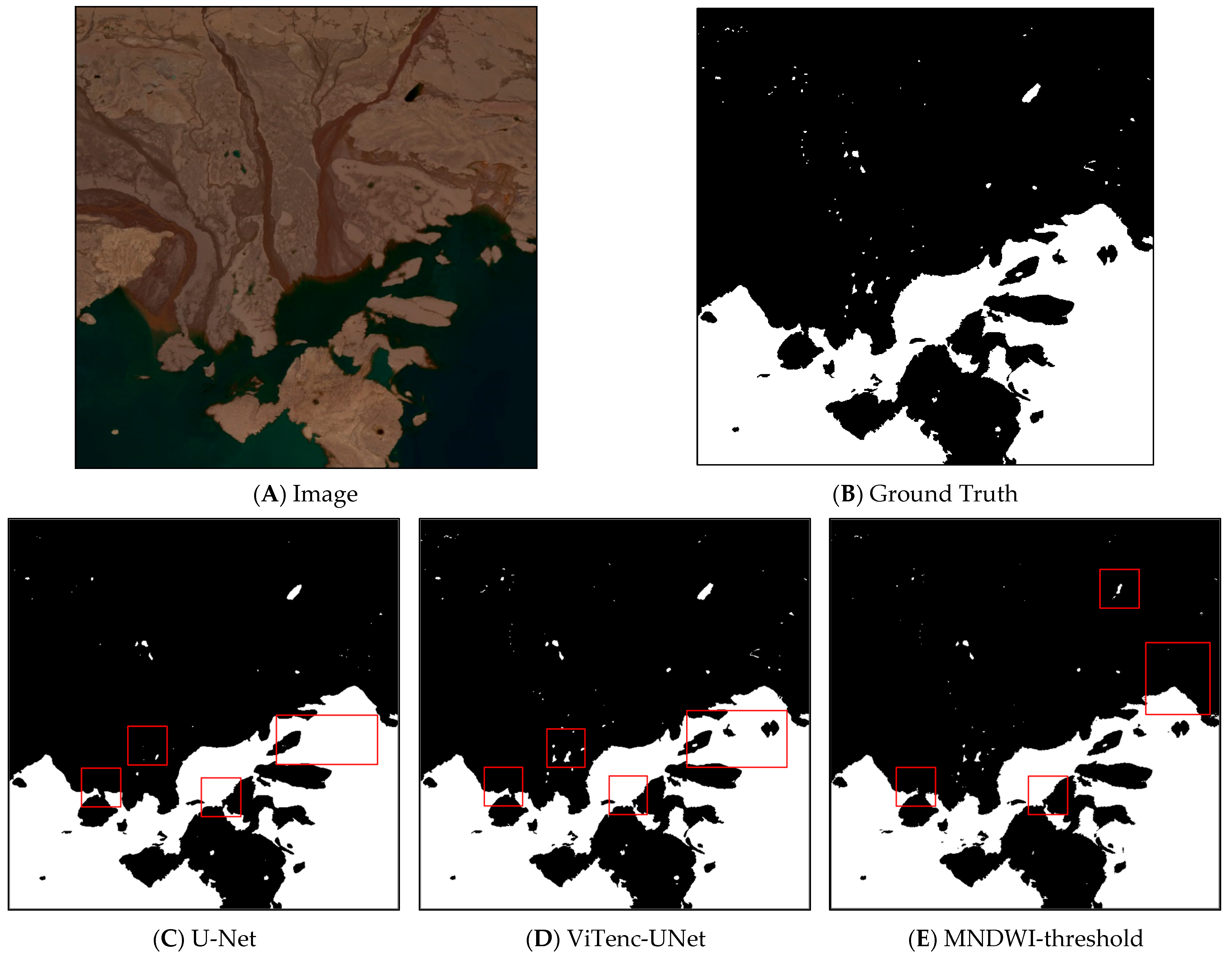

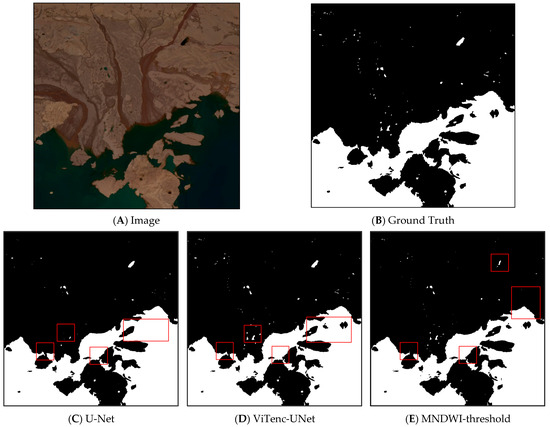

The ViTenc-UNet model was built with PyTorch 1.10.2. Because the experiment is a binary classification task, the model uses the Binary Cross Entropy Loss (BCEL) function. It also uses the Adam optimizer for weight updates and the Sigmoid activation function. The learning rate is 0.01, the default number of training epochs is 300, and the batch size is set to two to reduce memory use. The model is trained on an NVIDIA GeForce RTX 3070 Ti (8 GB) GPU. After each training epoch, the model saves its weights, loss, and other metrics to the local computer. It then resets the confusion matrix and accumulated loss before the next epoch starts. The loss quantifies how close the model's predictions are to the true values, and a smaller loss indicates better prediction. Figure 10C–E compare the predictions in selected areas. ViTenc-UNet extracts lake water markedly better than the unimproved U-Net and the MNDWI-threshold method. ViTenc-UNet generally preserves the continuity and integrity of lake water bodies. The latter two methods, by contrast, misclassify bare surfaces within lakes and miss small lake water bodies.
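The loss described above can be sketched as follows. The sketch computes the mean binary cross-entropy on sigmoid outputs. For non-extreme probabilities, this is the same quantity that PyTorch's `torch.nn.BCELoss` returns with its default mean reduction. The clamping constant `eps` and the toy logits are assumptions, and the `CONFIG` dictionary only records the hyperparameters stated in the text.

```python
import math

# Training settings stated in the text, collected for reference only.
CONFIG = {"optimizer": "Adam", "learning_rate": 0.01, "epochs": 300, "batch_size": 2}


def sigmoid(x):
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def bce_loss(probs, targets, eps=1e-7):
    """Mean binary cross-entropy: -[y log p + (1 - y) log(1 - p)] averaged over pixels."""
    total = 0.0
    for p, y in zip(probs, targets):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(probs)


logits = [2.0, -1.5, 0.3]  # hypothetical raw outputs for three pixels
labels = [1, 0, 1]         # 1 = water, 0 = non-water
loss = bce_loss([sigmoid(z) for z in logits], labels)
```

Confident, correct predictions drive the loss toward 0. A prediction of 0.5 contributes ln 2 ≈ 0.693 per pixel regardless of the label.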

Figure 10.

Prediction comparison before and after the improvement. Black and white regions in the predicted results are non-water and water regions, respectively. Red boxes mark the regions used to compare the predictions of U-Net, ViTenc-UNet, and the MNDWI-threshold method.
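The loss and per-epoch bookkeeping described for ViTenc-UNet can be sketched in pure Python. This is a minimal sketch that mirrors only the per-pixel binary cross-entropy on sigmoid outputs and the reset of the confusion matrix and loss at each epoch. The optimizer, batching, and model are left out. The class and function names are hypothetical. In PyTorch, the same loss is available as `torch.nn.BCEWithLogitsLoss`, which fuses a sigmoid with `torch.nn.BCELoss` in a numerically stable form. That is the loss listed for the public-dataset experiment in Table 5.

```python
import math
from dataclasses import dataclass
from typing import Sequence


def sigmoid(x: float) -> float:
    """Logistic sigmoid, branched to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def bce_with_logits(logit: float, target: float) -> float:
    """Binary cross-entropy of sigmoid(logit) against a 0/1 target.

    max(x, 0) - x*y + log(1 + exp(-|x|)) equals
    -[y*log(sigmoid(x)) + (1 - y)*log(1 - sigmoid(x))] but never takes log(0).
    """
    return max(logit, 0.0) - logit * target + math.log1p(math.exp(-abs(logit)))


@dataclass
class EpochStats:
    """Loss and confusion-matrix counts accumulated over one epoch."""
    loss_sum: float = 0.0
    n: int = 0
    tp: int = 0
    fp: int = 0
    tn: int = 0
    fn: int = 0

    def update(self, logits: Sequence[float], targets: Sequence[int]) -> None:
        for x, y in zip(logits, targets):
            self.loss_sum += bce_with_logits(x, y)
            self.n += 1
            pred = 1 if sigmoid(x) >= 0.5 else 0  # water if probability >= 0.5
            if pred and y:
                self.tp += 1
            elif pred:
                self.fp += 1
            elif y:
                self.fn += 1
            else:
                self.tn += 1

    @property
    def mean_loss(self) -> float:
        return self.loss_sum / self.n if self.n else 0.0


# One toy "batch" of four pixel logits and labels (1 = water).
stats = EpochStats()
stats.update([2.0, -1.5, 0.3, -3.0], [1, 0, 0, 0])
# At the start of the next epoch, a fresh EpochStats() plays the role of
# zeroing out the confusion matrix and the accumulated loss.
```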

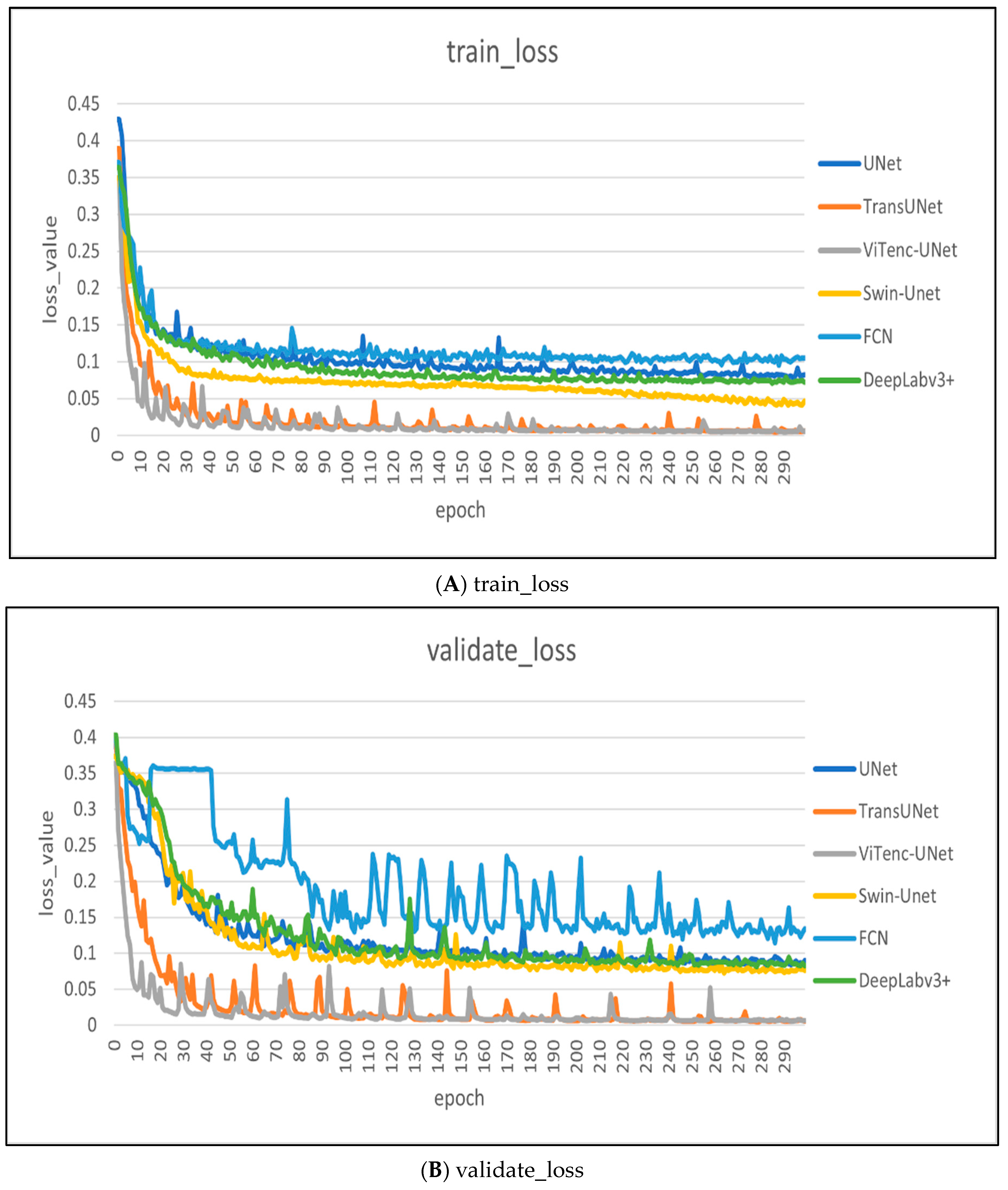

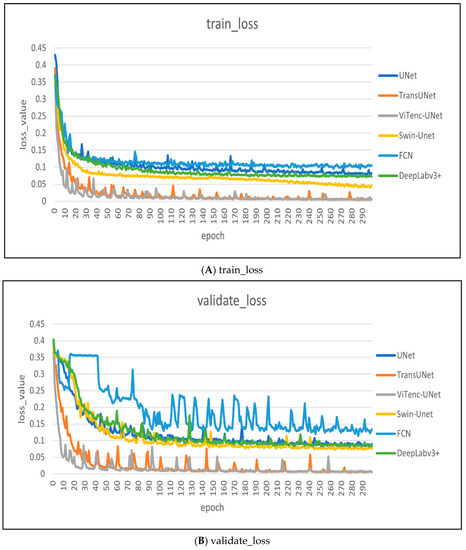

Figure 11A,B show how the training and validation losses of each model changed during training. Both losses decreased as the number of epochs increased, with oscillations along the way. After about the 11th epoch, the loss decreased only slowly. The training loss of ViTenc-UNet reached its minimum of 0.0041 at epoch 299, which was lower than that of the other five models. Its validation loss reached its minimum of 0.0058 at epoch 296, which was also lower than that of the other five models.

Figure 11.

Loss values over the (A) training epochs and (B) validation epochs.

To compare the performance of ViTenc-UNet with that of the other models in the water classification task, we used five indicators: Overall Accuracy ($\mathrm{OA}$), Intersection over Union ($\mathrm{IoU}$), Precision ($P$), Recall ($R$), and F1 score ($F_1$). The classification results fall into four categories:

- Correctly extracted water pixels are True Positives ($\mathrm{TP}$).
- Non-water pixels incorrectly extracted as water are False Positives ($\mathrm{FP}$).
- Correctly extracted non-water pixels are True Negatives ($\mathrm{TN}$).
- Water pixels incorrectly extracted as non-water are False Negatives ($\mathrm{FN}$).

$\mathrm{OA}$ is the ratio of the number of correctly extracted water and non-water pixels to the total number of pixels:

$$\mathrm{OA} = \frac{\mathrm{TP} + \mathrm{TN}}{\mathrm{TP} + \mathrm{FP} + \mathrm{TN} + \mathrm{FN}}$$

$\mathrm{IoU}$ is the ratio of the intersection to the union of the predicted and true water regions:

$$\mathrm{IoU} = \frac{\mathrm{TP}}{\mathrm{TP} + \mathrm{FP} + \mathrm{FN}}$$

$P$ is the ratio of the number of correctly extracted water pixels to all pixels classified as water:

$$P = \frac{\mathrm{TP}}{\mathrm{TP} + \mathrm{FP}}$$

$R$ is the ratio of the number of correctly extracted water pixels to all pixels that are actually water:

$$R = \frac{\mathrm{TP}}{\mathrm{TP} + \mathrm{FN}}$$

The $F_1$ score is the harmonic mean of precision ($P$) and recall ($R$):

$$F_1 = \frac{2 \times P \times R}{P + R}$$
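The five indicators defined above can be computed directly from the four pixel counts. The sketch below assumes the counts are pooled over all evaluated pixels. This section does not say whether the reported values were pooled or averaged per image, so treat this as one possible convention. The function names are hypothetical.

```python
from typing import Dict


def _ratio(num: float, den: float) -> float:
    """Division that returns 0.0 instead of failing on an empty denominator."""
    return num / den if den else 0.0


def water_metrics(tp: int, fp: int, tn: int, fn: int) -> Dict[str, float]:
    """OA, IoU, precision (P), recall (R), and F1 for the water class,
    computed from pixel counts pooled over the evaluated tiles."""
    p = _ratio(tp, tp + fp)
    r = _ratio(tp, tp + fn)
    return {
        "OA": _ratio(tp + tn, tp + fp + tn + fn),
        "IoU": _ratio(tp, tp + fp + fn),
        "P": p,
        "R": r,
        "F1": _ratio(2 * p * r, p + r),
    }


# Hypothetical counts for a 100-pixel tile: 45 water and 55 non-water pixels.
m = water_metrics(tp=40, fp=5, tn=50, fn=5)
```

If the metrics are averaged per tile instead, call `water_metrics` on each tile's counts and average the resulting values.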

Table 2 summarizes the quantitative results of ViTenc-UNet compared with the other models and the MNDWI-threshold method. ViTenc-UNet outperforms the other methods on every evaluation indicator. Even against the advanced DeepLabv3+, TransUNet, and Swin-Unet models, it achieves better overall classification of lake water bodies. The overall accuracy, intersection over union, precision, recall, and F1 score of ViTenc-UNet reached 99.04%, 98.68%, 99.08%, 98.59%, and 98.75%, respectively. These values are 4.16%, 6.20%, 5.34%, 4.80%, and 5.34% higher than those of the original U-Net model, and all indicators were also clearly better than those of the other methods. These results show that ViTenc-UNet identifies and extracts lakes on the Qinghai-Tibet Plateau effectively.

Table 2.

Quantitative evaluation results.

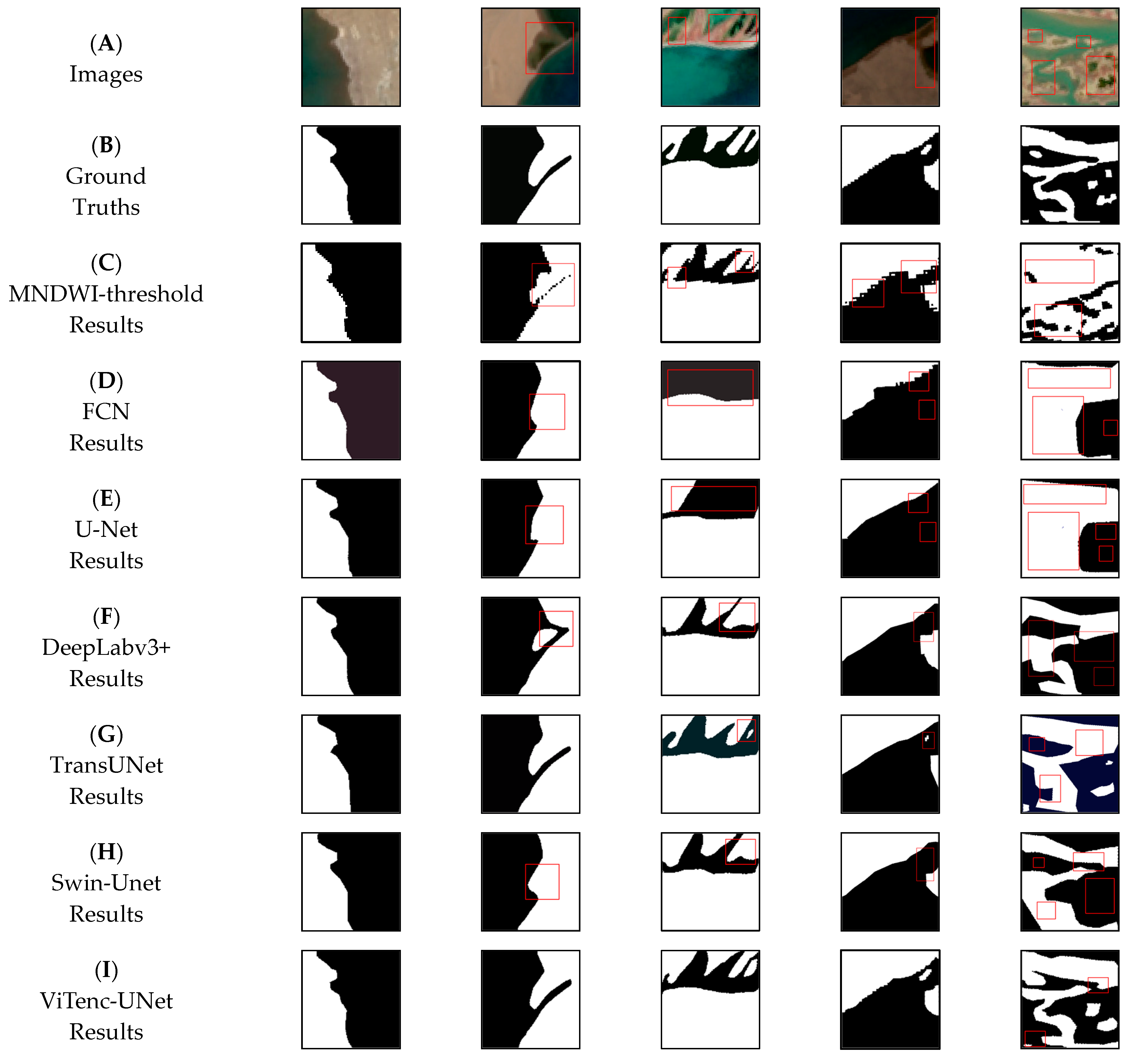

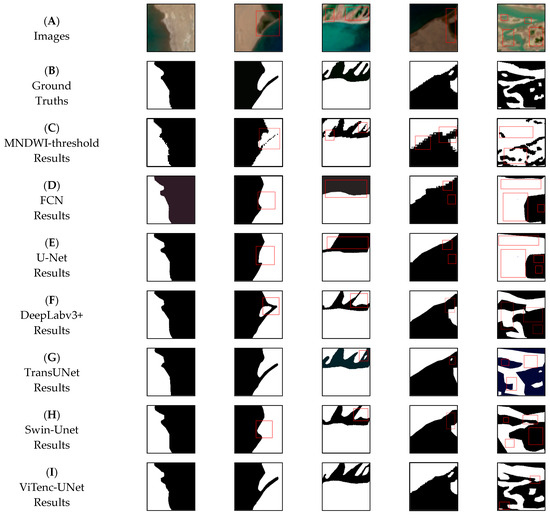

Because the dataset is large and space is limited, Figure 12 presents images of five typical regions. For each region, it shows the labels and the predictions of each of the above methods.

Figure 12.

Prediction results for lake water bodies in different regions: (A) images; (B) ground truths; (C) MNDWI results; (D) FCN results; (E) U-Net results; (F) DeepLabv3+ results; (G) TransUNet results; (H) Swin-Unet results; (I) ViTenc-UNet results. Black and white regions in the predicted results are non-water and water regions, respectively. Red boxes mark the regions where prediction errors occur.

Figure 12 shows that all seven methods identified and extracted water bodies in the different regions, but ViTenc-UNet produced better extractions than the other six.

- **Simple lakes and shorelines.** ViTenc-UNet performs similarly to the other six methods, and all of them achieve the expected results.
- **Complex shorelines and exposed reefs within lakes.** The FCN, U-Net, and MNDWI-threshold methods show obvious misclassification and omission. DeepLabv3+, TransUNet, and Swin-Unet perform slightly worse than ViTenc-UNet. Their shortfall lies mainly in the insufficient spatial continuity of the extracted lake water bodies, and they also misclassify part of the land surface as lake water. ViTenc-UNet performs well and depicts the distribution of lake water bodies more faithfully.
- **Alluvial fans of inflowing rivers that interlace with lake water.** FCN and U-Net cannot accurately distinguish water bodies from other features. DeepLabv3+ and Swin-Unet identify most water bodies but miss many fine details of some lakes, so they do not meet the classification requirements. TransUNet performs slightly better, but it still captures the spatial relationships between water bodies insufficiently.
- **MNDWI-threshold boundaries.** The MNDWI-threshold method follows the general course of simple water boundaries, but Figure 12 shows that pixels are missing from its predictions. Along complex boundaries, its misclassifications and omissions are very obvious.

ViTenc-UNet is clearly better than the other six methods in these areas. It predicts lake edges more accurately and captures both the spatial continuity and the spectral information of lake water bodies.

5. Discussion

Targets of conventional semantic segmentation tasks, such as roads [61], buildings [62], and farmland [63], generally have relatively regular polygonal shapes and are therefore comparatively easy to classify. In contrast, the lakes of the Qinghai-Tibet Plateau are shaped by changing water levels and complex terrain. They take on various irregular shapes in remote sensing images from different years, which poses great challenges to classification accuracy. In the past, lake water datasets were generally labeled visually and manually with software such as “LabelMe”. During such labeling, several factors inevitably cause water pixels to be confused with pixels of other features: the varied shapes of lakes, the convoluted shorelines, and the differing mineral content of lake water. The confusion is especially noticeable in stretches of more than two consecutive water pixels and on lakeshore mudflats. These labeling errors introduce false positives into model training and thereby degrade model performance. To obtain reliable ground truth for lake water boundaries on the Qinghai-Tibet Plateau, we mainly used the water index threshold method and manually corrected unreasonable boundaries. This greatly reduced the time spent on manual visual interpretation, and the time saved could instead be used to correct boundary details in specific regions. Dataset size also affects the performance of ViTenc-UNet. The core of its encoder, the Vision Transformer layer, lacks the inductive bias provided by convolutional layers, and without these priors the model performs poorly when training samples are scarce. We therefore selected multiple Sentinel-2 scenes to enlarge the dataset.
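The water index threshold step used to produce preliminary labels can be sketched as follows. The sketch assumes the index is MNDWI (the index behind the MNDWI-threshold baseline), computed as (Green − SWIR1)/(Green + SWIR1) from Sentinel-2 band B3 (green) and band B11 (SWIR1). It also assumes a threshold of 0. This section does not state the index or threshold actually used, so treat both, along with the function names, as hypothetical. The resulting mask would then be corrected manually as described above.

```python
from typing import List

Band = List[List[float]]


def mndwi(green: Band, swir1: Band) -> Band:
    """MNDWI = (Green - SWIR1) / (Green + SWIR1), computed pixel by pixel.

    For Sentinel-2 this would typically use B3 and B11, with B11 resampled
    to the 10 m grid of B3 beforehand. Pixels with a zero sum are set to 0.
    """
    out = []
    for g_row, s_row in zip(green, swir1):
        row = []
        for g, s in zip(g_row, s_row):
            denom = g + s
            row.append((g - s) / denom if denom != 0 else 0.0)
        out.append(row)
    return out


def threshold_mask(index: Band, t: float = 0.0) -> List[List[int]]:
    """Binary water mask: 1 where the index exceeds the threshold t, else 0."""
    return [[1 if v > t else 0 for v in row] for row in index]


# Toy 2 x 2 reflectance tiles (hypothetical values).
green = [[0.10, 0.08], [0.05, 0.12]]
swir1 = [[0.02, 0.15], [0.20, 0.03]]
mask = threshold_mask(mndwi(green, swir1))  # preliminary label, to be corrected manually
```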

The Transformer was originally designed around self-attention for word- and sentence-level translation tasks in natural language processing [64]. Compared with text data composed of words and sentences, remote sensing image data have more dimensions and more complex spatial relationships. The ViT used in this article therefore splits the image into several “image patches”, and the size of these patches depends on the size of the input image. To determine a suitable input size, we tested several commonly used image sizes. The five remote sensing images used in this paper were tiled into datasets of 128 × 128, 256 × 256, 480 × 480, 512 × 512, and 600 × 600 pixels. The total amount of image information was the same for every tile size, and each dataset was tested with ViTenc-UNet.
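Splitting a tile into “image patches” can be sketched in a few lines. The paragraph above says the patch size depends on the input size. One way to read that is a fixed number of patches per side, so the patch side grows with the tile. The sketch assumes a hypothetical 8 × 8 patch grid. That grid, the single-band tile, and the function name are illustrative assumptions, not the configuration reported for ViTenc-UNet.

```python
from typing import List

Tile = List[List[float]]  # one band of an H x W tile


def split_into_patches(tile: Tile, patch: int) -> List[List[float]]:
    """Cut an H x W tile into non-overlapping patch x patch squares, each flattened
    row by row into one token vector, in row-major patch order (as in ViT)."""
    h, w = len(tile), len(tile[0])
    if h % patch or w % patch:
        raise ValueError(f"{h}x{w} tile is not divisible by patch size {patch}")
    tokens = []
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            tokens.append([tile[r][c]
                           for r in range(top, top + patch)
                           for c in range(left, left + patch)])
    return tokens


# Hypothetical rule: keep a fixed 8 x 8 grid of patches (64 tokens), so the patch
# side grows with the tile sizes tested in Table 3.
GRID = 8
patch_sides = {size: size // GRID for size in (128, 256, 480, 512, 600)}
# -> 16, 32, 60, 64, and 75 pixels per patch side, respectively
```

Under this reading, a 600 × 600 tile yields 75 × 75-pixel patches, which illustrates how an overly large input produces overly large patches.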

The test results are shown in Table 3. As the tile size decreased to 256 × 256, the test indicators showed an overall upward trend, and 256 × 256 was the optimal size. The recall of the 600 × 600 dataset was significantly lower than that of the other four sizes. This indicates that when the input image is too large, the initialized “image patches” are too large to suit the Transformer layer, so parts of the target are missed. The 128 × 128 tiles were too small and fragmented the spatial relationships, so their indicators were slightly lower than the optimum.

Table 3.

Results of the input image size test.

FCN, U-Net, and DeepLabv3+ contain no Transformer layer and still extract image features with convolutional layers. They are limited by the small receptive field of convolution and cannot accurately capture the spatial continuity between multiple water bodies. The models with Transformer layers each have different advantages in this lake water classification task. These advantages can be traced to the position, role, and number of Transformer layers in each model.

- **ViTenc-UNet.** The Vision Transformer layer is the core of each encoder group and sits in the middle of the group. It replaces the convolutional layers that learn image features in the original U-Net and learns deep image features instead. The retained 1 × 1 convolutional layer then constrains the feature dimension of the output, which preserves the spatial continuity of the image and reduces misclassification and omission.
- **TransUNet.** The ViT layer sits between the downsampling and upsampling paths and does not replace the convolutional layers of the original downsampling path. Its encoder therefore still relies mainly on stacked 3 × 3 convolutional layers. The Vision Transformer and the convolutional layers jointly learn image features, with the Transformer assigning attention weights to the features extracted by the convolutional layers.
- **Swin-Unet.** The Swin Transformer computes multi-head self-attention (MSA) within each window, so its receptive field grows as layers are added and its overall structure is a “pyramid”. In contrast, the Vision Transformer’s MSA weights global features, so its receptive field is fixed and its overall structure is columnar. The Swin Transformer completely replaces the convolutional layers in the original downsampling and upsampling paths. Unlike in the other two Transformer-based models, the output of each sampling stage has no direct connection path to the preceding Swin Transformer layer. In addition, the gradient flow is blocked by the layer normalization module, which can cause vanishing gradients [60]. Its classification performance is therefore lower than that of ViTenc-UNet and TransUNet, even though those models contain fewer Transformer layers. The many Swin Transformer layers in the model also greatly increase its running time.
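The contrast between global and windowed attention, and the role of the retained 1 × 1 convolution, can be illustrated with a minimal pure-Python sketch of one heavily simplified encoder step. It consists of single-head global self-attention followed by a 1 × 1 convolution that changes the channel count. The sketch omits layer normalization, the MLP, multiple heads, and residual connections. All weights are placeholders, and the function names are hypothetical. It is not the ViTenc-UNet implementation. In a Swin block, the `scores` matrix would be computed only among tokens in the same window.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain (n x k) @ (k x m) matrix product."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    e = [math.exp(v - m) for v in row]
    s = sum(e)
    return [v / s for v in e]


def global_self_attention(x: Matrix, wq: Matrix, wk: Matrix,
                          wv: Matrix) -> Tuple[Matrix, Matrix]:
    """Single-head scaled dot-product attention over all N tokens of x (N x d).

    Each output token is a weighted mix of every input token, so the receptive
    field already covers the whole tile in the first layer.
    """
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    scale = 1.0 / math.sqrt(len(k[0]))
    scores = [[scale * sum(a * b for a, b in zip(qi, kj)) for kj in k] for qi in q]
    weights = [softmax(row) for row in scores]
    return matmul(weights, v), weights


def conv1x1(tokens: Matrix, w: Matrix) -> Matrix:
    """A 1 x 1 convolution applies the same (c_in x c_out) linear map to every
    token's channel vector: it changes the channel count without mixing positions."""
    return matmul(tokens, w)


# Toy example: 3 tokens with 2 channels, identity projections, then 2 -> 4 channels.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
eye = [[1.0, 0.0], [0.0, 1.0]]
attended, attn = global_self_attention(x, eye, eye, eye)
out = conv1x1(attended, [[1.0, 0.0, 2.0, 0.0], [0.0, 1.0, 0.0, 3.0]])
```

Every entry of `attn` is positive, which reflects the fixed, global receptive field of a ViT layer described above.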

We conducted an ablation experiment to examine how the CBAM attention mechanism and the ViT layer each contribute to the improvement. Two variants were derived from the U-Net source model:

- In the first variant, the encoder is identical to the ViTenc-UNet encoder, but the decoder is the original U-Net decoder without CBAM.
- In the second variant, the decoder is identical to the ViTenc-UNet decoder (with CBAM), but the encoder is the unmodified U-Net encoder.

The results of the ablation experiment are shown in Table 4.

Table 4.

Ablation experiment results.

When only the CBAM attention mechanism was added to the decoder, all indicators except IoU, which increased slightly, decreased. When only the ViT layer was added to the encoder, all evaluation indicators improved significantly, although the gains were still slightly smaller than those of the full ViTenc-UNet. This shows that the ViT layer effectively improves the model’s recognition and extraction ability. Added on its own, the CBAM attention mechanism did not improve this ability. As a complement to the ViT encoder, however, it helped the model retain the spatial relationship and spectral information it extracted.
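The spatial and spectral retention attributed to CBAM is easier to see from the module’s forward pass. Below is a minimal pure-Python sketch of CBAM as defined by Woo et al. [57]. Channel attention, which the paper refers to as spectral attention, comes first, followed by spatial attention. The sketch does not show how CBAM is wired into the ViTenc-UNet decoder. The weights are placeholders, MLP biases are omitted, and a 3 × 3 kernel stands in for CBAM’s 7 × 7 to keep the toy input small. The function names are hypothetical.

```python
import math
from typing import List

Feature = List[List[List[float]]]  # C x H x W feature map
Map2D = List[List[float]]


def sigmoid(v: float) -> float:
    """Numerically stable logistic function."""
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    z = math.exp(v)
    return z / (1.0 + z)


def channel_attention(f: Feature, w1: Map2D, w2: Map2D) -> List[float]:
    """Channel ('spectral') attention: a shared two-layer MLP applied to the
    average- and max-pooled channel descriptors, summed, then passed through a
    sigmoid. w1 is (C/r x C) and w2 is (C x C/r)."""
    avg = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in f]
    mx = [max(map(max, ch)) for ch in f]

    def mlp(d: List[float]) -> List[float]:
        hidden = [max(0.0, sum(w * x for w, x in zip(row, d))) for row in w1]  # ReLU
        return [sum(w * h for w, h in zip(row, hidden)) for row in w2]

    return [sigmoid(a + m) for a, m in zip(mlp(avg), mlp(mx))]


def spatial_attention(f: Feature, kernel: Feature) -> Map2D:
    """Spatial attention: mean- and max-pool across channels, convolve the
    resulting 2-channel map with a 2 x k x k kernel (zero padding), then sigmoid."""
    c, h, w = len(f), len(f[0]), len(f[0][0])
    pooled = (
        [[sum(f[i][r][q] for i in range(c)) / c for q in range(w)] for r in range(h)],
        [[max(f[i][r][q] for i in range(c)) for q in range(w)] for r in range(h)],
    )
    k = len(kernel[0])
    pad = k // 2
    out = []
    for r in range(h):
        row = []
        for q in range(w):
            s = 0.0
            for plane, kern in zip(pooled, kernel):
                for dr in range(k):
                    for dq in range(k):
                        rr, qq = r + dr - pad, q + dq - pad
                        if 0 <= rr < h and 0 <= qq < w:
                            s += kern[dr][dq] * plane[rr][qq]
            row.append(sigmoid(s))
        out.append(row)
    return out


def cbam(f: Feature, w1: Map2D, w2: Map2D, kernel: Feature) -> Feature:
    """CBAM refinement: channel attention first, then spatial attention."""
    mc = channel_attention(f, w1, w2)
    f1 = [[[mc[i] * v for v in row] for row in ch] for i, ch in enumerate(f)]
    ms = spatial_attention(f1, kernel)
    return [[[ms[r][q] * v for q, v in enumerate(row)] for r, row in enumerate(ch)]
            for ch in f1]


# Toy input: C = 2 channels on a 2 x 2 grid, reduction r = 2, 3 x 3 kernel.
feat = [[[1.0, 2.0], [3.0, 4.0]], [[0.5, -1.0], [2.0, 0.0]]]
refined = cbam(feat, w1=[[0.5, -0.5]], w2=[[1.0], [-1.0]],
               kernel=[[[0.1] * 3 for _ in range(3)], [[0.2] * 3 for _ in range(3)]])
```

Both attention maps lie in (0, 1), so CBAM only re-weights existing features. This is consistent with the ablation result that CBAM helps as a supplement to the ViT encoder rather than as an independent feature extractor.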

To verify the reliability and stability of ViTenc-UNet, we also tested the model on a public lake semantic segmentation dataset [65]. The results of this additional experiment are shown in Table 5.

Table 5.

Results on the public dataset (100 epochs, batch size 16, Adam optimizer, BCEWithLogitsLoss, learning rate 0.001).

In this experiment, ViTenc-UNet again performed well and demonstrated stable and reliable water boundary recognition. FCN, by contrast, performed poorly and could not complete the prediction task well. ViTenc-UNet outperformed the other models on all five indicators, with notably higher IoU and F1 scores. To some extent, these results indicate that ViTenc-UNet has considerable potential for identifying and extracting lake water boundaries. ViTenc-UNet ranks second among the six models in training and testing time cost. Even so, it achieves the best performance on all five indicators, which should benefit the extraction of lake water bodies. In future work, we will also consider reducing the number of model parameters to shorten the runtime.

6. Conclusions

With the diversification of remote sensing data sources and increasingly rich image information, traditional lake water extraction methods can no longer meet current requirements for the efficient and accurate monitoring of lake water bodies. Our goal was to better handle the extraction of large lake water bodies and to achieve high-precision extraction of diverse lakes across the complex terrain of the Qinghai-Tibet Plateau. To this end, we proposed a new model, named ViTenc-UNet, that uses the Vision Transformer as its main image feature extraction structure. In the encoder of a conventional semantic segmentation model, the convolutional layers used to extract image features are replaced with the Vision Transformer structure. This preserves the spatial continuity of lake water bodies in remote sensing images and reduces interference from noise points and salt-and-pepper noise. We also added spatial and spectral attention mechanisms to the decoder to fully exploit the spatial and multispectral information of water bodies in the image. To verify the performance of ViTenc-UNet, we collected data from multiple typical lake groups in the inflow and outflow areas of the Qinghai-Tibet Plateau and conducted experiments on these datasets. In these test areas, ViTenc-UNet outperformed the other semantic segmentation models to varying degrees on multiple indicators, showing excellent performance in the semantic segmentation of lake water.

In future work, we will continue to improve ViTenc-UNet. Planned improvements include, but are not limited to, the following:

- refining the position, number, and role of the Transformer structures in the model;
- adjusting the number of layers and the skip connections between the encoder and decoder;
- adopting a lightweight encoder and decoder to streamline the model structure and reduce training time.

We will also expand the dataset and increase the proportion of lake water bodies from different topographic and geomorphic settings, thereby improving the generalizability of the model across lake water bodies.

Author Contributions

Methodology, X.Z. and H.W.; Supervision, H.W.; Software, X.Z. and L.L.; Funding acquisition, H.W. and L.L.; Data curation, X.Z.; Validation, X.Z.; Writing—original draft, X.Z.; Writing—review and editing, X.Z., H.W., Y.Z., J.L., T.Q., H.T. and Y.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Key Research and Development Program of China (2022YFF1303404), the Key Science and Technology Project of Inner Mongolia (2021ZD0011), the Key Science and Technology Project of Inner Mongolia (2021ZD0015) and the Key Science and Technology Project of Sichuan Province (2022YFS0450).

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Wang, J.; Sheng, Y.; Tong, T.S.D. Monitoring Decadal Lake Dynamics across the Yangtze Basin Downstream of Three Gorges Dam. Remote Sens. Environ. 2014, 152, 251–269. [Google Scholar] [CrossRef]

- Sheng, Y.; Song, C.; Wang, J.; Lyons, E.A.; Knox, B.R.; Cox, J.S.; Gao, F. Representative Lake Water Extent Mapping at Continental Scales Using Multi-Temporal Landsat-8 Imagery. Remote Sens. Environ. 2016, 185, 129–141. [Google Scholar] [CrossRef]

- Zhang, G.; Wang, M.; Zhou, T.; Chen, W. Progress in remote sensing monitoring of lake area, water level, and volume changes on the Tibetan Plateau. Natl. Remote Sens. Bull. 2022, 26, 115–125. [Google Scholar]

- Ma, W.; Bai, L.; Ma, W.; Hu, W.; Xie, Z.; Su, R.; Wang, B.; Ma, Y. Interannual and Monthly Variability of Typical Inland Lakes on the Tibetan Plateau Located in Three Different Climatic Zones. Remote Sens. 2022, 14, 5015. [Google Scholar] [CrossRef]

- Zhang, G.; Yao, T.; Chen, W.; Zheng, G.; Shum, C.K.; Yang, K.; Piao, S.; Sheng, Y.; Yi, S.; Li, J.; et al. Regional Differences of Lake Evolution across China during 1960s–2015 and Its Natural and Anthropogenic Causes. Remote Sens. Environ. 2019, 221, 386–404. [Google Scholar] [CrossRef]

- Zhou, J.; Wang, L.; Zhong, X.; Yao, T.; Qi, J.; Wang, Y.; Xue, Y. Quantifying the Major Drivers for the Expanding Lakes in the Interior Tibetan Plateau. Sci. Bull. 2022, 67, 474–478. [Google Scholar] [CrossRef]

- McFeters, S.K. The use of the Normalized Difference Water Index(NDWI) in the delineation of open water features. Int. J. Remote Sens. 1996, 17, 1425–1432. [Google Scholar] [CrossRef]

- Xu, H. Modification of normalized difference water index(NDWI) to enhance open features in remotely sensed imagery. Int. J. Remote Sens. 2006, 27, 3025–3033. [Google Scholar] [CrossRef]

- Feyisa, G.L.; Meilby, H.; Fensholt, R.; Proud, S.R. Automated Water Extraction Index: A New Technique for Surface Water Mapping Using Landsat Imagery. Remote Sens. Environ. 2014, 140, 23–35. [Google Scholar] [CrossRef]

- Yang, X.; Qin, Q.; Yésou, H.; Ledauphin, T.; Koehl, M.; Grussenmeyer, P.; Zhu, Z. Monthly Estimation of the Surface Water Extent in France at a 10-m Resolution Using Sentinel-2 Data. Remote Sens. Environ. 2020, 244, 111803. [Google Scholar] [CrossRef]

- Li, Z.; Zhang, X.; Xiao, P. Spectral Index-Driven FCN Model Training for Water Extraction from Multispectral Imagery. ISPRS J. Photogramm. Remote Sens. 2022, 192, 344–360. [Google Scholar] [CrossRef]

- Li, J.; Meng, Y.; Li, Y.; Cui, Q.; Yang, X.; Tao, C.; Wang, Z.; Li, L.; Zhang, W. Accurate Water Extraction Using Remote Sensing Imagery Based on Normalized Difference Water Index and Unsupervised Deep Learning. J. Hydrol. 2022, 612, 128202. [Google Scholar] [CrossRef]

- Lary, D.J.; Alavi, A.H.; Gandomi, A.H.; Walker, A.L. Machine Learning in Geosciences and Remote Sensing. Geosci. Front. 2016, 7, 3–10. [Google Scholar] [CrossRef]

- Abid, N.; Shahzad, M.; Malik, M.I.; Schwanecke, U.; Ulges, A.; Kovács, G.; Shafait, F. UCL: Unsupervised Curriculum Learning for Water Body Classification from Remote Sensing Imagery. Int. J. Appl. Earth Obs. Geoinf. 2021, 105, 102568. [Google Scholar] [CrossRef]

- Chen, M.F.; Cohen-Wang, B.; Mussmann, S.; Sala, F.; Ré, C. Comparing the Value of Labeled and Unlabeled Data in Method-of-Moments Latent Variable Estimation. arXiv 2021, arXiv:2103.02761. [Google Scholar]

- Cui, W.; Yao, M.; Hao, Y.; Wang, Z.; He, X.; Wu, W.; Li, J.; Zhao, H.; Xia, C.; Wang, J. Knowledge and Geo-Object Based Graph Convolutional Network for Remote Sensing Semantic Segmentation. Sensors 2021, 21, 3848. [Google Scholar] [CrossRef]

- Li, T.; Zhang, J.; Zhang, Y. Classification of Hyperspectral Image Based on Deep Belief Networks. In Proceedings of the 2014 IEEE International Conference on Image Processing (ICIP), Paris, France, 27–30 October 2014; IEEE: New York, NY, USA; pp. 5132–5136. [Google Scholar]

- Makantasis, K.; Karantzalos, K.; Doulamis, A.; Doulamis, N. Deep Supervised Learning for Hyperspectral Data Classification through Convolutional Neural Networks. In Proceedings of the 2015 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Milan, Italy, 26–31 July 2015; IEEE: New York, NY, USA; pp. 4959–4962. [Google Scholar]

- Chen, Y.; Tang, L.; Kan, Z.; Bilal, M.; Li, Q. A Novel Water Body Extraction Neural Network (WBE-NN) for Optical High-Resolution Multispectral Imagery. J. Hydrol. 2020, 588, 125092. [Google Scholar] [CrossRef]

- Dong, Z.; Wang, G.; Amankwah, S.O.Y.; Wei, X.; Hu, Y.; Feng, A. Monitoring the Summer Flooding in the Poyang Lake Area of China in 2020 Based on Sentinel-1 Data and Multiple Convolutional Neural Networks. Int. J. Appl. Earth Obs. Geoinf. 2021, 102, 102400. [Google Scholar] [CrossRef]

- Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015. [Google Scholar]

- Pan, J.; Wei, Z.; Zhao, Y.; Zhou, Y.; Lin, X.; Zhang, W.; Tang, C. Enhanced FCN for Farmland Extraction from Remote Sensing Image. Multimed. Tools Appl. 2022, 81, 38123–38150. [Google Scholar] [CrossRef]

- Deng, H.; Xu, T.; Zhou, Y.; Miao, T. Depth Density Achieves a Better Result for Semantic Segmentation with the Kinect System. Sensors 2020, 20, 812. [Google Scholar] [CrossRef]

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015, Munich, Germany, 5–9 October 2015. [Google Scholar]

- Li, K.; Wang, J.; Yao, J. Effectiveness of Machine Learning Methods for Water Segmentation with ROI as the Label: A Case Study of the Tuul River in Mongolia. Int. J. Appl. Earth Obs. Geoinf. 2021, 103, 102497. [Google Scholar] [CrossRef]

- Li, Z.; Wang, R.; Zhang, W.; Hu, F.; Meng, L. Multiscale Features Supported DeepLabV3+ Optimization Scheme for Accurate Water Semantic Segmentation. IEEE Access 2019, 7, 155787–155804. [Google Scholar] [CrossRef]

- Kang, J.; Guan, H.; Peng, D.; Chen, Z. Multi-Scale Context Extractor Network for Water-Body Extraction from High-Resolution Optical Remotely Sensed Images. Int. J. Appl. Earth Obs. Geoinf. 2021, 103, 102499. [Google Scholar] [CrossRef]

- Lu, M.; Fang, L.; Li, M.; Zhang, B.; Zhang, Y.; Ghamisi, P. NFANet: A Novel Method for Weakly Supervised Water Extraction from High-Resolution Remote-Sensing Imagery. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5617114. [Google Scholar] [CrossRef]

- Ren, Y.; Li, X.; Yang, X.; Xu, H. Development of a Dual-Attention U-Net Model for Sea Ice and Open Water Classification on SAR Images. IEEE Geosci. Remote Sens. Lett. 2022, 19, 4010205. [Google Scholar] [CrossRef]

- Wang, S.; Peppa, M.V.; Xiao, W.; Maharjan, S.B.; Joshi, S.P.; Mills, J.P. A Second-Order Attention Network for Glacial Lake Segmentation from Remotely Sensed Imagery. ISPRS J. Photogramm. Remote Sens. 2022, 189, 289–301. [Google Scholar] [CrossRef]

- Tambe, R.G.; Talbar, S.N.; Chavan, S.S. Deep Multi-Feature Learning Architecture for Water Body Segmentation from Satellite Images. J. Vis. Commun. Image Represent. 2021, 77, 103141. [Google Scholar] [CrossRef]

- Poliyapram, V.; Imamoglu, N.; Nakamura, R. Deep Learning Model for Water/Ice/Land Classification Using Large-Scale Medium Resolution Satellite Images. In Proceedings of the IGARSS 2019—2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; IEEE: New York, NY, USA; pp. 3884–3887. [Google Scholar]

- Li, M.; Wu, P.; Wang, B.; Park, H.; Hui, Y.; Yanlan, W. A Deep Learning Method of Water Body Extraction from High Resolution Remote Sensing Images with Multisensors. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 3120–3132. [Google Scholar] [CrossRef]

- Ge, C.; Xie, W.; Meng, L. Extracting Lakes and Reservoirs from GF-1 Satellite Imagery over China Using Improved U-Net. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1504105. [Google Scholar] [CrossRef]

- Aghdami-Nia, M.; Shah-Hosseini, R.; Rostami, A.; Homayouni, S. Automatic Coastline Extraction through Enhanced Sea-Land Segmentation by Modifying Standard U-Net. Int. J. Appl. Earth Obs. Geoinf. 2022, 109, 102785. [Google Scholar] [CrossRef]

- Zhou, P.; Li, X.; Foody, G.M.; Boyd, D.S.; Wang, X.; Ling, F.; Zhang, Y.; Wang, Y.; Du, Y. Deep Feature and Domain Knowledge Fusion Network for Mapping Surface Water Bodies by Fusing Google Earth RGB and Sentinel-2 Images. IEEE Geosci. Remote Sensing Lett. 2023, 20, 6001805. [Google Scholar] [CrossRef]

- Yan, S.; Xu, L.; Yu, G.; Yang, L.; Yun, W.; Zhu, D.; Ye, S.; Yao, X. Glacier Classification from Sentinel-2 Imagery Using Spatial-Spectral Attention Convolutional Model. Int. J. Appl. Earth Obs. Geoinf. 2021, 102, 102445. [Google Scholar] [CrossRef]

- He, Y.; Yao, S.; Yang, W.; Yan, H.; Zhang, L.; Wen, Z.; Zhang, Y.; Liu, T. An Extraction Method for Glacial Lakes Based on Landsat-8 Imagery Using an Improved U-Net Network. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 6544–6558. [Google Scholar] [CrossRef]

- Parajuli, J.; Fernandez-Beltran, R.; Kang, J.; Pla, F. Attentional Dense Convolutional Neural Network for Water Body Extraction from Sentinel-2 Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 6804–6816. [Google Scholar] [CrossRef]

- Zhang, X.; Li, J.; Hua, Z. MRSE-Net: Multiscale Residuals and SE-Attention Network for Water Body Segmentation from Satellite Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 5049–5064. [Google Scholar] [CrossRef]

- Zhang, Z.; Li, Y.; Liu, Q.; Liu, X. High Resolution Remote Sensing Water Image Segmentation Based on Dual Branch Network. In Proceedings of the 2022 IEEE Intl Conf on Dependable, Autonomic and Secure Computing, Intl Conf on Pervasive Intelligence and Computing, Intl Conf on Cloud and Big Data Computing, Intl Conf on Cyber Science and Technology Congress (DASC/PiCom/CBDCom/CyberSciTech), Falerna, Italy, 12–15 September 2022; IEEE: New York, NY, USA; pp. 1–6. [Google Scholar]

- Yan, Q.; Chen, Y.; Jin, S.; Liu, S.; Jia, Y.; Zhen, Y.; Chen, T.; Huang, W. Inland Water Mapping Based on GA-LinkNet from CyGNSS Data. IEEE Geosci. Remote Sens. Lett. 2023, 20, 1500305. [Google Scholar] [CrossRef]

- Yang, X.; Qin, Q.; Grussenmeyer, P.; Koehl, M. Urban Surface Water Body Detection with Suppressed Built-up Noise Based on Water Indices from Sentinel-2 MSI Imagery. Remote Sens. Environ. 2018, 219, 259–270. [Google Scholar] [CrossRef]

- Luo, X.; Tong, X.; Hu, Z. An Applicable and Automatic Method for Earth Surface Water Mapping Based on Multispectral Images. Int. J. Appl. Earth Obs. Geoinf. 2021, 103, 102472. [Google Scholar] [CrossRef]

- Kaushik, S.; Singh, T.; Joshi, P.K.; Dietz, A.J. Automated Mapping of Glacial Lakes Using Multisource Remote Sensing Data and Deep Convolutional Neural Network. Int. J. Appl. Earth Obs. Geoinf. 2022, 115, 103085. [Google Scholar] [CrossRef]

- Yao, T.; Bolch, T.; Chen, D.; Gao, J.; Immerzeel, W.; Piao, S.; Su, F.; Thompson, L.; Wada, Y.; Wang, L. The imbalance of the Asian water tower. Nat. Rev. Earth Environ. 2022, 3, 618–632. [Google Scholar] [CrossRef]

- Gupta, D.; Kushwaha, V.; Gupta, A.; Singh, P.K. Deep Learning Based Detection of Water Bodies Using Satellite Images. In Proceedings of the 2021 International Conference on Intelligent Technologies (CONIT), Hubli, India, 25–27 June 2021; IEEE: New York, NY, USA; pp. 1–4. [Google Scholar]

- Zhang, G.Q.; Luo, W.; Chen, W.F.; Zheng, G. A robust but variable lake expansion on the tibetan plateau. Sci. Bull. 2019, 64, 1306–1309. [Google Scholar] [CrossRef] [PubMed]

- Zhang, G.Q.; Yao, T.; Xie, H.J.; Zhang, K.X.; Zhu, F.J. Lakes’ state and abundance across the Tibetan Plateau. Chin. Sci. Bull. 2014, 59, 3010–3021. [Google Scholar] [CrossRef]

- Akiyama, T.S.; Junior, J.M.; Goncalves, W.N.; De Araujo Carvalho, M.; Eltner, A. Evaluating Different Deep Learning Models for Automatic Water Segmentation. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; IEEE: New York, NY, USA; pp. 4716–4719. [Google Scholar]

- Xiong, S. Research Achievements of the Qinghai-Tibet Plateau Based on 60 Years of Aeromagnetic Surveys. China Geol. 2021, 4, 147–177.

- Li, F.-F.; Lu, H.-L.; Wang, G.-Q.; Yao, Z.-Y.; Li, Q.; Qiu, J. Zoning of Precipitation Regimes on the Qinghai-Tibet Plateau and Its Surrounding Areas Responded by the Vegetation Distribution. Sci. Total Environ. 2022, 838, 155844.

- Zhang, Y.; Zhang, Y.; Shi, K.; Zhou, Y.; Li, N. Remote Sensing Estimation of Water Clarity for Various Lakes in China. Water Res. 2021, 192, 116844.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image Is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. arXiv 2021, arXiv:2010.11929.

- Chao, L.; Zhang, K.; Li, Z.; Zhu, Y.; Wang, J.; Yu, Z. Geographically Weighted Regression Based Methods for Merging Satellite and Gauge Precipitation. J. Hydrol. 2018, 558, 275–289.

- Zhou, Y.; Chang, H.; Lu, X.; Lu, Y. DenseUNet: Improved Image Classification Method Using Standard Convolution and Dense Transposed Convolution. Knowl.-Based Syst. 2022, 254, 109658.

- Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Proceedings of the Computer Vision—ECCV 2018: 15th European Conference, Munich, Germany, 8–14 September 2018.

- Chen, L.-C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. In Proceedings of the Computer Vision—ECCV 2018, Munich, Germany, 8–14 September 2018.

- Chen, J.; Lu, Y.; Yu, Q.; Luo, X.; Adeli, E.; Wang, Y.; Lu, L.; Yuille, A.L.; Zhou, Y. TransUNet: Transformers Make Strong Encoders for Medical Image Segmentation. arXiv 2021, arXiv:2102.04306.

- Cao, H.; Wang, Y.; Chen, J.; Jiang, D.; Zhang, X.; Tian, Q.; Wang, M. Swin-Unet: Unet-like Pure Transformer for Medical Image Segmentation. In Proceedings of the Computer Vision—ECCV 2022 Workshops, Tel Aviv, Israel, 23–27 October 2022.

- Yang, M.; Yuan, Y.; Liu, G. SDUNet: Road Extraction via Spatial Enhanced and Densely Connected UNet. Pattern Recognit. 2022, 126, 108549.

- Abdollahi, A.; Pradhan, B. Integrating Semantic Edges and Segmentation Information for Building Extraction from Aerial Images Using UNet. Mach. Learn. Appl. 2021, 6, 100194.

- He, Y.; Zhang, X.; Zhang, Z.; Fang, H. Automated Detection of Boundary Line in Paddy Field Using MobileV2-UNet and RANSAC. Comput. Electron. Agric. 2022, 194, 106697.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. In Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.

- Wang, Z.; Gao, X.; Zhang, Y.; Zhao, G. MSLWENet: A Novel Deep Learning Network for Lake Water Body Extraction of Google Remote Sensing Images. Remote Sens. 2020, 12, 4140.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).