Deep Learning-Based Detection of Urban Forest Cover Change along with Overall Urban Changes Using Very-High-Resolution Satellite Images

Abstract

1. Introduction

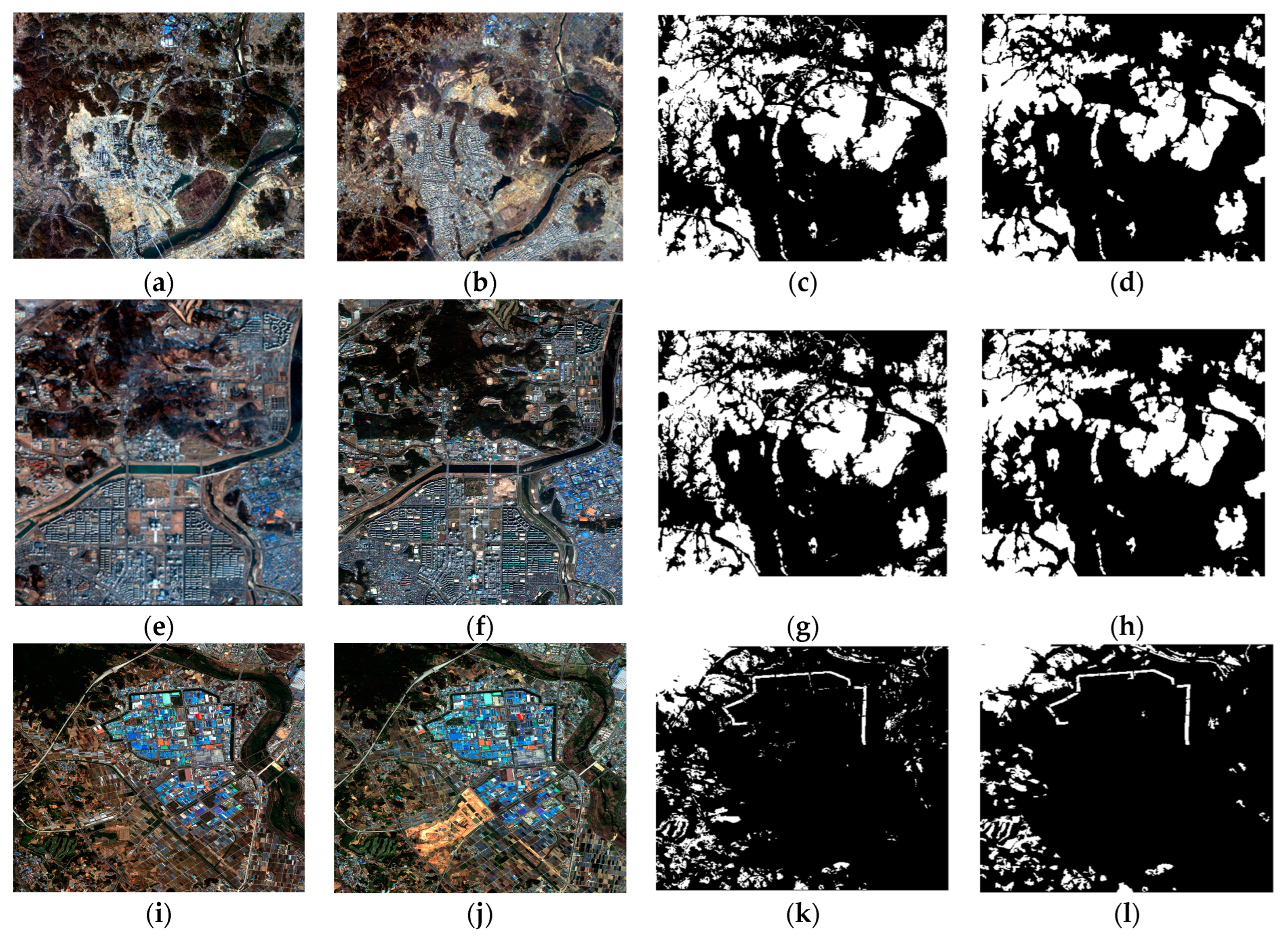

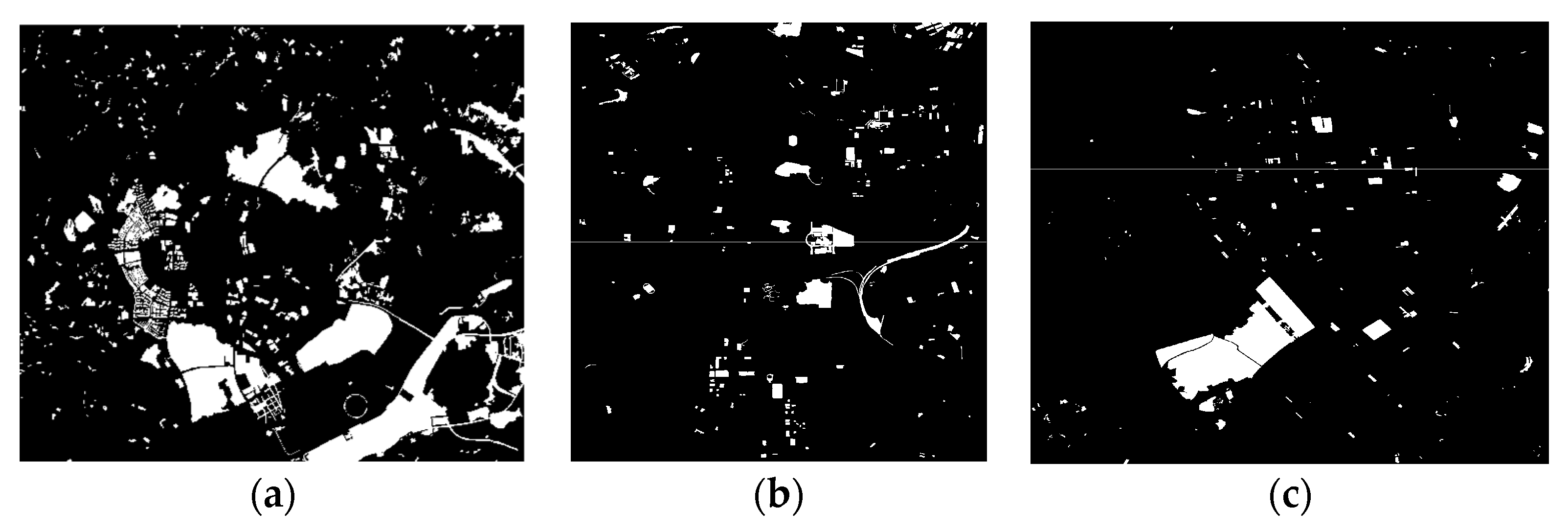

2. Datasets

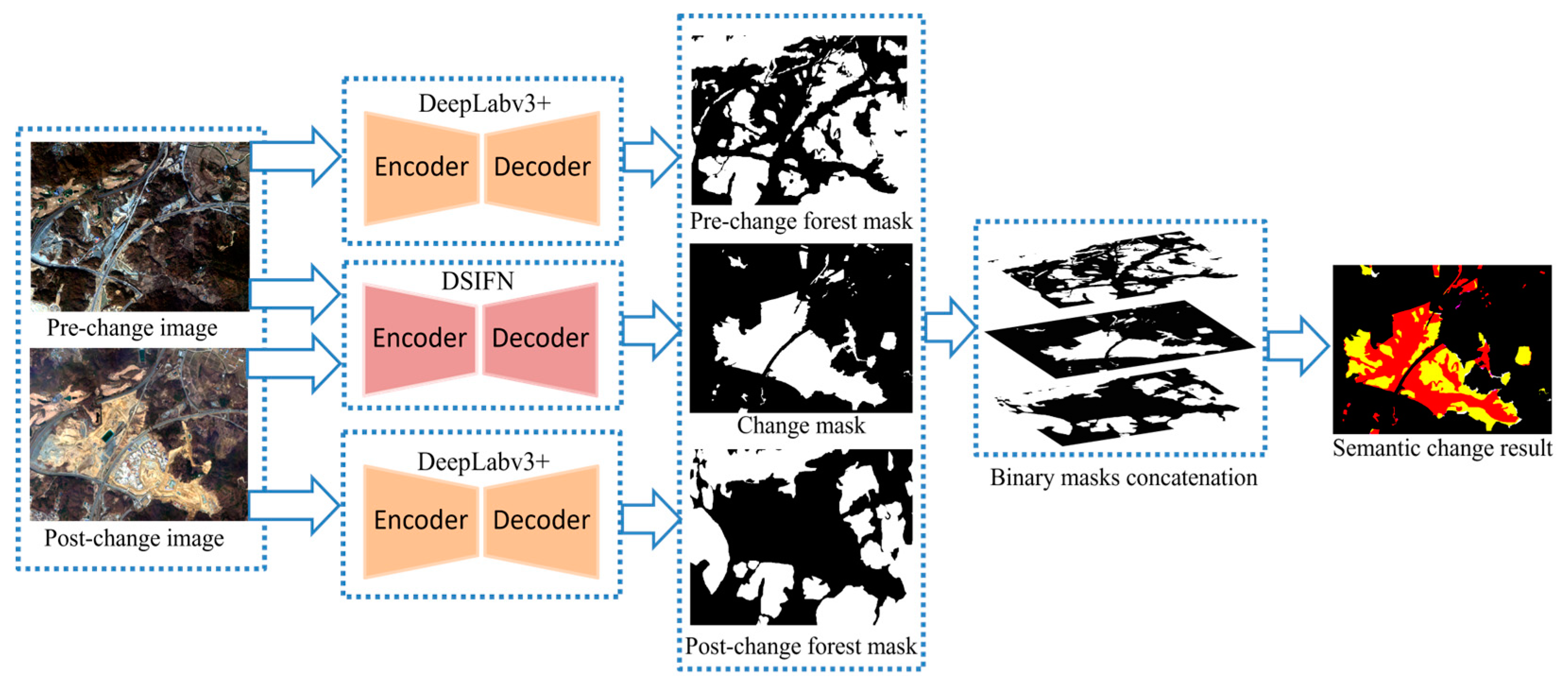

3. Methodology

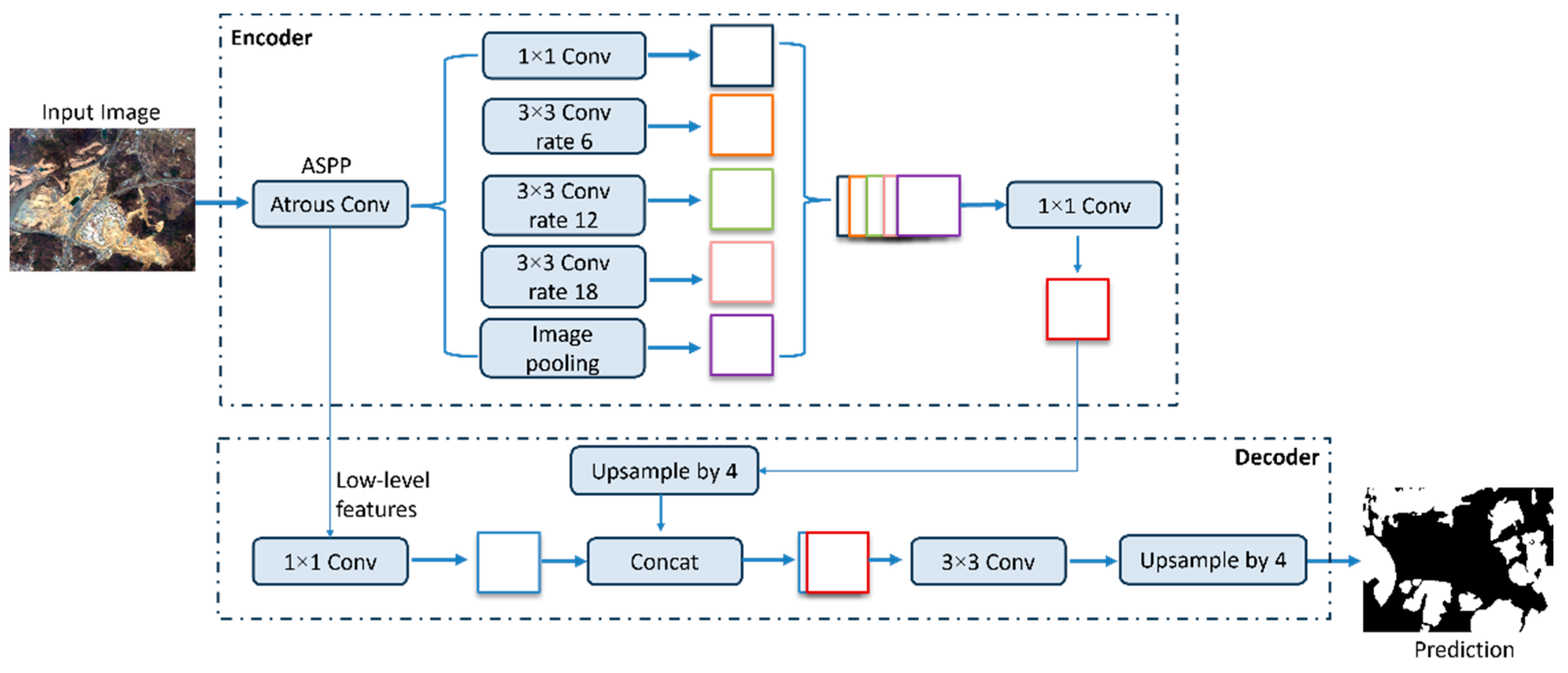

3.1. Binary Forest Mask Generation

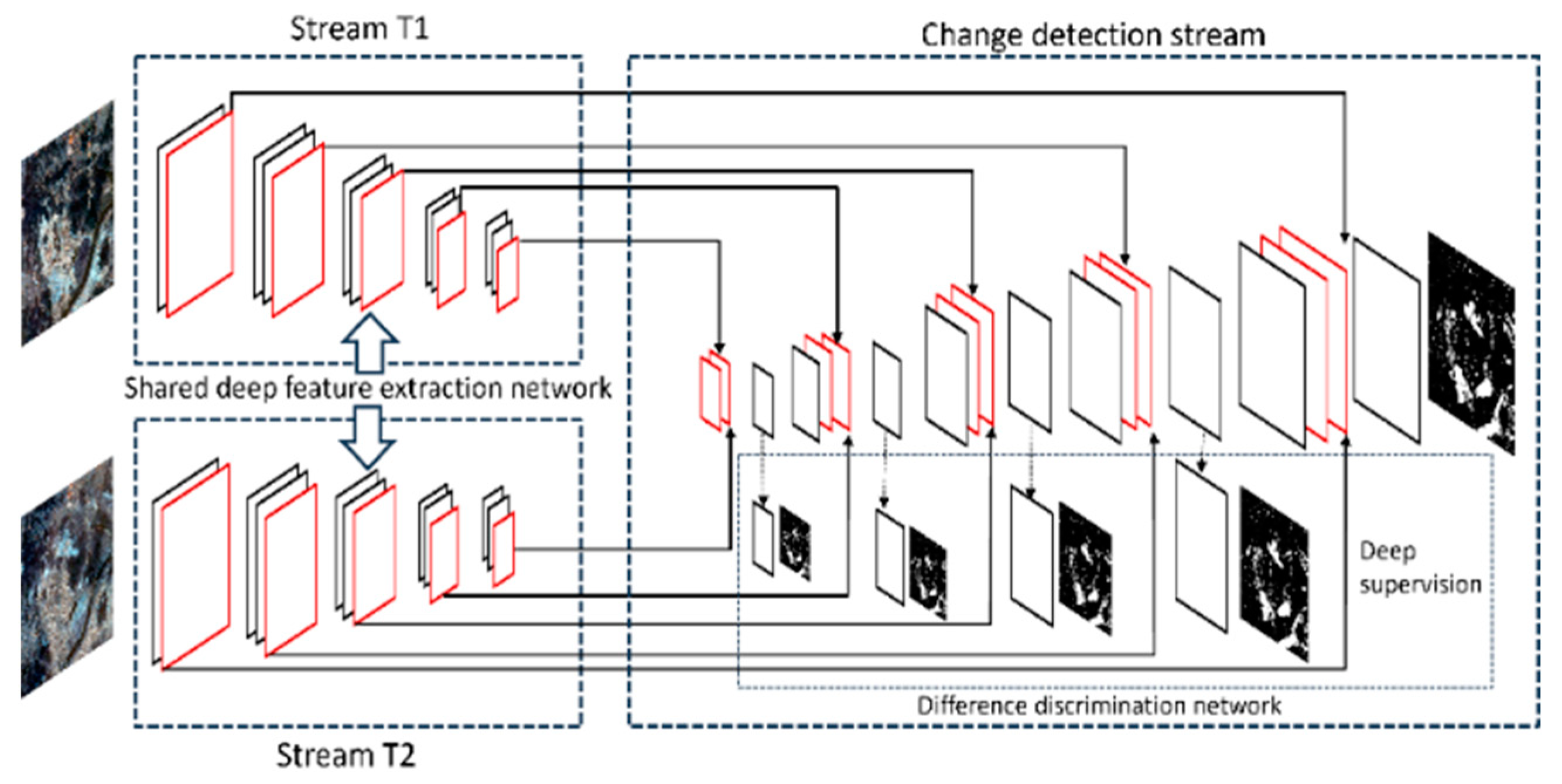

3.2. Binary Change Mask Generation

3.3. Forest Change Monitoring
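
The outline above pairs binary forest masks for each date (Section 3.1) with a binary change mask (Section 3.2). The sketch below shows one simple way to combine them. It assumes a per-pixel logical rule that this section does not specify, so it should not be read as the authors' procedure. The function name and the label codes (0 = no forest change, 1 = forest loss, 2 = forest gain) are hypothetical.

```python
import numpy as np


def forest_change_map(pre_forest: np.ndarray,
                      post_forest: np.ndarray,
                      change: np.ndarray) -> np.ndarray:
    """Label pixels as 0 (no forest change), 1 (forest loss) or 2 (forest gain).

    Hypothetical rule, not taken from the paper: a pixel flagged by the change
    mask counts as forest loss if it is forest before and not after, and as
    forest gain if it is not forest before but forest after. Changed pixels
    that are non-forest on both dates (other urban change) stay 0.
    """
    pre = pre_forest.astype(bool)
    post = post_forest.astype(bool)
    chg = change.astype(bool)
    if not (pre.shape == post.shape == chg.shape):
        raise ValueError("all masks must share the same shape")

    labels = np.zeros(pre.shape, dtype=np.uint8)
    labels[chg & pre & ~post] = 1  # forest loss
    labels[chg & ~pre & post] = 2  # forest gain
    return labels


# Usage on a 2 x 2 toy example (masks are hypothetical).
pre = np.array([[1, 1], [0, 0]])
post = np.array([[0, 1], [1, 0]])
change = np.array([[1, 0], [1, 1]])
print(forest_change_map(pre, post, change).tolist())
```

Gating the forest masks with the change mask keeps pixels whose forest label flips only because of misclassification on one date from being reported as change, at the cost of missing forest change that the change mask itself misses.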

3.4. Validation

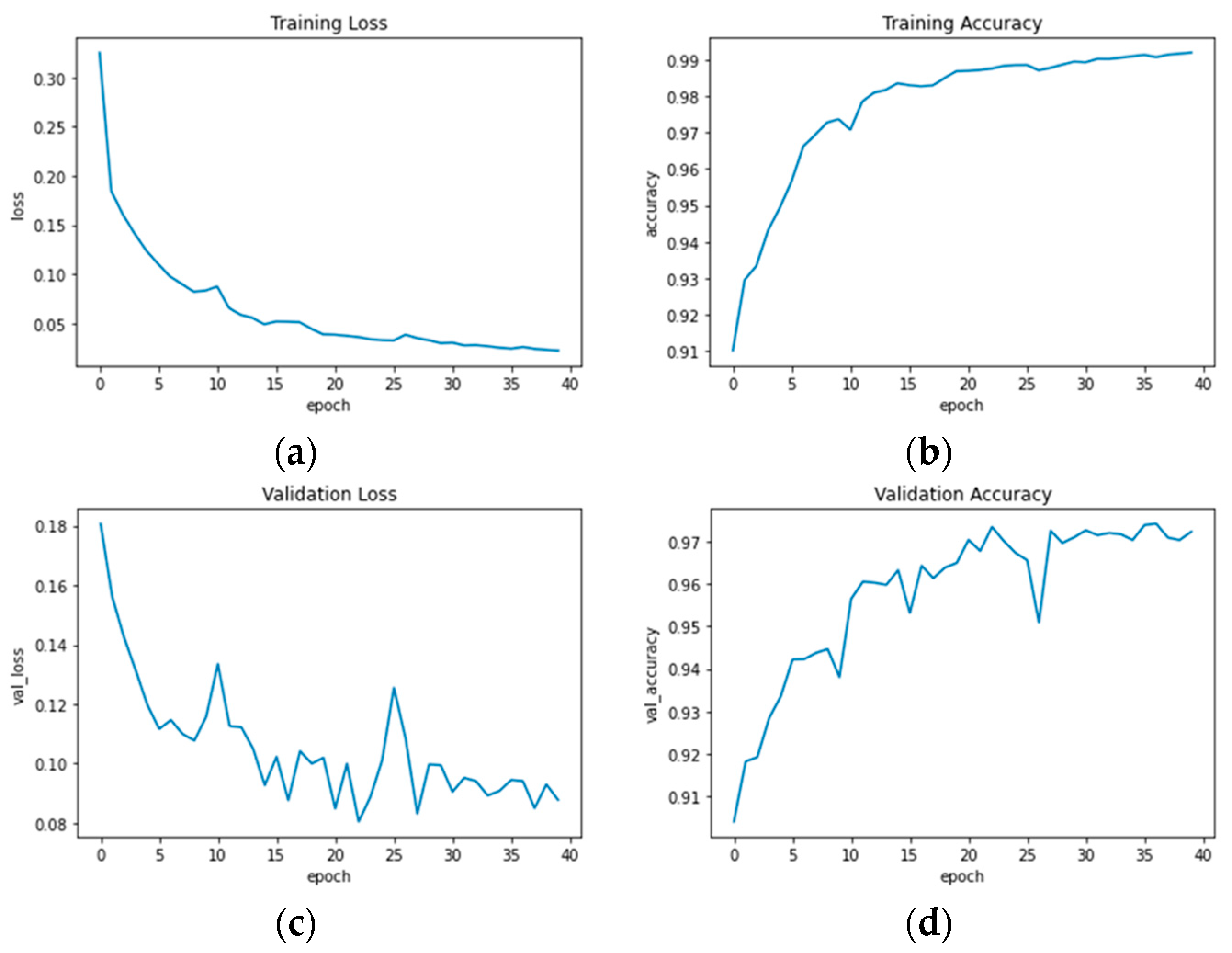

4. Experimental Results

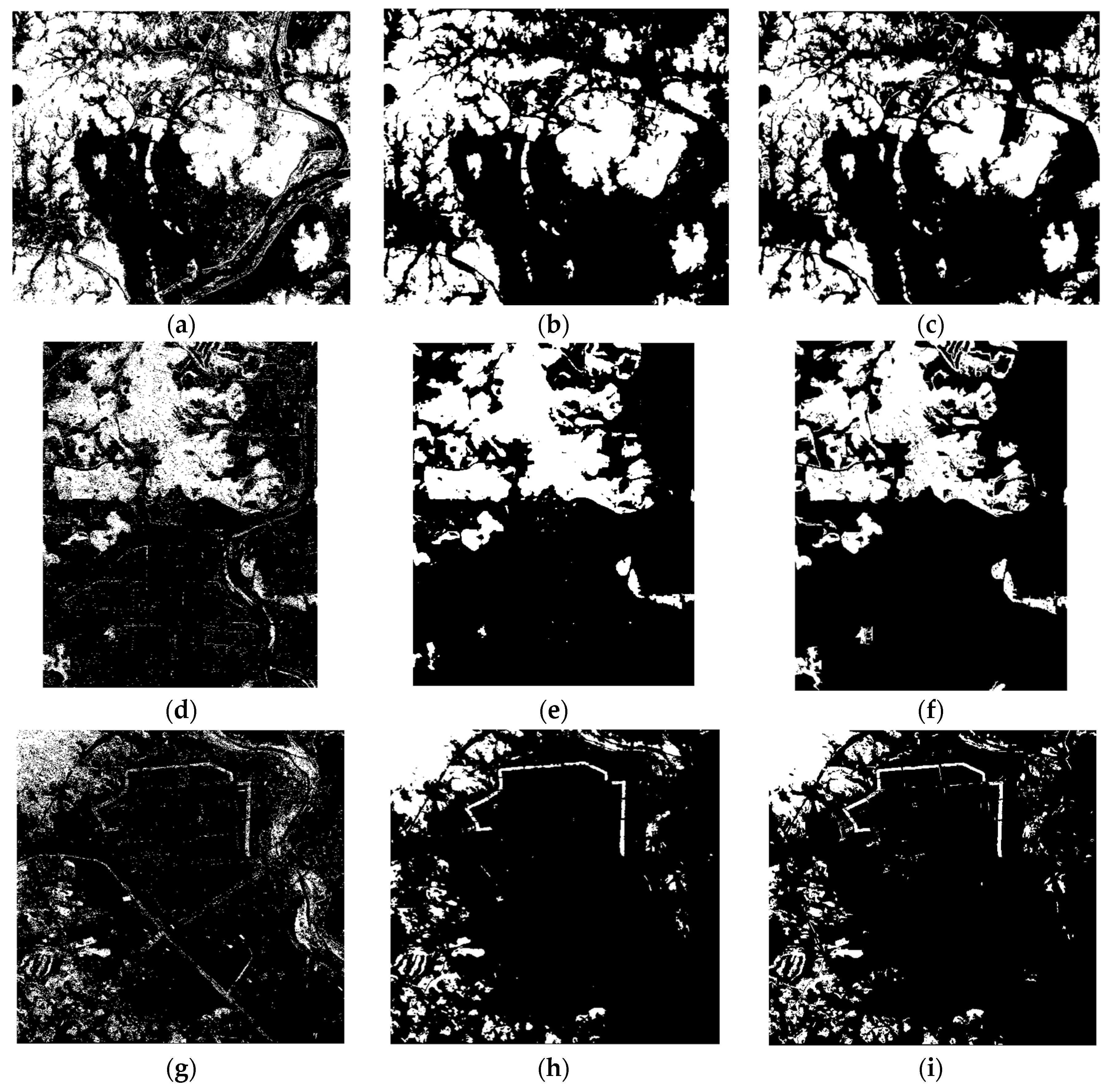

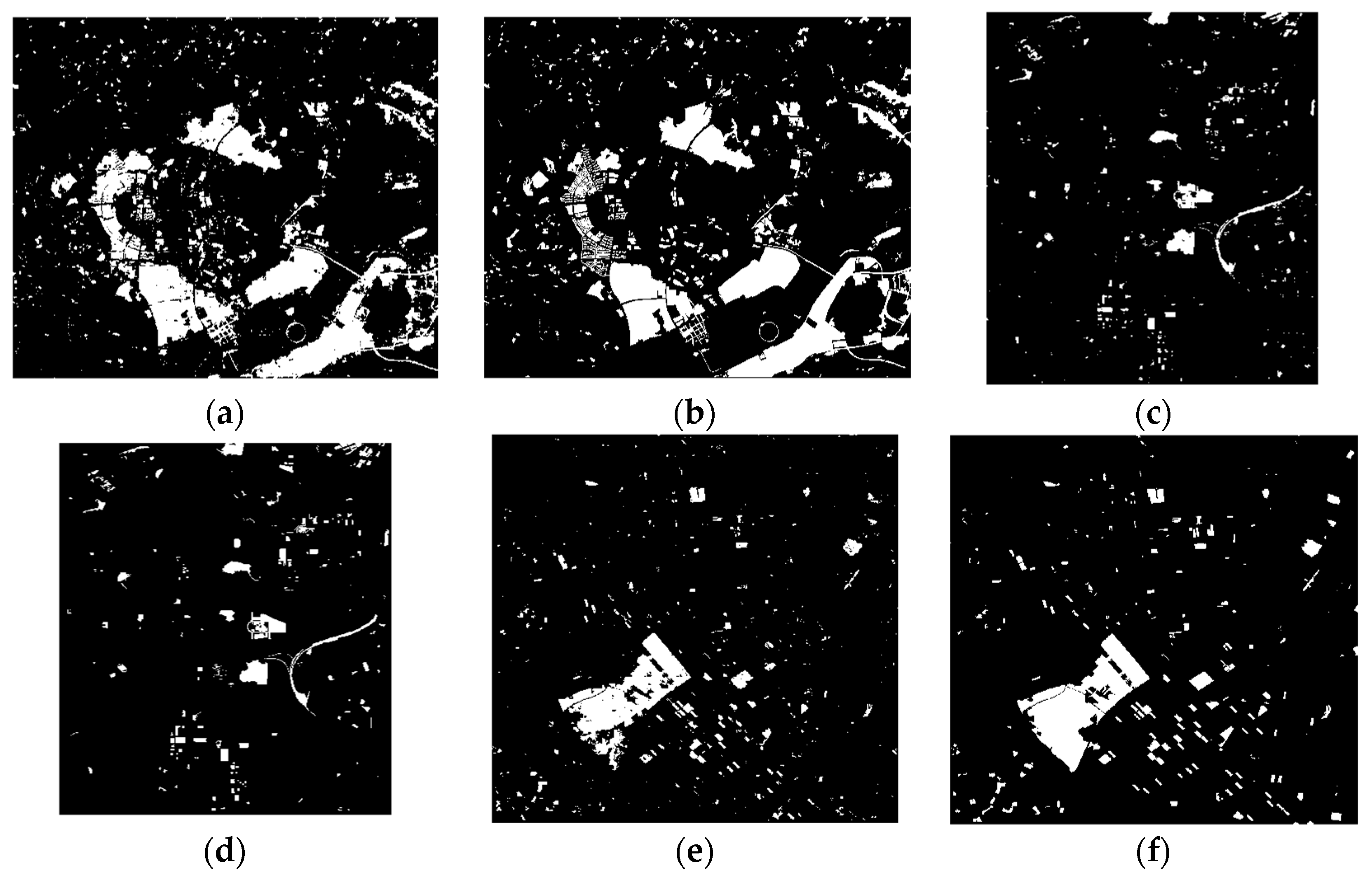

4.1. Binary Forest Masks

4.2. Change Detection

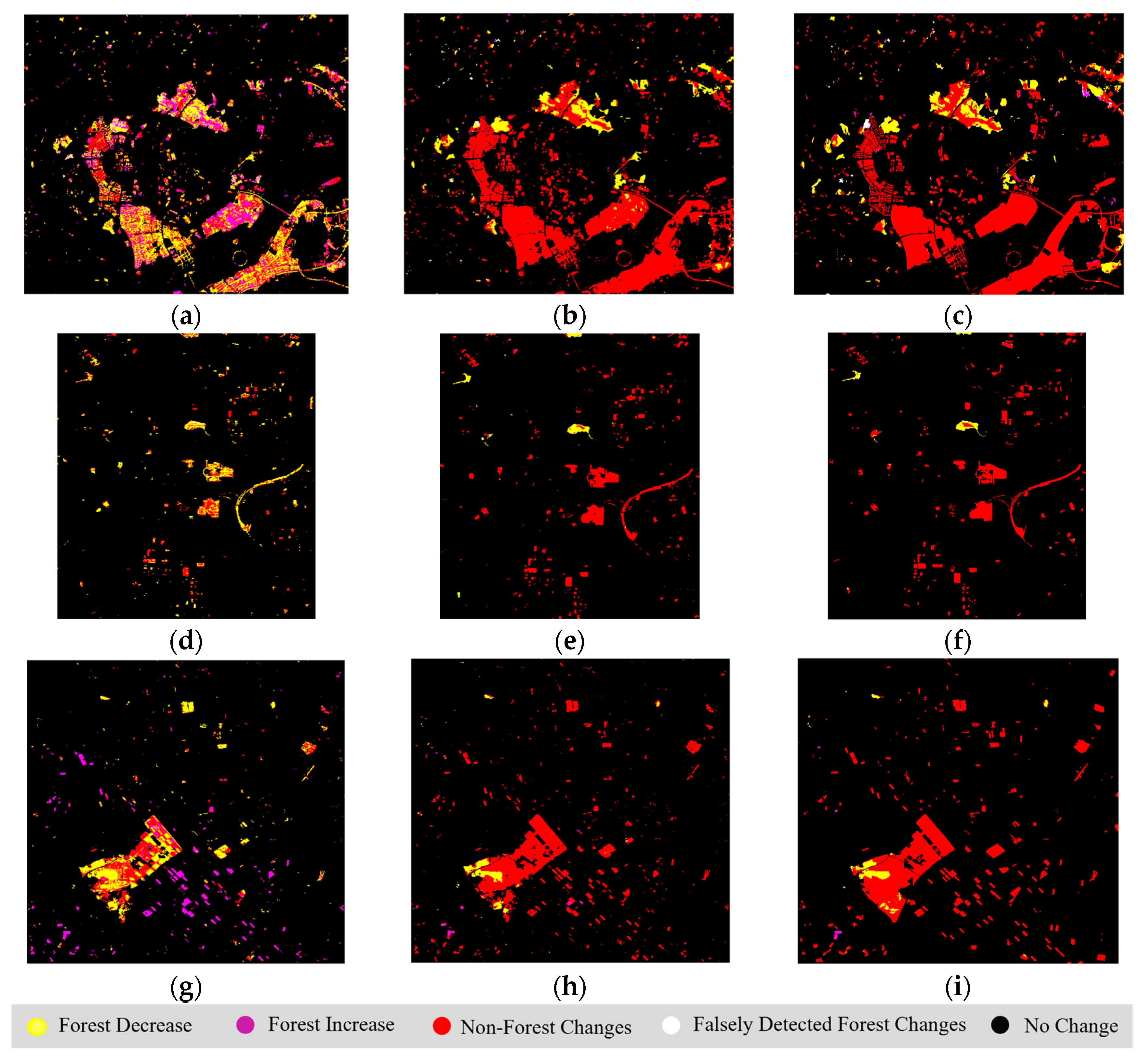

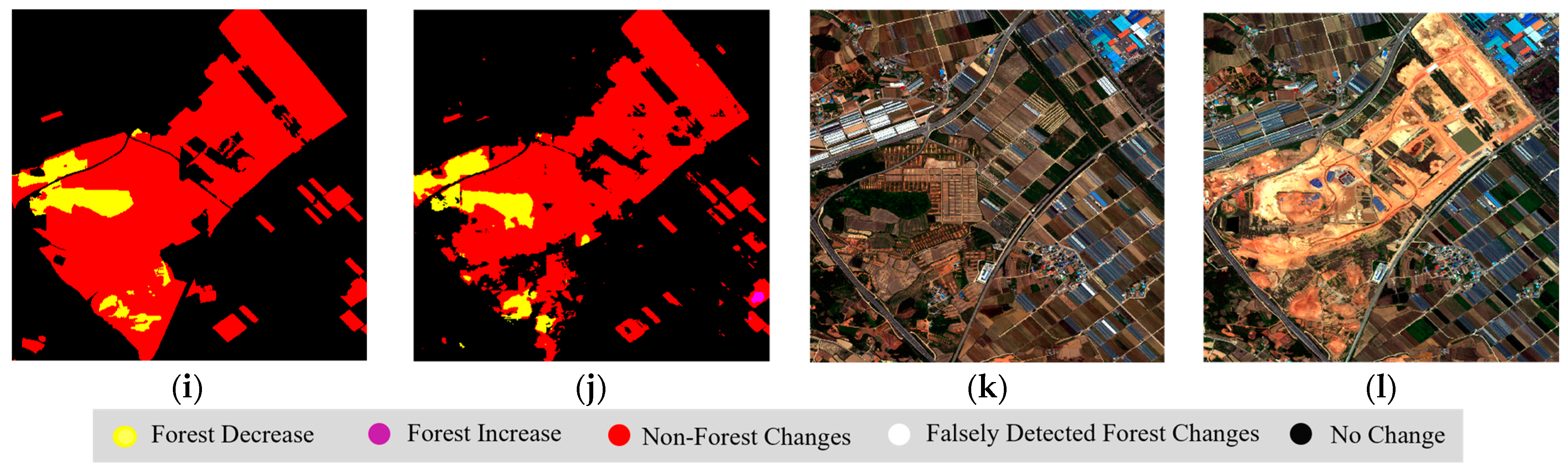

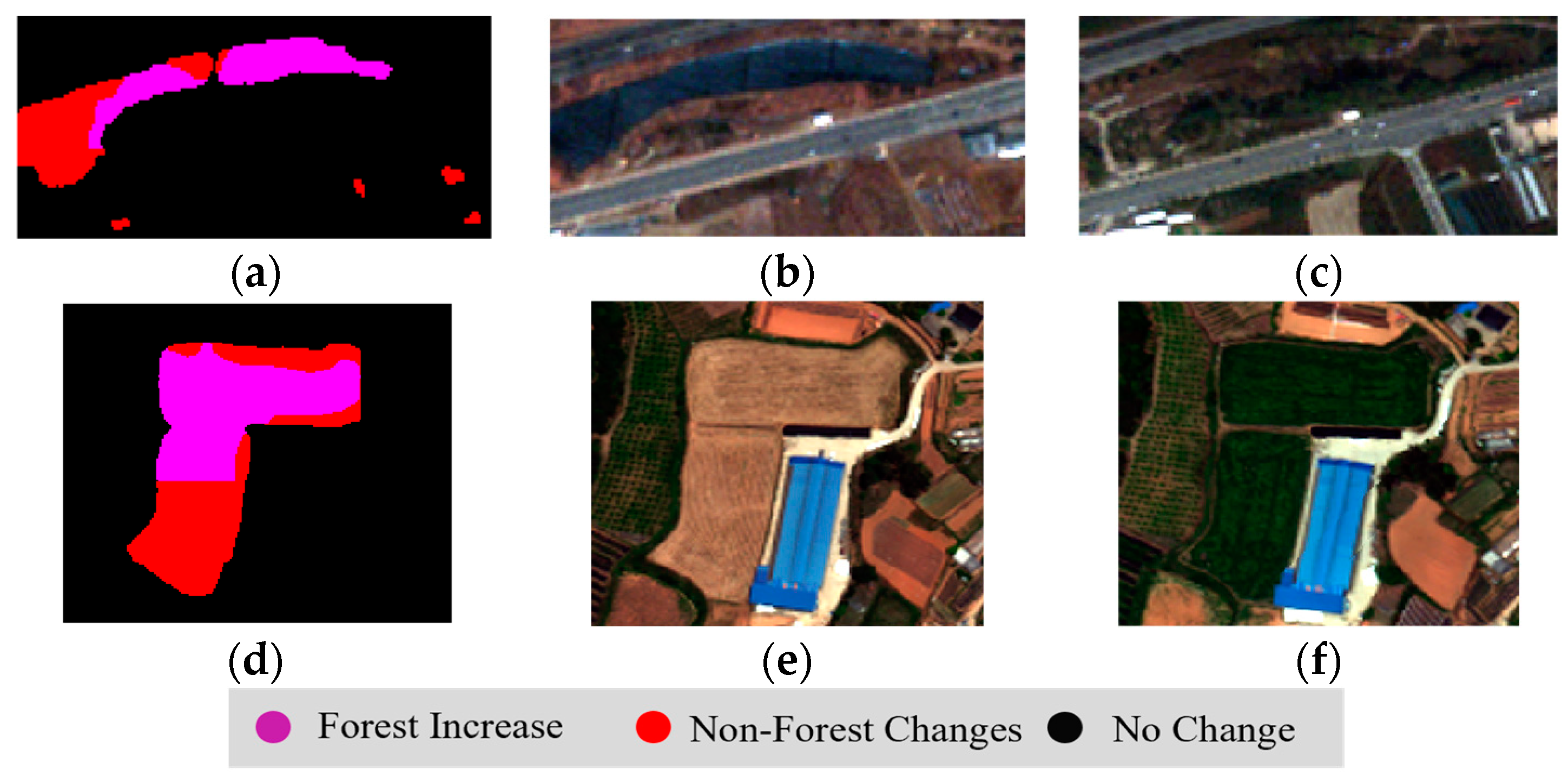

4.3. Finalizing Forest Cover Changes

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Sites | Site 1 (Sejong) | Site 2 (Daejeon) | Site 3 (Gwangju) |
|---|---|---|---|
| Sensor | Kompsat-3 | QuickBird-2 | WorldView-3 |
| Acquisition date | Pre-change: 16/11/2013; post-change: 26/02/2019 | Pre-change: 12/2002; post-change: 10/2006 | Pre-change: 05/2017; post-change: 05/2018 |
| Spatial resolution | 2.8 m | 2.44 m | 1.24 m |
| Bands | Blue, green, red, NIR | Blue, green, red, NIR | Blue, green, red, NIR |
| Size | 3879 × 3344 pixels | 2622 × 2938 pixels | 5030 × 4643 pixels |
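
All three sensors provide red and NIR bands, which is what the NDVI baseline in the accuracy comparison relies on. NDVI is defined as (NIR − red) / (NIR + red). The sketch below computes it and thresholds it into a binary vegetation mask. The threshold of 0.3 is an assumed placeholder, not a value reported for this study, and the function names are hypothetical.

```python
import numpy as np


def ndvi(red: np.ndarray, nir: np.ndarray) -> np.ndarray:
    """Normalized difference vegetation index, (NIR - red) / (NIR + red)."""
    red = red.astype(np.float64)
    nir = nir.astype(np.float64)
    denom = nir + red
    out = np.zeros_like(denom)
    # Leave pixels with NIR + red == 0 at 0.0 instead of dividing by zero.
    np.divide(nir - red, denom, out=out, where=denom != 0)
    return out


def ndvi_forest_mask(red: np.ndarray, nir: np.ndarray,
                     threshold: float = 0.3) -> np.ndarray:
    """Binary vegetation mask: True where NDVI exceeds an ASSUMED threshold."""
    return ndvi(red, nir) > threshold


# For a (bands, rows, cols) array ordered blue, green, red, NIR as in the
# table above, red is index 2 and NIR is index 3.
image = np.random.default_rng(0).integers(0, 1024, size=(4, 8, 8))
mask = ndvi_forest_mask(image[2], image[3])
print(mask.shape, mask.dtype)
```

Casting to float before subtracting matters for unsigned integer imagery, where NIR − red would otherwise wrap around instead of going negative.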

| Site | Technique | Images | F1-Score | Kappa | IoU | Accuracy | FAR | MR |
|---|---|---|---|---|---|---|---|---|
| 1 | Proposed method | Pre-change | 0.908 | 0.855 | 0.831 | 0.933 | 0.0679 | 0.0967 |
| | | Post-change | 0.874 | 0.813 | 0.777 | 0.917 | 0.062 | 0.147 |
| | U-Net | Pre-change | 0.887 | 0.831 | 0.797 | 0.924 | 0.089 | 0.099 |
| | | Post-change | 0.849 | 0.778 | 0.738 | 0.903 | 0.082 | 0.123 |
| | SegNet | Pre-change | 0.902 | 0.843 | 0.822 | 0.925 | 0.120 | 0.098 |
| | | Post-change | 0.774 | 0.686 | 0.631 | 0.871 | 0.092 | 0.186 |
| | NDVI | Pre-change | 0.789 | 0.665 | 0.753 | 0.840 | 0.198 | 0.079 |
| | | Post-change | 0.825 | 0.724 | 0.694 | 0.870 | 0.160 | 0.064 |
| 2 | Proposed method | Pre-change | 0.915 | 0.887 | 0.844 | 0.958 | 0.032 | 0.68 |
| | | Post-change | 0.902 | 0.872 | 0.821 | 0.952 | 0.037 | 0.080 |
| | U-Net | Pre-change | 0.903 | 0.870 | 0.823 | 0.950 | 0.012 | 0.398 |
| | | Post-change | 0.894 | 0.857 | 0.809 | 0.944 | 0.032 | 0.203 |
| | SegNet | Pre-change | 0.889 | 0.846 | 0.801 | 0.937 | 0.102 | 0.063 |
| | | Post-change | 0.868 | 0.814 | 0.767 | 0.922 | 0.145 | 0.035 |
| | NDVI | Pre-change | 0.733 | 0.669 | 0.617 | 0.892 | 0.012 | 0.398 |
| | | Post-change | 0.841 | 0.792 | 0.620 | 0.925 | 0.032 | 0.203 |
| 3 | Proposed method | Pre-change | 0.853 | 0.834 | 0.744 | 0.965 | 0.035 | 0.093 |
| | | Post-change | 0.836 | 0.816 | 0.719 | 0.963 | 0.026 | 0.102 |
| | U-Net | Pre-change | 0.827 | 0.801 | 0.705 | 0.954 | 0.047 | 0.099 |
| | | Post-change | 0.820 | 0.792 | 0.695 | 0.950 | 0.059 | 0.102 |
| | SegNet | Pre-change | 0.823 | 0.799 | 0.699 | 0.958 | 0.124 | 0.098 |
| | | Post-change | 0.774 | 0.746 | 0.618 | 0.951 | 0.102 | 0.120 |
| | NDVI | Pre-change | 0.643 | 0.601 | 0.551 | 0.924 | 0.037 | 0.429 |
| | | Post-change | 0.525 | 0.475 | 0.473 | 0.905 | 0.039 | 0.546 |
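
The accuracy table reports six measures for each binary forest mask. The sketch below computes them from a pixel-wise confusion matrix against a reference mask. F1-score, IoU, overall accuracy and Cohen's kappa follow their standard definitions. The exact FAR and MR formulas are not given in this section, so the code assumes the common conventions FAR = FP / (FP + TN) (false alarm rate) and MR = FN / (TP + FN) (missed rate). Function names are hypothetical.

```python
def confusion_counts(pred, ref):
    """Count TP, FP, FN, TN for flat sequences of 0/1 labels (1 = forest)."""
    if len(pred) != len(ref):
        raise ValueError("prediction and reference must have the same length")
    tp = fp = fn = tn = 0
    for p, r in zip(pred, ref):
        if p and r:
            tp += 1
        elif p:
            fp += 1
        elif r:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def binary_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """F1, kappa, IoU, accuracy, FAR and MR for one binary map."""

    def ratio(num: float, den: float) -> float:
        # Return 0.0 instead of raising when a denominator is empty.
        return num / den if den else 0.0

    total = tp + fp + fn + tn
    accuracy = ratio(tp + tn, total)
    # Agreement expected by chance, from the predicted and reference marginals.
    p_e = ratio((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn), total * total)
    return {
        "f1": ratio(2 * tp, 2 * tp + fp + fn),
        "kappa": ratio(accuracy - p_e, 1 - p_e),
        "iou": ratio(tp, tp + fp + fn),
        "accuracy": accuracy,
        "far": ratio(fp, fp + tn),  # ASSUMED definition: false alarm rate
        "mr": ratio(fn, tp + fn),   # ASSUMED definition: missed rate (1 - recall)
    }


# Usage with hypothetical pixel counts.
print(binary_metrics(tp=80, fp=10, fn=20, tn=890))
```

Accuracy is dominated by the majority class when forest covers only a small share of a scene, which is why kappa, F1 and IoU, which discount agreement on the large non-forest class, are reported alongside it.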

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Javed, A.; Kim, T.; Lee, C.; Oh, J.; Han, Y. Deep Learning-Based Detection of Urban Forest Cover Change along with Overall Urban Changes Using Very-High-Resolution Satellite Images. Remote Sens. 2023, 15, 4285. https://doi.org/10.3390/rs15174285