High-Resolution Network with Transformer Embedding Parallel Detection for Small Object Detection in Optical Remote Sensing Images

Abstract

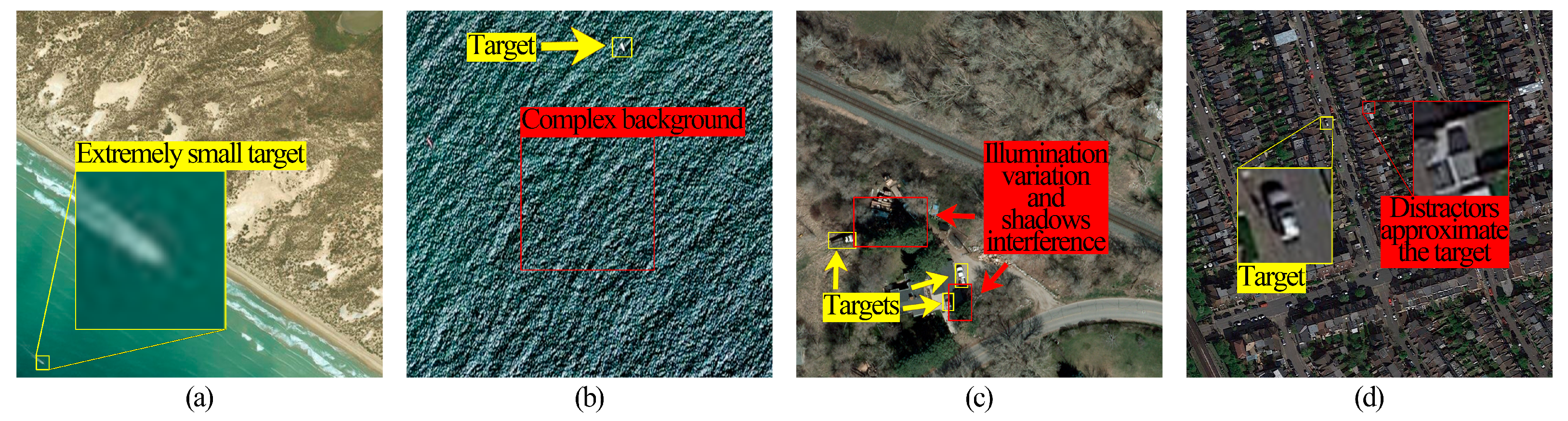

:1. Introduction

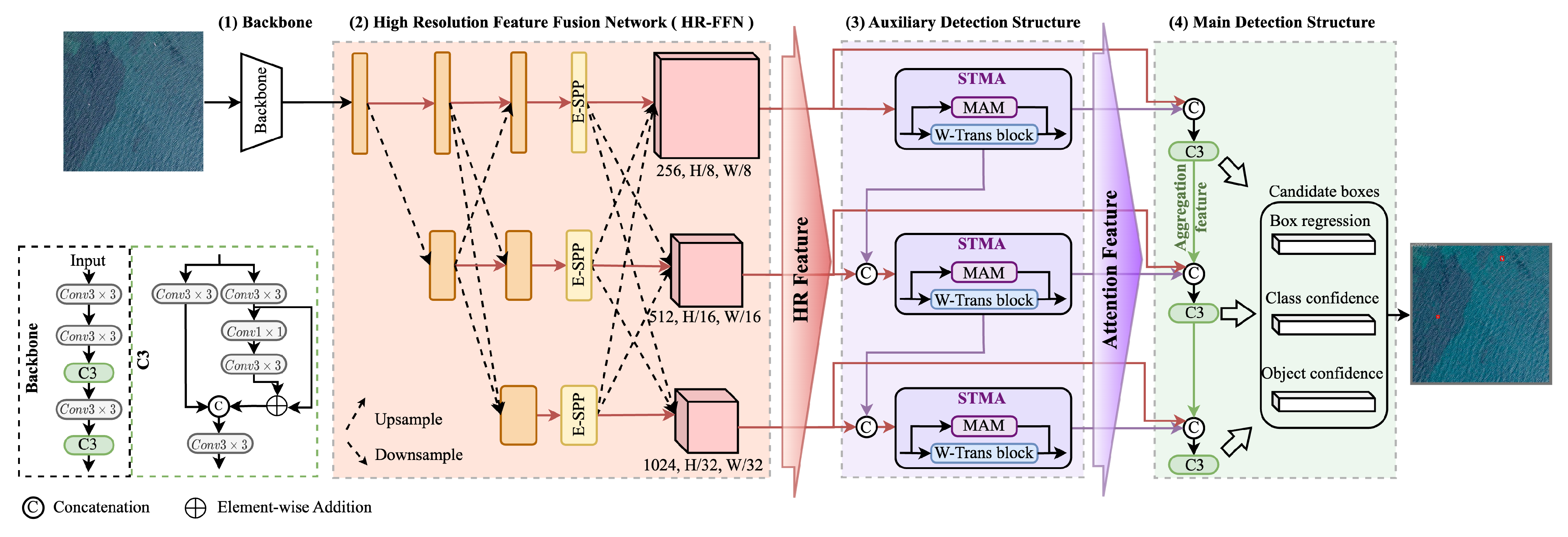

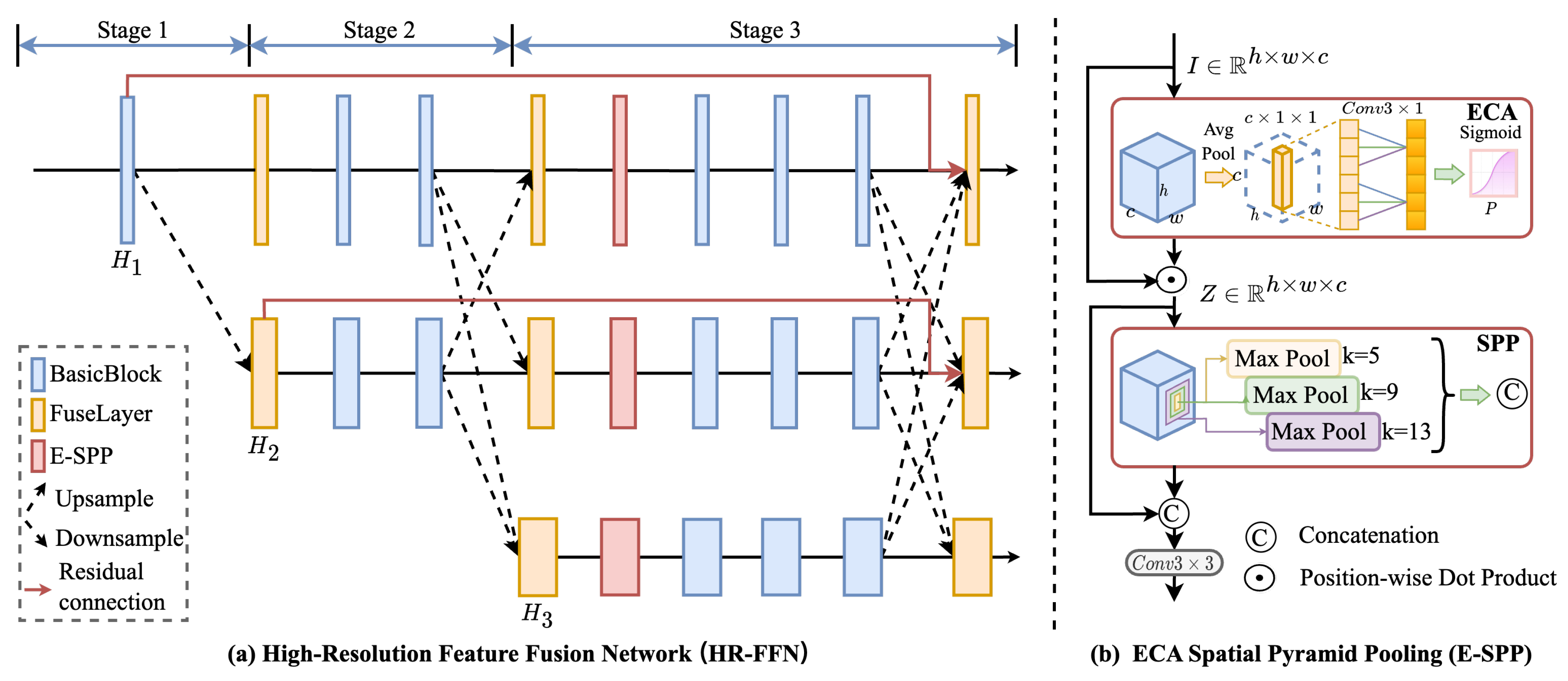

- A new multi-scale feature extraction network—HR-FFN—is proposed to retain more semantic information about small objects while achieving an accurate location, and we utilize the shallow and deep features simultaneously to alleviate the oversight of small-scale features during convolution operations.

- To address the semantic ambiguity caused by background confusion, a mixed attention mechanism named STMA is proposed to enhance the distinguishable features by modeling global information to enhance the object saliency and establishing a pixel-level correlation to suppress the complex background.

- A detection approach for small objects in remote sensing scenes, called HRTP-Net, is presented in this paper. Extensive ablation experiments show both the effectiveness and advantages of the HRTP-Net. The results on several representative datasets demonstrate that our approach brings significant improvement in small object detection.

2. Related Work

2.1. Multi-Resolution Feature Learning

2.2. Attention Mechanism in Deep Learning Network

2.3. Remote Sensing Images Object Detection

3. Proposed Method

3.1. Overall Structure

3.2. High-Resolution Feature Fusion Network (HR-FFN)

3.3. Window-Based Transformer (W-Trans)

3.4. Swin-Transformer-Based Mixed Attention Module (STMA)

4. Experiments

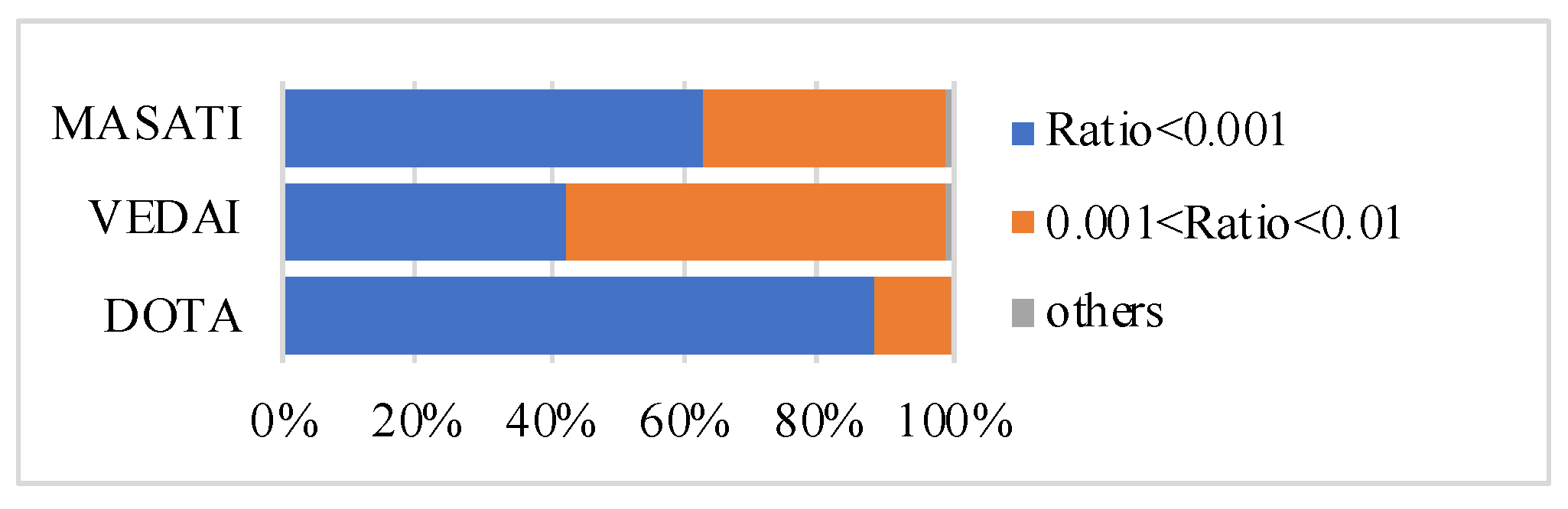

4.1. Datasets

4.1.1. MASATI Dataset

4.1.2. VEDAI Dataset

4.1.3. DOTA Dataset

4.2. Implementation Details

4.2.1. Evaluation Metrics

4.2.2. Training Settings

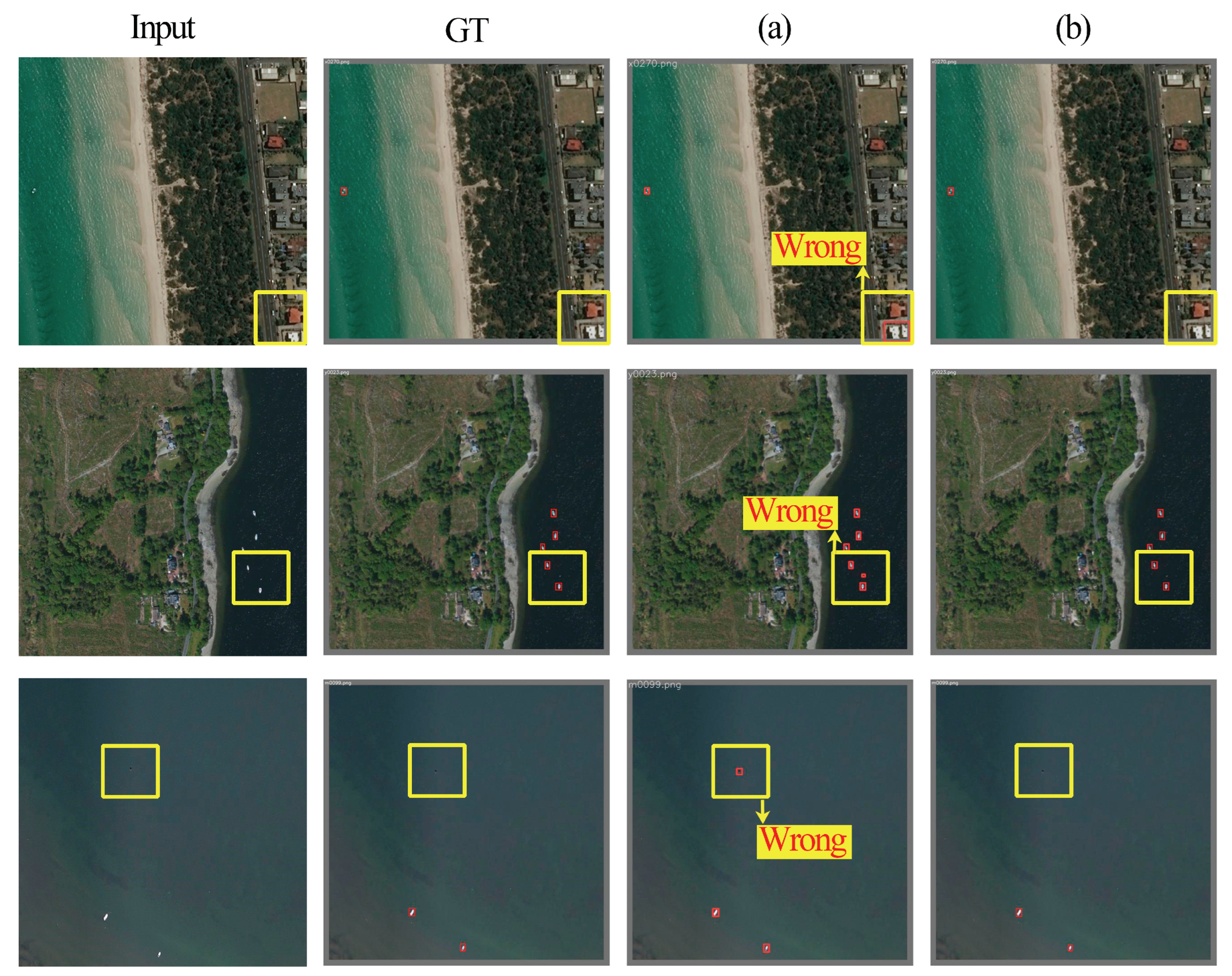

4.3. Ablation Experiments

4.3.1. Effect of High-Resolution Feature Fusion Network

4.3.2. Effect of Swin-Transformer-Based Mixed Attention Module

4.3.3. Effect of Auxiliary Detection Structure

4.4. Comparison with Other Methods

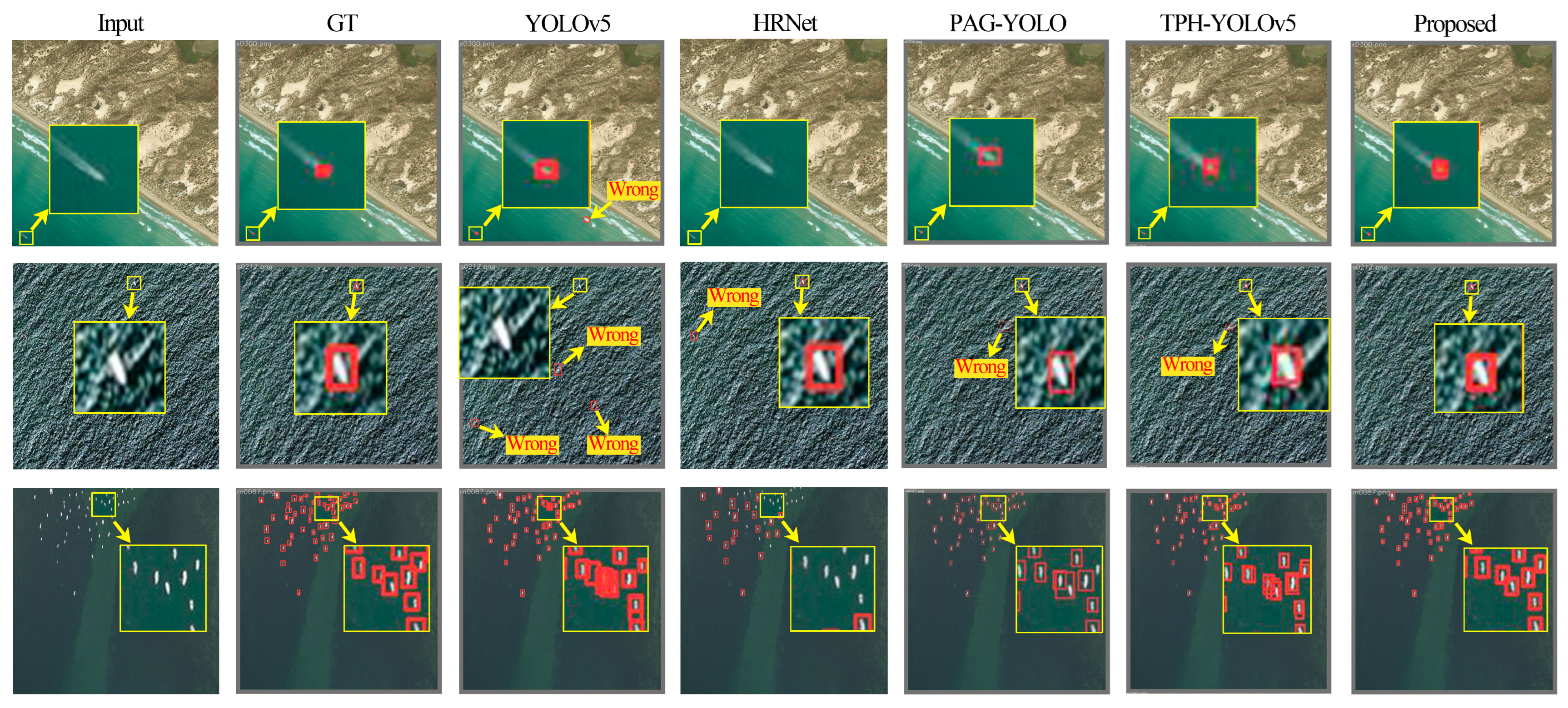

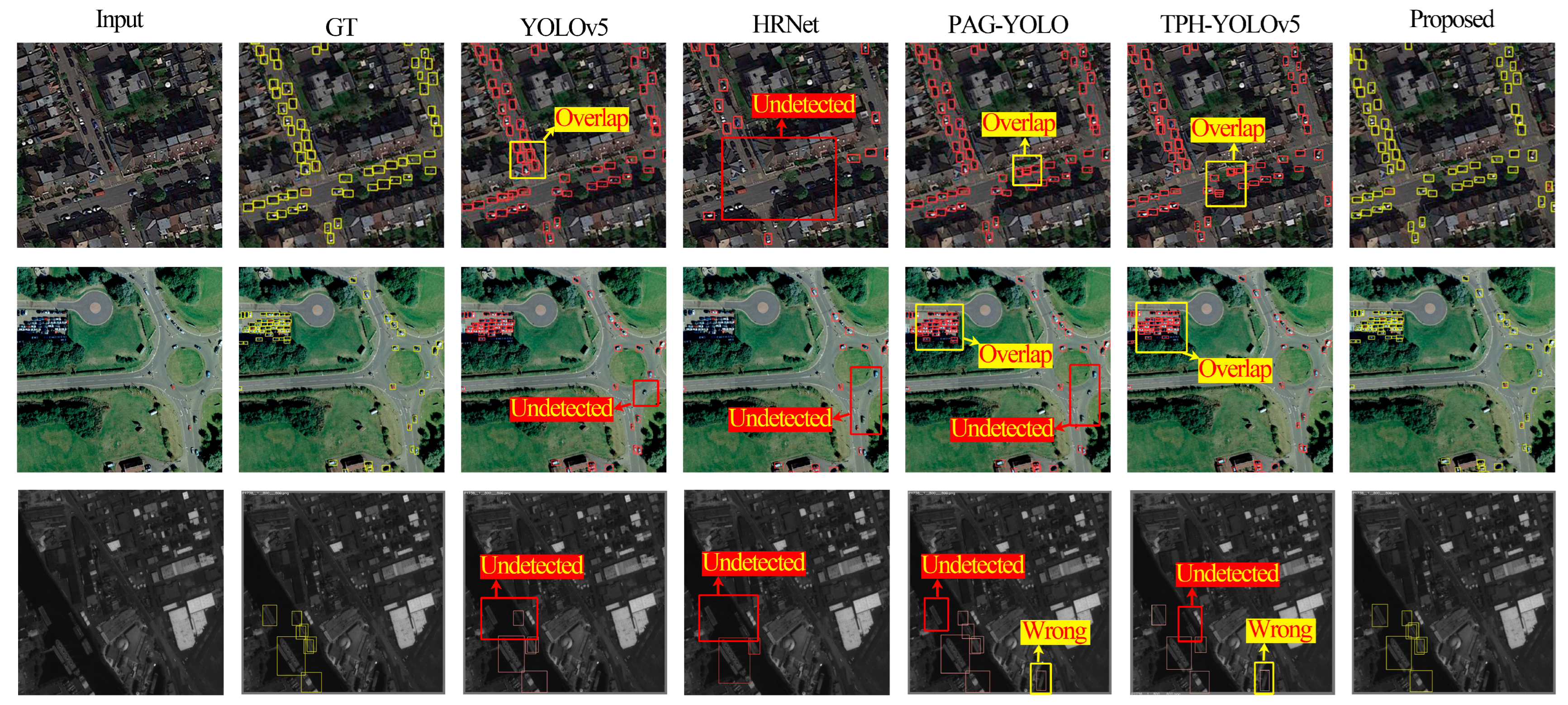

4.4.1. Results on MASATI Dataset

4.4.2. Results from the VEDAI Dataset

4.4.3. Results from the DOTA Dataset

4.4.4. Efficiency Analysis

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Ren, X.; Bai, Y.; Liu, G.; Zhang, P. YOLO-Lite: An Efficient Lightweight Network for SAR Ship Detection. Remote Sens. 2023, 15, 3771. [Google Scholar] [CrossRef]

- Xiong, J.; Zeng, H.; Cai, G.; Li, Y.; Chen, J.M.; Miao, G. Crown Information Extraction and Annual Growth Estimation of a Chinese Fir Plantation Based on Unmanned Aerial Vehicle–Light Detection and Ranging. Remote Sens. 2023, 15, 3869. [Google Scholar] [CrossRef]

- Shi, Y.; Wang, D.; Wang, X.; Chen, B.; Ding, C.; Gao, S. Sensing Travel Source–Sink Spatiotemporal Ranges Using Dockless Bicycle Trajectory via Density-Based Adaptive Clustering. Remote Sens. 2023, 15, 3874. [Google Scholar] [CrossRef]

- Wang, S.; Cai, Z.; Yuan, J. Automatic SAR Ship Detection Based on Multifeature Fusion Network in Spatial and Frequency Domains. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–11. [Google Scholar] [CrossRef]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149. [Google Scholar] [CrossRef]

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. Ssd: Single shot multibox detector. In Proceedings of the Computer Vision—ECCV 2016, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 21–37. [Google Scholar]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788. [Google Scholar]

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241. [Google Scholar]

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125. [Google Scholar]

- Sun, K.; Xiao, B.; Liu, D.; Wang, J. Deep high-resolution representation learning for human pose estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 5693–5703. [Google Scholar]

- Sun, K.; Zhao, Y.; Jiang, B.; Cheng, T.; Xiao, B.; Liu, D.; Mu, Y.; Wang, X.; Liu, W.; Wang, J. High-Resolution Representations for Labeling Pixels and Regions. arXiv 2019, arXiv:1904.04514. [Google Scholar]

- Xu, H.; Tang, X.; Ai, B.; Yang, F.; Wen, Z.; Yang, X. Feature-Selection High-Resolution Network With Hypersphere Embedding for Semantic Segmentation of VHR Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–15. [Google Scholar] [CrossRef]

- Shao, J.; Du, B.; Wu, C.; Gong, M.; Liu, T. Hrsiam: High-resolution siamese network, towards space-borne satellite video tracking. IEEE Trans. Image Process. 2021, 30, 3056–3068. [Google Scholar] [CrossRef]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 6000–6010. [Google Scholar]

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. arXiv 2020, arXiv:2010.11929. [Google Scholar]

- Zhu, X.; Lyu, S.; Wang, X.; Zhao, Q. TPH-YOLOv5: Improved YOLOv5 Based on Transformer Prediction Head for Object Detection on Drone-Captured Scenarios. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Virtual, 11–17 October 2021; pp. 2778–2788. [Google Scholar]

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Virtual, 11–17 October 2021; pp. 10012–10022. [Google Scholar]

- Chen, H.; Qi, Z.; Shi, Z. Remote Sensing Image Change Detection With Transformers. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–14. [Google Scholar] [CrossRef]

- Zhou, Y.; Chen, S.; Zhao, J.; Yao, R.; Xue, Y.; Saddik, A.E. CLT-Det: Correlation Learning Based on Transformer for Detecting Dense Objects in Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 4708915. [Google Scholar] [CrossRef]

- Xiao, T.; Liu, Y.; Huang, Y.; Li, M.; Yang, G. Enhancing Multiscale Representations With Transformer for Remote Sensing Image Semantic Segmentation. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5605116. [Google Scholar] [CrossRef]

- Zhang, X.; Yuan, S.; Luan, F.; Lv, J.; Liu, G. Similarity Mask Mixed Attention for YOLOv5 Small Ship Detection of Optical Remote Sensing Images. In Proceedings of the 2022 WRC Symposium on Advanced Robotics and Automation (WRC SARA), Beijing, China, 20 August 2022; pp. 263–268. [Google Scholar]

- He, X.; Zhou, Y.; Zhao, J.; Zhang, D.; Yao, R.; Xue, Y. Swin transformer embedding UNet for remote sensing image semantic segmentation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 4408715. [Google Scholar] [CrossRef]

- Newell, A.; Yang, K.; Deng, J. Stacked hourglass networks for human pose estimation. In Proceedings of the Computer Vision—ECCV 2016, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 483–499. [Google Scholar]

- Wang, W.; Xie, E.; Song, X.; Zang, Y.; Wang, W.; Lu, T.; Yu, G.; Shen, C. Efficient and accurate arbitrary-shaped text detection with pixel aggregation network. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8440–8449. [Google Scholar]

- Mboga, N.; Grippa, T.; Georganos, S.; Vanhuysse, S.; Smets, B.; Dewitte, O.; Wolff, E.; Lennert, M. Fully convolutional networks for land cover classification from historical panchromatic aerial photographs. ISPRS J. Photogramm. Remote Sens. 2020, 167, 385–395. [Google Scholar] [CrossRef]

- Abriha, D.; Szabó, S. Strategies in training deep learning models to extract building from multisource images with small training sample sizes. Int. J. Digit. Earth 2023, 16, 1707–1724. [Google Scholar] [CrossRef]

- Solórzano, J.V.; Mas, J.F.; Gallardo-Cruz, J.A.; Gao, Y.; de Oca, A.F.M. Deforestation detection using a spatio-temporal deep learning approach with synthetic aperture radar and multispectral images. ISPRS J. Photogramm. Remote Sens. 2023, 199, 87–101. [Google Scholar] [CrossRef]

- Hao, X.; Yin, L.; Li, X.; Zhang, L.; Yang, R. A Multi-Objective Semantic Segmentation Algorithm Based on Improved U-Net Networks. Remote Sens. 2023, 15, 1838. [Google Scholar] [CrossRef]

- Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient channel attention for deep convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11534–11542. [Google Scholar]

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141. [Google Scholar]

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Proceedings of the ECCV 2018, Munich, Germany, 8–14 September 2018; pp. 3–19. [Google Scholar]

- Zhang, Z.; Lu, X.; Cao, G.; Yang, Y.; Jiao, L.; Liu, F. ViT-YOLO: Transformer-based YOLO for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Virtual, 11–17 October 2021; pp. 2799–2808. [Google Scholar]

- Wang, C.Y.; Yeh, I.H.; Liao, H.Y.M. You Only Learn One Representation: Unified Network for Multiple Tasks. arXiv 2021, arXiv:2105.04206. [Google Scholar]

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934. [Google Scholar]

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475. [Google Scholar]

- Yu, G.; Chang, Q.; Lv, W.; Xu, C.; Cui, C.; Ji, W.; Dang, Q.; Deng, K.; Wang, G.; Du, Y.; et al. PP-PicoDet: A better real-time object detector on mobile devices. arXiv 2021, arXiv:2111.00902. [Google Scholar]

- Jocher, G.; Stoken, A.; Borovec, J.; NanoCode012; ChristopherSTAN; Liu, C.; Laughing; tkianai; Hogan, A.; lorenzomammana; et al. Ultralytics/yolov5 2020, April 12, 2021. Available online: https://github.com/ultralytics/yolov5/tree/v5.0 (accessed on 12 April 2021).

- Cai, Z.; Vasconcelos, N. Cascade r-cnn: Delving into high quality object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 6154–6162. [Google Scholar]

- Van Etten, A. You only look twice: Rapid multi-scale object detection in satellite imagery. arXiv 2018, arXiv:1805.09512. [Google Scholar]

- Han, W.; Kuerban, A.; Yang, Y.; Huang, Z.; Liu, B.; Gao, J. Multi-vision network for accurate and real-time small object detection in optical remote sensing images. IEEE Geosci. Remote Sens. Lett. 2022, 19, 6001205. [Google Scholar] [CrossRef]

- Zhang, K.; Shen, H. Multi-Stage Feature Enhancement Pyramid Network for Detecting Objects in Optical Remote Sensing Images. Remote Sens. 2022, 14, 579. [Google Scholar] [CrossRef]

- Kim, M.; Jeong, J.; Kim, S. ECAP-YOLO: Efficient Channel Attention Pyramid YOLO for Small Object Detection in Aerial Image. Remote Sens. 2021, 13, 4851. [Google Scholar] [CrossRef]

- Hu, J.; Zhi, X.; Shi, T.; Zhang, W.; Cui, Y.; Zhao, S. PAG-YOLO: A Portable Attention-Guided YOLO Network for Small Ship Detection. Remote Sens. 2021, 13, 3059. [Google Scholar] [CrossRef]

- Zhang, J.; Lei, J.; Xie, W.; Fang, Z.; Li, Y.; Du, Q. SuperYOLO: Super resolution assisted object detection in multimodal remote sensing imagery. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–15. [Google Scholar] [CrossRef]

- Shi, T.; Gong, J.; Hu, J.; Zhi, X.; Zhang, W.; Zhang, Y.; Zhang, P.; Bao, G. Feature-Enhanced CenterNet for Small Object Detection in Remote Sensing Images. Remote Sens. 2022, 14, 5488. [Google Scholar] [CrossRef]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916. [Google Scholar] [CrossRef]

- Huang, X.; Dong, M.; Li, J.; Guo, X. A 3-d-swin transformer-based hierarchical contrastive learning method for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–15. [Google Scholar] [CrossRef]

- Zhang, C.; Wang, L.; Cheng, S.; Li, Y. SwinSUNet: Pure transformer network for remote sensing image change detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–13. [Google Scholar] [CrossRef]

- Gallego, A.J.; Pertusa, A.; Gil, P. Automatic Ship Classification from Optical Aerial Images with Convolutional Neural Networks. Remote Sens. 2018, 10, 511. [Google Scholar] [CrossRef]

- Razakarivony, S.; Jurie, F. Vehicle detection in aerial imagery: A small target detection benchmark. J. Vis. Commun. Image Represent. 2016, 34, 187–203. [Google Scholar] [CrossRef]

- Xia, G.S.; Bai, X.; Ding, J.; Zhu, Z.; Belongie, S.; Luo, J.; Datcu, M.; Pelillo, M.; Zhang, L. DOTA: A large-scale dataset for object detection in aerial images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 3974–3983. [Google Scholar]

- Dong, R.; Xu, D.; Zhao, J.; Jiao, L.; An, J. Sig-NMS-based faster R-CNN combining transfer learning for small target detection in VHR optical remote sensing imagery. IEEE Trans. Geosci. Remote Sens. 2019, 57, 8534–8545. [Google Scholar] [CrossRef]

| Model | Structure | Multi-Ship | Single-Ship | Coast | All Classes | ||||

|---|---|---|---|---|---|---|---|---|---|

| HR-FFN | STMA | Auxiliary Detection Structure | mAP (%) | Precision (%) | Recall (%) | mAP (%) | |||

| Baseline1 1 | - | - | - | 80.7 | 72.9 | 62.4 | 78.1 | 70.0 | 70.9 |

| HRTP-Net | ✓ | - | - | 81.3 | 81.4 | 65.0 | 82.5 | 72.5 | 75.0 |

| - | ✓ | - | 78.8 | 80.2 | 64.7 | 82.2 | 67.4 | 73.4 | |

| - | ✓ | ✓ | 80.9 | 80.6 | 68.7 | 80.3 | 71.1 | 75.6 | |

| ✓ | ✓ | - | 79.8 | 85.7 | 65.3 | 82.0 | 74.0 | 75.7 | |

| ✓ | ✓ | ✓ | 84.3 | 82.8 | 67.0 | 84.6 | 72.0 | 77.3 | |

| Model | Structure | All Classes | |||

|---|---|---|---|---|---|

| E-SPP | Residual Connection | Precision (%) | Recall (%) | mAP (%) | |

| Baseline2 1 | - | - | 78.9 | 70.7 | 73.3 |

| HRTP-Net | ✓ | - | 81.3 | 71.4 | 75.2 |

| - | ✓ | 76.7 | 71.5 | 73.6 | |

| ✓ | ✓ | 82.5 | 72.5 | 75.0 | |

| Model | Structure | All Classes | ||

|---|---|---|---|---|

| MAM | Precision (%) | Recall (%) | mAP (%) | |

| HRTP-Net | - | 82.9 | 70.9 | 75.4 |

| ✓ | 84.6 | 72.0 | 77.3 | |

| Model | Multi-Ship | Single-Ship | Coast | All Classes | ||

|---|---|---|---|---|---|---|

| mAP (%) | Precision (%) | Recall (%) | mAP (%) | |||

| YOLOR [33] | 75.7 | 64.1 | 52.7 | 75.9 | 66.4 | 63.1 |

| YOLOv4 [34] | 79.4 | 74.0 | 61.5 | 76.8 | 69.0 | 70.5 |

| YOLOv5 [37] | 80.7 | 72.9 | 62.4 | 78.1 | 70.0 | 70.9 |

| YOLOv7 [35] | 77.7 | 81.2 | 59.3 | 79.6 | 70.5 | 71.3 |

| PicoDet [36] | 81.7 | 61.1 | 68.4 | 80.1 | 64.3 | 70.1 |

| Faster R-CNN [5] | 70.0 | 77.2 | 38.7 | 77.6 | 64.4 | 59.2 |

| Cascade R-CNN [38] | 77.4 | 76.8 | 58.5 | 77.5 | 62.1 | 67.2 |

| HRNet [11] | 69.4 | 83.9 | 49.1 | 70.4 | 74.1 | 66.3 |

| SuperYOLO [44] | 80.3 | 73.4 | 65.5 | 76.7 | 70.5 | 72.7 |

| PAG-YOLO [43] | 82.8 | 81.9 | 65.8 | 83.3 | 71.3 | 75.5 |

| TPH-YOLOv5 [16] | 80.8 | 82.2 | 66.8 | 79.5 | 71.6 | 75.2 |

| Proposed | 84.3 | 82.8 | 67.0 | 84.6 | 72.0 | 77.3 |

| Model | Plane | Boat | Camping_car | Car | Pick_up | Tractor | Truck | Van | Others | All Classes |

|---|---|---|---|---|---|---|---|---|---|---|

| mAP (%) | mAP (%) | |||||||||

| YOLOR [33] | 45.8 | 10.5 | 54.7 | 74.0 | 64.4 | 30.3 | 17.4 | 11.1 | 13.1 | 35.7 |

| YOLOv4 [34] | 67.0 | 38.7 | 61.0 | 85.5 | 74.0 | 48.6 | 60.4 | 15.0 | 28.7 | 53.2 |

| YOLOv5 [37] | 78.4 | 25.3 | 68.3 | 82.6 | 74.1 | 40.4 | 53.3 | 27.3 | 30.5 | 53.4 |

| YOLOv7 [35] | 25.6 | 11.4 | 56.2 | 87.9 | 75.9 | 56.0 | 30.5 | 10.5 | 11.5 | 36.5 |

| PicoDet [36] | 44.6 | 19.1 | 61.7 | 79.1 | 61.0 | 31.1 | 32.7 | 13.5 | 18.2 | 40.1 |

| Faster R-CNN [5] | 65.9 | 53.2 | 68.0 | 60.6 | 61.8 | 45.5 | 55.8 | 17.3 | 51.7 | 53.3 |

| Cascade R-CNN [38] | 53.2 | 68.4 | 70.3 | 72.0 | 67.5 | 40.8 | 49.0 | 39.7 | 50.8 | 56.9 |

| HRNet [11] | 42.3 | 62.9 | 65.5 | 77.4 | 74.0 | 44.0 | 45.9 | 17.9 | 42.4 | 52.5 |

| Super YOLO [44] | 58.7 | 28.5 | 73.3 | 87.5 | 80.1 | 52.3 | 51.7 | 37.8 | 21.8 | 52.5 |

| PAG-YOLO [43] | 74.7 | 37.7 | 68.4 | 82.5 | 72.8 | 43.3 | 53.8 | 14.0 | 31.8 | 53.2 |

| TPH-YOLOv5 [16] | 71.8 | 31.1 | 62.1 | 81.9 | 70.6 | 41.4 | 55.4 | 40.2 | 35.6 | 54.5 |

| Proposed | 88.2 | 47.5 | 61.6 | 83.9 | 76.1 | 58.6 | 62.9 | 36.8 | 26.5 | 60.2 |

| Model | Small Vehicle | Ship | All Classes | ||

|---|---|---|---|---|---|

| mAP (%) | Precision (%) | Recall (%) | mAP (%) | ||

| YOLOR [33] | 60.4 | 84.3 | 85.3 | 68.5 | 72.3 |

| YOLOv4 [34] | 59.3 | 86.7 | 84.4 | 67.0 | 73.0 |

| YOLOv5 [37] | 58.5 | 86.2 | 84.0 | 68.5 | 72.4 |

| YOLOv7 [35] | 59.7 | 86.5 | 86.1 | 67.1 | 73.1 |

| PicoDet [36] | 59.9 | 84.9 | 85.9 | 65.9 | 72.4 |

| Faster R-CNN [5] | 26.0 | 53.3 | 64.6 | 41.8 | 39.6 |

| Cascade R-CNN [38] | 42.0 | 53.4 | 62.2 | 48.7 | 44.7 |

| HRNet [11] | 26.4 | 53.9 | 71.9 | 43.4 | 40.1 |

| SuperYOLO [44] | 62.9 | 83.5 | 85.9 | 68.9 | 73.2 |

| PAG-YOLO [43] | 58.4 | 86.5 | 84.3 | 68.8 | 72.4 |

| TPH-YOLOv5 [16] | 59.8 | 86.2 | 87.4 | 65.0 | 73.0 |

| Proposed | 61.6 | 85.7 | 84.5 | 69.8 | 73.7 |

| Methods | GFLOPs | Params (M) | FPS |

|---|---|---|---|

| YOLOR [33] | 119.3 | 52.49 | 84 |

| YOLOv4 [34] | 20.9 | 9.11 | 152 |

| YOLOv5 [37] | 16.3 | 7.05 | 256 |

| YOLOv7 [35] | 103.2 | 36.48 | 109 |

| PicoDet [36] | 13.7 | 1.35 | 175 |

| Faster R-CNN [5] | 91.0 | 41.12 | 109 |

| Cascade R-CNN [38] | 118.81 | 68.93 | 73 |

| HRNet [11] | 83.19 | 27.08 | 56 |

| SuperYOLO [44] | 31.59 | 7.051 | 84 |

| PAG-YOLO [43] | 16.4 | 7.10 | 222 |

| TPH-YOLOv5 [16] | 15.5 | 7.07 | 192 |

| Proposed | 81.9 | 16.58 | 119 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, X.; Liu, Q.; Chang, H.; Sun, H. High-Resolution Network with Transformer Embedding Parallel Detection for Small Object Detection in Optical Remote Sensing Images. Remote Sens. 2023, 15, 4497. https://doi.org/10.3390/rs15184497

Zhang X, Liu Q, Chang H, Sun H. High-Resolution Network with Transformer Embedding Parallel Detection for Small Object Detection in Optical Remote Sensing Images. Remote Sensing. 2023; 15(18):4497. https://doi.org/10.3390/rs15184497

Chicago/Turabian StyleZhang, Xiaowen, Qiaoyuan Liu, Hongliang Chang, and Haijiang Sun. 2023. "High-Resolution Network with Transformer Embedding Parallel Detection for Small Object Detection in Optical Remote Sensing Images" Remote Sensing 15, no. 18: 4497. https://doi.org/10.3390/rs15184497

APA StyleZhang, X., Liu, Q., Chang, H., & Sun, H. (2023). High-Resolution Network with Transformer Embedding Parallel Detection for Small Object Detection in Optical Remote Sensing Images. Remote Sensing, 15(18), 4497. https://doi.org/10.3390/rs15184497