Geometrical Characterization of Hazelnut Trees in an Intensive Orchard by an Unmanned Aerial Vehicle (UAV) for Precision Agriculture Applications

Abstract

1. Introduction

2. Materials and Methods

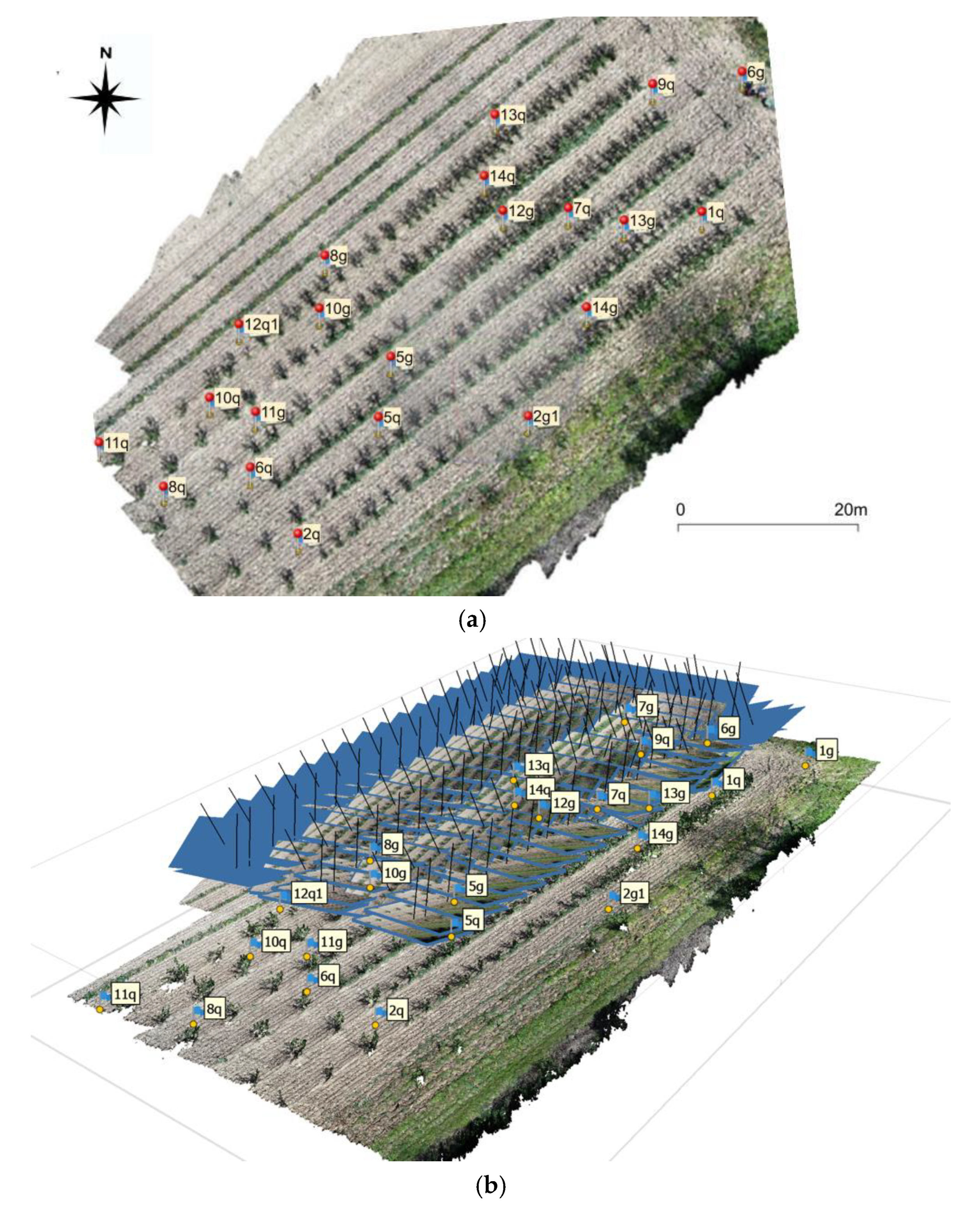

2.1. Study Site Description and Tree Sampling

- (A) 625 trees ha−1, spaced 4 m between rows and 4 m within the row, used as a control treatment (the density commonly used by farmers);

- (B) 1250 trees ha−1, spaced 4 m × 2 m;

- (C) 2500 trees ha−1, spaced 4 m × 1 m.
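The three densities follow directly from the spacings: one hectare is 10,000 m², and each tree occupies the product of the between-row and in-row spacing. A minimal Python sketch of this arithmetic (the function name `trees_per_hectare` is illustrative and not taken from the study):

```python
def trees_per_hectare(row_spacing_m: float, in_row_spacing_m: float) -> float:
    """Planting density implied by a rectangular layout, in trees per hectare."""
    if row_spacing_m <= 0 or in_row_spacing_m <= 0:
        raise ValueError("spacings must be positive")
    area_per_tree_m2 = row_spacing_m * in_row_spacing_m
    return 10_000.0 / area_per_tree_m2  # 1 ha = 10,000 m^2


# Spacings of treatments (A), (B) and (C) as listed above.
for label, in_row_m in (("A", 4.0), ("B", 2.0), ("C", 1.0)):
    print(label, trees_per_hectare(4.0, in_row_m))
```

The loop prints 625.0, 1250.0 and 2500.0 for treatments A, B and C, matching the stated densities.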

2.2. Manual Measurements

2.3. Acquisition of UAV Images

2.4. Point Cloud Reconstruction

2.5. Recognition of Hazelnut Trees

- 1. The 3D point cloud was divided into a “canopy” point cloud and a “ground” point cloud using the classification procedure of Agisoft Metashape. Each point cloud was exported separately to the open-source software CloudCompare (Paris, France). Figure 2a shows the “canopy” point cloud after import into CloudCompare. The resulting 3D point cloud is affected by noise, especially in the lower part of the canopy, so the automatic noise-removal filters available in CloudCompare were applied. The “canopy” and “ground” point clouds were then rasterised into two Digital Surface Models (DSMs), DSMcanopy and DSMground, respectively, each with a resolution of 0.01 m × 0.01 m. No interpolation was applied to fill empty cells in DSMcanopy, because the holes may carry essential information on the penetration of light through the canopy; for DSMground, a weighted-average interpolation was applied to fill holes in the original cloud. Finally, the two DSMs were imported into QGIS (Figure 2b) for the analysis described below.

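- 2. The orthophoto obtained by processing the multispectral images was used, in a GIS environment, to compute the NDVI map using the standard formula NDVI = (NIR − Red)/(NIR + Red), where NIR and Red are the reflectances in the near-infrared and red bands.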
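As a minimal sketch of the NDVI step, the conventional definition (NIR − Red)/(NIR + Red) can be applied pixel by pixel. The code below assumes the two bands are available as equally sized nested lists of reflectance values; the function names and the choice to return `None` where both bands are zero are illustrative assumptions, not part of the described workflow, which was carried out in a GIS environment.

```python
from typing import List, Optional

Band = List[List[float]]


def ndvi(nir: float, red: float) -> Optional[float]:
    """Normalized Difference Vegetation Index for one pixel.

    Returns None when NIR + Red is zero, where the index is undefined.
    """
    denominator = nir + red
    if denominator == 0:
        return None
    return (nir - red) / denominator


def ndvi_map(nir_band: Band, red_band: Band) -> List[List[Optional[float]]]:
    """Apply ndvi() cell by cell to two co-registered bands of equal shape."""
    if len(nir_band) != len(red_band):
        raise ValueError("bands must have the same number of rows")
    result = []
    for nir_row, red_row in zip(nir_band, red_band):
        if len(nir_row) != len(red_row):
            raise ValueError("bands must have the same number of columns")
        result.append([ndvi(n, r) for n, r in zip(nir_row, red_row)])
    return result
```

Values close to 1 indicate dense green vegetation, while values near 0 or below are typical of bare soil and other non-vegetated surfaces.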

2.6. Assessment of the Canopy Volume

- Method 1: the canopy was approximated as a cylinder (Figure 5a), and the volume was computed as the volume of that cylinder, V = π r² h, with r and h the radius and height of the cylinder.

- Method 2: the volume was evaluated from the shape of the canopy given by the 3D point cloud (Figure 5b). From the canopy raster, DSMcanopy, the volume was obtained in a GIS environment as the volume enclosed between the DSM and a horizontal plane passing through the lowest point of the DSM.
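The two volume estimates can be sketched as follows. This is an illustration under stated assumptions rather than the GIS procedure actually used: the canopy raster is represented as a nested list of heights in metres, `None` marks an empty cell (DSMcanopy was left uninterpolated, so such cells are simply skipped), and the default cell size of 0.01 m matches the DSM resolution reported in Section 2.5. The function names are hypothetical.

```python
import math
from typing import List, Optional

Raster = List[List[Optional[float]]]


def cylinder_volume(radius_m: float, height_m: float) -> float:
    """Method 1 sketch: canopy approximated as a cylinder, V = pi * r^2 * h."""
    if radius_m < 0 or height_m < 0:
        raise ValueError("radius and height must be non-negative")
    return math.pi * radius_m ** 2 * height_m


def raster_volume(dsm: Raster, cell_size_m: float = 0.01) -> float:
    """Method 2 sketch: volume between the DSM and a horizontal plane
    through its lowest valid cell. Empty cells (None) contribute nothing."""
    heights = [z for row in dsm for z in row if z is not None]
    if not heights:
        return 0.0
    base_m = min(heights)              # horizontal reference plane
    cell_area_m2 = cell_size_m ** 2    # footprint of one raster cell
    return sum((z - base_m) * cell_area_m2 for z in heights)
```

Because Method 2 sums the height of every cell above the reference plane, gaps in the canopy lower the estimate, whereas the cylinder of Method 1 treats the canopy as a solid.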

2.7. Evaluation of Model Accuracy

3. Results

3.1. Assessing Errors of the Point Cloud Reconstruction

3.2. Recognition of Hazelnut Trees

3.3. Comparison between Geometrical Characteristics Obtained from Manual and UAV Methods in the Two Tree Densities

3.4. Comparison between the Volume Obtained by UAV and Manual Surveys

3.5. Comparison between Geometrical Characteristics and Canopy Volumes between the Tree Densities

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest


Optimization results for each marker (* = control point; unmarked = check point):

| Marker | Coordinates, UTM WGS84 (East, North, Elevation) | Error (m) | Error (pix) | X_Error (m) | Y_Error (m) | Z_Error (m) |
|---|---|---|---|---|---|---|
| 1g * | 288164.725, 4760938.522, 209.729 | 0.0277 | 0.581 | −0.007 | −0.005 | 0.026 |
| 1q * | 288153.160, 4760935.254, 209.606 | 0.0090 | 0.356 | 0.002 | −0.007 | −0.005 |
| 2g * | 288137.825, 4760917.443, 209.311 | 0.0139 | 0.377 | 0.005 | 0.000 | −0.013 |
| 2q * | 288116.441, 4760906.698, 209.130 | 0.0062 | 0.467 | −0.004 | −0.002 | −0.004 |
| 5g * | 288124.670, 4760922.909, 209.294 | 0.0190 | 0.420 | 0.005 | −0.005 | −0.018 |
| 5q | 288123.709, 4760917.385, 209.210 | 0.0103 | 0.496 | 0.004 | −0.003 | −0.009 |
| 6g * | 288156.715, 4760947.916, 209.616 | 0.0168 | 0.778 | 0.002 | 0.002 | 0.017 |
| 6q | 288111.239, 4760912.695, 209.183 | 0.0364 | 0.564 | 0.001 | 0.006 | 0.036 |
| 7g * | 288149.338, 4760956.827, 209.692 | 0.0114 | 0.673 | −0.010 | −0.004 | −0.001 |
| 7q | 288141.161, 4760936.219, 209.476 | 0.0109 | 0.398 | 0.005 | 0.002 | 0.009 |
| 8g * | 288117.658, 4760932.856, 209.445 | 0.0290 | 0.368 | −0.017 | 0.004 | −0.023 |
| 8q * | 288102.296, 4760910.906, 209.173 | 0.0151 | 0.308 | 0.004 | 0.002 | 0.014 |
| 9q | 288148.778, 4760947.375, 209.627 | 0.0177 | 0.614 | 0.003 | −0.001 | 0.018 |
| 10g | 288117.309, 4760927.744, 209.340 | 0.0187 | 0.417 | 0.010 | 0.016 | −0.002 |
| 10q | 288106.538, 4760919.344, 209.259 | 0.0138 | 0.561 | 0.002 | 0.001 | 0.014 |
| 11g | 288111.331, 4760917.929, 209.245 | 0.0153 | 0.265 | −0.004 | −0.006 | −0.013 |
| 11q * | 288094.596, 4760915.234, 209.174 | 0.0138 | 0.238 | −0.007 | −0.006 | 0.010 |
| 12g * | 288135.050, 4760936.353, 209.437 | 0.0079 | 0.264 | 0.006 | 0.005 | 0.001 |
| 12q | 288109.050, 4760926.411, 209.326 | 0.0044 | 0.571 | 0.003 | −0.001 | 0.003 |
| 13g | 288146.303, 4760934.779, 209.484 | 0.0052 | 0.402 | 0.002 | 0.004 | −0.002 |
| 13q * | 288134.027, 4760945.735, 209.564 | 0.0233 | 0.475 | −0.010 | −0.006 | −0.020 |
| 14g | 288143.029, 4760927.062, 209.471 | 0.0326 | 0.443 | 0.000 | −0.004 | −0.032 |
| 14q * | 288133.127, 4760939.804, 209.507 | 0.0128 | 0.609 | 0.007 | 0.010 | −0.003 |
| Average error, control points (*) | | 0.0173 | | | | |
| Average error, check points | | 0.0193 | | | | |
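The per-marker Error (m) values in the table are consistent, up to rounding, with the Euclidean norm of the X, Y and Z errors, and the two average errors are consistent with a root-mean-square (RMS) of the per-marker errors rather than an arithmetic mean. Both readings are inferences from the numbers, and the grouping of markers by the asterisk is taken from the table's own labels; the sketch below reproduces the check with hypothetical helper names.

```python
import math
from typing import Sequence


def error_3d(dx_m: float, dy_m: float, dz_m: float) -> float:
    """Total 3D error of one marker as the Euclidean norm of its components."""
    return math.sqrt(dx_m * dx_m + dy_m * dy_m + dz_m * dz_m)


def rms(values: Sequence[float]) -> float:
    """Root-mean-square of a non-empty sequence of errors."""
    if not values:
        raise ValueError("at least one value is required")
    return math.sqrt(sum(v * v for v in values) / len(values))


# Error (m) column for the markers flagged with * (control points).
CONTROL_ERRORS_M = [0.0277, 0.0090, 0.0139, 0.0062, 0.0190, 0.0168, 0.0114,
                    0.0290, 0.0151, 0.0138, 0.0079, 0.0233, 0.0128]
# Error (m) column for the unflagged markers (check points).
CHECK_ERRORS_M = [0.0103, 0.0364, 0.0109, 0.0177, 0.0187,
                  0.0138, 0.0153, 0.0044, 0.0052, 0.0326]

print(round(rms(CONTROL_ERRORS_M), 4))  # compare with the tabulated 0.0173 m
print(round(rms(CHECK_ERRORS_M), 4))    # compare with the tabulated 0.0193 m
```

Small differences from the tabulated values are expected, because the inputs are themselves rounded to three or four decimals.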

| | Rc | hc | htree | htrunk |
|---|---|---|---|---|
| R | 0.694 | 0.544 | 0.728 | 0.071 |
| RMSE (m) | 0.115 | 0.282 | 0.230 | 0.184 |
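The table reports R and RMSE (m) for each geometric variable. Assuming R is the Pearson correlation coefficient between the manual and the UAV-derived values (the table does not say which coefficient was used), the two metrics can be sketched as below; the function names and the argument order are illustrative.

```python
import math
from typing import Sequence


def rmse(manual: Sequence[float], uav: Sequence[float]) -> float:
    """Root-mean-square error between paired manual and UAV measurements."""
    if len(manual) != len(uav) or not manual:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(m - u) ** 2 for m, u in zip(manual, uav)]
    return math.sqrt(sum(squared) / len(squared))


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient of two paired samples."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("inputs must have equal length of at least 2")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return sxy / math.sqrt(sxx * syy)
```

The two metrics are complementary: R measures how well the UAV values track the manual ones, while RMSE expresses the typical size of the disagreement in metres.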


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Vinci, A.; Brigante, R.; Traini, C.; Farinelli, D. Geometrical Characterization of Hazelnut Trees in an Intensive Orchard by an Unmanned Aerial Vehicle (UAV) for Precision Agriculture Applications. Remote Sens. 2023, 15, 541. https://doi.org/10.3390/rs15020541
