Self-Attention Convolutional Long Short-Term Memory for Short-Term Arctic Sea Ice Motion Prediction Using Advanced Microwave Scanning Radiometer Earth Observing System 36.5 GHz Data

Abstract

1. Introduction

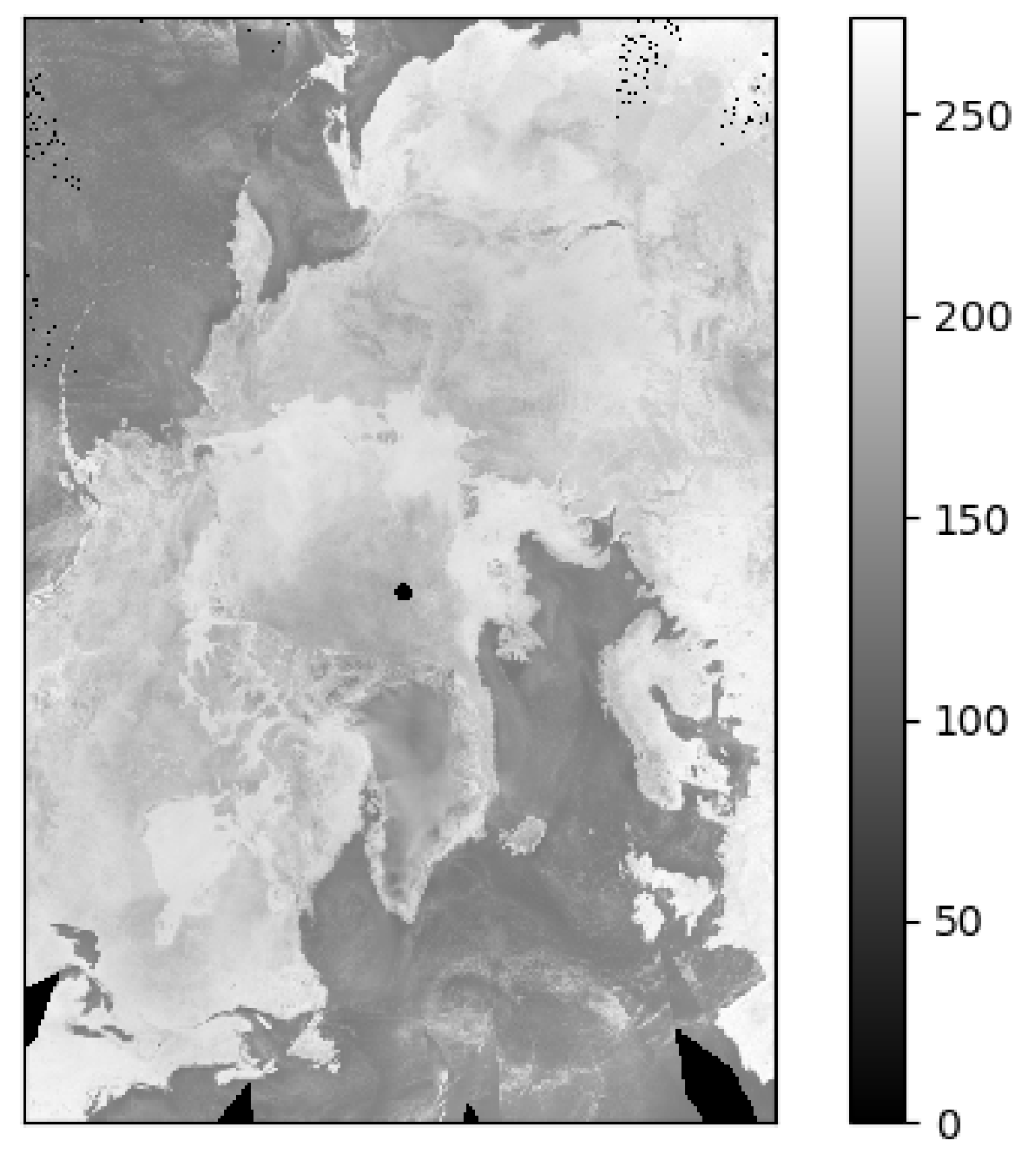

2. Data

3. Methods

3.1. Optical Flow

- Efficiency: tailored for large-scale imagery, ensuring computational efficiency;

- Precision: offers high-accuracy optical flow estimations, capturing detailed pixel movements, ideal for tasks demanding precision such as object tracking and motion analysis;

- Versatility: applicable across various domains, from computer vision to autonomous driving, enhancing algorithmic performance and visual outcomes;

- Adaptability: handles complex motion and dynamic backgrounds, including fast motion, non-rigid motion, and multiple objects moving within a scene;

- Real-time capability: because of its efficiency and precision, it is suited to real-time applications that need immediate visual feedback.

3.2. Network Architecture

3.2.1. SAM Module

- The input gate is a value produced by the sigmoid function that controls how much new information is added to the memory. The same gate also determines how much of the previous memory is retained at the current time step.

- The new memory candidate is computed with the hyperbolic tangent function and represents the new information to be added to the memory.

- The memory at the current time step serves as the model’s internal state and stores information about the input sequence. Its update combines the new information, weighted by the input gate, with the part of the previous time step’s memory that is not forgotten.

- The output gate is a value produced by the sigmoid function that determines how much of the memory influences the output value.

- The output at the current time step is obtained by multiplying the memory by the output gate, so the gate sets the impact of the memory on the model’s output.

- The weight matrices and bias terms are the parameters that control the gating and the memory update.
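The gated update described in the list above can be sketched for a single grid cell as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: scalar weights stand in for convolution kernels, and the names `z` (self-attended feature), `h` (current hidden state), `m_prev`, and the weight and bias keys are hypothetical. The memory update is assumed to take the complementary-gate form, in which the input gate `i` weights the candidate and `1 - i` weights the previous memory.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic function, maps any real value into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def sam_memory_step(z, h, m_prev, w, b):
    """One gated memory update at a single grid cell.

    z      : self-attended feature (assumed input to the gates)
    h      : hidden state at the current time step
    m_prev : memory from the previous time step
    w, b   : hypothetical scalar weights and biases; a convolutional
             model would use kernels instead of scalars
    """
    i = sigmoid(w["zi"] * z + w["hi"] * h + b["i"])    # input gate
    g = math.tanh(w["zg"] * z + w["hg"] * h + b["g"])  # new memory candidate
    m = (1.0 - i) * m_prev + i * g                     # keep (1 - i) of the old memory
    o = sigmoid(w["zo"] * z + w["ho"] * h + b["o"])    # output gate
    out = o * m                                        # output = memory * output gate
    return m, out


if __name__ == "__main__":
    zero_w = {k: 0.0 for k in ("zi", "hi", "zg", "hg", "zo", "ho")}
    zero_b = {k: 0.0 for k in ("i", "g", "o")}
    print(sam_memory_step(0.3, -0.7, 2.0, zero_w, zero_b))  # (1.0, 0.5)
```

With all weights and biases set to zero, both gates equal 0.5 and the candidate is 0, so the memory is halved and the output is half of the new memory.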

3.2.2. Base Model

- The self-attention module is applied to both the input and the previous hidden state.

- The self-attended input is the result of applying the self-attention module to the input at the current time step. Self-attention helps the model capture relationships between different elements of the input sequence.

- The self-attended hidden state is the result of applying the self-attention module to the previous time step’s hidden state. It helps the model capture internal relationships within the hidden state.

- The input gate is a value produced by the sigmoid function that controls how much new information is added to the cell state.

- The forget gate, also produced by the sigmoid function, determines how much of the previous time step’s cell state is retained at the current time step.

- The candidate cell state is the new information, computed with the hyperbolic tangent (tanh) function, that may be added to the cell state.

- The cell state is the internal memory of the LSTM unit and stores information about the input data. The forget gate controls what is retained from the previous time step’s cell state, while the input gate and the candidate cell state control the addition of new information.

- The output gate is a value produced by the sigmoid function that determines how much of the cell state is passed to the hidden state.

- The hidden state at the current time step is the primary output of the LSTM unit. It is obtained by passing the cell state through the hyperbolic tangent function and multiplying the result by the output gate.

- The eight weight matrices linearly combine the self-attended input and the self-attended previous hidden state to compute the input gate, forget gate, candidate cell state, and output gate. The four bias terms adjust the behavior of the gates and the computation of the candidate cell state, as in a standard LSTM. Both the weight matrices and the bias terms are learned as model parameters during training.
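The step described in the list above can be sketched in plain Python. This is a toy illustration under several assumptions, not the authors' code: features are scalars per position, the self-attention uses identity query, key, and value projections, scalar weights replace the weight matrices, and all names (`self_attention`, `sa_lstm_step`, the dictionary keys) are hypothetical. The hidden state follows the standard LSTM form, in which tanh is applied to the cell state before multiplication by the output gate.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def self_attention(values):
    """Dot-product self-attention over a list of scalar features.

    Assumes identity query/key/value projections, so each output is a
    softmax-weighted average of all inputs.
    """
    out = []
    for q in values:
        scores = [q * k for k in values]
        top = max(scores)  # subtract the max for numerical stability
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        out.append(sum(wt * v for wt, v in zip(weights, values)) / total)
    return out


def sa_lstm_step(x, h_prev, c_prev, w, b):
    """One LSTM step whose gates see self-attended inputs.

    x, h_prev, c_prev : lists of equal length (one scalar per position)
    w, b              : hypothetical scalar weights (8) and biases (4)
    """
    x_hat = self_attention(x)       # self-attended input
    h_hat = self_attention(h_prev)  # self-attended previous hidden state
    h, c = [], []
    for xv, hv, cv in zip(x_hat, h_hat, c_prev):
        i = sigmoid(w["xi"] * xv + w["hi"] * hv + b["i"])    # input gate
        f = sigmoid(w["xf"] * xv + w["hf"] * hv + b["f"])    # forget gate
        g = math.tanh(w["xg"] * xv + w["hg"] * hv + b["g"])  # candidate cell state
        o = sigmoid(w["xo"] * xv + w["ho"] * hv + b["o"])    # output gate
        c_new = f * cv + i * g                               # cell state update
        c.append(c_new)
        h.append(o * math.tanh(c_new))                       # hidden state
    return h, c
```

In a convolutional variant the scalar products would become convolutions over feature maps; the gate structure stays the same.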

3.2.3. SA-ConvLSTM

3.3. Network Parameters

4. Results

4.1. Training Results

4.2. Comparison with Reference Optical Flow

4.3. Comparison with Drift Data Inherent in the AMSR-E Product

4.4. Comparison with Previous Methods

4.5. Visualization Example

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Frequency (GHz) | Applications |
|---|---|
| 18.7 (K-band) | Water vapor content in clouds and precipitation, sea surface temperature, sea ice concentration. |
| 23.8 (K-band) | Atmospheric water vapor concentration, soil moisture. |
| 36.5 (Ka-band) | Sea ice concentration and type, sea surface temperature, precipitation estimation. |
| 89.0 (W-band) | Sea ice edges and leads, precipitation estimation, cloud liquid water content. |

| Fut | x Axis RMSE | x Axis MAE | x Axis RMSE-MAE | y Axis RMSE | y Axis MAE | y Axis RMSE-MAE |
|---|---|---|---|---|---|---|
| 1 | 1.17 | 1.15 | 0.02 | 0.91 | 0.87 | 0.04 |
| 2 | 1.57 | 1.55 | 0.02 | 1.34 | 1.31 | 0.03 |
| 3 | 1.85 | 1.82 | 0.03 | 1.67 | 1.64 | 0.02 |
| 4 | 2.03 | 1.99 | 0.04 | 1.91 | 1.88 | 0.03 |
| 5 | 2.09 | 2.05 | 0.04 | 2.00 | 1.96 | 0.04 |
| 6 | 2.12 | 2.08 | 0.04 | 2.03 | 2.00 | 0.03 |
| 7 | 2.14 | 2.10 | 0.04 | 2.06 | 2.02 | 0.04 |
| 8 | 2.15 | 2.11 | 0.04 | 2.07 | 2.03 | 0.04 |
| 9 | 2.16 | 2.12 | 0.04 | 2.08 | 2.04 | 0.04 |
| 10 | 2.17 | 2.13 | 0.04 | 2.09 | 2.05 | 0.04 |
| Avg. | 1.94 | 1.91 | 0.035 | 1.81 | 1.78 | 0.035 |

| Fut | x Axis RMSE | x Axis MAE | x Axis RMSE-MAE | y Axis RMSE | y Axis MAE | y Axis RMSE-MAE |
|---|---|---|---|---|---|---|
| 1 | 1.80 | 1.64 | 0.16 | 1.30 | 1.06 | 0.24 |
| 2 | 2.56 | 2.44 | 0.12 | 2.11 | 1.94 | 0.17 |
| 3 | 3.10 | 2.97 | 0.13 | 2.74 | 2.58 | 0.16 |
| 4 | 3.46 | 3.32 | 0.14 | 3.22 | 3.06 | 0.16 |
| 5 | 3.58 | 3.44 | 0.14 | 3.38 | 3.22 | 0.16 |
| 6 | 3.65 | 3.49 | 0.16 | 3.45 | 3.30 | 0.15 |
| 7 | 3.68 | 3.53 | 0.15 | 3.50 | 3.34 | 0.16 |
| 8 | 3.71 | 3.56 | 0.15 | 3.53 | 3.37 | 0.16 |
| 9 | 3.73 | 3.57 | 0.16 | 3.55 | 3.39 | 0.16 |
| 10 | 3.75 | 3.59 | 0.16 | 3.57 | 3.41 | 0.16 |
| Avg. | 3.30 | 3.15 | 0.147 | 3.03 | 2.86 | 0.168 |
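The RMSE, MAE, and RMSE-MAE columns in the tables above can be computed per motion component as sketched below. This is a minimal sketch that assumes `pred` and `ref` hold one component (x or y) at matched grid points; the sample values are illustrative only and are not taken from the paper.

```python
import math


def rmse(pred, ref):
    """Root-mean-square error between two equal-length sequences."""
    return math.sqrt(sum((p - r) ** 2 for p, r in zip(pred, ref)) / len(pred))


def mae(pred, ref):
    """Mean absolute error between two equal-length sequences."""
    return sum(abs(p - r) for p, r in zip(pred, ref)) / len(pred)


# Hypothetical x-component values at four grid points (not real data).
pred_x = [1.0, 2.0, 3.0, 4.0]
ref_x = [1.0, 1.0, 5.0, 4.0]

x_rmse = rmse(pred_x, ref_x)
x_mae = mae(pred_x, ref_x)
print(f"RMSE={x_rmse:.3f}  MAE={x_mae:.3f}  RMSE-MAE={x_rmse - x_mae:.3f}")
```

RMSE is never smaller than MAE. The gap is zero when every absolute error has the same magnitude and grows as the errors become more unevenly distributed, so a small RMSE-MAE value suggests few large outliers.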

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhong, D.; Liu, N.; Yang, L.; Lin, L.; Chen, H. Self-Attention Convolutional Long Short-Term Memory for Short-Term Arctic Sea Ice Motion Prediction Using Advanced Microwave Scanning Radiometer Earth Observing System 36.5 GHz Data. Remote Sens. 2023, 15, 5437. https://doi.org/10.3390/rs15235437
