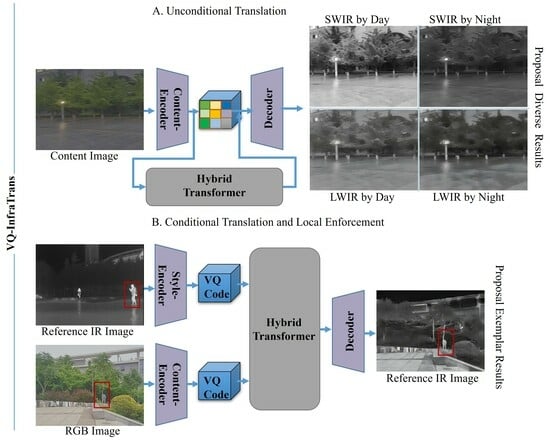

VQ-InfraTrans: A Unified Framework for RGB-IR Translation with Hybrid Transformer

Abstract

Share and Cite

Sun, Q.; Wang, X.; Yan, C.; Zhang, X. VQ-InfraTrans: A Unified Framework for RGB-IR Translation with Hybrid Transformer. Remote Sens. 2023, 15, 5661. https://doi.org/10.3390/rs15245661

Sun Q, Wang X, Yan C, Zhang X. VQ-InfraTrans: A Unified Framework for RGB-IR Translation with Hybrid Transformer. Remote Sensing. 2023; 15(24):5661. https://doi.org/10.3390/rs15245661

Chicago/Turabian Style: Sun, Qiyang, Xia Wang, Changda Yan, and Xin Zhang. 2023. "VQ-InfraTrans: A Unified Framework for RGB-IR Translation with Hybrid Transformer." Remote Sensing 15, no. 24: 5661. https://doi.org/10.3390/rs15245661

APA Style: Sun, Q., Wang, X., Yan, C., & Zhang, X. (2023). VQ-InfraTrans: A Unified Framework for RGB-IR Translation with Hybrid Transformer. Remote Sensing, 15(24), 5661. https://doi.org/10.3390/rs15245661