Dynamic Detection of Forest Change in Hunan Province Based on Sentinel-2 Images and Deep Learning

Abstract

1. Introduction

2. Materials and Methods

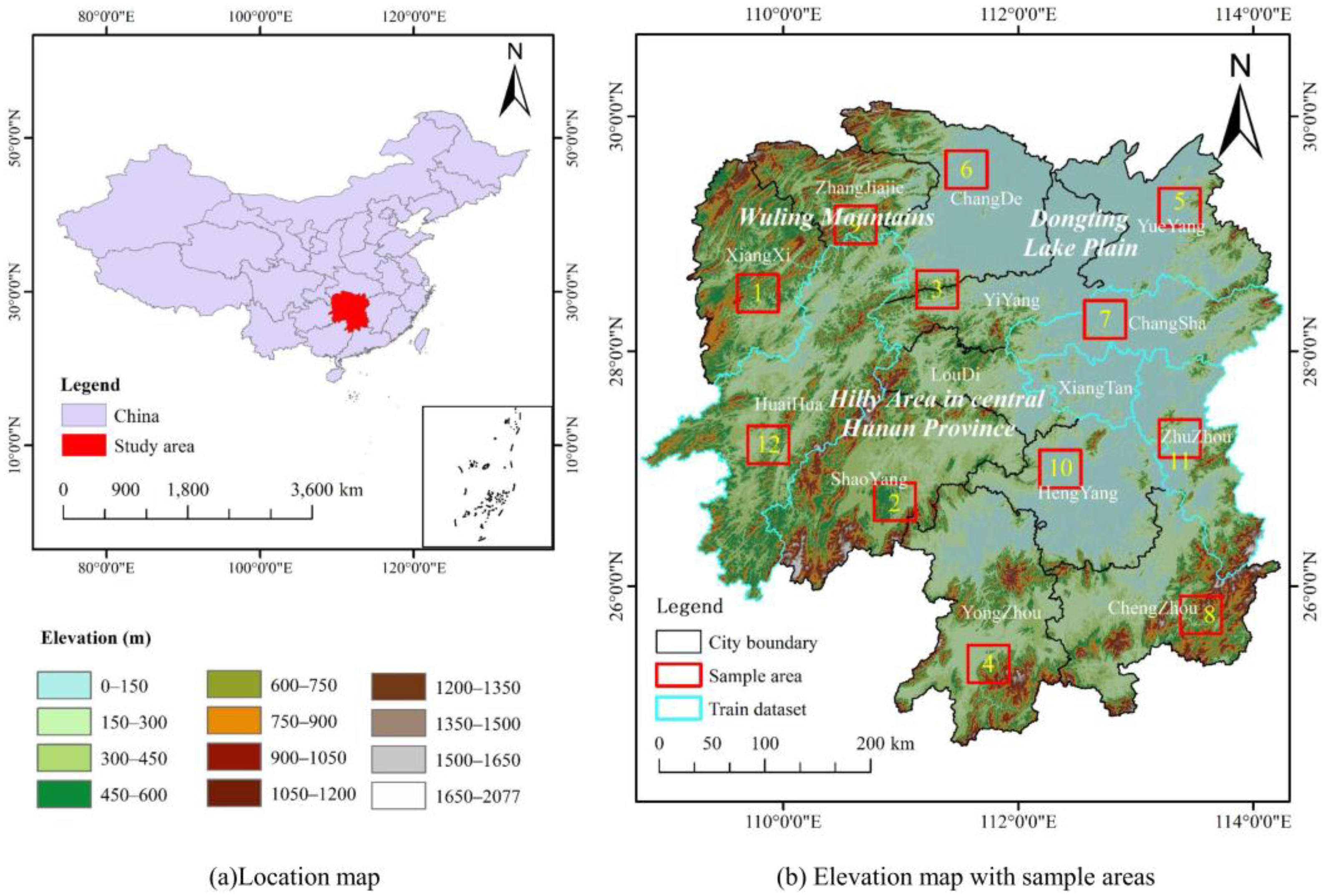

2.1. Study Area

2.2. Data and Preprocessing

2.2.1. Data Sources

2.2.2. Dataset

2.2.3. Data Augmentation

2.3. Change Detection Model

2.3.1. The U-Net++ Model

2.3.2. Loss Function

2.3.3. Accuracy Evaluation Metrics

2.4. Dynamic Detection of Forest Change

2.4.1. Annual Forest Change Detection

2.4.2. Quarterly Forest Change Detection

3. Results

3.1. Change Detection Results in the Dataset

3.2. Annual Forest Change Detection Results

3.3. Quarterly Forest Change Dynamics Detection and Mapping

4. Discussion

4.1. Performance Evaluation of Forest Change Detection Models

4.2. Performance Evaluation of Annual Forest Change Detection

4.3. The Advantages of Quarterly Forest Change Dynamics Detection

4.4. Outlook

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Data Series | Name of Data | Data Source | Spatial Resolution (m) | Time |
|---|---|---|---|---|
| Remote sensing data | Sentinel-2 L1C | European Space Agency (ESA) | 10 | 1 January 2017–31 March 2017, 1 April 2017–30 June 2017, 1 July 2017–30 September 2017, 1 October 2017–31 December 2017, 1 January 2018–31 March 2018, 1 April 2018–30 June 2018, 1 July 2018–30 September 2018, 1 October 2018–31 December 2018, 1 January 2019–31 March 2019, 1 April 2019–30 June 2019, 1 July 2019–30 September 2019, 1 October 2019–31 December 2019, 1 January 2020–15 April 2020, 16 April 2020–30 June 2020, 1 July 2020–30 September 2020, 1 October 2020–31 December 2020, 1 January 2021–31 March 2021, 1 April 2021–30 June 2021, 1 July 2021–30 September 2021, 1 October 2021–31 December 2021 |
| Vector data | Woodland Resources Map | Academy of Forestry Inventory and Planning, State Forestry Administration, P.R. China | / | 2020 |
| Vector data | Administrative boundary | China Earth System Science Data Sharing Network | / | 2016 |

| Dataset | Train | Validation | Test | All |
|---|---|---|---|---|
| Number of samples | 1148 | 144 | 145 | 1437 |
| Percentage of positive sample area (%) | 1.05 | 0.94 | 1.26 | 1.06 |
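
As a quick sanity check on the split reported in this table, the sketch below recomputes each subset's share of the 1437 samples and the sample-weighted percentage of positive (changed) area. It assumes every sample tile covers the same number of pixels, which the table does not state, and the variable names are illustrative only.

```python
# Sample counts and positive-area percentages copied from the dataset table.
counts = {"train": 1148, "val": 144, "test": 145}
positive_pct = {"train": 1.05, "val": 0.94, "test": 1.26}

total = sum(counts.values())

# Share of all samples that falls in each subset.
shares = {name: n / total for name, n in counts.items()}

# Sample-weighted mean of the positive-area percentage.
# Assumption: all tiles have the same pixel area, so counts can act as weights.
weighted_pct = sum(counts[k] * positive_pct[k] for k in counts) / total

for name, share in shares.items():
    print(f"{name}: {share:.1%} of samples")
print(f"weighted positive area: {weighted_pct:.2f}%")
```

Under that assumption the split is roughly 80/10/10, and the weighted figure agrees with the 1.06% listed for the full dataset.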

| Model | Encoder | Loss | Precision | Recall | F1-score | Mean Time (min) |
|---|---|---|---|---|---|---|
| STANet | resnet18 | CELoss | 0.7081 | 0.6380 | 0.6712 | 1.55 |
| DeepLabV3+ | efficientnet-b0 | CELoss | 0.7714 | 0.7178 | 0.7437 | 12.35 |
| LinkNet | efficientnet-b0 | CELoss | 0.7854 | 0.7220 | 0.7524 | 0.86 |
| U-Net | efficientnet-b0 | CELoss | 0.7894 | 0.7415 | 0.7647 | 0.82 |
| U-Net++ | efficientnet-b0 | CELoss | 0.7954 | 0.7478 | 0.7709 | 0.89 |
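
The F1-score column in this table can be cross-checked against the precision and recall columns. The sketch below assumes the standard definition of F1 as the harmonic mean of precision and recall; the function name `f1_score` and the tolerance are illustrative choices, not taken from the article.

```python
# (model, precision, recall, reported F1) copied from the model comparison table.
ROWS = [
    ("STANet", 0.7081, 0.6380, 0.6712),
    ("DeepLabV3+", 0.7714, 0.7178, 0.7437),
    ("LinkNet", 0.7854, 0.7220, 0.7524),
    ("U-Net", 0.7894, 0.7415, 0.7647),
    ("U-Net++", 0.7954, 0.7478, 0.7709),
]


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Largest gap between recomputed and reported F1 over all rows.
max_gap = max(abs(f1_score(p, r) - reported) for _, p, r, reported in ROWS)

# Model with the highest recomputed F1.
best_model = max(ROWS, key=lambda row: f1_score(row[1], row[2]))[0]

for name, p, r, reported in ROWS:
    print(f"{name}: recomputed {f1_score(p, r):.4f}, reported {reported:.4f}")
```

The recomputed values match the reported ones to within rounding of the four-decimal inputs, and U-Net++ has the highest F1 of the five models.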

| Year | Number of Sample Areas | True Area of Change (km²) | Predicted Area of Change (km²) | Precision | Recall | F1-score |
|---|---|---|---|---|---|---|
| 2017 | 8 | 7.164 | 6.948 | 0.8091 | 0.7848 | 0.7968 |
| 2018 | 9 | 13.506 | 12.785 | 0.8587 | 0.8129 | 0.8352 |
| 2019 | 8 | 13.677 | 13.184 | 0.8051 | 0.7761 | 0.7904 |
| 2020 | 11 | 12.151 | 12.312 | 0.7494 | 0.7593 | 0.7543 |
| 2021 | 12 | 14.608 | 14.267 | 0.8388 | 0.8193 | 0.8289 |
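
To make the gap between true and predicted change area in this table easier to read, the sketch below computes the signed relative difference per year and over all five years. This is a derived illustration and not a metric reported in the article; the helper `relative_error` is a hypothetical name.

```python
# (year, true area in km^2, predicted area in km^2) copied from the annual table.
ANNUAL = [
    (2017, 7.164, 6.948),
    (2018, 13.506, 12.785),
    (2019, 13.677, 13.184),
    (2020, 12.151, 12.312),
    (2021, 14.608, 14.267),
]


def relative_error(true_area: float, predicted_area: float) -> float:
    """Signed relative difference of predicted vs. true changed area."""
    return (predicted_area - true_area) / true_area


# Per-year signed relative difference (negative means under-prediction).
per_year = {year: relative_error(t, p) for year, t, p in ANNUAL}

# Pooled totals over all sample areas and years.
total_true = sum(t for _, t, _ in ANNUAL)
total_pred = sum(p for _, _, p in ANNUAL)
overall = relative_error(total_true, total_pred)

for year, err in per_year.items():
    print(f"{year}: {err:+.2%}")
print(f"overall: {overall:+.2%}")
```

With these figures the predicted area is below the true area in every year except 2020, and the pooled shortfall is about 2.6%.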

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Xiang, J.; Xing, Y.; Wei, W.; Yan, E.; Jiang, J.; Mo, D. Dynamic Detection of Forest Change in Hunan Province Based on Sentinel-2 Images and Deep Learning. Remote Sens. 2023, 15, 628. https://doi.org/10.3390/rs15030628
