1. Introduction

Freshwater estuaries and wetlands are among the most productive and biodiverse ecosystems that provide beneficial services for people and wildlife. Along with socio-economic contributions to society such as fishing and recreation, wetlands improve the water quality of nearby water bodies, mitigate floods in coastal areas, sequester carbon, and maintain nutrient cycling from nearby agricultural areas [1]. In recent decades, a dramatic decline in wetlands has been observed due to the combined effects of climate change and human influence [2,3]. Wetland plants play one of the most important roles in the food chain and energy flow of wetlands. They provide critical habitat to diverse communities from bacteria to fish and birds, and they are seen as a biological indicator of wetlands’ health [4]. As a result of major hydrological changes, human interaction, urbanization, and the increase in agricultural areas, urban wetlands’ native species are often replaced with invasive plants, which have negative implications for the ecosystem conditions [5]. Therefore, a better understanding of the spatial distribution of wetland plants and their classification is critical for wetland monitoring and their sustainable development.

Satellite and airborne multispectral imagery have been widely used to collect spatial and temporal information about wetland ecosystems at different scales. While the use of Landsat [6,7,8] and Sentinel-2 imagery [9,10] is common and considered a standard approach in mapping wetland vegetation [11], their relatively coarse spatial resolution remains a challenge. Thus, high-resolution images (≤5 m spatial resolution) are attracting interest from geospatial scientists who have recognized the advantages of their spectral information for detecting small features [12]. To take advantage of high-resolution satellite sensors, the Commercial Small-satellite (Smallsat) Data Acquisition (CSDA) program provides a cost-effective means of monitoring wetlands [13]. Ref. [14] used RapidEye images to classify wetland vegetation communities in iSimangaliso Wetland Park with a high overall accuracy of 86%. Refs. [15,16] showed the great potential of high-resolution QuickBird and IKONOS imagery in mapping plant communities and monitoring invasive plants in the Hudson River estuary, achieving overall accuracies of 64.9–73.6% and 45–77%, respectively. High classification accuracies were reached in several studies where WorldView-2 was used [17,18]. Ref. [19] used WorldView-3 to classify wetland vegetation from the Millrace Flats Wildlife Management Area with a high overall accuracy of 93.33%. High accuracies were also achieved with WorldView-3 in studies conducted by [20] over the Mai Po Nature Reserve of Hong Kong (up to 94.4%), and by [21] over the Akgol wetland area of Konya, Turkey (up to 96%).

In recent years, the use of unmanned aerial vehicles (UAVs) for mapping and monitoring wetland plants has been seen as an economical way of obtaining remote sensing images at any desired time, and as a great addition to remote sensing research due to their very high (sub-meter) spatial resolution [22,23,24]. UAVs are also seen as an alternative to the tedious fieldwork required to conduct and assess supervised classification. Due to on-demand remote sensing capabilities and very high spatial resolution, UAV images can be used to validate airborne and satellite data with minimal disturbance to ecosystems [12,25]. With the development of UAV technology, the idea of fusing and combining satellite imagery with UAV data or UAV-derived information using different strategies has been steadily emerging since 2008. In a review of the scientific literature, ref. [25] identified four main strategies of synergy between UAV and satellite imagery used in more than a hundred peer-reviewed articles across different fields: data comparison, multiscale explanation, data fusion, and model calibration. In the ‘data comparison’ strategy, the complementary natures of UAV and satellite data, such as the larger extent of satellite imagery and the very fine spatial resolution of UAV imagery, are exploited for the identification of features. The ‘multiscale explanation’ strategy combines information acquired at different scales to interpret data by capturing regional spatial and temporal trends with satellites and extracting fine features with UAVs. The strongest synergy between UAV and satellite data is obtained in ‘data fusion’, where the different spatial, spectral, and temporal information of each data source is used to derive a new dataset. In the ‘model calibration’ strategy, UAV imagery is used to calibrate and validate satellite data, for instance, in the process of supervised classification. Furthermore, the study of [25] found that the synergy between UAV and satellite imagery was largely utilized in studies related to agriculture [26,27], forest [28], or land cover [29], and that the concept is under-exploited in wetland-related studies. Classification of wetland vegetation is mainly performed by combining WorldView-2 imagery and LiDAR data [30] or Landsat 8 OLI, ALOS-1 PALSAR, Sentinel-1, and LiDAR-derived topographic metrics [31,32], and only a handful of wetland-related studies have considered the UAV and satellite synergy. The approach was shown to be promising in the studies of [12,33], where UAV-derived information was combined with the WorldView-3 and RapidEye or fused with the sub-meter wideband multispectral satellite JL101K imagery, respectively. It is expected that UAV imagery will be progressively used in the process of data fusion with satellite data and/or for their calibration and validation.

The importance of machine learning techniques in the classification of wetland vegetation has been recognized for some time already. Machine learning techniques can handle non-linearity and complex training data that are typical for wetlands as well as complex relations between multi-source input data and estimation of parameters [25]. However, their performance cannot be easily generalized as the results depend on training data, input features, and number of classes. Methods such as random forest and decision trees [30,34], rule-based [21,35], support vector machine [12,18,20,21,36,37], k-Nearest Neighbor [17], and neural network [19,22] are now commonly used for different combinations of input features. For instance, ref. [12] considered texture, normalized difference vegetation index (NDVI), LiDAR, and digital elevation model (DEM) to improve classification accuracy in the UAV-WorldView-3 integrated approach using the SVM, while [19] reported that the principal component analysis (PCA)-based feature selection technique and vegetation indices were effective in improving classification accuracy using the rule-based method and SVM classifiers to classify WorldView-3 imagery.

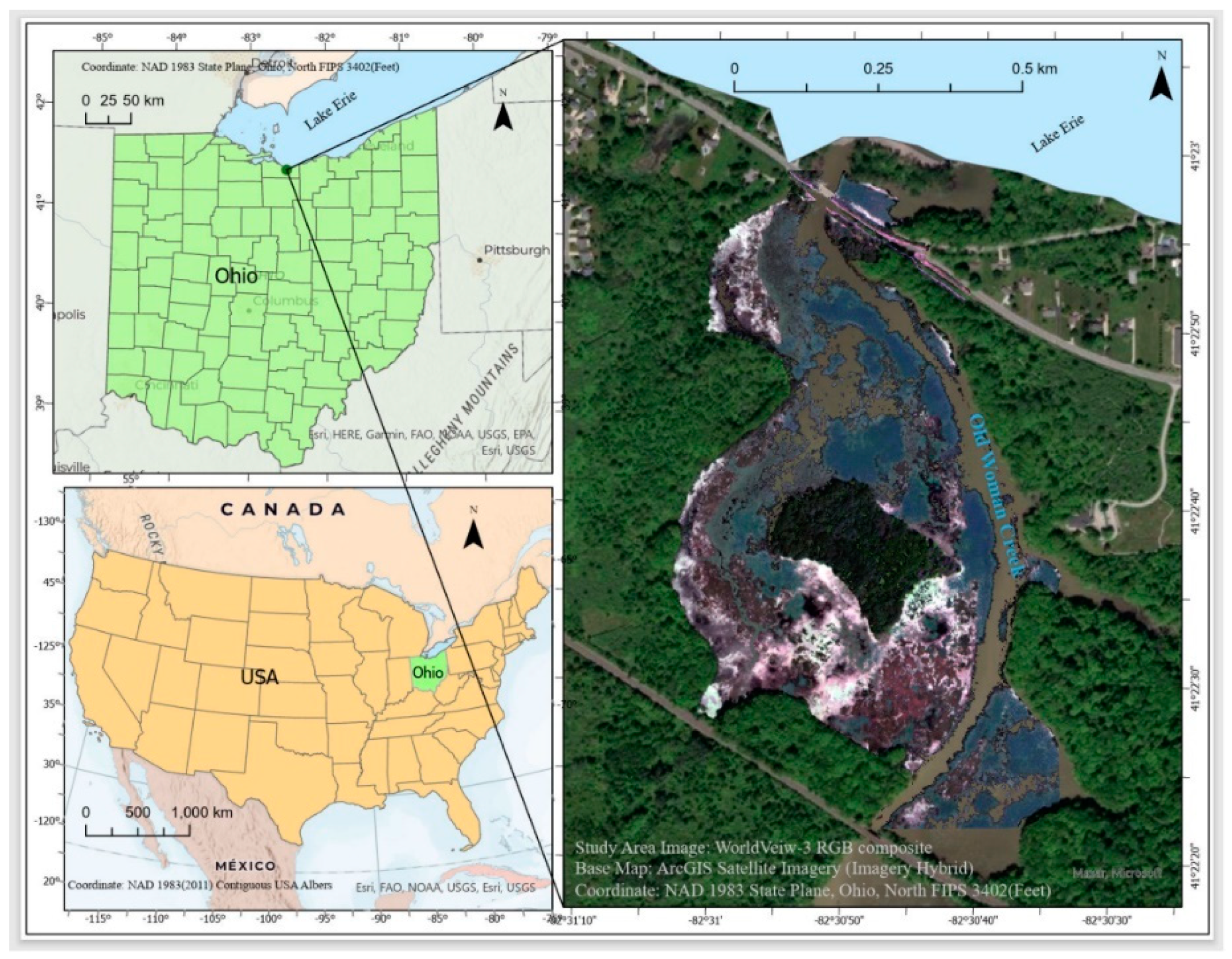

To demonstrate the ability of the very high spatial resolution of UAV-derived information in providing complementary information to the super spectral observation capabilities of WorldView-3, the current study underlines the importance of classifying wetland plants using machine learning techniques and the UAV-WorldView-3 integrated approach. The focus is on mapping five dominant wetland plants in the Old Woman Creek (OWC) estuary, USA, while using a UAV image to train and validate an array of input features and machine learning algorithms to classify WorldView-3 satellite imagery. The goal is to contribute toward filling research gaps in the classification of wetland plants based on the integration of UAV and high-resolution satellite imagery while selecting the best combinations of spectral information, including single bands, vegetation indices, and statistical features such as texture and principal components (PCs). Two classification approaches used in this study are: (1) pixel-based classification using maximum likelihood (ML), support vector machine (SVM), and neural network (NN) classifiers, and (2) object-based classification using naïve Bayes (NB), support vector machine (SVM), and k-nearest neighbors (k-NN) classifiers. The aim is to compare the performance of parametric (ML, NN, NB) and nonparametric classifiers (SVM, k-NN), and to compare the performance of the same classifier (SVM) for both the pixel- and object-based approaches.

4. Discussion

In this study, an array of machine learning classifiers in combination with different features is used to demonstrate the advantage of the integration of UAV and WorldView-3 imagery in mapping dominant plants in the OWC estuary. All classifiers perform well with an overall accuracy of over 85% (Figure 9). In general, the best results are achieved with the eight bands with or without additional features. The best-performing classifiers are pixel-based SVM and object-based NB with an overall accuracy of over 93% (93.76% and 93.30%, respectively). The performance of the traditional ML classifier is also strong with an overall accuracy of 92.29%, only marginally lower than that of pixel-based SVM and NB. The k-NN classifier demonstrates the weakest performance of all, while the NN and object-based SVM classifiers produce good results with overall accuracies slightly over 90% (Figure 10).

Our results are consistent with the study of [12], where the same UAV-WorldView-3 integration concept was used in the classification of nine habitat classes in estuarine environments of the Rachel Carson Reserve in North Carolina, USA. Their results showed a classification accuracy of 93% for the integration of UAV with WorldView-3. In their approach, ROIs were directly derived from UAV imagery, in which case they had to perform a validation of UAV-derived ROIs, while we used field training and validation samples to create UAV-derived ROIs. Similarly, ref. [33] found that the combination of UAV and JL101K multispectral imagery achieved a higher overall accuracy (82.8%) than the original JL101K image itself in the classification of vegetation communities of the karst wetland in Guilin, China, using various machine learning algorithms. To validate the UAV-WorldView-3 integration approach, we compared our results with the study of [64], who classified vegetation in the Old Woman Creek estuary based on the same WorldView-3 dataset. The UAV-WorldView-3 integration in our study yielded a considerably higher overall accuracy for all classifiers than the study of [64], who reported an overall accuracy of <78% for SVM for a smaller number of classes. Ref. [64] also found that the best overall accuracy in the classification of wetland plants in the Old Woman Creek estuary was reached by using eight bands of WorldView-3. When compared with the study of [22], where UAV-derived classifications were conducted over the Old Woman Creek estuary in 2017 for the same number of plant classes, our pixel-based classification results suggest that the fine spectral information of WorldView-3 imagery in combination with the UAV data produces higher overall accuracies than the UAV itself based on single bands. In other words, additional information, such as time series of NDVI and CHM, must be used in the UAV-driven classifications to exceed the overall accuracies reached in the current study. Both studies suggest that additional features are needed to improve object-based classification. Ref. [18] demonstrated that ML and SVM exhibited somewhat lower overall accuracy (75% and 71%, respectively) than observed in our study when single bands of WorldView-2 were used to detect 17 classes of freshwater marsh species/land-cover classes in the Wax Lake delta. As in the current study, ML and SVM did not perform significantly differently, and the errors of omission and commission varied widely for different combinations of single bands. Ref. [37] reported an overall accuracy of 75.77% for SVM when used over several Lake Erie wetlands in classification of land cover and plant species such as Phragmites. Generally, the classification of plant species represents a greater challenge than the classification of land cover in a complex wetland environment, suggesting that the overall accuracies achieved in our study are highly satisfactory.

Performance of pixel-based and object-based classifications for wetland vegetation classification varies in the literature [65,66,67]. However, the overall accuracy of object-based classification can be considerably affected by segmentation scale selection and thus pixel-based results are generally accepted as more reliable [67]. Based on our observation of the iteration results, we suggest that pixel-based classifiers reach a higher overall accuracy with fewer input features. This generally aligns with the study of [68]. It is worth mentioning that, in this study, pixel-based SVM reaches its best performance with the radial basis function (RBF) kernel, while object-based SVM performs best with the linear kernel. For the RBF kernel, C = 100 and gamma = 0.001 were defined as parameters, while for the linear kernel C = 2 was chosen. Several studies have used the RBF kernel effectively for both pixel-based and object-based classification [22,69].
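As a rough illustration of the two kernels named above, the sketch below evaluates the standard RBF kernel, exp(-gamma * ||x - y||^2), and the linear kernel, the dot product of x and y, on two made-up eight-band pixel spectra. Only the values C = 100, gamma = 0.001, and C = 2 come from the text. The function names, the example reflectances, and the use of NumPy are assumptions for illustration, and the software actually used for the classifications is not assumed here. C is a penalty term of the SVM optimization and does not enter the kernel itself, so it appears only as a named constant.

```python
import numpy as np

# Values reported in the text; everything else in this sketch is illustrative.
RBF_GAMMA = 0.001  # gamma of the RBF kernel (pixel-based SVM)
RBF_C = 100        # penalty parameter C used with the RBF kernel
LINEAR_C = 2       # penalty parameter C used with the linear kernel (object-based SVM)


def rbf_kernel(x, y, gamma=RBF_GAMMA):
    """Standard RBF kernel: K(x, y) = exp(-gamma * ||x - y||^2)."""
    diff = np.asarray(x, dtype=float) - np.asarray(y, dtype=float)
    return float(np.exp(-gamma * np.dot(diff, diff)))


def linear_kernel(x, y):
    """Linear kernel: K(x, y) = <x, y>."""
    return float(np.dot(np.asarray(x, dtype=float), np.asarray(y, dtype=float)))


# Two hypothetical eight-band pixel spectra (made-up reflectance values).
pixel_a = [0.03, 0.04, 0.06, 0.05, 0.04, 0.20, 0.35, 0.38]
pixel_b = [0.03, 0.05, 0.07, 0.06, 0.05, 0.15, 0.28, 0.30]

k_rbf = rbf_kernel(pixel_a, pixel_b)
k_lin = linear_kernel(pixel_a, pixel_b)
```

In a library such as scikit-learn, comparable settings would be written as `SVC(kernel="rbf", C=100, gamma=0.001)` and `SVC(kernel="linear", C=2)`. Whether the resulting models match those of this study depends on feature scaling and other settings that are not reproduced here.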

No consistent trend is found in this study with respect to which additional features significantly improve the overall accuracy of the classifiers. PC1 and PC2 do not make any major impact on the pixel-based classifications, while, for the object-based classifications, PC2 contributes to the results when combined with six bands. The importance of vegetation indices, NDVI in particular, is evident for almost all classifiers. Several studies demonstrated the effectiveness of NDVI derived from WorldView-3/2 near-infrared bands. Each near-infrared band of WorldView-3 (N1 and N2) covers a wide spectral range (135 nm and 182 nm, respectively) that is essential in providing useful information in classification [17,70]. While some researchers used only the N2 band for calculating NDVI and found it effective for improving classification accuracy [71,72,73], refs. [70] and [17] calculated two NDVIs using N1 and N2 bands separately and found that both effectively increased the classification accuracy. In this study, the best performance of classifiers was reached when the NDVI and variance images were based on the N band (average of N1 and N2). Variance is an important input for both ML and NB. Given that both ML and NB are parametric and Bayesian classifiers, this common feature may be related to their statistical structure. No other GLCM information improved classification. Any increase in the number of features beyond those shown in the tables resulted in reduced overall accuracy, which is consistent with some other studies [74]. Perhaps a higher number of features makes the classification problem too complex for the classifiers to perform at their best [75].
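The NDVI variant described above, in which the near-infrared term N is the average of N1 and N2, can be sketched as follows. This is a minimal illustration under assumptions: it uses the standard definition NDVI = (N - R) / (N + R), and the function name, array layout, and example reflectance values are hypothetical and are not taken from the study's processing chain.

```python
import numpy as np


def ndvi_from_averaged_nir(red, nir1, nir2):
    """NDVI = (N - R) / (N + R), where N is the mean of the two NIR bands."""
    red = np.asarray(red, dtype=float)
    nir = (np.asarray(nir1, dtype=float) + np.asarray(nir2, dtype=float)) / 2.0
    denom = nir + red
    # Pixels with a zero denominator are left as NaN instead of dividing by zero.
    ndvi = np.full(denom.shape, np.nan)
    np.divide(nir - red, denom, out=ndvi, where=denom != 0)
    return ndvi


# Hypothetical 2 x 2 reflectance rasters (made-up values).
red = [[0.05, 0.10], [0.00, 0.20]]
nir1 = [[0.40, 0.30], [0.00, 0.20]]
nir2 = [[0.50, 0.30], [0.00, 0.20]]

ndvi = ndvi_from_averaged_nir(red, nir1, nir2)
```

Dense vegetation gives values close to 1, and a pixel with equal red and near-infrared reflectance gives 0. The same averaged N band could serve as the input to a texture (variance) image, which is not shown here.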

In the current study, pixel-based SVM exhibits relatively low errors of omission and commission for most of the plant types, except for Phragmites. We surmise that the small size of Phragmites patches contributes to their low separability. Phragmites are mainly misclassified as cattail, most likely due to the similarity of their spectral signatures (Figure 5). It is suggested that a very high spatial resolution of UAV is required to detect this plant in the OWC estuary where the error of omission was found to be as low as 1.59% [22,76]. The use of temporal data was also found to be important to map Phragmites. Several studies confirmed that Phragmites reflect radiation that is distinguishable from other species during the end of the summer or early summer, which helps in detecting Phragmites and other invasive species [17,76,77].
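For readers less familiar with the accuracy measures used in this discussion, the sketch below derives overall accuracy and the per-class errors of omission and commission from a confusion matrix. The matrix counts are invented for illustration and are not results of this study. The function name and the convention that rows hold reference classes and columns hold mapped classes are assumptions, as is the requirement that every class has at least one reference sample and one mapped sample.

```python
import numpy as np


def accuracy_report(confusion):
    """Rows = reference (ground truth) classes, columns = mapped classes."""
    cm = np.asarray(confusion, dtype=float)
    correct = np.diag(cm)
    overall_accuracy = correct.sum() / cm.sum()
    # Omission: reference samples of a class that were mapped as another class.
    omission = 1.0 - correct / cm.sum(axis=1)
    # Commission: samples mapped as a class that belong to another class.
    commission = 1.0 - correct / cm.sum(axis=0)
    return overall_accuracy, omission, commission


# Hypothetical 3-class confusion matrix (counts are made up).
example = [
    [48, 2, 0],
    [6, 40, 4],
    [1, 3, 46],
]
oa, oe, ce = accuracy_report(example)
```

With this convention, a class can have a low error of omission and still a high error of commission when many samples of other classes are assigned to it, which is why both measures are reported alongside overall accuracy.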

Finally, pixel-based SVM is recommended for mapping wetland plants in settings similar to the OWC estuary with the UAV-WorldView-3 integration approach because this classification method (1) exhibits the best overall performance based on eight bands with no other additional features, (2) generally produces the lowest OEs and CEs for most of the plant types, (3) is the least variable over different combinations of input features, and (4) is time- and effort-efficient when compared with the object-based classification methods [78]. Overall, the results demonstrate that UAV can be highly effective in training and validating satellite imagery in the classification of wetland vegetation using various classifiers. We anticipate that the approach will become widely used in the future and that this study produces solid results to encourage further research.

Although the in-situ sampling points were placed within the relatively homogeneous strata (Figure 3 and Figure 4), species identification of dominant plants in ROIs of the UAV and WorldView-3 images resulted in some uncertainties due to the variability of spectral information acquired over each ROI. In particular, the clustering of UAV pixels (the Grow ROIs function) used in this study does not necessarily match the real distribution of each dominant species. As the function is based on a similar spectral signal, the automatic detection may select different species that share similar biochemical properties [79]. However, given that each cluster was relatively small (maximum nine UAV pixels) and clearly visible in the field and on the UAV image, the errors introduced by the function are minor in our study. The selection of WorldView-3 ROIs over the UAV clustered pixels, however, may impact the species identifications to a somewhat greater extent, as each WorldView-3 pixel covers a larger area. Combining the two processes, several factors are expected to alter the ROI’s reflectance of a selected plant species acquired over a complex wetland ecosystem such as the OWC estuary, and they are mainly related to: (1) the presence of a certain percentage of water, soil, and/or other-than-dominant plant species, as observed in some sampling areas; (2) the slight differences in the health status of plants from the same species, as observed in some locations; (3) the flowering stage of some individual plants; (4) differences in intrinsic properties and genotype of each individual plant; and (5) ill-posed surface reflectance retrieval where some individuals of different species may have the same or a very similar spectral signature [79]. The leaf spectral information, collected during the field campaign for each dominant plant species, confirms our observations and expectations; the variability of spectral reflectance within the same species is relatively high for all plant types (Figure 5). Some uncertainties in this study may be related to the inability to identify hidden thin patches of Phragmites under the dense tree canopies near the estuary, and to some shadows created by tree canopies despite the masking of forested area. We do not expect any major uncertainties related to the field sampling design of ROIs or delineation of ROIs on the UAV image as the in-situ sampling points were placed within the homogeneous strata where the spectral variability of a given plant type was not considerable and soil exposure was minimal [79]. The segmentation process, although carefully parametrized, could cause some minor errors and confusion of Phragmites and cattail due to their similar spectral signatures. The selections of segmentation scale and parameters have an impact on the image-object identification for subsequent input into a model [67], and thus we suggest further exploring the impacts of segmentation scale and parameters on model performance. The cross-validation method, applied to compensate for the relatively low (but still sufficient) number of samples per class, minimizes the potential uncertainties of the results due to the sampling design.

Despite the fact that UAV technology has numerous advantages such as high spatial resolution, the ability to acquire UAV imagery at a time specified by a user, low labor intensity, cost-effectiveness, and reduced data variability due to the human factor, more work has to be performed in data standardization, processing, and results interpretation to better evaluate the classification and change detection processes [80,81,82]. While satellite data assure standardized data formats and the reliable pre-processing and inter-comparison of satellite products, protocols for acquisition and pre-processing of UAV imagery are user specific and are not consistent among users. Common causes of errors such as misregistration and inconsistency of reflectance measurements between different UAV sensors, and measurement uncertainties caused by different qualities of UAV sensors, are some major limitations related to the interoperability and intercalibration that scientists are facing.