An Unsupervised Saliency-Guided Deep Convolutional Neural Network for Accurate Burn Mapping from Sentinel-1 SAR Data

Abstract

1. Introduction

- Proposing a fully automatic framework for unsupervised burned area mapping.

- Developing a saliency-guided network for a particular case of burned area detection using Sentinel-1 C-band intensity data.

- Investigating the potential of DCNN for saliency-guided classification methods.

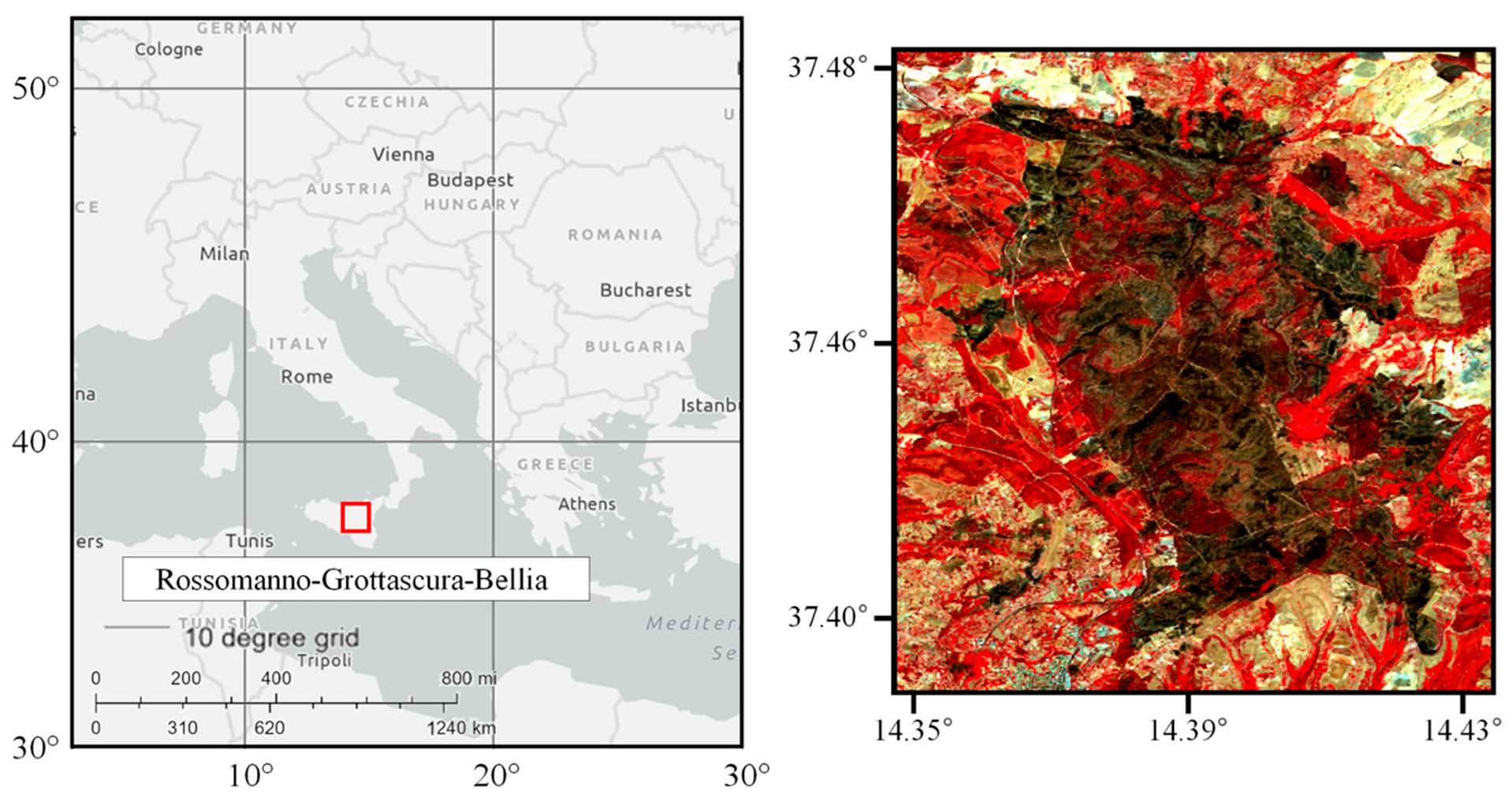

2. Study Areas and Data

2.1. Study Area

2.2. Sentinel-1 SAR Data

2.3. Reference Data

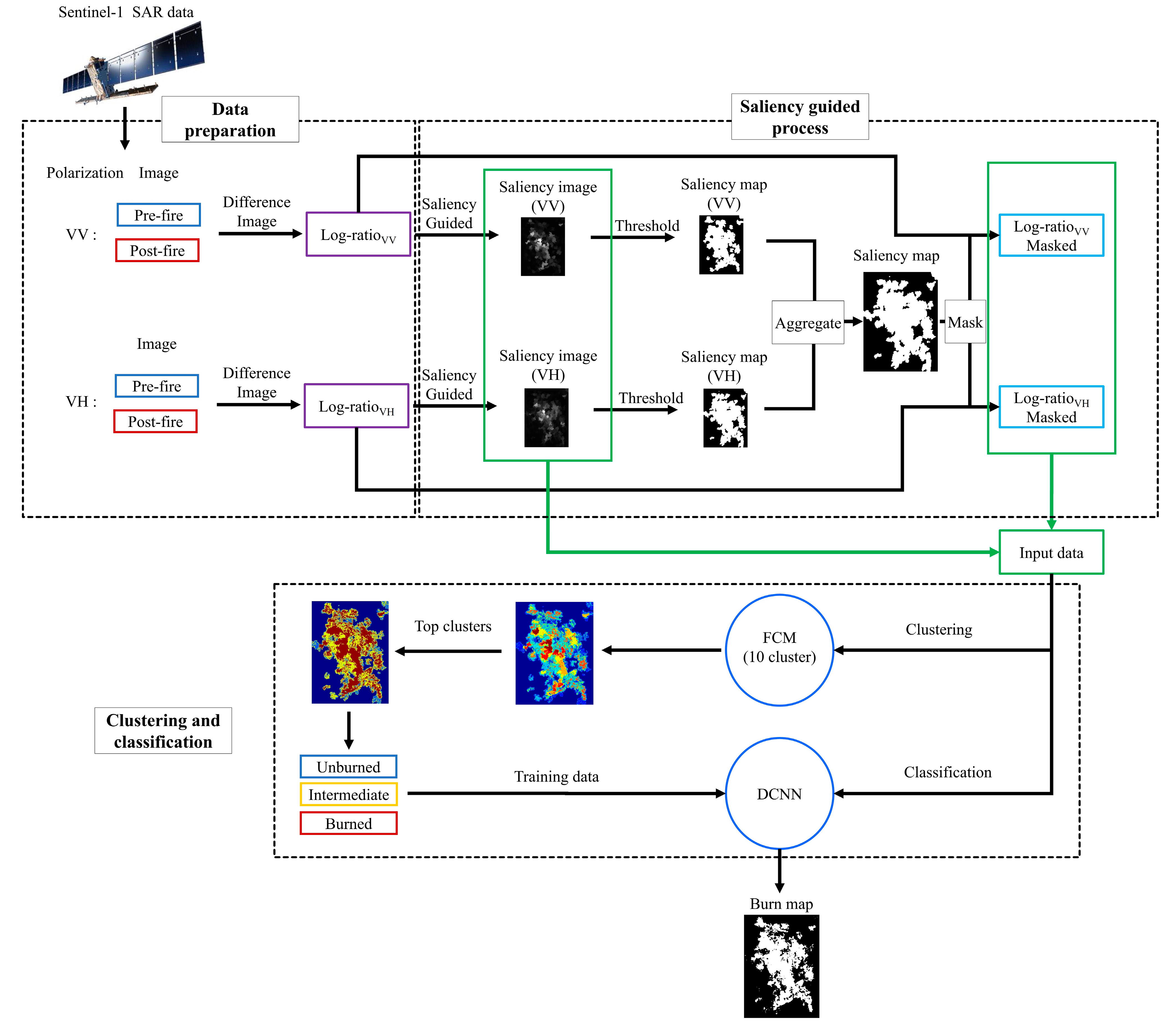

3. Proposed Methodology

3.1. Log-Ratio
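As a rough illustration of the operator this subsection is named after, the sketch below computes a per-pixel absolute log-ratio between a pre-fire and a post-fire intensity image. It is a minimal example of the standard log-ratio used in SAR change detection, not necessarily the exact formulation used in this paper. The function name `log_ratio`, the `eps` offset, and the toy values are assumptions made for illustration only.

```python
import math


def log_ratio(pre, post, eps=1e-6):
    """Absolute log-ratio per pixel for two equally sized 2-D intensity grids.

    `eps` is a hypothetical small offset that avoids log(0) and division by zero.
    """
    return [
        [abs(math.log((b + eps) / (a + eps))) for a, b in zip(row_pre, row_post)]
        for row_pre, row_post in zip(pre, post)
    ]


# Toy linear-scale intensities (illustrative values, not Sentinel-1 data).
pre_fire = [[0.20, 0.20], [0.20, 0.20]]
post_fire = [[0.20, 0.10], [0.40, 0.20]]

change = log_ratio(pre_fire, post_fire)
for row in change:
    print([round(v, 3) for v in row])
```

Unchanged pixels map to values near zero, while a halving or a doubling of backscatter both map to roughly ln 2, so the absolute value treats decreases and increases symmetrically.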

3.2. Salient Region Detection

3.3. Fuzzy C-Means Clustering

3.4. Deep Convolutional Neural Network

3.5. Evaluation Parameters

4. Results

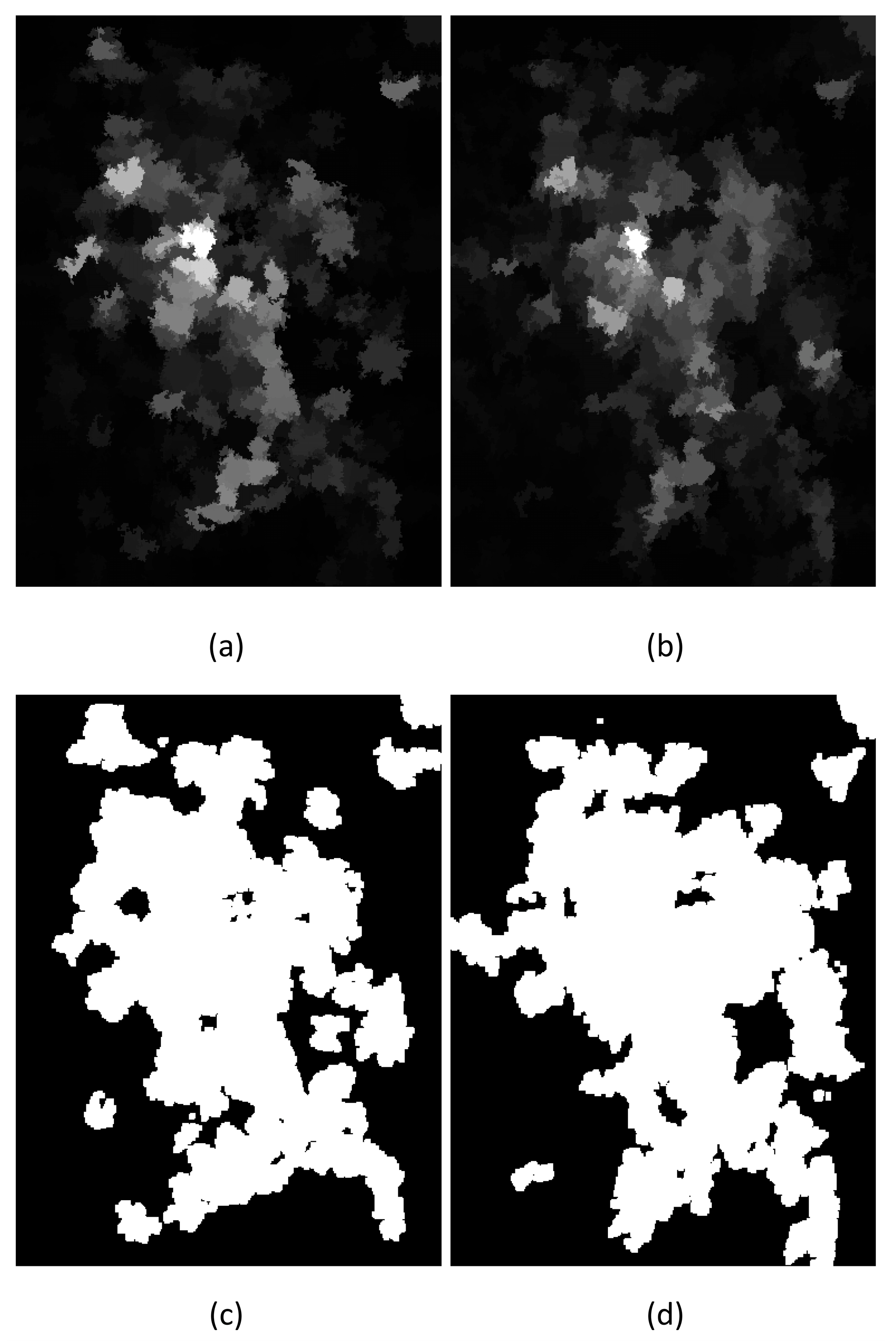

4.1. Saliency-Guided Image and Map

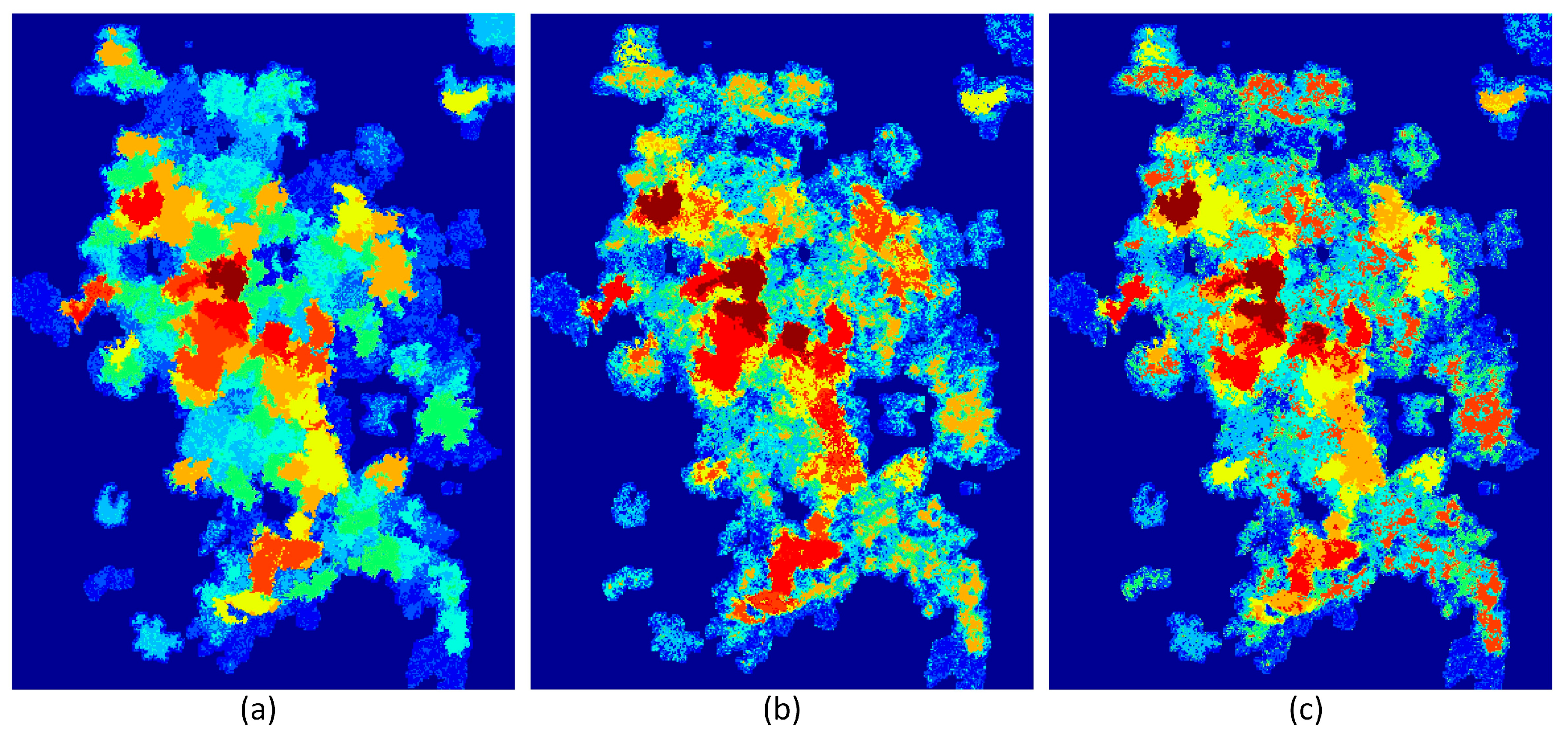

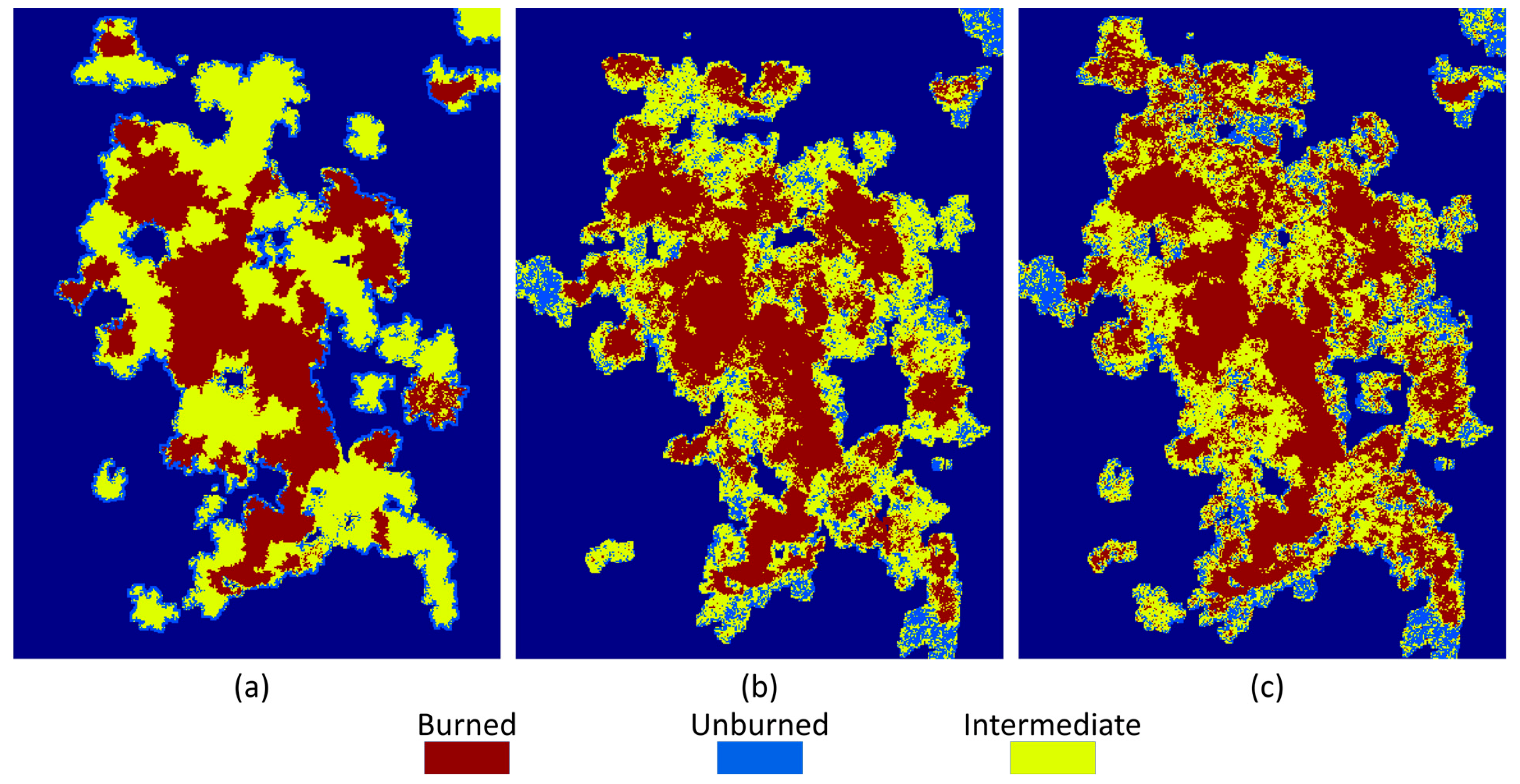

4.2. Fuzzy C-Means Clustering

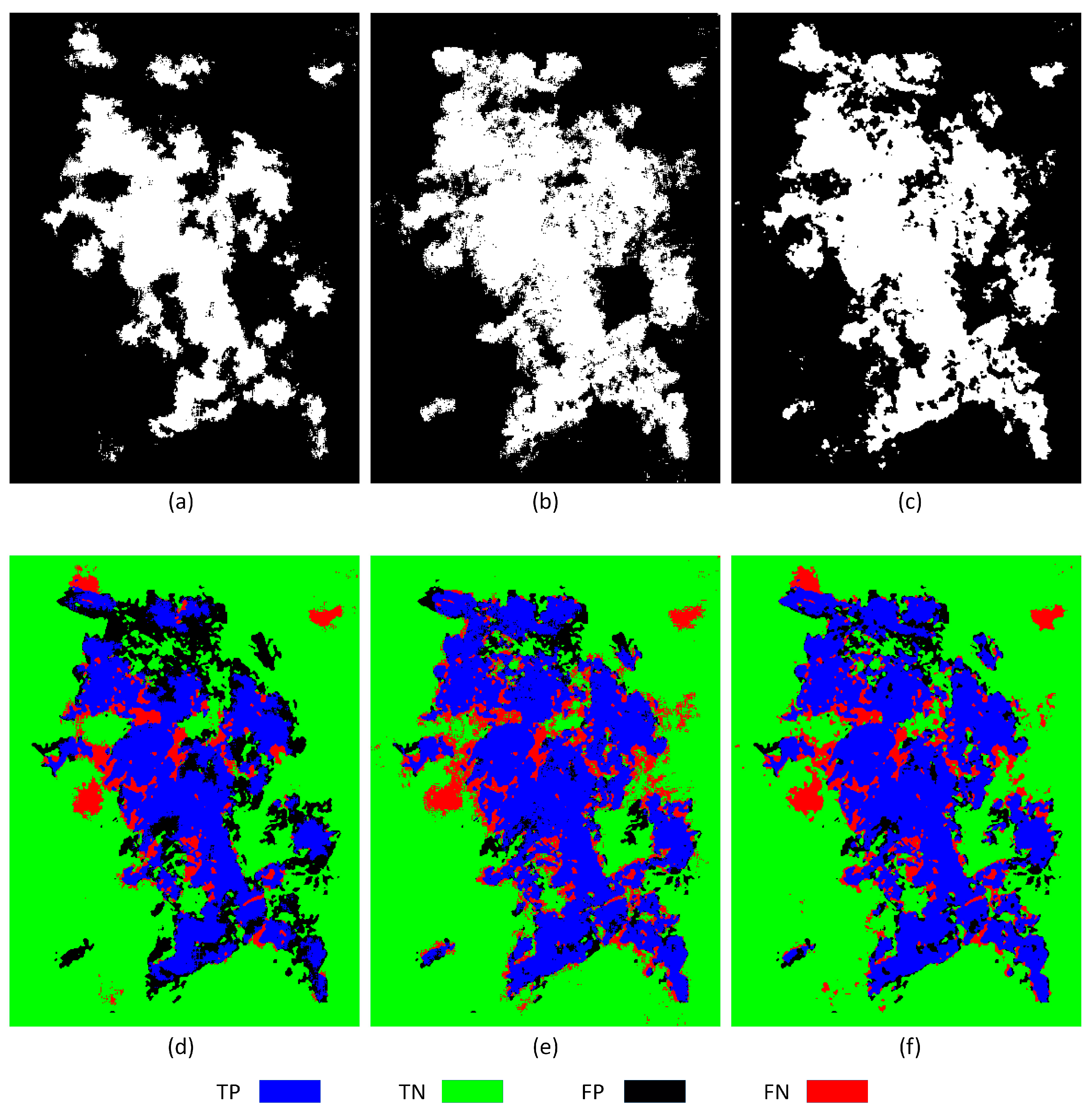

4.3. Deep Convolutional Neural Network

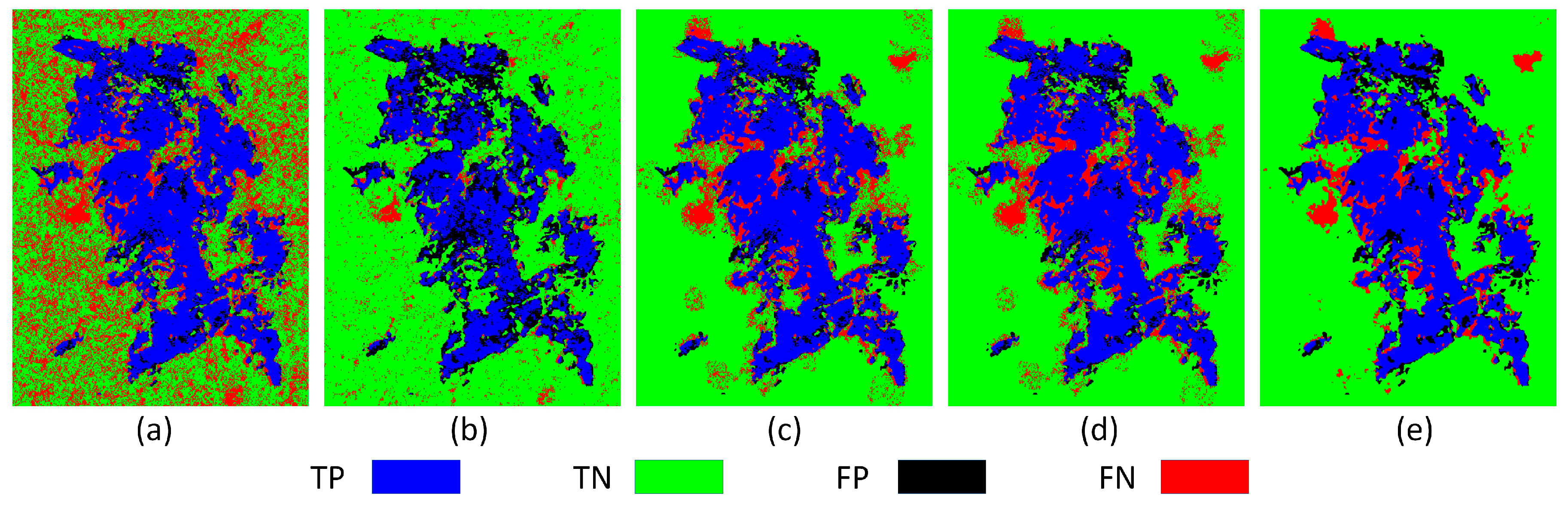

4.4. Comparing to Other Methods

4.5. Efficiency Analysis

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Orbit | Date | Period |
|---|---|---|
| Descending | 24 July 2017 | Pre-fire |
| Ascending | 24 July 2017 | Pre-fire |
| Ascending | 29 July 2017 | Pre-fire |
| Descending | 30 July 2017 | Pre-fire |
| Descending | 17 August 2017 | Post-fire |
| Ascending | 17 August 2017 | Post-fire |
| Ascending | 22 August 2017 | Post-fire |
| Descending | 23 August 2017 | Post-fire |

| Input Polarization | Precision (P) | Recall (R) | F1-score (F1) | Overall Accuracy (OA) |
|---|---|---|---|---|
| VV | 59.33% | 79.67% | 68.01% | 82.75% |
| VH | 84.58% | 75.55% | 79.81% | 86.78% |
| Both | 82.33% | 78.75% | 80.50% | 87.67% |
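The F1 column in the table above can be checked against the P and R columns. This short sketch assumes that P and R denote precision and recall and that F1 is their harmonic mean, which is the usual definition. The helper name `f1_score` is introduced here for illustration only.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (both in the same unit, here percent)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (P, R) pairs copied from the table above, in percent.
rows = {
    "VV": (59.33, 79.67),
    "VH": (84.58, 75.55),
    "Both": (82.33, 78.75),
}

f1 = {name: f1_score(p, r) for name, (p, r) in rows.items()}
for name, value in f1.items():
    print(f"{name}: F1 = {value:.2f}%")
```

Under that assumption, the recomputed values agree with the reported F1 figures to two decimal places.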

| Model | Precision (P) | Recall (R) | F1-score (F1) | Overall Accuracy (OA) |
|---|---|---|---|---|
| FCM | 85.91% | 48.75% | 62.20% | 67.74% |
| FCM-DCNN | 62.33% | 79.17% | 69.75% | 83.29% |
| SG-FCM-SVM | 84.53% | 72.34% | 77.96% | 85.23% |
| SG-FCM-DNN | 84.89% | 72.96% | 78.47% | 85.61% |
| SG-FCM-DCNN | 82.33% | 78.75% | 80.50% | 87.67% |

| Model | Processing Time (s) |
|---|---|
| Saliency-guided | 114 ± 27 (for both polarizations) |
| Fuzzy c-means | 18 ± 5 |
| SVM | 2 ± 1 |
| DNN | 21 ± 6 |
| DCNN | 65 ± 13 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Radman, A.; Shah-Hosseini, R.; Homayouni, S. An Unsupervised Saliency-Guided Deep Convolutional Neural Network for Accurate Burn Mapping from Sentinel-1 SAR Data. Remote Sens. 2023, 15, 1184. https://doi.org/10.3390/rs15051184
