Text Semantic Fusion Relation Graph Reasoning for Few-Shot Object Detection on Remote Sensing Images

Abstract

1. Introduction

- 1.

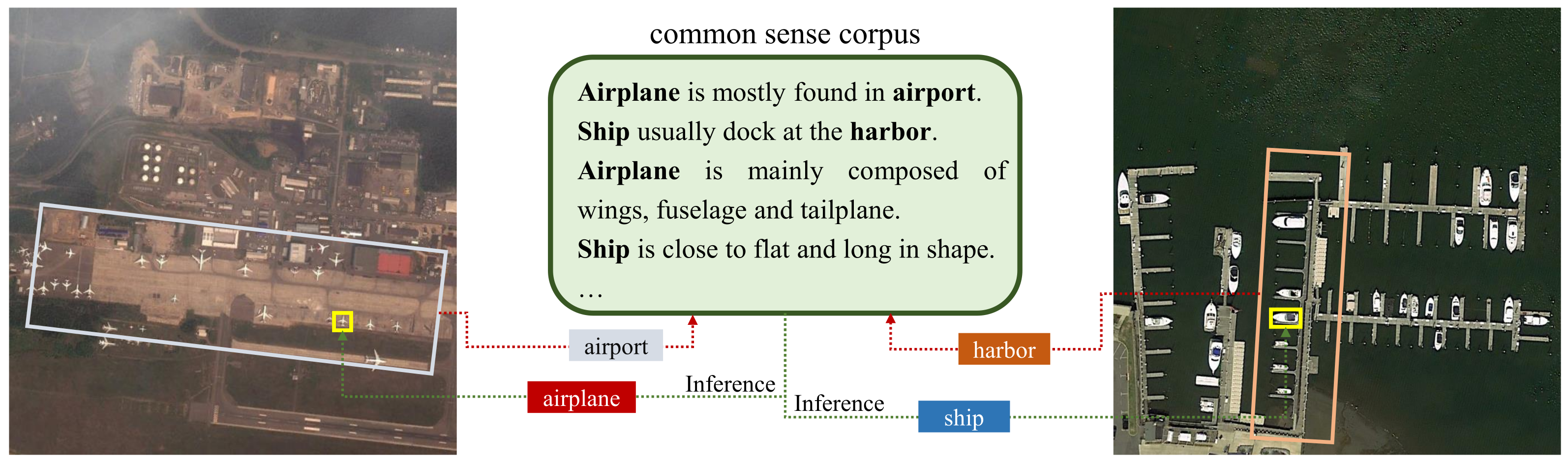

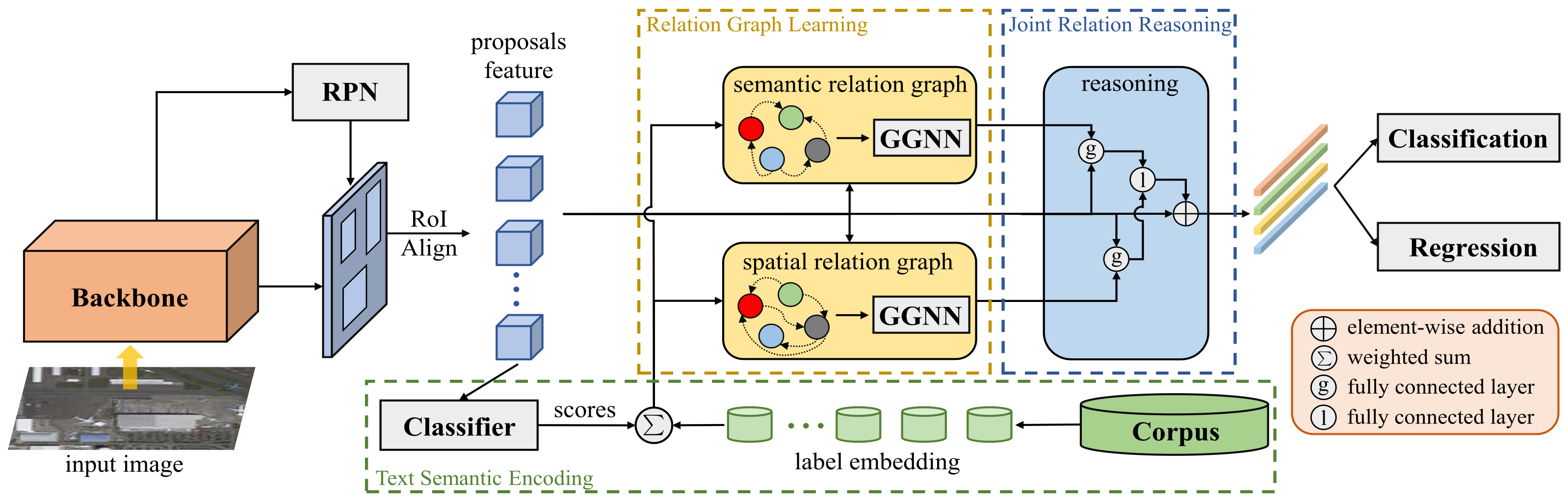

- We build a corpus consisting of a large amount of natural language for the NWPU VHR-10 and DIOR datasets, respectively, that contains descriptions of semantic information about the appearance, attributes, and potential relationships of all classes. Additional common-sense guidance for scarce novel classes is provided by encoding the word vectors of the classes in the corpus and aligning the features in the text and image domains. To the best of our knowledge, this is the first work to explore this method for few-shot object detections on RSI;

- 2.

- A relation graph learning module is designed, where the nodes of the graph consist of each region proposal and the edges compute the semantic and spatial relations that exist between the proposals. With the information interaction of GGNN, the potential semantic and spatial information of each proposal is learned while enhancing the association of base and novel classes;

- 3.

- A joint relation reasoning module is designed to actively combine visual and relational features to simulate the reasoning process. This reduces the dependence of the model on image data and provides more discriminative features for novel classes. The whole model is more interpretable while improving the performance of few-shot detection;

- 4.

- Our TSF-RGR achieves consistent performance on both public benchmark datasets. It outperforms the leading method in the same domain for a wide range of shots settings, especially in the case of extremely sparse shots.
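The relation graph described in the second contribution can be illustrated with a small sketch. It assumes a generic GRU-gated propagation step over a proposal graph; the edge definitions (cosine similarity for the semantic relation, box-centre distance for the spatial relation), the equal 0.5/0.5 mixing of the two adjacencies, the random weights, and all function names are illustrative assumptions rather than the formulation used in TSF-RGR.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def semantic_adjacency(feats):
    """Assumed semantic relation: row-normalised, non-negative cosine similarity."""
    unit = feats / (np.linalg.norm(feats, axis=1, keepdims=True) + 1e-8)
    sim = np.clip(unit @ unit.T, 0.0, None)
    np.fill_diagonal(sim, 0.0)                      # no self-loops
    return sim / (sim.sum(axis=1, keepdims=True) + 1e-8)

def spatial_adjacency(boxes):
    """Assumed spatial relation: closer box centres receive larger weights."""
    centres = np.stack([(boxes[:, 0] + boxes[:, 2]) / 2,
                        (boxes[:, 1] + boxes[:, 3]) / 2], axis=1)
    dist = np.linalg.norm(centres[:, None, :] - centres[None, :, :], axis=-1)
    w = np.exp(-dist / (dist.mean() + 1e-8))
    np.fill_diagonal(w, 0.0)
    return w / (w.sum(axis=1, keepdims=True) + 1e-8)

def ggnn_step(h, adj, p):
    """One GRU-gated update of every node state from its neighbours."""
    m = adj @ h                                     # aggregated neighbour message
    z = sigmoid(m @ p["Wz"] + h @ p["Uz"])          # update gate
    r = sigmoid(m @ p["Wr"] + h @ p["Ur"])          # reset gate
    h_new = np.tanh(m @ p["Wh"] + (r * h) @ p["Uh"])
    return (1.0 - z) * h + z * h_new

def propagate(feats, boxes, steps=2, seed=0):
    """Run a few propagation steps with random (untrained) weights."""
    rng = np.random.default_rng(seed)
    d = feats.shape[1]
    p = {k: rng.normal(scale=0.1, size=(d, d))
         for k in ("Wz", "Uz", "Wr", "Ur", "Wh", "Uh")}
    adj = 0.5 * semantic_adjacency(feats) + 0.5 * spatial_adjacency(boxes)
    h = feats
    for _ in range(steps):
        h = ggnn_step(h, adj, p)
    return h

# Toy input: 4 proposals with 8-dim features and (x1, y1, x2, y2) boxes.
feats = np.random.default_rng(1).normal(size=(4, 8))
boxes = np.array([[0, 0, 10, 10], [5, 5, 20, 20],
                  [50, 50, 60, 60], [0, 40, 10, 55]], dtype=float)
print(propagate(feats, boxes).shape)
```

Each proposal keeps its feature dimensionality after propagation, so the relation-enhanced states can be passed to any later stage that expects per-proposal vectors.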

2. Related Work

2.1. Object Detection in Remote Sensing Images

2.2. Few-Shot Object Detection

2.3. Knowledge Reasoning

3. Preliminary Knowledge

3.1. Model Formulation

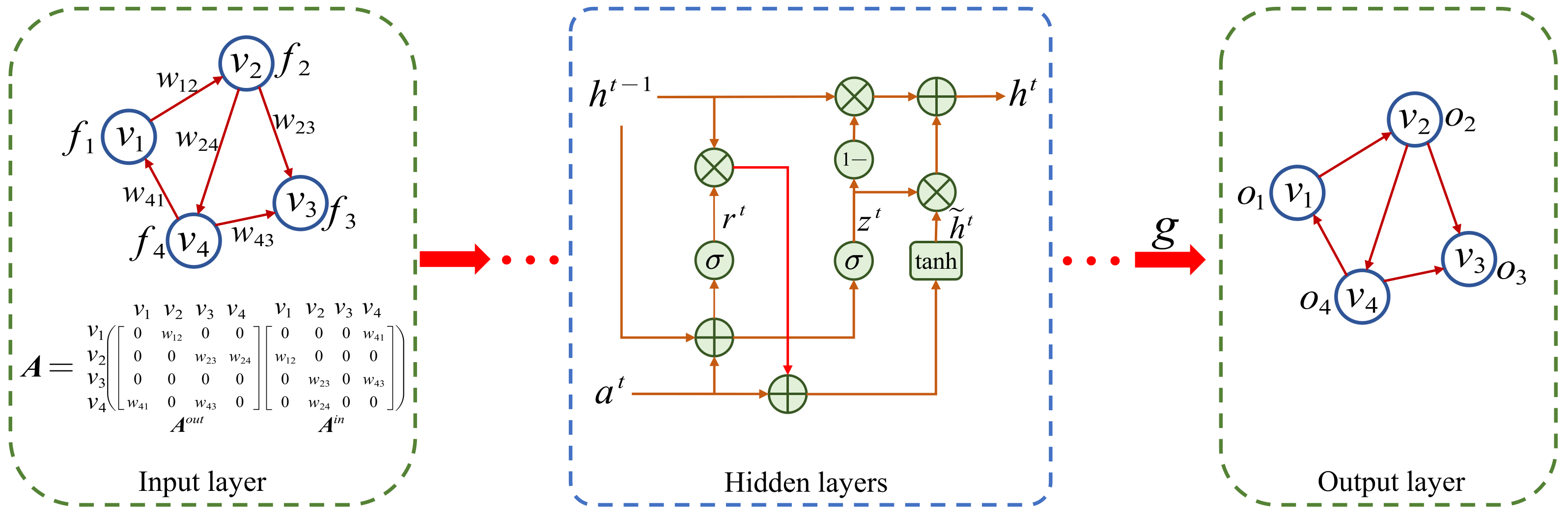

3.2. Gated Graph Neural Networks

4. Proposed Method

4.1. Text Semantic Encoding

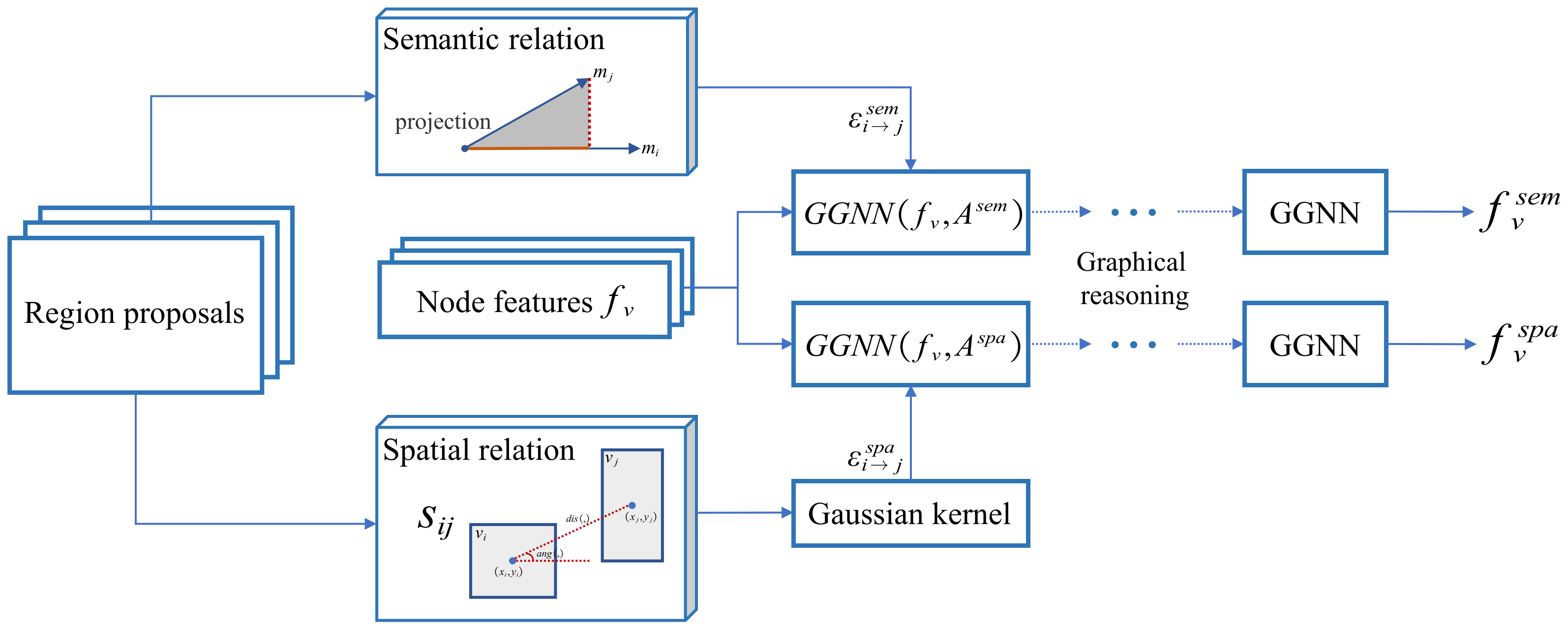

4.2. Relation Graph Learning

4.3. Joint Relation Reasoning

4.4. Two-Stage Fine-Tuning

5. Experiments and Discussion

5.1. Experimental Settings

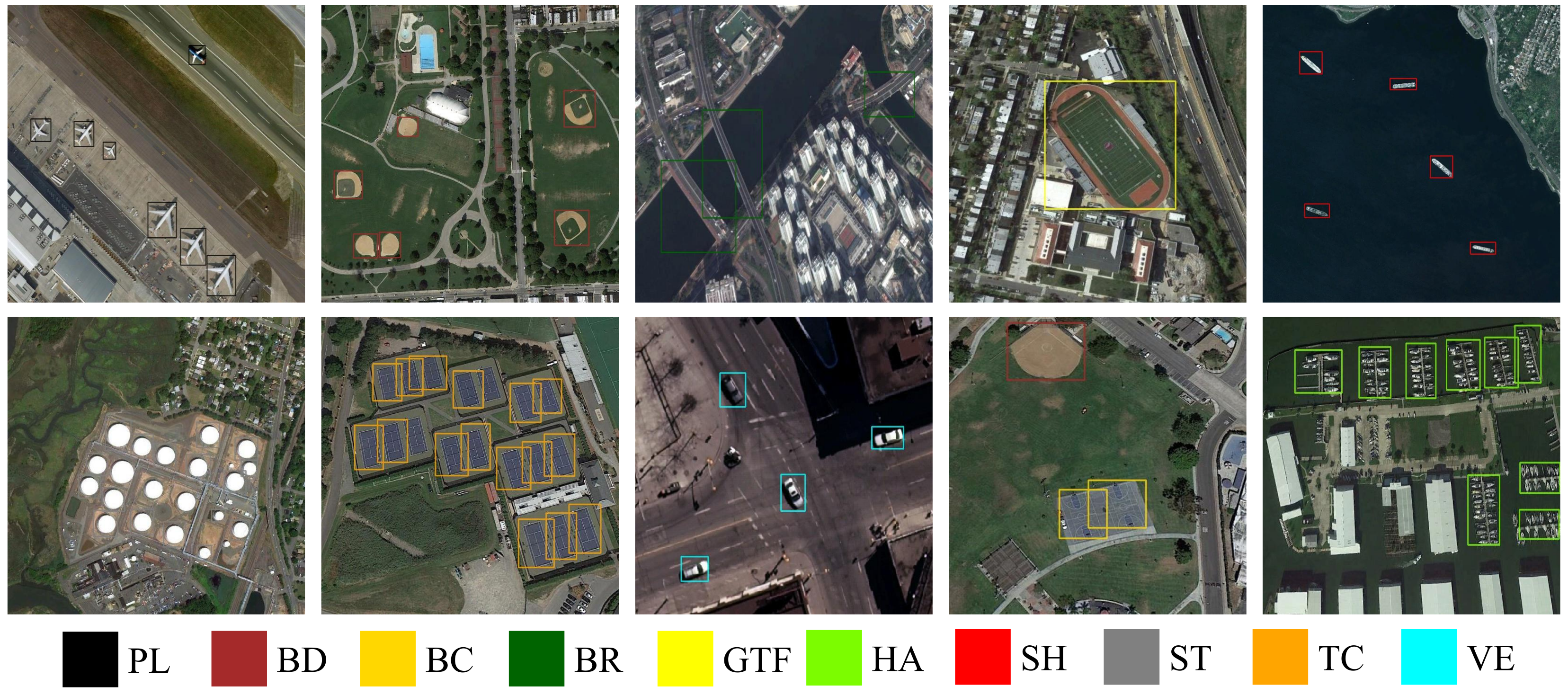

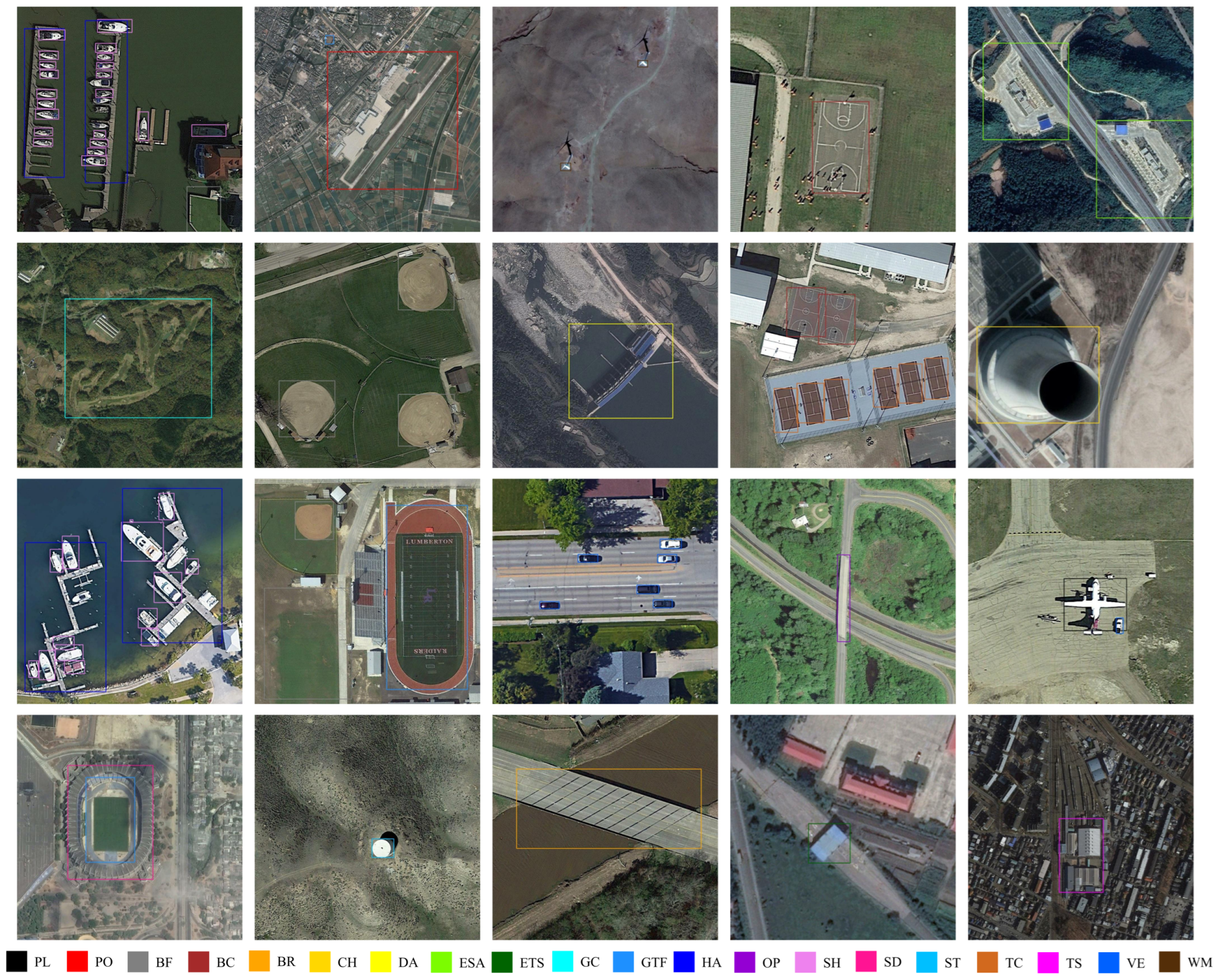

5.1.1. Datasets

5.1.2. Data Preprocessing

5.1.3. Network Setup

5.1.4. Evaluation Metrics

5.2. Performance Comparison

5.3. Ablation Study

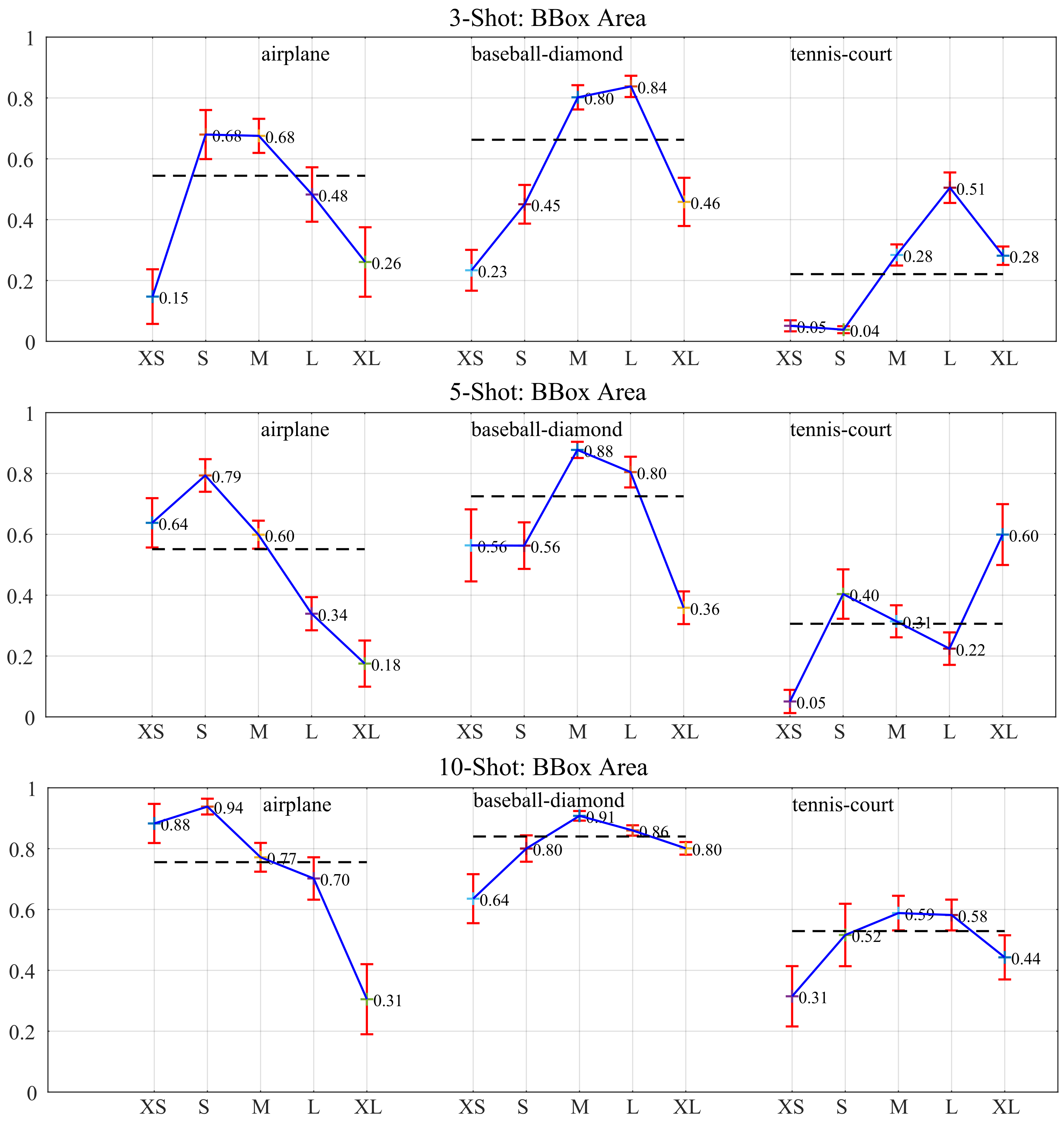

5.4. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| RSI | Remote sensing image |
| FSOD | Few-shot object detection |
| GGNN | Gated graph neural network |
| GRU | Gated recurrent unit |
| TSF-RGR | Text semantic fusion relation graph reasoning |
| TSE | Text semantic encoding |
| RGL | Relation graph learning |
| JRR | Joint relation reasoning |
| AP | Average precision |
| mAP | Mean average precision |

References

1. Quan, Y.; Zhong, X.; Feng, W.; Dauphin, G.; Gao, L.; Xing, M. A Novel Feature Extension Method for the Forest Disaster Monitoring Using Multispectral Data. Remote Sens. 2020, 12, 2261.
2. Shimoni, M.; Haelterman, R.; Perneel, C. Hyperspectral Imaging for Military and Security Applications: Combining Myriad Processing and Sensing Techniques. IEEE Geosci. Remote Sens. Mag. 2019, 7, 101–117.
3. Wellmann, T.; Lausch, A.; Andersson, E.; Knapp, S.; Cortinovis, C.; Jache, J.; Scheuer, S.; Kremer, P.; Mascarenhas, A.; Kraemer, R.; et al. Remote sensing in urban planning: Contributions towards ecologically sound policies? Landsc. Urban Plan. 2020, 204, 103921.
4. Song, F.; Zhang, S.; Lei, T.; Song, Y.; Peng, Z. MSTDSNet-CD: Multiscale Swin Transformer and Deeply Supervised Network for Change Detection of the Fast-Growing Urban Regions. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.
5. Wang, Y.; Bashir, S.M.A.; Khan, M.; Ullah, Q.; Wang, R.; Song, Y.; Guo, Z.; Niu, Y. Remote sensing image super-resolution and object detection: Benchmark and state of the art. Expert Syst. Appl. 2022, 197, 116793.
6. Ye, Y.; Ren, X.; Zhu, B.; Tang, T.; Tan, X.; Gui, Y.; Yao, Q. An Adaptive Attention Fusion Mechanism Convolutional Network for Object Detection in Remote Sensing Images. Remote Sens. 2022, 14, 516.
7. Ma, W.; Li, N.; Zhu, H.; Jiao, L.; Tang, X.; Guo, Y.; Hou, B. Feature Split–Merge–Enhancement Network for Remote Sensing Object Detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–17.
8. Yu, D.; Ji, S. A New Spatial-Oriented Object Detection Framework for Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–16.
9. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is All you Need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Volume 30.
10. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.
11. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.
12. Cheng, G.; Han, J. A survey on object detection in optical remote sensing images. ISPRS J. Photogramm. Remote Sens. 2016, 117, 11–28.
13. Li, K.; Wan, G.; Cheng, G.; Meng, L.; Han, J. Object detection in optical remote sensing images: A survey and a new benchmark. ISPRS J. Photogramm. Remote Sens. 2020, 159, 296–307.
14. Xiao, Y.; Marlet, R. Few-Shot Object Detection and Viewpoint Estimation for Objects in the Wild. In Computer Vision – ECCV 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 192–210.
15. Qiao, L.; Zhao, Y.; Li, Z.; Qiu, X.; Wu, J.; Zhang, C. DeFRCN: Decoupled Faster R-CNN for Few-Shot Object Detection. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021.
16. Ruiz, L.; Gama, F.; Ribeiro, A. Gated Graph Convolutional Recurrent Neural Networks. In Proceedings of the 2019 27th European Signal Processing Conference (EUSIPCO), Coruna, Spain, 2–6 September 2019.
17. Li, Z.; Wang, Y.; Zhang, N.; Zhang, Y.; Zhao, Z.; Xu, D.; Ben, G.; Gao, Y. Deep Learning-Based Object Detection Techniques for Remote Sensing Images: A Survey. Remote Sens. 2022, 14, 2385.
18. Sun, X.; Wang, P.; Yan, Z.; Xu, F.; Wang, R.; Diao, W.; Chen, J.; Li, J.; Feng, Y.; Xu, T.; et al. FAIR1M: A benchmark dataset for fine-grained object recognition in high-resolution remote sensing imagery. ISPRS J. Photogramm. Remote Sens. 2022, 184, 116–130.
19. Cheng, G.; He, M.; Hong, H.; Yao, X.; Qian, X.; Guo, L. Guiding Clean Features for Object Detection in Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.
20. Huang, W.; Li, G.; Chen, Q.; Ju, M.; Qu, J. CF2PN: A Cross-Scale Feature Fusion Pyramid Network Based Remote Sensing Target Detection. Remote Sens. 2021, 13, 847.
21. Wang, G.; Zhuang, Y.; Chen, H.; Liu, X.; Zhang, T.; Li, L.; Dong, S.; Sang, Q. FSoD-Net: Full-Scale Object Detection From Optical Remote Sensing Imagery. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–18.
22. Qi, G.; Zhang, Y.; Wang, K.; Mazur, N.; Liu, Y.; Malaviya, D. Small Object Detection Method Based on Adaptive Spatial Parallel Convolution and Fast Multi-Scale Fusion. Remote Sens. 2022, 14, 420.
23. Zheng, J.; Wang, T.; Zhang, Z.; Wang, H. Object Detection in Remote Sensing Images by Combining Feature Enhancement and Hybrid Attention. Appl. Sci. 2022, 12, 6237.
24. Xu, X.; Feng, Z.; Cao, C.; Li, M.; Wu, J.; Wu, Z.; Shang, Y.; Ye, S. An Improved Swin Transformer-Based Model for Remote Sensing Object Detection and Instance Segmentation. Remote Sens. 2021, 13, 4779.
25. Li, Q.; Chen, Y.; Zeng, Y. Transformer with Transfer CNN for Remote-Sensing-Image Object Detection. Remote Sens. 2022, 14, 984.
26. Karlinsky, L.; Shtok, J.; Harary, S.; Schwartz, E.; Aides, A.; Feris, R.; Giryes, R.; Bronstein, A.M. RepMet: Representative-Based Metric Learning for Classification and Few-Shot Object Detection. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.
27. Wang, X.; Huang, T.E.; Darrell, T.; Gonzalez, J.E.; Yu, F. Frustratingly simple few-shot object detection. arXiv 2020, arXiv:2003.06957.
28. Kaul, P.; Xie, W.; Zisserman, A. Label, Verify, Correct: A Simple Few Shot Object Detection Method. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 14237–14247.
29. Sun, B.; Li, B.; Cai, S.; Yuan, Y.; Zhang, C. FSCE: Few-Shot Object Detection via Contrastive Proposal Encoding. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Virtual Conference, 19–25 June 2021.
30. Fan, Q.; Zhuo, W.; Tang, C.K.; Tai, Y.W. Few-Shot Object Detection With Attention-RPN and Multi-Relation Detector. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.
31. Han, G.; Ma, J.; Huang, S.; Chen, L.; Chang, S.F. Few-Shot Object Detection with Fully Cross-Transformer. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022.
32. Bulat, A.; Guerrero, R.; Martinez, B.; Tzimiropoulos, G. FS-DETR: Few-Shot DEtection TRansformer with prompting and without re-training. arXiv 2022, arXiv:2210.04845.
33. Cheng, G.; Yan, B.; Shi, P.; Li, K.; Yao, X.; Guo, L.; Han, J. Prototype-CNN for Few-Shot Object Detection in Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–10.
34. Li, X.; Deng, J.; Fang, Y. Few-Shot Object Detection on Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–14.
35. Wolf, S.; Meier, J.; Sommer, L.; Beyerer, J. Double Head Predictor based Few-Shot Object Detection for Aerial Imagery. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision Workshops (ICCVW), Montreal, QC, Canada, 11–17 October 2021.
36. Huang, X.; He, B.; Tong, M.; Wang, D.; He, C. Few-Shot Object Detection on Remote Sensing Images via Shared Attention Module and Balanced Fine-Tuning Strategy. Remote Sens. 2021, 13, 3816.
37. Wang, Y.; Xu, C.; Liu, C.; Li, Z. Context Information Refinement for Few-Shot Object Detection in Remote Sensing Images. Remote Sens. 2022, 14, 3255.
38. Zhou, Y.; Hu, H.; Zhao, J.; Zhu, H.; Yao, R.; Du, W.L. Few-Shot Object Detection via Context-Aware Aggregation for Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.
39. Liu, Y.; Sheng, L.; Shao, J.; Yan, J.; Xiang, S.; Pan, C. Multi-Label Image Classification via Knowledge Distillation from Weakly-Supervised Detection. In Proceedings of the 26th ACM International Conference on Multimedia, Seoul, Republic of Korea, 22–26 October 2018.
40. Chen, R.; Chen, T.; Hui, X.; Wu, H.; Li, G.; Lin, L. Knowledge Graph Transfer Network for Few-Shot Recognition. Proc. AAAI Conf. Artif. Intell. 2020, 34, 10575–10582.
41. Lee, C.W.; Fang, W.; Yeh, C.K.; Wang, Y.C.F. Multi-label Zero-Shot Learning with Structured Knowledge Graphs. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018.
42. Hu, H.; Gu, J.; Zhang, Z.; Dai, J.; Wei, Y. Relation Networks for Object Detection. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.
43. Xu, H.; Jiang, C.; Liang, X.; Li, Z. Spatial-Aware Graph Relation Network for Large-Scale Object Detection. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.
44. Marino, K.; Salakhutdinov, R.; Gupta, A. The More You Know: Using Knowledge Graphs for Image Classification. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.
45. Mou, L.; Hua, Y.; Zhu, X.X. A Relation-Augmented Fully Convolutional Network for Semantic Segmentation in Aerial Scenes. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.
46. Zhu, C.; Chen, F.; Ahmed, U.; Shen, Z.; Savvides, M. Semantic Relation Reasoning for Shot-Stable Few-Shot Object Detection. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021.
47. Gu, X.; Lin, T.Y.; Kuo, W.; Cui, Y. Zeroshot detection via vision and language knowledge distillation. arXiv 2021, arXiv:2104.13921.
48. Xu, H.; Fang, L.; Liang, X.; Kang, W.; Li, Z. Universal-RCNN: Universal Object Detector via Transferable Graph R-CNN. Proc. AAAI Conf. Artif. Intell. 2020, 34, 12492–12499.
49. Zhang, S.; Song, F.; Lei, T.; Jiang, P.; Liu, G. MKLM: A multiknowledge learning module for object detection in remote sensing images. Int. J. Remote Sens. 2022, 43, 2244–2267.
50. Kim, G.; Jung, H.G.; Lee, S.W. Few-Shot Object Detection via Knowledge Transfer. In Proceedings of the 2020 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Toronto, ON, Canada, 11–14 October 2020.
51. Shu, X.; Liu, R.; Xu, J. A Semantic Relation Graph Reasoning Network for Object Detection. In Proceedings of the 2021 IEEE 10th Data Driven Control and Learning Systems Conference (DDCLS), Suzhou, China, 14–16 May 2021; pp. 1309–1314.
52. Chen, W.; Xiong, W.; Yan, X.; Wang, W.Y. Variational Knowledge Graph Reasoning. arXiv 2018, arXiv:1803.06581.
53. Li, A.; Luo, T.; Lu, Z.; Xiang, T.; Wang, L. Large-Scale Few-Shot Learning: Knowledge Transfer With Class Hierarchy. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.
54. Chung, J.; Gulcehre, C.; Cho, K.; Bengio, Y. Empirical Evaluation of Gated Recurrent Neural Networks on Sequence Modeling. arXiv 2014, arXiv:1412.3555.
55. Pennington, J.; Socher, R.; Manning, C. GloVe: Global Vectors for Word Representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; pp. 1532–1543.
56. Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; Curran Associates, Inc.: New York, NY, USA, 2019; Volume 32.
57. Zhao, Z.; Tang, P.; Zhao, L.; Zhang, Z. Few-Shot Object Detection of Remote Sensing Images via Two-Stage Fine-Tuning. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.
58. Zhang, Z.; Hao, J.; Pan, C.; Ji, G. Oriented Feature Augmentation for Few-Shot Object Detection in Remote Sensing Images. In Proceedings of the 2021 IEEE International Conference on Computer Science, Electronic Information Engineering and Intelligent Control Technology (CEI), Fuzhou, China, 24–26 September 2021.
59. Hoiem, D.; Chodpathumwan, Y.; Dai, Q. Diagnosing Error in Object Detectors. In Computer Vision – ECCV 2012; Springer: Berlin/Heidelberg, Germany, 2012; pp. 340–353.

| Method | Shot | Airplane | Baseball Diamond | Tennis Court | mAP |
|---|---|---|---|---|---|
| Faster R-CNN [11] | 3 | 0.09 | 0.19 | 0.12 | 0.13 |
| Faster R-CNN [11] | 5 | 0.19 | 0.23 | 0.17 | 0.20 |
| Faster R-CNN [11] | 10 | 0.20 | 0.35 | 0.17 | 0.24 |
| FSODM [34] | 3 | 0.15 | 0.57 | 0.25 | 0.32 |
| FSODM [34] | 5 | 0.58 | 0.84 | 0.16 | 0.53 |
| FSODM [34] | 10 | 0.60 | 0.88 | 0.48 | 0.65 |
| PAMS-Det [57] | 3 | 0.21 | 0.76 | 0.16 | 0.37 |
| PAMS-Det [57] | 5 | 0.55 | 0.88 | 0.20 | 0.55 |
| PAMS-Det [57] | 10 | 0.61 | 0.88 | 0.50 | 0.66 |
| OAF [58] | 3 | 0.18 | 0.87 | 0.24 | 0.43 |
| OAF [58] | 5 | 0.28 | 0.89 | 0.65 | 0.60 |
| OAF [58] | 10 | 0.43 | 0.89 | 0.68 | 0.67 |
| CIR-FSD [37] | 3 | 0.52 | 0.79 | 0.31 | 0.54 |
| CIR-FSD [37] | 5 | 0.67 | 0.88 | 0.37 | 0.64 |
| CIR-FSD [37] | 10 | 0.71 | 0.88 | 0.53 | 0.70 |
| SAM-BFS [36] | 3 | - | - | - | 0.47 |
| SAM-BFS [36] | 5 | - | - | - | 0.62 |
| SAM-BFS [36] | 10 | - | - | - | 0.75 |
| TSF-RGR (ours) | 3 | 0.57 | 0.79 | 0.35 | 0.57 |
| TSF-RGR (ours) | 5 | 0.71 | 0.85 | 0.42 | 0.66 |
| TSF-RGR (ours) | 10 | 0.78 | 0.94 | 0.60 | 0.77 |
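As a quick sanity check on the table above, the mAP column can be recomputed as the mean of the per-class AP values. The sketch below does this for the TSF-RGR rows; the helper name `mean_ap` and the dictionary layout are illustrative, and because the per-class entries are already rounded to two decimals, a recomputed mean may differ from a published one in the last digit for some other rows.

```python
def mean_ap(per_class_ap):
    """Mean average precision: the arithmetic mean of per-class AP values."""
    return sum(per_class_ap) / len(per_class_ap)

# Per-class AP (airplane, baseball diamond, tennis court) of TSF-RGR, keyed by shot.
tsf_rgr = {
    3: [0.57, 0.79, 0.35],
    5: [0.71, 0.85, 0.42],
    10: [0.78, 0.94, 0.60],
}

for shot, aps in tsf_rgr.items():
    print(f"{shot}-shot mAP = {mean_ap(aps):.2f}")
# 3-shot mAP = 0.57
# 5-shot mAP = 0.66
# 10-shot mAP = 0.77
```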

| Method | Shot | Airplane | Baseball Field | Tennis Court | Train Station | Wind Mill | mAP |
|---|---|---|---|---|---|---|---|
| Faster R-CNN [11] | 5 | 0.03 | 0.09 | 0.12 | 0.01 | 0.01 | 0.05 |
| Faster R-CNN [11] | 10 | 0.09 | 0.31 | 0.13 | 0.02 | 0.12 | 0.13 |
| Faster R-CNN [11] | 20 | 0.09 | 0.35 | 0.21 | 0.04 | 0.21 | 0.18 |
| FSODM [34] | 5 | 0.09 | 0.27 | 0.57 | 0.11 | 0.19 | 0.25 |
| FSODM [34] | 10 | 0.16 | 0.46 | 0.60 | 0.14 | 0.24 | 0.32 |
| FSODM [34] | 20 | 0.22 | 0.50 | 0.66 | 0.16 | 0.29 | 0.36 |
| PAMS-Det [57] | 5 | 0.14 | 0.54 | 0.24 | 0.17 | 0.31 | 0.28 |
| PAMS-Det [57] | 10 | 0.17 | 0.55 | 0.41 | 0.17 | 0.34 | 0.33 |
| PAMS-Det [57] | 20 | 0.25 | 0.58 | 0.50 | 0.23 | 0.36 | 0.38 |
| OAF [58] | 5 | 0.26 | 0.60 | 0.69 | 0.25 | 0.09 | 0.38 |
| OAF [58] | 10 | 0.30 | 0.63 | 0.69 | 0.31 | 0.11 | 0.41 |
| OAF [58] | 20 | - | - | - | - | - | - |
| CIR-FSD [37] | 5 | 0.20 | 0.50 | 0.50 | 0.24 | 0.20 | 0.33 |
| CIR-FSD [37] | 10 | 0.20 | 0.55 | 0.50 | 0.23 | 0.36 | 0.38 |
| CIR-FSD [37] | 20 | 0.27 | 0.62 | 0.55 | 0.28 | 0.37 | 0.43 |
| SAM-BFS [36] | 5 | - | - | - | - | - | 0.38 |
| SAM-BFS [36] | 10 | - | - | - | - | - | 0.47 |
| SAM-BFS [36] | 20 | - | - | - | - | - | 0.51 |
| TSF-RGR (ours) | 5 | 0.58 | 0.79 | 0.58 | 0.11 | 0.06 | 0.42 |
| TSF-RGR (ours) | 10 | 0.72 | 0.79 | 0.67 | 0.08 | 0.19 | 0.49 |
| TSF-RGR (ours) | 20 | 0.81 | 0.82 | 0.78 | 0.15 | 0.18 | 0.54 |

| Class | Faster R-CNN [11] | FSODM [34] | PAMS-Det [57] | CIR-FSD [37] | TSF-RGR (Ours) |
|---|---|---|---|---|---|
| basketball court | 0.56 | 0.72 | 0.90 | 0.91 | 0.96 |
| bridge | 0.57 | 0.76 | 0.80 | 0.97 | 0.84 |
| ground track field | 1.00 | 0.91 | 0.99 | 0.99 | 0.96 |
| harbor | 0.66 | 0.87 | 0.84 | 0.80 | 0.92 |
| ship | 0.88 | 0.72 | 0.88 | 0.91 | 0.94 |
| storage tank | 0.49 | 0.71 | 0.89 | 0.88 | 0.93 |
| vehicle | 0.74 | 0.76 | 0.89 | 0.89 | 0.92 |
| mAP | 0.70 | 0.87 | 0.88 | 0.89 | 0.92 |

| Class | Faster R-CNN [11] | FSODM [34] | PAMS-Det [57] | CIR-FSD [37] | TSF-RGR (Ours) |
|---|---|---|---|---|---|
| airport | 0.73 | 0.63 | 0.78 | 0.87 | 0.86 |
| basketball court | 0.69 | 0.80 | 0.79 | 0.88 | 0.90 |
| bridge | 0.26 | 0.32 | 0.52 | 0.55 | 0.52 |
| chimney | 0.72 | 0.72 | 0.69 | 0.79 | 0.79 |
| dam | 0.57 | 0.45 | 0.55 | 0.72 | 0.70 |
| expressway service area | 0.59 | 0.63 | 0.67 | 0.86 | 0.88 |
| expressway toll station | 0.45 | 0.60 | 0.62 | 0.78 | 0.79 |
| golf course | 0.68 | 0.61 | 0.81 | 0.84 | 0.84 |
| ground track field | 0.65 | 0.61 | 0.78 | 0.83 | 0.84 |
| harbor | 0.31 | 0.43 | 0.50 | 0.57 | 0.62 |
| overpass | 0.45 | 0.46 | 0.51 | 0.64 | 0.65 |
| ship | 0.10 | 0.50 | 0.67 | 0.72 | 0.89 |
| stadium | 0.67 | 0.45 | 0.76 | 0.77 | 0.79 |
| storage tank | 0.24 | 0.43 | 0.57 | 0.70 | 0.79 |
| vehicle | 0.19 | 0.39 | 0.54 | 0.56 | 0.55 |
| mAP | 0.48 | 0.54 | 0.65 | 0.74 | 0.76 |

| Baseline | SemR | SpaR | mAP (3-Shot) | mAP (5-Shot) | mAP (10-Shot) |
|---|---|---|---|---|---|
| ✓ | - | - | 0.42 | 0.56 | 0.69 |
| ✓ | ✓ | - | 0.53 | 0.64 | 0.74 |
| ✓ | - | ✓ | 0.46 | 0.57 | 0.72 |
| ✓ | ✓ | ✓ | 0.57 | 0.66 | 0.77 |

| Baseline | SemR | SpaR | mAP (5-Shot) | mAP (10-Shot) | mAP (20-Shot) |
|---|---|---|---|---|---|
| ✓ | - | - | 0.37 | 0.44 | 0.47 |
| ✓ | ✓ | - | 0.41 | 0.46 | 0.52 |
| ✓ | - | ✓ | 0.40 | 0.47 | 0.51 |
| ✓ | ✓ | ✓ | 0.42 | 0.49 | 0.54 |

| Ensemble Method | mAP (3-Shot) | mAP (5-Shot) | mAP (10-Shot) |
|---|---|---|---|
| Concat | 0.46 | 0.56 | 0.71 |
| Add | 0.45 | 0.57 | 0.74 |
| JRR | 0.57 | 0.66 | 0.77 |
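The Concat and Add rows above presumably refer to the two usual ways of merging a visual feature with a relational feature: concatenation and element-wise addition. A minimal sketch of both is given below, assuming each proposal has a visual vector and a relation vector of equal length; the function names and toy inputs are illustrative, and the JRR module itself is not reproduced because its definition is not given in this section.

```python
import numpy as np

def fuse_concat(visual, relation):
    """Concatenate along the feature axis; output width is the sum of both widths."""
    return np.concatenate([visual, relation], axis=-1)

def fuse_add(visual, relation):
    """Element-wise addition; both inputs must share the same shape."""
    return visual + relation

# Toy example: 3 proposals, 4-dim visual and relational features.
visual = np.ones((3, 4))
relation = np.full((3, 4), 0.5)

print(fuse_concat(visual, relation).shape)  # (3, 8)
print(fuse_add(visual, relation).shape)     # (3, 4)
```

Concatenation keeps the two sources separate but doubles the input width of whatever layer follows, whereas addition keeps the width unchanged and mixes the two sources in place.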

| Cycle Times | Inference Time | mAP (3-Shot) | mAP (5-Shot) | mAP (10-Shot) |
|---|---|---|---|---|
| 2 | 0.34 | 0.57 | 0.66 | 0.77 |
| 3 | 0.34 | 0.49 | 0.59 | 0.73 |
| 4 | 0.35 | 0.46 | 0.55 | 0.73 |

| Method | FLOPs (G) | Params (M) | Inference Time (s) |
|---|---|---|---|
| Baseline | 38.94 | 14.60 | 0.38 |
| TSF-RGR (ours) | 42.96 | 20.91 | 0.42 |
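The cost of the added modules is easier to judge as a relative increase over the baseline. The short sketch below derives those percentages from the values in the table above; the dictionary keys and the helper name `relative_increase` are illustrative.

```python
# Values copied from the complexity table (FLOPs in G, parameters in M, time in s).
baseline = {"flops_g": 38.94, "params_m": 14.60, "time_s": 0.38}
tsf_rgr = {"flops_g": 42.96, "params_m": 20.91, "time_s": 0.42}

def relative_increase(new, old):
    """Percentage increase of `new` over `old`."""
    return 100.0 * (new - old) / old

overhead = {k: relative_increase(tsf_rgr[k], baseline[k]) for k in baseline}
for name, pct in overhead.items():
    print(f"{name}: +{pct:.1f}%")
# flops_g: +10.3%
# params_m: +43.2%
# time_s: +10.5%
```

Under these numbers, the parameter count grows far more than the FLOPs or the inference time do.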


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, S.; Song, F.; Liu, X.; Hao, X.; Liu, Y.; Lei, T.; Jiang, P. Text Semantic Fusion Relation Graph Reasoning for Few-Shot Object Detection on Remote Sensing Images. Remote Sens. 2023, 15, 1187. https://doi.org/10.3390/rs15051187
