Leveraging Saliency in Single-Stage Multi-Label Concrete Defect Detection Using Unmanned Aerial Vehicle Imagery

Abstract

1. Introduction

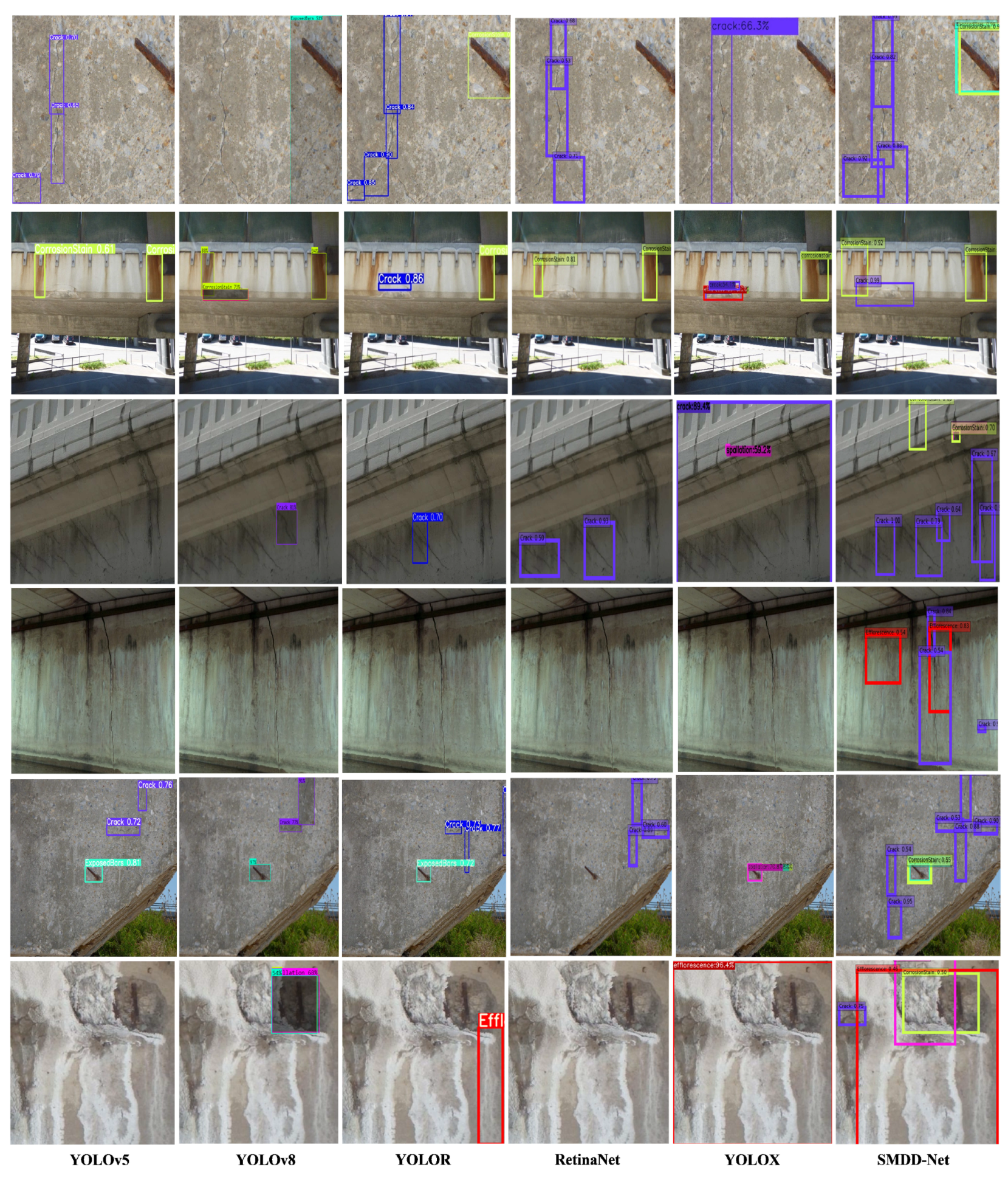

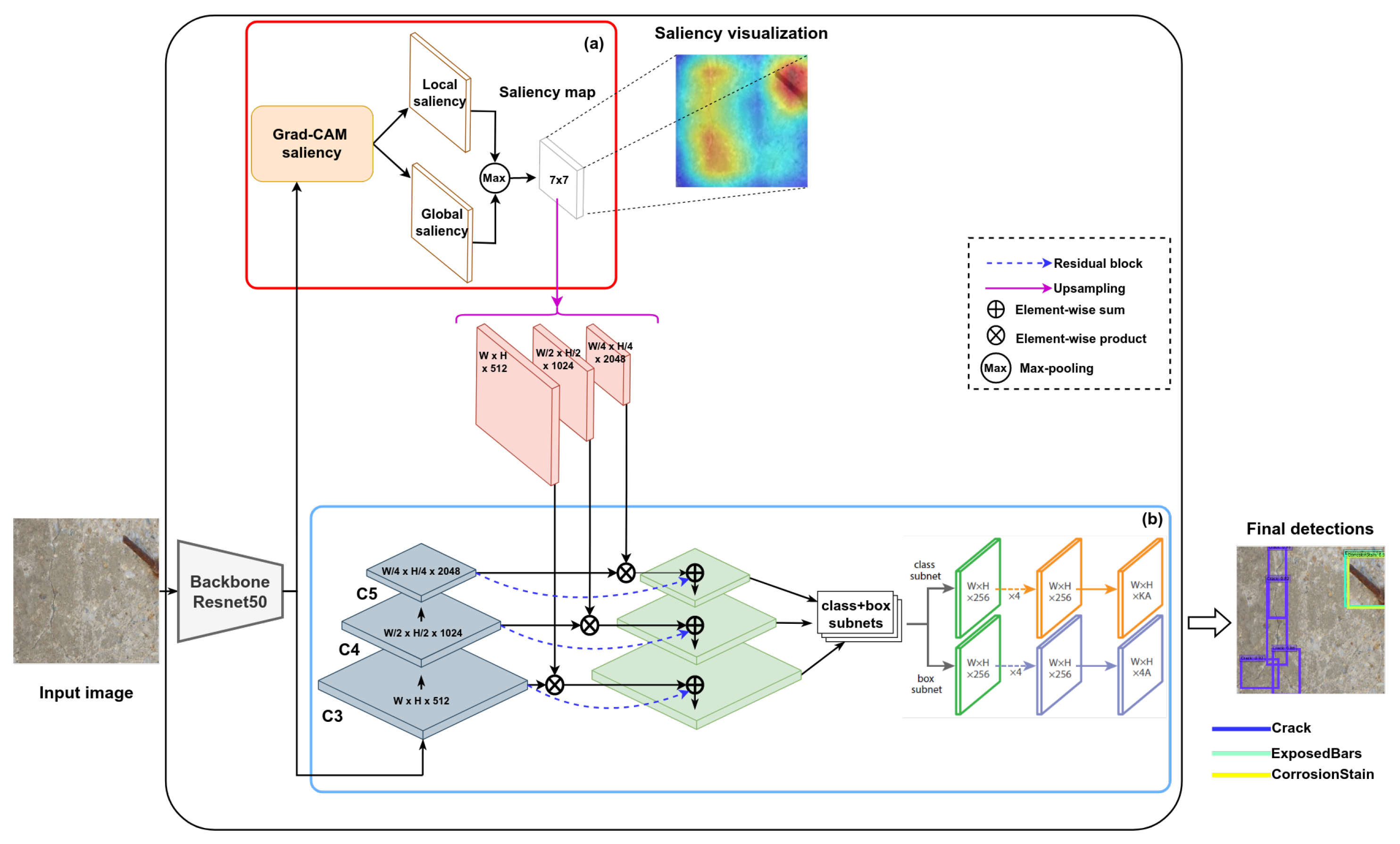

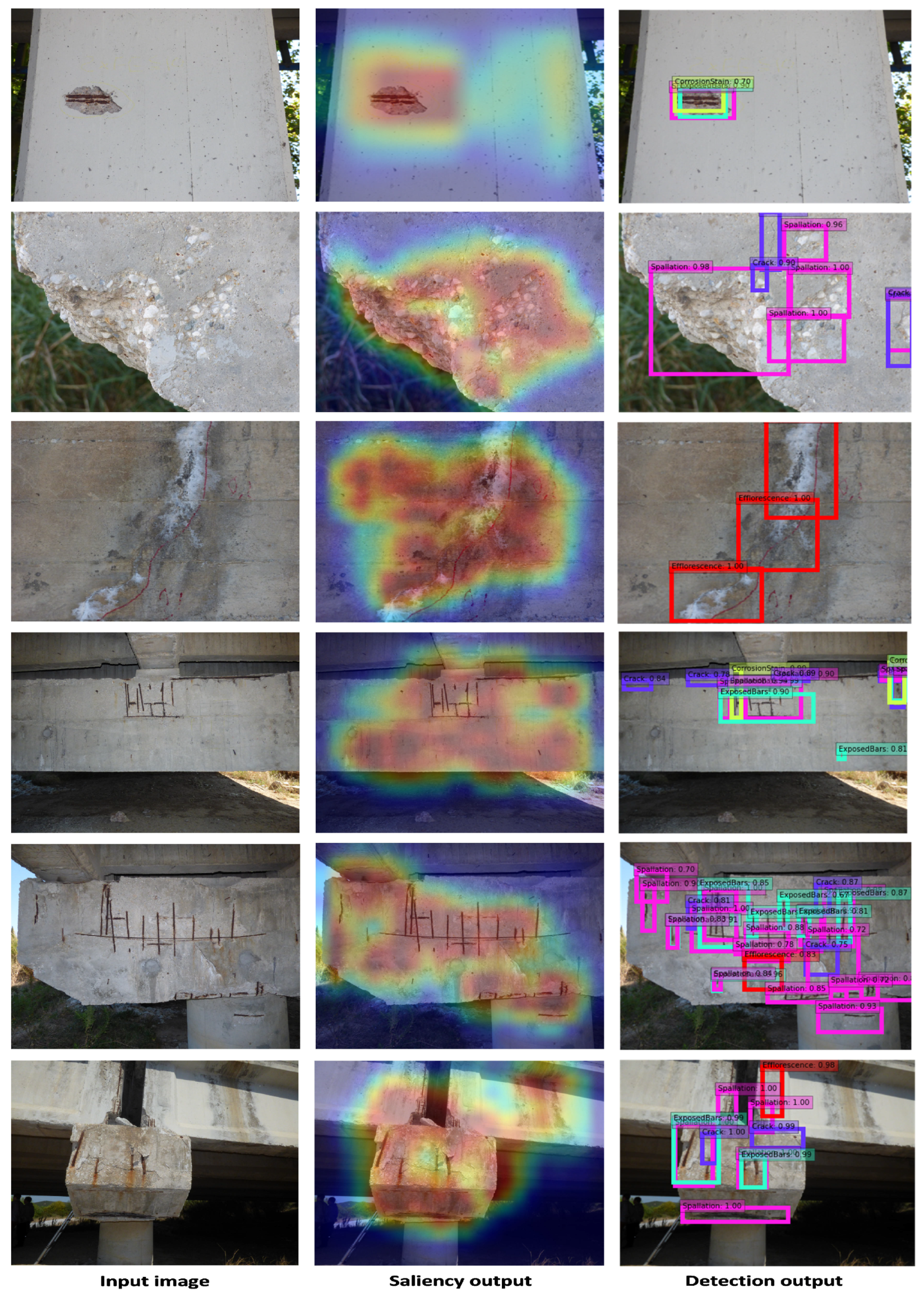

- We propose the SMDD-Net architecture, which integrates attention into single-stage concrete defect detection. The attention module extracts global and local saliency maps, which highlight localised features for better detection of multiple defect classes in the presence of background clutter (e.g., artefacts and bridge structure elements). In contrast to detection methods that target single-defect localisation against uniform backgrounds, SMDD-Net can localise complex defects characterised by variable shape, small size, low contrast, and overlap.

- We propose an attention module that is based on saliency extraction through gradient-based back-propagation of our feature extraction network. The back-propagation is performed via two paths: a global path, which highlights large-sized defect structures, and a local path, which highlights local image characteristics containing small and low-contrast defects. The two paths are fused using inter-channel max-pooling, and the output is added to the pyramidal features through residual skip connections.

- We demonstrate the performance of the SMDD-Net model on the well-known CODEBRIM dataset [12], which contains five classes of defects and several image examples with small, low-contrast, and overlapping defects. Our model leverages the benefits of the two detection paradigms: the high accuracy of two-stage detection and the high speed of one-stage detection. We also compared the performance of our model with state-of-the-art methods using several examples of real-world UAV images.
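The fusion step described in the second contribution can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes the global and local saliency maps have already been computed and resized to the resolution of one pyramid level, that "inter-channel max-pooling" means an element-wise maximum over the two maps stacked as channels, and that the fused map is added unchanged to every feature channel. The function names `fuse_saliency` and `add_residual` are hypothetical.

```python
from typing import List

Map2D = List[List[float]]  # one H x W map stored as nested lists


def fuse_saliency(global_map: Map2D, local_map: Map2D) -> Map2D:
    """Element-wise max of the two maps, i.e. max-pooling across a
    2-channel stack (global, local). Assumes both maps share one shape."""
    return [
        [max(g, l) for g, l in zip(g_row, l_row)]
        for g_row, l_row in zip(global_map, local_map)
    ]


def add_residual(features: List[Map2D], saliency: Map2D) -> List[Map2D]:
    """Add the fused saliency map to every channel of one pyramid level
    (assumption: plain addition, same map broadcast to all channels)."""
    return [
        [
            [f + s for f, s in zip(f_row, s_row)]
            for f_row, s_row in zip(channel, saliency)
        ]
        for channel in features
    ]


# Toy 2 x 2 example: the global path responds to a large structure
# (top-left), the local path to a small one (top-right).
global_map = [[0.9, 0.1], [0.2, 0.0]]
local_map = [[0.3, 0.7], [0.1, 0.0]]
fused = fuse_saliency(global_map, local_map)

# Two feature channels at the same 2 x 2 resolution.
features = [[[1.0, 1.0], [1.0, 1.0]], [[0.0, 2.0], [4.0, 6.0]]]
enhanced = add_residual(features, fused)
```

Taking the maximum keeps a location salient when either path responds to it, so a small defect highlighted only by the local path is not averaged away by a weak global response.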

2. Literature Review

2.1. Two-Stage Concrete Defect Detection

2.2. One-Stage Concrete Defect Detection

3. Proposed Method

3.1. Saliency for Defect Region Proposals

3.2. Multi-Label One-Stage Defect Detection

4. Experiments

4.1. Implementation Details

4.2. Evaluation Metrics

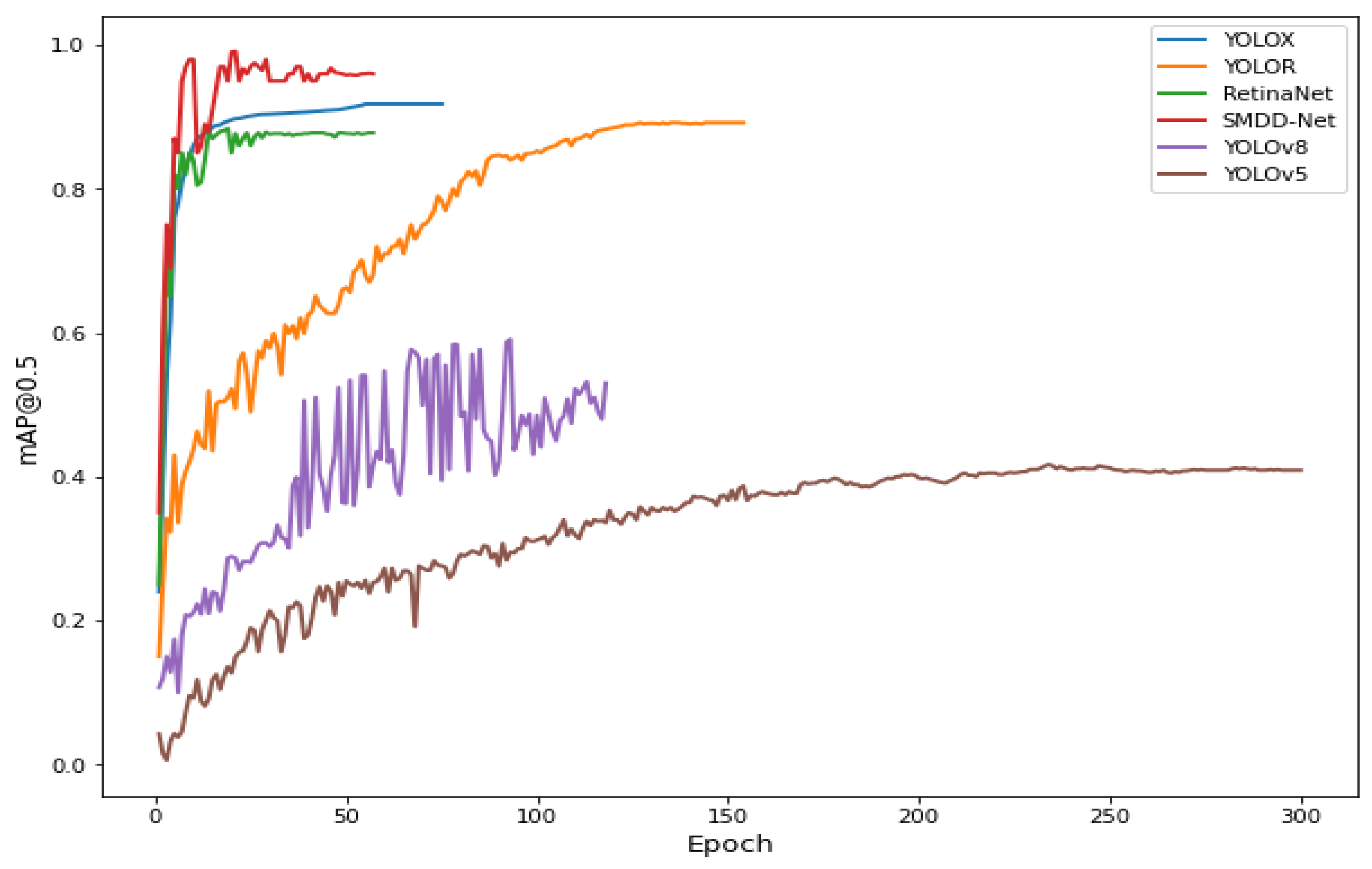

4.3. Results Analysis

| Method | #Param. | One-Stage | Two-Stage | Classification Head | Bounding Box Head | mAP@0.5 (%) | Speed (s) |
|---|---|---|---|---|---|---|---|
| Patel et al. [65] | 74.4 M | - | ✓ | BCE ¹ Loss | Smooth L1 Loss | 91.2 | 0.14 |
| Xiong et al. [66] | 52.9 M | ✓ | - | BCE Loss | Smooth L1 Loss | 22.7 | 0.03 |
| YOLOv5-l [67] | 46.1 M | ✓ | - | BCE Loss | Smooth L1 Loss | 41.7 | 0.02 |
| YOLOv8-l [64] | 43.7 M | ✓ | - | BCE Loss | Smooth L1 Loss | 59.6 | 0.02 |
| RetinaNet [35] | 36.5 M | ✓ | - | Focal Loss | Smooth L1 Loss | 88.4 | 0.07 |
| YOLOX-l [62] | 54.2 M | ✓ | - | BCE Loss | Smooth L1 Loss | 91.8 | 0.04 |
| YOLOR-P6 [63] | 36.9 M | ✓ | - | BCE Loss | L2 Loss | 89.2 | 0.04 |
| SMDD-Net | 36.5 M | ✓ | - | Focal Loss | Smooth L1 Loss | 99.1 | 0.11 |

¹ BCE: binary cross-entropy.
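The classification and bounding-box heads named in the comparison table use standard losses, which can be sketched as follows. This is an illustrative sketch using the textbook definitions, not code from the paper: the focal-loss defaults `alpha=0.25` and `gamma=2.0` are the values commonly quoted from the focal loss paper [35], and `beta=1.0` for Smooth L1 is a conventional choice; none of these settings is confirmed for SMDD-Net.

```python
import math

EPS = 1e-12  # guards against log(0)


def bce_loss(p: float, y: int) -> float:
    """Binary cross-entropy for one predicted probability p and label y."""
    p_t = p if y == 1 else 1.0 - p
    return -math.log(max(p_t, EPS))


def focal_loss(p: float, y: int, alpha: float = 0.25, gamma: float = 2.0) -> float:
    """Focal loss: BCE scaled by (1 - p_t)^gamma so that easy examples
    contribute less. alpha and gamma here are assumed defaults."""
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(max(p_t, EPS))


def smooth_l1(x: float, beta: float = 1.0) -> float:
    """Smooth L1 on a box-regression residual x: quadratic near zero,
    linear for large errors."""
    ax = abs(x)
    return 0.5 * ax * ax / beta if ax < beta else ax - 0.5 * beta


# An easy positive (p = 0.9) is down-weighted far more than a hard one (p = 0.1).
easy_ratio = focal_loss(0.9, 1) / bce_loss(0.9, 1)
hard_ratio = focal_loss(0.1, 1) / bce_loss(0.1, 1)
```

With the assumed defaults, the focal-to-BCE ratio is `alpha * (1 - p_t) ** gamma`, so well-classified background regions contribute little to the total loss, which is the usual motivation for focal loss in dense single-stage detectors.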

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Calvi, G.M.; Moratti, M.; O’Reilly, G.J.; Scattarreggia, N.; Monteiro, R.; Malomo, D.; Calvi, P.M.; Pinho, R. Once upon a time in Italy: The tale of the Morandi Bridge. Struct. Eng. Int. 2019, 29, 198–217.

- Available online: https://nrc.canada.ca/en/research-development/products-services/technical-advisory-services/infrastructure-expertise-technology-assessment (accessed on 28 December 2022).

- Kim, I.H.; Jeon, H.; Baek, S.C.; Hong, W.H.; Jung, H.J. Application of Crack Identification Techniques for an Aging Concrete Bridge Inspection Using an Unmanned Aerial Vehicle. Sensors 2018, 18, 1881.

- Mandirola, M.; Casarotti, C.; Peloso, S.; Lanese, I.; Brunesi, E.; Senaldi, I. Use of UAS for damage inspection and assessment of bridge infrastructures. Int. J. Disaster Risk Reduct. 2022, 72, 102824.

- Liu, W.; Quijano, K.; Crawford, M.M. YOLOv5-Tassel: Detecting tassels in RGB UAV imagery with improved YOLOv5 based on transfer learning. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 13, 8085–8094.

- Dorafshan, S.; Thomas, R.; Maguire, M. Comparison of deep convolutional neural networks and edge detectors for image-based crack detection in concrete. Constr. Build. Mater. 2018, 186, 1031–1045.

- Jahanshahi, M.R.; Kelly, J.S.; Masri, S.F.; Sukhatme, G.S. A Survey and Evaluation of Promising Approaches for Automatic Image-Based Defect Detection of Bridge Structures. Struct. Infrastruct. Eng. 2009, 5, 455–486.

- Zou, Z.; Shi, Z.; Guo, Y.; Ye, J. Object Detection in 20 Years: A Survey. arXiv 2019, arXiv:1905.05055v1.

- Chen, C.; Seo, H.; Jun, C.; Zhao, Y. A potential Crack Region Method to Detect Crack Using Image Processing of Multiple Thresholding. Signal Image Video Process. 2022, 16, 1673–1681.

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M. ImageNet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

- Li, C.; Sohn, K.; Yoon, J.; Pfister, T. CutPaste: Self-Supervised Learning for Anomaly Detection and Localization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 9659–9669.

- Mundt, M.; Majumder, S.; Murali, S.; Panetsos, P.; Ramesh, V. Meta-learning convolutional neural architectures for multi-target concrete defect classification with the concrete defect bridge image dataset. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–17 June 2019; pp. 11196–11205.

- Hüthwohl, P.; Lu, R.; Brilakis, I. Multi-classifier for reinforced concrete bridge defects. Autom. Constr. 2019, 105, 102824.

- Feroz, S.; Abu Dabous, S. UAV-Based Remote Sensing Applications for Bridge Condition Assessment. Remote Sens. 2021, 13, 1809.

- Cha, Y.J.; Choi, W.; Suh, G.; Mahmoudkhani, S.; Büyüköztürk, O. Autonomous Structural Visual Inspection Using Region-Based Deep Learning for Detecting Multiple Damage Types. Comput.-Aided Civ. Infrastruct. Eng. 2018, 33, 731–747.

- He, Y.; Jin, Z.; Zhang, J.; Teng, S.; Chen, G.; Sun, X.; Cui, F. Pavement Surface Defect Detection Using Mask Region-Based Convolutional Neural Networks and Transfer Learning. Appl. Sci. 2022, 12, 7364.

- Huang, B.; Zhao, S.; Kang, F. Image-based automatic multiple-damage detection of concrete dams using region-based convolutional neural networks. J. Civ. Struct. Health Monit. 2022, 1–17.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the 14th European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; pp. 21–37.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2016; pp. 779–788.

- Cui, X.; Wang, Q.; Dai, J.; Zhang, R.; Li, S. Intelligent recognition of erosion damage to concrete based on improved YOLO-v3. Mater. Lett. 2021, 302, 130363.

- Deng, J.; Lu, Y.; Lee, V.C.S. Imaging-based crack detection on concrete surfaces using You Only Look Once network. Struct. Health Monit. 2021, 20, 484–499.

- Jiang, Y.; Pang, D.; Li, C. A deep learning approach for fast detection and classification of concrete damage. Autom. Constr. 2021, 128, 103785.

- Jiang, W.; Liu, M.; Peng, Y.; Wu, L.; Wang, Y. HDCB-Net: A Neural Network With the Hybrid Dilated Convolution for Pixel-Level Crack Detection on Concrete Bridges. IEEE Trans. Ind. Inform. 2021, 17, 5485–5494.

- Bhattacharya, G.; Mandal, B.; Puhan, N.B. Interleaved Deep Artifacts-Aware Attention Mechanism for Concrete Structural Defect Classification. IEEE Trans. Image Process. 2021, 30, 6957–6969.

- Kang, J.; Tariq, S.; Oh, H.; Woo, S.S. A Survey of Deep Learning-Based Object Detection Methods and Datasets for Overhead Imagery. IEEE Access 2022, 10, 20118–20134.

- Larochelle, H.; Hinton, G.E. Learning to combine foveal glimpses with a third-order Boltzmann machine. Adv. Neural Inf. Process. Syst. 2010, 23, 1243–1251.

- Wang, F.; Jiang, M.; Qian, C.; Yang, S.; Li, C.; Zhang, H.; Wang, X.; Tang, X. Residual Attention Network for Image Classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6450–6458.

- Zhang, H.; Wu, C.; Zhang, Z.; Zhu, Y.; Lin, H.; Zhang, Z.; Sun, Y.; He, T.; Mueller, J.; Manmatha, R.; et al. ResNeSt: Split-Attention Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, New Orleans, LA, USA, 19–20 June 2022; pp. 2735–2745.

- Park, J.; Woo, S.; Lee, J.-Y.; Kweon, S. A Simple and Light-Weight Attention Module for Convolutional Neural Networks. Int. J. Comput. Vis. 2020, 128, 783–798.

- Pan, Y.; Zhang, G.; Zhang, L. A spatial-channel hierarchical deep learning network for pixel-level automated crack detection. Autom. Constr. 2020, 119, 103357.

- Qiao, W.; Liu, Q.; Wu, X.; Ma, B.; Li, G. Automatic Pixel-Level Pavement Crack Recognition Using a Deep Feature Aggregation Segmentation Network with a scSE Attention Mechanism Module. Sensors 2021, 21, 2902.

- Wan, H.; Gao, L.; Su, M.; Sun, Q.; Huang, L. Attention-Based Convolutional Neural Network for Pavement Crack Detection. Adv. Mater. Sci. Eng. 2021, 2021, 5520515.

- Xiang, X.; Zhang, Y.; El Saddik, A. Pavement crack detection network based on pyramid structure and attention mechanism. IET Image Process. 2020, 14, 1580–1586.

- Bhattacharya, G.; Mandal, B.; Puhan, N.B. Multi-deformation aware attention learning for concrete structural defect classification. IEEE Trans. Circuits Syst. Video Technol. 2021, 31, 3707–3713.

- Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollar, P. Focal Loss for Dense Object Detection. In Proceedings of the International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Lin, T.-Y.; Dollar, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- Haciefendioglu, K.; Basaga, H.B. Concrete Road Crack Detection Using Deep Learning-Based Faster RCNN Method. Iran. J. Sci. Technol. 2022, 46, 1621–1633.

- Yao, G.; Wei, F.; Yang, Y.; Sun, Y. Deep-Learning-Based Bughole Detection for Concrete Surface Image. Adv. Civ. Eng. 2019, 2019, 8582963.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Wei, F.; Yao, G.; Yang, Y.; Sun, Y. Instance-level recognition and quantification for concrete surface bughole based on deep learning. Autom. Constr. 2019, 107, 102920.

- Kang, D.; Benipal, S.S.; Gopal, D.L.; Cha, Y.J. Hybrid pixel-level concrete crack segmentation and quantification across complex backgrounds using deep learning. Autom. Constr. 2020, 118, 103291.

- Mishra, M.; Jain, V.; Singh, S.K.; Maity, D. Two-stage method based on the you only look once framework and image segmentation for crack detection in concrete structures. Archit. Struct. Constr. 2022, 1–18.

- Xu, Y.; Wei, S.; Bao, Y.; Li, H. Automatic seismic damage identification of reinforced concrete columns from images by a region-based deep convolutional neural network. Struct. Control Health Monit. 2019, 26, e2313.

- Li, R.; Yuan, Y.; Zhang, W.; Yuan, Y. Unified Vision-Based Methodology for Simultaneous Concrete Defect Detection and Geolocalization. Comput.-Aided Civ. Infrastruct. Eng. 2018, 33, 527–544.

- Wan, H.; Gao, L.; Yuan, Z.; Qu, H.; Sun, Q.; Cheng, H.; Wang, R. A novel transformer model for surface damage detection and cognition of concrete bridges. Expert Syst. Appl. 2023, 213, 119019.

- Teng, S.; Liu, Z.; Chen, G.; Cheng, L. Concrete Crack Detection Based on Well-Known Feature Extractor Model and the YOLOV2 Network. Appl. Sci. 2021, 11, 813.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Zhang, C.; Chang, C.C.; Jamshidi, M. Concrete bridge surface damage detection using a single-stage detector. Comput.-Aided Civ. Infrastruct. Eng. 2020, 35, 389–409.

- Wu, P.; Liu, A.; Fu, J.; Ye, X.; Zhao, Y. Autonomous surface crack identification of concrete structures based on an improved one-stage object detection algorithm. Eng. Struct. 2022, 272, 114962.

- Wang, W.; Su, C.; Fu, D. Automatic detection of defects in concrete structures based on deep learning. Structures 2022, 43, 192–199.

- Kumar, P.; Batchu, S.; Swamy, S.N.; Kota, S.R. Real-Time Concrete Damage Detection Using Deep Learning for High Rise Structures. IEEE Access 2021, 9, 112312–112331.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-cam: Visual explanations from deep networks via gradient-based localization. In Proceedings of the International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 618–626.

- Jeong, E.; Seo, J.; Wacker, J. Literature Review and Technical Survey on Bridge Inspection Using Unmanned Aerial Vehicles. J. Perform. Constr. Facil. 2020, 34, 04020113.

- Cheng, M.M.; Mitra, N.J.; Huang, X.; Torr, P.; Hu, S.M. Global contrast based salient region detection. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 37, 569–582.

- Filali, I.; Allili, M.S.; Benblidia, N. Multi-scale salient object detection using graph ranking and global–local saliency refinement. Signal Process. Image Commun. 2016, 47, 380–401.

- Hou, Q.; Cheng, M.-M.; Hu, X.; Borji, A.; Tu, Z.; Torr, P. Deeply Supervised Salient Object Detection with Short Connections. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3203–3212.

- Tan, Z.; Nie, X.; Qian, Q.; Li, N.; Li, H. Learning to Rank Proposals for Object Detection. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 261–318.

- Liu, L.; Ouyang, W.; Wang, X.; Fieguth, P.; Chen, J.; Liu, X.; Pietikäinen, M. Deep Learning for Generic Object Detection: A Survey. Int. J. Comput. Vis. 2020, 128, 261–318.

- Girshick, R. Fast RCNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Zoph, B.; Cubuk, E.D.; Ghiasi, G.; Lin, T.Y.; Shlens, J.; Le, Q.V. Learning Data Augmentation Strategies for Object Detection. In Proceedings of the 16th European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 566–583.

- Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. YOLOX: Exceeding YOLO Series in 2021. arXiv 2021, arXiv:2107.08430.

- Wang, C.Y.; Yeh, I.H.; Liao, H.Y.M. You only learn one representation: Unified network for multiple tasks. arXiv 2021, arXiv:2105.04206.

- Jocher, G. YOLOv8. 2023. Available online: https://github.com/ultralytics/ultralytics (accessed on 14 February 2023).

- Patel, R.A.; Steinmann, L.; Fehrenbach, J.; Fehrenbach, D.; Dehn, F. Convolution Neural Network-Based Machine Learning Approach for Visual Inspection of Concrete Structures. In Proceedings of the 1st Conference of the European Association on Quality Control of Bridges and Structures: EUROSTRUCT, Padova, Italy, 29 August–1 September 2021; pp. 704–712.

- Xiong, R.; Liu, P.; Tang, P. Human Reliability Analysis and Prediction for Visual Inspection in Bridge Maintenance. In Proceedings of the ASCE International Conference on Computing in Civil Engineering 2021, Orlando, FL, USA, 12–14 September 2021; pp. 254–262.

- Jocher, G. YOLOv5. 2020. Available online: https://github.com/ultralytics/yolov5 (accessed on 14 February 2023).

| Classes | AP@0.5 | mAP@0.5 |
|---|---|---|
| Crack | 0.98 | 0.88 |
| Spallation | 0.96 | |
| Efflorescence | 0.80 | |
| Exposed bars | 0.90 | |
| Corrosion stain | 0.74 | |
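As a quick consistency check on the per-class table, mAP@0.5 is the mean of the per-class AP@0.5 values, and a detection counts as a match only when its intersection-over-union (IoU) with a ground-truth box is at least 0.5. The sketch below reproduces the arithmetic from the table and shows the IoU test on two hypothetical boxes; the `(x1, y1, x2, y2)` box format and the helper name `iou` are assumptions made for illustration, not details taken from the paper.

```python
from statistics import mean

# Per-class AP@0.5 values copied from the table above.
ap_at_05 = {
    "Crack": 0.98,
    "Spallation": 0.96,
    "Efflorescence": 0.80,
    "Exposed bars": 0.90,
    "Corrosion stain": 0.74,
}

# mAP is the unweighted mean over the five classes.
map_at_05 = mean(ap_at_05.values())
print(f"mAP@0.5 = {map_at_05:.3f}")  # 0.876, reported as 0.88 after rounding


def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Hypothetical prediction shifted by half its width: IoU = 2 / 6, below 0.5,
# so it would not count as a true positive at this threshold.
is_match = iou((0, 0, 2, 2), (1, 0, 3, 2)) >= 0.5
```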

| Scenarios | #Param. | mAP@0.5 |
|---|---|---|
| SMDD-Net without attention module | 36.5 M | 0.88 |
| SMDD-Net without global saliency | 36.5 M | 0.95 |
| SMDD-Net without local saliency | 36.5 M | 0.93 |
| SMDD-Net without residual block | 36.5 M | 0.46 |
| SMDD-Net | 36.5 M | 0.99 |
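The ablation table is easier to read as the drop in mAP@0.5 relative to the full model. The short sketch below only re-derives those differences from the values in the table; the variable names are illustrative.

```python
# mAP@0.5 values copied from the ablation table.
full_model = 0.99
ablations = {
    "without attention module": 0.88,
    "without global saliency": 0.95,
    "without local saliency": 0.93,
    "without residual block": 0.46,
}

# Drop relative to the full SMDD-Net, rounded to the table's precision.
drops = {name: round(full_model - score, 2) for name, score in ablations.items()}

# Rank the components by how much mAP is lost when each one is removed.
ranked = sorted(drops.items(), key=lambda item: item[1], reverse=True)
for name, drop in ranked:
    print(f"{name}: -{drop:.2f}")
```

On these numbers, removing the residual block costs the most (0.53), followed by removing the whole attention module (0.11), the local path (0.06), and the global path (0.04).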


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hebbache, L.; Amirkhani, D.; Allili, M.S.; Hammouche, N.; Lapointe, J.-F. Leveraging Saliency in Single-Stage Multi-Label Concrete Defect Detection Using Unmanned Aerial Vehicle Imagery. Remote Sens. 2023, 15, 1218. https://doi.org/10.3390/rs15051218
