High-Resolution Semantic Segmentation of Woodland Fires Using Residual Attention UNet and Time Series of Sentinel-2

Abstract

1. Introduction

2. Materials and Methods

2.1. Description of the Study Area

2.2. Data

2.3. The Datasets

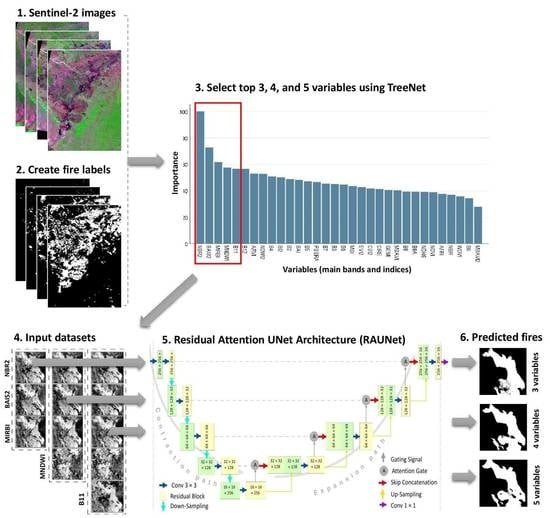

2.3.1. Pre-Processing of Sentinel-2 Images

2.3.2. Fire Label Dataset

2.3.3. Determining the Top Variables

2.3.4. Training Datasets

2.4. Residual Attention UNet (RAUNet) Architecture

2.5. Accuracy Assessment

2.5.1. Collecting Testing Data from Forest Fires

2.5.2. Accuracy Assessment of UNet, AUNet, and RAUNet

3. Results

3.1. Top Derivatives of Sentinel-2

3.2. Marginal Effects of Top Derivatives

3.3. Performance of Trained Models Using Three Datasets

3.3.1. Dataset with the Top Three Variables

3.3.2. Dataset with the Top Four Variables

3.3.3. Dataset with the Top Five Variables

4. Discussion

4.1. The Selected Variables and Datasets

4.2. The Performance of the Trained RAUNet

4.3. The Efficiency of Trained Models in Demonstrating the Properties of the Fires

4.4. Application and Outlook

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Archibald, S.; Nickless, A.; Govender, N.; Scholes, R.J.; Lehsten, V. Climate and the inter-annual variability of fire in southern Africa: A meta-analysis using long-term field data and satellite-derived burnt area data. Glob. Ecol. Biogeogr. 2010, 19, 794–809.

2. Giglio, L.; Justice, C.; Boschetti, L.; Roy, D. MODIS/Terra+Aqua Burned Area Monthly L3 Global 500m SIN Grid V061; NASA EOSDIS Land Processes DAAC: Washington, DC, USA, 2021.

3. Tansey, K.; Grégoire, J.-M.; Defourny, P.; Leigh, R.; Pekel, J.-F.; van Bogaert, E.; Bartholomé, E. A new, global, multi-annual (2000–2007) burnt area product at 1 km resolution. Geophys. Res. Lett. 2008, 35, 1–6.

4. Saito, M.; Luyssaert, S.; Poulter, B.; Williams, M.; Ciais, P.; Bellassen, V.; Ryan, C.M.; Yue, C.; Cadule, P.; Peylin, P. Fire regimes and variability in aboveground woody biomass in miombo woodland. J. Geophys. Res. Biogeosci. 2014, 119, 1014–1029.

5. Tarimo, B.; Dick, Ø.B.; Gobakken, T.; Totland, Ø. Spatial distribution of temporal dynamics in anthropogenic fires in miombo savanna woodlands of Tanzania. Carbon Balance Manag. 2015, 10, 18.

6. Hantson, S.; Pueyo, S.; Chuvieco, E. Global fire size distribution is driven by human impact and climate. Glob. Ecol. Biogeogr. 2015, 24, 77–86.

7. Timberlake, J.; Chidumayo, E. Miombo Ecoregion: Vision Report: Report for World Wide Fund for Nature, Harare, Zimbabwe. Occasional Publications in Biodiversity No. 20. Biodiversity Foundation for Africa, Bulawayo. Available online: https://www.readkong.com/page/miombo-ecoregion-vision-report-jonathan-timberlake-8228894 (accessed on 12 September 2021).

8. Ryan, C.M.; Pritchard, R.; McNicol, I.; Owen, M.; Fisher, J.A.; Lehmann, C. Ecosystem services from southern African woodlands and their future under global change. Philos. Trans. R. Soc. Lond. B Biol. Sci. 2016, 371, 1–16.

9. Fisher, M. Household welfare and forest dependence in Southern Malawi. Environ. Dev. Econ. 2004, 9, 135–154.

10. Mittermeier, R.A.; Mittermeier, C.G.; Brooks, T.M.; Pilgrim, J.D.; Konstant, W.R.; Da Fonseca, G.A.B.; Kormos, C. Wilderness and biodiversity conservation. Proc. Natl. Acad. Sci. USA 2003, 100, 10309–10313.

11. Campbell, B.; Frost, P.; Byron, N. Miombo woodlands and their use: Overview and key issues. In The Miombo in Transition: Woodlands and Welfare in Africa; Campbell, B.M., Ed.; Center for International Forestry Research: Bogor, Indonesia, 1996; ISBN 9798764072.

12. Ribeiro, N.S.; Katerere, Y.; Chirwa, P.W.; Grundy, I.M. Miombo Woodlands in a Changing Environment: Securing the Resilience and Sustainability of People and Woodlands; Springer International Publishing: Cham, Switzerland, 2020; ISBN 978-3-030-50103-7.

13. Whitlock, C.; Higuera, P.E.; McWethy, D.B.; Briles, C.E. Paleoecological perspectives on fire ecology: Revisiting the fire-regime concept. Open Ecol. J. 2010, 3, 6–23.

14. Ryan, C.M.; Williams, M. How does fire intensity and frequency affect miombo woodland tree populations and biomass? Ecol. Appl. 2011, 21, 48–60.

15. Sá, A.C.L.; Pereira, J.M.C.; Vasconcelos, M.J.P.; Silva, J.M.N.; Ribeiro, N.; Awasse, A. Assessing the feasibility of sub-pixel burned area mapping in miombo woodlands of northern Mozambique using MODIS imagery. Int. J. Remote Sens. 2003, 24, 1783–1796.

16. Ribeiro, N.S.; Cangela, A.; Chauque, A.; Bandeira, R.R.; Ribeiro-Barros, A.I. Characterisation of spatial and temporal distribution of the fire regime in Niassa National Reserve, northern Mozambique. Int. J. Wildland Fire 2017, 26, 1021.

17. van Wilgen, B.W.; de Klerk, H.M.; Stellmes, M.; Archibald, S. An analysis of the recent fire regimes in the Angolan catchment of the Okavango Delta, Central Africa. Fire Ecol. 2022, 18, 13.

18. Mganga, N.D.; Lyaruu, H.V.; Banyikwa, F. Above-ground carbon stock in a forest subjected to decadal frequent fires in western Tanzania. J. Biodivers. Environ. Sci. 2017, 10, 25–34.

19. Engelbrecht, J.; Theron, A.; Vhengani, L.; Kemp, J. A Simple Normalized Difference Approach to Burnt Area Mapping Using Multi-Polarisation C-Band SAR. Remote Sens. 2017, 9, 764.

20. Roteta, E.; Bastarrika, A.; Padilla, M.; Storm, T.; Chuvieco, E. Development of a Sentinel-2 burned area algorithm: Generation of a small fire database for sub-Saharan Africa. Remote Sens. Environ. 2019, 222, 1–17.

21. de Bem, P.P.; de Carvalho Júnior, O.A.; de Carvalho, O.L.F.; Gomes, R.A.T.; Fontes Guimarães, R. Performance Analysis of Deep Convolutional Autoencoders with Different Patch Sizes for Change Detection from Burnt Areas. Remote Sens. 2020, 12, 2576.

22. Deshpande, M.V.; Pillai, D.; Jain, M. Agricultural burned area detection using an integrated approach utilizing multi spectral instrument based fire and vegetation indices from Sentinel-2 satellite. MethodsX 2022, 9, 101741.

23. Mpakairi, K.S.; Kadzunge, S.L.; Ndaimani, H. Testing the utility of the blue spectral region in burned area mapping: Insights from savanna wildfires. Remote Sens. Appl. Soc. Environ. 2020, 20, 100365.

24. Vanderhoof, M.K.; Hawbaker, T.J.; Teske, C.; Ku, A.; Noble, J.; Picotte, J. Mapping Wetland Burned Area from Sentinel-2 across the Southeastern United States and Its Contributions Relative to Landsat-8 (2016–2019). Fire 2021, 4, 52.

25. Addison, P.; Oommen, T. Utilizing satellite radar remote sensing for burn severity estimation. Int. J. Appl. Earth Obs. Geoinf. 2018, 73, 292–299.

26. Cardil, A.; Mola-Yudego, B.; Blázquez-Casado, Á.; González-Olabarria, J.R. Fire and burn severity assessment: Calibration of Relative Differenced Normalized Burn Ratio (RdNBR) with field data. J. Environ. Manag. 2019, 235, 342–349.

27. Filipponi, F. BAIS2: Burned Area Index for Sentinel-2. In The 2nd International Electronic Conference on Remote Sensing; MDPI: Basel, Switzerland, 2018; p. 364.

28. Long, T.; Zhang, Z.; He, G.; Jiao, W.; Tang, C.; Wu, B.; Zhang, X.; Wang, G.; Yin, R. 30m Resolution Global Annual Burned Area Mapping Based on Landsat Images and Google Earth Engine. ISPRS J. Photogramm. Remote Sens. 2018, 1–35.

29. Tanase, M.A.; Belenguer-Plomer, M.A.; Roteta, E.; Bastarrika, A.; Wheeler, J.; Fernández-Carrillo, Á.; Tansey, K.; Wiedemann, W.; Navratil, P.; Lohberger, S.; et al. Burned Area Detection and Mapping: Intercomparison of Sentinel-1 and Sentinel-2 Based Algorithms over Tropical Africa. Remote Sens. 2020, 12, 334.

30. Alcaras, E.; Costantino, D.; Guastaferro, F.; Parente, C.; Pepe, M. Normalized Burn Ratio Plus (NBR+): A New Index for Sentinel-2 Imagery. Remote Sens. 2022, 14, 1727.

31. Hoeser, T.; Kuenzer, C. Object Detection and Image Segmentation with Deep Learning on Earth Observation Data: A Review-Part I: Evolution and Recent Trends. Remote Sens. 2020, 12, 1667.

32. Hoeser, T.; Bachofer, F.; Kuenzer, C. Object Detection and Image Segmentation with Deep Learning on Earth Observation Data: A Review—Part II: Applications. Remote Sens. 2020, 12, 3053.

33. Rashkovetsky, D.; Mauracher, F.; Langer, M.; Schmitt, M. Wildfire Detection From Multisensor Satellite Imagery Using Deep Semantic Segmentation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 7001–7016.

34. Knopp, L.; Wieland, M.; Rättich, M.; Martinis, S. A Deep Learning Approach for Burned Area Segmentation with Sentinel-2 Data. Remote Sens. 2020, 12, 2422.

35. de Almeida Pereira, G.H.; Fusioka, A.M.; Nassu, B.T.; Minetto, R. Active fire detection in Landsat-8 imagery: A large-scale dataset and a deep-learning study. ISPRS J. Photogramm. Remote Sens. 2021, 178, 171–186.

36. Pinto, M.M.; Libonati, R.; Trigo, R.M.; Trigo, I.F.; DaCamara, C.C. A deep learning approach for mapping and dating burned areas using temporal sequences of satellite images. ISPRS J. Photogramm. Remote Sens. 2020, 160, 260–274.

37. Seydi, S.T.; Saeidi, V.; Kalantar, B.; Ueda, N.; Halin, A.A. Fire-Net: A Deep Learning Framework for Active Forest Fire Detection. J. Sens. 2022, 2022, 8044390.

38. Wang, Z.; Yang, P.; Liang, H.; Zheng, C.; Yin, J.; Tian, Y.; Cui, W. Semantic Segmentation and Analysis on Sensitive Parameters of Forest Fire Smoke Using Smoke-Unet and Landsat-8 Imagery. Remote Sens. 2022, 14, 45.

39. Ba, R.; Chen, C.; Yuan, J.; Song, W.; Lo, S. SmokeNet: Satellite Smoke Scene Detection Using Convolutional Neural Network with Spatial and Channel-Wise Attention. Remote Sens. 2019, 11, 1702.

40. Seydi, S.T.; Hasanlou, M.; Chanussot, J. DSMNN-Net: A Deep Siamese Morphological Neural Network Model for Burned Area Mapping Using Multispectral Sentinel-2 and Hyperspectral PRISMA Images. Remote Sens. 2021, 13, 5138.

41. Abid, N.; Malik, M.I.; Shahzad, M.; Shafait, F.; Ali, H.; Ghaffar, M.M.; Weis, C.; Wehn, N.; Liwicki, M. Burnt Forest Estimation from Sentinel-2 Imagery of Australia using Unsupervised Deep Learning. In 2021 Digital Image Computing: Techniques and Applications (DICTA); IEEE: Piscataway, NJ, USA, 2021; pp. 1–8.

42. Hong, Z.; Tang, Z.; Pan, H.; Zhang, Y.; Zheng, Z.; Zhou, R.; Ma, Z.; Zhang, Y.; Han, Y.; Wang, J.; et al. Active Fire Detection Using a Novel Convolutional Neural Network Based on Himawari-8 Satellite Images. Front. Environ. Sci. 2022, 10, 102.

43. Zhang, Q.; Ge, L.; Zhang, R.; Metternicht, G.I.; Du, Z.; Kuang, J.; Xu, M. Deep-learning-based burned area mapping using the synergy of Sentinel-1&2 data. Remote Sens. Environ. 2021, 264, 112575.

44. Belenguer-Plomer, M.A.; Tanase, M.A.; Chuvieco, E.; Bovolo, F. CNN-based burned area mapping using radar and optical data. Remote Sens. Environ. 2021, 260, 112468.

45. Oktay, O.; Schlemper, J.; Le Folgoc, L.; Lee, M.; Heinrich, M.; Misawa, K.; Mori, K.; McDonagh, S.; Hammerla, N.Y.; Kainz, B.; et al. Attention U-Net: Learning Where to Look for the Pancreas. In Proceedings of the 1st Conference on Medical Imaging with Deep Learning (MIDL 2018), Amsterdam, The Netherlands, 4–6 July 2018.

46. Monaco, S.; Greco, S.; Farasin, A.; Colomba, L.; Apiletti, D.; Garza, P.; Cerquitelli, T.; Baralis, E. Attention to Fires: Multi-Channel Deep Learning Models for Wildfire Severity Prediction. Appl. Sci. 2021, 11, 11060.

47. Tovar, P.; Adarme, M.O.; Feitosa, R.Q. Deforestation Detection in the Amazon Rainforest with Spatial and Channel Attention Mechanisms. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2021, XLIII-B3-2021, 851–858.

48. Yang, C.; Guo, X.; Wang, T.; Yang, Y.; Ji, N.; Li, D.; Lv, H.; Ma, T. Automatic Brain Tumor Segmentation Method Based on Modified Convolutional Neural Network. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2019, 2019, 998–1001.

49. Zhang, J.; Jiang, Z.; Dong, J.; Hou, Y.; Liu, B. Attention Gate ResU-Net for Automatic MRI Brain Tumor Segmentation. IEEE Access 2020, 8, 58533–58545.

50. Maji, D.; Sigedar, P.; Singh, M. Attention Res-UNet with Guided Decoder for semantic segmentation of brain tumors. Biomed. Signal Process. Control. 2022, 71, 103077.

51. Cha, J.; Jeong, J. Improved U-Net with Residual Attention Block for Mixed-Defect Wafer Maps. Appl. Sci. 2022, 12, 2209.

52. Li, C.; Liu, Y.; Yin, H.; Li, Y.; Guo, Q.; Zhang, L.; Du, P. Attention Residual U-Net for Building Segmentation in Aerial Images. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium (IGARSS 2021), Brussels, Belgium, 11–16 July 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 4047–4050, ISBN 978-1-6654-0369-6.

53. Men, G.; He, G.; Wang, G. Concatenated Residual Attention UNet for Semantic Segmentation of Urban Green Space. Forests 2021, 12, 1441.

54. Maquia, I.; Catarino, S.; Pena, A.R.; Brito, D.R.A.; Ribeiro, N.S.; Romeiras, M.M.; Ribeiro-Barros, A.I. Diversification of African Tree Legumes in Miombo-Mopane Woodlands. Plants 2019, 8, 182.

55. Beck, H.E.; Zimmermann, N.E.; McVicar, T.R.; Vergopolan, N.; Berg, A.; Wood, E.F. Present and future Köppen-Geiger climate classification maps at 1-km resolution. Sci. Data 2018, 5, 180214.

56. Republic of Mozambique, Ministry for the Coordination of Environmental Affairs. The 4th National Report on Implementation of the Convention on Biological Diversity in Mozambique; Republic of Mozambique, Ministry for the Coordination of Environmental Affairs: Maputo, Mozambique, 2009; Available online: https://www.cbd.int/doc/world/mz/mz-nr-04-en.pdf (accessed on 14 September 2021).

57. FAO. Global Forest Resources Assessment 2015: How Are the World’s Forests Changing? 2nd ed.; Food & Agriculture Organization of the United Nations: Rome, Italy, 2017; ISBN 978-92-5-109283-5.

58. Chidumayo, E.; Gumbo, D. The Dry Forests and Woodlands of Africa: Managing for Products and Services, 1st ed.; Earthscan: London, UK; Washington, DC, USA, 2010; ISBN 978-1-84971-131-9.

59. Manyanda, B.J.; Nzunda, E.F.; Mugasha, W.A.; Malimbwi, R.E. Effects of drivers and their variations on the number of stems and aboveground carbon removals in miombo woodlands of mainland Tanzania. Carbon Balance Manag. 2021, 16, 16.

60. Key, C.; Benson, N. Measuring and remote sensing of burn severity. In Proceedings Joint Fire Science Conference and Workshop; University of Idaho and International Association of Wildland Fire: Moscow, ID, USA, 1999; Volume 2, p. 284.

61. Lutes, D.C.; Keane, R.E.; Caratti, J.F.; Key, C.H.; Benson, N.C.; Sutherland, S.; Gangi, L.J. FIREMON: Fire Effects Monitoring and Inventory System; General Technical Report RMRS-GTR-164-CD; USDA: Washington, DC, USA, 2006; Volume 164.

62. Chuvieco, E.; Martín, M.P.; Palacios, A. Assessment of different spectral indices in the red-near-infrared spectral domain for burned land discrimination. Int. J. Remote Sens. 2002, 23, 5103–5110.

63. Trigg, S.; Flasse, S. An evaluation of different bi-spectral spaces for discriminating burned shrub-savannah. Int. J. Remote Sens. 2001, 22, 2641–2647.

64. Welikhe, P.; Quansah, J.E.; Fall, S.; McElhenney, W. Estimation of Soil Moisture Percentage Using LANDSAT-based Moisture Stress Index. J. Remote Sens. GIS 2017, 6, 1–5.

65. Smith, A.M.; Wooster, M.J.; Drake, N.A.; Dipotso, F.M.; Falkowski, M.J.; Hudak, A.T. Testing the potential of multi-spectral remote sensing for retrospectively estimating fire severity in African Savannahs. Remote Sens. Environ. 2005, 97, 92–115.

66. Karnieli, A.; Kaufman, Y.J.; Remer, L.; Wald, A. AFRI—aerosol free vegetation index. Remote Sens. Environ. 2001, 77, 10–21.

67. Kaufman, Y.J.; Tanre, D. Atmospherically resistant vegetation index (ARVI) for EOS-MODIS. IEEE Trans. Geosci. Remote Sens. 1992, 30, 261–270.

68. Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150.

69. Jiang, Z.; Huete, A.R.; Didan, K.; Miura, T. Development of a two-band enhanced vegetation index without a blue band. Remote Sens. Environ. 2008, 112, 3833–3845.

70. Clevers, J. Application of the WDVI in estimating LAI at the generative stage of barley. ISPRS J. Photogramm. Remote Sens. 1991, 46, 37–47.

71. Clevers, J. The derivation of a simplified reflectance model for the estimation of leaf area index. Remote Sens. Environ. 1988, 25, 53–69.

72. Pinty, B.; Verstraete, M.M. GEMI: A non-linear index to monitor global vegetation from satellites. Vegetatio 1992, 101, 15–20.

73. Delegido, J.; Verrelst, J.; Alonso, L.; Moreno, J. Evaluation of Sentinel-2 red-edge bands for empirical estimation of green LAI and chlorophyll content. Sensors 2011, 11, 7063–7081.

74. Blackburn, G.A. Quantifying chlorophylls and caroteniods at leaf and canopy scales. Remote Sens. Environ. 1998, 66, 273–285.

75. Qi, J.; Chehbouni, A.; Huete, A.R.; Kerr, Y.H.; Sorooshian, S. A modified soil adjusted vegetation index. Remote Sens. Environ. 1994, 48, 119–126.

76. Qi, J.; Kerr, Y.; Chehbouni, A. External factor consideration in vegetation index development. In Proceedings of the 6th International Symposium on Physical Measurements and Signatures in Remote Sensing, Val D’Isere, France, 17–22 January 1994; pp. 723–730. Available online: https://ntrs.nasa.gov/citations/19950010656 (accessed on 11 February 2022).

77. Gitelson, A.A. Remote estimation of canopy chlorophyll content in crops. Geophys. Res. Lett. 2005, 32, 1–4.

78. Gao, B. NDWI—A normalized difference water index for remote sensing of vegetation liquid water from space. Remote Sens. Environ. 1996, 58, 257–266.

79. Xu, H. Modification of normalised difference water index (NDWI) to enhance open water features in remotely sensed imagery. Int. J. Remote Sens. 2006, 27, 3025–3033.

80. Lacaux, J.P.; Tourre, Y.M.; Vignolles, C.; Ndione, J.A.; Lafaye, M. Classification of ponds from high-spatial resolution remote sensing: Application to Rift Valley Fever epidemics in Senegal. Remote Sens. Environ. 2007, 106, 66–74.

81. Friedman, J.H. Stochastic gradient boosting. Comput. Stat. Data Anal. 2002, 38, 367–378.

82. Friedman, J.H. Greedy function approximation: A gradient boosting machine. Ann. Statist. 2001, 29, 1189–1232.

83. Kneusel, R.T. Practical Deep Learning: A Python-Based Introduction, 1st ed.; No Starch Press Inc.: San Francisco, CA, USA, 2021; ISBN 978-1-7185-0075-4.

84. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015; Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F., Eds.; Springer International Publishing: Cham, Switzerland, 2015; pp. 234–241. ISBN 978-3-319-24573-7.

85. Bahdanau, D.; Cho, K.; Bengio, Y. Neural Machine Translation by Jointly Learning to Align and Translate. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, 7–9 May 2015; Available online: https://dblp.org/db/conf/iclr/iclr2015.html (accessed on 11 May 2022).

86. Chollet, F. Deep Learning with Python; Manning Publications: New York, NY, USA, 2017; ISBN 1617294438.

87. TensorFlow. Introduction to the Keras Tuner. Available online: https://www.tensorflow.org/tutorials/keras/keras_tuner (accessed on 7 October 2022).

88. Ngadze, F.; Mpakairi, K.S.; Kavhu, B.; Ndaimani, H.; Maremba, M.S. Exploring the utility of Sentinel-2 MSI and Landsat 8 OLI in burned area mapping for a heterogenous savannah landscape. PLoS ONE 2020, 15, e0232962.

89. Seydi, S.T.; Hasanlou, M.; Chanussot, J. Burnt-Net: Wildfire burned area mapping with single post-fire Sentinel-2 data and deep learning morphological neural network. Ecol. Indic. 2022, 140, 108999.

90. Pinto, M.M.; Trigo, R.M.; Trigo, I.F.; DaCamara, C.C. A Practical Method for High-Resolution Burned Area Monitoring Using Sentinel-2 and VIIRS. Remote Sens. 2021, 13, 1608.

91. Zhang, Q.; Ge, L.; Zhang, R.; Metternicht, G.I.; Liu, C.; Du, Z. Towards a Deep-Learning-Based Framework of Sentinel-2 Imagery for Automated Active Fire Detection. Remote Sens. 2021, 13, 4790.

92. Zhang, Y.; Ling, F.; Wang, X.; Foody, G.M.; Boyd, D.S.; Li, X.; Du, Y.; Atkinson, P.M. Tracking small-scale tropical forest disturbances: Fusing the Landsat and Sentinel-2 data record. Remote Sens. Environ. 2021, 261, 112470.

93. Masolele, R.N.; de Sy, V.; Marcos, D.; Verbesselt, J.; Gieseke, F.; Mulatu, K.A.; Moges, Y.; Sebrala, H.; Martius, C.; Herold, M. Using high-resolution imagery and deep learning to classify land-use following deforestation: A case study in Ethiopia. GIScience Remote Sens. 2022, 59, 1446–1472.

94. Yu, T.; Wu, W.; Gong, C.; Li, X. Residual Multi-Attention Classification Network for A Forest Dominated Tropical Landscape Using High-Resolution Remote Sensing Imagery. IJGI 2021, 10, 22.

95. John, D.; Zhang, C. An attention-based U-Net for detecting deforestation within satellite sensor imagery. Int. J. Appl. Earth Obs. Geoinf. 2022, 107, 102685.

96. Farasin, A.; Colomba, L.; Palomba, G.; Nini, G. Supervised Burned Areas Delineation by Means of Sentinel-2 Imagery and Convolutional Neural Networks. In CoRe Paper—Using Artificial Intelligence to Exploit Satellite Data in Risk and Crisis Management, Proceedings of the ISCRAM 2020 Conference Proceedings—17th International Conference on Information Systems for Crisis Response and Management, Blacksburg, VA, USA, 24–27 May 2020; Hughes, A.L., McNeill, F., Zobel, C.W., Eds.; Virginia Tech: Blacksburg, VA, USA, 2020; pp. 1060–1071. ISBN 2411-3482.

97. Ramo, R.; Roteta, E.; Bistinas, I.; van Wees, D.; Bastarrika, A.; Chuvieco, E.; van der Werf, G.R. African burned area and fire carbon emissions are strongly impacted by small fires undetected by coarse resolution satellite data. Proc. Natl. Acad. Sci. USA 2021, 118, e2011160118.

98. Zhang, P.; Ban, Y.; Nascetti, A. Learning U-Net without forgetting for near real-time wildfire monitoring by the fusion of SAR and optical time series. Remote Sens. Environ. 2021, 261, 112467.

99. Li, H.; Wang, L.; Cheng, S. HARNU-Net: Hierarchical Attention Residual Nested U-Net for Change Detection in Remote Sensing Images. Sensors 2022, 22, 4626.

| Type | Index | Description * and Equation | Reference |
|---|---|---|---|
| Burn Indices | Normalized Burn Ratio (NBR) | NBR highlights burned areas in large fire zones (>500 acres) while mitigating illumination and atmospheric effects. NBR = (B8A − B12)/(B8A + B12) | [60] |
| | Normalized Burn Ratio 2 (NBR2) | NBR2 modifies the NBR to highlight water sensitivity in vegetation and may be useful in post-fire recovery studies. NBR2 = (B11 − B12)/(B11 + B12) | [61] |
| | Burned Area Index (BAI) | Highlights burned land by emphasizing the charcoal signal in post-fire images; brighter pixels indicate burned areas. BAI = 1/((0.1 − B4)² + (0.06 − B8A)²) | [62] |
| | Burned Area Index for Sentinel-2 (BAIS2) | BAIS2 is applied to detect both burned areas and active fires. BAIS2 = (1 − √((B6 × B7 × B8A)/B4)) × ((B12 − B8A)/√(B12 + B8A) + 1) | [27] |
| | Mid-Infrared Burn Index (MIRBI) | MIRBI is calculated from the two SWIR reflectance bands (B11 and B12). It is sensitive to fire-induced spectral changes while remaining relatively robust to noise. MIRBI = 10 × B12 − 9.8 × B11 + 2 | [63] |
| | Moisture Stress Index (MSI) | MSI is used for canopy stress analysis, productivity prediction, and biophysical modeling. MSI = B11/B8 | [64] |
| | Char Soil Index 2 (CSI2) | In savanna and other fires, two ash endmembers occur: white mineral ash, where the fuel has undergone complete combustion, and darker black ash or char, where an unburned fuel component remains. CSI = B8A/B12 | [65] |
| Vegetation Indices | Aerosol-Free Vegetation Index (AFRI) | AFRI 1600 nm (AFRI1) and AFRI 2100 nm (AFRI2). The advantage of the AFRIs is that their SWIR bands penetrate the atmospheric column even when aerosols such as smoke or sulfates are present. AFRI1 = (B8A − 0.66 × B11)/(B8A + 0.66 × B11); AFRI2 = (B8A − 0.5 × B12)/(B8A + 0.5 × B12) | [66] |
| | Atmospherically Resistant Vegetation Index (ARVI) | ARVI uses information on atmospheric opacity and is, on average, four times less sensitive to atmospheric effects than the NDVI. ARVI = (B8 − (B4 − γ(B2 − B4)))/(B8 + (B4 − γ(B2 − B4))), where γ is a weighting factor for the blue–red reflectance difference that depends on aerosol type (γ = 1 in this study). | [67] |
| | Normalized Difference Vegetation Index (NDVI) | Measures photosynthetic activity and is strongly correlated with the density and vitality of vegetation on the Earth’s surface. NDVI = (B8A − B4)/(B8A + B4) | [68] |
| | Two-band Enhanced Vegetation Index (EVI2) | Like the NDVI, EVI2 measures vegetation cover, but it is less prone to saturation at high biomass. EVI2 = 2.5 × (B8 − B4)/(B8 + 2.4 × B4 + 1) | [69] |
| | Weighted Difference Vegetation Index (WDVI) | Corrects near-infrared reflectance for the soil background. WDVI = B8 − g × B4, where g is the slope of the soil line. | [70,71] |
| | Global Environmental Monitoring Index (GEMI) | Developed to minimize contamination of the vegetation signal by extraneous factors; it is well suited to remote sensing of dark surfaces such as recently burned areas. GEMI = γ(1 − 0.25 × γ) − (B4 − 0.125)/(1 − B4), where, for Sentinel-2, γ = (2(B8² − B4²) + 1.5 × B8 + 0.5 × B4)/(B8 + B4 + 0.5). | [72] |
| | Normalized Difference Index 4 and 5 (NDI45) | This index is linear and saturates less at high values than the NDVI. Because it uses a red-edge band, it correlates well with green LAI. NDI45 = (B5 − B4)/(B5 + B4) | [73] |
| | Pigment-Specific Simple Ratio (PSSRa) | PSSRa is sensitive to high concentrations of chlorophyll a; it was developed while investigating spectral approaches for quantifying pigments at the scale of the whole plant canopy. PSSRa = B8A/B4 | [74] |
| Soil Indices | Modified Soil-Adjusted Vegetation Index (MSAVI) | Determines the density of greenness while reducing the influence of the soil background, using an adjustment based on the product of NDVI and WDVI. MSAVI = (2 × B8 + 1 − √((2 × B8 + 1)² − 8(B8 − B5)))/2 | [75] |
| | Second Modified Soil-Adjusted Vegetation Index (MSAVI2) | MSAVI2 suits areas that are not completely covered by vegetation and have an exposed soil surface, although it is quite sensitive to atmospheric conditions. MSAVI2 = (2 × B8A + 1 − √((2 × B8A + 1)² − 8(B8A − B4)))/2 | [76] |
| | Red-Edge Chlorophyll Index (CIRed Edge/CIRE) | CIRE was developed to estimate leaf chlorophyll content, a good indicator of a plant’s production potential. CIRE = B7/B5 − 1 | [77] |
| Water Indices | Normalized Difference Water Index 2 (NDWI2) | This index was developed to detect surface water in wetland environments and to measure surface water extent. NDWI2 = (B8 − B12)/(B8 + B12) | [78] |
| | Modified Normalized Difference Water Index (MNDWI) | Enhances open-water features while efficiently suppressing, or even removing, noise from built-up land, vegetation, and soil. MNDWI = (B3 − B11)/(B3 + B11) | [79] |
| | Normalized Difference Pond Index (NDPI) | The NDPI makes it possible not only to distinguish small ponds and water bodies (<0.01 ha) but also to differentiate vegetation inside ponds from that in their surroundings. NDPI = (B11 − B3)/(B11 + B3) | [80] |
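The band formulas in the table reduce to per-pixel arithmetic. The Python sketch below implements a subset of them (NBR, NBR2, BAI, BAIS2, MIRBI, MNDWI) plus a helper that stacks the five variables of the largest dataset in the accuracy table (NBR2, BAIS2, MIRBI, MNDWI, B11). It assumes surface reflectance scaled to 0–1 and a hypothetical band dictionary; the function names and the sample pixel are illustrative, not the authors' code, and a real pipeline would work on whole rasters and guard against zero denominators.

```python
import math
from typing import Dict, List

# Hypothetical per-pixel input: Sentinel-2 surface reflectance keyed by band name,
# assumed to be scaled to 0-1 (e.g., Level-2A digital numbers divided by 10000).
Bands = Dict[str, float]


def nbr(b: Bands) -> float:
    """Normalized Burn Ratio: (B8A - B12) / (B8A + B12)."""
    return (b["B8A"] - b["B12"]) / (b["B8A"] + b["B12"])


def nbr2(b: Bands) -> float:
    """Normalized Burn Ratio 2: (B11 - B12) / (B11 + B12)."""
    return (b["B11"] - b["B12"]) / (b["B11"] + b["B12"])


def bai(b: Bands) -> float:
    """Burned Area Index: 1 / ((0.1 - B4)^2 + (0.06 - B8A)^2)."""
    return 1.0 / ((0.1 - b["B4"]) ** 2 + (0.06 - b["B8A"]) ** 2)


def bais2(b: Bands) -> float:
    """BAIS2: (1 - sqrt(B6*B7*B8A / B4)) * ((B12 - B8A) / sqrt(B12 + B8A) + 1)."""
    red_edge_term = 1.0 - math.sqrt(b["B6"] * b["B7"] * b["B8A"] / b["B4"])
    swir_term = (b["B12"] - b["B8A"]) / math.sqrt(b["B12"] + b["B8A"]) + 1.0
    return red_edge_term * swir_term


def mirbi(b: Bands) -> float:
    """Mid-Infrared Burn Index: 10 * B12 - 9.8 * B11 + 2."""
    return 10.0 * b["B12"] - 9.8 * b["B11"] + 2.0


def mndwi(b: Bands) -> float:
    """Modified Normalized Difference Water Index: (B3 - B11) / (B3 + B11)."""
    return (b["B3"] - b["B11"]) / (b["B3"] + b["B11"])


def top_five_features(b: Bands) -> List[float]:
    """Channels of the five-variable dataset: NBR2, BAIS2, MIRBI, MNDWI, raw B11."""
    return [nbr2(b), bais2(b), mirbi(b), mndwi(b), b["B11"]]


# Illustrative reflectance values only (not data from the study).
pixel = {"B3": 0.05, "B4": 0.08, "B6": 0.12, "B7": 0.14,
         "B8A": 0.15, "B11": 0.25, "B12": 0.20}
print(top_five_features(pixel))
```

Because the functions use only arithmetic operators and `math.sqrt`, the same expressions would apply element-wise to NumPy arrays if `math.sqrt` were swapped for `numpy.sqrt`.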

| Dataset | UNet IoU | UNet AUC | UNet OA | AUNet IoU | AUNet AUC | AUNet OA | RAUNet IoU | RAUNet AUC | RAUNet OA |
|---|---|---|---|---|---|---|---|---|---|
| NBR2, BAIS2, MIRBI | 0.8809 | 0.8868 | 0.9758 | 0.8703 | 0.8726 | 0.9710 | 0.8562 | 0.8609 | 0.9659 |
| NBR2, BAIS2, MIRBI, MNDWI | 0.8946 | 0.8906 | 0.9793 | 0.8730 | 0.8805 | 0.9713 | 0.9117 | 0.8976 | 0.9830 |
| NBR2, BAIS2, MIRBI, MNDWI, B11 | 0.8915 | 0.8976 | 0.9779 | 0.8974 | 0.9005 | 0.9798 | 0.9238 | 0.9088 | 0.9853 |

IoU = intersection over union; AUC = area under the curve; OA = overall accuracy.
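IoU and OA can both be derived from pixel confusion counts. The sketch below assumes flattened binary masks (1 = burned, 0 = unburned) and computes IoU for the burned class only; the table does not state whether its IoU is class-specific or averaged over the burned and unburned classes, so this is one plausible definition rather than the authors' exact metric. The function names are hypothetical, and AUC is omitted because it needs continuous per-pixel scores rather than hard labels.

```python
from typing import Sequence, Tuple


def confusion_counts(truth: Sequence[int], pred: Sequence[int]) -> Tuple[int, int, int, int]:
    """Return (TP, FP, FN, TN) for flattened binary masks (1 = burned, 0 = unburned)."""
    if len(truth) != len(pred):
        raise ValueError("masks must contain the same number of pixels")
    tp = sum(1 for t, p in zip(truth, pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(truth, pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(truth, pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(truth, pred) if t == 0 and p == 0)
    return tp, fp, fn, tn


def burned_iou(truth: Sequence[int], pred: Sequence[int]) -> float:
    """Intersection over union of the burned class: TP / (TP + FP + FN)."""
    tp, fp, fn, _ = confusion_counts(truth, pred)
    union = tp + fp + fn
    # Convention (assumption): an empty union means both masks agree there is no burn.
    return tp / union if union else 1.0


def overall_accuracy(truth: Sequence[int], pred: Sequence[int]) -> float:
    """Share of all pixels, burned or not, that are labelled correctly."""
    tp, fp, fn, tn = confusion_counts(truth, pred)
    return (tp + tn) / (tp + fp + fn + tn)


# Toy 8-pixel example: 3 burned reference pixels on a mostly unburned background.
truth = [1, 1, 1, 0, 0, 0, 0, 0]
pred = [1, 1, 0, 1, 0, 0, 0, 0]
print(burned_iou(truth, pred), overall_accuracy(truth, pred))  # prints: 0.5 0.75
```

The toy example hints at why OA exceeds IoU in every row of the table: correctly rejected background pixels (TN) raise OA but never enter the IoU, so OA is likely to look more favourable whenever unburned pixels dominate a scene.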


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Shirvani, Z.; Abdi, O.; Goodman, R.C. High-Resolution Semantic Segmentation of Woodland Fires Using Residual Attention UNet and Time Series of Sentinel-2. Remote Sens. 2023, 15, 1342. https://doi.org/10.3390/rs15051342


Chicago/Turabian style: Shirvani, Zeinab, Omid Abdi, and Rosa C. Goodman. 2023. "High-Resolution Semantic Segmentation of Woodland Fires Using Residual Attention UNet and Time Series of Sentinel-2" Remote Sensing 15, no. 5: 1342. https://doi.org/10.3390/rs15051342
