1. Introduction

Change detection (CD) is the process of identifying changes from remote sensing images acquired at different times over the same geographic area. SAR imagery has been used for change detection less often than optical imagery, but it has long attracted researchers because SAR acquisition is independent of atmospheric and sunlight conditions. In recent decades, SAR change detection has received widespread attention in applications including urban studies [1], environmental monitoring [2], disaster assessment [3], crop management [4], and land cover monitoring [5]. However, the inherent multiplicative speckle noise has always restricted the analysis and application of SAR images in change detection tasks [6].

Change detection (CD) can be categorized from several perspectives: (1) by data source, into monomodal change detection (MNCD) and multimodal change detection (MMCD); (2) by detection strategy, into direct change detection and post-classification comparison; (3) by analysis scale, into pixel-based change detection (PBCD) and object-based change detection (OBCD); and (4) by the availability of labels, into unsupervised, semi-supervised, and supervised change detection. Despite this diversity, the procedure typically involves three steps [7]: (1) pre-processing; (2) generation of the difference image (DI); and (3) analysis of the difference image to generate the change map (CM).

The first step usually encompasses geometric correction, co-registration, and denoising; its purpose is to geographically align the bi-temporal images and to suppress noise. In the second step, the bi-temporal images are compared pixel by pixel to obtain a difference image (DI) representing the level of change. The third step classifies the DI into two groups: “changed” and “unchanged”. The DI generation and CM generation steps have received the most attention in the literature.

In SAR change detection, ratio-based methods, which suppress the effect of multiplicative speckle noise, are an important research trend. In the DI generation step, the main approaches are the ratio detector (RD) [8] and the log-ratio detector (LRD) [9]. To cope with isolated noise pixels, the mean-ratio detector (MRD) [10] incorporates neighborhood information and detects changes through the ratio of the mean values of local patches. Additionally, other advanced ratio-based detectors, such as the neighborhood-based ratio detector [11] and the region likelihood ratio detector [12], have been developed to address specific situations.
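As a rough illustration of the detectors above, the following NumPy sketch computes a ratio, a log-ratio, and a mean-ratio difference image from two co-registered images. The normalization 1 - min(r, 1/r), the small constant `eps`, the reflective border handling, and the helper names (`box_mean`, `ratio_di`, `log_ratio_di`, `mean_ratio_di`) are assumptions of this sketch rather than the exact formulations of [8,9,10].

```python
import numpy as np

def box_mean(img, radius):
    """Local mean over a (2r+1)x(2r+1) window, borders handled by reflection."""
    k = 2 * radius + 1
    padded = np.pad(img, radius, mode="reflect")
    # Summed-area table with a leading zero row/column gives O(1) window sums.
    sat = np.pad(padded.cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))
    h, w = img.shape
    s = sat[k:k + h, k:k + w] - sat[:h, k:k + w] - sat[k:k + h, :w] + sat[:h, :w]
    return s / (k * k)

def ratio_di(x1, x2, eps=1e-6):
    """Pixelwise ratio detector: 0 means no change, values near 1 mean strong change."""
    r = (x2 + eps) / (x1 + eps)
    return 1.0 - np.minimum(r, 1.0 / r)

def log_ratio_di(x1, x2, eps=1e-6):
    """Log-ratio detector: turns multiplicative speckle into an additive term."""
    return np.abs(np.log(x2 + eps) - np.log(x1 + eps))

def mean_ratio_di(x1, x2, radius=1, eps=1e-6):
    """Mean-ratio detector: compares local means instead of single pixels."""
    m1, m2 = box_mean(x1, radius), box_mean(x2, radius)
    r = (m2 + eps) / (m1 + eps)
    return 1.0 - np.minimum(r, 1.0 / r)
```

On speckled data, `mean_ratio_di` trades spatial detail for robustness to isolated noisy pixels; the `radius` parameter controls that trade-off.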

Later, the combined difference image (CDI) was proposed to enhance detection by fusing the LRD and the MRD, based on the consensus that different detectors respond to different kinds of differences. Ma et al. [13] applied wavelet transforms to the LRD and MRD images and extracted their low- and high-frequency components. The final DI was reconstructed by fusing the LL, LH, HL, and HH sub-bands under a neighborhood-based fusion rule. Zheng et al. [14] fused the subtraction DI and the log-ratio DI through empirical trial and error, so that both region consistency and edge information are retained. In [15], the authors proposed a fusion strategy based on the discrete wavelet transform (DWT), which applies weighted averaging to the low-frequency coefficients and selects the coefficients with the maximum local contrast in the high-frequency sub-bands. Experiments showed that the CDI fusing the log-ratio DI and the Gauss-log ratio DI enhances the difference intensity of changed areas. Gong et al. [16] later extended fusion techniques by constructing intensity–texture difference images (ITIDs). The ITID consists of an intensity DI (IDI) and a texture DI (TDI), generated by the log-ratio detector and Gabor filter banks, respectively. As a key innovation, the multivariate generalized Gaussian distribution (MGGD) is used to fit the joint distribution of (IDI, TDI), which serves as a prior term in the graph cut. Recently, Wang et al. [17] fused superpixel-level affinities and pixel-level heterogeneous affinities to enhance multi-scale properties for SAR change detection.
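The wavelet-domain fusion idea behind the CDI can be sketched with a one-level Haar transform written directly in NumPy. This is a simplified stand-in, not the exact rule of [13] or [15]: the low-frequency (LL) sub-bands are combined by a weighted average with an assumed weight `w`, and in each high-frequency sub-band the coefficient with the larger magnitude is kept, a simpler substitute for a local-contrast criterion. The function names are hypothetical, sub-band naming conventions differ between libraries, and image dimensions are assumed to be even.

```python
import numpy as np

def haar2d(x):
    """One-level orthonormal 2-D Haar transform (x must have even height and width)."""
    a, b = x[0::2, 0::2], x[0::2, 1::2]
    c, d = x[1::2, 0::2], x[1::2, 1::2]
    ll = (a + b + c + d) / 2   # low-frequency approximation
    lh = (a - b + c - d) / 2   # differences between neighboring columns
    hl = (a + b - c - d) / 2   # differences between neighboring rows
    hh = (a - b - c + d) / 2   # diagonal differences
    return ll, lh, hl, hh

def ihaar2d(ll, lh, hl, hh):
    """Exact inverse of haar2d."""
    out = np.empty((2 * ll.shape[0], 2 * ll.shape[1]))
    out[0::2, 0::2] = (ll + lh + hl + hh) / 2
    out[0::2, 1::2] = (ll - lh + hl - hh) / 2
    out[1::2, 0::2] = (ll + lh - hl - hh) / 2
    out[1::2, 1::2] = (ll - lh - hl + hh) / 2
    return out

def fuse_cdi(di_a, di_b, w=0.5):
    """Fuse two DIs: weighted average of LL, max-magnitude selection in LH/HL/HH."""
    ca, cb = haar2d(di_a), haar2d(di_b)
    ll = w * ca[0] + (1.0 - w) * cb[0]
    highs = [np.where(np.abs(p) >= np.abs(q), p, q) for p, q in zip(ca[1:], cb[1:])]
    return ihaar2d(ll, *highs)
```

For example, `fuse_cdi(log_ratio_image, mean_ratio_image)` keeps the smoothed background level of both inputs while retaining the sharper edge coefficients of whichever detector responds more strongly at each location.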

Despite their success in CD tasks, ratio-based methods are limited by the properties of first-order statistics and may fail to detect changes when the average intensity of the local neighborhood remains constant [10]. One possible solution is sliding-window-based statistical analysis, which considers higher-order characteristics of the neighborhood. In this mode, changes are detected by measuring, for each pixel, the difference between the local statistical distributions of the bi-temporal images. A classical approach assumes Gaussian local distributions and compares them with the Kullback–Leibler (KL) divergence [18]. Subsequently, Inglada and Mercier [10] proposed a cumulant-based series expansion of the local image statistics to replace the Gaussian assumption. Shortly afterwards, Zheng and You [19] proposed a projection-based Jeffrey detector (PJD) by replacing the KL divergence with the Jeffrey divergence. More similarity-based CD methods can be found in the work of Alberga [20]. However, since the changes themselves are not modeled, the window size is difficult to select, and an inappropriate choice leads to missed detections or false detections.
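A minimal sketch of the sliding-window Gaussian approach: local means and variances are estimated in a (2r+1)x(2r+1) window, and each pixel receives the symmetrized KL divergence between the two local Gaussian models. The symmetrization (so the result does not depend on which date comes first), the window radius, and the helper names are assumptions made for illustration.

```python
import numpy as np

def box_mean(img, radius):
    """Local mean over a (2r+1)x(2r+1) window, borders handled by reflection."""
    k = 2 * radius + 1
    padded = np.pad(img, radius, mode="reflect")
    sat = np.pad(padded.cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))
    h, w = img.shape
    s = sat[k:k + h, k:k + w] - sat[:h, k:k + w] - sat[k:k + h, :w] + sat[:h, :w]
    return s / (k * k)

def local_gaussian_stats(img, radius, eps=1e-12):
    """Per-pixel local mean and variance (variance floored at eps)."""
    mean = box_mean(img, radius)
    var = np.maximum(box_mean(img * img, radius) - mean ** 2, eps)
    return mean, var

def gaussian_kl(m1, v1, m2, v2):
    """KL(N(m1, v1) || N(m2, v2)) evaluated elementwise."""
    return 0.5 * np.log(v2 / v1) + (v1 + (m1 - m2) ** 2) / (2.0 * v2) - 0.5

def gaussian_symmetric_kl(x1, x2, radius=3):
    """Change indicator: KL in both directions between local Gaussian fits."""
    m1, v1 = local_gaussian_stats(x1, radius)
    m2, v2 = local_gaussian_stats(x2, radius)
    return gaussian_kl(m1, v1, m2, v2) + gaussian_kl(m2, v2, m1, v1)
```

For example, if one image is 1 + 0.1c and the other 1 + 0.5c, where c is a ±1 checkerboard, the 3x3 local means differ by less than 5%, yet `gaussian_symmetric_kl(..., radius=1)` exceeds 10 at every pixel. This is the higher-order sensitivity that first-order ratio detectors lack.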

In recent years, some researchers have obtained difference images through feature transformation and representation, in which the amplitudes are replaced by more abstract and reliable features. In [21], the interactions between feature points representing local amplitude attributes were defined as structure features and encoded by a graph model based on ratio similarity; changes were then indicated by the consistency between the graph models constructed on the bi-temporal SAR images. Wan et al. [22] created a sorted histogram for each pixel to implicitly express the local spatial layout, which mitigates the shortcomings of PBCD methods. In addition, Touati et al. [23] proposed a pairwise pixel-structure representation for heterogeneous CD, which models the observation field through pixel pairs within each image and is therefore invariant to the imaging modality. Recently, a parametric contraction mapping strategy based on spatial fractal decomposition was proposed in [24] to make bi-temporal images comparable in the presence of intensity heterogeneity. This mapping is based on the consensus that any satellite data can be approximately encoded by its own appropriate spatial transformation. Moreover, Sun et al. [25] proposed the NLPG method, which builds a K-nearest neighbor graph and a cross-mapping graph for each pixel; the discrepancy between these graphs reflects the change information. An extended version of NLPG, named IRG-McS [26], was later put forward based on superpixels and iteration.

The DI analysis step can be viewed as a binary partitioning problem, in which a decision threshold is usually determined to divide the DI into two categories. The Kittler–Illingworth (K&I) threshold [27], the expectation maximization (EM) threshold, and Otsu's method [28] are widely used estimation methods; the first two rely on assumptions about the class-conditional distributions, whereas Otsu's method makes no distributional assumption. Empirical threshold selection, known as “trial and error” (TAE), is also common. Clustering methods such as PCAKmeans [29], FCM, and FLICM [30,31,32] automatically aggregate pixels into “changed” and “unchanged” classes in an unsupervised manner, without modeling the statistical distribution. Meanwhile, the recently proposed HFEM threshold method outperforms Otsu and FCM when there are only a few changed areas [33].
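For reference, Otsu's criterion on a DI histogram can be sketched as follows: the threshold is the bin edge that maximizes the between-class variance of the two resulting classes, so no class-conditional model is fitted. The number of bins and the function name are assumptions of this sketch.

```python
import numpy as np

def otsu_threshold(di, bins=256):
    """Return the DI value that maximizes Otsu's between-class variance."""
    hist, edges = np.histogram(np.ravel(di), bins=bins)
    p = hist.astype(float) / hist.sum()
    centers = (edges[:-1] + edges[1:]) / 2
    w0 = np.cumsum(p)              # probability of the "unchanged" class (bins 0..k)
    mu = np.cumsum(p * centers)    # cumulative first moment up to bin k
    mu_t = mu[-1]                  # global mean
    w1 = 1.0 - w0
    valid = (w0 > 1e-12) & (w1 > 1e-12)
    between = np.zeros_like(w0)
    between[valid] = (mu_t * w0[valid] - mu[valid]) ** 2 / (w0[valid] * w1[valid])
    k = int(np.argmax(between))
    return edges[k + 1]            # pixels above this edge are labeled "changed"
```

The change map is then `di > otsu_threshold(di)`. When the histogram has no clear second mode, the between-class variance has no pronounced peak, which is the situation in which histogram-based thresholds become unreliable.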

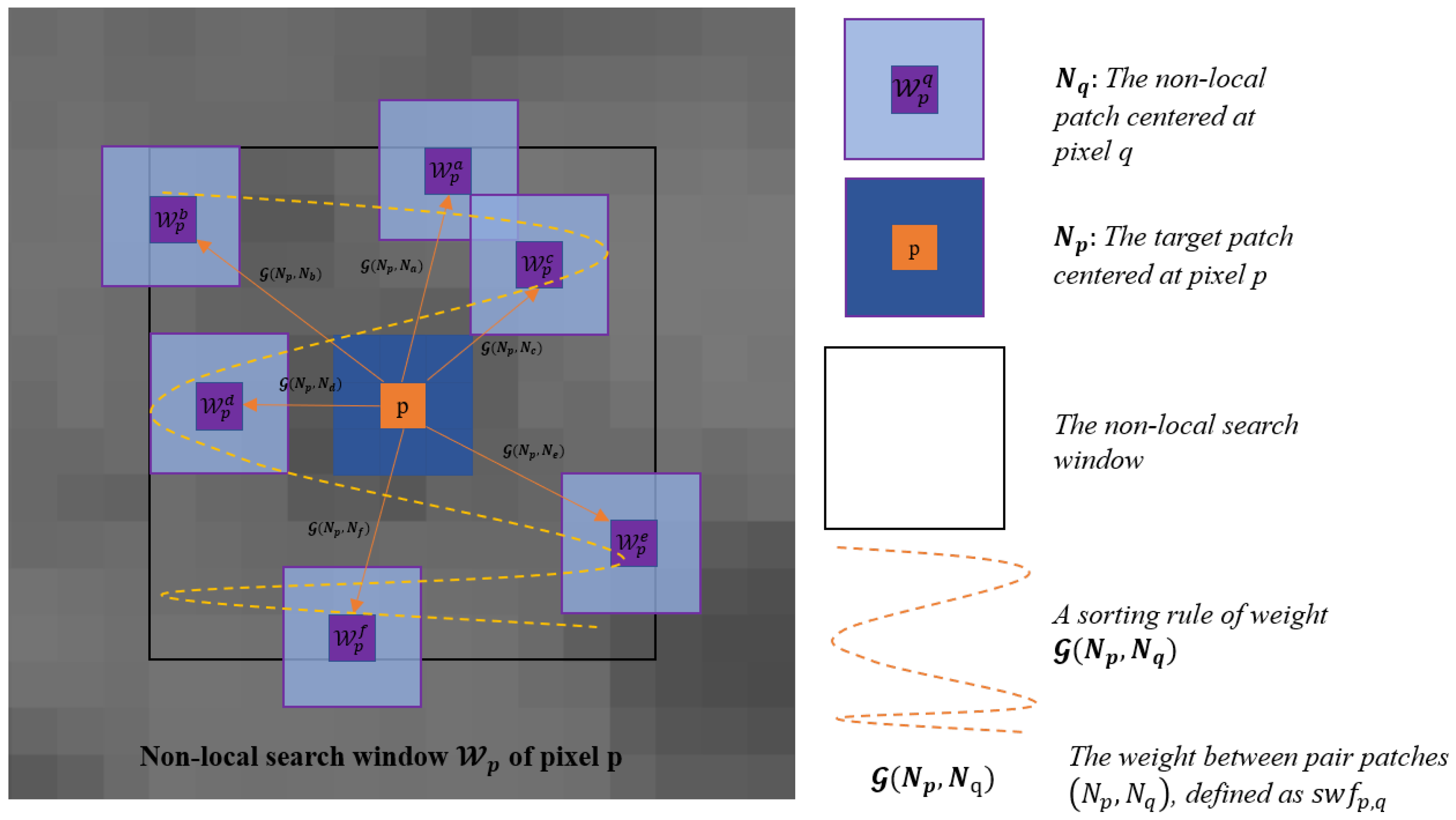

This research focuses on improving the performance of the pixel-based change detector in the presence of speckle noise. To this end, we propose an effective method based on the self-similarity of non-local structures. Even in a monomodal CD task, speckle noise can produce large amplitude differences between unchanged pixel pairs. However, unchanged areas share the same spatial structure [25]. Inspired by Sun et al. [34,35], we therefore determine whether a pixel has changed by measuring the structural consistency of the corresponding pixels in the two images. Unlike those works, we focus on SAR change detection, and the extracted structural features are dense, i.e., every patch at the non-local scale is taken into account. The spatial structure information of each pixel is represented by the proposed non-local structure weight (NLSW) feature and its sorted version (SNLSW). The structural consistency can be measured by the similarity/dissimilarity of the NLSW features at the

-norm.
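To make the NLSW idea concrete, the sketch below builds, for every pixel, a dense vector of non-local weights, one per offset in a search window, and compares the vectors of the two dates. It is hypothetical throughout: the patch dissimilarity `ratio_dissimilarity`, log(r + 1/r) - log 2, is a simple ratio-based stand-in chosen because it is zero for equal values and insensitive to a common multiplicative gain; it is not the Nakagami–Rayleigh metric derived from [36]. The exponential kernel, the bandwidth `h`, the window sizes, and the l1 distance used to compare features are likewise illustrative choices, not the paper's settings.

```python
import numpy as np

def box_mean(img, radius):
    """Local mean over a (2r+1)x(2r+1) window, borders handled by reflection."""
    k = 2 * radius + 1
    padded = np.pad(img, radius, mode="reflect")
    sat = np.pad(padded.cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))
    h, w = img.shape
    s = sat[k:k + h, k:k + w] - sat[:h, k:k + w] - sat[k:k + h, :w] + sat[:h, :w]
    return s / (k * k)

def ratio_dissimilarity(a, b, eps=1e-6):
    """Hypothetical stand-in metric: 0 when a == b, (nearly) gain-invariant."""
    r = (a + eps) / (b + eps)
    return np.log(r + 1.0 / r) - np.log(2.0)

def nlsw_features(img, search=2, patch=1, h=0.5, sort=False):
    """Dense non-local weights: one component per offset in the search window."""
    H, W = img.shape
    pad = np.pad(img, search, mode="reflect")
    comps = []
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            # shifted[i, j] = img[i + dy, j + dx] (reflected at the borders)
            shifted = pad[search + dy:search + dy + H, search + dx:search + dx + W]
            # Mean pixel dissimilarity between the patch at p and the patch at p + offset
            d = box_mean(ratio_dissimilarity(img, shifted), patch)
            comps.append(np.exp(-d / h))
    f = np.stack(comps, axis=-1)                 # shape (H, W, (2*search + 1)**2)
    return np.sort(f, axis=-1) if sort else f    # sort=True: SNLSW-style variant

def nlsw_change_indicator(x1, x2, **kw):
    """Per-pixel l1 distance between the bi-temporal weight vectors."""
    return np.abs(nlsw_features(x1, **kw) - nlsw_features(x2, **kw)).sum(axis=-1)
```

Because the per-pixel term depends only on the ratio of two amplitudes, multiplying an image by a constant gain leaves its features almost unchanged, whereas a local structural change alters many weight components at once. Passing `sort=True` compares sorted vectors, which ignores the offset each weight came from.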

Each component of the NLSW/SNLSW feature is the weight of the interaction between the center pixel and a non-local pixel, and is a function of the similarity of their neighborhood patches. The Euclidean distance used in the non-local means (NLM) algorithm is not suitable for SAR images because, under multiplicative noise, it cannot accurately measure the strength of these structural weights. To overcome this problem, we derived and approximated a similarity metric suited to the multiplicative noise model based on our previous work [36], in which a dedicated statistical distribution for SAR, namely the Nakagami–Rayleigh distribution, was assumed. The derived similarity metric was then used to build the weight components. As a result, changed pixels exhibit larger differences between their corresponding NLSW/SNLSW features than unchanged pixels.
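A short calculation under a simple multiplicative model illustrates why the Euclidean distance is problematic; it is offered as an illustration, not as the proof referred to in contribution (2). Assume two unchanged pixels share the reflectivity x and carry independent speckle with unit mean and variance σ_n²:

```latex
y_1 = x\,n_1,\quad y_2 = x\,n_2,\qquad
\mathbb{E}[n_i] = 1,\quad \operatorname{Var}[n_i] = \sigma_n^2,\quad n_1 \perp n_2

\mathbb{E}\!\left[(y_1 - y_2)^2\right]
  = x^2\,\mathbb{E}\!\left[(n_1 - n_2)^2\right]
  = x^2\left(\operatorname{Var}[n_1] + \operatorname{Var}[n_2]\right)
  = 2\,\sigma_n^2\,x^2

\mathbb{E}\!\left[\lVert \mathbf{y}_1 - \mathbf{y}_2 \rVert_2^2\right]
  = 2\,\sigma_n^2 \sum_{k \in P} x_k^2,
\qquad
\frac{y_1}{y_2} = \frac{n_1}{n_2}
```

Two structurally identical, unchanged patches P are therefore expected to lie farther apart in bright regions than in dark ones, so a weight such as exp(-d/h) with a fixed bandwidth h depends on brightness rather than on structure alone. The ratio, by contrast, does not depend on x. For single-look amplitude data with unit-mean Rayleigh speckle, σ_n² = 4/π - 1 ≈ 0.27.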

Converting the difference image (DI) into the change map (CM) can be regarded as a threshold segmentation procedure. When the change indicator exhibits a unimodal histogram, histogram-based methods have difficulty estimating the decision threshold. To avoid this difficulty, the constant false alarm rate (CFAR) method was employed, under an imposed Rayleigh distribution assumption, to automatically extract the changed areas. In addition, the Otsu threshold, the K&I threshold, and TAE were also implemented in the DI analysis step.
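A minimal sketch of the CFAR step, assuming (as stated above) that the DI values follow a Rayleigh distribution under the "unchanged" hypothesis: the scale is estimated by maximum likelihood, and the threshold is set so that the probability of exceeding it under the fitted model equals a chosen false-alarm rate `pfa`. Fitting the scale to the whole DI, the default `pfa`, and the function name are assumptions of this sketch; the paper's estimation details are not given in this section.

```python
import numpy as np

def rayleigh_cfar(di, pfa=1e-3):
    """Global CFAR threshold for a DI modeled as Rayleigh-distributed.

    Rayleigh survival function: P(Z > T) = exp(-T^2 / (2 sigma^2)).
    Setting it equal to pfa gives T = sigma * sqrt(-2 ln pfa).
    """
    di = np.asarray(di, dtype=float)
    # Maximum-likelihood scale estimate: sigma^2 = sum(z^2) / (2N).
    # Simplification: all pixels are used, so changed pixels inflate sigma slightly.
    sigma = np.sqrt(np.mean(di ** 2) / 2.0)
    t = sigma * np.sqrt(-2.0 * np.log(pfa))
    return t, di > t        # threshold and boolean change map
```

Lowering `pfa` raises the threshold and yields a more conservative change map. Because the threshold comes from a fitted tail probability rather than from a valley between two histogram modes, it stays well defined when the DI histogram is unimodal.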

The main contributions are as follows:

- (1) We propose an unsupervised framework for automated SAR change detection. In the DI generation step, we extract pixelwise non-local structure weight (NLSW) features and detect changes using spatial structure cues, which enhances the comparability of bi-temporal SAR images (BTSIs).

- (2) A mathematical proof is given to show that, under multiplicative noise, the Euclidean distance yields an uncertain patch similarity in the expectation sense. For this reason, a patch similarity metric dedicated to the proposed NLSW change detector for BTSIs was derived and approximated by considering the statistical properties of SAR.

- (3) The CFAR method under the Rayleigh distribution assumption was applied to the DI to generate the final change map, thus avoiding the unimodal histogram problem. Experiments on simulated and real bi-temporal SAR images demonstrated the effectiveness and stability of the proposed method.

The rest of this paper is organized as follows. Section 2 presents the relevant theories and the proposed framework. Section 3 presents the experimental results, the parameter analysis, and the discussion. The conclusion is provided in Section 4.