Abstract

Polarization can provide information largely uncorrelated with the spectrum and intensity. Therefore, polarimetric imaging (PI) techniques have significant advantages in many fields, e.g., ocean observation, remote sensing (RS), biomedical diagnosis, and autonomous vehicles. Recently, with the increasing amount of data and the rapid development of physical models, deep learning (DL) and related techniques have become powerful tools for solving various tasks and overcoming the limitations of traditional methods. PI and DL have been successfully combined to provide new solutions to many practical applications. This review briefly introduces the most relevant concepts and models of PI and DL. It then shows how DL has been applied to PI tasks, including image restoration, object detection, image fusion, scene classification, and resolution improvement. The review covers state-of-the-art works combining PI with DL algorithms and recommends some potential future research directions. We hope that this work will be helpful to researchers in both optical imaging and RS, and that it will stimulate more ideas in this exciting research field.

1. Introduction

Various sensing and imaging techniques have been developed to record information from the four primary physical quantities of the optical field: intensity, wavelength, phase, and polarization [1,2,3,4]. For example, traditional monochromatic sensors measure the intensity of optical radiation at a single wavelength [5,6,7,8]. Spectral sensors, such as color cameras and multispectral devices, measure intensity at multiple wavelengths simultaneously [9,10,11,12]. Holographic cameras record both the intensity and the phase of an optical field [13,14,15]. Polarization information relates to physical properties such as shape, shading, and roughness [10,11,16,17,18], and it is largely uncorrelated with other physical information (e.g., spectrum and intensity); it therefore offers advantages in various applications [4,16,19,20,21,22,23]. However, polarization cannot be directly observed visually.

Polarization information must be acquired with specially designed optical systems; the polarization states of light scattered or reflected by scenes or objects are then extracted by inverting the measured intensities/powers. As a promising technique, polarimetric imaging (PI) is attracting increasing attention in remote sensing (RS), ocean observation, biomedical imaging, and industrial monitoring [24,25,26], owing to its strong performance in mapping multi-feature information. For example, in scattering media (such as clouds, haze, fog, biological tissues, or turbid water), image quality and contrast can be enhanced with PI systems [27,28,29,30,31,32,33,34] because the backscattered light is partially polarized [35,36,37]. Because polarized light is sensitive to microscopic morphological changes in the structure of biological tissues, PI, especially Mueller-matrix PI, is widely used to distinguish healthy from pathological areas [38,39], e.g., in the skin [40], intestine [41], colon [42], rectum [43], and cervix [44].

In the field of RS, polarimetric synthetic aperture radar (PolSAR) exploits polarization diversity to improve the geometrical and geophysical characterization of observed targets [45,46]. Compared with standard synthetic aperture radars (SARs), PolSAR acquires richer physical information and enables more effective recognition of features and physical properties [46,47]. It therefore has various applications in agriculture, oceanography, forestry, disaster monitoring, and the military [43,48,49,50,51,52,53,54,55]. In particular, its capacity for natural disaster reporting and response matters greatly for human livelihoods and man-made infrastructure. For example, the Advanced Land Observing Satellite-2 (ALOS-2), which carries the Phased Array type L-band Synthetic Aperture Radar-2 (PALSAR-2), has conducted more than 400 emergency observations to identify damage caused by natural disasters, including earthquakes, floods, heavy rains, and landslides [56,57].

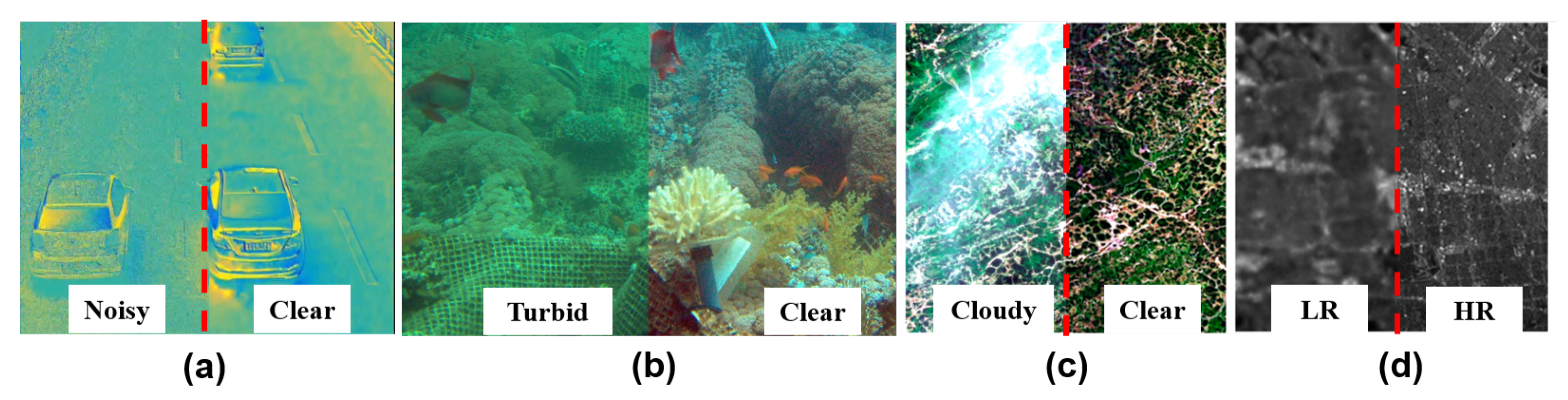

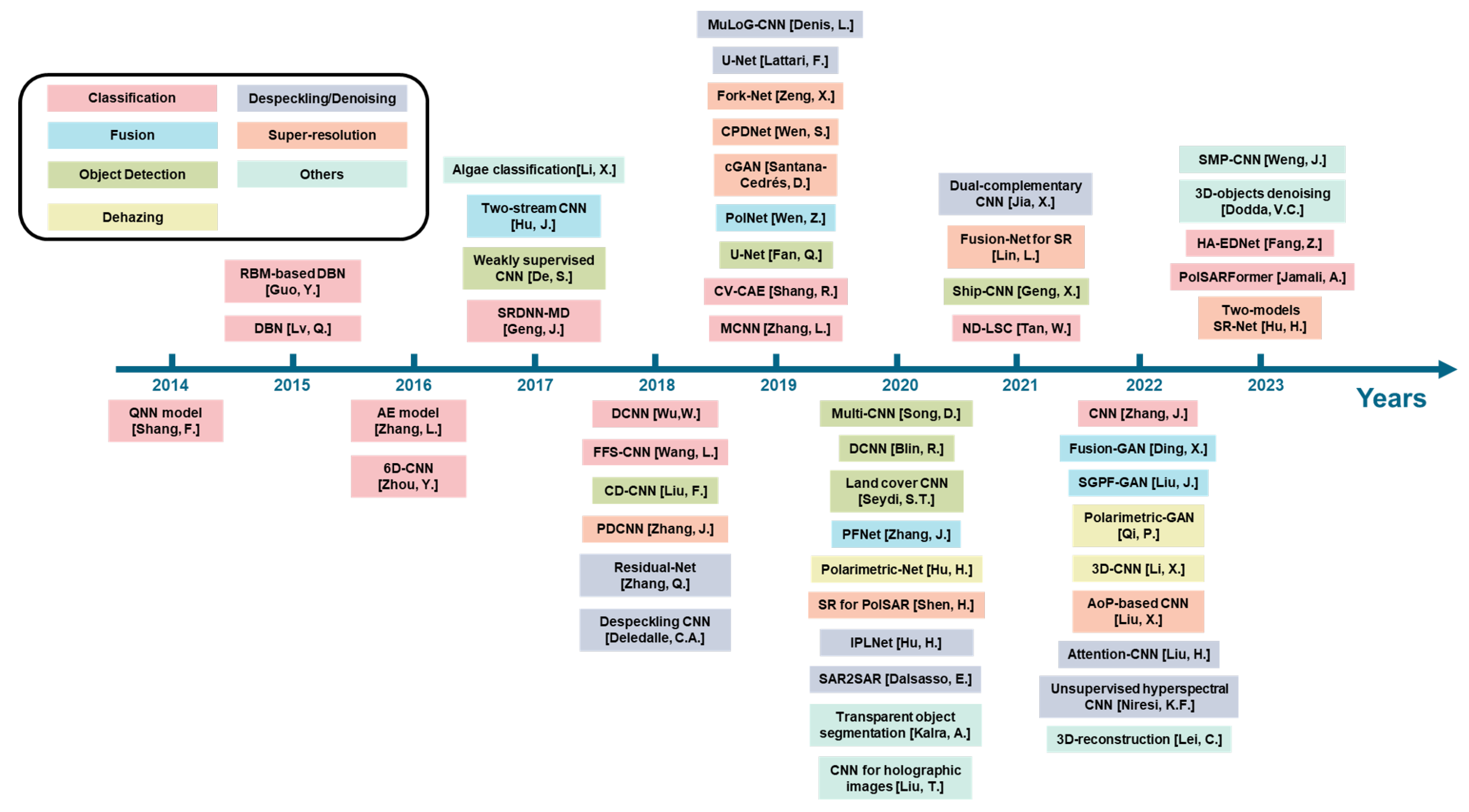

However, owing to the limitations of optical systems and the particularities of application scenarios, PI can suffer from degraded image quality caused by haze or clouds [6,58,59], complex noise sources [60,61,62,63,64], and reduced resolution or contrast [30,65,66,67,68], as shown in Figure 1. This can significantly limit the effectiveness of PI, especially in complex conditions and environments [43,69,70].

Figure 1.

Scenes (a) with/without noise; (b) with/without turbidity; (c) with/without clouds; (d) with low resolution (LR) or high resolution (HR) [67].

For example, in autonomous driving tasks, adding polarization analysis to the optical imaging (OI) system can compensate for the drawbacks of conventional intensity-based methods [71,72]. However, the essential polarization parameters are derived nonlinearly from polarized intensities and are therefore quite sensitive to noise [73,74,75,76,77]. This can be seen in Figure 1a, which shows an angle of polarization (AoP) image acquired in a low-light condition. In ocean observation, underwater images (as shown in Figure 1b) may suffer from reduced contrast and color distortion [78]. In RS, especially for PolSAR data, speckle complicates interpretation and reduces the precision of parameter inversion [69,79]. In scattering media or under particular atmospheric conditions, images can be blurred and their quality significantly reduced by scattering and absorption from micro-particles [80,81], as with the clouds in the RS image shown in Figure 1c. In PolSAR imaging, a wide swath can be achieved only at the expense of degraded resolution [9,82]. Since wide swath coverage and high resolution are both important, this poses challenges for both system design and algorithm optimization. Figure 1d shows an RS image example at low and high resolution.

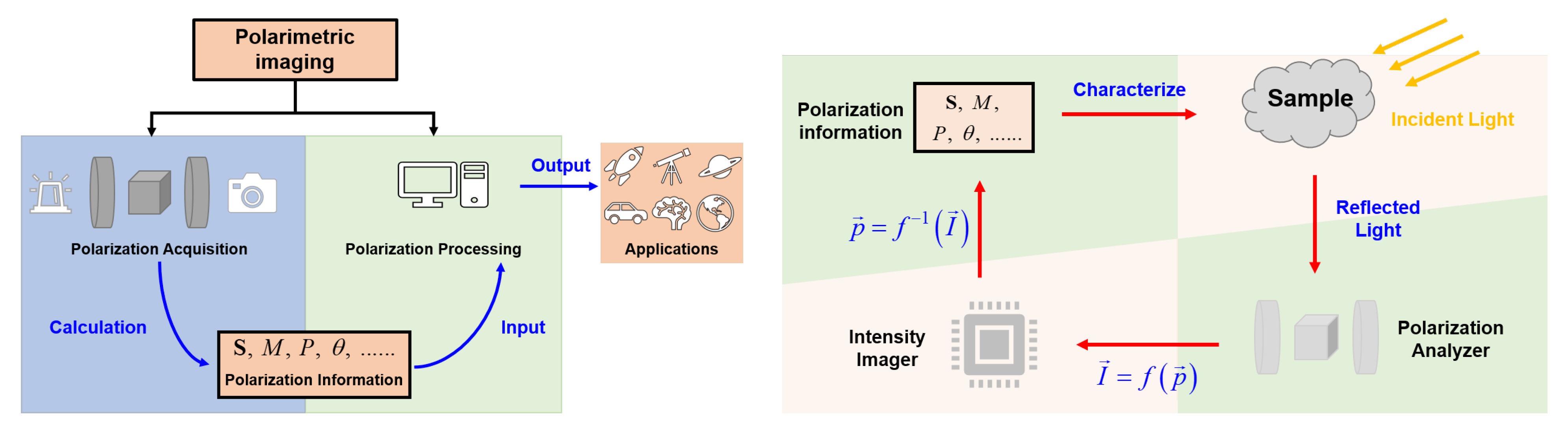

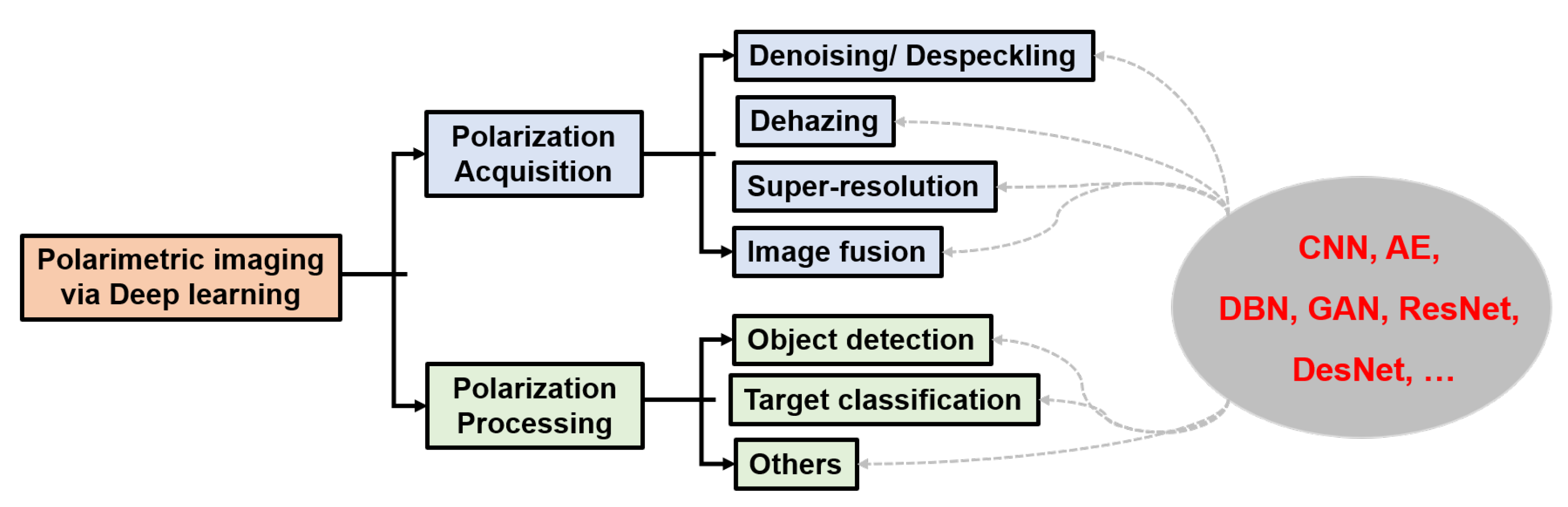

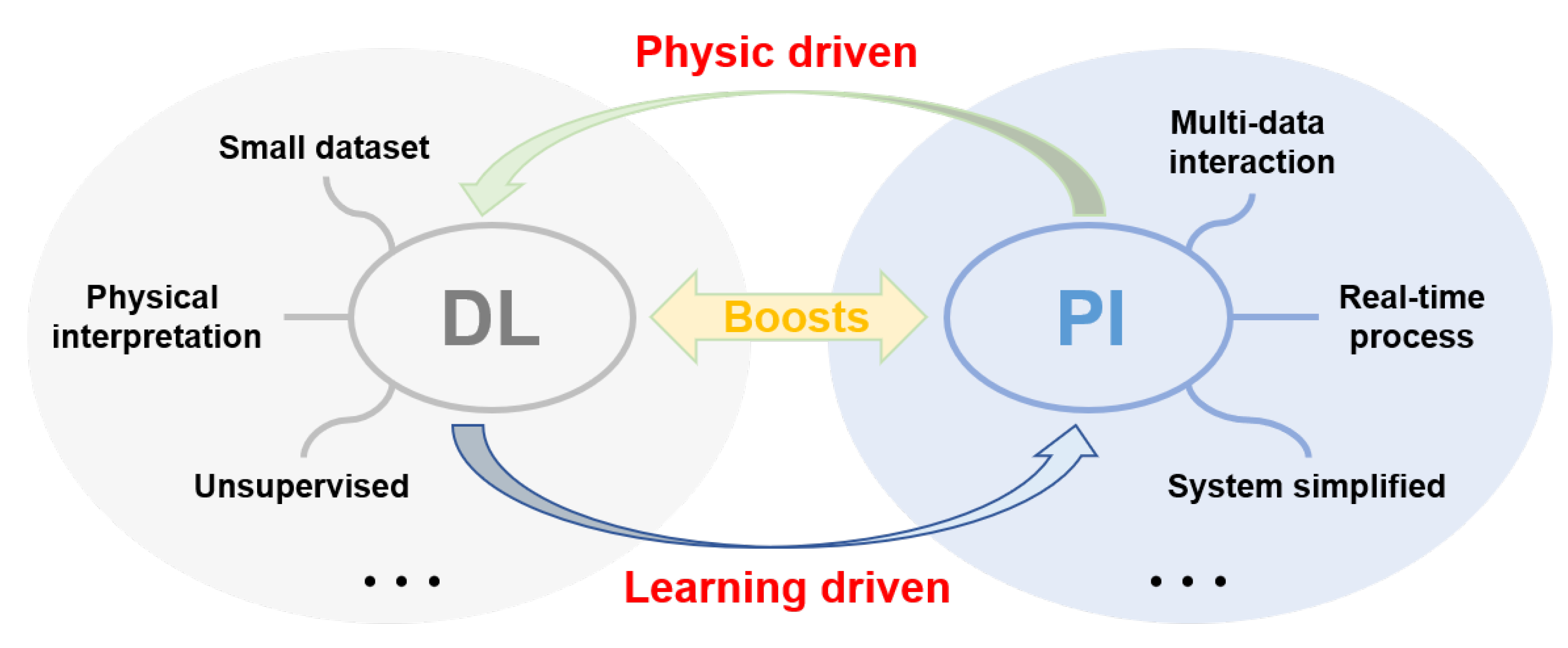

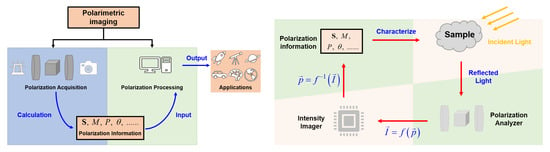

Generally, existing PI systems involve two main aspects, the acquisition of polarization information and applications based on this information, as shown on the left of Figure 2. The first aspect, polarization acquisition, consists in inverting the intensity measurements captured by imagers to retrieve polarization information; the corresponding schematic is shown on the right of Figure 2. A series of intensity measurements is obtained by adjusting the polarization states of the incident light and appropriately setting the polarization analyzers. Inverting these measurements yields polarization information that can be used to characterize beams or samples [7,83,84,85,86]. This polarimetric information may be the Stokes vector $\mathbf{S}$, the Mueller matrix $\mathbf{M}$, the degree of polarization (DoP) $P$, or the AoP $\theta$ [75,87,88]. Based on images of these polarization features, one can perform applications such as target detection [89,90,91,92,93], classification [12,94,95,96,97], and discrimination [98,99,100,101]. Polarization information acquisition aims at acquiring high-quality data, that is, clear images with high resolution and low noise (such images are also illustrated in Figure 1). Polarization information application, on the other hand, aims at leveraging polarization features to serve a given application purpose.
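The inversion step can be illustrated with a minimal sketch. It assumes an ideal division-of-time imager with linear analyzers at 0°, 45°, 90°, and 135°, so only the linear Stokes components $S_0$, $S_1$, $S_2$ are measurable. Under that assumption the system operator is a known 4×3 matrix, and inversion reduces to least squares. The helper names `analyzer_matrix` and `invert_intensities` are hypothetical.

```python
import numpy as np

def analyzer_matrix(angles_deg):
    """Rows map (S0, S1, S2) to the intensity behind an ideal linear polarizer
    at each angle: I(a) = 0.5 * (S0 + S1 cos 2a + S2 sin 2a)."""
    a = np.deg2rad(np.asarray(angles_deg, dtype=float))
    return 0.5 * np.stack([np.ones_like(a), np.cos(2 * a), np.sin(2 * a)], axis=1)

def invert_intensities(intensities, angles_deg=(0, 45, 90, 135)):
    """Least-squares estimate of (S0, S1, S2) from N >= 3 intensity measurements.

    `intensities` has shape (N, ...), so whole images can be inverted at once.
    """
    A = analyzer_matrix(angles_deg)            # (N, 3) system matrix
    I = np.asarray(intensities, dtype=float)
    flat = I.reshape(I.shape[0], -1)           # (N, pixels)
    S = np.linalg.pinv(A) @ flat               # pseudo-inverse = least squares
    return S.reshape((3,) + I.shape[1:])
```

With noise-free data the pseudo-inverse recovers the Stokes components exactly. With noisy data it returns the least-squares estimate, which is where the noise sensitivity discussed below enters.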

Figure 2.

(Left) General steps of PI. Polarization parameters: $\mathbf{S}$, Stokes vector; $\mathbf{M}$, Mueller matrix; $P$, degree of polarization; $\theta$, angle of polarization. (Right) Schematic of polarization acquisition: the multi-channel intensities captured by the imagers are related to the polarization information of the sample/light beam through the operator f corresponding to the PI system.

Various methods have been proposed to handle the two aspects in Figure 2 and to improve image quality and application performance. For example, many approaches based on non-local means [102], total variation (TV) [103,104], principal component analysis (PCA) [105,106], K-times singular value decomposition (K-SVD) [107], variational Bayesian inference [108], and block-matching 3-D filtering (BM3D) [109] have been developed and perform well for noise or speckle removal. These methods, however, are not universally applicable, since they require prior knowledge and manual parameter tuning. In addition, in practical applications, the physical models relating polarimetric measurements to the parameters of interest similarly depend heavily on prior knowledge of model parameters. This knowledge is often subject to considerable uncertainty because the physical processes are highly complex, which may limit application performance [110,111].

Data-driven machine learning approaches have played important roles in various imaging systems [112,113,114,115]. The rapid advances in machine learning and the increasing availability of “big” polarization data create opportunities for novel PI methods [116,117,118,119,120]. In particular, because they are data-driven and learn deep representations, deep learning (DL) approaches have been successfully applied to image inversion and processing in recent years [5,69,111,116,121,122,123,124]. Owing to their stacked nonlinear layers, DL models can approximate complex nonlinear relationships between parameters of interest, which helps uncover latent associations between variables in both polarimetric image acquisition and application [5,78]. Besides, DL has shown clear advantages in extracting and combining multi-scale and multi-level features, which fits well with the inherent variety and multi-dimensionality of polarization [111,116,125]. DL is therefore expected to improve performance in both aspects of PI.

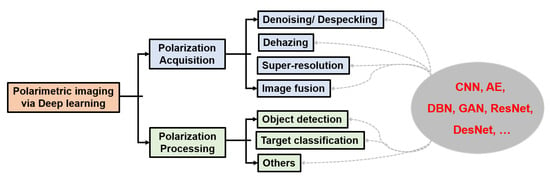

Recently, combining imaging techniques/applications with DL has become a hot topic, and many review articles have been published on such works, for example, in the domains of RS [116,126] and classification [43,116,127]. However, to the best of our knowledge, works on DL in PI have not yet been reviewed. This work therefore aims to provide a comprehensive review of the major PI tasks addressed with DL techniques, including denoising/despeckling, dehazing, super-resolution, image fusion, classification, and object detection. The reviewed works include representative DL-based articles in both traditional visible OI and RS. The rest of this paper is organized as follows: Section 2 introduces the principles of polarization and DL. Section 3 surveys the latest research on DL-based polarization acquisition and DL-based processing of polarimetric images. Finally, conclusions, a critical summary, and an outlook on future research are given in Section 4.

2. Principles of Polarization and Deep Learning

2.1. Overview of Polarization and PI

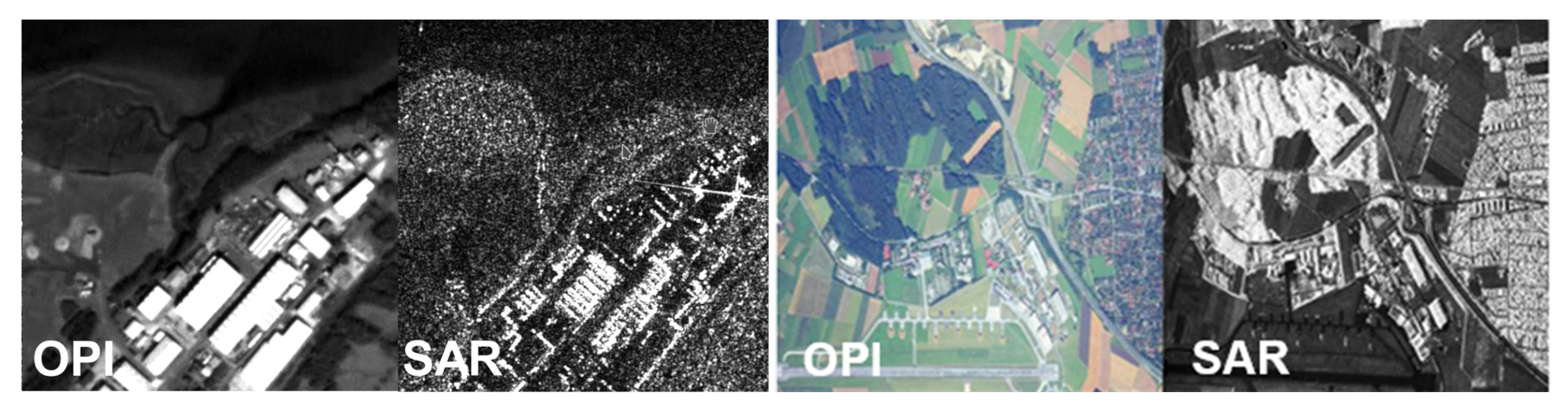

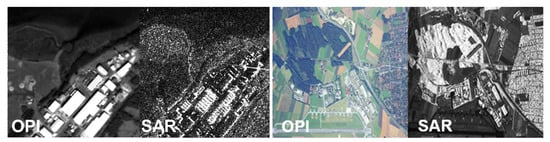

Polarization is a physical characteristic of electromagnetic waves that describes the specific relationship between the direction and magnitude of the oscillating electric field. Techniques that image polarization (or polarization parameters) are called PI. They are widely used in two forms, optical polarimetric imaging (OPI) and PolSAR [128,129,130]. In fact, OPI and PolSAR estimate the same polarimetric parameters; the main difference is that they work in different wavebands. For comparison, Figure 3 shows two examples of SAR (similar to PolSAR) and OPI images of the same scenes.

Figure 3.

Comparison of SAR and OPI images [131,132].

OPI techniques can be used for both active and passive detection and have the advantages of low cost and intuitive image interpretation. PolSAR, or microwave polarimetric detection, is an active remote sensing technique. Since microwaves are scattered much less by water droplets, PolSAR is much less affected by rain clouds and fog than OPI [129,131,132]. It can therefore provide day-and-night, all-weather observation of targets. However, PolSAR has poorer spatial resolution and higher noise than OPI in the visible or infrared bands. These characteristics can be clearly seen in Figure 3.

In addition, although OPI and PolSAR measure essentially the same physical phenomenon (i.e., polarization), they often use different mathematical formalisms. In the following, we introduce the basics of polarization and polarimetric imaging principles. We review the concepts common to both fields, such as the Jones vector and matrix, Stokes parameters, DoP, and AoP [4], and describe the concepts that differ, in particular, the scattering matrix/vector and the covariance matrix, which are widely used in PolSAR [133,134].

2.1.1. Jones Vector and Stokes Vector

In the 1940s, Refs. [135,136,137] introduced and developed the Jones formalism. It combines the Jones vector, a two-element vector describing the polarization state of light, with the Jones matrix, which describes optical elements. The Jones vector is complex-valued and describes the amplitudes and phases of the field components as [4]:
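In one common notation, let $A_x$ and $A_y$ be the amplitudes and $\delta_x$ and $\delta_y$ the phases of the two orthogonal field components (symbol names assumed here). The Jones vector then reads:

$$\mathbf{E} = \begin{bmatrix} E_x \\ E_y \end{bmatrix} = \begin{bmatrix} A_x e^{i\delta_x} \\ A_y e^{i\delta_y} \end{bmatrix} \tag{1}$$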

In incoherent optical systems, it is more convenient to characterize polarization using only real-valued quantities (i.e., intensity-mode or power-mode measurements). The Stokes vector serves this purpose [138]. When light passes through or interacts with a medium, its polarization state changes, and this transformation is described by a Jones matrix [4]:
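Written out with generic symbols (assumed here), a Jones matrix $\mathbf{J}$ maps the input Jones vector linearly to the output one:

$$\mathbf{E}_{\mathrm{out}} = \mathbf{J}\,\mathbf{E}_{\mathrm{in}}, \qquad \mathbf{J} = \begin{bmatrix} J_{11} & J_{12} \\ J_{21} & J_{22} \end{bmatrix} \tag{2}$$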

A Hermitian matrix C can be obtained as the product of a Jones vector with its conjugate transpose [46]:
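Expanding the product shows how the entries of C relate to the Stokes parameters. Sign conventions for $S_3$ differ between references. The form below assumes $S_2 = 2\,\mathrm{Re}(E_x E_y^{*})$ and $S_3 = 2\,\mathrm{Im}(E_x^{*} E_y)$:

$$\mathbf{C} = \mathbf{E}\,(\mathbf{E}^{*})^{T} = \begin{bmatrix} E_x E_x^{*} & E_x E_y^{*} \\ E_y E_x^{*} & E_y E_y^{*} \end{bmatrix} = \frac{1}{2}\begin{bmatrix} S_0 + S_1 & S_2 - iS_3 \\ S_2 + iS_3 & S_0 - S_1 \end{bmatrix} \tag{3}$$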

where the superscript ∗ denotes complex conjugation and $S_0$, $S_1$, $S_2$, and $S_3$ are the four Stokes parameters [4,46]. The Stokes vector $\mathbf{S} = [S_0, S_1, S_2, S_3]^{T}$ can be obtained from power or intensity measurements alone. It is sufficient to characterize the magnitudes and relative phase of the field components, i.e., the polarization of a monochromatic electromagnetic wave [46]. The Stokes vector can also be written as a function of the polarization ellipse parameters, namely the orientation angle $\theta$, the ellipticity angle $\varepsilon$, and the ellipse magnitude $A$, as [4,88]:
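For a fully polarized wave, a standard form is given below. It assumes that $A$ denotes the field amplitude, so that $S_0 = A^2$; some references absorb the square into $A$:

$$\mathbf{S} = \begin{bmatrix} S_0 \\ S_1 \\ S_2 \\ S_3 \end{bmatrix} = A^2 \begin{bmatrix} 1 \\ \cos 2\varepsilon \cos 2\theta \\ \cos 2\varepsilon \sin 2\theta \\ \sin 2\varepsilon \end{bmatrix} \tag{4}$$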

Equation (4) is more common in the field of OPI. Other polarization parameters can be derived from $\mathbf{S}$. Three of them are the DoP ($P$), the degree of linear polarization (DoLP), and the AoP ($\theta$):
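The standard definitions in terms of the Stokes parameters are:

$$P = \frac{\sqrt{S_1^2 + S_2^2 + S_3^2}}{S_0}, \qquad \mathrm{DoLP} = \frac{\sqrt{S_1^2 + S_2^2}}{S_0}, \qquad \theta = \frac{1}{2}\arctan\frac{S_2}{S_1}$$

In practice, the two-argument arctangent, $\theta = \tfrac{1}{2}\operatorname{atan2}(S_2, S_1)$, is used so that $\theta$ covers the full range $(-\pi/2, \pi/2]$. Because both ratios are nonlinear in the measured intensities, noise in $S_0$, $S_1$, and $S_2$ propagates nonlinearly into $P$, DoLP, and $\theta$.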

2.1.2. Scattering Matrix

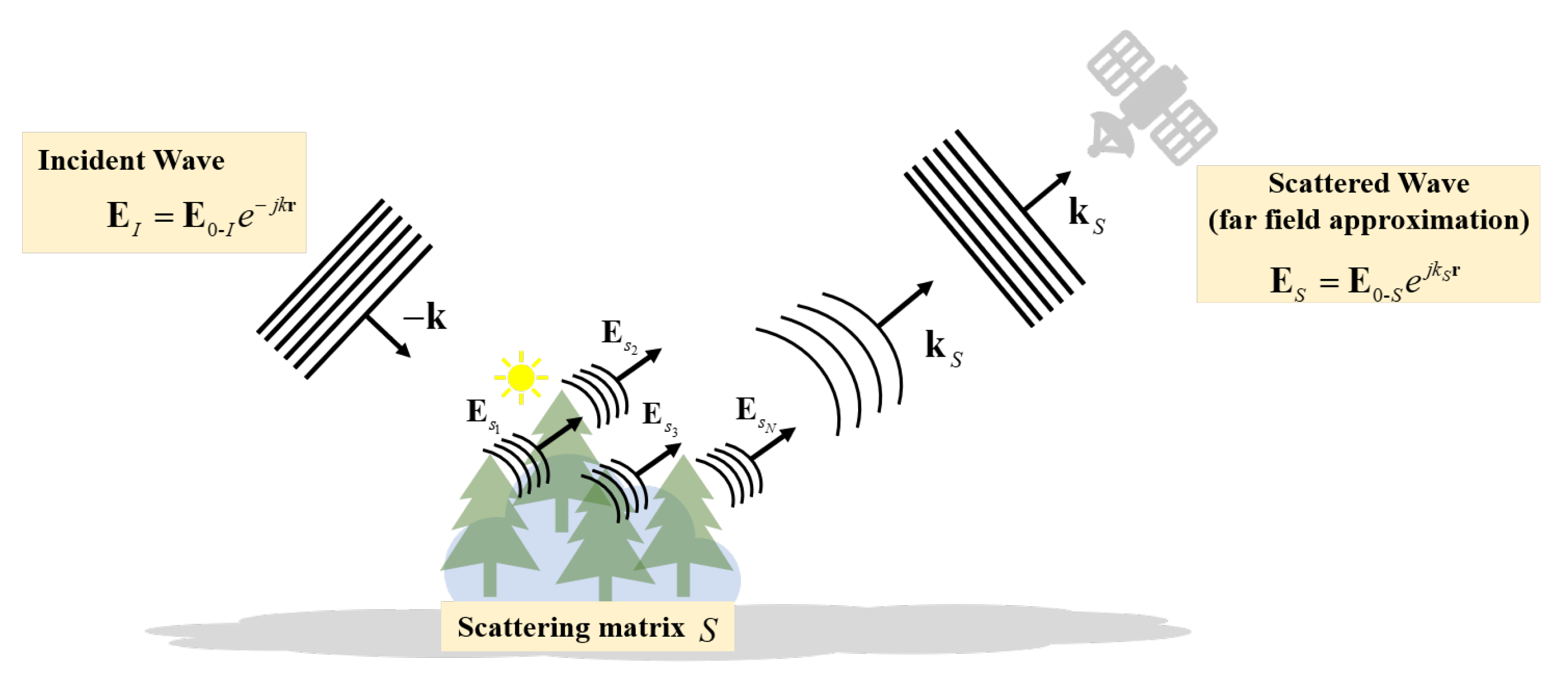

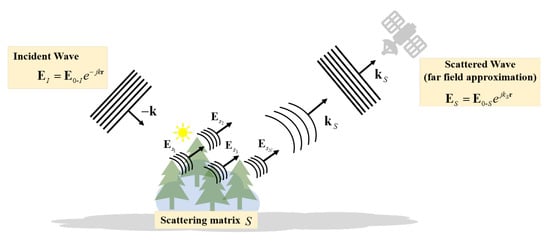

PolSAR is one of the most important applications of PI in RS. Unlike OPI, PolSAR works in the microwave band rather than the visible band, so the parameters used in PolSAR-based RS need to be introduced. Figure 4 presents a general PolSAR scheme for measuring a target characterized by its scattering matrix S [46].

Figure 4.

Interaction of electromagnetic wave and target.

The polarization state of any electromagnetic wave can be expressed as a linear combination of two orthogonal Jones vectors [46]. A PolSAR sensor alternately transmits horizontally (H) and vertically (V) polarized microwaves, and it independently receives the H and V waves backscattered by targets [139]. The scattering process occurring at the target (shown in Figure 4) is therefore expressed by the scattering matrix S. This matrix is analogous to the Jones matrix of OPI systems in Equation (2) and characterizes the coherent scattering of electromagnetic waves. The scattering matrix is defined as follows:
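The form below is common in PolSAR texts, with $r$ the target-to-antenna distance, $k$ the wavenumber, and $j$ the imaginary unit (radar convention). It relates the incident and scattered Jones vectors, and the propagation factor is often omitted:

$$\mathbf{E}^{s} = \frac{e^{-jkr}}{r}\,\mathbf{S}\,\mathbf{E}^{i}, \qquad \mathbf{S} = \begin{bmatrix} S_{HH} & S_{HV} \\ S_{VH} & S_{VV} \end{bmatrix}$$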

where the incident and scattered waves are represented by the Jones vectors $\mathbf{E}^{i}$ and $\mathbf{E}^{s}$, respectively. $S_{HH}$ and $S_{VV}$ relate to the returned powers in the co-polarized channels, while $S_{HV}$ and $S_{VH}$ relate to the cross-polarized channels. Each cross-polarized element corresponds to transmitting in one of the two polarizations and receiving in the other (horizontal transmission with vertical reception, and vice versa). For reciprocal backscattering, $S_{HV} = S_{VH}$ [133,134].

The covariance matrix (or the closely related coherency matrix) is another important quantity commonly used in PolSAR data processing [46,140]. To define it, one first “vectorizes” the scattering matrix S into the 3-D scattering vector $\mathbf{k}$ below
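A common choice, assumed here, is the lexicographic scattering vector. Its factor $\sqrt{2}$ preserves the total power (span) when the two equal cross-polarized terms are merged, since $|S_{HH}|^2 + 2|S_{HV}|^2 + |S_{VV}|^2 = |S_{HH}|^2 + |S_{HV}|^2 + |S_{VH}|^2 + |S_{VV}|^2$:

$$\mathbf{k} = \begin{bmatrix} S_{HH} & \sqrt{2}\,S_{HV} & S_{VV} \end{bmatrix}^{T}$$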

under the reciprocity assumption $S_{HV} = S_{VH}$. One then defines the covariance matrix $\mathbf{C}$ as the average of the outer product of $\mathbf{k}$ with its conjugate transpose [140]:
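With the lexicographic vector $\mathbf{k} = [S_{HH}, \sqrt{2}\,S_{HV}, S_{VV}]^{T}$ (a common choice, assumed here), the definition expands to:

$$\mathbf{C} = \left\langle \mathbf{k}\,\mathbf{k}^{*T} \right\rangle = \begin{bmatrix} \langle |S_{HH}|^2 \rangle & \sqrt{2}\,\langle S_{HH} S_{HV}^{*} \rangle & \langle S_{HH} S_{VV}^{*} \rangle \\ \sqrt{2}\,\langle S_{HV} S_{HH}^{*} \rangle & 2\,\langle |S_{HV}|^2 \rangle & \sqrt{2}\,\langle S_{HV} S_{VV}^{*} \rangle \\ \langle S_{VV} S_{HH}^{*} \rangle & \sqrt{2}\,\langle S_{VV} S_{HV}^{*} \rangle & \langle |S_{VV}|^2 \rangle \end{bmatrix}$$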

where the averaging $\langle \cdot \rangle$ involved in computing the covariance matrix can be temporal or spatial. For example, to suppress speckle noise in PolSAR images, one can average over several acquisitions (or “looks”):
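Written out, this multi-look estimate is the sample mean of the single-look outer products:

$$\mathbf{C} = \frac{1}{L}\sum_{i=1}^{L} \mathbf{k}_i\,\mathbf{k}_i^{*T}$$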

where L denotes the number of looks and $\mathbf{k}_i$ the scattering vector of the i-th look. Equation (8) shows that the principal diagonal elements of $\mathbf{C}$ are real-valued. The other elements are complex-valued and satisfy $C_{mn} = C_{nm}^{*}$ for $m \neq n$, i.e., $\mathbf{C}$ is Hermitian [141].
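A minimal NumPy sketch of the multi-look estimate follows. It assumes the lexicographic scattering vector, and the helper names are hypothetical. The code builds the scattering vector and averages the outer products over L looks, which makes the Hermitian structure described above easy to check numerically.

```python
import numpy as np

def scattering_vector(s_hh, s_hv, s_vv):
    """Lexicographic scattering vector k = [S_HH, sqrt(2) S_HV, S_VV]^T
    (assumes reciprocity, S_HV = S_VH)."""
    return np.array([s_hh, np.sqrt(2) * s_hv, s_vv], dtype=complex)

def multilook_covariance(k_looks):
    """C = (1/L) * sum_i k_i k_i^{*T} for L scattering vectors of shape (L, 3)."""
    k = np.asarray(k_looks, dtype=complex)
    # einsum computes sum over looks l of k[l, m] * conj(k[l, n]) for every (m, n)
    return np.einsum("lm,ln->mn", k, k.conj()) / k.shape[0]
```

A single look yields a rank-1 matrix. Averaging several independent looks (at least three) is needed for C to become full rank, which is one reason multi-looking is a prerequisite for many decompositions.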

As C contains all the polarization information of the targets, useful features can be obtained by decomposing this matrix [142]. Many decomposition algorithms have been developed for this purpose. They include (1) coherent decomposition algorithms, such as Krogager [143] and the Target Scattering Vector Model [144]; and (2) incoherent decomposition algorithms, such as Holm [145], Huynen [146], Van Zyl [147], [148], the multiple-component scattering model [149], Freeman [150], and adaptive non-negative eigenvalue decomposition [151].

2.2. Overview of Deep Learning

Neural networks are inspired by mammalian brains, and by the primate visual system in particular. They comprise neurons (units) with activation parameters arranged in a deep architecture, so that a given input can be represented at multiple levels of abstraction [111,152]. DL, a class of neural network-based learning algorithms, is composed of multiple layers, often referred to as “hidden” layers, which transform the input into the output by learning progressively higher-level features. The depth of a network is given by the number of its hidden layers, hence the name “deep learning”.

In recent years, various novel deep architectures have been developed and applied in many fields, where they outperform traditional non-data-driven methods [110,116,153,154]. Taking ALOS-2/PALSAR again as an example, Ref. [155] proposed a DL-based approach that achieved robust operational deforestation detection with a false alarm rate below 15% and improved the accuracy by 100% in some areas. In this section, we introduce several DL models commonly used in PI, e.g., convolutional neural networks (CNNs), autoencoders (AEs), and deep belief networks (DBNs) [116,152,153,156,157,158].

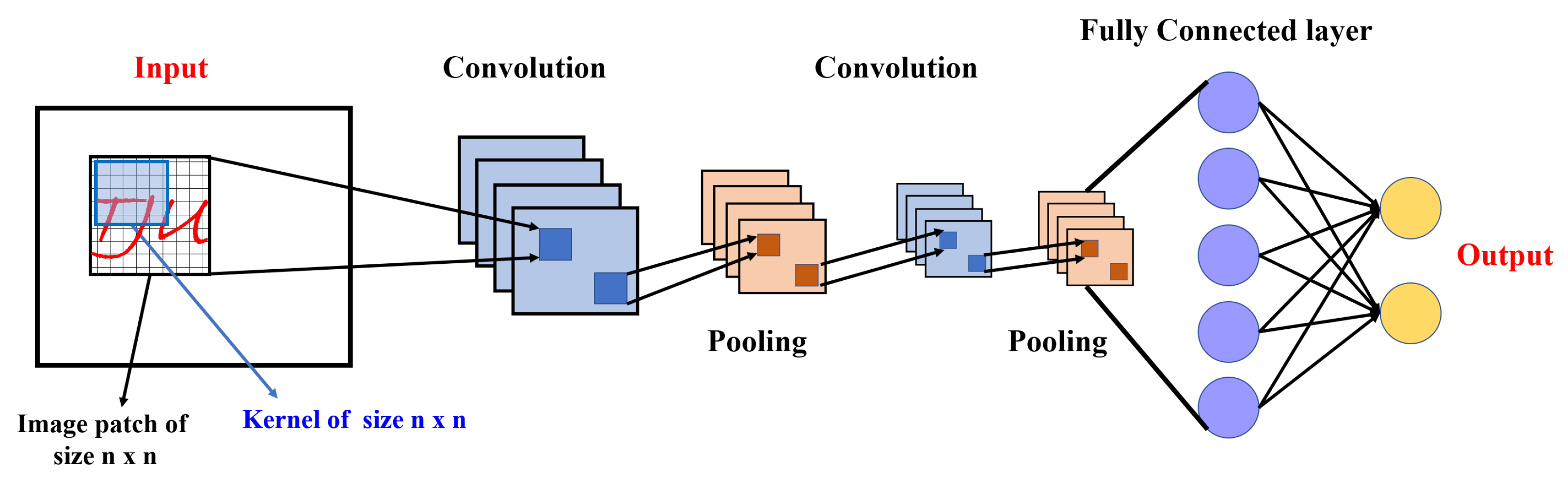

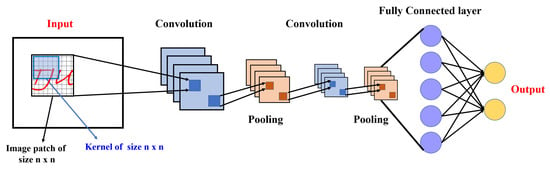

2.2.1. CNN

The concept of the CNN was proposed by [159] and later revised by [160]. In the past decades, CNNs have attracted increasing attention and shown outstanding performance in various fields, including, but not limited to, optical sensing [161,162] and imaging [163,164]. A CNN is a trainable multi-layer architecture with a prominent ability to learn highly abstract features. It is composed of an input layer, convolutional layers, pooling layers, fully connected layers, and an output layer [165]. An example of a CNN architecture is shown in Figure 5.

Figure 5.

Example of CNN architecture.

Convolutional layers extract image features. Earlier convolutional layers extract shallow features, while later ones learn more abstract features. A convolutional layer computes multiple output feature maps by convolving the output of the previous layer (or the input layer) with convolution kernels [166,167,168].
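As a minimal NumPy sketch, a multi-channel convolutional layer can be written as follows. Like most DL libraries, it actually computes a cross-correlation, and it uses "valid" borders. The input could be, for example, a stack of four polarization-analyzer images; that use, and the function name, are illustrative assumptions.

```python
import numpy as np

def conv2d_valid(x, kernels, bias):
    """Multi-channel 2-D convolution (cross-correlation) without padding.

    x: (C_in, H, W); kernels: (C_out, C_in, kH, kW); bias: (C_out,).
    Returns C_out feature maps of shape (H - kH + 1, W - kW + 1).
    """
    c_out, c_in, kh, kw = kernels.shape
    _, h, w = x.shape
    out = np.empty((c_out, h - kh + 1, w - kw + 1))
    for i in range(h - kh + 1):
        for j in range(w - kw + 1):
            patch = x[:, i:i + kh, j:j + kw]                  # (C_in, kH, kW)
            # each output channel sums kernel * patch over all input channels
            out[:, i, j] = np.tensordot(kernels, patch, axes=3) + bias
    return out
```

Each output channel mixes all input channels, which is how a first layer can learn combinations of polarization channels (for example, differences resembling $S_1$ or $S_2$).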

Pooling layers reduce the spatial size of their input, for example, by downsampling the convolved feature maps. In this way, useful image features are preserved and redundant information is removed, which helps prevent over-fitting and speeds up computation [115,158].
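Max pooling, one common choice, can be sketched in a few lines of NumPy. This version assumes non-overlapping windows and crops any remainder rows/columns; the function name is illustrative.

```python
import numpy as np

def max_pool2d(x, size=2):
    """Non-overlapping max pooling on a (C, H, W) array.

    H and W are cropped to multiples of `size`; each size x size window
    is replaced by its maximum, shrinking each spatial dimension by `size`.
    """
    c, h, w = x.shape
    h2, w2 = h // size, w // size
    x = x[:, :h2 * size, :w2 * size]
    # split each spatial axis into (blocks, size) and reduce over the window axes
    return x.reshape(c, h2, size, w2, size).max(axis=(2, 4))
```

For a 4×4 map holding the values 0 to 15 in row order, this returns [[5, 7], [13, 15]].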

Fully connected layers combine the features passed on by the preceding layers into the final feature representation. They are typically placed at the end of the network, followed by the output layer.
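The sketch below shows a fully connected layer followed by a softmax output, as would be used for a classification head. It assumes the feature maps are simply flattened; the weight shapes and names are illustrative.

```python
import numpy as np

def fully_connected(features, weight, bias):
    """Flatten the feature maps and apply an affine map: W @ vec(features) + b."""
    return weight @ features.reshape(-1) + bias

def softmax(scores):
    """Convert class scores to probabilities."""
    z = scores - scores.max()      # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()
```

For example, `softmax(fully_connected(feats, W, b))` with `W` of shape `(n_classes, feats.size)` gives one probability per class.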

In addition, many CNN models have been proposed to boost performance, including LeNet [169], AlexNet [163], GoogLeNet [170], ResNet [171], and DenseNet [172]. Notably, CNNs have been widely used in PI for applications such as image reconstruction [5,11,58], target recognition [173,174,175], and PolSAR data classification [49,176]. We introduce these works in Section 3.

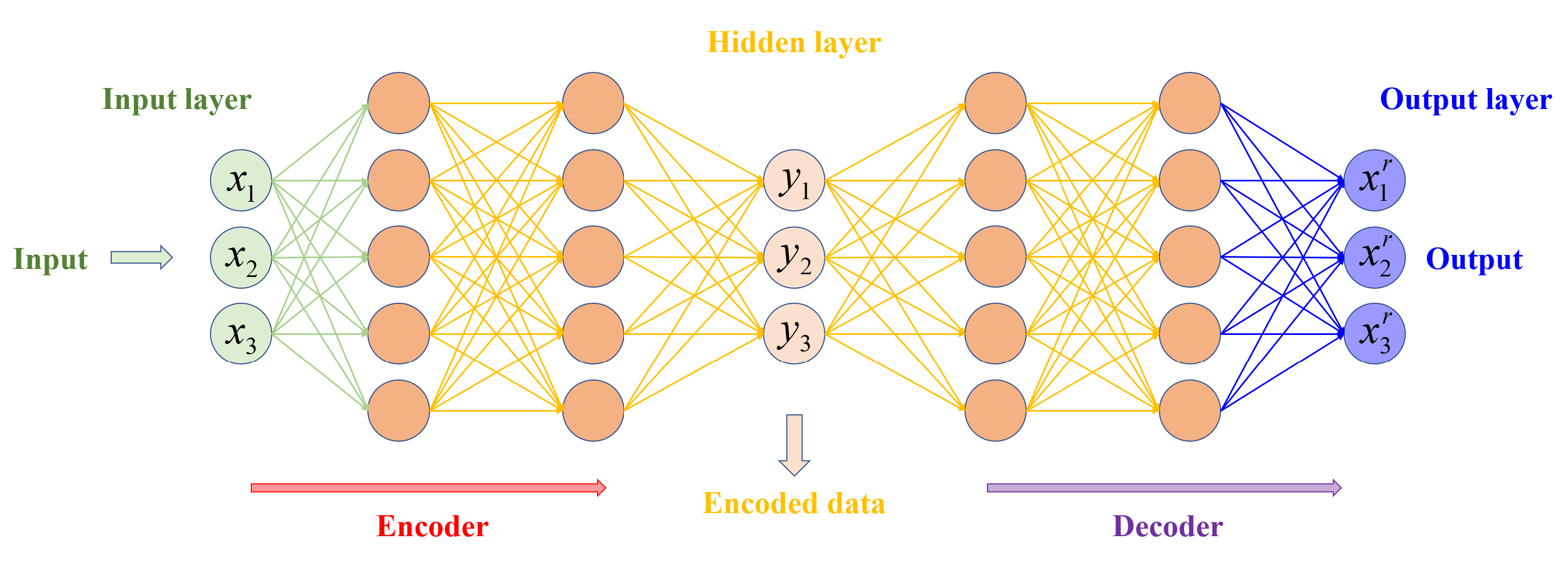

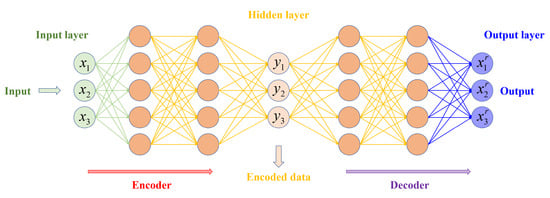

2.2.2. AE

AE is a symmetrical neural network composed of two connected networks: encoder and decoder. Its architecture is shown in Figure 6. The two parts can be considered as hidden layers between the input and output layers [177].

Figure 6.

Example of AE architecture.

In the encoder, the input data $x$, which is usually high-dimensional, is reduced to low-dimensional encoded data. $x$ is processed by a linear function followed by a nonlinear activation function (NAF) $f$ to produce the encoded data $h$. Conversely, the decoder maps the encoded data back to reconstructed data $\hat{x}$, which has the same dimension as $x$. The network is optimized by minimizing a loss function (e.g., the reconstruction error between $x$ and $\hat{x}$), for example, with the back-propagation algorithm. In this way, the AE learns features from the input in an unsupervised manner.
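A minimal sketch of this procedure follows. It is a one-hidden-layer AE with a tanh encoder and a linear decoder, trained by full-batch gradient descent with hand-written back-propagation on the mean squared reconstruction error. The activation, learning rate, and function name are assumptions. The rows of `X` could be, for instance, per-pixel polarimetric feature vectors.

```python
import numpy as np

def train_autoencoder(X, n_hidden, lr=0.05, epochs=500, seed=0):
    """Encoder h = tanh(W1 x + b1); decoder x_hat = W2 h + b2.

    X: (n_samples, n_features). Minimizes mean ||x_hat - x||^2 by gradient
    descent; returns the parameters and the loss recorded before each update.
    """
    rng = np.random.default_rng(seed)
    n, d = X.shape
    W1 = 0.1 * rng.standard_normal((n_hidden, d))
    b1 = np.zeros(n_hidden)
    W2 = 0.1 * rng.standard_normal((d, n_hidden))
    b2 = np.zeros(d)
    losses = []
    for _ in range(epochs):
        H = np.tanh(X @ W1.T + b1)          # encoded data, (n, n_hidden)
        X_hat = H @ W2.T + b2               # reconstruction, (n, d)
        R = X_hat - X
        losses.append(np.mean(np.sum(R**2, axis=1)))
        # back-propagation of the loss (1/n) * sum ||x_hat - x||^2
        dX_hat = 2 * R / n
        dW2 = dX_hat.T @ H
        db2 = dX_hat.sum(axis=0)
        dZ = (dX_hat @ W2) * (1 - H**2)     # chain rule through tanh
        dW1 = dZ.T @ X
        db1 = dZ.sum(axis=0)
        W1 -= lr * dW1
        b1 -= lr * db1
        W2 -= lr * dW2
        b2 -= lr * db2
    return (W1, b1, W2, b2), losses
```

No labels are used anywhere: the input itself is the training target, which is what makes the learning unsupervised. After training, `np.tanh(X @ W1.T + b1)` serves as the learned low-dimensional feature.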

Many AE variants have been developed to boost performance for specific applications, such as the sparse AE [177], convolutional AE [178], and variational AE [179]. These AEs can be directly employed as feature extractors for polarimetric images, for example, in target detection and classification of PolSAR data [180,181,182,183,184].

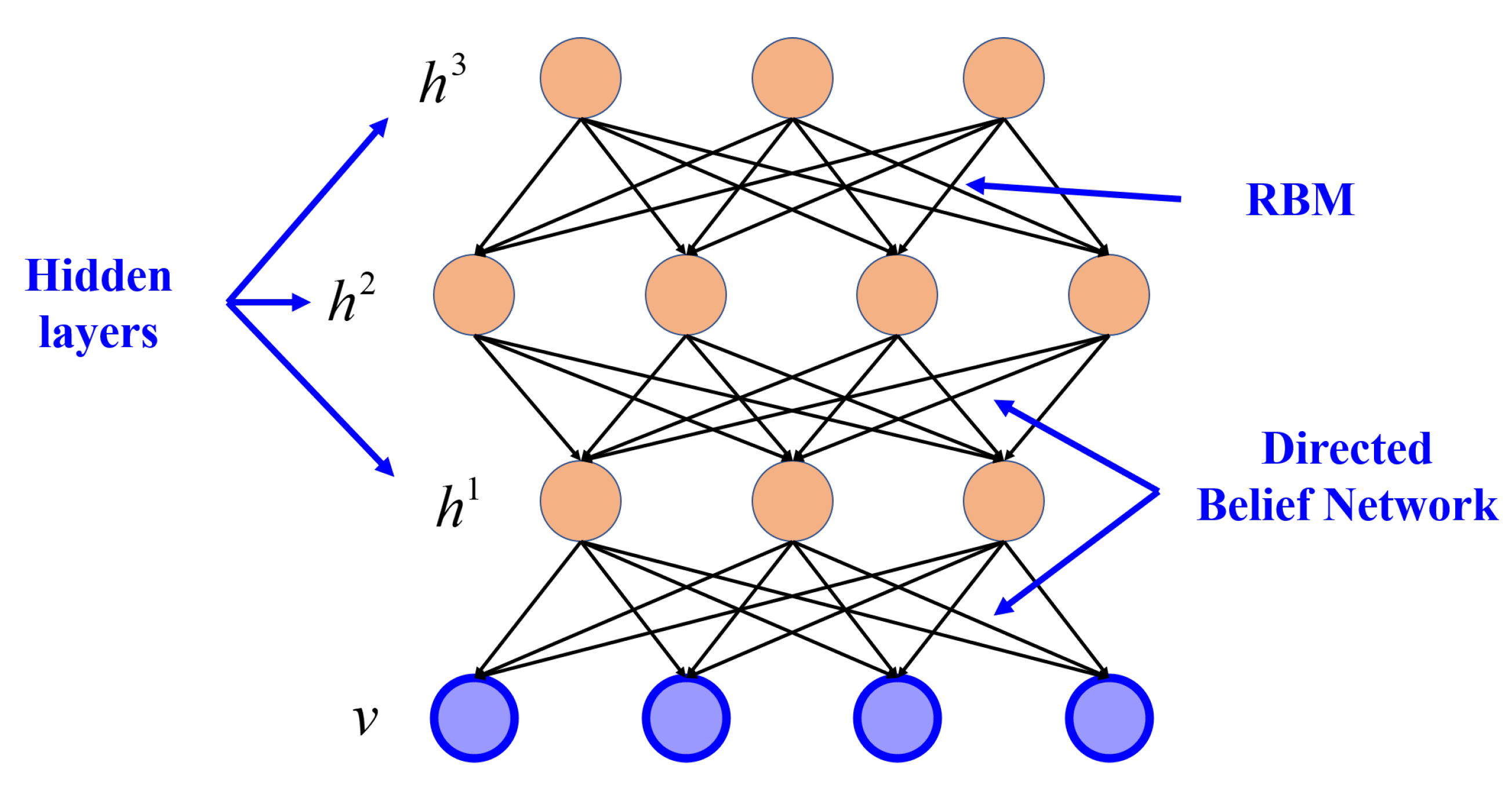

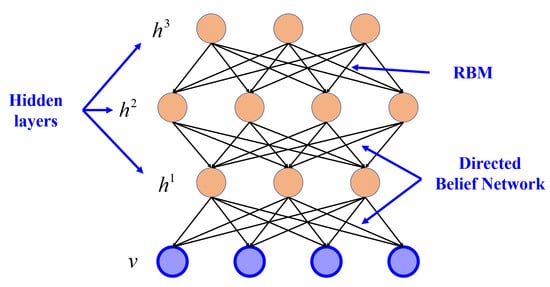

2.2.3. DBN

A DBN is an unsupervised probabilistic network [185]. It stacks multiple unsupervised networks, with the hidden layer of each serving as the input to the next. Usually, a DBN is built by stacking several Restricted Boltzmann Machines (RBMs) or AEs. Each RBM has two layers, a visible layer and a hidden layer, and its hidden units are conditionally independent of one another given the visible units. The DBN architecture is shown in Figure 7 [43,111,185].
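The building block of a DBN can be sketched as one contrastive-divergence (CD-1) update of a binary RBM. This is the standard way RBMs are pre-trained layer by layer; the Bernoulli units, learning rate, and function names are assumptions of this sketch. Conditional independence is what lets $p(h \mid v)$ and $p(v \mid h)$ be computed for all units at once.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def rbm_cd1_step(v0, W, b_vis, b_hid, lr=0.1, rng=None):
    """One CD-1 update of a Bernoulli-Bernoulli RBM.

    v0: (batch, n_vis) binary data; W: (n_vis, n_hid).
    Hidden units are conditionally independent given the visible units (and
    vice versa), so each conditional factorizes into elementwise sigmoids.
    Returns updated (W, b_vis, b_hid) and the mean reconstruction error.
    """
    rng = np.random.default_rng() if rng is None else rng
    p_h0 = sigmoid(v0 @ W + b_hid)                        # p(h = 1 | v0)
    h0 = (rng.random(p_h0.shape) < p_h0).astype(float)    # sample hidden states
    p_v1 = sigmoid(h0 @ W.T + b_vis)                      # p(v = 1 | h0)
    p_h1 = sigmoid(p_v1 @ W + b_hid)                      # p(h = 1 | v1)
    n = v0.shape[0]
    # positive (data) statistics minus negative (reconstruction) statistics
    W = W + lr * (v0.T @ p_h0 - p_v1.T @ p_h1) / n
    b_vis = b_vis + lr * (v0 - p_v1).mean(axis=0)
    b_hid = b_hid + lr * (p_h0 - p_h1).mean(axis=0)
    err = np.mean((v0 - p_v1) ** 2)
    return W, b_vis, b_hid, err
```

In a DBN, once one RBM is trained, its hidden probabilities `sigmoid(v @ W + b_hid)` become the visible data for the next RBM in the stack.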

Figure 7.

Example of Deep belief network architecture.

DBN-based methods have been used in PI applications, especially the object recognition and scene classification [186,187,188,189], and have shown superior performance compared with traditional methods, such as PCA [181] and support vector machines (SVM) [190].

2.2.4. Other Deep Networks

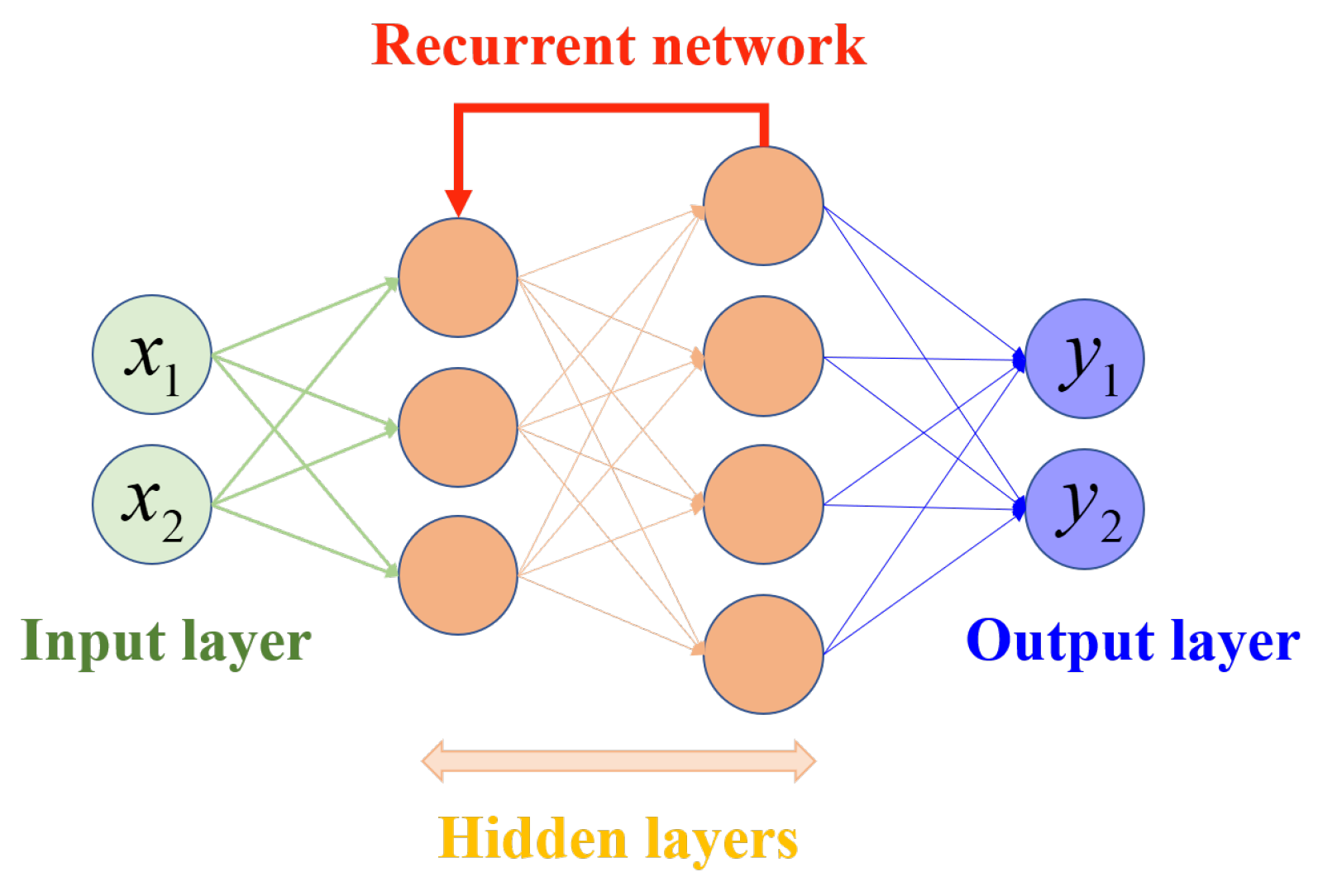

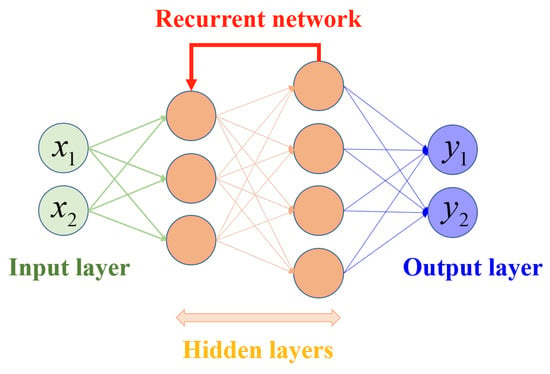

In addition to the above three network models, a few works in PI are based on other, more recent networks, such as the recurrent neural network (RNN) [191,192], generative adversarial network (GAN) [97], residual network [5], and deep stacking network (DSN) [193].

As a typical neural network, an RNN uses a loop to store information within the network, which gives it a memory capacity. The current input and the previous hidden state are used to compute the new hidden state as $h_t = f(h_{t-1}, x_t; W)$. Here, $h_t$ and $h_{t-1}$ are the hidden states at times $t$ and $t-1$, respectively; $x_t$ denotes the current input; $W$ denotes the parameters shared across all time steps; and $f$ denotes a nonlinear activation function. Figure 8 presents an example of an RNN architecture.
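The recurrence can be sketched in NumPy as follows. This sketch assumes $f = \tanh$ and lets a single matrix $W$ act on the concatenation $[h_{t-1}; x_t; 1]$, so that one shared parameter set (including a bias column) is applied at every time step. The function name and shapes are illustrative.

```python
import numpy as np

def rnn_forward(xs, W, h0=None):
    """Vanilla RNN: h_t = tanh(W @ [h_{t-1}; x_t; 1]), same W at every step.

    xs: (T, n_in) input sequence; W: (n_hid, n_hid + n_in + 1), whose last
    column acts as a bias. Returns the hidden states, shape (T, n_hid).
    """
    n_hid = W.shape[0]
    h = np.zeros(n_hid) if h0 is None else h0
    states = []
    for x_t in xs:
        # the previous hidden state is fed back together with the new input
        h = np.tanh(W @ np.concatenate([h, x_t, [1.0]]))
        states.append(h)
    return np.stack(states)
```

Because `h` is carried from one iteration to the next, each state depends on the entire input history. This dependence is the memory that a feed-forward network lacks.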

Figure 8.

Example of RNN architecture.

Unlike the previously introduced networks, an RNN feeds its hidden state back into the network at the next time step. The advantage of RNNs over feed-forward networks is that they can retain information from previous inputs and use it to predict the next elements, whereas feed-forward networks have no such feedback path. Therefore, RNNs work very well with sequential data. The gated recurrent unit (GRU) and long short-term memory (LSTM) are two of the most widely used RNN variants. They mitigate the vanishing and exploding gradient problems [121,194] and have been successfully used for PolSAR classification [191,192].

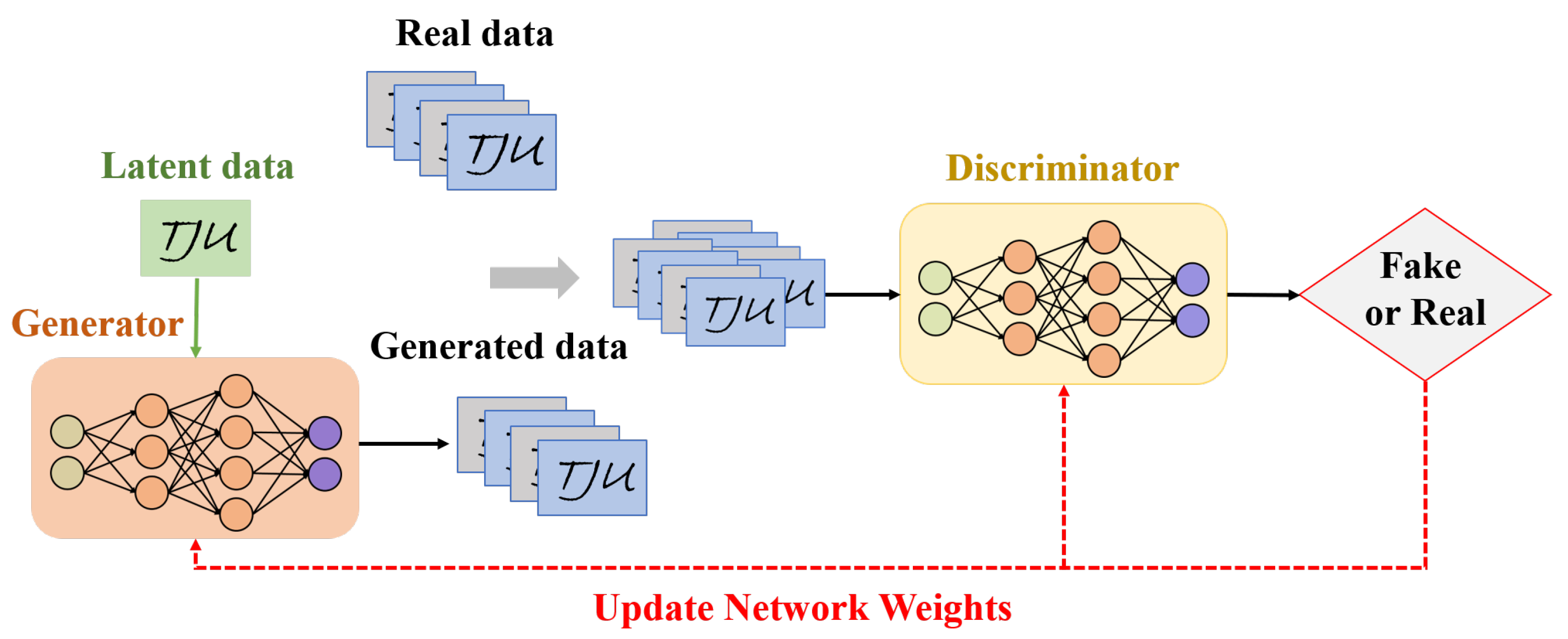

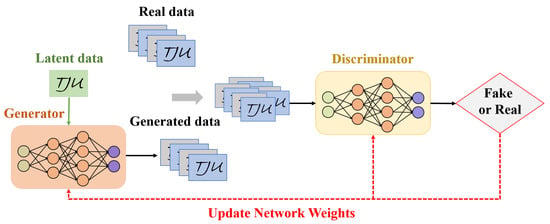

The GAN is a class of DL systems developed by [195]. It contains two sub-networks, a generator (G) and a discriminator (D). The GAN training algorithm trains G and D simultaneously, with the two competing against each other in a zero-sum game. Figure 9 presents an example of a GAN architecture.

Figure 9.

Example of GAN architecture.

In other words, G tries to fool D into classifying its generated samples as real, while D is trained to label samples from the real data as real and samples produced by G as fake. GANs are now a very active research topic in image processing applications, and various types exist, such as the vanilla GAN, conditional GAN (CGAN), Wasserstein GAN (WGAN), StyleGAN, and deep convolutional GAN (DCGAN) [196,197,198].
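The competition can be made concrete through the two loss functions, sketched here in NumPy on the discriminator's output probabilities. The discriminator uses binary cross-entropy with real = 1 and fake = 0. For the generator, the sketch assumes the commonly used non-saturating variant, $-\log D(G(z))$, rather than directly minimizing $\log(1 - D(G(z)))$. The networks themselves are omitted, and the function names are illustrative.

```python
import numpy as np

def discriminator_loss(d_real, d_fake, eps=1e-12):
    """Binary cross-entropy for D: real samples labeled 1, generated samples 0.

    d_real, d_fake: D's output probabilities on real and generated batches.
    """
    d_real = np.clip(d_real, eps, 1 - eps)   # avoid log(0)
    d_fake = np.clip(d_fake, eps, 1 - eps)
    return -np.mean(np.log(d_real)) - np.mean(np.log(1 - d_fake))

def generator_loss(d_fake, eps=1e-12):
    """Non-saturating generator loss: small when D labels G's samples as real."""
    d_fake = np.clip(d_fake, eps, 1 - eps)
    return -np.mean(np.log(d_fake))
```

When D cannot tell real from fake and outputs 0.5 everywhere, the discriminator loss equals $2\ln 2$. In training, the two losses are minimized in alternation, each with respect to its own network's parameters.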

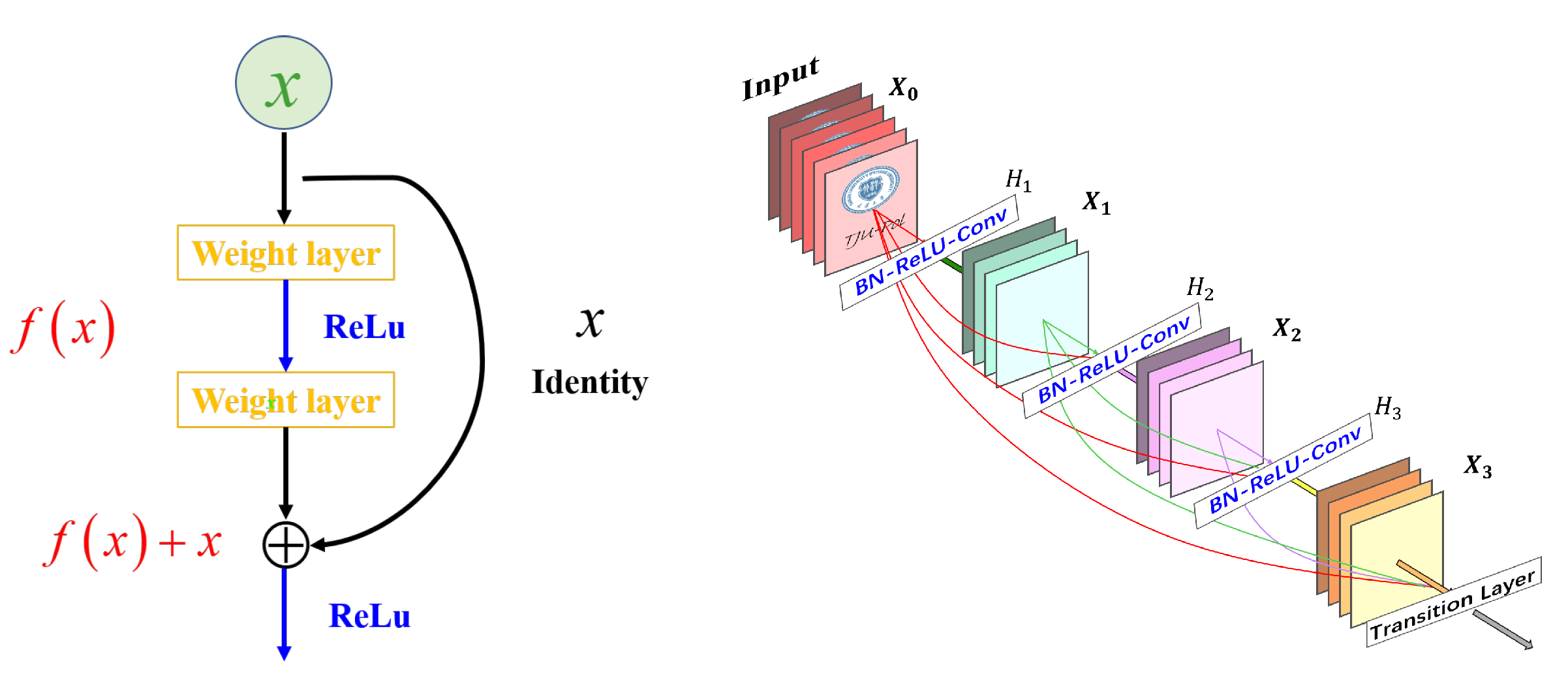

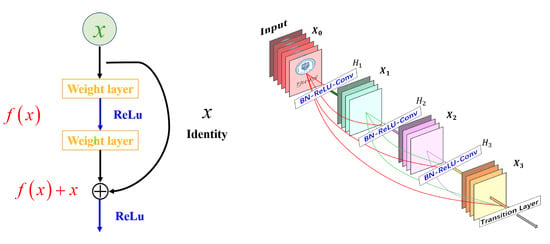

Deep networks are notoriously difficult to train, partly because of the well-known vanishing gradient problem. As the gradient propagates back to earlier layers, repeated multiplications may shrink it to a vanishingly small value, so that performance saturates or even degrades rapidly as the network depth increases. To handle this problem, Ref. [165] proposed ResNet (Residual Network) in 2015. The key idea of ResNet is an “identity shortcut connection” that skips one or more layers. The resulting unit, called a “residual block,” is depicted on the left of Figure 10.

Figure 10.

(Left) Residual block. (Right) DenseNet.

ResNet is less susceptible to vanishing and exploding gradients because residual blocks can pass signals directly through the shortcuts, which allows information to propagate faithfully across many layers. Thanks to its excellent performance, ResNet has become one of the most popular architectures and has been successfully applied in many applications [5,11,165].
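A residual block can be sketched as $y = \mathrm{ReLU}(x + F(x))$, following the original ResNet ordering. Here $F$ is two dense layers standing in for the convolutions used in practice; that simplification and the names are assumptions of this sketch.

```python
import numpy as np

def relu(x):
    return np.maximum(0.0, x)

def residual_block(x, W1, b1, W2, b2):
    """y = relu(x + F(x)) with residual branch F(x) = W2 @ relu(W1 @ x + b1) + b2.

    The identity shortcut adds x unchanged, so the block only has to learn the
    residual F; with F's parameters at zero, non-negative x passes through as is.
    """
    f = W2 @ relu(W1 @ x + b1) + b2
    return relu(x + f)
```

Because the shortcut contributes an identity term to the Jacobian of each block, gradients reaching earlier layers do not rely solely on products of weight matrices. This is the intuition behind the improved trainability described above.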

To further exploit shortcut connections by connecting all layers directly to one another, Ref. [172] proposed a novel architecture in 2016 called the Densely Connected Convolutional Network (DenseNet), shown on the right of Figure 10. Each layer's input consists of the feature maps of all earlier layers, and its output is passed to all subsequent layers. This makes the network highly parameter-efficient, and in practice it often achieves better performance with fewer parameters. Dense connections can also be combined with ResNet-style residual learning, as in the so-called Residual Dense Network, to further improve image quality in PI systems for tasks such as super-resolution [199], denoising [5], and dehazing [78].
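Dense connectivity is easiest to see in the channel bookkeeping. The sketch below uses 1×1 channel-mixing layers (matrix products over the channel axis) in place of the 3×3 convolutions of a real DenseNet; that simplification and the names are assumptions. Each layer receives the concatenation of the block input and all earlier outputs and adds `g` new channels (the growth rate).

```python
import numpy as np

def dense_block(x, layer_weights):
    """Dense connectivity: layer l sees the block input and all previous outputs.

    x: (C0, N) features (channels x positions); layer_weights[l] has shape
    (g, C0 + l * g), with g the growth rate. Returns C0 + L * g channels.
    """
    features = [x]
    for W in layer_weights:
        inp = np.concatenate(features, axis=0)      # all earlier feature maps
        features.append(np.maximum(0.0, W @ inp))   # g new channels (ReLU)
    return np.concatenate(features, axis=0)
```

With 4 input channels, a growth rate of 3, and 3 layers, the block outputs 4 + 3 × 3 = 13 channels, and the first 4 are the untouched input. Each layer only adds a few channels because it can reuse every earlier feature, which is where the parameter efficiency comes from.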

3. PI via Deep Learning

The performance of DL-based methods depends on the learned relations between inputs and outputs. For polarization information acquisition, one needs a database of multi-channel intensity images corresponding to different physical or polarimetric realizations of targets. Depending on the application at hand, the detection instrument may be a traditional Stokes imager [200], a Mueller ellipsometer [201], or a PolSAR system [151]. The DL structures are specially designed to enhance the quality of these outputs by adding physical constraints. The outputs can be intensity images in different channels or the corresponding polarization parameters presented in Figure 2.

The captured polarization information may become the input of the second step of PI, i.e., polarization information application. In other words, the second step builds on the outputs of the first, and the nature of its output depends on the task at hand. For example, it can be a denoised image in denoising/despeckling applications, a clear image in haze and cloud removal, or a feature map in object classification and detection.

Based on a captured polarization dataset, the physical relations between inputs and outputs can be learned by adjusting the connection weights and other parameters of the DNN. Consequently, data-driven approaches can effectively extract and process polarimetric information with high imaging performance [5,11,43,58]. Moreover, DL-based PI solutions are very fast at inference compared with traditional solutions, because a trained network requires only a feed-forward pass [121,168,202]. Recently, some researchers have developed DNNs that embed physical priors, models, and constraints into, for example, the forward operators, regularizers, and loss functions [5,11,168,174]. They have shown that such DNNs clearly outperform those that do not consider physical priors.

In the following two sections, we review DL-based methods for improving the performance of PI in terms of acquisition and application, respectively; a brief outline is shown in Figure 11. Specifically, we introduce representative DL-based works on polarization acquisition and application and show how DL enhances both PolSAR and OPI.

4. Polarization Information Acquisition

In some scenes, polarimetric images suffer from noise, speckle, haze, clouds, or reduced resolution, which degrades their quality and limits practical applications. Employing DL at the acquisition level of PI can significantly improve image quality, thanks to the power of data-driven methods and their ability to extract features.

4.1. Denoising and Despeckling

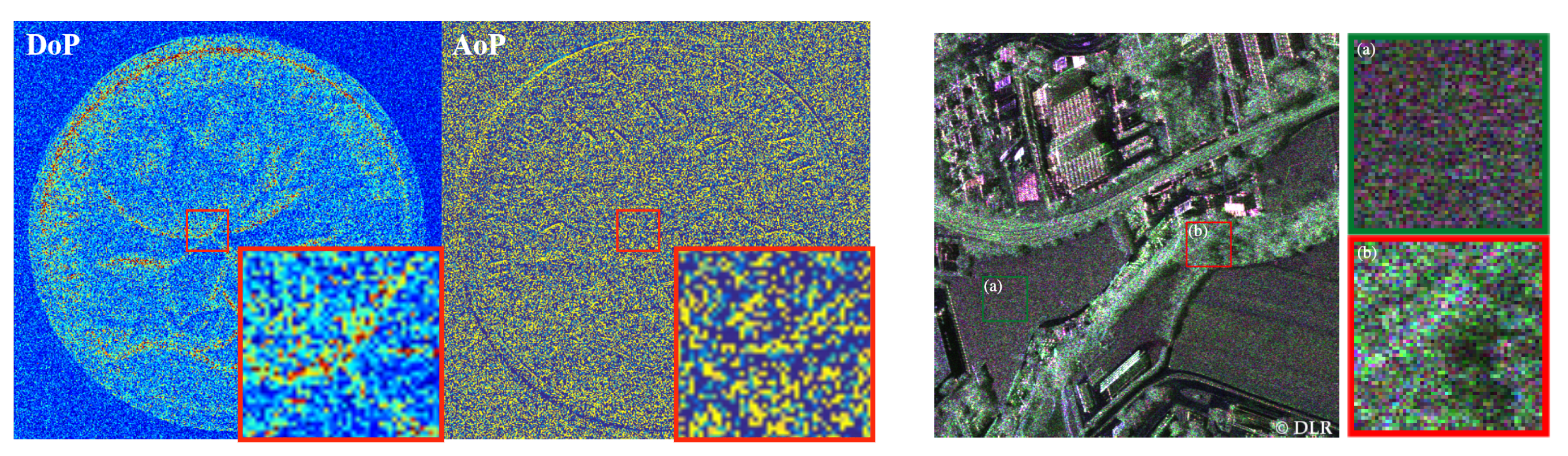

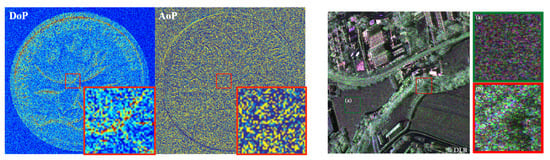

PI aims to measure and image polarization parameters and has been widely applied in many fields. However, the essential polarization parameters are derived from intensities or powers via nonlinear operators, which can amplify the noise corrupting the intensity/power measurements [203,204]; as a result, they are quite sensitive to noise. This is illustrated on the left of Figure 12, where details in the noisy DoLP/AoP images are difficult to distinguish [5,205]. In RS, speckle noise is one of the leading causes of SAR data quality reduction. Moreover, PolSAR data has a far more complex speckle model than conventional SAR data, because speckle appears both in the intensity images and in the complex cross-products between the various polarization channels [69,79]. Figure 12 (Right) illustrates non-stationary speckle in a PolSAR image (F-SAR airborne image, DLR). Because the radiometric and polarimetric behaviors differ, the two enlarged regions exhibit different noise levels, which makes speckle removal more difficult [206].
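The noise amplification can be checked with a small Monte Carlo sketch. It assumes a four-analyzer (0°, 45°, 90°, 135°) linear polarimeter, additive Gaussian intensity noise, and $S_0 = 1$; the helper names are hypothetical. Ideal intensities follow $I(\alpha) = \tfrac{1}{2} S_0\,[1 + \mathrm{DoLP}\cos 2(\alpha - \theta)]$. The same intensity noise yields a much larger AoP spread when the DoLP is small, because the AoP is the angle of the small vector $(S_1, S_2)$.

```python
import numpy as np

def dolp_aop_from_four(i0, i45, i90, i135):
    """DoLP and AoP from four linear-analyzer intensities (0, 45, 90, 135 deg)."""
    s0 = 0.5 * (i0 + i45 + i90 + i135)
    s1 = i0 - i90
    s2 = i45 - i135
    dolp = np.sqrt(s1**2 + s2**2) / s0
    aop = 0.5 * np.arctan2(s2, s1)
    return dolp, aop

def simulate(dolp, aop, sigma, n=10_000, seed=0):
    """Add Gaussian noise (std `sigma`) to ideal intensities with S0 = 1.

    Returns the standard deviation of the AoP error and the mean estimated DoLP.
    """
    rng = np.random.default_rng(seed)
    angles = np.deg2rad([0, 45, 90, 135])
    ideal = 0.5 * (1 + dolp * np.cos(2 * (angles - aop)))
    noisy = ideal[:, None] + sigma * rng.standard_normal((4, n))
    d, a = dolp_aop_from_four(*noisy)
    # AoP is defined modulo pi, so wrap the error into (-pi/2, pi/2]
    err = np.angle(np.exp(2j * (a - aop))) / 2
    return err.std(), d.mean()
```

Comparing `simulate(0.05, 0.3, 0.01)` with `simulate(0.8, 0.3, 0.01)` shows the AoP error growing as the DoLP falls. The mean estimated DoLP also drifts above the true value at low DoLP, because the square root of a sum of squares is biased upward under noise.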

Figure 12.

(Left) Noisy DoP/AoP images. (Right) F-SAR airborne image (DLR).

4.1.1. PI Case

In visible PI, many non-data-driven denoising methods have been proposed and generally perform well. For instance, Ref. [106] proposed a PCA-based denoising method that fully exploits the spatial correlation between different polarization states. To suppress noise, it relies on two crucial processes, dimensionality reduction and linear least mean squared-error estimation in the transformed domain. In 2018, Ref. [207] proposed a novel K-SVD-based denoising algorithm for polarization imagers. This algorithm efficiently eliminates Gaussian noise while preserving target details and edges well. In addition, BM3D-based denoising methods have been employed for polarimetric images [109,208] and preserve their details and edges well. However, these methods have two drawbacks. (1) The noise is assumed to be additive white Gaussian, whereas real noise may be more complex and affected by many factors, so the methods do not handle practical applications well. (2) Most methods rely on prior knowledge and require manual parameter tuning, so they are not robust across different conditions [5].
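The core idea behind PCA-based denoising can be sketched generically: project vectorized data (e.g., image patches or stacked polarization channels) onto the leading principal components, and discard the remaining components, which mostly carry noise. This is a plain PCA truncation under the assumption that the clean signal is approximately low-rank. It is not the specific scheme of Ref. [106], which additionally uses least-squares estimation in the transformed domain.

```python
import numpy as np

def pca_denoise(X, k):
    """Keep the k leading principal components of X (samples x features).

    Assumes the clean signal lies near a k-dimensional subspace, so that
    discarding the other components removes mostly noise.
    """
    mean = X.mean(axis=0, keepdims=True)
    Xc = X - mean
    # SVD of the centred data: the rows of Vt are the principal directions
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    P = Vt[:k]                       # (k, features)
    return Xc @ P.T @ P + mean       # project onto the subspace and add mean back
```

The choice of k is exactly the kind of hand-tuned parameter mentioned in drawback (2): too small removes signal, and too large keeps noise.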

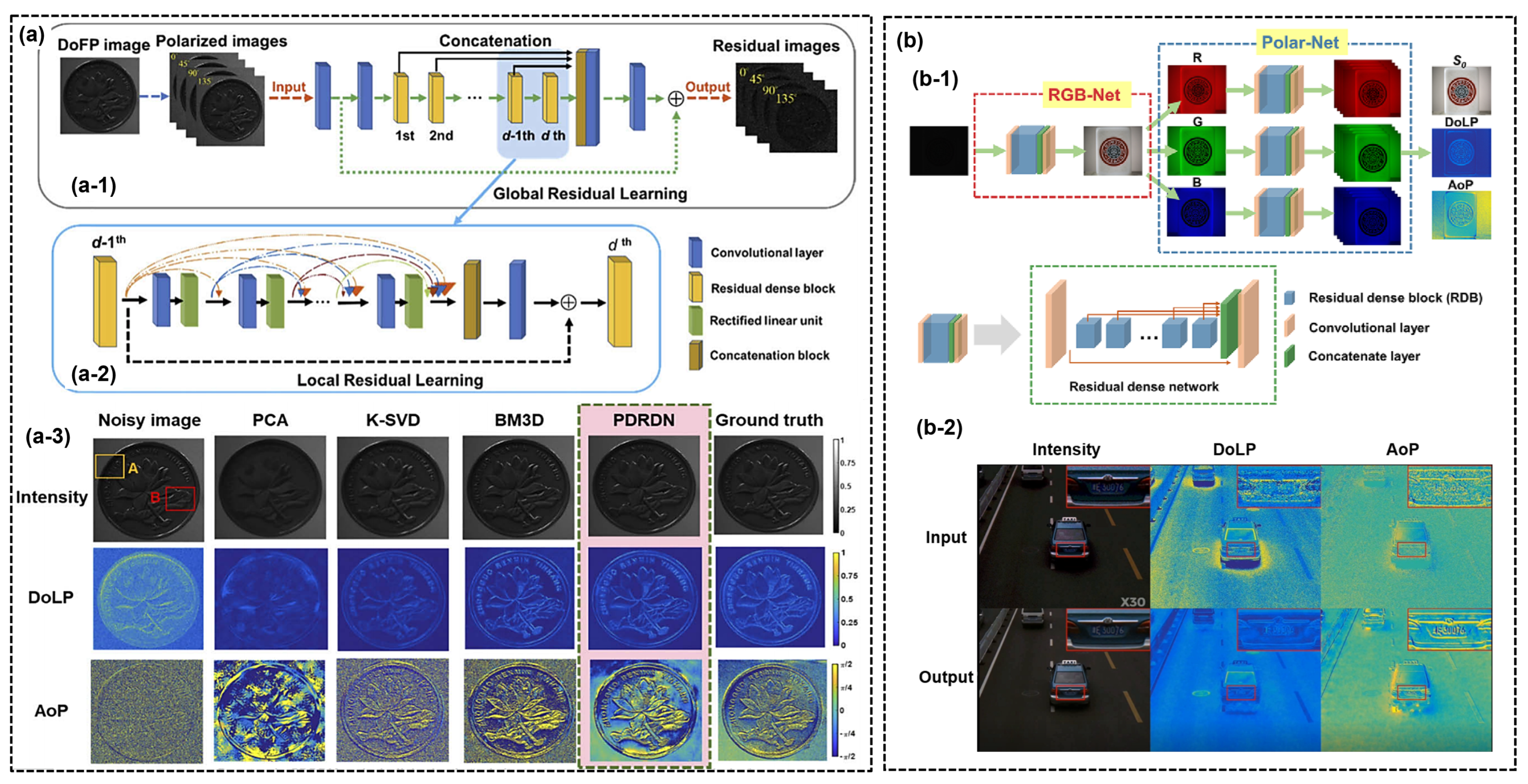

DL methods perform particularly well in various fields thanks to their excellent feature extraction abilities. In complex and strongly noisy environments, they are often more effective for image denoising or despeckling than other methods. In 2020, Ref. [5] proposed a residual dense network-based denoising method, whose structure is shown in Figure 13(a-1). This network takes a multi-channel polarization image as input and outputs the corresponding residual image. Figure 13(a-3) compares denoising results for different polarization parameters (i.e., intensity, DoLP, and AoP) obtained with different methods (i.e., PCA, BM3D, and the proposed DL-based solution). The DL-based method clearly performs best for all polarization parameters, and image details are well restored; the noise is removed particularly effectively in the AoP images. Moreover, the effectiveness of this method was verified on different materials. This was the first reported use of DL for denoising in PI.

Figure 13.

(a) DL-based denoising for visible polarimetric images [5]: (a-1) Network architecture; (a-2) Residual dense block; (a-3) Comparison of restored results. (b) Denoising for chromatic polarization imagers in low light [11]: (b-1) Network architecture; (b-2) Restored outdoor results.
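The intensity, DoLP, and AoP images compared in Figure 13 are not measured directly. They are computed from intensity images taken behind linear polarizers, which is why noise in the raw channels is amplified in DoLP and AoP. The minimal sketch below assumes ideal polarizers at 0°, 45°, 90°, and 135° and the standard linear Stokes definitions. The function names are illustrative.

```python
import numpy as np

def stokes_from_four(i0, i45, i90, i135):
    """Linear Stokes parameters from four ideal polarizer orientations."""
    s0 = 0.5 * (i0 + i45 + i90 + i135)   # total intensity
    s1 = i0 - i90                         # 0° versus 90° preference
    s2 = i45 - i135                       # 45° versus 135° preference
    return s0, s1, s2

def dolp_aop(s0, s1, s2, eps=1e-12):
    dolp = np.sqrt(s1 ** 2 + s2 ** 2) / (s0 + eps)
    aop = 0.5 * np.arctan2(s2, s1)        # radians, in (-pi/2, pi/2]
    return dolp, aop

def malus(s0, p, phi, theta):
    """Intensity behind an ideal linear polarizer at angle theta (partially linearly polarized light)."""
    return 0.5 * s0 * (1 + p * np.cos(2 * (theta - phi)))

angles = np.deg2rad([0, 45, 90, 135])
s0_true, p_true, phi_true = 1.0, 0.3, np.deg2rad(30)
clean = [malus(s0_true, p_true, phi_true, a) for a in angles]
dolp, aop = dolp_aop(*stokes_from_four(*clean))
print(dolp, np.rad2deg(aop))   # approximately 0.3 and 30.0

# Add the same Gaussian noise to every raw channel and compare relative errors.
rng = np.random.default_rng(1)
sigma = 0.01
noisy = [c + sigma * rng.standard_normal(100_000) for c in clean]
s0_n, s1_n, s2_n = stokes_from_four(*noisy)
dolp_n, aop_n = dolp_aop(s0_n, s1_n, s2_n)
rel_err_s0 = np.std(s0_n) / s0_true
rel_err_dolp = np.std(dolp_n) / p_true
print(rel_err_s0, rel_err_dolp)   # the DoLP relative error is several times larger
```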

Because of the low photon count, polarimetric images captured in low-light environments suffer from strong noise. This lowers image quality and degrades the accuracy of object detection and recognition [73,209,210]. Denoising under low-light conditions is therefore another essential task for visible PIs. In 2020, Ref. [11] first collected a chromatic polarimetric image dataset and proposed a three-branch (intensity, DoLP, and AoP) network, called IPLNet, to improve the quality of both polarization and intensity images.

Unlike the network in their earlier work [5], IPLNet has two sub-networks: RGB-Net and Polar-Net. RGB-Net produces an RGB feature map, which is divided into three channels and passed to Polar-Net to predict the polarization information. In addition, a polarization-related loss function balances the intensity and polarization features across the whole network. Indoor and outdoor (see Figure 13b) experiments verified its effectiveness. The models and results can be extended directly to autonomous driving to further improve target-recognition accuracy in complex conditions. In 2022, Ref. [211] proposed an attention-based CNN for PI denoising, in which a channel attention mechanism extracts polarization features by adjusting the contributions of different channels. Another notable contribution of this work is an adaptive polarization loss that makes the CNN focus on polarization information.
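Channel attention, as used in Ref. [211], reweights feature channels according to learned importance scores. The sketch below shows one common form, a squeeze-and-excitation (SE) block, as a NumPy forward pass with random placeholder weights. Whether [211] uses exactly this form is an assumption, as is the reduction ratio `r`.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def channel_attention(x, w1, b1, w2, b2):
    """SE-style channel attention. x: feature maps of shape (C, H, W)."""
    z = x.mean(axis=(1, 2))               # squeeze: global average pooling -> (C,)
    h = np.maximum(w1 @ z + b1, 0.0)      # bottleneck fully connected layer + ReLU -> (C // r,)
    a = sigmoid(w2 @ h + b2)              # per-channel weights in (0, 1) -> (C,)
    return x * a[:, None, None], a        # rescale each channel by its weight

rng = np.random.default_rng(0)
C, H, W, r = 16, 32, 32, 4
x = rng.standard_normal((C, H, W))
w1, b1 = 0.1 * rng.standard_normal((C // r, C)), np.zeros(C // r)
w2, b2 = 0.1 * rng.standard_normal((C, C // r)), np.zeros(C)
y, weights = channel_attention(x, w1, b1, w2, b2)
print(y.shape, weights.min(), weights.max())
```

In a trained network, `w1`, `b1`, `w2`, and `b2` are learned. Channels that carry more useful polarization cues then receive weights closer to 1.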

4.1.2. RS Case

In RS, various methods aim to suppress speckle noise, e.g., multi-look processing [212], filtering [213,214], wavelet-based despeckling, the BM3D algorithm [215], and TV methods [216]. These PolSAR speckle filters build on the traditional adaptive spatial-domain filters proposed in [45,213,214]. The NLM filter was originally developed to remove noise from conventional digital images [217], but it has recently been extended successfully to PolSAR images [105,218]. From 2014 to 2016, TV-based PolSAR despeckling methods were developed that combine the Wishart fidelity term of the original PolSAR TV model with a non-local regularization term designed for complex-valued fourth-order tensor data [104,216,219]. More details can be found in two representative reviews of PolSAR despeckling, Refs. [69,220]. However, these methods also have significant limitations. (1) Because they process data locally, spatial linear filters cannot fully preserve edges and features [61]. (2) NLM methods are computationally inefficient because of the similar-patch search, which limits their applications. (3) Variational methods depend strongly on model parameters and are time-consuming. In general, these techniques sometimes fail to preserve sharp features in regions with complex textures, or they generate unwanted block artifacts in speckled images [61].
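The adaptive spatial-domain filtering behind these PolSAR speckle filters can be illustrated with the classic Lee filter for a single intensity channel. Within each window, the estimate moves from the local mean toward the observed pixel in proportion to how much of the local variance exceeds what speckle alone would produce. The sketch assumes multiplicative speckle with `looks` equivalent looks and a square window of radius `r`. It is a single-channel illustration, not the polarimetric filters of [45,213,214].

```python
import numpy as np

def box_mean(img, r):
    """Mean over a (2r+1) x (2r+1) window with reflective borders."""
    H, W = img.shape
    p = np.pad(img, r, mode="reflect")
    acc = np.zeros((H, W))
    for di in range(2 * r + 1):
        for dj in range(2 * r + 1):
            acc += p[di:di + H, dj:dj + W]
    return acc / (2 * r + 1) ** 2

def lee_filter(y, looks=1, r=3):
    """Classic Lee filter for intensity images under multiplicative speckle y = x * n."""
    cu2 = 1.0 / looks                      # squared coefficient of variation of the speckle
    mean = box_mean(y, r)
    var_y = np.maximum(box_mean(y ** 2, r) - mean ** 2, 0.0)
    var_x = np.maximum((var_y - mean ** 2 * cu2) / (1.0 + cu2), 0.0)
    k = np.where(var_y > 0, var_x / np.maximum(var_y, 1e-12), 0.0)
    return mean + k * (y - mean)

rng = np.random.default_rng(0)
looks = 4
clean = np.full((64, 64), 2.0)
clean[:, 32:] = 6.0                        # two homogeneous regions separated by an edge
speckle = rng.gamma(shape=looks, scale=1.0 / looks, size=clean.shape)  # unit-mean speckle
noisy = clean * speckle
filtered = lee_filter(noisy, looks=looks)
flat = (slice(8, 56), slice(4, 24))        # interior of the left homogeneous region
print(noisy[flat].std(), filtered[flat].std())
```

The fixed square window is also what limits such filters near edges, which is the first limitation listed above.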

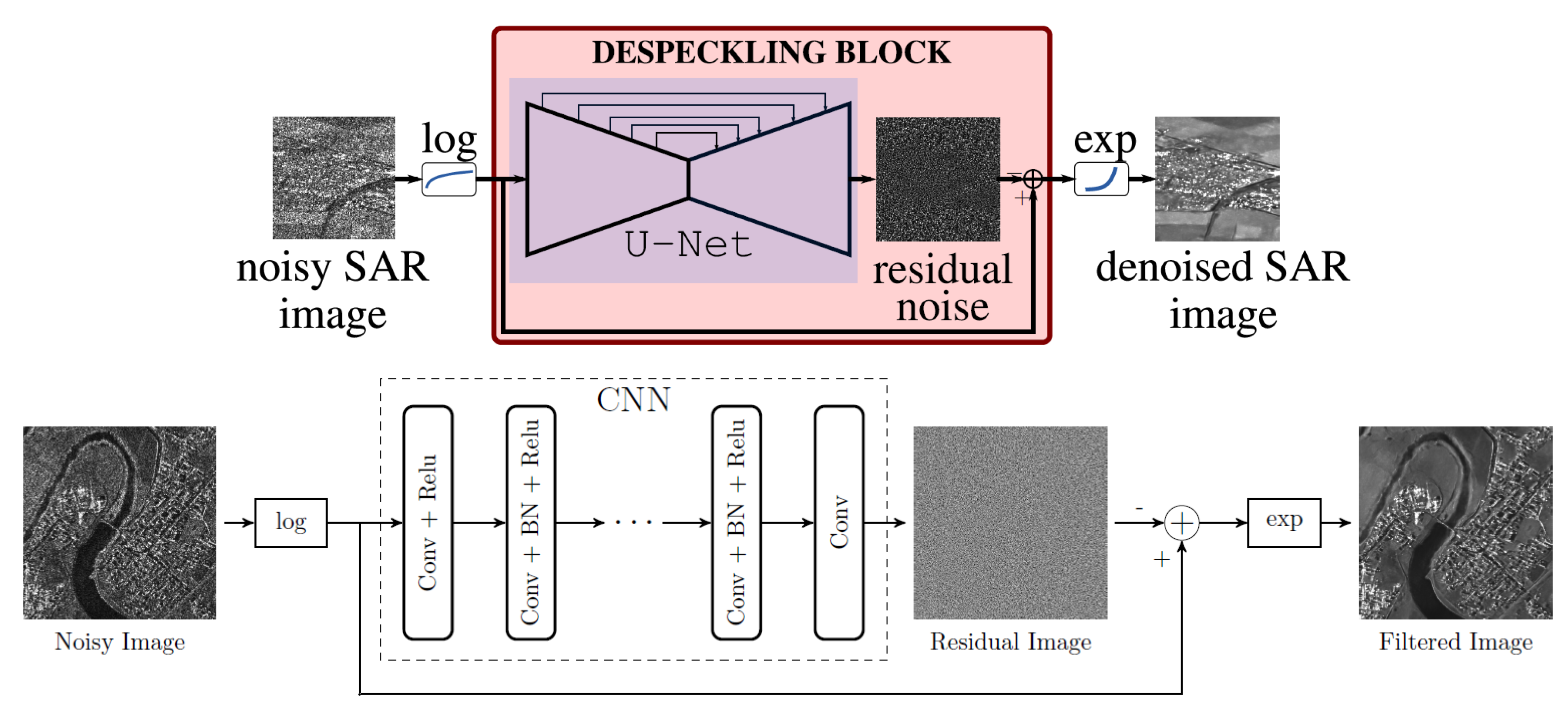

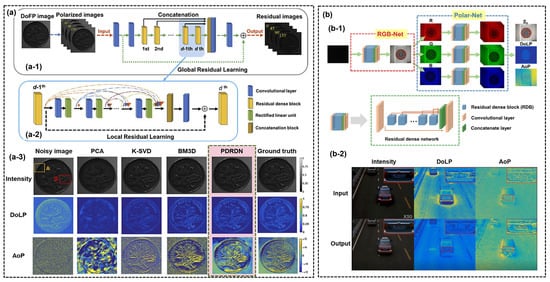

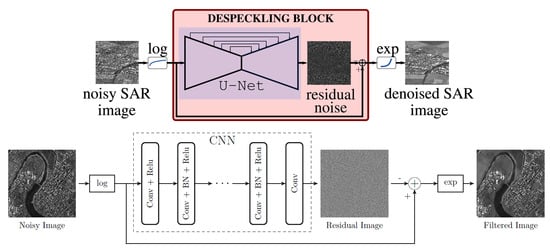

Most traditional denoising methods for SAR images require a statistical model of the signal and the speckle. To remove this requirement, some researchers extended DL approaches to SAR image despeckling [51,60,61,221,222]. Some of these methods are based on U-Net [221,222] and others on ResNet [61,223]. The corresponding network structures and denoising results are shown in Figure 14.

Figure 14.

(Up) U-Net [221]. (Down) ResNet [61].

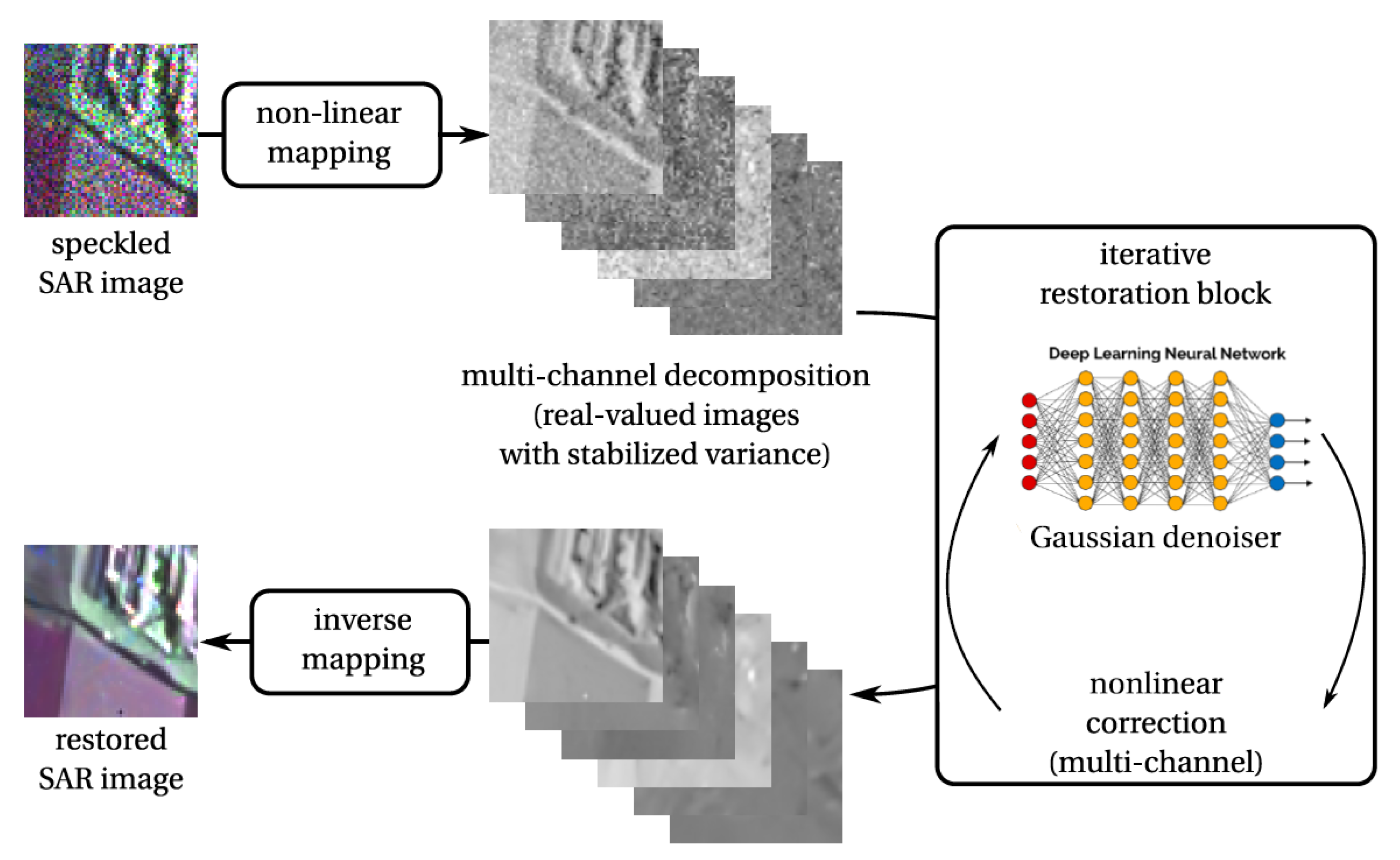

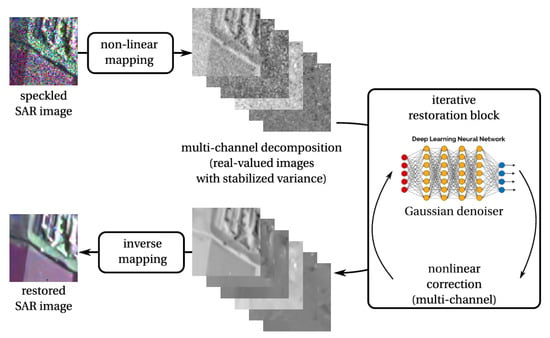

Notably, most DL-based despeckling methods are developed only for intensity-mode images. However, images captured by SAR polarimeters or interferometers have multiple channels and complex values, which makes despeckling more challenging [63,206,224]. In 2018, Ref. [206] was the first to apply DNNs to PolSAR despeckling. The method decomposes the complex-valued polarimetric and/or interferometric matrices into real-valued channels with stabilized variance. A DNN is then applied iteratively until all channels are restored. The bottom part of Figure 15 presents a restoration result on an airborne image captured by DLR's ESAR sensor (image over Oberpfaffenhofen provided with PolSAR).
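The idea of turning speckle into signal-independent additive noise before applying a denoiser can be illustrated in the single-channel case. Ref. [206] and MuLoG apply this idea to full polarimetric matrices. Under the usual fully developed speckle model, the intensity is y = x·n, with n Gamma distributed with unit mean. After a logarithm, log y = log x + log n, so the noise becomes additive and its variance no longer depends on the reflectivity x. This scalar sketch is a simplification, not the matrix-logarithm procedure of [206].

```python
import numpy as np

rng = np.random.default_rng(0)
looks = 1                                          # single-look speckle
n = rng.gamma(shape=looks, scale=1.0 / looks, size=200_000)   # unit-mean speckle

var_lin, var_log = {}, {}
for reflectivity in (1.0, 50.0):
    y = reflectivity * n                           # multiplicative speckle
    var_lin[reflectivity] = np.var(y)              # grows with reflectivity ** 2
    var_log[reflectivity] = np.var(np.log(y))      # independent of reflectivity
print(var_lin, var_log)   # for one look, var(log y) is close to pi**2 / 6
```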

Figure 15.

DNN-based PolSAR despeckling method [63].

PolSAR despeckling can be further improved by adding non-local post-processing to the CNN-based MuLoG method. In 2019, Ref. [225] designed an approach along these lines. The first step applies MuLoG, which uses a matrix logarithm transform and a channel decorrelation step to iteratively remove noise in each channel with a CNN denoiser. In the second step, the patches produced by the CNN step are filtered non-locally to smooth artifacts. The authors reported that this second step significantly reduces point-like artifacts in homogeneous areas. This suggests that combining non-local processing with DL is a promising idea for despeckling [225]. In 2021, Ref. [226] proposed a dual-complementary CNN that includes a sub-network to repair the structures and details of noisy RS images. By combining a wavelet transform with a shuffling operation, it recovers structural and textural details at a lower computational cost.

Although DL is a powerful solution for image denoising and despeckling, it usually needs vast datasets. Moreover, real scenes suffer from different types of noise or speckle (e.g., Gaussian, Poisson, and sparse noise). It is challenging to obtain enough data for the same scene under different types of noise. To address this issue, in 2022, Ref. [227] proposed a user-friendly unsupervised hyperspectral image denoising method under the deep image prior framework. Extensive experiments showed that it preserves image edges and removes different kinds of noise, including mixed types (e.g., Gaussian plus sparse noise). Because it requires neither regularization nor pre-training, it is more user-friendly than most existing methods.

4.2. Dehazing

Because backscattered light is partially polarized, PI is also an effective solution for restoring images in scattering media [28,29,35,58,78,228]. Polarization information makes it possible to remove the backscattered light effectively and extract the target signal; this problem is collectively known as dehazing. As early as 2001, Ref. [35] proposed using the polarization relationship between two orthogonally polarized images and the object radiance to remove haze. Refs. [228,229] proposed analyzing the DoLP and AoP of the backscattered light to remove haze or veiling light in scattering media. In 2018, Ref. [6] proposed a method combining computer vision and polarimetric analysis that performed well for gray-level image recovery in dense turbid media. However, these traditional methods often simplify the physical model of the underwater PI system, so the model differs from the actual situation. For example, they assume that the DoP of the backscattered light is constant and estimate it from a small local background region [229,230]. This assumption deviates from practical conditions.
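The polarization-based haze removal described for Ref. [35] can be sketched in a few lines, in the style of a simplified model of that kind. The sketch assumes the following:

- the object radiance is unpolarized;
- the airlight (backscatter) has a known, spatially constant DoP `p_air`, which is the constant-DoP simplification criticized at the end of the paragraph;
- the airlight radiance at infinity `a_inf` is known.

Let `i_max` and `i_min` be the images through the best and worst polarizer orientations. The airlight is then estimated as A = (I_max - I_min)/p, the transmission as t = 1 - A/A_inf, and the radiance as L = (I_max + I_min - A)/t. The function name and the clipping threshold `t_min` are hypothetical.

```python
import numpy as np

def polarization_dehaze(i_max, i_min, p_air, a_inf, t_min=0.05):
    """Recover object radiance from two orthogonally polarized images (simplified model)."""
    total = i_max + i_min                               # total intensity
    airlight = (i_max - i_min) / p_air                  # estimated airlight / backscatter
    t = np.clip(1.0 - airlight / a_inf, t_min, 1.0)     # transmission, clipped for stability
    return (total - airlight) / t

# Synthetic scene generated with the same model, so recovery should be exact.
rng = np.random.default_rng(0)
radiance = rng.uniform(0.2, 1.0, size=(32, 32))         # unpolarized object radiance L
t_true = np.linspace(0.3, 0.9, 32)[None, :] * np.ones((32, 1))  # transmission varies with distance
a_inf, p_air = 1.0, 0.4
airlight = a_inf * (1 - t_true)
direct = radiance * t_true
i_max = direct / 2 + airlight * (1 + p_air) / 2
i_min = direct / 2 + airlight * (1 - p_air) / 2
recovered = polarization_dehaze(i_max, i_min, p_air, a_inf)
print(np.abs(recovered - radiance).max())
```

On real data, `p_air` varies across the scene and the object light is partly polarized. That mismatch is what motivates the DL approaches below.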

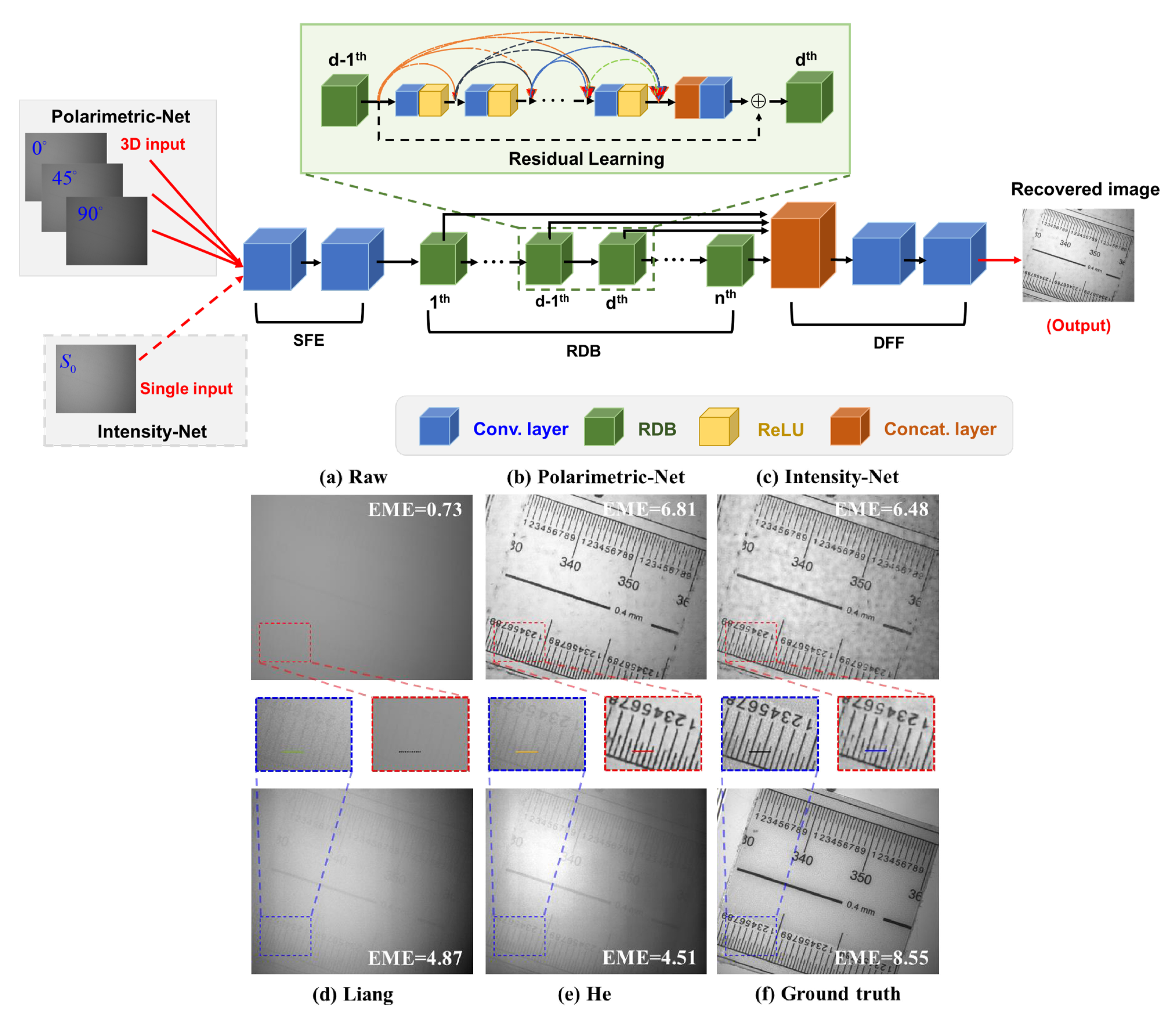

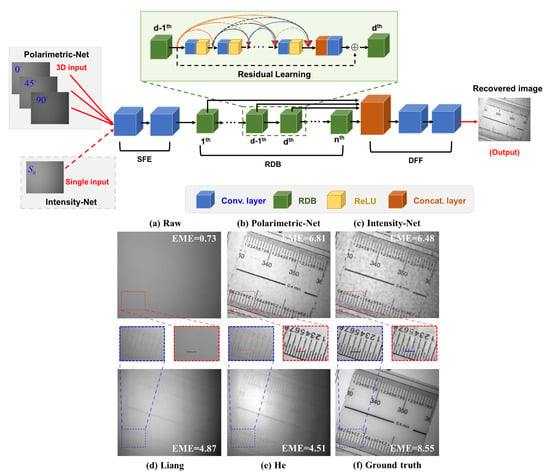

Applying DL to polarimetric dehazing/de-scattering is promising, especially in strongly scattering media. In 2020, Ref. [58] first built a dataset of 140 groups of image pairs using a DoFP polarization camera and then proposed a dense network for underwater image recovery. The upper part of Figure 16 shows the network structure. The input of Polarimetric-Net is a set of polarimetric images, whereas Intensity-Net uses only an intensity image; this design serves to verify the advantage of PI for haze removal. The lower part of Figure 16 compares the results recovered by different methods. In the raw image, the water is densely turbid, so image quality is poor and details are severely degraded. By contrast, the image recovered by Polarimetric-Net is the best, and details, even the ruler's scale, are clearly visible. This was the first reported work on dehazing with polarimetric DL. Its design and main idea could readily be extended to RS cloud removal.

Figure 16.

(Up) Architecture of polarimetric dense network. (Down) The raw image in turbid water and the images recovered by different methods [58].

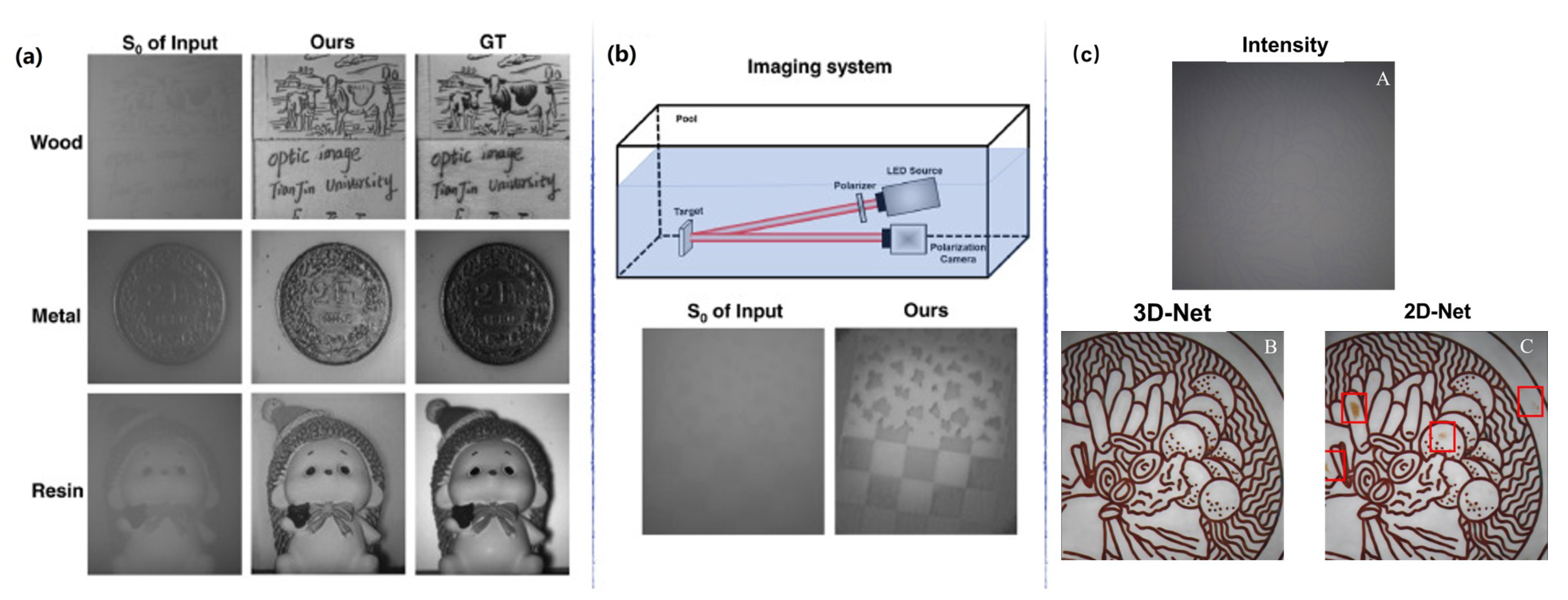

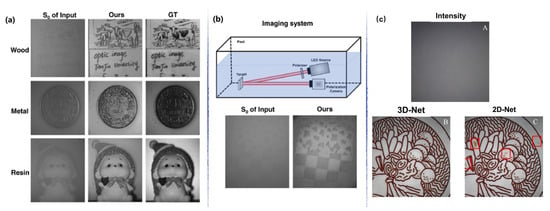

In 2022, to remove the dependence on strictly paired images, Ref. [64] proposed an unsupervised polarimetric GAN for underwater image recovery and incorporated polarization losses into the network to improve detail restoration. The results (Figure 17) show an average PSNR improvement of 3.4 dB, demonstrating its effectiveness and advantages under different imaging conditions. For underwater color polarized images, Ref. [231] proposed a 3D convolutional neural network that processes color intensity and polarization information jointly. The network models the relationships among these types of information and includes a dedicated polarization loss. Restoration results show that it significantly improves image contrast, restores details, and corrects color distortion. Moreover, compared with a conventional network structure (2D-Net in Figure 17c), this 3D-Net is markedly better at avoiding artifacts.

Figure 17.

(a) Recovered results for different materials and the experiment in the natural underwater environment. (b) The imaging system includes a target board, a homemade polarized light source, and a polarization camera [64]. (c) Comparison of 3D- and 2D-Network for underwater color polarized images [231], where A is the intensity image, B and C are the results related to 3D-Net and 2D-Net, respectively.

4.3. Super Resolution

When polarimetric images are captured, the limitations of detectors and optical systems may reduce resolution. For example, DoFP polarimeters are frequently employed in visible PIs to acquire polarimetric data such as the Stokes vector, DoLP, and AoP in a single shot. This excellent real-time performance is made possible by micro-polarizers periodically integrated on the focal plane. However, this arrangement lowers the spatial resolution, which in turn degrades the polarization parameters computed from the measurements.

Various interpolation algorithms have been developed to enhance resolution; this problem is also called demosaicing. Bilinear interpolation, one of the first two techniques used for DoFP imagers, has low computational complexity but also low accuracy. Bicubic interpolation, in contrast, is much more computationally intensive but achieves lower interpolation error in high-contrast areas [232,233,234]. More recently, with the rise of interest in DL, Ref. [235] proposed the first CNN solution for polarization demosaicing, named PDCNN. It divides the mosaicked polarization image into four channels, interpolates them with the bicubic method, and feeds them into a CNN that combines U-Net and skip connections. Comparisons with other methods on both DoLP and AoP images show that PDCNN outperforms them by a large margin. This was the first reported DL-based demosaicing method for PI.
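Bilinear demosaicing, the baseline against which PDCNN and later networks are compared, can be sketched with normalized convolution. Each polarization channel is sampled on a stride-2 lattice, and missing pixels are filled with a weighted average of the nearest samples of that channel. The 2×2 micro-polarizer layout below (90°/45° over 135°/0°) is an assumption. Real sensors differ, so `PATTERN` must be adapted to the camera.

```python
import numpy as np

# Assumed 2x2 micro-polarizer layout: (row offset, column offset) of each orientation.
PATTERN = {90: (0, 0), 45: (0, 1), 135: (1, 0), 0: (1, 1)}
# Bilinear weights for samples on a stride-2 lattice.
KERNEL = np.array([[0.25, 0.5, 0.25],
                   [0.5, 1.0, 0.5],
                   [0.25, 0.5, 0.25]])

def filter3x3(img, k):
    """3x3 filtering with zero padding (the kernel is symmetric, so correlation = convolution)."""
    H, W = img.shape
    p = np.pad(img, 1, mode="constant")
    out = np.zeros((H, W))
    for di in range(3):
        for dj in range(3):
            out += k[di, dj] * p[di:di + H, dj:dj + W]
    return out

def bilinear_demosaic(mosaic):
    """Return one full-resolution image per polarizer orientation."""
    H, W = mosaic.shape
    channels = {}
    for angle, (r, c) in PATTERN.items():
        mask = np.zeros((H, W))
        mask[r::2, c::2] = 1.0
        # Normalized convolution: dividing by the filtered mask also handles image borders.
        channels[angle] = filter3x3(mosaic * mask, KERNEL) / filter3x3(mask, KERNEL)
    return channels

# Uniform, partially polarized scene: every pixel records the intensity behind its own polarizer.
true = {0: 0.575, 45: 0.63, 90: 0.425, 135: 0.37}
mosaic = np.zeros((8, 8))
for angle, (r, c) in PATTERN.items():
    mosaic[r::2, c::2] = true[angle]
demosaiced = bilinear_demosaic(mosaic)
print({a: float(demosaiced[a].mean()) for a in demosaiced})
```

Bilinear interpolation is exact only for locally linear content. At sharp edges, each orientation is interpolated from pixels on different sides of the edge, which produces the DoLP and AoP artifacts that learned demosaicing tries to avoid.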

Zhang’s approach aims to minimize the interpolation error of the intensity images at different polarization states. In practical applications, however, researchers mainly need polarization parameters such as intensity, DoLP, and AoP. For this purpose, [234] introduced Fork-Net, a four-layer, end-to-end fully convolutional network designed to improve the image quality of these three parameters (intensity, DoLP, and AoP). Its simple architecture maps mosaicked images directly to the polarization parameters. This ensures a coherent optimization strategy and avoids the error accumulation of the stepwise approach, which first reconstructs the images at the individual polarization orientations and then computes DoLP and AoP. The authors also designed a customized loss function with a variance constraint to guide network training. Table 1 compares the average PSNRs of images produced by different methods: this network achieves the highest quality for intensity, DoLP, and AoP estimation.

Table 1.

PSNRs of different methods on the test set.

Subsequently, Ref. [236] extended this method to chromatic polarimetric images and proposed a color polarization demosaicing network, named CPDNet, to handle RGB and polarization demosaicing jointly. In 2019, Ref. [212] presented a demosaicing method based on a conditional generative adversarial network (cGAN), in which the generator is a U-Net and the discriminator is a PatchGAN. To encourage physically realizable and accurate demosaiced images, they introduced physics-based constraints and Stokes-related losses into the loss functions. Although this DL-based method does not rely on ground truth, which is often unavailable in PI, its performance is comparable to that of methods that do. In 2022, Ref. [22] proposed a new AoP loss calculation and applied it to a multi-branch color polarization demosaicking network. This network converges about three times faster than networks using the traditional AoP calculation. The speed-up arises because the new calculation resolves the “discontinuity” problem of the AoP at its wrap-around boundary, which shortens the network's optimization paths. Note that demosaicing is not the whole of resolution enhancement, because image resolution is reduced not only by down-sampling but also by blurring and noise. In 2023, Ref. [237] took this into account. They designed two degradation models (“down-sampling” and “down-sampling + blurring + noise”) and developed a polarization super-resolution method based on a residual dense network. Compared with other methods, it restores the details of polarization images well at a resolution-reduction factor of four.
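The AoP “discontinuity” arises because AoP is an axial angle with period π. Orientations just below +π/2 and just above -π/2 are nearly identical, yet a plain L1 loss treats them as far apart. One common remedy, sketched below, wraps the difference into [-π/2, π/2) before taking its magnitude. Whether Ref. [22] uses exactly this formulation is not stated here, so treat the sketch as an illustrative assumption.

```python
import numpy as np

def naive_aop_l1(pred, target):
    return np.mean(np.abs(pred - target))

def wrapped_aop_l1(pred, target):
    """L1 loss on AoP (radians) that respects its pi periodicity."""
    diff = np.mod(pred - target + np.pi / 2, np.pi) - np.pi / 2   # wrap into [-pi/2, pi/2)
    return np.mean(np.abs(diff))

pred = np.array([np.pi / 2 - 0.01])
target = np.array([-np.pi / 2 + 0.01])
print(naive_aop_l1(pred, target))    # close to pi: a huge penalty for nearly identical orientations
print(wrapped_aop_l1(pred, target))  # close to 0.02
```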

All the above models and methods can also be applied to the resolution enhancement of PolSAR images. PolSAR images sacrifice spatial resolution for more accurate polarization information [46]. This lower resolution can be limiting in some applications, so improving spatial resolution is necessary [82,238]. Exploiting the polarimetric channel information yields a more robust reconstruction, but the process is complex. The relationships between channels are also complicated because of the special imaging mode, in which echoes superpose coherently [239]. In other words, the relationships between polarization channels are hard to fit linearly [82], which makes resolution enhancement of PolSAR images more difficult.

Although CNN-based methods have been widely used for despeckling PolSAR images [82,240,241], techniques for improving their resolution had not yet been considered. In 2020, Ref. [82] addressed this gap with a residual CNN for PolSAR image super-resolution, the first CNN-based method for improving PolSAR image resolution. As shown in Figure 18, the method improves spatial resolution while retaining detailed information.

Figure 18.

PolSAR image super-resolution performance comparison (Bicubic [242], SRPSC [243], and the proposed PSSR) for urban and forest areas in San Francisco [82].

Compared with traditional methods, the mean PSNR is improved by up to 12%. In 2021, Ref. [244] proposed a fusion network that produces high-resolution PolSAR images from full PolSAR images and single-polarization synthetic aperture radar (SinSAR) images. The network uses a cross-attention mechanism to extract features. It takes into account both the polarization information of the low-resolution PolSAR images and the spatial information of the high-resolution single-polarization SAR images. Average PSNR values increase by 3.6 dB, while MAE values decrease by 0.07.
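PSNR and MAE are the metrics quoted for [82] and [244] and for several other works in this section. The sketch below computes both. The peak value `data_range` is an assumption and must match how the images are scaled (e.g., 1.0 for normalized data). Note that the 12% figure above is a relative change in PSNR, whereas 3.6 dB is an absolute difference.

```python
import numpy as np

def psnr(reference, estimate, data_range=1.0):
    """Peak signal-to-noise ratio in dB."""
    mse = np.mean((np.asarray(reference, float) - np.asarray(estimate, float)) ** 2)
    return 10.0 * np.log10(data_range ** 2 / mse)

def mae(reference, estimate):
    """Mean absolute error."""
    return np.mean(np.abs(np.asarray(reference, float) - np.asarray(estimate, float)))

ref = np.zeros((4, 4))
est = np.full((4, 4), 0.1)             # uniform error of 0.1, so MSE = 0.01
print(psnr(ref, est), mae(ref, est))   # approximately 20 dB and 0.1
```

Because PSNR is logarithmic in the MSE, halving the MSE raises PSNR by about 3.01 dB regardless of the starting value.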

4.4. Image Fusion

Image fusion, or multi-modal image fusion, is another critical step for applications such as detection and classification [245]. It consists of registering and combining images acquired with different imaging modalities. In RS, for example, the goal is to obtain data with both high spectral and high spatial resolution. PolSAR data are the first choice for classification tasks because they can characterize the geometric and dielectric properties of scatterers [46,246,247]. Fusing hyperspectral and PolSAR images is therefore of great interest and has high application potential [248].

There are many traditional examples of multi-source data fusion for RS applications. They include multispectral and panchromatic image fusion [245,249,250], hyperspectral and multispectral image fusion, and hyperspectral and polarimetric image fusion [52,251,252,253]. In particular, Ref. [254] developed a hierarchical fusion technique for land-cover classification using PolSAR and hyperspectral data. The hyperspectral data are first used to separate vegetation from non-vegetation areas, and the PolSAR data then classify the non-vegetation areas into man-made objects, water, or bare soil. Ref. [255] fused PolSAR and hyperspectral data through feature concatenation and then used decision fusion to combine the classification results of multiple classifiers. Ref. [256] used the two data sources to detect oil spills. Recently, extending DL methods to RS data fusion has become a hot topic [248,257]. Some typical examples are introduced next.

To extract features from and fuse hyperspectral images and PolSAR data, Ref. [52] proposed an effective solution: a two-stream CNN. The network builds identical but independent convolutional streams for each data source. The two streams, which have comparable dimensionalities, are then combined in a fusion layer. With this design, informative features from the two sources are effectively extracted for fusion and classification. Example classification results indicate that the CNN-based fusion approach effectively extracts features and fuses the complementary information from the two sources in a balanced manner.
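The two-stream idea can be illustrated with a deliberately small NumPy forward pass. Each data source has its own feature extractor that projects it to the same dimensionality. The two feature vectors are concatenated in a fusion layer, and a softmax classifier operates on the fused vector. Dense layers with random weights stand in for the convolutional streams of [52]. The band counts, feature size `d`, and class count are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def dense_relu(x, w, b):
    return np.maximum(x @ w + b, 0.0)

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)          # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

n_pixels, hsi_bands, polsar_feats, d, n_classes = 5, 144, 9, 32, 6
hsi = rng.standard_normal((n_pixels, hsi_bands))        # hyperspectral input per pixel
polsar = rng.standard_normal((n_pixels, polsar_feats))  # PolSAR features per pixel

# Independent streams mapping each source to the same dimensionality d.
w_h, b_h = 0.1 * rng.standard_normal((hsi_bands, d)), np.zeros(d)
w_p, b_p = 0.1 * rng.standard_normal((polsar_feats, d)), np.zeros(d)
f_h = dense_relu(hsi, w_h, b_h)
f_p = dense_relu(polsar, w_p, b_p)

fused = np.concatenate([f_h, f_p], axis=1)              # fusion layer: (n_pixels, 2d)
w_c, b_c = 0.1 * rng.standard_normal((2 * d, n_classes)), np.zeros(n_classes)
probs = softmax(fused @ w_c + b_c)
print(fused.shape, probs.argmax(axis=1))
```

Giving both streams the same output size `d` keeps the 144-band hyperspectral input from dominating the fused vector simply by having more features.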

In 2019, Ref. [97] proposed a generative-discriminative network, named PolNet, for fusing and classifying polarization and spatial features. In this network, a generative network and a discriminative network share the same bottom layers. This allows labeled and unlabeled PolSAR pixels to be shared for training in a semisupervised manner, which effectively alleviates the limited number of labeled pixels in PolSAR applications. In addition, to increase fusion precision, the network imposes a Gaussian random field prior on the learned fusion features and a conditional random field posterior on the output label configuration. Comparing label maps and median overall accuracies (OAs), the authors found that PolNet achieves the best accuracy among the compared methods and that almost all pixels match the ground truth well. The PolSAR images used in this work are of Flevoland.

Ref. [174] used an unsupervised DNN to fuse intensity and DoLP images. The network, named PFNet, learns the mapping to fused images without ground truth or complex activity-level measurements and fusion rules. Compared with other approaches, it gives the best visual quality. In 2020, Ref. [90] proposed a polarization fusion approach that uses a CNN and a feature extractor to obtain the distribution of polarization information. Experimental results demonstrate that the method effectively extracts polarization information, which in turn improves the detection rate.
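PFNet avoids hand-designed components such as explicit activity-level measurements. For contrast, a conventional fusion rule with such a measurement might look like the sketch below. Local variance serves as the activity measure, and each pixel is a weighted average of the intensity and DoLP images, with weights proportional to their activities. Both the activity measure and the weighting rule are illustrative choices, not taken from [174].

```python
import numpy as np

def local_variance(img, r=1):
    """Variance over a (2r+1) x (2r+1) window with reflective borders."""
    H, W = img.shape
    p = np.pad(img, r, mode="reflect")
    n = (2 * r + 1) ** 2
    s = np.zeros((H, W))
    s2 = np.zeros((H, W))
    for di in range(2 * r + 1):
        for dj in range(2 * r + 1):
            win = p[di:di + H, dj:dj + W]
            s += win
            s2 += win ** 2
    mean = s / n
    return np.maximum(s2 / n - mean ** 2, 0.0)

def activity_weighted_fusion(intensity, dolp, eps=1e-12):
    """Hand-crafted rule: weight each source by its local activity (variance)."""
    a_i = local_variance(intensity)
    a_d = local_variance(dolp)
    w = a_i / (a_i + a_d + eps)        # weight of the intensity image at each pixel
    return w * intensity + (1.0 - w) * dolp

intensity = np.full((16, 16), 0.5)                          # featureless intensity image
dolp = 0.2 + 0.1 * (np.indices((16, 16)).sum(axis=0) % 2)  # textured DoLP (checkerboard)
fused = activity_weighted_fusion(intensity, dolp)
print(np.abs(fused - dolp).max())   # the fused image takes the DoLP texture here
```

Every choice here, including the window size, the activity measure, and the weighting formula, must be tuned by hand. That is the burden PFNet removes by learning the mapping directly.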

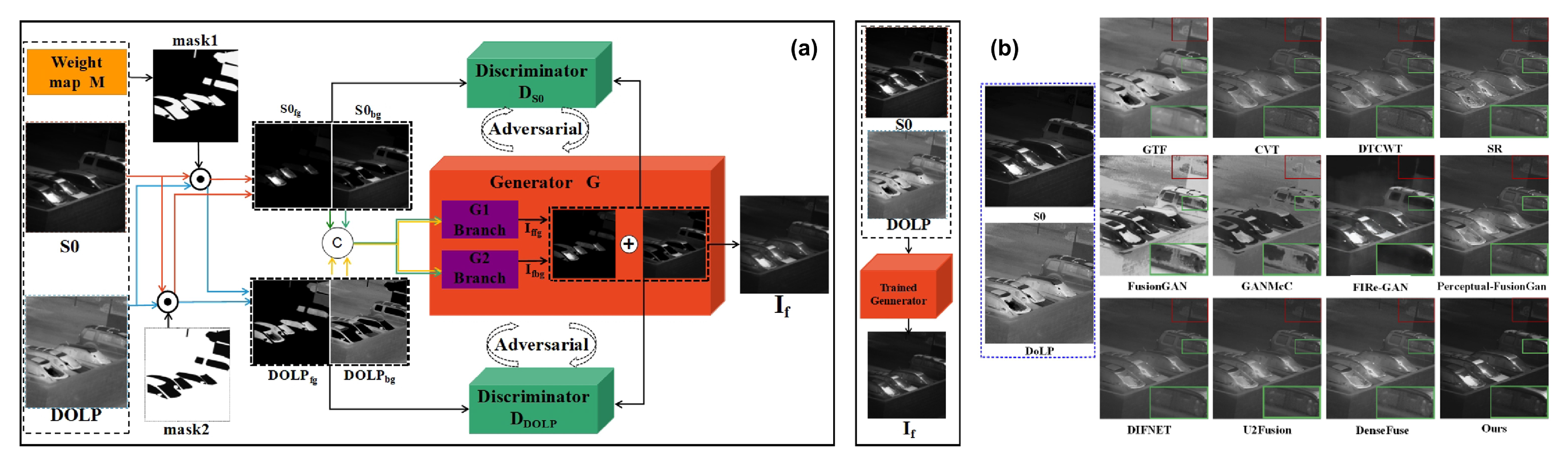

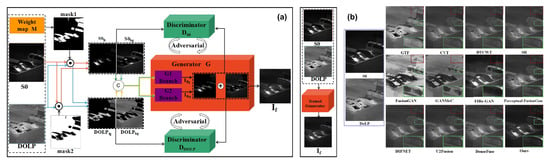

In 2022, Ref. [258] proposed a semantic-guided polarimetric fusion method based on a dual-discriminator GAN (SGPF-GAN). As shown in Figure 19, the network has one generator and two discriminators. The two discriminators aim to identify the polarization and intensity of multiple semantic targets. The generator constructs a fused image by weighted fusion of the image of each semantic object. Qualitative and quantitative evaluations confirm its advantages in both visual quality and quantitative metrics. In addition, this fusion approach greatly improves performance in detecting transparent and camouflaged hidden targets and in image segmentation.

Figure 19.

(a) Overall network structure of the proposed SGPF-GAN, (b) A polarized image fusion result [258].

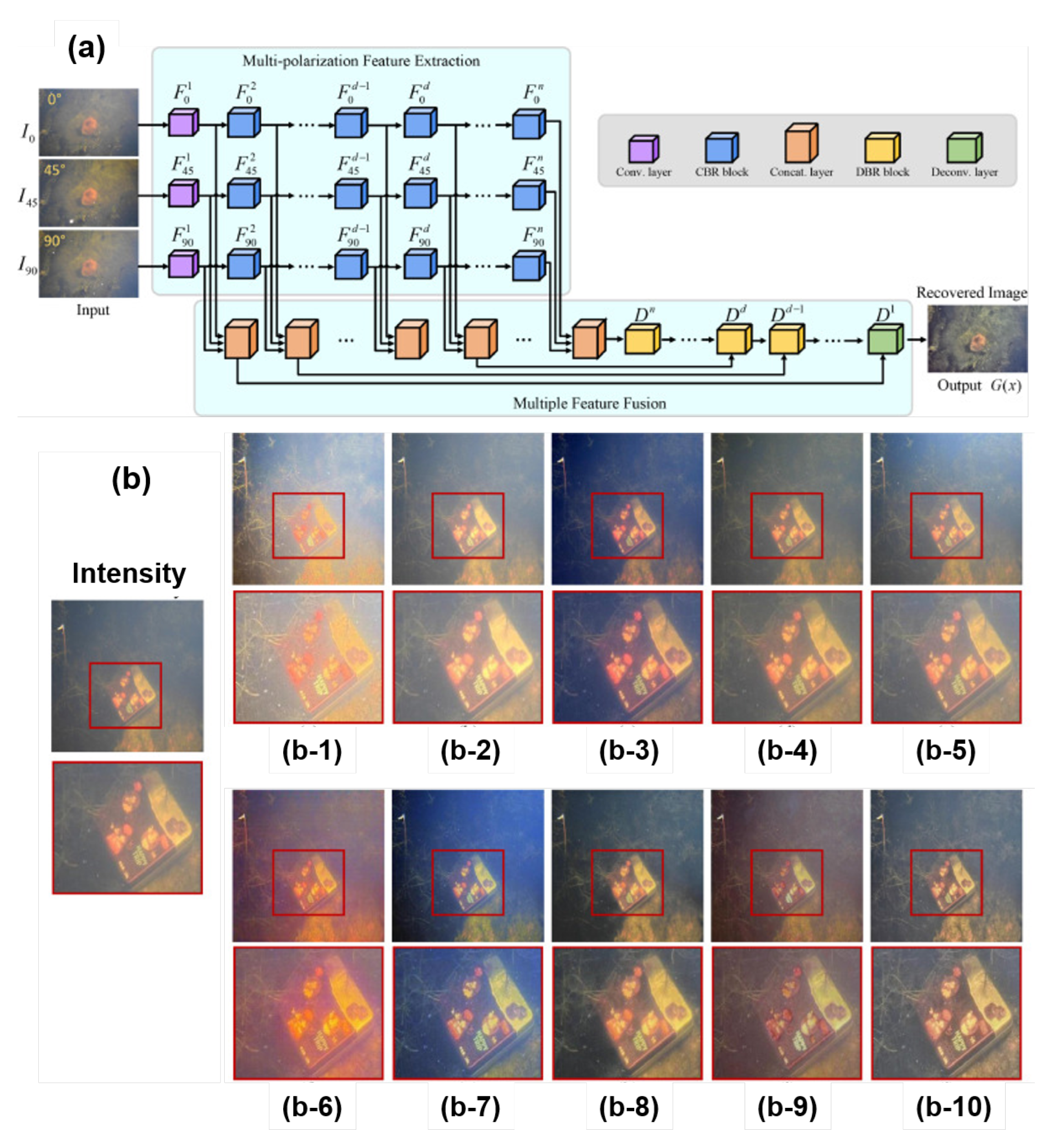

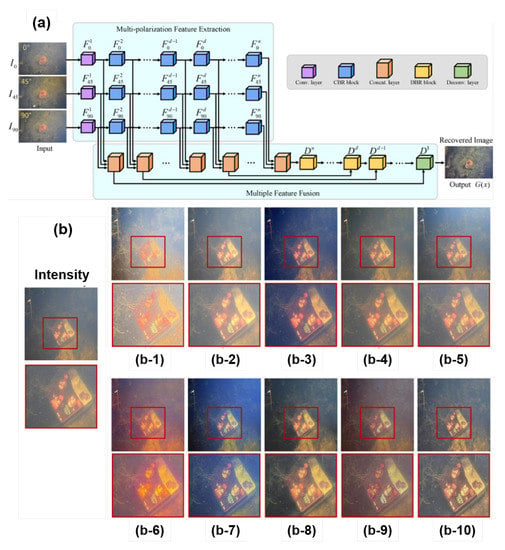

For underwater imaging, in 2022, Ref. [259] proposed a multi-polarization fusion GAN that learns the relationships between objects’ polarization and radiance information; its network architecture is presented in Figure 20a. Comparisons with other methods (Figure 20b) show that this method preserves more foreground and background details in turbid water.

Figure 20.

(a) Architecture of the multi-polarization fusion generator network for underwater image recovery [259]. (b) Comparisons of different methods on the images captured in natural underwater environments. (b-1) [260], (b-2) [230], (b-3) [261], (b-4) [262], (b-5) [263], (b-6) [264], (b-7) [265], (b-8) [266], (b-9) [267], (b-10) [259].

5. Polarization Information Applications

Once high-quality polarimetric images have been obtained, various practical applications can be addressed, such as object detection [268,269], segmentation [270,271], and classification [95,96]. Deep learning with network architectures and constraints adapted to each application can help extract useful polarimetric information and thus significantly improve performance [116,119,121,123,126].

5.1. Object Detection

Polarization information can characterize important physical properties of objects, including geometric structure, material, and roughness, even under complex conditions with poor illumination or strong reflections. The fundamental idea behind polarization-encoded imaging is to identify the polarization characteristics of light coming from objects or scenes. PI therefore has significant applications in object detection [4,46,268].

In visible imaging, road scene analysis is a fundamental task that plays a significant role in, e.g., autonomous vehicles and advanced driver assistance systems. PI can provide generic features of target objects under both good and adverse weather conditions [71,175,272]. For example, Ref. [175] combined the ability of polarization parameters to discriminate objects with the feature-extraction power of DNNs to detect road scene content in adverse conditions. In this way, detection in adverse conditions (e.g., fog, rain, and low light) improved by about 27%.

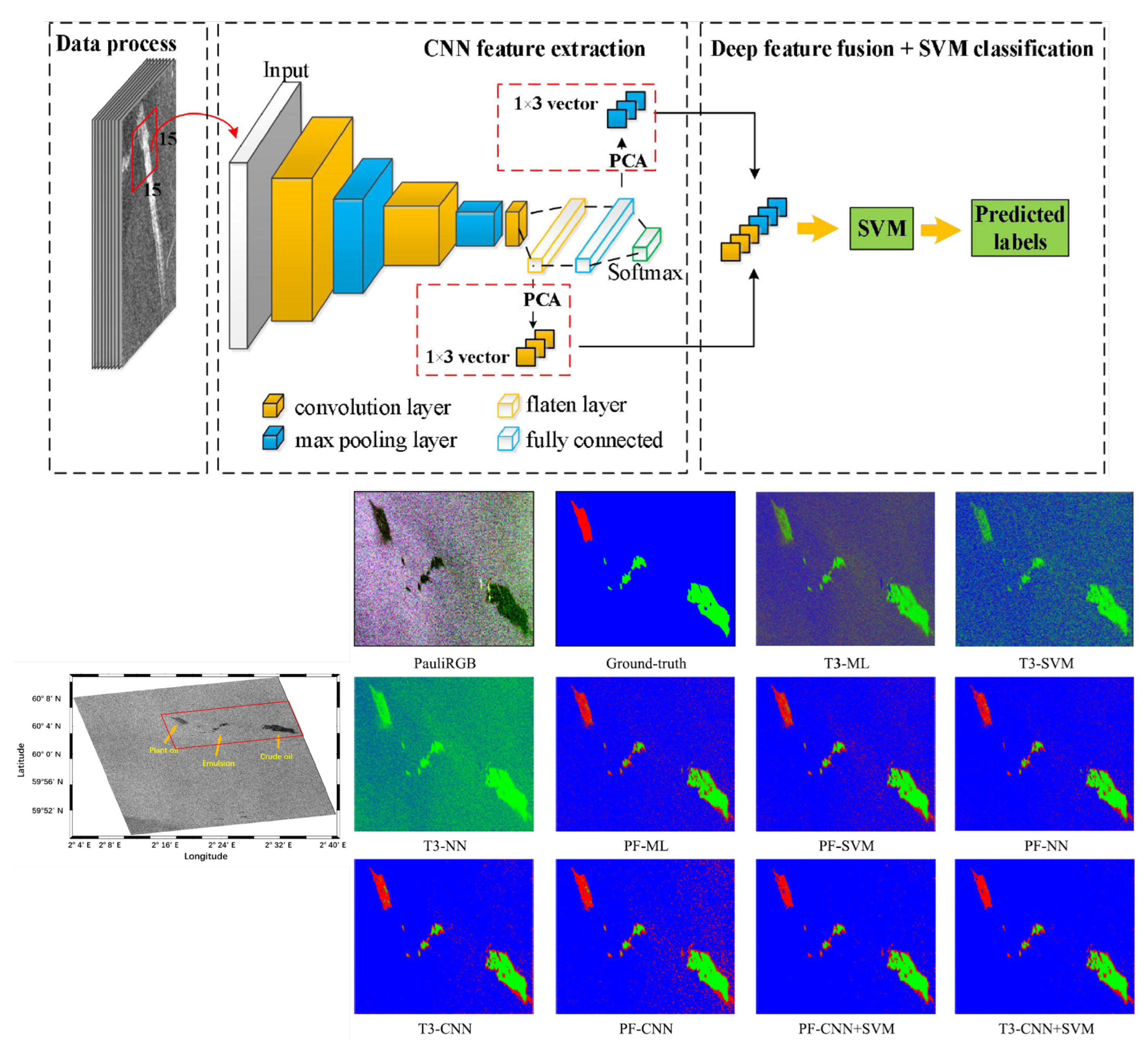

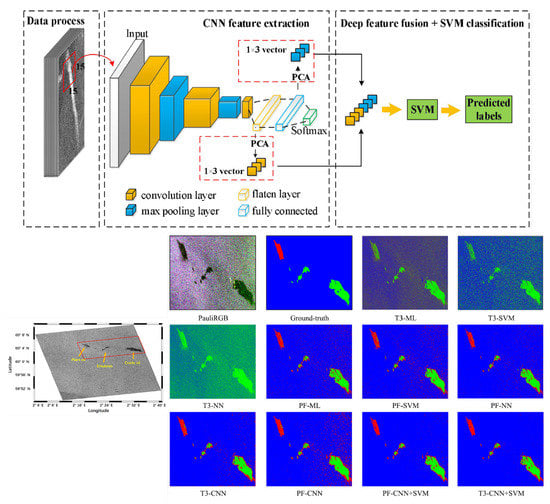

In RS, PI and PolSAR have also proven effective for detecting marine oil spills. The accuracy of conventional detection techniques depends on the quality of feature extraction, which in turn depends on manually designed polarization features [256,273]. DL-based solutions can automatically mine spatial features from datasets. For example, Ref. [273] developed an oil spill detection algorithm that benefits from multi-layer deep feature extraction by a CNN. Figure 21 presents the flowchart of this algorithm. The PolSAR data (the Hermitian complex coherency matrix) are first transformed into a nine-channel data block before being fed to the CNN. A five-layer CNN then extracts two high-level features from the original data. The feature dimension is reduced with PCA, and the merged features are classified by a support vector machine (SVM) with a radial basis function kernel. The lower part of Figure 21 compares the spill detection results of several approaches. It shows that this technique increases detection precision and successfully distinguishes an oil spill from a biogenic slick.
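The conversion of the coherency matrix into a nine-channel real block can be sketched as follows. Because T is a 3×3 Hermitian matrix, nine real numbers per pixel describe it fully: its three real diagonal entries plus the real and imaginary parts of its three upper off-diagonal entries. The sketch builds a single-look T = k·kᴴ from the Pauli scattering vector of a scattering matrix, assuming reciprocity (S_HV = S_VH). In practice, T is averaged over a local window, and the channel order used by [273] may differ.

```python
import numpy as np

def pauli_vector(s_hh, s_hv, s_vv):
    """Pauli scattering vector k = [S_HH + S_VV, S_HH - S_VV, 2 S_HV] / sqrt(2)."""
    return np.stack([s_hh + s_vv, s_hh - s_vv, 2 * s_hv], axis=-1) / np.sqrt(2)

def coherency_single_look(k):
    """T = k k^H at every pixel: (H, W, 3) -> (H, W, 3, 3) Hermitian."""
    return k[..., :, None] * np.conj(k[..., None, :])

def t3_to_nine_channels(T):
    """Real diagonal entries plus Re/Im of the upper off-diagonal entries."""
    chans = [T[..., i, i].real for i in range(3)]
    for i, j in ((0, 1), (0, 2), (1, 2)):
        chans += [T[..., i, j].real, T[..., i, j].imag]
    return np.stack(chans, axis=0)            # (9, H, W), ready to feed a CNN

rng = np.random.default_rng(0)
shape = (4, 5)
s_hh, s_hv, s_vv = (rng.standard_normal(shape) + 1j * rng.standard_normal(shape) for _ in range(3))
T = coherency_single_look(pauli_vector(s_hh, s_hv, s_vv))
x = t3_to_nine_channels(T)
span = np.abs(s_hh) ** 2 + 2 * np.abs(s_hv) ** 2 + np.abs(s_vv) ** 2
print(x.shape, np.allclose(x[0] + x[1] + x[2], span))   # (9, 4, 5) True
```

The trace of T equals the total backscattered power (span), which the last line checks.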

Figure 21.

(Top) Flowchart of ocean oil spill identification. (Down) The marine oil spill detection classification results of different methods for one dataset [273].

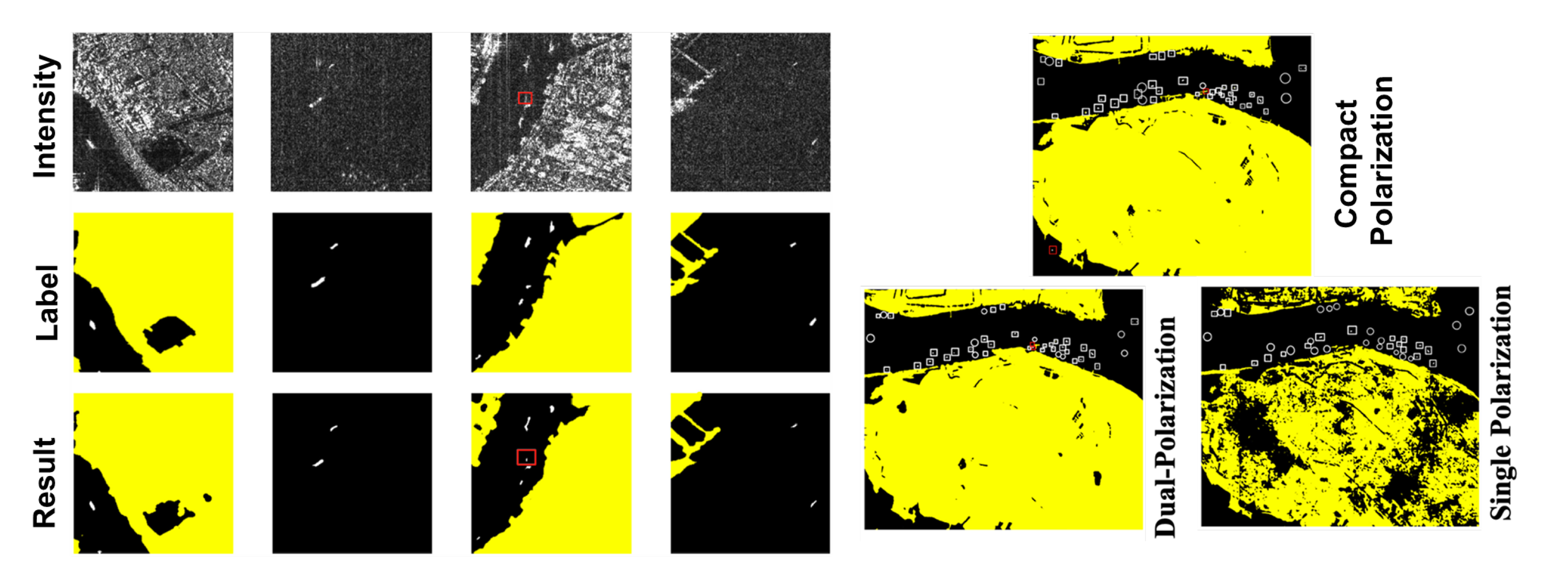

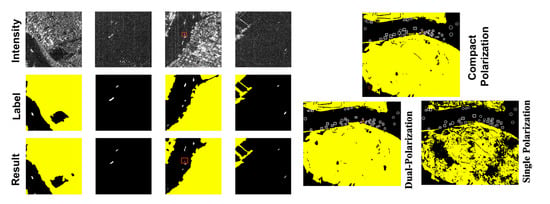

Ship detection, one of the most significant RS applications, is crucial for commercial, fishing, vessel traffic service, and military applications [91,274,275,276]. In 2019, Ref. [91] proposed a U-Net-based pixel-wise detection method for compact polarimetric SAR (CPSAR) images. It detects ships accurately both near and far from the shore, although cross side-lobes can generate false alarms. Examples of detection results are shown in the upper part of Figure 22. White and red rectangles indicate detected targets and false alarms, respectively, and white circles indicate missed targets. Compared with Faster R-CNN, the method improves precision and recall [277] by 6.54% and 8.28%, respectively, which verifies its ability to detect ships. The work also compares CPSAR with other polarization modes, such as single-polarization and linear dual-polarization configurations. As shown in the lower part of Figure 22, CPSAR is better at detecting ships.

Figure 22.

(Up) Illustrations of CPSAR images, corresponding label images, and detection results. (Down) Illustration of results from different polarization modes [91].

Combining PI with DL can also support change detection (CD), such as urban change, sea ice change, and land cover/use change [92,173,278,279,280,281]. In 2018, Ref. [92] proposed a local restricted CNN for CD in PolSAR images that can both recognize different types of change and reduce the influence of noise. Based on multi-temporal PolSAR data, Ref. [278] developed a weakly supervised framework for urban CD. The technique first learns multi-temporal polarimetric information with a modified unsupervised stacked AE. It then aggregates labels in feature space using a multi-layer perceptron. The authors evaluated its efficacy and precision on an L-band UAVSAR dataset acquired over Los Angeles.

In 2020, Ref. [173] proposed a CNN framework for land cover/use CD in RS data from several sources. Its efficiency and reliability were evaluated on three RS benchmark datasets (multispectral, hyperspectral, and PolSAR). Comparisons with several representative CD methods are shown in Table 2. As the first column of Table 2 shows, all CD methods achieve OA values above 91%, but the proposed method exceeds 98%. Its BA, sensitivity, and F1-score values are also above 90%, and its FA, MD, and CD precision are greatly improved compared with the other methods. In 2021, Ref. [282] proposed a ship detection method for sea areas containing land that does not require a traditional sea-land segmentation step. The two-stage method handles ship detection well under complex conditions, such as offshore areas, with accuracy and F1 score reaching 99.4% and 0.99, respectively.
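The metrics listed in Table 2 are all derived from the binary confusion matrix of changed and unchanged pixels. Definitions of FA and MD vary between papers. The sketch below assumes the following definitions, which may differ from those used in [173]:

- FA = false positives / unchanged pixels;
- MD = false negatives / changed pixels;
- BA = the mean of sensitivity and specificity.

The counts in the example are made up.

```python
def change_detection_metrics(tp, fp, fn, tn):
    """Binary change-detection metrics from confusion-matrix counts."""
    total = tp + fp + fn + tn
    sensitivity = tp / (tp + fn)          # recall on changed pixels
    specificity = tn / (tn + fp)
    precision = tp / (tp + fp)
    return {
        "OA": (tp + tn) / total,
        "BA": 0.5 * (sensitivity + specificity),
        "sensitivity": sensitivity,
        "precision": precision,
        "F1": 2 * precision * sensitivity / (precision + sensitivity),
        "FA": fp / (fp + tn),             # false alarms among unchanged pixels (assumed definition)
        "MD": fn / (tp + fn),             # missed detections among changed pixels (assumed definition)
    }

m = change_detection_metrics(tp=90, fp=5, fn=10, tn=895)
print({k: round(v, 4) for k, v in m.items()})
```

Because changed pixels are usually a small minority, OA can be high even when many changes are missed. This is why BA, F1, and MD are reported alongside it.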

Table 2.

The accuracy of different change detection methods for the PolSAR-San Francisco dataset.

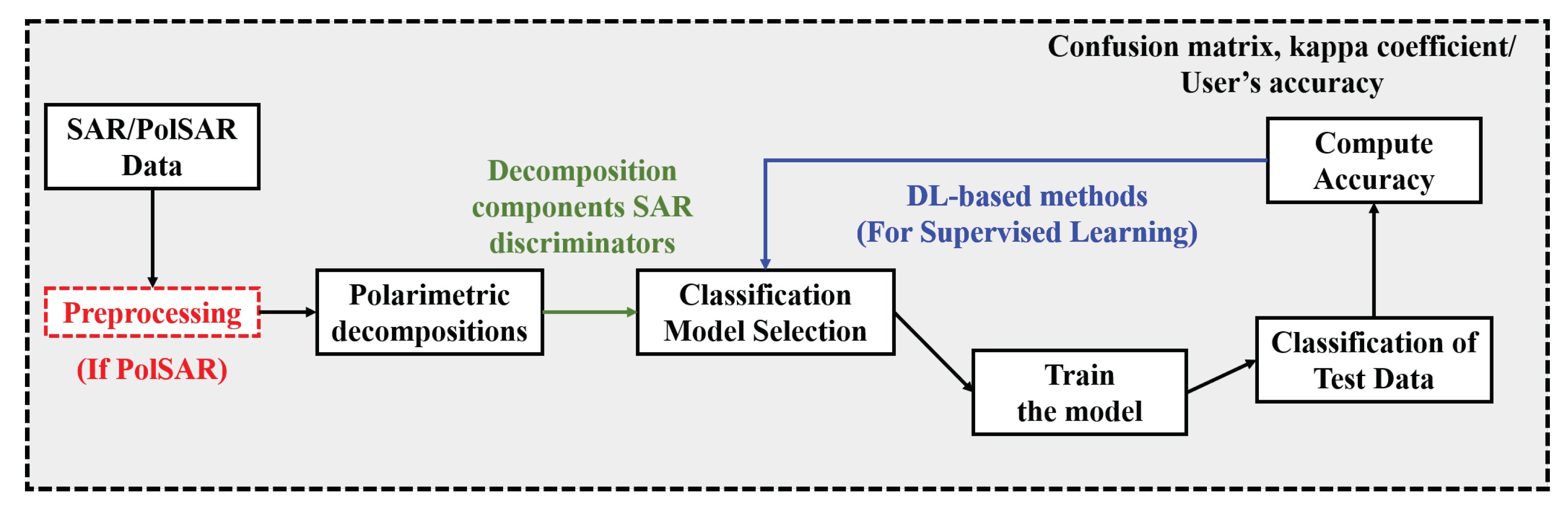

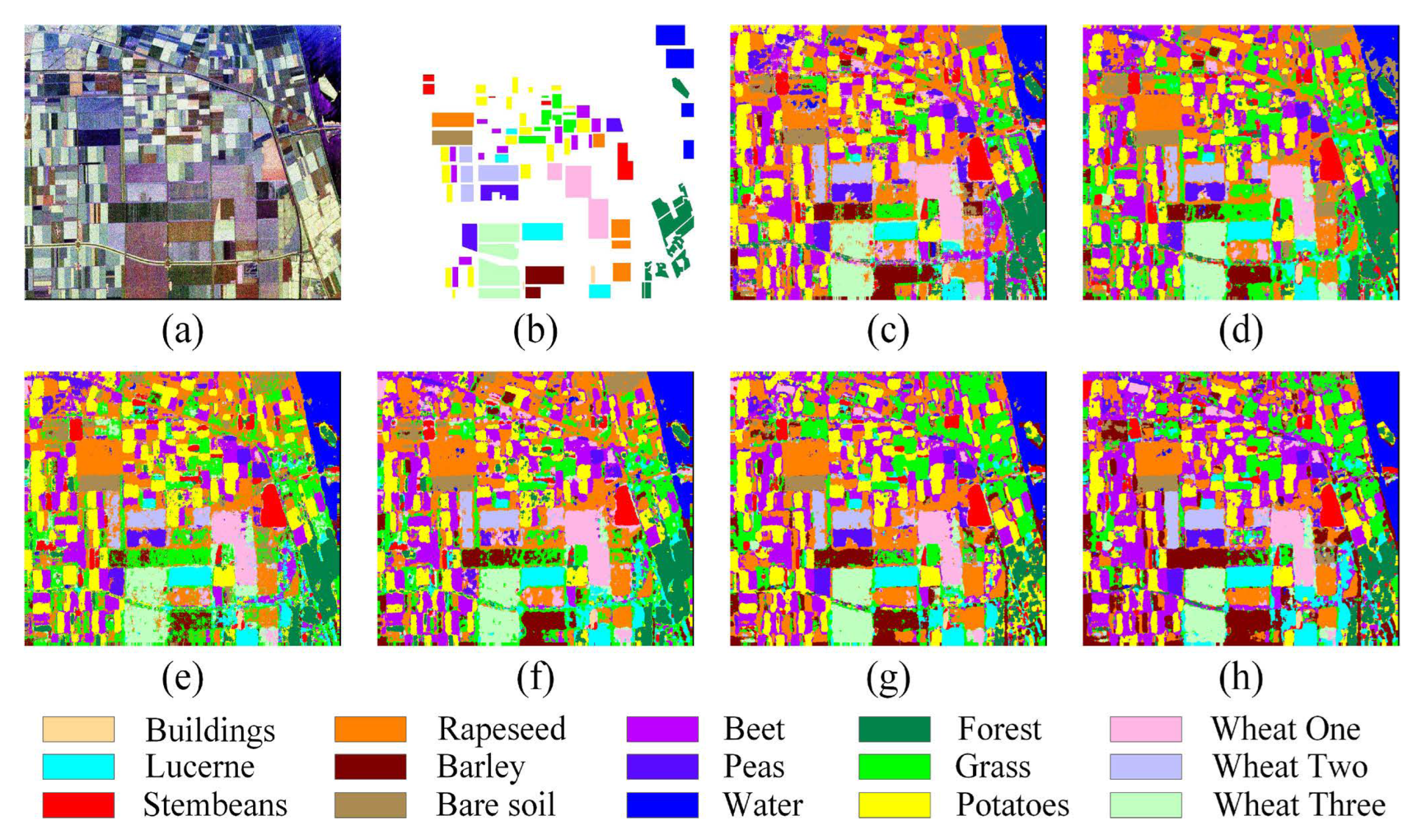

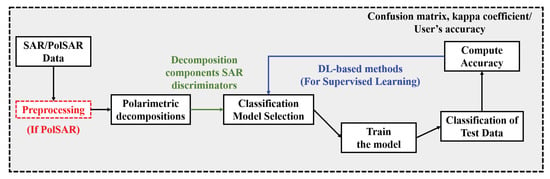

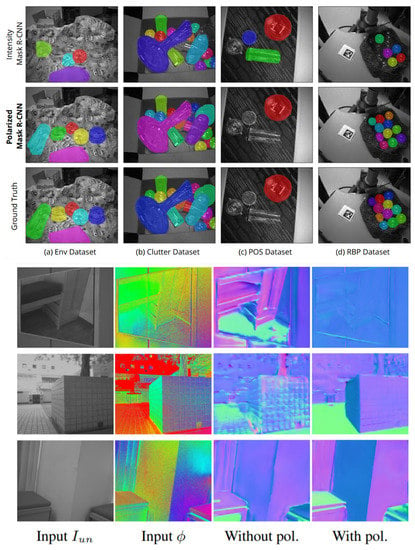

5.2. Target Classification

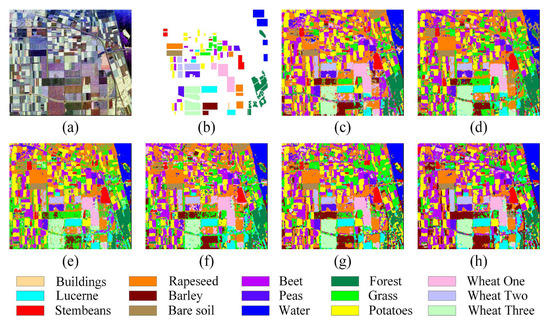

Classification of SAR/PolSAR data is among the highest-priority tasks in RS. PolSAR provides more information than other SAR systems, so its polarization information can further improve classification accuracy. This has many applications in oceanography, agriculture, forestry, and disaster monitoring [43,95,96,283,284]. Among these applications, land use/cover classification in PolSAR images is one of the most challenging tasks. It involves different land classes, such as desert, lake, agricultural, forest, and urban areas [46,116]. Studies on PolSAR classification help in understanding different environmental elements and their impacts [285,286]. Figure 23 presents a general classification scenario [43].

Figure 23.

General classification scenario of SAR images.

In practice, classification can be based on multi-channel PolSAR data or on specific parameters. As early as 2014, Shang, Hirose, et al. applied a neural-network technique called the quaternion neural network (QNN) to land classification [287,288,289,290]. The QNN was effective and outperformed contemporary methods. It handles the multidimensional polarization parameters it uses (e.g., Poincaré-sphere parameters [287,288] and the Stokes vector [289]) as single quaternion-valued entities. With the development of many productive DL models, DL has been used successfully to classify PolSAR data and has addressed the processing of large, multidimensional polarization datasets.
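The appeal of quaternions for Poincaré-sphere parameters can be seen in a small sketch. A normalized Stokes vector (S1, S2, S3)/S0 is a point on the Poincaré sphere and can be stored as a pure quaternion. Multiplying it by a unit quaternion and that quaternion's conjugate rotates the point as one 3-D entity rather than as three unrelated real numbers. The function names and example values are hypothetical. The sketch shows only the quaternion algebra, not the QNN of [287,288,289,290].

```python
import numpy as np

def hamilton(q, r):
    """Hamilton product of quaternions q = (w, x, y, z) and r."""
    w1, x1, y1, z1 = q
    w2, x2, y2, z2 = r
    return np.array([
        w1 * w2 - x1 * x2 - y1 * y2 - z1 * z2,
        w1 * x2 + x1 * w2 + y1 * z2 - z1 * y2,
        w1 * y2 - x1 * z2 + y1 * w2 + z1 * x2,
        w1 * z2 + x1 * y2 - y1 * x2 + z1 * w2,
    ])

def conj(q):
    return np.array([q[0], -q[1], -q[2], -q[3]])

def stokes_to_pure_quaternion(s):
    """Map a Stokes vector (S0, S1, S2, S3) to (0, S1/S0, S2/S0, S3/S0) on the Poincaré sphere."""
    s = np.asarray(s, dtype=float)
    return np.concatenate([[0.0], s[1:] / s[0]])

def rotate(point_q, unit_q):
    """Rotate a pure quaternion by a unit quaternion: u p u*."""
    return hamilton(hamilton(unit_q, point_q), conj(unit_q))

p = stokes_to_pure_quaternion([1.0, 0.6, 0.0, 0.8])            # fully polarized example point
angle = np.pi / 2
u = np.array([np.cos(angle / 2), 0.0, 0.0, np.sin(angle / 2)])  # 90° rotation about the S3 axis
p_rot = rotate(p, u)
print(p_rot)   # approximately (0, 0, 0.6, 0.8): still on the unit sphere
```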

In 2016, Ref. [181] first designed an AE model for terrain classification and achieved a remarkable improvement in classification accuracy. Ref. [291] proposed a PolSAR terrain classification framework using deep CNNs. It represents the original PolSAR data by 6-D real-valued data computed from the coherency matrix and, thanks to the CNN, naturally exploits spatial information for terrain classification. Table 3 lists the accuracy for the labeled area in the Flevoland image; the overall accuracy for 15 classes reaches 92.46%. Ref. [292] observed that previous methods classify each PolSAR pixel independently and thus ignore the inherent interrelations between land covers. To solve this problem, they used a fixed-feature-size CNN, named FFS-CNN, that classifies all pixels in a patch simultaneously; as a result, it is faster than other CNN-based methods.
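A 6-D real-valued representation computed from the coherency matrix, of the kind used by Ref. [291], can be sketched as follows. The specific six features are an assumption about the construction and should be checked against [291]:

- the log-span;
- two normalized diagonal powers;
- three magnitude correlation coefficients of the off-diagonal terms.

T is averaged over several simulated looks so that the correlation coefficients are meaningful.

```python
import numpy as np

def six_d_features(T, eps=1e-12):
    """T: (..., 3, 3) multilook coherency matrices -> (..., 6) real features (assumed feature set)."""
    t11, t22, t33 = (T[..., i, i].real for i in range(3))
    span = t11 + t22 + t33
    return np.stack([
        10 * np.log10(span + eps),                         # total power in dB
        t22 / (span + eps),                                # normalized T22
        t33 / (span + eps),                                # normalized T33
        np.abs(T[..., 0, 1]) / np.sqrt(t11 * t22 + eps),   # |T12| correlation coefficient
        np.abs(T[..., 0, 2]) / np.sqrt(t11 * t33 + eps),   # |T13| correlation coefficient
        np.abs(T[..., 1, 2]) / np.sqrt(t22 * t33 + eps),   # |T23| correlation coefficient
    ], axis=-1)

rng = np.random.default_rng(0)
looks, n_pixels = 9, 100
# Random complex Pauli vectors for each look and pixel: (looks, n_pixels, 3).
k = (rng.standard_normal((looks, n_pixels, 3)) + 1j * rng.standard_normal((looks, n_pixels, 3))) / np.sqrt(2)
T = np.mean(k[..., :, None] * np.conj(k[..., None, :]), axis=0)   # multilook average -> (n_pixels, 3, 3)
feats = six_d_features(T)
print(feats.shape)   # (100, 6)
```

Normalizing by the span and using correlation coefficients keeps most features in [0, 1]. This gives the CNN inputs on comparable scales.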

Table 3.

Accuracy for the labeled area in the image of Flevoland.

Notably, these methods consider only the pixels’ amplitude and therefore cannot obtain sufficiently discriminative features. In 2019, Ref. [140] designed a complex-valued convolutional auto-encoder network named CV-CAE. Because all encoding and decoding operations are extended to the complex domain, the phase information of PolSAR images can be exploited. To further improve performance, the authors also proposed a post-processing technique called spatial pixel-squares refinement. It increases refinement efficiency by computing a blocky land-cover structure. Figure 24 compares the classification results of different algorithms; CV-CAE achieves better intra-class smoothness and inter-class distinctness than the compared algorithms.
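Extending convolution to the complex domain, as CV-CAE does, needs no special machinery. A complex convolution can be assembled from four real convolutions, since (a + ib)(w + iv) = (aw - bv) + i(av + bw). The sketch below checks this identity on a single channel with a naive "valid" cross-correlation written for illustration. It is not the CV-CAE code.

```python
import numpy as np

def correlate_valid(x, k):
    """'Valid' 2-D cross-correlation (the 'convolution' of DL frameworks); real or complex inputs."""
    H, W = x.shape
    kh, kw = k.shape
    out = np.zeros((H - kh + 1, W - kw + 1), dtype=np.result_type(x, k))
    for i in range(kh):
        for j in range(kw):
            out += k[i, j] * x[i:i + out.shape[0], j:j + out.shape[1]]
    return out

def complex_conv_from_real(x, k):
    """Complex cross-correlation built from four real cross-correlations."""
    a, b = x.real, x.imag
    w, v = k.real, k.imag
    real = correlate_valid(a, w) - correlate_valid(b, v)
    imag = correlate_valid(a, v) + correlate_valid(b, w)
    return real + 1j * imag

rng = np.random.default_rng(0)
x = rng.standard_normal((8, 8)) + 1j * rng.standard_normal((8, 8))   # e.g., one complex PolSAR channel
k = rng.standard_normal((3, 3)) + 1j * rng.standard_normal((3, 3))   # complex-valued kernel
direct = correlate_valid(x, k)
split = complex_conv_from_real(x, k)
print(np.allclose(direct, split))   # True
```

The real and imaginary parts interact in every output, so relative phase between pixels influences the features. An amplitude-only input discards exactly this information.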

Figure 24.

Classification results of the algorithms in [140] and the compared algorithms: (a) WAE [293] (b) WCAE [293] (c) RV-CAE (d) FFS-CNN [292] (e) CV-CAE [140] (f) CV-CAE+SPF [140].

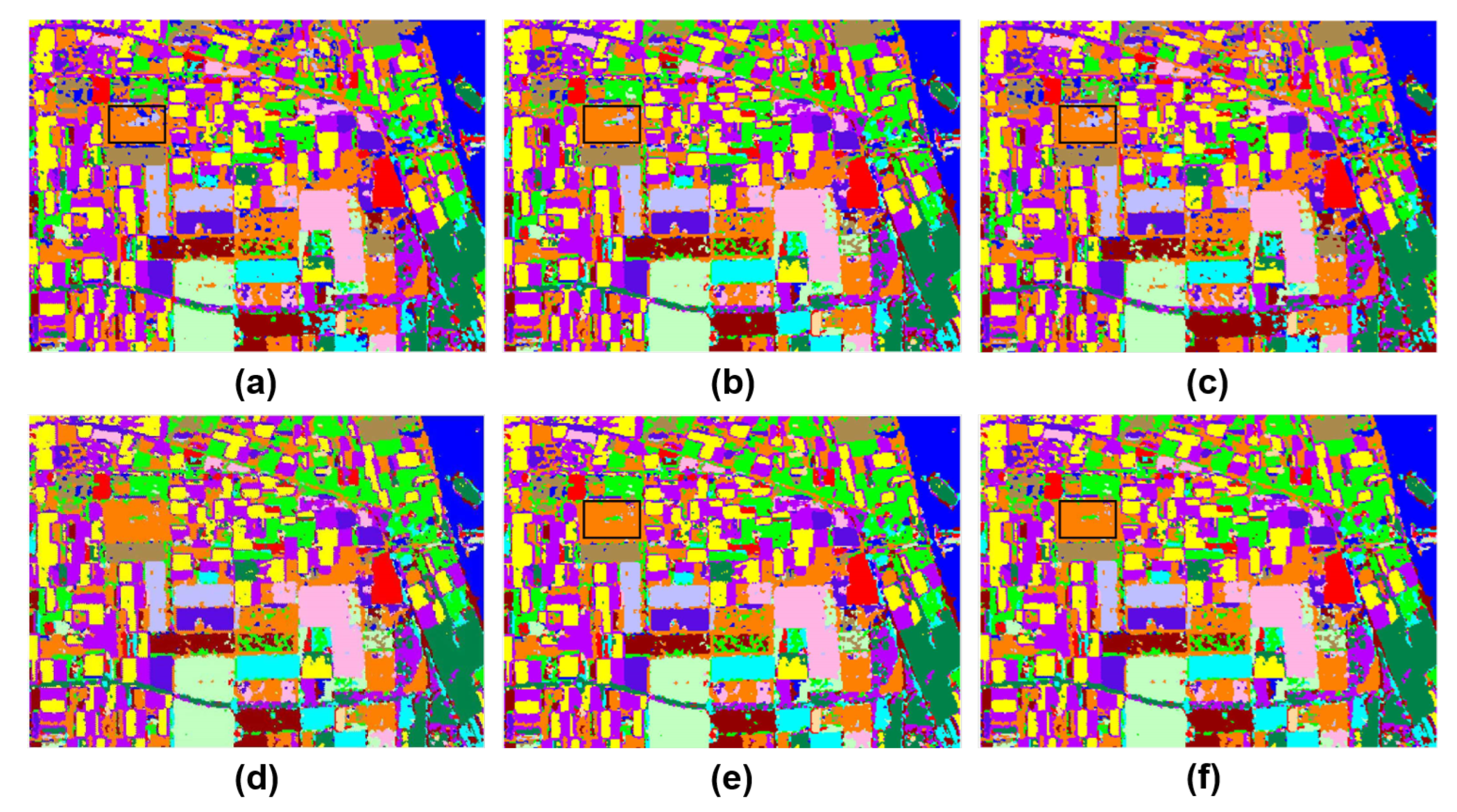

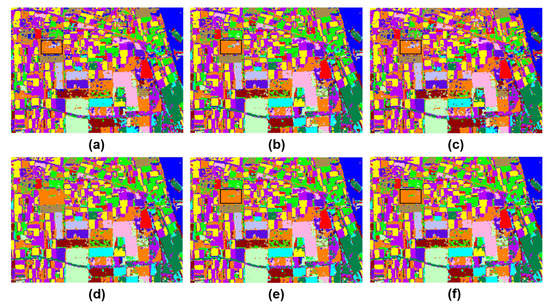

In general, published works rarely take the differences between PolSAR and OPI data into account. Complex-valued PolSAR data are frequently converted to real values to fit OPI processing pipelines and avoid complex-valued operations, so the CNNs used are typically not designed specifically for PolSAR classification. This is one reason CNNs cannot exploit their full capability in PolSAR classification [70]. To solve this problem, in 2019, Ref. [70] developed a CNN architecture specifically for PolSAR image classification. A crucial step is finding a better representation of PolSAR data as the network input. To fit these enhanced inputs, they proposed a multi-task CNN (MCNN) consisting of an amplitude branch, a phase branch, and an interaction module. They further introduced depthwise separable convolutions into MCNN, yielding DMCNN, to effectively model potential correlations in the PolSAR phase. Figure 25 compares the classification performance of different methods: the proposed methods, particularly DMCNN, achieve better terrain completeness in the classification maps.
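Depthwise separable convolution factorizes a standard convolution into a per-channel spatial filter followed by a 1 × 1 convolution that mixes channels, which sharply reduces the number of weights. The NumPy sketch below shows the generic operation and its parameter count; the channel numbers and kernel size are hypothetical, biases are omitted, and this is not the DMCNN configuration of [70].

```python
import numpy as np

def depthwise_separable_conv(x, dw_kernels, pw_weights):
    """Depthwise separable convolution (valid padding, stride 1, no bias).

    x:          (C_in, H, W)  input feature maps
    dw_kernels: (C_in, k, k)  one spatial kernel per input channel
    pw_weights: (C_out, C_in) 1x1 pointwise weights that mix channels
    """
    c_in, h, w = x.shape
    k = dw_kernels.shape[-1]
    out_h, out_w = h - k + 1, w - k + 1
    depthwise = np.empty((c_in, out_h, out_w))
    for c in range(c_in):  # each channel is filtered independently
        for i in range(out_h):
            for j in range(out_w):
                depthwise[c, i, j] = np.sum(x[c, i:i + k, j:j + k] * dw_kernels[c])
    return np.einsum("oc,chw->ohw", pw_weights, depthwise)  # pointwise 1x1 mixing

def param_counts(c_in, c_out, k):
    """Weights (no biases) of a standard vs. a depthwise separable k x k conv."""
    return k * k * c_in * c_out, k * k * c_in + c_in * c_out

rng = np.random.default_rng(0)
x = rng.standard_normal((6, 8, 8))  # e.g., a 6-channel real-valued PolSAR patch
y = depthwise_separable_conv(x, rng.standard_normal((6, 3, 3)), rng.standard_normal((16, 6)))
print(y.shape, param_counts(6, 16, 3))  # (16, 6, 6) (864, 150)
```

The separable layer keeps the spatial receptive field but uses far fewer weights, which is attractive when training data are limited.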

Figure 25.

Classification results of whole map on AIRSAR Flevoland [70]: (a) Pauli RGB map. (b) Ground truth map. (c) CNN-v1. (d) VGG-v1. (e) CNN-v2. (f) VGG-v2. (g) MCNN. (h) DMCNN.

Recently, using dual-polarization (VV and VH) C-band SAR data acquired by the Gaofen-3 satellite over the western Arctic Ocean from January to February 2020, Ref. [294] designed a network framework to classify Arctic sea ice in winter. The results showed that using both polarizations (VH + VV) improved the classification accuracy by 10.05% and 9.35% compared with using only VH or only VV polarization, respectively.

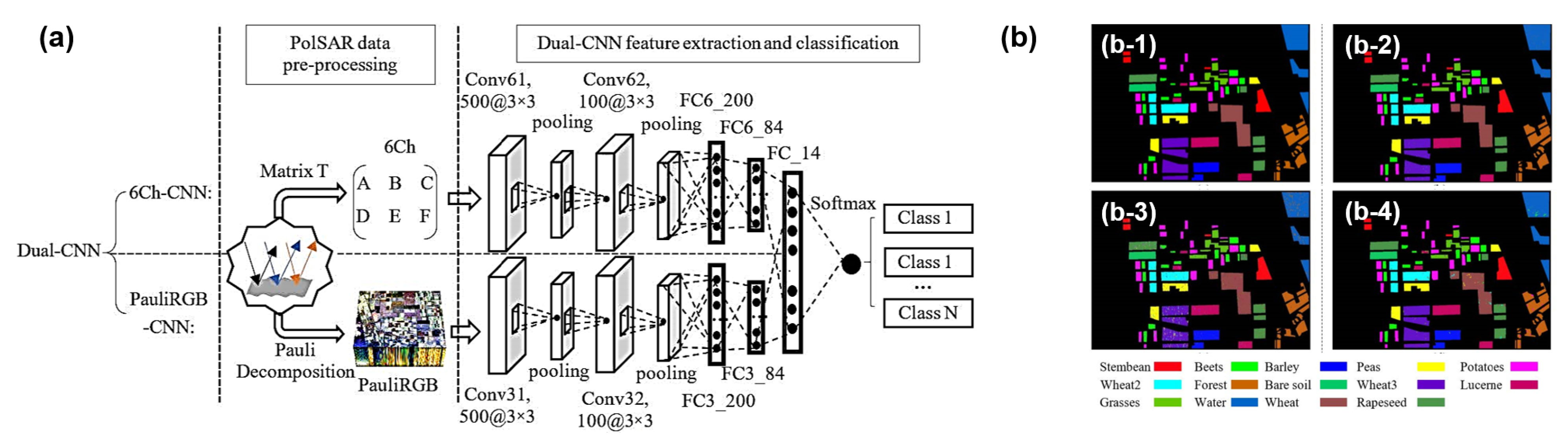

An open question is how to exploit PolSAR's spatial and polarization information simultaneously. DCNNs can produce high-level spatial features and achieve cutting-edge performance in image analysis thanks to their sophisticated architectures and large visual databases. However, because PolSAR data are multi-band and complex-valued, standard models cannot be applied to them directly. Ref. [295] built a dataset to explore the potential of DCNNs for PolSAR classification. This work used six pseudo-color images (among them an intensity image, a decomposition image, and a Yamaguchi decomposition image) to characterize one random sample in each category. With a transfer learning framework that incorporates polarimetric decomposition into a DCNN and thus takes spatial analysis into account, the validation accuracy reached up to 99.5%. Ref. [296] proposed a Dual-CNN for PolSAR classification. As shown in Figure 26a, it contains two deep CNNs: one extracts polarization features from a six-channel real-valued matrix derived from the coherency matrix (6Ch), and the other extracts spatial features from Pauli RGB images. A fully connected layer combines the extracted polarization and spatial features, and a softmax classifier then classifies them. The results in Figure 26b verify the effectiveness of combining 6Ch-CNN and PauliRGB-CNN via fully connected layers; the authors report a classification accuracy of 98.56% on 14 land cover types.
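For reference, the Pauli RGB image used by PauliRGB-CNN is a standard false-color composite of the Pauli decomposition. A minimal sketch, assuming complex single-look channels S_HH, S_VV, and S_HV (reciprocity, S_HV = S_VH) and a simple per-channel maximum normalization for display (practical pipelines often clip at a percentile instead); the function name is hypothetical.

```python
import numpy as np

def pauli_rgb(s_hh, s_vv, s_hv):
    """Pauli RGB composite from complex scattering-matrix channels (H x W each).

    R = |S_HH - S_VV| / sqrt(2)  (double-bounce scattering)
    G = sqrt(2) * |S_HV|         (volume scattering)
    B = |S_HH + S_VV| / sqrt(2)  (surface / odd-bounce scattering)
    Each channel is scaled to [0, 1] by its own maximum (simple display choice).
    """
    r = np.abs(s_hh - s_vv) / np.sqrt(2)
    g = np.sqrt(2) * np.abs(s_hv)
    b = np.abs(s_hh + s_vv) / np.sqrt(2)
    rgb = np.stack([r, g, b], axis=-1)
    peak = rgb.reshape(-1, 3).max(axis=0)
    return rgb / np.where(peak > 0, peak, 1.0)  # avoid dividing an all-zero channel

# Two toy pixels: an ideal surface scatterer and an ideal double-bounce scatterer.
s_hh = np.array([[1.0, 1.0]], dtype=complex)
s_vv = np.array([[1.0, -1.0]], dtype=complex)
s_hv = np.zeros((1, 2), dtype=complex)
img = pauli_rgb(s_hh, s_vv, s_hv)
print(img)  # surface pixel appears blue, double-bounce pixel appears red
```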

Figure 26.

(a) The main procedures of PolSAR images classification based on the Dual-CNN model. (b) Comparison results with different methods, where (b-1–b-4) represent the classification results of the ground-truth, Dual-CNN, 6Ch-CNN, and PauliRGB-CNN, respectively [296].

Depending on whether prior knowledge is needed, PolSAR classification methods can be divided into supervised and unsupervised ones. Unsupervised methods simply require the scattering and statistical distributions derived from PolSAR data, whereas supervised methods require human interaction to acquire prior knowledge. Recently, semi-supervised methods, which use a few labeled samples together with unlabeled samples, have attracted increasing attention for handling limited training sets. For example, Ref. [184] proposed a super-pixel restrained DNN with multiple decisions (SRDNN-MDs), which extracts effective super-pixel spatial features and reduces speckle using only a few labeled samples. This semi-supervised classification model yields higher accuracy.
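Superpixel-level spatial features can be illustrated by averaging per-pixel features within each superpixel, which suppresses speckle while respecting the boundaries found by the segmentation. The sketch below assumes the superpixel labels are precomputed (e.g., by SLIC); it is a hypothetical helper that shows the general idea, not the feature extractor of [184].

```python
import numpy as np

def superpixel_mean(features, labels):
    """Replace each pixel's feature vector with the mean over its superpixel.

    features: (H, W, D) per-pixel features
    labels:   (H, W) non-negative integer superpixel ids
    """
    h, w, d = features.shape
    flat_lab = labels.ravel()
    flat_feat = features.reshape(-1, d)
    n_sp = flat_lab.max() + 1
    counts = np.bincount(flat_lab, minlength=n_sp)
    sums = np.stack([np.bincount(flat_lab, weights=flat_feat[:, j], minlength=n_sp)
                     for j in range(d)], axis=1)
    means = sums / np.maximum(counts, 1)[:, None]  # per-superpixel average
    return means[flat_lab].reshape(h, w, d)        # broadcast back to pixels

features = np.array([[[1.0], [3.0]],
                     [[5.0], [7.0]]])
labels = np.array([[0, 0],
                   [1, 1]])
print(superpixel_mean(features, labels)[..., 0])  # each row becomes its superpixel mean
```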

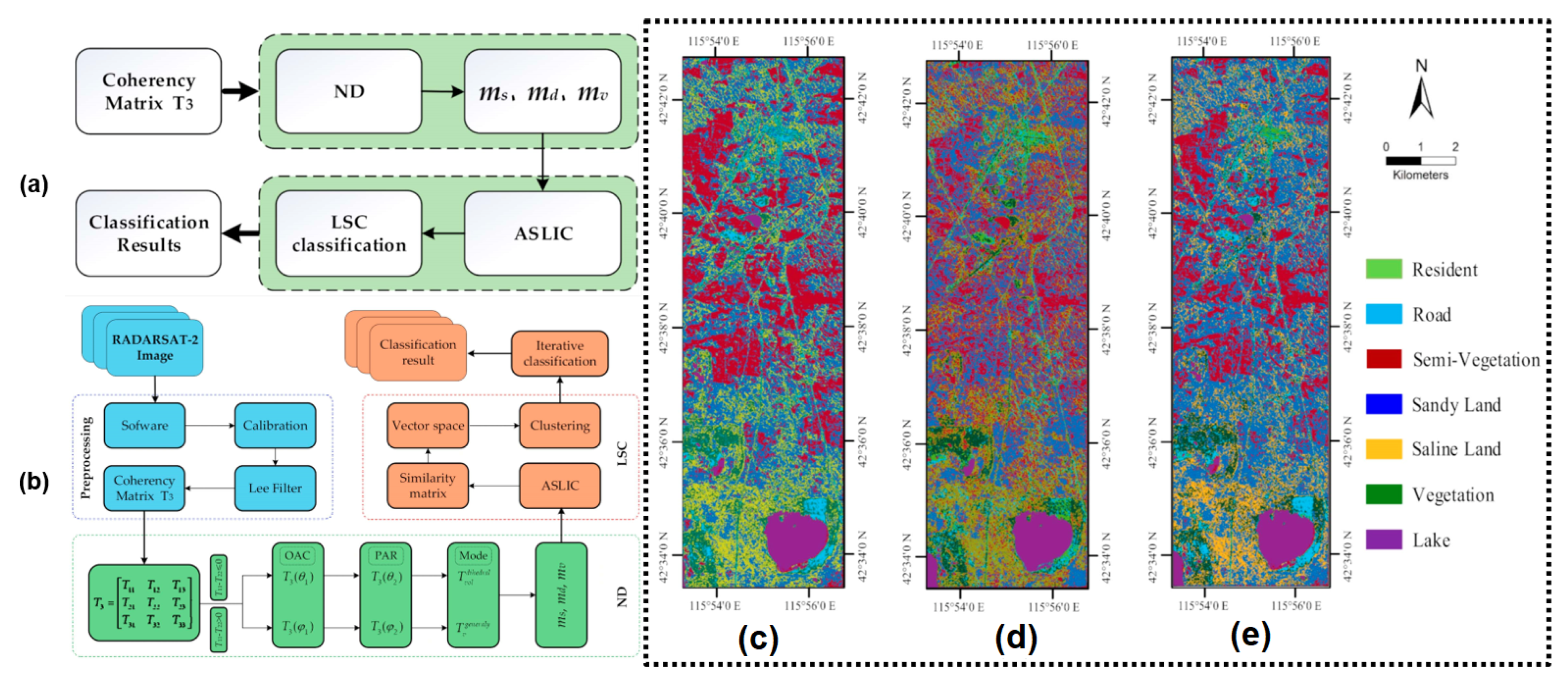

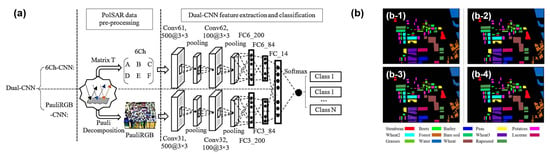

Further, to free classification from the need for prior knowledge, in 2021, Ref. [297] proposed an unsupervised classification method based on a new decomposition and large-scale spectral clustering with superpixels (ND-LSC). Figure 27 depicts its architecture, which consists mainly of two parts.

Figure 27.

(Left) (a) Architecture proposed in [297]. (b) Flowchart of the new decomposition and large-scale spectral clustering with superpixels (ND-LSC) unsupervised classification method. (Right) Classification results of three methods in the study area: (c) HFED and spectral clustering with superpixels (HED-SC) [298], (d) Random Forest classifier (ND-RF) [299], and (e) the proposed method (ND-LSC) [297].

They first extracted polarization scattering parameters by a new decomposition (ND), which helps in understanding the polarimetric scattering mechanisms of sandy land [297]. Then, to speed up the processing of PolSAR images, they used large-scale spectral clustering (LSC), which builds a bipartite graph; thanks to this design, the solution is effective and adaptable to wide regions. They validated the approach on a RADARSAT-2 fully polarimetric SAR dataset of the Hunshandake Sandy Land acquired in 2016, obtaining an overall accuracy (OA) of 95.22%. Detailed results and comparisons are given in Table 4 and Figure 27. In 2023, Ref. [284] developed a hybrid attention-based encoder–decoder fully convolutional network (HA-EDNet) for PolSAR classification; the network accepts input images of arbitrary size and uses a softmax attention module to boost accuracy. To cope with the shortage of labeled data, Ref. [300] proposed PolSARFormer (2023), a vision transformer-based framework that uses 3D and 2D CNNs as feature extractors together with local window attention. Extensive experiments demonstrated that PolSARFormer achieved higher classification accuracy than state-of-the-art algorithms; for example, on the San Francisco benchmark, it improved accuracy by 5.86% and 17.63% over the Swin Transformer and FNet, respectively.
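The speed-up from a bipartite graph comes from connecting each of the n pixels (or superpixels) only to p ≪ n anchor points, so the spectral embedding can be obtained from an SVD of an n × p matrix instead of an eigendecomposition of an n × n affinity matrix. The sketch below is a simplified anchor-based spectral clustering in the spirit of LSC; random anchor selection, the Gaussian kernel, and all parameter values are assumptions, and it is not the ND-LSC implementation of [297].

```python
import numpy as np

def kmeans(x, k, n_iter=50, seed=0):
    """Plain Lloyd's k-means; returns integer labels."""
    rng = np.random.default_rng(seed)
    centers = x[rng.choice(len(x), size=k, replace=False)]
    for _ in range(n_iter):
        labels = ((x[:, None, :] - centers[None]) ** 2).sum(-1).argmin(axis=1)
        centers = np.stack([x[labels == c].mean(axis=0) if np.any(labels == c) else centers[c]
                            for c in range(k)])
    return labels

def bipartite_spectral_clustering(x, n_clusters, n_anchors=20, r=5, sigma=1.0, seed=0):
    """Spectral clustering on a sample-anchor bipartite graph (simplified sketch)."""
    rng = np.random.default_rng(seed)
    anchors = x[rng.choice(len(x), size=n_anchors, replace=False)]  # random anchors (assumption)
    d2 = ((x[:, None, :] - anchors[None]) ** 2).sum(-1)               # (n, p) squared distances
    nearest = np.argsort(d2, axis=1)[:, :r]                           # r nearest anchors per sample
    rows = np.arange(len(x))[:, None]
    d_near = d2[rows, nearest]
    z = np.zeros_like(d2)
    # Gaussian weights; subtracting each row's minimum avoids underflow and
    # cancels out after the row normalization below.
    z[rows, nearest] = np.exp(-(d_near - d_near[:, :1]) / (2 * sigma ** 2))
    z /= z.sum(axis=1, keepdims=True)
    z_hat = z / np.sqrt(z.sum(axis=0))                    # Z D^{-1/2}, D = anchor degrees
    u, _, _ = np.linalg.svd(z_hat, full_matrices=False)   # n x p SVD only
    return kmeans(u[:, :n_clusters], n_clusters, seed=seed)

rng = np.random.default_rng(1)
x = np.vstack([rng.normal(0.0, 0.5, size=(50, 2)),
               rng.normal(10.0, 0.5, size=(50, 2))])      # two synthetic "land covers"
labels = bipartite_spectral_clustering(x, n_clusters=2)
print(labels)
```

On two well-separated synthetic blobs the two groups are recovered; in a PolSAR setting, the rows of `x` would instead hold decomposition features per pixel or superpixel.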

Table 4.

Confusion matrix of the ND-LSC method (PA: producer's accuracy, %; UA: user's accuracy, %).
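Producer's accuracy (PA), user's accuracy (UA), and overall accuracy (OA) all follow directly from a confusion matrix. A minimal sketch, assuming rows hold reference classes and columns hold predicted classes (some papers use the transposed convention); the counts in the example are hypothetical and not taken from Table 4.

```python
import numpy as np

def accuracy_report(cm):
    """OA, PA and UA (all in %) from a confusion matrix.

    Assumed convention: rows = reference (ground-truth) classes,
    columns = predicted classes.
    """
    cm = np.asarray(cm, dtype=float)
    diag = np.diag(cm)
    oa = 100.0 * diag.sum() / cm.sum()
    pa = 100.0 * diag / cm.sum(axis=1)  # producer's accuracy (1 - omission error)
    ua = 100.0 * diag / cm.sum(axis=0)  # user's accuracy (1 - commission error)
    return oa, pa, ua

cm = [[45, 5],   # hypothetical counts for two classes
      [10, 40]]
oa, pa, ua = accuracy_report(cm)
print(oa, pa, ua)
```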

5.3. Others

In the above sections, we mainly introduced examples of combining DL and PI in RS and some specific visible-wavelength applications. However, the combination of PI and DL has also been used successfully in other fields, such as biomedical imaging and computer vision [301,302,303,304].

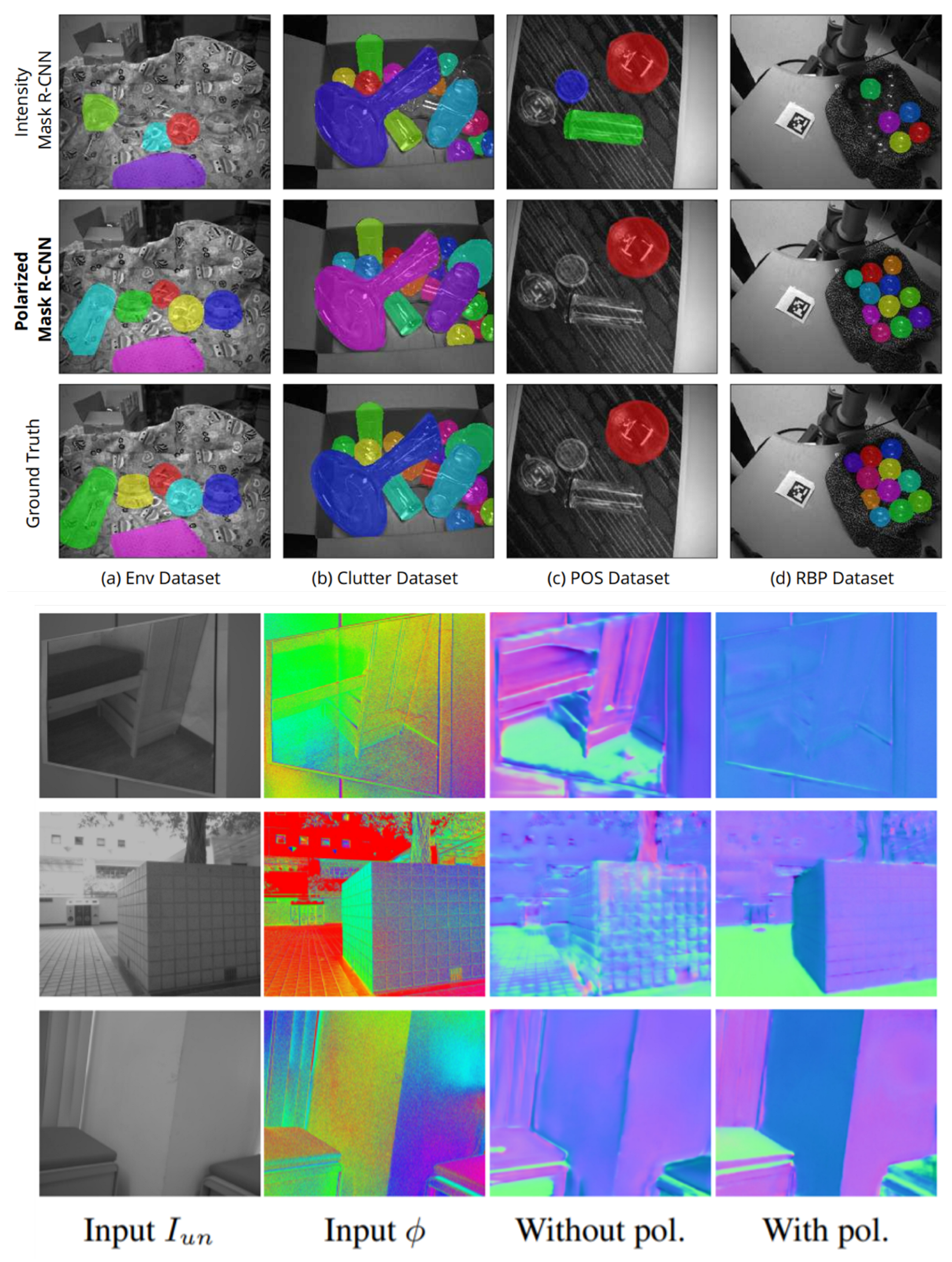

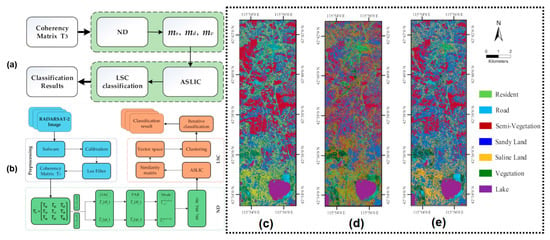

For example, Ref. [303] showed in 2020 that, using a trained deep CNN, holographic images can be reconstructed from a single polarization state with a single-shot computational polarization microscope. This work opens a new door to reconstructing multi-dimensional information from a single-channel input. The main idea can be extended to other fields, such as road detection, to achieve real-time PI. In 2020, Ref. [305] proposed a Polarized CNN to improve the accuracy of transparent object segmentation; qualitative results are shown at the top of Figure 28.

Figure 28.

(Top) Qualitative comparisons [305]. (Bottom) 1st row: polarization provides geometry cues. 2nd and 3rd rows: polarization provides guidance for planes with different surface normals. The symbols in the figure denote unpolarized data and AoP [306].

In addition, to address the multi-species classification of algae, Ref. [304] proposed a Mueller-matrix-based system that classifies morphologically similar algae via a CNN, achieving 97% classification accuracy; this was the first reported combination of PI and DL in marine biology. Learning-based solutions can also further improve the reconstruction accuracy of polarization-based 3D reconstruction techniques. In 2022, a physics-informed CNN was designed to estimate scene-level surface normals from a single polarization image; the corresponding indoor and outdoor experiments are presented at the bottom of Figure 28. This approach relies on a viewing encoding as prior knowledge to help resolve the increased polarization ambiguities caused by complex materials and non-orthographic projection in scene-level shape from polarization [306]. Although these applications differ considerably, the networks designed for polarization images in each of them can learn from one another.
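The polarization ambiguity in shape from polarization can be seen in the classical diffuse-reflection model, which relates the degree of linear polarization (DoLP) to the surface zenith angle θ through the refractive index n. The zenith angle can be recovered by inverting this monotonic curve, but the azimuth obtained from the angle of polarization remains ambiguous by π. A minimal sketch, assuming n = 1.5 and purely diffuse reflection; this is the textbook physical model, not the network of [306].

```python
import numpy as np

def diffuse_dolp(theta, n=1.5):
    """DoLP of diffusely reflected light at zenith angle theta (rad), refractive index n."""
    s, c = np.sin(theta), np.cos(theta)
    num = (n - 1.0 / n) ** 2 * s ** 2
    den = 2.0 + 2.0 * n ** 2 - (n + 1.0 / n) ** 2 * s ** 2 + 4.0 * c * np.sqrt(n ** 2 - s ** 2)
    return num / den

def zenith_from_dolp(rho, n=1.5, samples=2001):
    """Invert the monotonic diffuse model by interpolation on a dense angle grid."""
    grid = np.linspace(0.0, np.pi / 2, samples)
    return np.interp(rho, diffuse_dolp(grid, n), grid)

theta_true = np.deg2rad(40.0)
rho = diffuse_dolp(theta_true)
theta_est = zenith_from_dolp(rho)
print(np.rad2deg(theta_est))  # close to 40 degrees; the azimuth would still be ambiguous by pi
```

The inversion needs n, which is generally unknown for complex materials, and it says nothing about which of the two candidate azimuths is correct; these are the gaps that learned priors are meant to fill.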

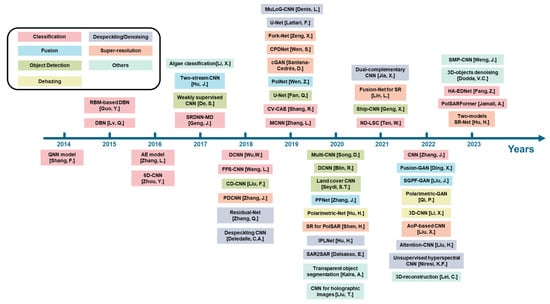

Finally, Figure 29 summarizes the reviewed works chronologically to help the reader follow the evolution of the methods and applications and to locate works of interest quickly.

Figure 29.

Chronological evolution of the reviewed works. The references mentioned here are listed chronologically as 2014 [287], 2015 [188,189], 2016 [181,291], 2017 [52,184,278,304], 2018 [92,199,206,235,292,295], 2019 [70,91,97,140,212,221,225,234,236], 2020 [11,58,82,173,174,175,222,273,303,305], 2021 [226,244,282,297], 2022 [22,64,211,227,231,258,259,294,306], and 2023 [210,237,284,300,302].

6. Conclusions

This paper has systematically reviewed advanced DL-based techniques for PI analysis. Covering two aspects of PI, i.e., acquisition and applications, we have shown that DL-based methods have achieved significant success in domains such as denoising or despeckling, dehazing, super-resolution, object detection, fusion, and classification. In particular, different network models have been designed for each application according to practical needs. The research works reviewed here provide strong evidence that DL-based PI can break the limitations of traditional methods and provide irreplaceable solutions, especially for tasks in complex and hostile conditions. It is worth noting that the reported DL models largely depend on specific datasets, and similar performance on other datasets is difficult to guarantee. This is their main disadvantage compared with representative traditional models. Still, we believe that DL techniques are revolutionary for PI.

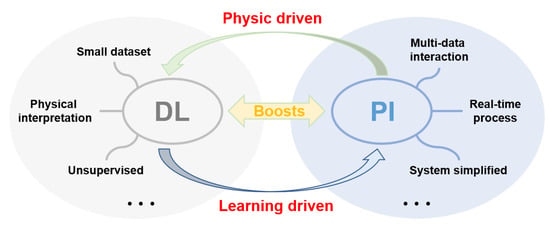

In short, there is an excellent synergy between PI and DL techniques: DL boosts PI and vice versa. PI techniques and related applications enable DL because they constantly provide advanced systems for collecting datasets, which form the physical foundation of DL development. Conversely, DL boosts PI because it enhances the capability and performance of optical technology in a data-driven way [307]. As shown in Figure 30, many desired capabilities in both DL (e.g., learning from small datasets, physical interpretability, and unsupervised learning) and PI (e.g., multi-data interaction, real-time processing, and system simplification) may be achieved through reasonable integration [308,309,310,311,312]. However, research on combining PI and DL is still at an early stage, and various key questions and directions remain open [116,121]. Some of these questions are common problems of DL [122,308], and some arise from the specificity of PI [93]. We list below some potentially interesting topics in this field.

Figure 30.

Synergy between PI and DL techniques.

The number of training samples. Although DL-based models can learn hidden features from polarimetric images or PolSAR data, their performance and accuracy depend heavily on the amount of training data; in general, more data yield higher quality [308,309]. However, acquiring large datasets with PI systems is difficult, especially for practical applications in complex conditions, such as underwater/ocean imaging or high-resolution PolSAR data for RS. For example, the dataset in Li et al.'s work [5] contains only 150 groups of full-size image pairs, and that in Hu et al.'s work [58] contains 140 groups. To obtain enough data, these works enlarged the datasets by cropping with a well-designed sliding window moved at a fixed pixel step and by flipping the crops horizontally or vertically, obtaining more than 100,000 images. This is indeed a compromise strategy. How to maintain the learning performance of DL approaches with fewer samples remains a significant challenge. This problem may be addressed by introducing new network architectures, such as those based on transfer learning [309,311], or by constraining the models with solid physical and a priori knowledge [313,314,315].
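The patch-based augmentation described above can be sketched as follows. The window size and step used in [5,58] are not restated here, so the values in the example are hypothetical, as is the function name.

```python
import numpy as np

def augment_patches(image, window, step):
    """Crop window x window patches at a fixed step and add flipped copies.

    image: (H, W) or (H, W, C) array. Returns (N, window, window[, C]).
    """
    h, w = image.shape[:2]
    patches = []
    for i in range(0, h - window + 1, step):
        for j in range(0, w - window + 1, step):
            p = image[i:i + window, j:j + window]
            patches.extend([p, p[:, ::-1], p[::-1, :]])  # original, horizontal flip, vertical flip
    return np.stack(patches)

image = np.zeros((256, 256))  # hypothetical full-size image
patches = augment_patches(image, window=64, step=32)
print(patches.shape)  # (147, 64, 64): 7 x 7 window positions, 3 versions each
```

For paired data (e.g., a degraded input and its ground truth), the same crops and flips must be applied to both images of a pair. Multiplied across all image pairs, this quickly enlarges a small dataset, but the patches remain highly correlated, which is why the approach is only a compromise.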

The inherent limitations of PI systems. To obtain polarization information, one needs to invert multiple acquired intensity images [75,78,85]. This time-consuming process makes it challenging to handle changing scenes in real time. Although pseudo-polarimetric methods can accomplish such tasks from only a single sub-polarized image, as in the dehazing method of Li et al. [28], they rely on a model with physical approximations. A possible solution is to learn the relations between single-channel and multi-channel data and to find an efficient way to transform one into the other. In optical imaging, the DoFP polarization camera makes it possible to capture the linear Stokes vector in one shot. Yet, the image resolution is reduced because neighboring pixels behind differently oriented micro-polarizers must be combined to form each polarization measurement [316,317]. Compared with traditional resolution improvement methods, DL techniques may computationally overcome these system limitations [22,318,319].
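For a DoFP camera, the linear Stokes parameters follow from the four intensities I0, I45, I90, and I135 recorded in each 2 × 2 super-pixel, which is also why the output resolution is halved along each axis. A minimal sketch, assuming ideal micro-polarizers and the super-pixel layout stated in the docstring (layouts differ between sensors); no demosaicking or interpolation is performed, and the function name is hypothetical.

```python
import numpy as np

def stokes_from_dofp(raw):
    """Linear Stokes parameters, DoLP and AoLP from a DoFP mosaic.

    Assumed 2x2 super-pixel layout (varies by sensor):
        [[I90,  I45],
         [I135, I0 ]]
    Outputs have half the mosaic's resolution along each axis.
    """
    i90, i45 = raw[0::2, 0::2], raw[0::2, 1::2]
    i135, i0 = raw[1::2, 0::2], raw[1::2, 1::2]
    s0 = 0.5 * (i0 + i45 + i90 + i135)  # total intensity
    s1 = i0 - i90                        # horizontal vs. vertical
    s2 = i45 - i135                      # +45 deg vs. -45 deg
    dolp = np.sqrt(s1 ** 2 + s2 ** 2) / np.maximum(s0, 1e-12)
    aolp = 0.5 * np.arctan2(s2, s1)      # radians
    return s0, s1, s2, dolp, aolp

# Fully linearly polarized light at 0 deg (Malus's law): I0=1, I45=0.5, I90=0, I135=0.5.
tile = np.array([[0.0, 0.5],
                 [0.5, 1.0]])
raw = np.tile(tile, (2, 2))  # 4x4 mosaic -> 2x2 Stokes images
s0, s1, s2, dolp, aolp = stokes_from_dofp(raw)
print(dolp, aolp)
```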

Embedding physics in network models. DL models originally came from the field of computer vision and are thus adapted to input data consisting of a single image without any physical constraint. In PI, however, there are multiple images with internal physical connections between them, and adding these physical connections or prior knowledge can boost network performance [307,310,315,320,321,322]. How and where to add these physical constraints needs to be investigated and balanced. Besides, most existing DL-based models need ground truth to guide feature extraction and learning. Some models, such as GANs, can work in an unsupervised way, but their performance is limited and usually significantly worse than that of supervised methods. How to further enhance the performance of unsupervised solutions, especially by incorporating prior physical knowledge into training, is a pressing problem [64,227].
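As an illustration of the kind of physical constraint that could be embedded into training, ideal polarizers imply I0 + I90 = I45 + I135 (both equal to S0) and DoLP ≤ 1. The hypothetical penalty below measures violations of these two relations for predicted intensity images. It is written in NumPy for clarity, whereas in practice it would be implemented in a DL framework so that it is differentiable and can be added to the main loss with a weighting factor; it is not taken from the cited works.

```python
import numpy as np

def polarization_consistency_loss(i0, i45, i90, i135):
    """Hypothetical physics penalty for predicted intensity images (lower is better).

    Term 1: ideal polarizers give I0 + I90 = I45 + I135 (both equal S0).
    Term 2: physical light satisfies sqrt(S1^2 + S2^2) <= S0, i.e., DoLP <= 1.
    """
    s0 = 0.5 * (i0 + i45 + i90 + i135)
    s1, s2 = i0 - i90, i45 - i135
    sum_term = np.mean(((i0 + i90) - (i45 + i135)) ** 2)
    dolp_term = np.mean(np.maximum(np.sqrt(s1 ** 2 + s2 ** 2) - s0, 0.0) ** 2)
    return sum_term + dolp_term

# Physically consistent prediction (0-deg linear polarization) vs. an inconsistent one.
consistent = polarization_consistency_loss(np.array([1.0]), np.array([0.5]),
                                           np.array([0.0]), np.array([0.5]))
violating = polarization_consistency_loss(np.array([1.0]), np.array([0.0]),
                                          np.array([1.0]), np.array([0.0]))
print(consistent, violating)
```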