Cloud Mesoscale Cellular Classification and Diurnal Cycle Using a Convolutional Neural Network (CNN)

Abstract

1. Introduction

- Generating MCC cloud type classifications at finer temporal and spatial resolution than currently available products;

- Deriving the high-temporal-resolution diurnal variability of MCC cloud types, including at night, from satellite observations;

- Demonstrating that the algorithm is easily transferable to different satellite platforms.
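As a rough illustration of the second point, the sketch below shows one way a diurnal cycle could be summarized once per-pixel class maps are available. It is not the authors' processing chain: the function name `diurnal_class_fractions`, the integer class encoding, and the array layout are all assumptions made for this example.

```python
import numpy as np


def diurnal_class_fractions(class_maps, hours, n_classes):
    """Areal fraction of each class for each hour of day (0-23).

    Hypothetical helper, not taken from the paper.
    class_maps: integer array of shape (T, H, W) with per-pixel class
                labels in the range 0..n_classes-1 (assumed encoding).
    hours:      integer array of shape (T,), hour of day of each scene.
    Returns an array of shape (24, n_classes); hours with no scenes
    are filled with NaN.
    """
    class_maps = np.asarray(class_maps)
    hours = np.asarray(hours)
    fractions = np.full((24, n_classes), np.nan)
    for hour in range(24):
        # Select every scene acquired at this hour of day.
        scenes = class_maps[hours == hour]
        if scenes.size == 0:
            continue
        # Count pixels per class over all selected scenes, then normalize.
        counts = np.bincount(scenes.ravel(), minlength=n_classes)[:n_classes]
        fractions[hour] = counts / scenes.size
    return fractions
```

Calling the function with a stack of segmentation outputs and their acquisition hours yields a 24-row table that can be plotted per class. Pooling pixels across scenes is only one possible choice; averaging per-scene fractions or masking clear-sky pixels first would be equally reasonable under different assumptions.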

2. Data

2.1. The Research Domain

2.2. SEVIRI

2.3. GOES-ABI

3. Methodology

3.1. Convolutional Neural Network (CNN) Models

3.2. Training and Test Sets

3.3. CNN Model Setup, Architecture, and Hyperparameter Selection

4. Results

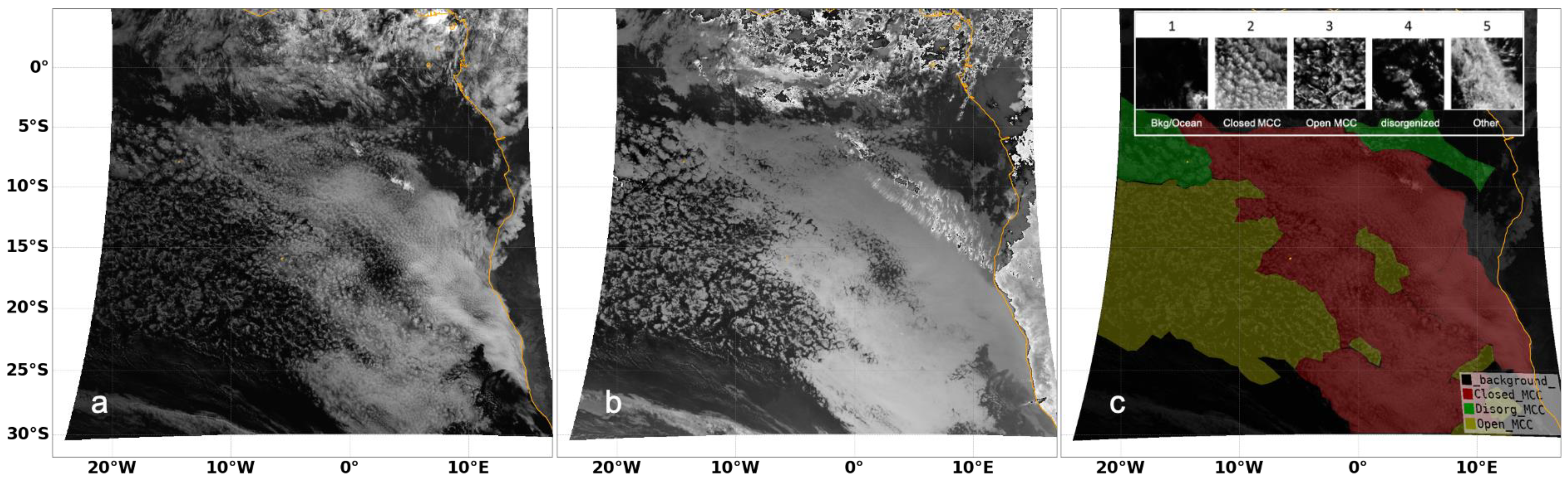

4.1. MCC Semantic Segmentation—VIS Model

4.2. MCC Semantic Segmentation—Comparison between VIS and IR Models

4.3. MCC Cloud Type Diurnal Variations and Seasonal Trends

4.4. Application of IR Model MCC Classification on GOES-ABI

5. Discussion

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Segal Rozenhaimer, M.; Nukrai, D.; Che, H.; Wood, R.; Zhang, Z. Cloud Mesoscale Cellular Classification and Diurnal Cycle Using a Convolutional Neural Network (CNN). Remote Sens. 2023, 15, 1607. https://doi.org/10.3390/rs15061607