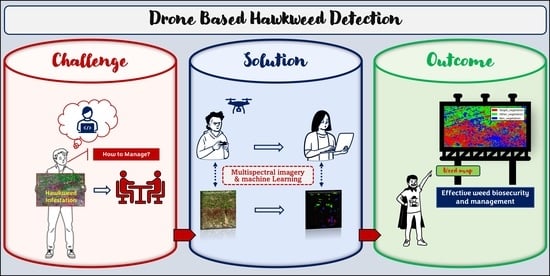

Autonomous Detection of Mouse-Ear Hawkweed Using Drones, Multispectral Imagery and Supervised Machine Learning

Abstract

1. Introduction

1.1. Hawkweed

1.2. Machine Learning Models for Weed Detection

2. Materials and Methods

2.1. Site Description

2.2. Ground Truthing

2.3. Collection of Multispectral UAV Images

2.4. Software and Python Libraries

2.5. Orthomosaics and Raster Alignment

2.6. Region of Interest (ROI) for Training and Validation

2.7. Raster Labelling

2.8. Statistical Analysis for Algorithm Development

2.9. Development of Classification Algorithms and Prediction

2.10. Classification Report

2.11. Data Processing Pipeline

3. Results

3.1. Detection of Hawkweed Flowers (Model 1)

3.1.1. Model Testing Accuracy

3.1.2. Model Validation Accuracy

3.1.3. Ground Truth Verification

3.2. Detection of Hawkweed Foliage (Model 2)

3.2.1. Model Testing Accuracy

3.2.2. Model Validation Accuracy

3.2.3. Prediction Results for Different Regions of Interest at the Study Site

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Luna, I.M.; Fernández-Quintanilla, C.; Dorado, J. Is Pasture Cropping a Valid Weed Management Tool? Plants 2020, 9, 135.

2. Cousens, R.; Heydel, F.; Giljohann, K.; Tackenberg, O.; Mesgaran, M.; Williams, N. Predicting the Dispersal of Hawkweeds (Hieracium aurantiacum and H. praealtum) in the Australian Alps. In Proceedings of the Eighteenth Australasian Weeds Conference, Melbourne, VIC, Australia, 8–11 October 2012; pp. 5–8.

3. Rapinel, S.; Mony, C.; Lecoq, L.; Clément, B.; Thomas, A.; Hubert-Moy, L. Evaluation of Sentinel-2 time-series for mapping floodplain grassland plant communities. Remote Sens. Environ. 2019, 223, 115–129.

4. Rapinel, S.; Rossignol, N.; Hubert-Moy, L.; Bouzillé, J.-B.; Bonis, A. Mapping grassland plant communities using a fuzzy approach to address floristic and spectral uncertainty. Appl. Veg. Sci. 2018, 21, 678–693.

5. De Castro, A.I.; Torres-Sánchez, J.; Peña, J.M.; Jiménez-Brenes, F.M.; Csillik, O.; López-Granados, F. An Automatic Random Forest-OBIA Algorithm for Early Weed Mapping between and within Crop Rows Using UAV Imagery. Remote Sens. 2018, 10, 285.

6. Du, M.; Noguchi, N. Monitoring of Wheat Growth Status and Mapping of Wheat Yield’s within-Field Spatial Variations Using Color Images Acquired from UAV-camera System. Remote Sens. 2017, 9, 289.

7. Yano, I.H.; Alves, J.R.; Santiago, W.E.; Mederos, B.J. Identification of weeds in sugarcane fields through images taken by UAV and Random Forest classifier. IFAC-PapersOnLine 2016, 49, 415–420.

8. Barrero, O.; Perdomo, S.A. RGB and multispectral UAV image fusion for Gramineae weed detection in rice fields. Precis. Agric. 2018, 19, 809–822.

9. Peña, J.M.; Torres-Sánchez, J.; De Castro, A.I.; Kelly, M.; Lopez-Granados, F. Weed Mapping in Early-Season Maize Fields Using Object-Based Analysis of Unmanned Aerial Vehicle (UAV) Images. PLoS ONE 2013, 8, e77151.

10. Yang, G.; Liu, J.; Zhao, C.; Li, Z.; Huang, Y.; Yu, H.; Xu, B.; Yang, X.; Zhu, D.; Zhang, X.; et al. Unmanned Aerial Vehicle Remote Sensing for Field-Based Crop Phenotyping: Current Status and Perspectives. Front. Plant Sci. 2017, 8, 1111.

11. Amarasingam, N.; Gonzalez, F.; Salgadoe, A.S.A.; Sandino, J.; Powell, K. Detection of White Leaf Disease in Sugarcane Crops Using UAV-Derived RGB Imagery with Existing Deep Learning Models. Remote Sens. 2022, 14, 6137.

12. Arnold, T.; De Biasio, M.; Fritz, A.; Leitner, R. UAV-based multispectral environmental monitoring. In Proceedings of the SENSORS, Waikoloa, HI, USA, 1–4 November 2010; pp. 995–998.

13. Yao, H.; Qin, R.; Chen, X. Unmanned Aerial Vehicle for Remote Sensing Applications: A Review. Remote Sens. 2019, 11, 1443.

14. Rodríguez, J.; Lizarazo, I.; Prieto, F.; Angulo-Morales, V. Assessment of potato late blight from UAV-based multispectral imagery. Comput. Electron. Agric. 2021, 184, 106061.

15. Yang, W.; Xu, W.; Wu, C.; Zhu, B.; Chen, P.; Zhang, L.; Lan, Y. Cotton hail disaster classification based on drone multispectral images at the flowering and boll stage. Comput. Electron. Agric. 2021, 180, 105866.

16. Paredes, J.A.; Gonzalez, J.; Saito, C.; Flores, A. Multispectral Imaging System with UAV Integration Capabilities for Crop Analysis. In Proceedings of the IEEE 1st International Symposium on Geoscience and Remote Sensing, GRSS-CHILE, Valdivia, Chile, 15–16 June 2017.

17. Brinkhoff, J.; Vardanega, J.; Robson, A.J. Land Cover Classification of Nine Perennial Crops Using Sentinel-1 and -2 Data. Remote Sens. 2020, 12, 96.

18. Tu, Y.-H.; Johansen, K.; Phinn, S.; Robson, A. Measuring Canopy Structure and Condition Using Multi-Spectral UAS Imagery in a Horticultural Environment. Remote Sens. 2019, 11, 269.

19. Narmilan, A.; Gonzalez, F.; Salgadoe, A.S.A.; Powell, K. Detection of White Leaf Disease in Sugarcane Using Machine Learning Techniques over UAV Multispectral Images. Drones 2022, 6, 230.

20. Amarasingam, N.; Salgadoe, A.S.A.; Powell, K.; Gonzalez, L.F.; Natarajan, S. A review of UAV platforms, sensors, and applications for monitoring of sugarcane crops. Remote Sens. Appl. 2022, 26, 100712.

21. Khoshboresh-Masouleh, M.; Akhoondzadeh, M. Improving weed segmentation in sugar beet fields using potentials of multispectral unmanned aerial vehicle images and lightweight deep learning. J. Appl. Remote Sens. 2021, 15, 034510.

22. Kerkech, M.; Hafiane, A.; Canals, R. Vine disease detection in UAV multispectral images using optimized image registration and deep learning segmentation approach. Comput. Electron. Agric. 2020, 174, 105446.

23. Modica, G.; De Luca, G.; Messina, G.; Praticò, S. Comparison and assessment of different object-based classifications using machine learning algorithms and UAVs multispectral imagery: A case study in a citrus orchard and an onion crop. Eur. J. Remote Sens. 2021, 54, 431–460.

24. Banerjee, B.; Sharma, V.; Spangenberg, G.; Kant, S. Machine Learning Regression Analysis for Estimation of Crop Emergence Using Multispectral UAV Imagery. Remote Sens. 2021, 13, 2918.

25. Pérez-Ortiz, M.; Peña, J.M.; Gutiérrez, P.A.; Torres-Sánchez, J.; Hervás-Martínez, C.; López-Granados, F. Selecting patterns and features for between- and within- crop-row weed mapping using UAV-imagery. Expert Syst. Appl. 2016, 47, 85–94.

26. Hamilton, M.; Matthews, R.; Caldwell, J. Needle in a Haystack: Detecting Hawkweeds Using Drones. In Proceedings of the 21st Australasian Weeds Conference, Sydney, Australia, 9–13 September 2018; pp. 126–130.

27. Etienne, A.; Saraswat, D. Machine learning approaches to automate weed detection by UAV based sensors. In Autonomous Air and Ground Sensing Systems for Agricultural Optimization and Phenotyping IV; SPIE: Bellingham, WA, USA, 2019; Volume 11008, p. 110080R.

28. Islam, N.; Rashid, M.; Wibowo, S.; Wasimi, S.; Morshed, A.; Xu, C.; Moore, S. Machine Learning Based Approach for Weed Detection in Chilli Field Using RGB Images. In Lecture Notes on Data Engineering and Communications Technologies; Springer Science and Business Media Deutschland GmbH: Berlin/Heidelberg, Germany, 2021; Volume 88, pp. 1097–1105.

29. Segarra, J.; Buchaillot, M.L.; Araus, J.L.; Kefauver, S.C. Remote Sensing for Precision Agriculture: Sentinel-2 Improved Features and Applications. Agronomy 2020, 10, 641.

30. Zhang, S.; Li, X.; Ba, Y.; Lyu, X.; Zhang, M.; Li, M. Banana Fusarium Wilt Disease Detection by Supervised and Unsupervised Methods from UAV-Based Multispectral Imagery. Remote Sens. 2022, 14, 1231.

31. Osco, L.P.; Ramos, A.P.M.; Pereira, D.R.; Moriya, A.S.; Imai, N.N.; Matsubara, E.T.; Estrabis, N.; de Souza, M.; Junior, J.M.; Gonçalves, W.N.; et al. Predicting Canopy Nitrogen Content in Citrus-Trees Using Random Forest Algorithm Associated to Spectral Vegetation Indices from UAV-Imagery. Remote Sens. 2019, 11, 2925.

32. Narmilan, A. E-Agricultural Concepts for Improving Productivity: A Review. Sch. J. Eng. Technol. (SJET) 2017, 5, 10–17.

33. Williams, N.S.G.; Holland, K.D. The ecology and invasion history of hawkweeds (Hieracium species) in Australia. Plant Prot. 2007, 22, 76–80.

34. Mouse-Ear Hawkweed | NSW Environment and Heritage. Available online: https://www.environment.nsw.gov.au/topics/animals-and-plants/pest-animals-and-weeds/weeds/new-and-emerging-weeds/mouse-ear-hawkweed (accessed on 27 June 2022).

35. Hamilton, M.; Cherry, H.; Turner, P.J. Hawkweed eradication from NSW: Could this be ‘the first’? Plant Prot. 2015, 30, 110–115.

36. Gustavus, A.; Rapp, W. Exotic Plant Management in Glacier Bay National Park and Preserve; National Park Service: Gustavus, AK, USA, 2006.

37. NSW WeedWise. Available online: https://weeds.dpi.nsw.gov.au/Weeds/Hawkweeds (accessed on 27 June 2022).

38. Hawkweed | State Prohibited Weeds | Weeds | Biosecurity | Agriculture Victoria. Available online: https://agriculture.vic.gov.au/biosecurity/weeds/state-prohibited-weeds/hawkweed (accessed on 27 June 2022).

39. Beaumont, L.J.; Gallagher, R.V.; Downey, P.O.; Thuiller, W.; Leishman, M.R.; Hughes, L. Modelling the impact of Hieracium spp. on protected areas in Australia under future climates. Ecography 2009, 32, 757–764.

40. Dobrinić, D.; Gašparović, M.; Medak, D. Sentinel-1 and 2 Time-Series for Vegetation Mapping Using Random Forest Classification: A Case Study of Northern Croatia. Remote Sens. 2021, 13, 2321.

41. Alexandridis, T.K.; Tamouridou, A.A.; Pantazi, X.E.; Lagopodi, A.L.; Kashefi, J.; Ovakoglou, G.; Polychronos, V.; Moshou, D. Novelty Detection Classifiers in Weed Mapping: Silybum marianum Detection on UAV Multispectral Images. Sensors 2017, 17, 2007.

42. Narmilan, A.; Gonzalez, F.; Salgadoe, A.S.A.; Kumarasiri, U.W.L.M.; Weerasinghe, H.A.S.; Kulasekara, B.R. Predicting Canopy Chlorophyll Content in Sugarcane Crops Using Machine Learning Algorithms and Spectral Vegetation Indices Derived from UAV Multispectral Imagery. Remote Sens. 2022, 14, 1140.

43. Islam, N.; Rashid, M.; Wibowo, S.; Xu, C.-Y.; Morshed, A.; Wasimi, S.; Moore, S.; Rahman, S. Early Weed Detection Using Image Processing and Machine Learning Techniques in an Australian Chilli Farm. Agriculture 2021, 11, 387.

44. Abouzahir, S.; Sadik, M.; Sabir, E. Enhanced Approach for Weeds Species Detection Using Machine Vision. In Proceedings of the 2018 International Conference on Electronics, Control, Optimization and Computer Science (ICECOCS), Kenitra, Morocco, 5–6 December 2018.

45. Alam, M.; Alam, M.S.; Roman, M.; Tufail, M.; Khan, M.U. Real-Time Machine-Learning Based Crop/Weed Detection and Classification for Variable-Rate Spraying in Precision Agriculture. In Proceedings of the 2020 7th International Conference on Electrical and Electronics Engineering, Antalya, Turkey, 14–16 April 2020; pp. 273–280.

46. Ahmed, F.; Al-Mamun, H.A.; Bari, A.H.; Hossain, E.; Kwan, P. Classification of crops and weeds from digital images: A support vector machine approach. Crop. Prot. 2012, 40, 98–104.

47. Bakhshipour, A.; Jafari, A. Evaluation of support vector machine and artificial neural networks in weed detection using shape features. Comput. Electron. Agric. 2018, 145, 153–160.

48. Pantazi, X.; Tamouridou, A.; Alexandridis, T.; Lagopodi, A.; Kashefi, J.; Moshou, D. Evaluation of hierarchical self-organising maps for weed mapping using UAS multispectral imagery. Comput. Electron. Agric. 2017, 139, 224–230.

49. El Imanni, H.S.; El Harti, A.; Bachaoui, E.M.; Mouncif, H.; Eddassouqui, F.; Hasnai, M.A.; Zinelabidine, M.I. Multispectral UAV data for detection of weeds in a citrus farm using machine learning and Google Earth Engine: Case study of Morocco. Remote Sens. Appl. 2023, 30, 100941.

50. Su, J.; Yi, D.; Coombes, M.; Liu, C.; Zhai, X.; McDonald-Maier, K.; Chen, W.-H. Spectral analysis and mapping of blackgrass weed by leveraging machine learning and UAV multispectral imagery. Comput. Electron. Agric. 2022, 192, 106621.

51. Carson, H.W.; Lass, L.W.; Callihan, R.H. Detection of Yellow Hawkweed (Hieracium pratense) with High Resolution Multispectral Digital Imagery. Weed Technol. 1995, 9, 477–483.

52. Hung, C.; Sukkarieh, S. Using robotic aircraft and intelligent surveillance systems for orange hawkweed detection. Plant Prot. 2015, 30, 100–102.

53. Tomkins, K.; Chang, M. NSW Biodiversity Node-Project Summary Report: Developing a Spectral Library for Weed Species in Alpine Vegetation Communities to Monitor Their Distribution Using Remote Sensing; NSW Office of Environment and Heritage: Sydney, Australia, 2018.

54. Kelly, J.; Rahaman, M.; Mora, J.S.; Zheng, L.; Cherry, H.; Hamilton, M.A.; Dehaan, R.; Gonzalez, F.; Menz, W.; Grant, L. Weed managers guide to remote detection: Understanding opportunities and limitations of technologies for remote detection of weeds. In Proceedings of the 22nd Australasian Weeds Conference Adelaide September 2022, Adelaide, SA, Australia, 25–29 September 2022; pp. 58–62.

55. Imran, A.; Khan, K.; Ali, N.; Ahmad, N.; Ali, A.; Shah, K. Narrow band based and broadband derived vegetation indices using Sentinel-2 Imagery to estimate vegetation biomass. Glob. J. Environ. Sci. Manag. 2020, 6, 97–108.

56. Orusa, T.; Cammareri, D.; Mondino, E.B. A Possible Land Cover EAGLE Approach to Overcome Remote Sensing Limitations in the Alps Based on Sentinel-1 and Sentinel-2: The Case of Aosta Valley (NW Italy). Remote Sens. 2023, 15, 178.

57. Orusa, T.; Viani, A.; Cammareri, D.; Mondino, E.B. A Google Earth Engine Algorithm to Map Phenological Metrics in Mountain Areas Worldwide with Landsat Collection and Sentinel-2. Geomatics 2023, 3, 12.

58. Marcial-Pablo, M.D.J.; Gonzalez-Sanchez, A.; Jimenez-Jimenez, S.I.; Ontiveros-Capurata, R.E.; Ojeda-Bustamante, W. Estimation of vegetation fraction using RGB and multispectral images from UAV. Int. J. Remote Sens. 2019, 40, 420–438.

59. Avola, G.; Di Gennaro, S.F.; Cantini, C.; Riggi, E.; Muratore, F.; Tornambè, C.; Matese, A. Remotely Sensed Vegetation Indices to Discriminate Field-Grown Olive Cultivars. Remote Sens. 2019, 11, 1242.

60. Boiarskii, B. Comparison of NDVI and NDRE Indices to Detect Differences in Vegetation and Chlorophyll Content. J. Mech. Contin. Math. Sci. 2019, 4, 20–29.

61. Kumar, V.; Sharma, A.; Bhardwaj, R.; Thukral, A.K. Comparison of different reflectance indices for vegetation analysis using Landsat-TM data. Remote Sens. Appl. Soc. Environ. 2018, 12, 70–77.

62. Xue, J.; Su, B. Significant remote sensing vegetation indices: A review of developments and applications. J. Sens. 2017, 2017, 1353691.

63. Kerkech, M.; Hafiane, A.; Canals, R. Deep leaning approach with colorimetric spaces and vegetation indices for vine diseases detection in UAV images. Comput. Electron. Agric. 2018, 155, 237–243.

64. Johnson, J.M.; Khoshgoftaar, T.M. Survey on deep learning with class imbalance. J. Big Data 2019, 6, 27.

65. Krawczyk, B. Learning from imbalanced data: Open challenges and future directions. Prog. Artif. Intell. 2016, 5, 221–232.

66. Parvathi, S.; Selvi, S.T. Detection of maturity stages of coconuts in complex background using Faster R-CNN model. Biosyst. Eng. 2021, 202, 119–132.

67. Tan, L.; Lu, J.; Jiang, H. Tomato Leaf Diseases Classification Based on Leaf Images: A Comparison between Classical Machine Learning and Deep Learning Methods. AgriEngineering 2021, 3, 35.

68. Zhang, P.; Li, D. EPSA-YOLO-V5s: A novel method for detecting the survival rate of rapeseed in a plant factory based on multiple guarantee mechanisms. Comput. Electron. Agric. 2022, 193, 106714.

69. Cao, J.; Zhang, Z.; Tao, F.; Zhang, L.; Luo, Y.; Zhang, J.; Han, J.; Xie, J. Integrating Multi-Source Data for Rice Yield Prediction across China using Machine Learning and Deep Learning Approaches. Agric. For. Meteorol. 2020, 297, 108275.

70. Lottes, P.; Behley, J.; Milioto, A.; Stachniss, C. Fully Convolutional Networks with Sequential Information for Robust Crop and Weed Detection in Precision Farming. IEEE Robot. Autom. Lett. 2018, 3, 2870–2877.

71. Osorio, K.; Puerto, A.; Pedraza, C.; Jamaica, D.; Rodríguez, L. A Deep Learning Approach for Weed Detection in Lettuce Crops Using Multispectral Images. AgriEngineering 2020, 2, 32.

72. Veeranampalayam Sivakumar, A.N.; Li, J.; Scott, S.; Psota, E.; Jhala, A.J.; Luck, J.D.; Shi, Y. Comparison of Object Detection and Patch-Based Classification Deep Learning Models on Mid- to Late-Season Weed Detection in UAV Imagery. Remote Sens. 2020, 12, 2136.

| No | Application | ML Model | Overall Accuracy | References |
|---|---|---|---|---|
| 01 | Weed detection in chili farm | RF, SVM, KNN | RF: 96%, KNN: 63%, SVM: 94% | [43] |
| 02 | Weed detection in a soybean field | BPNN, SVM | BPNN: 96.6%, SVM: 95.7% | [44] |
| 03 | Real-time weed detection | RF | 95% | [45] |
| 04 | Classification of weeds | SVM | 97% | [46] |
| 05 | Detection of weeds in sugar beet field | ANN, SVM | ANN: 92.9%, SVM: 95% | [47] |
| 06 | Weed mapping | SKN, ANN | SKN: 98.6%, ANN: 98.8% | [48] |
| 07 | Weed detection in citrus farm | RF, KNN | RF: 97%, KNN: 94% | [49] |
| 08 | Mapping of blackgrass weed | RF | 93% | [50] |
| 09 | Detection of yellow hawkweed | Supervised, unsupervised | 20% to 90% | [51] |

| Flight Mission No | AGL (m) | GSD (cm/pixel) | Speed (m/s) | Overlap | Take-off Time |
|---|---|---|---|---|---|
| 1 | 15 | 0.65 | 2 | 75% | 12.10 |
| 2 | 20 | 0.86 | 2.2 | 75% | 12.47 |
| 3 | 25 | 1.10 | 2.8 | 75% | 13.17 |
| 4 | 30 | 1.30 | 3.3 | 75% | 13.32 |
| 5 | 35 | 1.50 | 4 | 75% | 13.35 |
| 6 | 40 | 1.73 | 4.5 | 75% | 13.06 |
| 7 | 45 | 1.95 | 5 | 75% | 12.37 |
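The flight table above suggests that GSD grows almost in proportion to flight height. The following is a minimal sketch that estimates that proportionality constant from the tabulated values only. The function name `gsd_per_metre` is hypothetical, and the through-origin linear model is an assumption: it ignores sensor and lens details, which the table does not give.

```python
# Flight height above ground level (m) and ground sampling distance (cm/pixel),
# copied from the flight mission table.
agl_m = [15, 20, 25, 30, 35, 40, 45]
gsd_cm = [0.65, 0.86, 1.10, 1.30, 1.50, 1.73, 1.95]


def gsd_per_metre(heights, gsds):
    """Least-squares slope k of a line through the origin: gsd = k * height.

    Hypothetical helper; the proportional model is an assumption.
    """
    numerator = sum(h * g for h, g in zip(heights, gsds))
    denominator = sum(h * h for h in heights)
    return numerator / denominator


k = gsd_per_metre(agl_m, gsd_cm)

# Difference between each tabulated GSD and the value the fitted line predicts.
residuals = [g - k * h for h, g in zip(agl_m, gsd_cm)]
max_abs_residual = max(abs(r) for r in residuals)

print(f"k = {k:.4f} cm/pixel per metre of altitude")
print(f"largest residual = {max_abs_residual:.3f} cm/pixel")
```

If the residuals stay small, a single constant is enough to approximate the GSD at intermediate heights for this sensor; the estimate should not be extrapolated to other cameras.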

| Vegetation Indices | Formula | References |
|---|---|---|
| NDVI | | [55,56,57] |
| GNDVI | | [58,59] |
| NDRE | | [58,59,60] |
| GCI | | [61] |
| MSAVI | | [62] |
| ExG | | [63] |
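As an illustration of the six indices listed above, here is a minimal sketch that assumes their commonly used definitions; the exact formulations used in the paper may differ (for example, ExG is often computed on normalised chromatic coordinates rather than raw band values). The function names and the sample reflectance values are hypothetical.

```python
import math


def ndvi(nir, red):
    """Normalised Difference Vegetation Index."""
    return (nir - red) / (nir + red)


def gndvi(nir, green):
    """Green Normalised Difference Vegetation Index."""
    return (nir - green) / (nir + green)


def ndre(nir, red_edge):
    """Normalised Difference Red Edge index."""
    return (nir - red_edge) / (nir + red_edge)


def gci(nir, green):
    """Green Chlorophyll Index."""
    return nir / green - 1.0


def msavi(nir, red):
    """Modified Soil-Adjusted Vegetation Index (closed-form variant, assumed)."""
    term = 2.0 * nir + 1.0
    return (term - math.sqrt(term ** 2 - 8.0 * (nir - red))) / 2.0


def exg(red, green, blue):
    """Excess Green index on raw band values (un-normalised form, assumed)."""
    return 2.0 * green - red - blue


# Hypothetical reflectance values for one vegetated pixel (illustrative only).
pixel = {"blue": 0.04, "green": 0.08, "red": 0.05, "red_edge": 0.20, "nir": 0.45}

indices = {
    "NDVI": ndvi(pixel["nir"], pixel["red"]),
    "GNDVI": gndvi(pixel["nir"], pixel["green"]),
    "NDRE": ndre(pixel["nir"], pixel["red_edge"]),
    "GCI": gci(pixel["nir"], pixel["green"]),
    "MSAVI": msavi(pixel["nir"], pixel["red"]),
    "ExG": exg(pixel["red"], pixel["green"], pixel["blue"]),
}

for name, value in indices.items():
    print(f"{name}: {value:.3f}")
```

In a per-pixel classifier, values like these would typically be stacked with the raw bands as input features; the same functions work element-wise on array types that support arithmetic, apart from `msavi`, which would need an array-aware square root.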

| XGB | SVM | RF | KNN |
|---|---|---|---|
| 100% | 99% | 100% | 100% |

| Class | Metric | RF | SVM | KNN | XGB |
|---|---|---|---|---|---|
| Hawkweed flowers | Precision (%) | 100 | 100 | 100 | 100 |
| Hawkweed flowers | Recall (%) | 100 | 98 | 100 | 100 |
| Hawkweed flowers | F1 score (%) | 100 | 99 | 100 | 100 |
| Background (Non hawkweed flowers) | Precision (%) | 100 | 98 | 100 | 100 |
| Background (Non hawkweed flowers) | Recall (%) | 100 | 100 | 100 | 100 |
| Background (Non hawkweed flowers) | F1 score (%) | 100 | 99 | 100 | 100 |

| Flight No | GSD (cm/pixel) | Overall Testing Accuracy (%) |
|---|---|---|
| 1 | 0.65 | 100 |
| 2 | 0.86 | 100 |
| 3 | 1.10 | 99 |
| 4 | 1.30 | 99 |
| 5 | 1.50 | 99 |
| 6 | 1.73 | 98 |
| 7 | 1.95 | 97 |

| XGB | SVM | RF | KNN |
|---|---|---|---|
| 100% | 98% | 100% | 100% |

| Class | Metric | RF | SVM | KNN | XGB |
|---|---|---|---|---|---|
| Hawkweed flowers | Precision (%) | 100 | 100 | 100 | 100 |
| Hawkweed flowers | Recall (%) | 100 | 98 | 100 | 100 |
| Hawkweed flowers | F1 score (%) | 100 | 99 | 100 | 100 |
| Background | Precision (%) | 100 | 98 | 100 | 100 |
| Background | Recall (%) | 100 | 100 | 100 | 100 |
| Background | F1 score (%) | 100 | 99 | 100 | 100 |

| XGB | SVM | RF | KNN |
|---|---|---|---|
| 97% | 72% | 97% | 96% |

| Class | Metric | RF | SVM | KNN | XGB |
|---|---|---|---|---|---|
| Hawkweed Foliage (Target Vegetation) | Precision (%) | 100 | 63 | 100 | 100 |
| Hawkweed Foliage (Target Vegetation) | Recall (%) | 94 | 79 | 94 | 94 |
| Hawkweed Foliage (Target Vegetation) | F1 score (%) | 97 | 70 | 97 | 97 |
| Other Vegetation | Precision (%) | 91 | 73 | 91 | 91 |
| Other Vegetation | Recall (%) | 100 | 41 | 100 | 100 |
| Other Vegetation | F1 score (%) | 95 | 52 | 95 | 95 |
| Non-Vegetation | Precision (%) | 100 | 81 | 100 | 100 |
| Non-Vegetation | Recall (%) | 96 | 98 | 96 | 96 |
| Non-Vegetation | F1 score (%) | 98 | 89 | 98 | 98 |
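The F1 scores in the report above can be cross-checked against the tabulated precision and recall, since F1 is their harmonic mean. The sketch below does this for the RF column; the helper name `f1_score` is hypothetical, and because the published values are rounded to whole percentages, small differences between computed and reported values are expected.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (same units as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision %, recall %, reported F1 %) for the RF column of the table above.
rf_rows = {
    "Hawkweed foliage": (100, 94, 97),
    "Other vegetation": (91, 100, 95),
    "Non-vegetation": (100, 96, 98),
}

# Computed F1 per class, to compare with the reported (rounded) value.
computed_f1 = {name: f1_score(p, r) for name, (p, r, _) in rf_rows.items()}

for name, (_, _, reported) in rf_rows.items():
    print(f"{name}: computed F1 = {computed_f1[name]:.1f}%, reported = {reported}%")
```

The same check applies to the other classifier columns; a gap much larger than the rounding error would point to a transcription problem in the table rather than in the model.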

| Flight No | GSD (cm/pixel) | Overall Testing Accuracy (%) |
|---|---|---|
| 1 | 0.65 | 97 |
| 2 | 0.86 | 97 |
| 3 | 1.10 | 95 |
| 4 | 1.30 | 94 |
| 5 | 1.50 | 94 |
| 6 | 1.73 | 92 |
| 7 | 1.95 | 91 |

| XGB | SVM | RF | KNN |
|---|---|---|---|
| 98% | 80% | 97% | 97% |

| Class | Metric | RF | SVM | KNN | XGB |
|---|---|---|---|---|---|
| Hawkweed Foliage (Target Vegetation) | Precision (%) | 93 | 54 | 94 | 95 |
| Hawkweed Foliage (Target Vegetation) | Recall (%) | 94 | 81 | 94 | 96 |
| Hawkweed Foliage (Target Vegetation) | F1 score (%) | 94 | 65 | 94 | 95 |
| Other Vegetation | Precision (%) | 96 | 77 | 96 | 97 |
| Other Vegetation | Recall (%) | 95 | 52 | 96 | 97 |
| Other Vegetation | F1 score (%) | 96 | 63 | 96 | 97 |
| Non-Vegetation | Precision (%) | 100 | 100 | 100 | 100 |
| Non-Vegetation | Recall (%) | 100 | 98 | 100 | 100 |
| Non-Vegetation | F1 score (%) | 100 | 99 | 100 | 100 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Amarasingam, N.; Hamilton, M.; Kelly, J.E.; Zheng, L.; Sandino, J.; Gonzalez, F.; Dehaan, R.L.; Cherry, H. Autonomous Detection of Mouse-Ear Hawkweed Using Drones, Multispectral Imagery and Supervised Machine Learning. Remote Sens. 2023, 15, 1633. https://doi.org/10.3390/rs15061633
