Detection of Artificial Seed-like Objects from UAV Imagery

Abstract

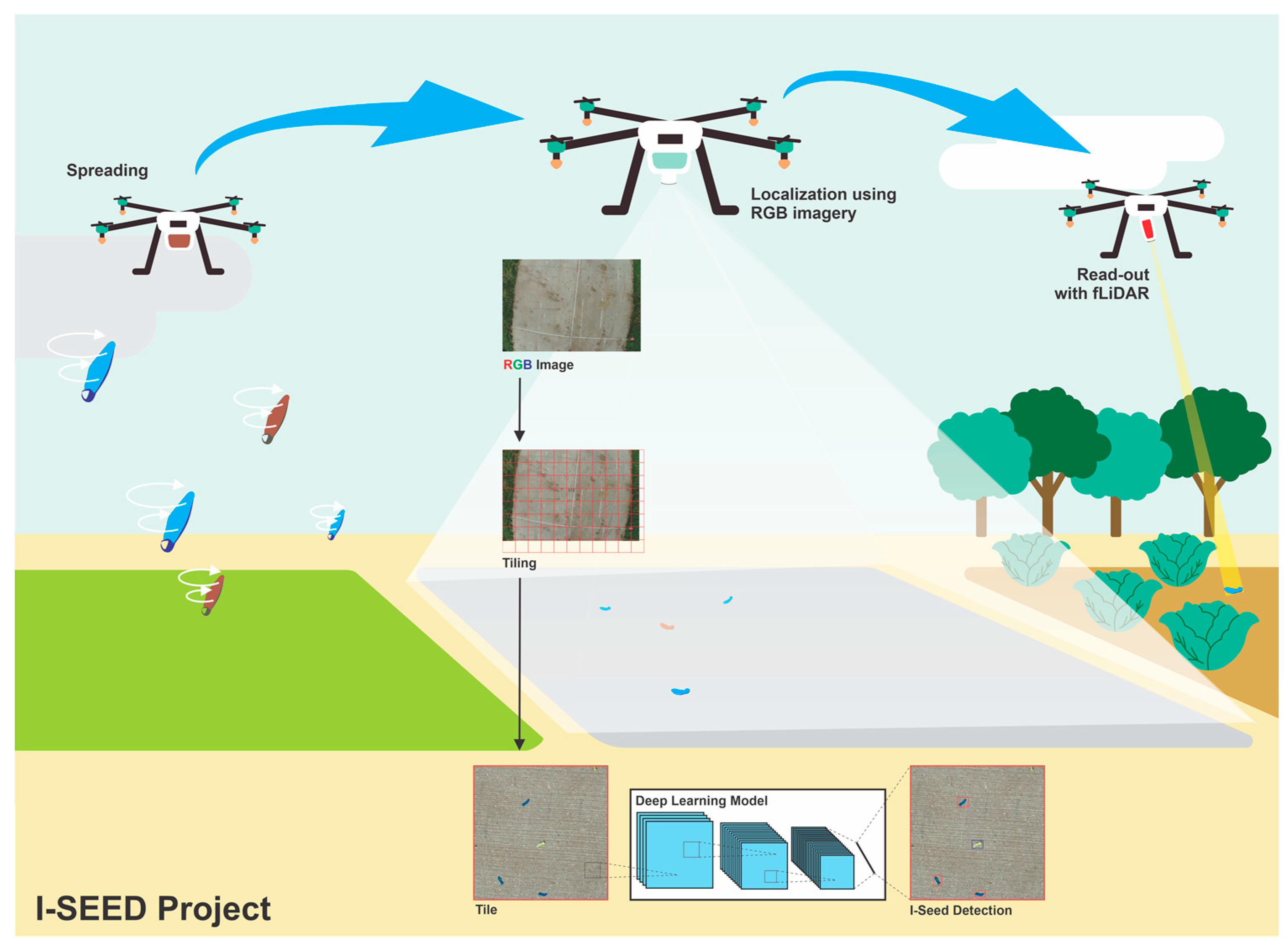

1. Introduction

2. Materials and Methods

2.1. Sample Preparation

2.2. Data Acquisition

2.3. Datasets

2.4. Data Pre-Processing

2.5. Data Processing

2.5.1. Object Detection Model

2.5.2. Performance Evaluation

2.5.3. Inference in the Full-Size Dataset

3. Results

3.1. Training Comparison

3.2. Inference on Full-Size Image

3.3. Model Assessment

3.4. Parameter Combination

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

Details on Data Processing

| Dataset | Image Size (Pixel) | No. of Images | Null Examples | Annotations: I-Seed Blue | Annotations: I-Seed Original | Annotations: Total | Average Annotations per Image |
|---|---|---|---|---|---|---|---|
| Tiled Dataset | 512 × 512 | 12,300 | 5230 | 10,399 | 7361 | 17,760 | 1.4 |
| Full Size Dataset | 5472 × 3648 | 192 | 0 | 3264 | 3262 | 6526 | 34.0 |
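The relation between the two image sizes in the table above can be illustrated with a tiling sketch. The Python snippet below is a minimal illustration, not the authors' pre-processing code: it assumes non-overlapping 512 × 512 tiles, with the last row and column shifted inward so that every tile stays inside the 5472 × 3648 frame. The helper names `tile_origins` and `tile_boxes` are hypothetical, and the overlap or padding strategy actually used to build the Tiled Dataset is not stated in this section.

```python
from typing import List, Tuple


def tile_origins(length: int, tile: int, overlap: int = 0) -> List[int]:
    """Start offsets of tiles along one image axis (hypothetical helper)."""
    if overlap < 0 or overlap >= tile:
        raise ValueError("overlap must satisfy 0 <= overlap < tile")
    if tile >= length:
        # The image is not larger than one tile: a single tile at offset 0.
        return [0]
    stride = tile - overlap
    origins = list(range(0, length - tile + 1, stride))
    # Assumption: cover the remaining border by shifting a final tile inward
    # instead of padding the image.
    if origins[-1] + tile < length:
        origins.append(length - tile)
    return origins


def tile_boxes(
    width: int, height: int, tile: int = 512, overlap: int = 0
) -> List[Tuple[int, int, int, int]]:
    """Return (x_min, y_min, x_max, y_max) boxes covering the whole image."""
    return [
        (x, y, x + tile, y + tile)
        for y in tile_origins(height, tile, overlap)
        for x in tile_origins(width, tile, overlap)
    ]


if __name__ == "__main__":
    boxes = tile_boxes(5472, 3648)
    # 11 columns x 8 rows = 88 tiles under the assumptions above.
    print(len(boxes), boxes[0], boxes[-1])
```

Under a different scheme (for example, dropping the partial border tiles or using overlapping tiles) the tile count per image changes, so the figure of 88 should be read only as a property of this sketch.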

| Hyper Parameter | Value | Hyper Parameter | Value |
|---|---|---|---|
| Initial learning rate | 0.01 | focal loss gamma | 0.0 |
| OneCycleLR learning rate | 0.1 | HSV hue aug | 0.015 |
| momentum | 0.937 | HSV saturation aug | 0.7 |
| weight decay | 0.0005 | HSV value aug | 0.4 |
| warmup epochs | 3.0 | Rotation | 0.0 |
| warmup momentum | 0.8 | Translation | 0.1 |
| warmup bias lr | 0.1 | scale | 0.5 |
| box loss gain | 0.05 | shear | 0.0 |
| class loss gain | 0.5 | perspective | 0.0 |
| class BCELoss | 1.0 | flip up/down | 0.0 |
| object loss gain | 1.0 | flip left/right | 0.5 |
| object BCELoss | 1.0 | mosaic | 1.0 |
| IoU threshold | 0.2 | mixup | 0.0 |
| anchor multiple threshold | 4.0 | copy–paste | 0.0 |
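For readers who want to reuse these settings, the table can be written as a hyperparameter file. The sketch below is a hedged illustration in Python: the key names (`lr0`, `lrf`, `cls_pw`, `hsv_h`, and so on) follow the hyperparameter YAML file distributed with YOLOv5 as far as I know, and the mapping from each table label to a key is my assumption, not something stated in the table. In particular, the three hue, saturation, and value augmentation rows are assumed to correspond to `hsv_h`, `hsv_s`, and `hsv_v`. The function `to_yaml` is a hypothetical helper that emits plain `key: value` lines without any third-party dependency.

```python
# Assumed mapping of the table labels to YOLOv5-style hyperparameter keys.
HYP = {
    "lr0": 0.01,             # initial learning rate
    "lrf": 0.1,              # OneCycleLR learning rate (final factor)
    "momentum": 0.937,
    "weight_decay": 0.0005,
    "warmup_epochs": 3.0,
    "warmup_momentum": 0.8,
    "warmup_bias_lr": 0.1,
    "box": 0.05,             # box loss gain
    "cls": 0.5,              # class loss gain
    "cls_pw": 1.0,           # class BCELoss positive weight
    "obj": 1.0,              # object loss gain
    "obj_pw": 1.0,           # object BCELoss positive weight
    "iou_t": 0.2,            # IoU threshold
    "anchor_t": 4.0,         # anchor multiple threshold
    "fl_gamma": 0.0,         # focal loss gamma
    "hsv_h": 0.015,          # hue augmentation
    "hsv_s": 0.7,            # saturation augmentation
    "hsv_v": 0.4,            # value augmentation
    "degrees": 0.0,          # rotation
    "translate": 0.1,
    "scale": 0.5,
    "shear": 0.0,
    "perspective": 0.0,
    "flipud": 0.0,           # flip up/down probability
    "fliplr": 0.5,           # flip left/right probability
    "mosaic": 1.0,
    "mixup": 0.0,
    "copy_paste": 0.0,
}


def to_yaml(hyp: dict) -> str:
    """Serialize a flat dict of numbers as 'key: value' lines."""
    return "\n".join(f"{key}: {value}" for key, value in hyp.items()) + "\n"


if __name__ == "__main__":
    print(to_yaml(HYP), end="")
```

The resulting text could be saved to a file and passed to a training script that accepts a hyperparameter YAML; which script and flag to use depends on the YOLOv5 release and is not specified here.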


| Parameter | Phantom 4 Pro RTX | Mavic 2 Pro |
|---|---|---|
| Field of View | 84° | 77° |
| Minimum Focus Distance | 3.3′/1 m | 3.3′/1 m |
| Still Image Support | DNG/JPEG | JPEG |
| Mechanical Shutter Speed | 1/2000 to 8 s | N/A |
| Electronic Shutter Speed | 8–1/8000 s | 8–1/8000 s |

| Dataset ID | Date | Light Condition | Solar Intensity, Specular [W/cm2] | Solar Intensity, Diffuse [W/cm2] | Solar Zenith Angle (°) | Drone Platform | Flight Method | Total Images | TD Training | TD Validation | TD Testing | FSD |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| DS1 | 21 Sep. 2021 | Overcast | 157 ± 60 | 305 ± 27.8 | 52.2 ± 0.8 | Phantom 4 Pro RTX | Auto | 359 | ✓ | ✓ | | |
| DS2 | 11 Nov. 2021 | Overcast | 0.98 ± 0.1 | 75 ± 14 | 71.1 ± 1.4 | Phantom 4 Pro RTX | Auto | 816 | ✓ | ✓ | | |
| DS3 | 21 Dec. 2021 | Sunny | 652 ± 41.7 | 32.5 ± 3.3 | 76.4 ± 1.1 | Phantom 4 Pro RTX | Auto | 816 | ✓ | ✓ | | |
| DS4 | 6 Jan. 2022 | Sunny | 11 ± 25.8 | 42.4 ± 34.1 | 77.6 ± 1.0 | Mavic 2 Pro | Manual | 139 | ✓ | ✓ | ✓ | |
| DS5 | 9 Jan. 2022 | Sunny | 60.4 ± 91.7 | 38.3 ± 4.6 | 78.1 ± 1.1 | Mavic 2 Pro | Manual | 131 | ✓ | ✓ | ✓ | |
| DS6 | 17 Jan. 2022 | Overcast | 0.8 ± 6.3 | 59.8 ± 7.8 | 75.3 ± 1.7 | Mavic 2 Pro | Manual | 202 | ✓ | ✓ | ✓ | |

TD: Tiled Dataset; FSD: Full Size Dataset.

| Variant | Depth Multiple | Width Multiple | Parameters |
|---|---|---|---|
| YOLOv5x | 1.33 | 1.25 | 86.7 M |
| YOLOv5l | 1.0 | 1.0 | 46.5 M |
| YOLOv5m | 0.67 | 0.75 | 21.2 M |
| YOLOv5s | 0.33 | 0.50 | 7.2 M |
| YOLOv5n | 0.33 | 0.25 | 1.9 M |
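The depth and width multiples in the table above act as scaling factors on a shared base architecture. The sketch below shows, under my understanding of the YOLOv5 model builder, how they are applied: the number of repeated blocks is multiplied by the depth multiple and rounded (never below one), and the channel count is multiplied by the width multiple and rounded up to a multiple of 8. The function names `scale_depth` and `scale_width` are hypothetical, and the base values (9 repeats, 1024 channels) are illustrative assumptions rather than figures from this article.

```python
import math

# Depth and width multiples as listed in the table above.
VARIANTS = {
    "YOLOv5x": (1.33, 1.25),
    "YOLOv5l": (1.0, 1.0),
    "YOLOv5m": (0.67, 0.75),
    "YOLOv5s": (0.33, 0.50),
    "YOLOv5n": (0.33, 0.25),
}


def make_divisible(value: float, divisor: int = 8) -> int:
    """Round a value up to the nearest multiple of `divisor`."""
    return math.ceil(value / divisor) * divisor


def scale_depth(repeats: int, depth_multiple: float) -> int:
    """Scale a block's repeat count; single blocks are left unchanged."""
    if repeats <= 1:
        return repeats
    return max(round(repeats * depth_multiple), 1)


def scale_width(channels: int, width_multiple: float) -> int:
    """Scale a layer's output channels, keeping them divisible by 8."""
    return make_divisible(channels * width_multiple, 8)


if __name__ == "__main__":
    base_repeats, base_channels = 9, 1024  # illustrative base values
    for name, (depth, width) in VARIANTS.items():
        print(
            f"{name}: repeats={scale_depth(base_repeats, depth)}, "
            f"channels={scale_width(base_channels, width)}"
        )
```

This explains why YOLOv5s and YOLOv5n share the same depth multiple yet differ in parameter count: only the channel widths shrink between them.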

| YOLOv5 Variant | Precision | Recall | mAP0.5 | Pre-Process (ms) | Inference (ms) | NMS (ms) | Total (ms) | Layers | Parameters |
|---|---|---|---|---|---|---|---|---|---|
| YOLOv5n | 0.84 | 0.86 | 0.88 | 1.2 | 1 | 0.8 | 3 | 213 | 1,761,871 |
| YOLOv5s | 0.86 | 0.84 | 0.89 | 1.2 | 2.3 | 0.8 | 4.3 | 213 | 7,015,519 |
| YOLOv5m | 0.83 | 0.77 | 0.82 | 1.2 | 5.2 | 0.8 | 7.2 | 290 | 20,856,975 |
| YOLOv5l | 0.85 | 0.81 | 0.86 | 1.2 | 9.1 | 0.9 | 11.2 | 367 | 46,113,663 |
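Two derived quantities make the comparison in the table above easier to read: the F1 score, which combines precision and recall as F1 = 2PR / (P + R), and the throughput implied by the total processing time. The sketch below computes both from the tabulated precision, recall, and total time. It assumes the reported times are milliseconds per image, which the table does not state explicitly; the function names are hypothetical.

```python
# (precision, recall, total time in ms) copied from the table above.
RESULTS = {
    "YOLOv5n": (0.84, 0.86, 3.0),
    "YOLOv5s": (0.86, 0.84, 4.3),
    "YOLOv5m": (0.83, 0.77, 7.2),
    "YOLOv5l": (0.85, 0.81, 11.2),
}


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def images_per_second(total_ms: float) -> float:
    """Throughput implied by a per-image processing time in milliseconds."""
    if total_ms <= 0:
        raise ValueError("total_ms must be positive")
    return 1000.0 / total_ms


if __name__ == "__main__":
    for name, (precision, recall, total_ms) in RESULTS.items():
        print(
            f"{name}: F1={f1_score(precision, recall):.3f}, "
            f"throughput={images_per_second(total_ms):.1f} images/s"
        )
```

Because F1 is symmetric in precision and recall, YOLOv5n and YOLOv5s obtain the same F1 here even though their precision and recall values are swapped; the two variants then differ mainly in mAP0.5 and speed.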


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bomantara, Y.A.; Mustafa, H.; Bartholomeus, H.; Kooistra, L. Detection of Artificial Seed-like Objects from UAV Imagery. Remote Sens. 2023, 15, 1637. https://doi.org/10.3390/rs15061637
