Offshore Hydrocarbon Exploitation Target Extraction Based on Time-Series Night Light Remote Sensing Images and Machine Learning Models: A Comparison of Six Machine Learning Algorithms and Their Multi-Feature Importance

Abstract

1. Introduction

2. Study Area and Datasets

2.1. Study Area

2.2. Datasets

(1) VIIRS Day/Night Band Nighttime Lights Monthly Composite Images

(2) Offshore Platform Records and Sentinel-2 Images

3. Methods

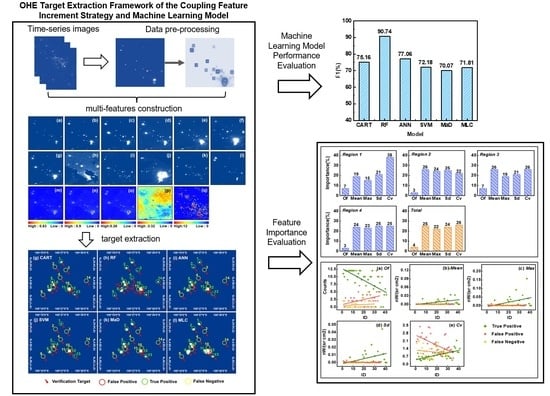

3.1. OHE Target Extraction Framework Coupling the Feature Increment Strategy with Machine Learning Models

3.2. Machine Learning Algorithms

(1) Classification and Regression Tree (CART)

(2) Random Forest (RF)

(3) Support Vector Machine (SVM)

(4) Artificial Neural Networks (ANN)

(5) Mahalanobis Distance (MaD)

(6) Maximum Likelihood Classification (MLC)
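MaD and MLC are the two parametric classifiers in this comparison. Both describe each class by the mean vector and covariance matrix of its training samples. The stdlib-only Python sketch below shows how their decision rules differ. It is a minimal illustration, not the paper's implementation, and it rests on these assumptions: each class keeps its own covariance matrix, MLC uses equal class priors, and the class names "OHE" and "background" and the two-feature training values are hypothetical.

```python
import math

def mean_vector(samples):
    """Per-feature mean of a list of equal-length feature vectors."""
    n = len(samples)
    return [sum(column) / n for column in zip(*samples)]

def covariance_matrix(samples, mu):
    """Sample covariance matrix (n - 1 denominator)."""
    n, d = len(samples), len(mu)
    return [[sum((s[i] - mu[i]) * (s[j] - mu[j]) for s in samples) / (n - 1)
             for j in range(d)] for i in range(d)]

def inverse_and_determinant(matrix):
    """Gauss-Jordan elimination with partial pivoting; returns (inverse, determinant)."""
    d = len(matrix)
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(d)]
           for i, row in enumerate(matrix)]
    det = 1.0
    for col in range(d):
        pivot_row = max(range(col, d), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot_row][col]) < 1e-12:
            raise ValueError("covariance matrix is singular")
        if pivot_row != col:
            aug[col], aug[pivot_row] = aug[pivot_row], aug[col]
            det = -det  # a row swap flips the sign of the determinant
        pivot = aug[col][col]
        det *= pivot
        aug[col] = [v / pivot for v in aug[col]]
        for r in range(d):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [row[d:] for row in aug], det

def mahalanobis_sq(x, mu, inv_cov):
    """Squared Mahalanobis distance (x - mu)^T inv_cov (x - mu)."""
    diff = [xi - mi for xi, mi in zip(x, mu)]
    d = len(diff)
    return sum(diff[i] * inv_cov[i][j] * diff[j] for i in range(d) for j in range(d))

def fit(training):
    """training: {class label: list of feature vectors} -> per-class statistics."""
    model = {}
    for label, samples in training.items():
        mu = mean_vector(samples)
        inv_cov, det = inverse_and_determinant(covariance_matrix(samples, mu))
        model[label] = (mu, inv_cov, det)
    return model

def classify_mad(model, x):
    """MaD: assign x to the class with the smallest Mahalanobis distance."""
    return min(model, key=lambda k: mahalanobis_sq(x, model[k][0], model[k][1]))

def classify_mlc(model, x):
    """MLC: maximise the Gaussian log-likelihood (equal priors, constants dropped)."""
    def log_likelihood(k):
        mu, inv_cov, det = model[k]
        return -0.5 * math.log(det) - 0.5 * mahalanobis_sq(x, mu, inv_cov)
    return max(model, key=log_likelihood)

# Hypothetical two-feature training samples (e.g., two radiance statistics per pixel).
training = {
    "OHE": [[30.0, 5.0], [34.0, 7.0], [28.0, 4.0], [36.0, 9.0], [32.0, 5.0]],
    "background": [[1.0, 0.5], [2.0, 0.8], [1.5, 0.2], [0.5, 0.6], [1.2, 0.4]],
}
model = fit(training)
print(classify_mad(model, [31.0, 6.0]), classify_mlc(model, [31.0, 6.0]))
```

With equal priors, the two rules differ only by the -0.5 ln|Σ| term. That term lets MLC favour a class with a tighter covariance when two Mahalanobis distances are similar.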

3.3. Accuracy Evaluation Method
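The P (%), R (%) and F1 (%) columns in the accuracy tables are consistent with the standard definitions: precision P = TP/(TP + FP), recall R = TP/(TP + FN), and F1 = 2PR/(P + R). A minimal sketch that recomputes them from the counts follows. The function name is illustrative and is not taken from the paper.

```python
def accuracy_metrics(tp: int, fn: int, fp: int) -> tuple[float, float, float]:
    """Return precision, recall and F1 as percentages rounded to two decimals."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of P and R, algebraically equal to 2TP / (2TP + FP + FN);
    # this form also avoids dividing by zero when TP = 0.
    f1 = 2 * tp / (2 * tp + fp + fn) if tp + fp + fn else 0.0
    return round(100 * precision, 2), round(100 * recall, 2), round(100 * f1, 2)

# Example counts: TP = 13, FN = 1, FP = 3 (RF, 2017, in one of the tables below)
print(accuracy_metrics(13, 1, 3))  # (81.25, 92.86, 86.67)
```

F1 penalises both error types. A model that finds every platform (R = 100%) but also flags many non-targets (low P) therefore still receives a modest F1, as MaD does in the first results table.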

4. Results

4.1. OHE Target Extraction Results

4.2. Evaluation of Quantitative Accuracy

4.3. Feature Importance Evaluation

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


Region 1: extraction accuracy of the six models in 2016 and 2017 (TP, true positives; FN, false negatives; FP, false positives; P, precision; R, recall; F1, F1 score).

| Model | Year | TP | FN | FP | P (%) | R (%) | F1 (%) |
|---|---|---|---|---|---|---|---|
| CART | 2016 | 17 | 0 | 4 | 80.95 | 100.00 | 89.47 |
| CART | 2017 | 16 | 1 | 3 | 84.21 | 94.12 | 88.89 |
| CART | Mean | | | | 82.58 | 97.06 | 89.18 |
| RF | 2016 | 17 | 0 | 2 | 89.47 | 100.00 | 94.44 |
| RF | 2017 | 16 | 1 | 1 | 94.12 | 94.12 | 94.12 |
| RF | Mean | | | | 91.80 | 97.06 | 94.28 |
| ANN | 2016 | 17 | 0 | 3 | 85.00 | 100.00 | 91.89 |
| ANN | 2017 | 15 | 2 | 1 | 93.75 | 88.24 | 90.91 |
| ANN | Mean | | | | 89.38 | 94.12 | 91.40 |
| SVM | 2016 | 16 | 1 | 2 | 88.89 | 94.12 | 91.43 |
| SVM | 2017 | 16 | 1 | 3 | 84.21 | 94.12 | 88.89 |
| SVM | Mean | | | | 86.55 | 94.12 | 90.16 |
| MaD | 2016 | 17 | 0 | 13 | 56.67 | 100.00 | 72.34 |
| MaD | 2017 | 17 | 0 | 14 | 54.84 | 100.00 | 70.83 |
| MaD | Mean | | | | 55.76 | 100.00 | 71.59 |
| MLC | 2016 | 16 | 1 | 12 | 57.14 | 94.12 | 71.11 |
| MLC | 2017 | 17 | 0 | 15 | 53.13 | 100.00 | 69.39 |
| MLC | Mean | | | | 55.14 | 97.06 | 70.25 |
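In each accuracy table, the Mean row matches the arithmetic mean of the 2016 and 2017 percentages, rounded half up to two decimals. For RF in the table above, the mean precision is (89.47 + 94.12)/2 = 91.795, reported as 91.80. The sketch below reproduces that rounding with Python's `decimal` module. Binary floats cannot represent most two-decimal values exactly, so a tie such as x.xx5 may round the wrong way. Passing the percentages as decimal strings is an assumption of this sketch, and the function name is illustrative.

```python
from decimal import Decimal, ROUND_HALF_UP

def mean_percentage(values: list[str]) -> Decimal:
    """Arithmetic mean of percentages given as decimal strings, rounded half up to 0.01."""
    total = sum(Decimal(v) for v in values)
    return (total / len(values)).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)

# RF, 2016 and 2017 values from the table above
print(mean_percentage(["89.47", "94.12"]))   # 91.80 (P)
print(mean_percentage(["100.00", "94.12"]))  # 97.06 (R)
print(mean_percentage(["94.44", "94.12"]))   # 94.28 (F1)
```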

Region 2: extraction accuracy of the six models in 2016 and 2017.

| Model | Year | TP | FN | FP | P (%) | R (%) | F1 (%) |
|---|---|---|---|---|---|---|---|
| CART | 2016 | 13 | 5 | 2 | 86.67 | 72.22 | 78.79 |
| CART | 2017 | 11 | 7 | 1 | 91.67 | 61.11 | 73.33 |
| CART | Mean | | | | 89.17 | 66.67 | 76.06 |
| RF | 2016 | 15 | 3 | 0 | 100.00 | 83.33 | 90.91 |
| RF | 2017 | 15 | 3 | 1 | 93.75 | 83.33 | 88.24 |
| RF | Mean | | | | 96.88 | 83.33 | 89.58 |
| ANN | 2016 | 13 | 5 | 2 | 86.67 | 72.22 | 78.79 |
| ANN | 2017 | 12 | 6 | 0 | 100.00 | 66.67 | 80.00 |
| ANN | Mean | | | | 93.34 | 69.45 | 79.34 |
| SVM | 2016 | 10 | 8 | 0 | 100.00 | 55.56 | 71.43 |
| SVM | 2017 | 11 | 7 | 1 | 91.67 | 61.11 | 73.33 |
| SVM | Mean | | | | 95.84 | 58.34 | 72.38 |
| MaD | 2016 | 13 | 5 | 4 | 76.47 | 72.22 | 74.29 |
| MaD | 2017 | 12 | 6 | 4 | 75.00 | 66.67 | 70.59 |
| MaD | Mean | | | | 75.74 | 69.45 | 72.44 |
| MLC | 2016 | 13 | 5 | 5 | 72.22 | 72.22 | 72.22 |
| MLC | 2017 | 13 | 5 | 4 | 76.47 | 72.22 | 74.29 |
| MLC | Mean | | | | 74.35 | 72.22 | 73.26 |

Region 3: extraction accuracy of the six models in 2016 and 2017.

| Model | Year | TP | FN | FP | P (%) | R (%) | F1 (%) |
|---|---|---|---|---|---|---|---|
| CART | 2016 | 8 | 6 | 0 | 100.00 | 57.14 | 72.73 |
| CART | 2017 | 7 | 7 | 0 | 100.00 | 50.00 | 66.67 |
| CART | Mean | | | | 100.00 | 53.57 | 69.70 |
| RF | 2016 | 14 | 0 | 4 | 77.78 | 100.00 | 87.50 |
| RF | 2017 | 13 | 1 | 3 | 81.25 | 92.86 | 86.67 |
| RF | Mean | | | | 79.52 | 96.43 | 87.09 |
| ANN | 2016 | 8 | 6 | 0 | 100.00 | 57.14 | 72.73 |
| ANN | 2017 | 8 | 6 | 0 | 100.00 | 57.14 | 72.73 |
| ANN | Mean | | | | 100.00 | 57.14 | 72.73 |
| SVM | 2016 | 8 | 6 | 1 | 88.89 | 57.14 | 69.57 |
| SVM | 2017 | 6 | 8 | 0 | 100.00 | 42.86 | 60.00 |
| SVM | Mean | | | | 94.45 | 50.00 | 64.79 |
| MaD | 2016 | 10 | 4 | 5 | 66.67 | 71.43 | 68.97 |
| MaD | 2017 | 7 | 7 | 0 | 100.00 | 50.00 | 66.67 |
| MaD | Mean | | | | 83.34 | 60.72 | 67.83 |
| MLC | 2016 | 10 | 4 | 3 | 76.92 | 71.43 | 74.07 |
| MLC | 2017 | 8 | 6 | 0 | 100.00 | 57.14 | 72.73 |
| MLC | Mean | | | | 88.46 | 64.29 | 73.40 |

Region 4: extraction accuracy of the six models in 2016 and 2017.

| Model | Year | TP | FN | FP | P (%) | R (%) | F1 (%) |
|---|---|---|---|---|---|---|---|
| CART | 2016 | 11 | 11 | 0 | 100.00 | 50.00 | 66.67 |
| CART | 2017 | 11 | 12 | 0 | 100.00 | 47.83 | 64.71 |
| CART | Mean | | | | 100.00 | 48.92 | 65.69 |
| RF | 2016 | 19 | 3 | 0 | 100.00 | 86.36 | 92.68 |
| RF | 2017 | 21 | 2 | 2 | 91.30 | 91.30 | 91.30 |
| RF | Mean | | | | 95.65 | 88.83 | 91.99 |
| ANN | 2016 | 11 | 11 | 0 | 100.00 | 50.00 | 66.67 |
| ANN | 2017 | 11 | 12 | 1 | 91.67 | 47.83 | 62.86 |
| ANN | Mean | | | | 95.84 | 48.92 | 64.77 |
| SVM | 2016 | 9 | 13 | 0 | 100.00 | 40.91 | 58.06 |
| SVM | 2017 | 11 | 12 | 0 | 100.00 | 47.83 | 64.71 |
| SVM | Mean | | | | 100.00 | 44.37 | 61.39 |
| MaD | 2016 | 14 | 8 | 1 | 93.33 | 63.64 | 75.68 |
| MaD | 2017 | 11 | 12 | 2 | 84.62 | 47.83 | 61.11 |
| MaD | Mean | | | | 88.98 | 55.74 | 68.40 |
| MLC | 2016 | 13 | 9 | 1 | 92.86 | 59.09 | 72.22 |
| MLC | 2017 | 13 | 10 | 2 | 86.67 | 56.52 | 68.42 |
| MLC | Mean | | | | 89.77 | 57.81 | 70.32 |

Mean F1 score of each model by region (averaged over 2016 and 2017) and across all four regions.

| Model | Region 1 (%) | Region 2 (%) | Region 3 (%) | Region 4 (%) | Mean (%) |
|---|---|---|---|---|---|
| CART | 89.18 | 76.06 | 69.70 | 65.69 | 75.16 |
| RF | 94.28 | 89.58 | 87.09 | 91.99 | 90.74 |
| ANN | 91.40 | 79.34 | 72.73 | 64.77 | 77.06 |
| SVM | 90.16 | 72.38 | 64.79 | 61.39 | 72.18 |
| MaD | 71.59 | 72.44 | 67.83 | 68.40 | 70.07 |
| MLC | 70.25 | 73.26 | 73.40 | 70.32 | 71.81 |
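The Mean column is the average of the four regional F1 values. The sketch below ranks the models by that mean. It also reports each model's range across regions, a simple indicator of how stable a model's accuracy is from one region to another. The values are copied from the table above; the dictionary and function names are illustrative, and the range metric is an addition of this sketch rather than a measure used in the paper.

```python
from decimal import Decimal, ROUND_HALF_UP

# Mean F1 (%) per region, copied from the table above (Regions 1 to 4).
F1_BY_REGION = {
    "CART": ["89.18", "76.06", "69.70", "65.69"],
    "RF": ["94.28", "89.58", "87.09", "91.99"],
    "ANN": ["91.40", "79.34", "72.73", "64.77"],
    "SVM": ["90.16", "72.38", "64.79", "61.39"],
    "MaD": ["71.59", "72.44", "67.83", "68.40"],
    "MLC": ["70.25", "73.26", "73.40", "70.32"],
}

def summarize(scores):
    """Return (model, mean F1, max - min across regions), sorted by mean F1, best first."""
    rows = []
    for model, values in scores.items():
        vals = [Decimal(v) for v in values]
        mean = (sum(vals) / len(vals)).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)
        rows.append((model, mean, max(vals) - min(vals)))
    return sorted(rows, key=lambda row: row[1], reverse=True)

for model, mean, spread in summarize(F1_BY_REGION):
    print(f"{model:5s} mean={mean}  range={spread}")
```

With these values, RF ranks first (90.74) and also has a narrow range of 7.19 percentage points. MaD and MLC vary even less across regions, but at a much lower mean.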

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ma, R.; Wu, W.; Wang, Q.; Liu, N.; Chang, Y. Offshore Hydrocarbon Exploitation Target Extraction Based on Time-Series Night Light Remote Sensing Images and Machine Learning Models: A Comparison of Six Machine Learning Algorithms and Their Multi-Feature Importance. Remote Sens. 2023, 15, 1843. https://doi.org/10.3390/rs15071843
