Video SAR Moving Target Shadow Detection Based on Intensity Information and Neighborhood Similarity

Abstract

1. Introduction

- 1.

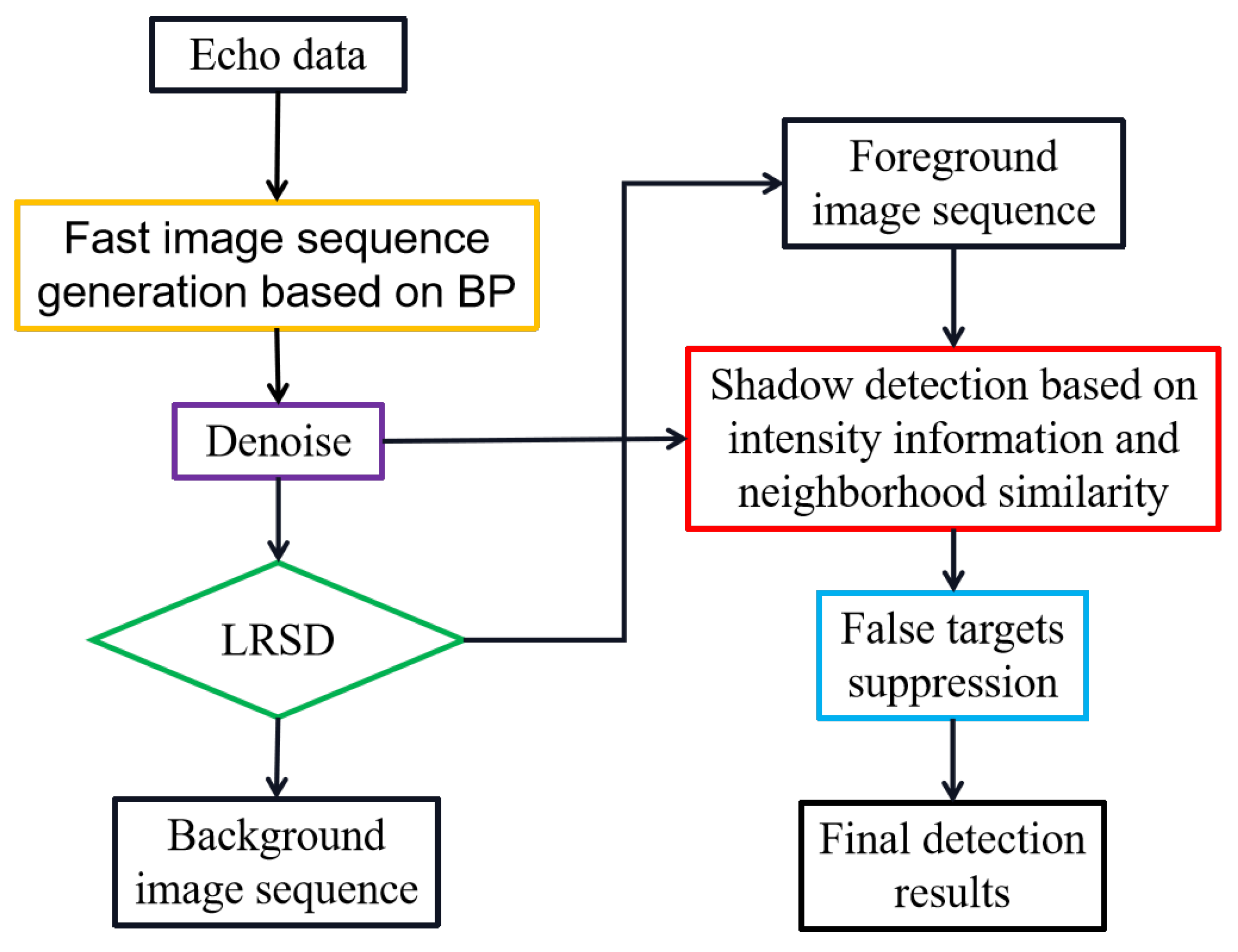

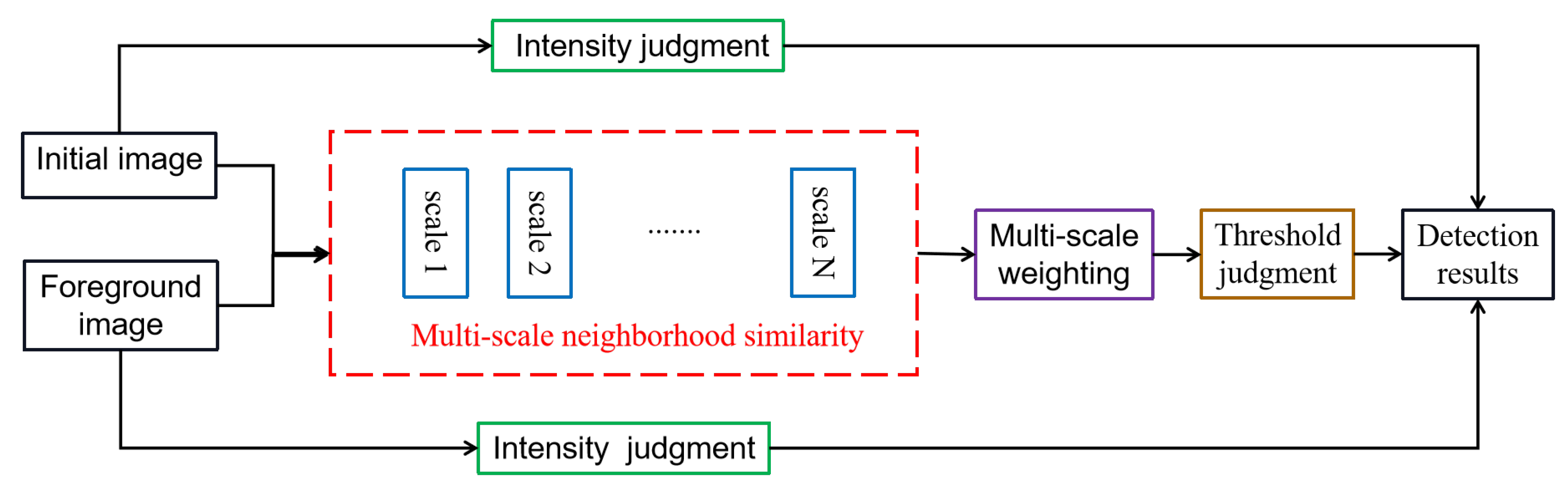

- We propose a new moving target shadow detection method in video SAR, which firstly combines the neighborhood similarity and intensity information of the moving target shadow. The core of this method is that the shadow of the moving object has a high similarity between the original image and the corresponding foreground image. It performs better than the classical multi-frame detection methods in the integrity of detection results and false alarm suppression.

2. Background and foreground separation is the basis of the proposed shadow detection method. Owing to the SAR imaging mechanism, the scattering of static objects remains stable within a limited range of observation angles [29]. In addition, adjacent images in the sequence overlap heavily. The static background is therefore low rank across the image sequence. On this basis, we introduce low-rank and sparse decomposition (LRSD) to obtain cleaner background images and better foreground images.

- 3.

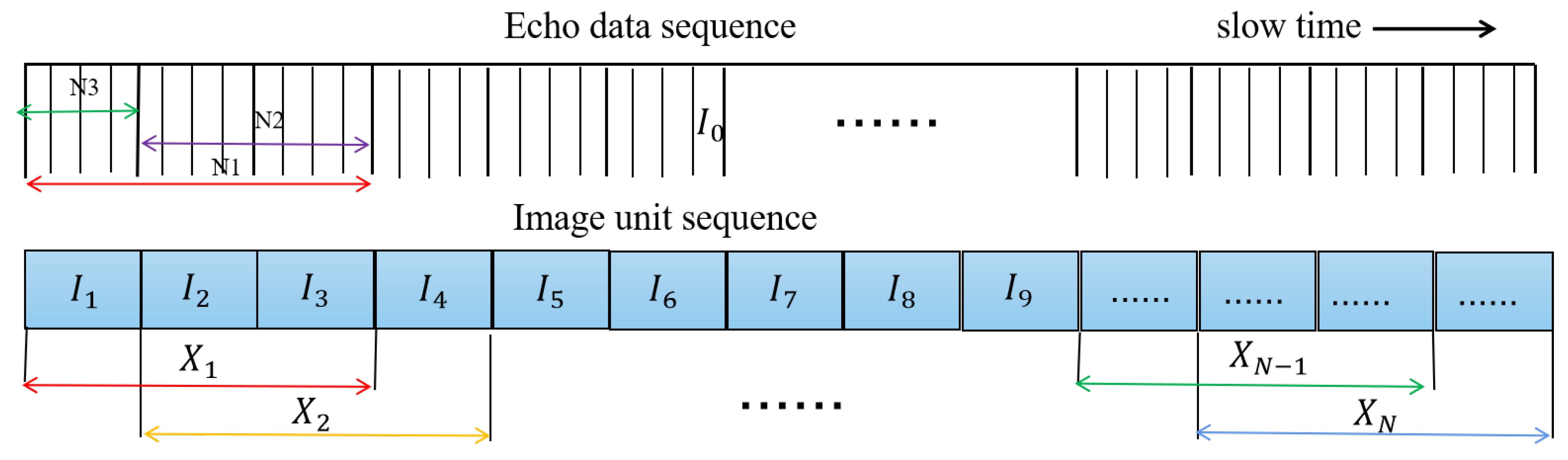

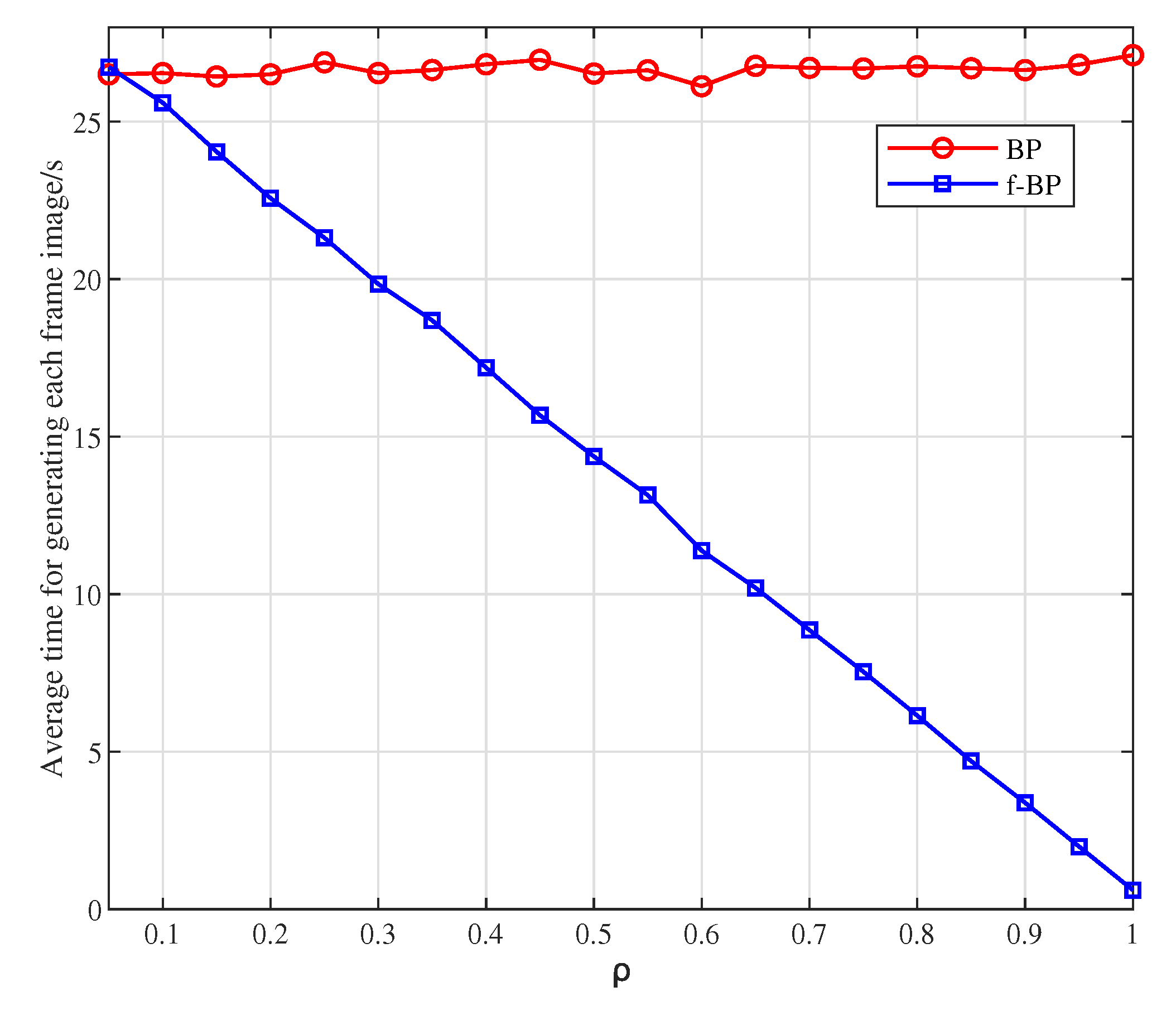

- We propose a method based on the BP algorithm to fast generate image sequence. In practice, there is a lot of data redundancy between overlapping image sequences. Taking advantage of the proposed method can effectively reduce redundancy calculation and improve the efficiency of image sequence generation.

2. Methodology

2.1. Fast Image Sequence Generation

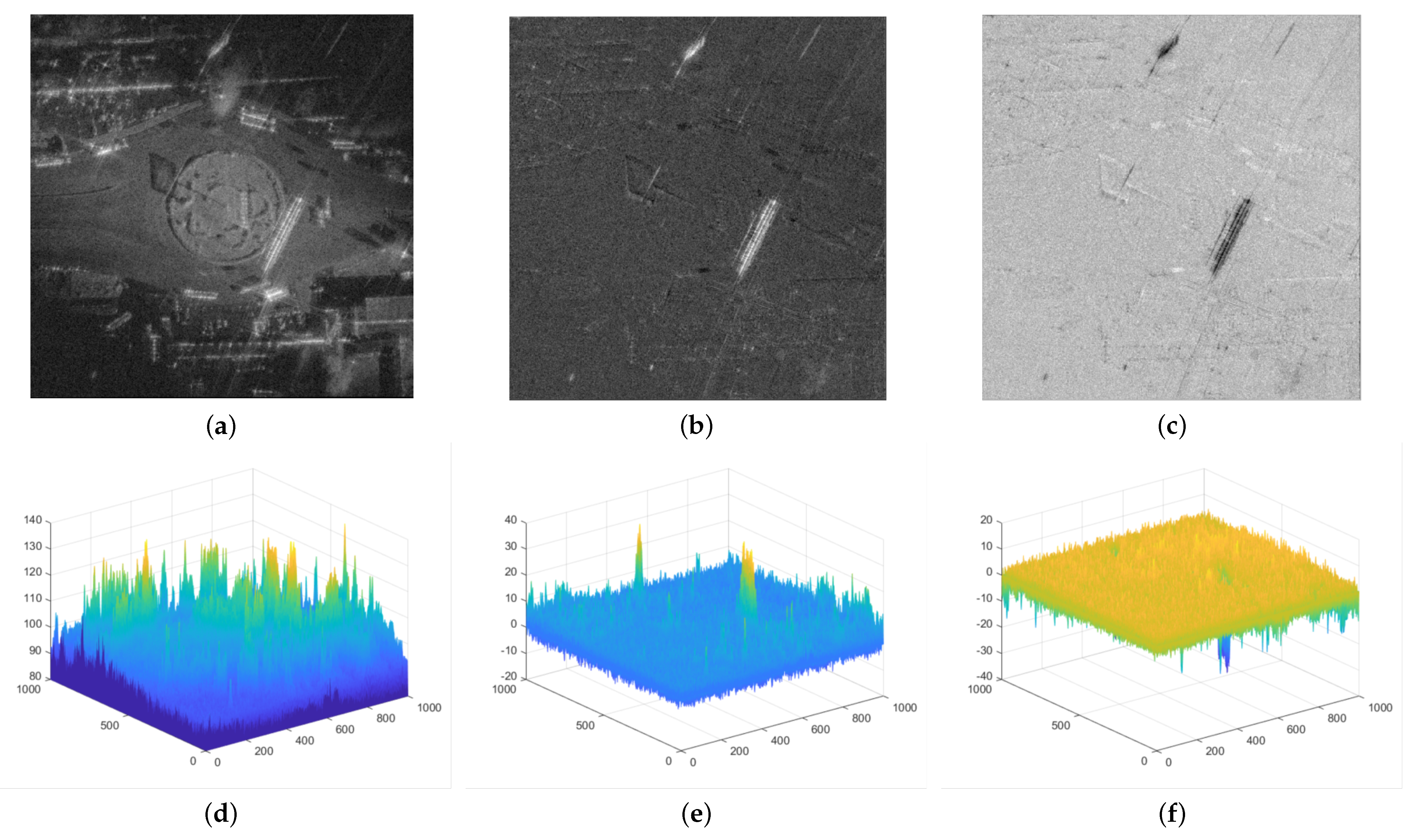
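The third contribution describes reusing computation across overlapping frames. The method's equations are not reproduced in this section, so the following is only a minimal sketch of one common way to do this with time-domain BP, under assumptions that are ours rather than the authors': all frames are formed on one fixed ground grid (z = 0), each frame spans `frame_len` pulses, consecutive frames start `step` pulses apart, and `frame_len` is a multiple of `step`. Each block of `step` pulses is back-projected once into a sub-image, and every frame is then a sliding-window sum of consecutive sub-images. The function names (`backproject`, `sequence_direct`, `sequence_reuse`), the point-target echoes and the 1° toy aperture are hypothetical; only the 94 GHz carrier, 900 MHz bandwidth, 500 m flight height and 500 m flight radius come from the parameter table.

```python
import numpy as np

C = 3e8  # speed of light (m/s), rounded


def backproject(echoes, positions, ranges, grid_x, grid_y, wavelength):
    """Time-domain back-projection of range-compressed pulses onto a flat (z = 0) grid."""
    X, Y = np.meshgrid(grid_x, grid_y)
    image = np.zeros(X.shape, dtype=complex)
    for echo, (px, py, pz) in zip(echoes, positions):
        r = np.sqrt((X - px) ** 2 + (Y - py) ** 2 + pz ** 2)
        # Linearly interpolate the range profile at every pixel's slant range.
        sample = np.interp(r, ranges, echo.real) + 1j * np.interp(r, ranges, echo.imag)
        # Compensate the two-way propagation phase and accumulate coherently.
        image += sample * np.exp(1j * 4 * np.pi * r / wavelength)
    return image


def sequence_direct(echoes, positions, frame_len, step, **grid):
    """Baseline: back-project every frame's full sub-aperture from scratch."""
    return [
        backproject(echoes[s:s + frame_len], positions[s:s + frame_len], **grid)
        for s in range(0, len(echoes) - frame_len + 1, step)
    ]


def sequence_reuse(echoes, positions, frame_len, step, **grid):
    """Back-project each block of `step` pulses once, then slide a window of blocks."""
    assert frame_len % step == 0, "sketch assumes frame_len is a multiple of step"
    k = frame_len // step
    n_blocks = len(echoes) // step
    blocks = [
        backproject(echoes[b * step:(b + 1) * step], positions[b * step:(b + 1) * step], **grid)
        for b in range(n_blocks)
    ]
    frame = sum(blocks[:k])
    frames = [frame]
    for b in range(k, n_blocks):
        # Add the newest sub-image and drop the oldest one.
        frame = frame + blocks[b] - blocks[b - k]
        frames.append(frame)
    return frames


# Toy circular-aperture data: one point target at the scene origin (assumed values).
fc, bw = 94e9, 900e6
wavelength = C / fc
n_pulses = 64
angles = np.deg2rad(np.linspace(0.0, 1.0, n_pulses))
positions = np.stack(
    [500 * np.cos(angles), 500 * np.sin(angles), np.full(n_pulses, 500.0)], axis=1
)
ranges = np.linspace(700.0, 715.0, 3001)
echoes = np.array([
    np.sinc((ranges - np.sqrt(px**2 + py**2 + pz**2)) * 2 * bw / C)
    * np.exp(-1j * 4 * np.pi * np.sqrt(px**2 + py**2 + pz**2) / wavelength)
    for px, py, pz in positions
])
grid = dict(ranges=ranges, grid_x=np.linspace(-2, 2, 21),
            grid_y=np.linspace(-2, 2, 21), wavelength=wavelength)

direct = sequence_direct(echoes, positions, frame_len=16, step=4, **grid)
reused = sequence_reuse(echoes, positions, frame_len=16, step=4, **grid)
```

In this toy run both functions return the same 13 frames, but the direct approach back-projects 13 × 16 = 208 pulses, whereas the reuse approach back-projects each of the 64 pulses only once.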

2.2. Background and Foreground Separation

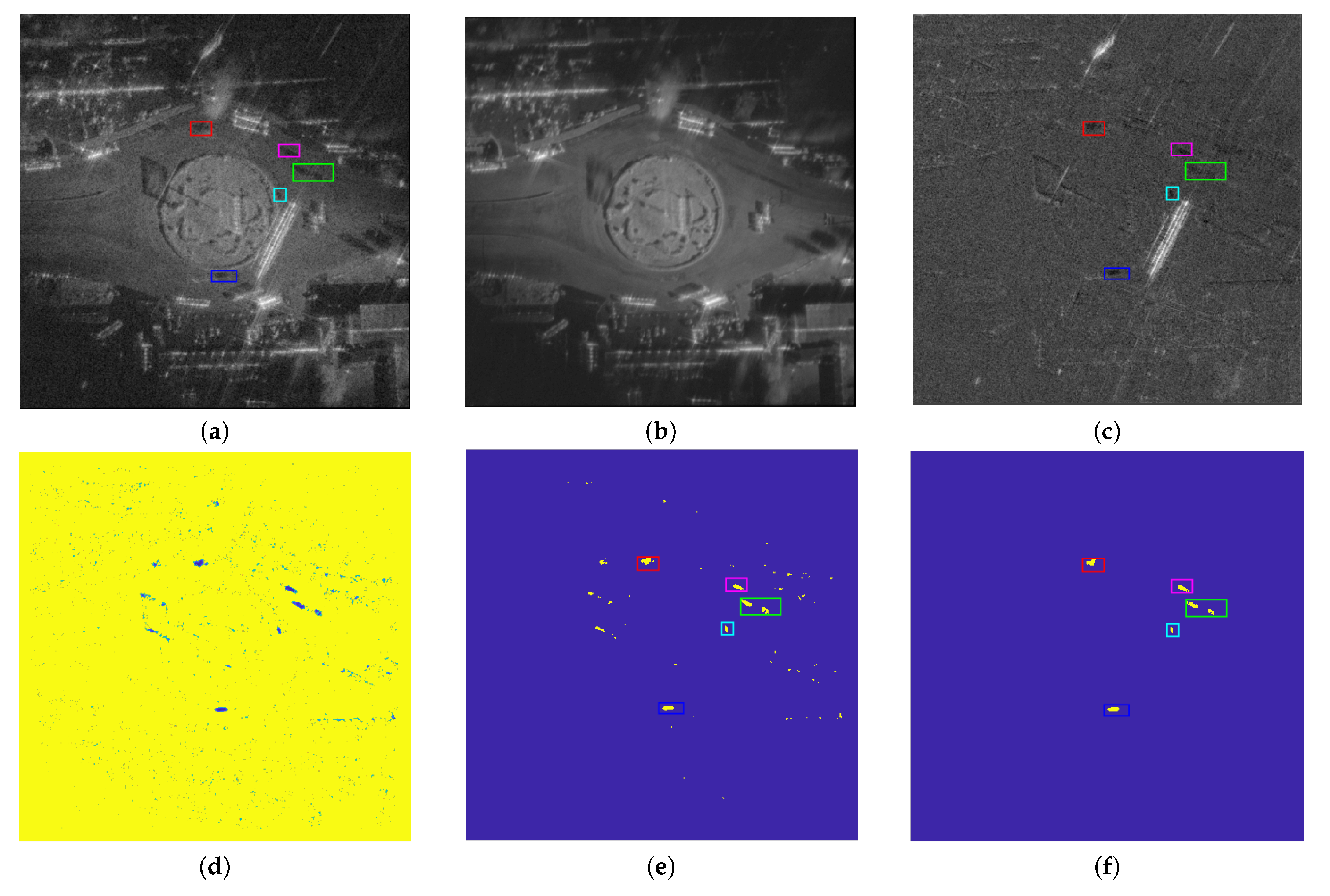
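The second contribution relies on the static background being low rank across the image sequence. A standard way to carry out such a decomposition is robust PCA solved with the inexact augmented Lagrange multiplier (IALM) method of Lin, Chen and Ma, which appears in the reference list; whether the authors use exactly this solver and these parameter values is an assumption here. The sketch stacks each frame as one column of a matrix D and splits D into a low-rank part L (background) and a sparse part S (foreground), updating S by soft thresholding and L by singular value thresholding. The names `rpca_ialm` and `soft_threshold` and the synthetic data (an identical background in every frame plus a dark 3 × 3 patch drifting along the diagonal) are hypothetical.

```python
import numpy as np


def soft_threshold(x, tau):
    """Element-wise shrinkage: the proximal operator of tau * ||x||_1."""
    return np.sign(x) * np.maximum(np.abs(x) - tau, 0.0)


def rpca_ialm(D, lam=None, tol=1e-7, max_iter=500):
    """Split D into low-rank L plus sparse S (robust PCA via inexact ALM)."""
    m, n = D.shape
    if lam is None:
        lam = 1.0 / np.sqrt(max(m, n))
    norm_two = np.linalg.norm(D, 2)                 # largest singular value
    dual_norm = max(norm_two, np.abs(D).max() / lam)
    Y = D / dual_norm                               # Lagrange multiplier initialisation
    mu, rho = 1.25 / norm_two, 1.5
    mu_max = mu * 1e7
    L = np.zeros_like(D)
    S = np.zeros_like(D)
    d_norm = np.linalg.norm(D, "fro")
    for _ in range(max_iter):
        # Sparse (foreground) update.
        S = soft_threshold(D - L + Y / mu, lam / mu)
        # Low-rank (background) update by singular value thresholding.
        U, sig, Vt = np.linalg.svd(D - S + Y / mu, full_matrices=False)
        L = (U * soft_threshold(sig, 1.0 / mu)) @ Vt
        residual = D - L - S
        Y = Y + mu * residual
        mu = min(mu * rho, mu_max)
        if np.linalg.norm(residual, "fro") / d_norm < tol:
            break
    return L, S


# Synthetic sequence: static background plus one moving dark shadow (assumed data).
rng = np.random.default_rng(0)
n_frames, h, w = 30, 20, 20
background = rng.uniform(1.0, 2.0, size=(h, w))
frames = np.repeat(background[None], n_frames, axis=0)
shadow = np.zeros(frames.shape, dtype=bool)
for t in range(n_frames):
    p = t * 17 // n_frames                          # patch drifts along the diagonal
    shadow[t, p:p + 3, p:p + 3] = True
frames[shadow] *= 0.1                               # shadow at 10% of background intensity

D = frames.reshape(n_frames, -1).T                  # pixels x frames, one column per frame
L, S = rpca_ialm(D)
```

In this toy data the recovered L matches the static background, and the shadow pixels appear with negative values in S because the shadow is darker than the background it replaces.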

2.3. Multi-Scale Neighborhood Similarity for Shadow Detection
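The first contribution states that a moving target's shadow is highly similar between the original image and its foreground image, and that detection also uses intensity. The exact similarity measure and scales are not given in this section, so the following is a hypothetical stand-in rather than the authors' formulation: it computes windowed normalized cross-correlation (NCC) at several window sizes, keeps the maximum over scales, and accepts a pixel only if that similarity is high and the original intensity is low. The function names, the window sizes (5, 9, 13), both thresholds, and taking the foreground image as the original minus the background are all assumptions for illustration.

```python
import numpy as np


def box_mean(img, k):
    """Mean over a k x k window (k odd) centred on each pixel, with edge padding."""
    pad = k // 2
    p = np.pad(img, pad, mode="edge")
    c = np.pad(np.cumsum(np.cumsum(p, axis=0), axis=1), ((1, 0), (1, 0)))
    h, w = img.shape
    total = c[k:k + h, k:k + w] - c[:h, k:k + w] - c[k:k + h, :w] + c[:h, :w]
    return total / (k * k)


def local_ncc(a, b, k, eps=1e-12):
    """Windowed normalized cross-correlation between images a and b."""
    ma, mb = box_mean(a, k), box_mean(b, k)
    cov = box_mean(a * b, k) - ma * mb
    va = np.maximum(box_mean(a * a, k) - ma ** 2, 0.0)   # clip tiny negative round-off
    vb = np.maximum(box_mean(b * b, k) - mb ** 2, 0.0)
    return cov / np.sqrt(va * vb + eps)


def detect_shadows(original, foreground, scales=(5, 9, 13), sim_thresh=0.5, int_thresh=0.5):
    """Hypothetical detector: high multi-scale similarity AND low original intensity."""
    similarity = np.max([local_ncc(original, foreground, k) for k in scales], axis=0)
    return (similarity > sim_thresh) & (original < int_thresh)


# Toy frame: textured static background with one dark 5 x 5 shadow (assumed data).
rng = np.random.default_rng(1)
background = rng.uniform(1.0, 2.0, size=(32, 32))
shadow = np.zeros(background.shape, dtype=bool)
shadow[12:17, 12:17] = True
original = np.where(shadow, 0.1, background)
foreground = original - background                      # zero wherever the scene is static
mask = detect_shadows(original, foreground)
```

Where the scene is static the foreground image is zero, so the NCC there is close to zero; around the shadow the dark dip appears in both images and the NCC is high. Pixels next to the shadow can also score high at the larger scales, which is why the intensity test is needed to keep only the dark pixels.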

3. Results

3.1. Image Sequence Generation

3.2. Detection Results on Real W-Band Data

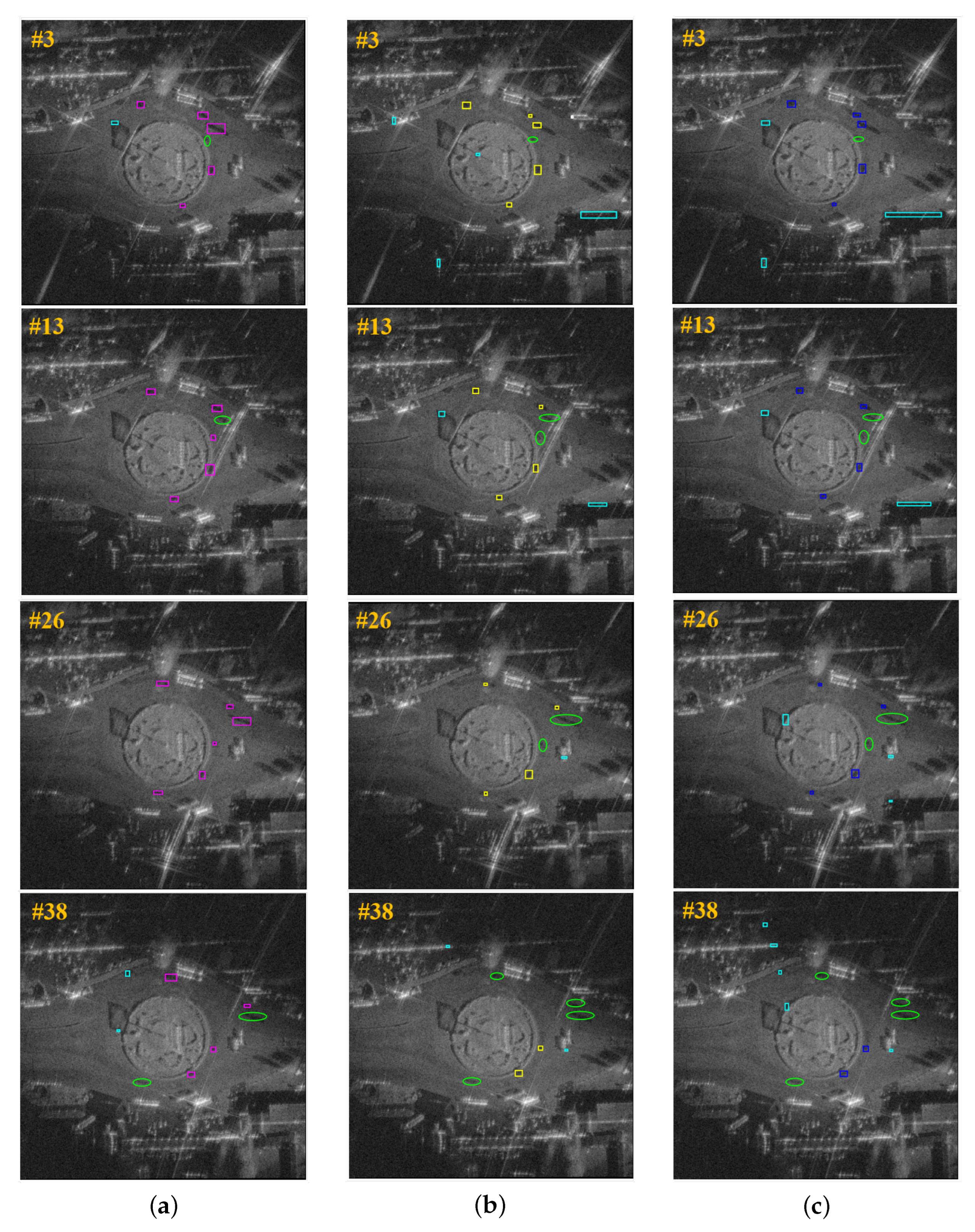

3.3. Performance Comparison on W-Band Data

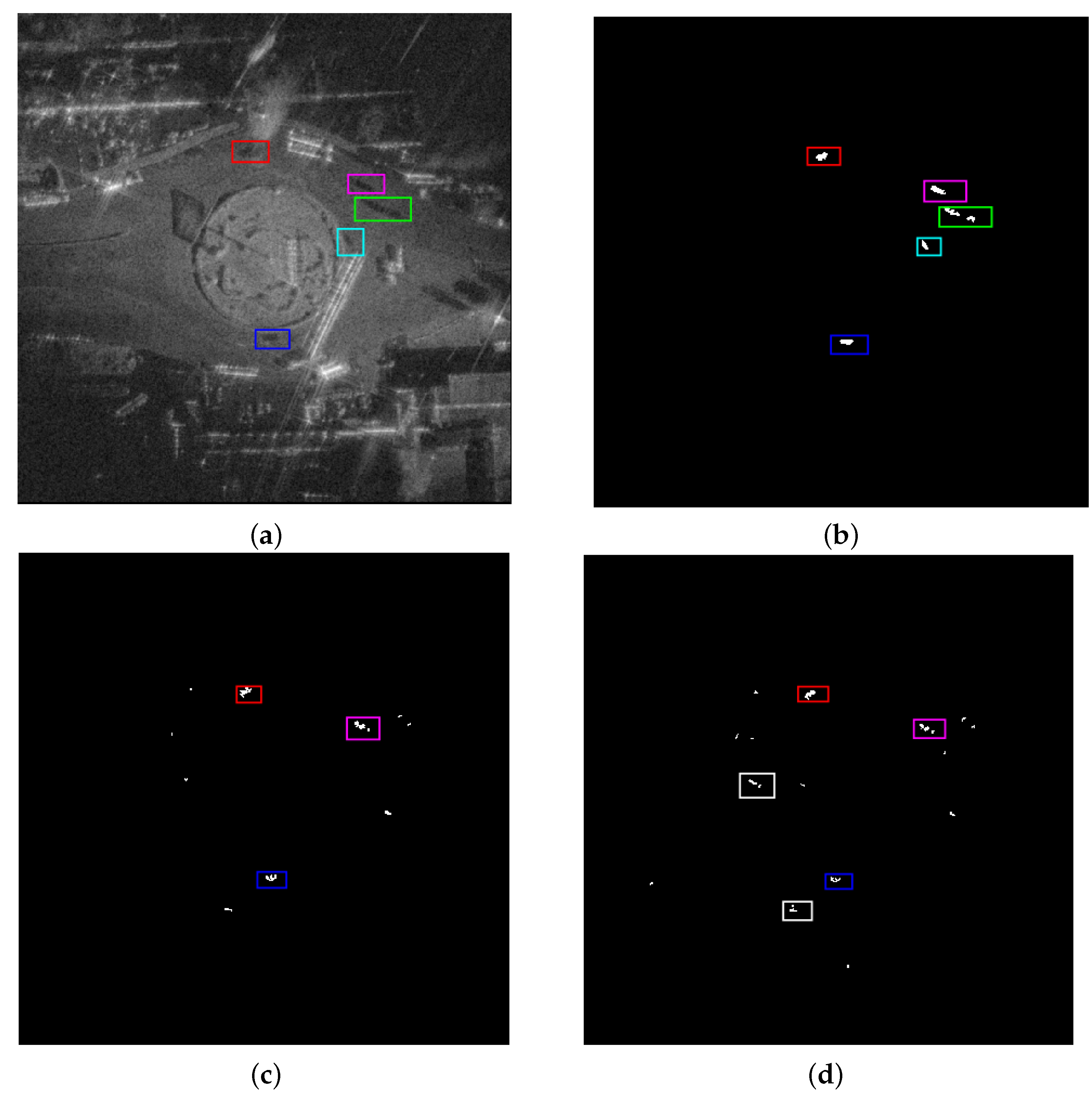

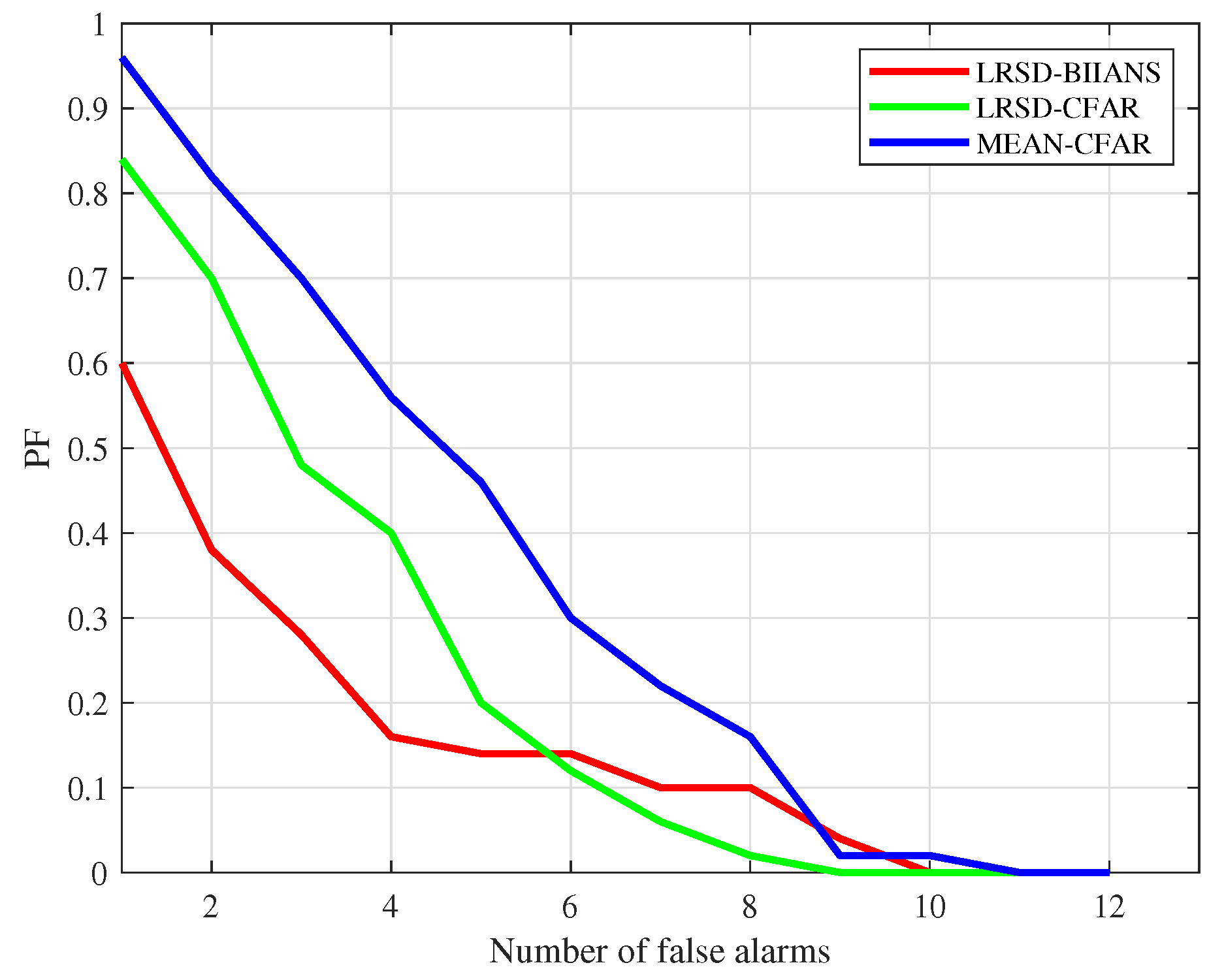

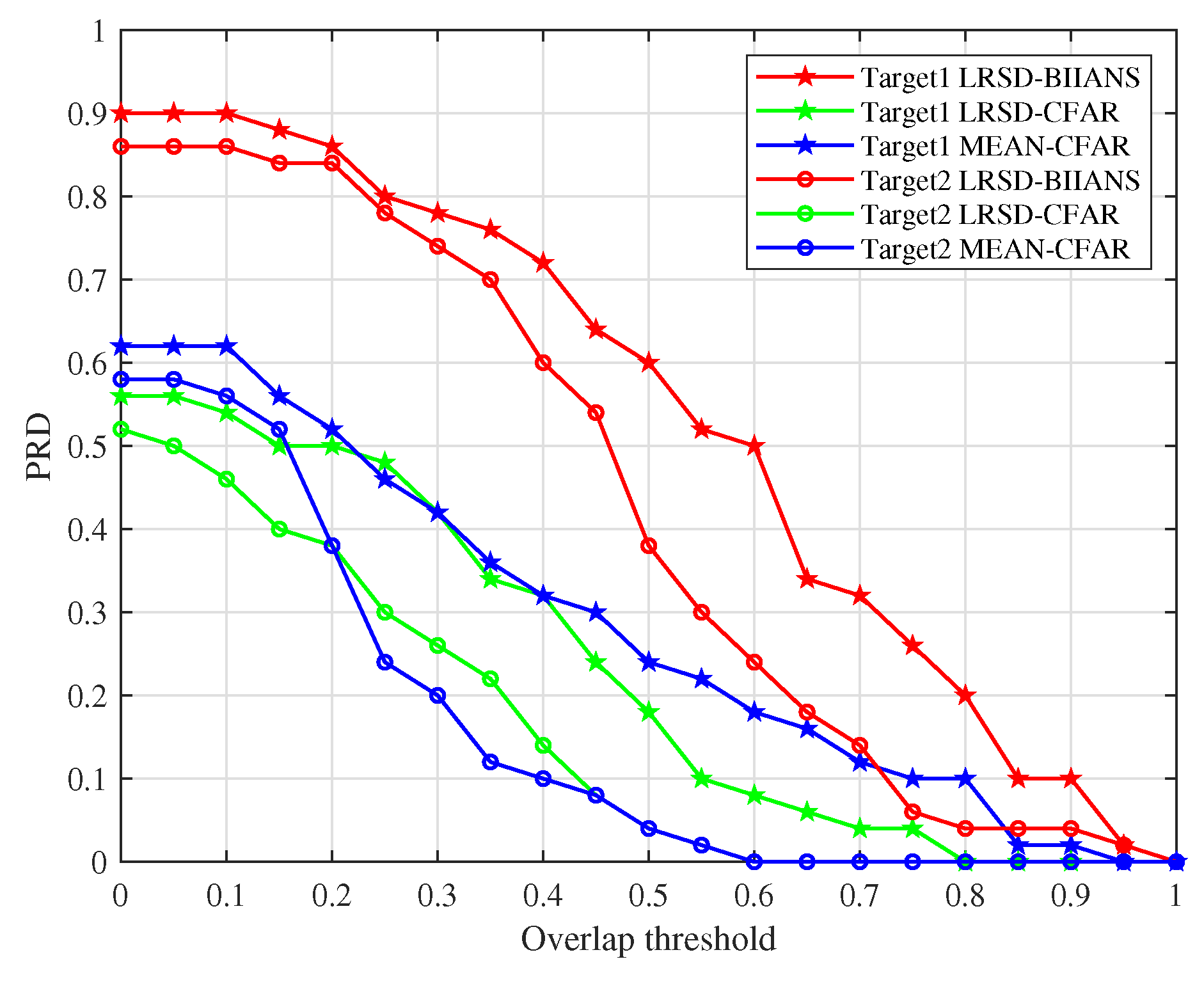

3.3.1. Detection Results of Different Methods

3.3.2. Quantitative Analysis

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Balaji, B. A VideoSAR mode for the X-band wideband experimental airborne radar. In Proceedings of the Algorithms for Synthetic Aperture Radar Imagery XVII, Orlando, FL, USA, 8–9 April 2010.

2. Kim, S.H.; Fan, R.; Dominski, F. ViSAR: A 235 GHz radar for airborne applications. In Proceedings of the 2018 IEEE Radar Conference (RadarConf18), Oklahoma City, OK, USA, 23–27 April 2018; pp. 1549–1554.

3. Palm, S.; Sommer, R.; Janssen, D.; Tessmann, A.; Stilla, U. Airborne Circular W-Band SAR for Multiple Aspect Urban Site Monitoring. IEEE Trans. Geosci. Remote Sens. 2019, 57, 6996–7016.

4. Damini, A.; Mantle, V.; Davidson, G. A new approach to coherent change detection in VideoSAR imagery using stack averaged coherence. In Proceedings of the Radar Conference (RADAR), Ottawa, ON, Canada, 29 April–3 May 2013.

5. Liu, B.; Zhang, X.; Tang, K.; Liu, M.; Liu, L. Spaceborne Video-SAR moving target surveillance system. In Proceedings of the 2016 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; pp. 2348–2351.

6. Jahangir, M. Moving target detection for synthetic aperture radar via shadow detection. In Proceedings of the 2007 IET International Conference on Radar Systems, Edinburgh, UK, 15–18 October 2007.

7. Xu, H.; Yang, Z.; Chen, G.; Liao, G.; Tian, M. A Ground Moving Target Detection Approach Based on Shadow Feature With Multichannel High-Resolution Synthetic Aperture Radar. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1572–1576.

8. Xu, H.; Yang, Z.; Tian, M.; Sun, Y.; Liao, G. An Extended Moving Target Detection Approach for High-Resolution Multichannel SAR-GMTI Systems Based on Enhanced Shadow-Aided Decision. IEEE Trans. Geosci. Remote Sens. 2018, 56, 715–729.

9. Cerutti-Maori, D.; Sikaneta, I. A Generalization of DPCA Processing for Multichannel SAR/GMTI Radars. IEEE Trans. Geosci. Remote Sens. 2013, 51, 560–572.

10. Budillon, A.; Schirinzi, G. Performance Evaluation of a GLRT Moving Target Detector for TerraSAR-X Along-Track Interferometric Data. IEEE Trans. Geosci. Remote Sens. 2015, 53, 3350–3360.

11. Raney, R.K. Synthetic Aperture Imaging Radar and Moving Targets. IEEE Trans. Aerosp. Electron. Syst. 1971, AES-7, 499–505.

12. Raynal, A.M.; Bickel, D.L.; Doerry, A.W. Stationary and Moving Target Shadow Characteristics in Synthetic Aperture Radar. In Proceedings of Radar Sensor Technology XVIII, SPIE Defense + Security, Baltimore, MD, USA, 5–9 May 2014; SPIE: Baltimore, MD, USA, 2014.

13. Liu, Z.; An, D.; Huang, X. Moving Target Shadow Detection and Global Background Reconstruction for VideoSAR Based on Single-Frame Imagery. IEEE Access 2019, 7, 42418–42425.

14. Ding, J.; Wen, L.; Zhong, C.; Loffeld, O. Video SAR Moving Target Indication Using Deep Neural Network. IEEE Trans. Geosci. Remote Sens. 2020, 58, 7194–7204.

15. Zhang, Y.; Yang, S.; Li, H.; Xu, Z. Shadow Tracking of Moving Target Based on CNN for Video SAR System. In Proceedings of the 2018 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Valencia, Spain, 22–27 July 2018; pp. 4399–4402.

16. Wen, L.; Ding, J.; Loffeld, O. Video SAR Moving Target Detection Using Dual Faster R-CNN. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 2984–2994.

17. Bao, J.; Zhang, X.; Zhang, T.; Xu, X. ShadowDeNet: A Moving Target Shadow Detection Network for Video SAR. Remote Sens. 2022, 14, 320.

18. Tian, X.; Liu, J.; Mallick, M.; Huang, K. Simultaneous Detection and Tracking of Moving-Target Shadows in ViSAR Imagery. IEEE Trans. Geosci. Remote Sens. 2021, 59, 1182–1199.

19. Qin, S.; Ding, J.; Wen, L.; Jiang, M. Joint Track-Before-Detect Algorithm for High-Maneuvering Target Indication in Video SAR. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 8236–8248.

20. Luan, J.; Wen, L.; Ding, J. Multifeature Joint Detection of Moving Target in Video SAR. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

21. Xu, X.; Zhang, X.; Zhang, T.; Yang, Z.; Shi, J.; Zhan, X. Shadow-Background-Noise 3D Spatial Decomposition Using Sparse Low-Rank Gaussian Properties for Video-SAR Moving Target Shadow Enhancement. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

22. Wen, L.; Ding, J.; Cheng, Y.; Xu, Z. Dually Supervised Track-Before-Detect Processing of Multichannel Video SAR Data. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–13.

23. Yang, X.; Shi, J.; Chen, T.; Hu, Y.; Zhou, Y.; Zhang, X.; Wei, S.; Wu, J. Fast Multi-Shadow Tracking for Video-SAR Using Triplet Attention Mechanism. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–12.

24. Zhao, B.; Han, Y.; Wang, H.; Tang, L.; Liu, X.; Wang, T. Robust Shadow Tracking for Video SAR. IEEE Geosci. Remote Sens. Lett. 2021, 18, 821–825.

25. Zhong, C.; Ding, J.; Zhang, Y. Video SAR Moving Target Tracking Using Joint Kernelized Correlation Filter. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 1481–1493.

26. Wang, H.; Chen, Z.; Zheng, S. Preliminary Research of Low-RCS Moving Target Detection Based on Ka-Band Video SAR. IEEE Geosci. Remote Sens. Lett. 2017, 14, 811–815.

27. Zhang, Y.; Zhu, D.; Xiang, Y.U.; Mao, X. Approach to Moving Targets Shadow Detection for Video SAR. J. Electron. Inf. Technol. 2017, 39, 2197–2202.

28. Lei, L.; Zhu, D. An approach for detecting moving target in VideoSAR imagery sequence. In Proceedings of the 2016 CIE International Conference on Radar (RADAR), Guangzhou, China, 10–13 October 2016.

29. Kaplan, L.M. Analysis of multiplicative speckle models for template-based SAR ATR. IEEE Trans. Aerosp. Electron. Syst. 2001, 37, 1424–1432.

30. Tan, X.; Li, J. Range-Doppler Imaging via Forward-Backward Sparse Bayesian Learning. IEEE Trans. Signal Process. 2010, 58, 2421–2425.

31. Runge, H.; Bamler, R. A Novel High Precision SAR Focussing Algorithm Based On Chirp Scaling. In Proceedings of the International Geoscience & Remote Sensing Symposium, Houston, TX, USA, 26–29 May 1992; pp. 372–375.

32. Deming, R.; Best, M.; Farrell, S. Polar Format Algorithm for SAR Imaging with Matlab. In Proceedings of Algorithms for Synthetic Aperture Radar Imagery XXI, SPIE Defense + Security, Baltimore, MD, USA, 5–9 May 2014; SPIE: Baltimore, MD, USA, 2014.

33. Desai, M.; Jenkins, W.K. Convolution backprojection image reconstruction for spotlight mode synthetic aperture radar. IEEE Trans. Image Process. 1992, 1, 505–517.

34. Zhang, Z.; Shen, W.; Lin, Y.; Hong, W. Single-Channel Circular SAR Ground Moving Target Detection Based on LRSD and Adaptive Threshold Detector. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

35. Wright, J.; Ganesh, A.; Rao, S.R.; Ma, Y. Robust Principal Component Analysis: Exact Recovery of Corrupted Low-Rank Matrices. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 7–10 December 2009.

36. Vaswani, N.; Chi, Y.; Bouwmans, T. Rethinking PCA for Modern Data Sets: Theory, Algorithms, and Applications. Proc. IEEE 2018, 106, 1274–1276.

37. Oreifej, O.; Li, X.; Shah, M. Simultaneous Video Stabilization and Moving Object Detection in Turbulence. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 450–462.

38. Pu, W.; Wu, J. OSRanP: A novel way for Radar Imaging Utilizing Joint Sparsity and Low-rankness. IEEE Trans. Comput. Imaging 2020, 6, 868–882.

39. Candès, E.J.; Li, X.; Ma, Y.; Wright, J. Robust Principal Component Analysis? J. ACM 2011, 58, 11:1–11:37.

40. Lin, Z.; Chen, M.; Ma, Y. The Augmented Lagrange Multiplier Method for Exact Recovery of Corrupted Low-Rank Matrices. arXiv 2010, arXiv:1009.5055.

41. Cai, J.F.; Candès, E.; Shen, Z. A Singular Value Thresholding Algorithm for Matrix Completion. SIAM J. Optim. 2010, 20, 1956–1982.

42. Zhang, S.; Bogus, S.M.; Lippitt, C.D.; Kamat, V.; Lee, S. Implementing Remote-Sensing Methodologies for Construction Research: An Unoccupied Airborne System Perspective. J. Constr. Eng. Manag. 2022, 148, 03122005.

| Parameter | Notation | Value |
|---|---|---|
| Carrier Frequency | | 94 GHz |
| Bandwidth | | 900 MHz |
| Pulse Repetition Frequency | | 10,000 Hz |
| Flight Height | H | 500 m |
| Flight Radius | R | 500 m |
| Flight Velocity | v | 36 m/s |
| Grazing Angle | | 45° |
| Transmission Mode | | FMCW |
| Polarization | P | VV |
| Azimuth Beamwidth | | 6° |
| Pixel Spacing | | 20 cm |
| Scene Size | | 74 m × 74 m |
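As a quick consistency check on the parameter table, a few derived quantities can be computed from the listed values. This sketch assumes that the scene centre lies at the centre of the circular flight path (so the grazing angle is atan(H/R)) and uses the usual approximations c/(2B) for the slant-range resolution and 4v·sin(θ/2)/λ for the Doppler bandwidth spanned by the azimuth beam; the variable names are ours.

```python
import math

C = 299_792_458.0  # speed of light (m/s)

# Values copied from the parameter table.
fc = 94e9                     # carrier frequency (Hz)
bw = 900e6                    # bandwidth (Hz)
prf = 10_000.0                # pulse repetition frequency (Hz)
height = 500.0                # flight height H (m)
radius = 500.0                # flight radius R (m)
v = 36.0                      # flight velocity (m/s)
beamwidth = math.radians(6.0) # azimuth beamwidth (rad)
pixel = 0.20                  # pixel spacing (m)
scene = 74.0                  # scene side length (m)

wavelength = C / fc
slant_res = C / (2 * bw)                                   # slant-range resolution
grazing = math.degrees(math.atan2(height, radius))         # assumes scene centre = orbit centre
ground_res = slant_res / math.cos(math.radians(grazing))   # projected to the ground
doppler_bw = 4 * v * math.sin(beamwidth / 2) / wavelength  # azimuth Doppler bandwidth (Hz)
pixels_per_side = scene / pixel
orbit_period = 2 * math.pi * radius / v                    # time for one full circle (s)
```

With these values the wavelength is about 3.19 mm, the slant-range resolution about 0.167 m (about 0.236 m on the ground), the geometry reproduces the 45° grazing angle, the scene is 370 pixels on a side, and the Doppler bandwidth of about 2.36 kHz lies well below the 10 kHz PRF.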

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, Z.; Shen, W.; Xia, L.; Lin, Y.; Shang, S.; Hong, W. Video SAR Moving Target Shadow Detection Based on Intensity Information and Neighborhood Similarity. Remote Sens. 2023, 15, 1859. https://doi.org/10.3390/rs15071859
