Underwater 3D Scanning System for Cultural Heritage Documentation

Abstract

1. Introduction

2. Materials and Methods

2.1. Underwater Cultural Heritage Documentation

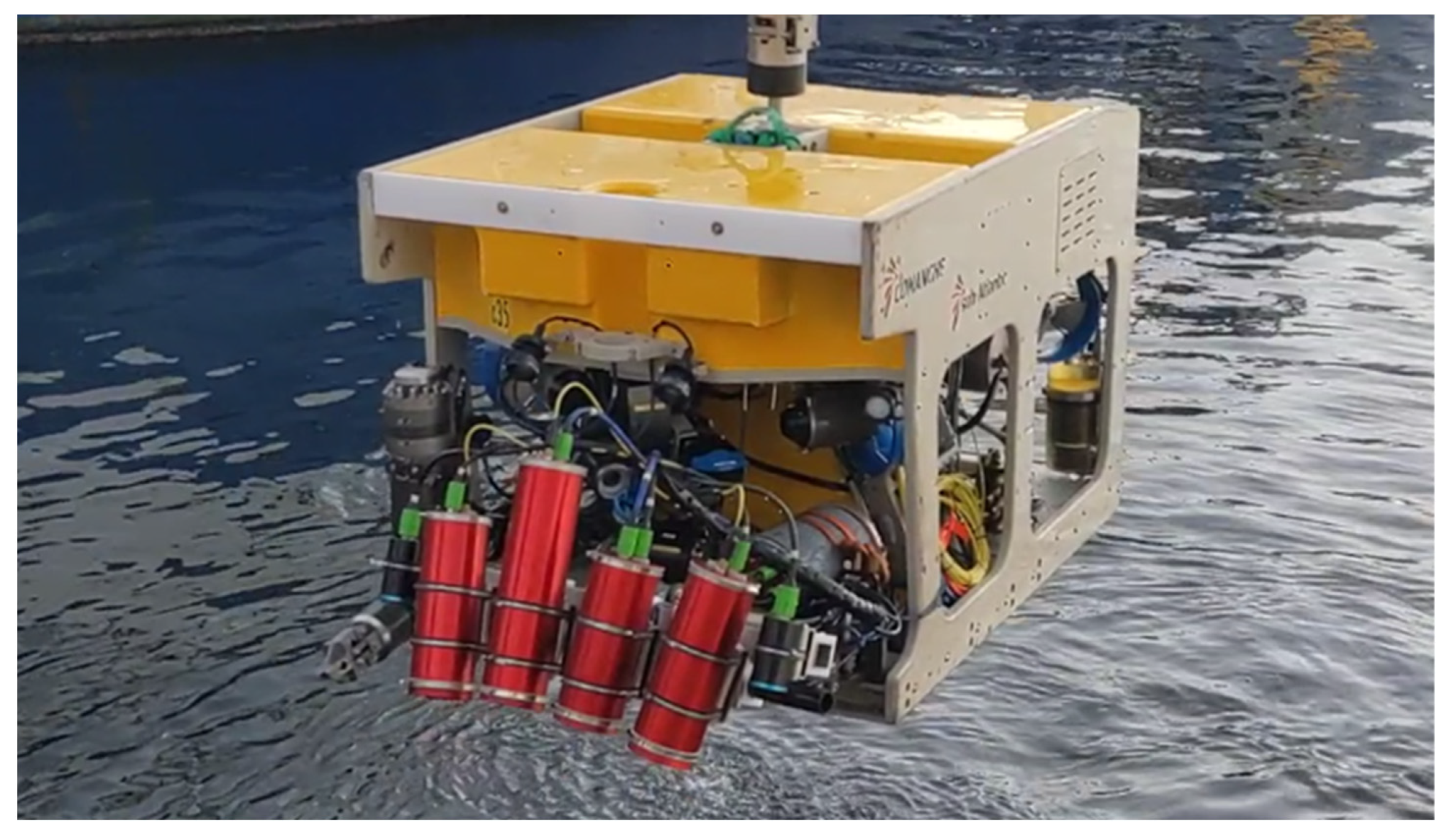

2.2. Hardware Setup

2.3. Scanning and Measurement Principles

2.3.1. Stereo Camera and Structured Illumination

2.3.2. Visual Odometry and IMU

2.3.3. Color Mapping onto 3D Data

2.4. Calibration and Error Estimation

2.4.1. Camera Calibration

2.4.2. Estimation of Systematic Measurement Errors

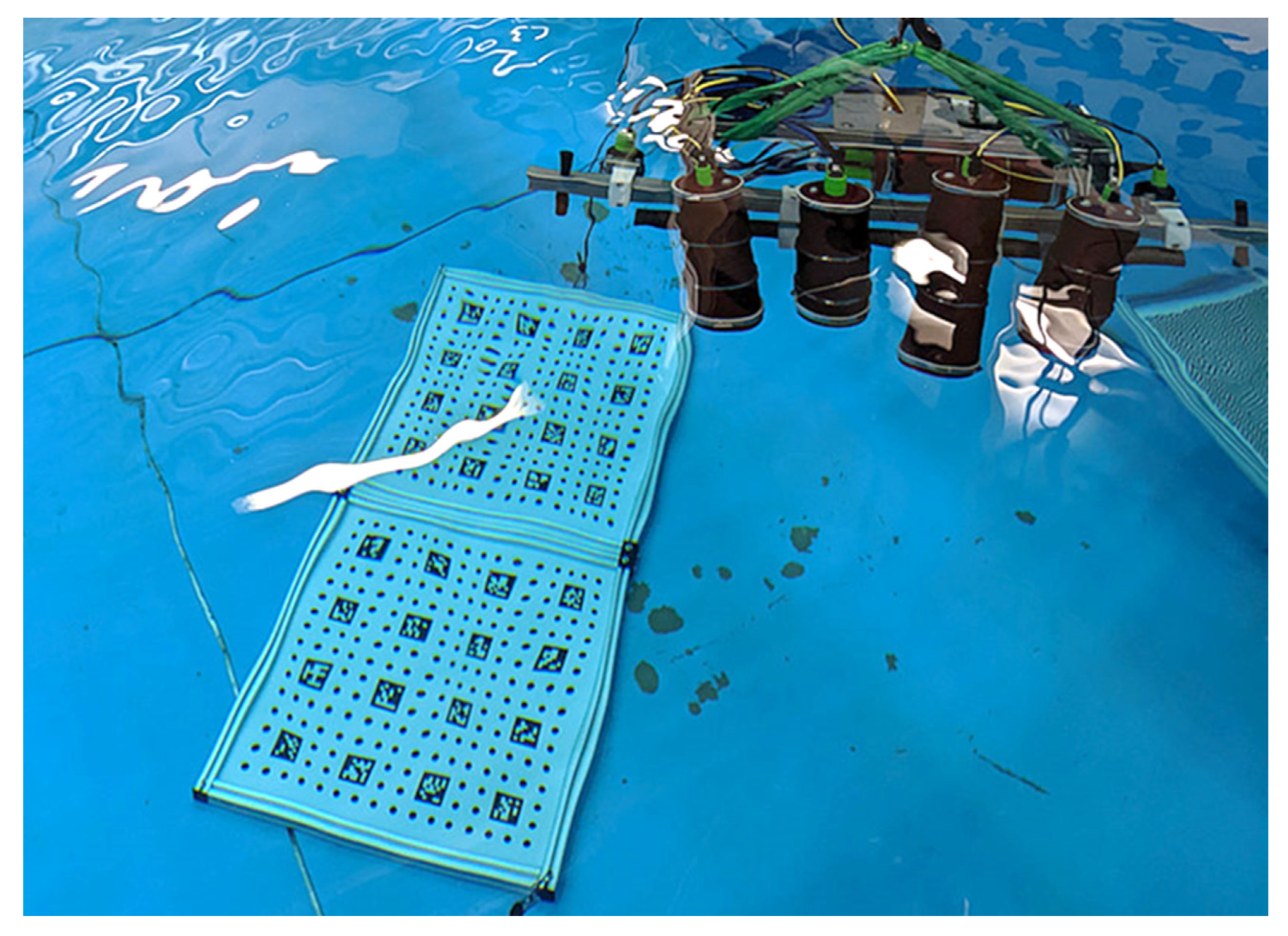

2.5. Underwater Test Scenarios

3. Results

3.1. Measurement Accuracy of the Structured Light Scanner

- Determination of the length of a calibrated ball-bar, defined as the distance between the sphere center points, depending on the measurement distance in repeated measurements;

- Determination of the standard deviation of the sphere surface points;

- Determination of the surface points of a plane normal (a flat reference artifact, 1000 mm × 200 mm), with evaluation of the flatness of the measured plane surface and the local standard deviation of the measured surface points.

- Measurements in clear freshwater yielded very good accuracy, comparable to that of 3D scanners for air applications;

- Measurements in seawater yielded acceptable results (the errors were approximately a factor of two larger than those obtained from the water basin measurements).
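The three evaluation quantities listed above (ball-bar length between sphere centers, standard deviation of sphere surface points, and flatness of a plane) can be illustrated with a minimal sketch. It is not the evaluation software used in this work: the algebraic least-squares sphere fit, the SVD plane fit, the function names, the synthetic point clouds, the sphere radius of 25 mm, and the noise level of 0.05 mm are all assumptions chosen for illustration.

```python
import numpy as np


def fit_sphere(points):
    """Algebraic least-squares sphere fit; returns (center, radius)."""
    p = np.asarray(points, dtype=float)
    # |x|^2 = 2 c.x + (r^2 - |c|^2) is linear in c and d = r^2 - |c|^2
    A = np.hstack([2.0 * p, np.ones((len(p), 1))])
    b = np.sum(p ** 2, axis=1)
    sol, *_ = np.linalg.lstsq(A, b, rcond=None)
    center = sol[:3]
    radius = float(np.sqrt(sol[3] + center @ center))
    return center, radius


def sphere_residual_std(points, center, radius):
    """Standard deviation of the radial residuals of the surface points."""
    r = np.linalg.norm(np.asarray(points, dtype=float) - center, axis=1)
    return float(np.std(r - radius))


def ball_bar_length(points_a, points_b):
    """Distance between the fitted center points of the two spheres."""
    center_a, _ = fit_sphere(points_a)
    center_b, _ = fit_sphere(points_b)
    return float(np.linalg.norm(center_a - center_b))


def plane_flatness(points):
    """Best-fit plane via SVD; returns (peak-to-valley flatness, std)."""
    p = np.asarray(points, dtype=float)
    centroid = p.mean(axis=0)
    _, _, vt = np.linalg.svd(p - centroid, full_matrices=False)
    normal = vt[-1]  # direction of smallest variance
    d = (p - centroid) @ normal
    return float(d.max() - d.min()), float(d.std())


def synthetic_sphere(center, radius, n, rng, noise):
    """Hypothetical test data: noisy points on the scanner-facing hemisphere."""
    v = rng.normal(size=(n, 3))
    v /= np.linalg.norm(v, axis=1, keepdims=True)
    v[:, 2] = -np.abs(v[:, 2])
    r = radius + noise * rng.normal(size=(n, 1))
    return np.asarray(center, dtype=float) + r * v


# Synthetic demonstration (all values in mm, all values assumed)
rng = np.random.default_rng(0)
sphere_a = synthetic_sphere([0.0, 0.0, 1500.0], 25.0, 2000, rng, 0.05)
sphere_b = synthetic_sphere([500.0, 0.0, 1500.0], 25.0, 2000, rng, 0.05)

length = ball_bar_length(sphere_a, sphere_b)
center_a, radius_a = fit_sphere(sphere_a)
sigma = sphere_residual_std(sphere_a, center_a, radius_a)

# Plane of 1000 mm x 200 mm with noise along its normal
xy = rng.uniform([0.0, 0.0], [1000.0, 200.0], size=(2000, 2))
plane = np.column_stack([xy, 1500.0 + 0.05 * rng.normal(size=2000)])
flatness, plane_std = plane_flatness(plane)

print(f"ball-bar length: {length:.3f} mm")
print(f"sphere point std: {sigma:.3f} mm")
print(f"flatness: {flatness:.3f} mm, plane point std: {plane_std:.3f} mm")
```

Repeating the length computation for scans taken at several measurement distances would give the distance-dependent length values referred to in the first criterion. The peak-to-valley flatness is sensitive to outliers, so a real evaluation would likely filter the points first.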

3.2. Color Camera Reconstruction

3.3. Merging of Single Scans

3.4. System Performance

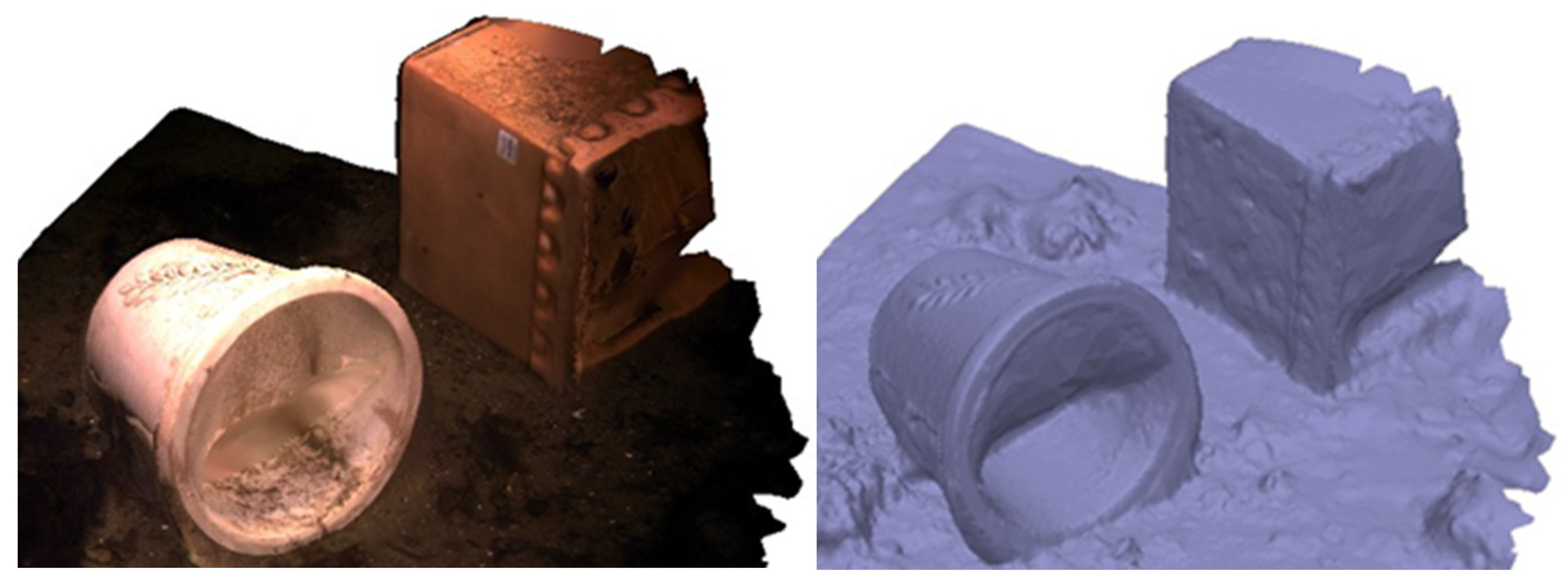

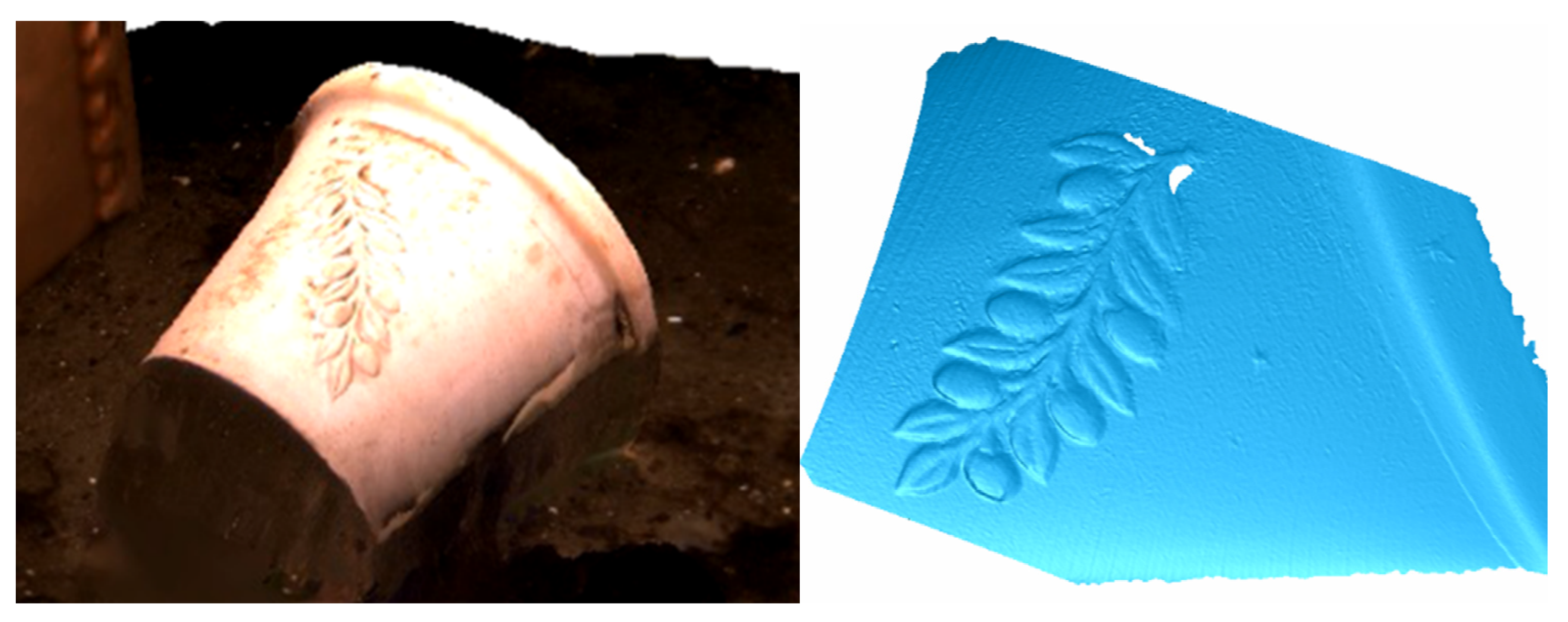

3.5. Examples of Object Reconstruction

3.5.1. Site Mapping

3.5.2. Object Reconstruction

4. Discussion and Conclusions

4.1. Evaluation of the Structured Light Scanner for Cultural Heritage Applications

4.2. Potential Application Scenarios

4.3. Suitability of the 3D Scanning System for Cultural Heritage Documentation Tasks

4.4. Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| S1 (water basin): Distance [m] | S1 (water basin): Length [mm] | S1 (water basin): n | S2 (offshore): Distance [m] | S2 (offshore): Length [mm] | S2 (offshore): n |
|---|---|---|---|---|---|
| 1.54 ± 0.00 | 497.602 ± 0.030 | 6 | 1.16 ± 0.02 | 499.655 ± 0.105 | 10 |
| 1.94 ± 0.01 | 497.873 ± 0.040 | 6 | 1.28 ± 0.01 | 500.067 ± 0.186 | 10 |
| 2.24 ± 0.00 | 498.144 ± 0.037 | 5 | 1.57 ± 0.03 | 501.716 ± 0.167 | 10 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bräuer-Burchardt, C.; Munkelt, C.; Bleier, M.; Heinze, M.; Gebhart, I.; Kühmstedt, P.; Notni, G. Underwater 3D Scanning System for Cultural Heritage Documentation. Remote Sens. 2023, 15, 1864. https://doi.org/10.3390/rs15071864
