Masked Graph Convolutional Network for Small Sample Classification of Hyperspectral Images

Abstract

1. Introduction

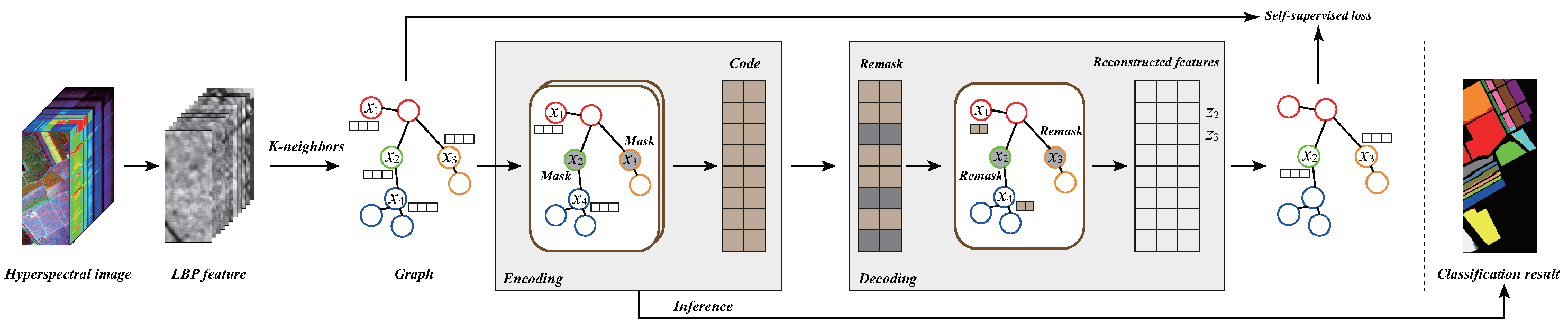

- A graph-based self-supervised learning method built on rotation-invariant uniform local binary pattern (RULBP) features is proposed to address the small sample problem in hyperspectral image (HSI) classification. The training objective is to reconstruct masked features, so training requires no label information.

- The RULBP features of the HSI are used to compute the k-nearest neighbors when constructing the graph, so that the deep model can better exploit the spatial–spectral information of HSIs.

- Extensive classification experiments on three hyperspectral datasets verify the effectiveness of the proposed method for small sample classification.
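
The two ideas in the contributions above, a k-nearest-neighbor graph built from per-pixel features and label-free training by reconstructing masked features, can be illustrated with a minimal NumPy sketch. Everything specific here is an assumption made for illustration and is not taken from the paper: the function names, the Euclidean distance, zero-filling of masked nodes, the single-layer encoder and decoder, and the mean squared error loss. The input `features` stands in for RULBP feature vectors, one row per pixel.

```python
import numpy as np


def knn_graph(features, k):
    """Symmetric k-nearest-neighbor adjacency from row-wise feature vectors."""
    n = features.shape[0]
    # Pairwise squared Euclidean distances (assumed metric).
    sq = np.sum(features ** 2, axis=1)
    dist = sq[:, None] + sq[None, :] - 2.0 * features @ features.T
    np.fill_diagonal(dist, np.inf)          # a node is not its own neighbor
    nbrs = np.argsort(dist, axis=1)[:, :k]  # indices of the k closest nodes
    adj = np.zeros((n, n))
    adj[np.repeat(np.arange(n), k), nbrs.ravel()] = 1.0
    return np.maximum(adj, adj.T)           # make the graph undirected


def normalize_adjacency(adj):
    """D^{-1/2} (A + I) D^{-1/2}, the propagation matrix of a standard GCN layer."""
    a_hat = adj + np.eye(adj.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))
    return a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def masked_reconstruction_loss(features, adj_norm, w_enc, w_dec, mask_ratio, rng):
    """Hide a fraction of node features and score how well they are rebuilt."""
    n = features.shape[0]
    n_masked = max(1, int(round(mask_ratio * n)))
    masked = rng.choice(n, size=n_masked, replace=False)
    x = features.copy()
    x[masked] = 0.0                                  # assumed masking: zero-fill
    hidden = np.maximum(adj_norm @ x @ w_enc, 0.0)   # graph convolution + ReLU
    recon = adj_norm @ hidden @ w_dec                # linear graph decoder
    # The loss uses only the masked nodes; no class labels are involved.
    loss = np.mean((recon[masked] - features[masked]) ** 2)
    return loss, masked


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    feats = rng.normal(size=(50, 11))                # stand-in for RULBP features
    adj_norm = normalize_adjacency(knn_graph(feats, k=5))
    w_enc = rng.normal(scale=0.1, size=(11, 16))
    w_dec = rng.normal(scale=0.1, size=(16, 11))
    loss, _ = masked_reconstruction_loss(feats, adj_norm, w_enc, w_dec, 0.5, rng)
    print(f"masked reconstruction loss: {loss:.4f}")
```

In a real training loop the weights would be updated by gradient descent on this loss with an automatic differentiation framework; the sketch only evaluates the objective once to show which quantities enter it.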

2. Related Work

2.1. Early Research

2.2. Deep Learning-Based HSI Classification

3. Methodology

3.1. Workflow

3.2. RULBP Feature Extraction

3.3. Graph Construction by K-Neighbors

3.4. Graph-Based Self-Supervised Learning

4. Experimental Results

4.1. HSI Datasets

4.2. Experimental Settings

4.3. Results and Comparison

4.4. Influence of Labeled Training Samples

5. Analysis and Discussion

5.1. Influence of Different Feature Extraction Methods

5.2. Influence of Dimension of RULBP Features

5.3. Influence of Number of Neighbors

5.4. Influence of Mask Ratio

5.5. Execution Efficiency

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| | UP | IP | SA |
|---|---|---|---|
| Spatial size | | | |
| Spectral range (nm) | 430–860 | 400–2500 | 400–2500 |
| No. of bands | 103 | 200 | 204 |
| GSD (m) | 1.3 | 20 | 3.7 |
| Sensor type | ROSIS | AVIRIS | AVIRIS |
| Area | Pavia | Indiana | California |
| No. of classes | 9 | 16 | 16 |
| Labeled samples | 42,776 | 10,249 | 54,129 |

| UP | Supervised | | | | Unsupervised | | |
|---|---|---|---|---|---|---|---|
| Class | DFSL | RN-FSC | CDPM | Gia-CFSL | UML | SMF-UL | RULBP-MGCN |
| 1 | 90.79 ± 3.97 | 93.70 ± 2.41 | 70.74 ± 8.92 | 95.27 ± 2.24 | 88.87 ± 2.99 | 96.36 ± 0.98 | 93.19 ± 1.03 |
| 2 | 97.13 ± 1.65 | 94.09 ± 2.22 | 84.71 ± 5.42 | 96.79 ± 1.45 | 93.67 ± 1.14 | 97.89 ± 0.75 | 99.75 ± 0.34 |
| 3 | 71.83 ± 7.14 | 69.89 ± 5.64 | 80.38 ± 3.25 | 75.86 ± 5.48 | 88.09 ± 2.95 | 88.25 ± 2.12 | 90.54 ± 2.09 |
| 4 | 90.08 ± 10.94 | 91.39 ± 8.10 | 90.44 ± 5.62 | 79.84 ± 4.31 | 88.24 ± 2.36 | 68.62 ± 5.67 | 73.79 ± 3.12 |
| 5 | 99.53 ± 0.22 | 99.20 ± 0.42 | 98.61 ± 0.78 | 99.97 ± 0.03 | 97.81 ± 1.87 | 96.38 ± 1.07 | 100.00 ± 0.00 |
| 6 | 66.63 ± 14.14 | 59.74 ± 10.59 | 70.81 ± 8.97 | 64.25 ± 7.12 | 94.81 ± 1.24 | 91.37 ± 1.42 | 89.04 ± 1.15 |
| 7 | 84.72 ± 3.90 | 69.58 ± 7.92 | 90.81 ± 6.67 | 64.37 ± 7.41 | 94.77 ± 1.98 | 85.87 ± 2.69 | 82.57 ± 2.38 |
| 8 | 75.91 ± 6.28 | 78.72 ± 7.42 | 77.24 ± 5.47 | 87.75 ± 4.34 | 89.09 ± 4.67 | 87.51 ± 2.42 | 90.49 ± 2.97 |
| 9 | 96.83 ± 1.28 | 95.97 ± 2.10 | 98.49 ± 1.12 | 99.51 ± 0.37 | 93.16 ± 3.92 | 99.79 ± 0.17 | 73.55 ± 0.86 |
| OA | 85.77 ± 4.62 | 84.24 ± 3.93 | 82.83 ± 2.48 | 86.10 ± 1.92 | 90.41 ± 1.67 | 91.84 ± 1.39 | 92.29 ± 1.27 |
| AA | 85.94 ± 2.58 | 83.59 ± 3.62 | 84.47 ± 2.97 | 84.84 ± 1.88 | 91.06 ± 1.45 | 91.22 ± 1.58 | 88.10 ± 1.13 |
| κ × 100 | 81.77 ± 5.51 | 79.66 ± 4.81 | 76.76 ± 3.69 | 82.17 ± 1.99 | 88.41 ± 1.62 | 89.43 ± 2.19 | 90.01 ± 1.86 |

| IP | Supervised | | | | Unsupervised | | |
|---|---|---|---|---|---|---|---|
| Class | DFSL | RN-FSC | CDPM | Gia-CFSL | UML | SMF-UL | RULBP-MGCN |
| 1 | 60.02 ± 13.70 | 58.67 ± 14.12 | 98.26 ± 0.75 | 88.95 ± 3.74 | 90.01 ± 3.91 | 71.44 ± 10.90 | 90.20 ± 2.85 |
| 2 | 66.82 ± 6.76 | 63.75 ± 5.62 | 55.08 ± 8.64 | 72.80 ± 6.62 | 70.99 ± 6.36 | 74.43 ± 5.09 | 89.85 ± 4.87 |
| 3 | 66.71 ± 4.46 | 47.38 ± 9.72 | 62.14 ± 5.24 | 98.38 ± 0.77 | 79.10 ± 5.01 | 72.46 ± 6.05 | 71.08 ± 2.63 |
| 4 | 61.68 ± 6.48 | 46.69 ± 8.86 | 72.01 ± 4.92 | 97.45 ± 1.69 | 89.69 ± 4.56 | 73.23 ± 4.37 | 90.03 ± 3.99 |
| 5 | 90.83 ± 6.02 | 86.82 ± 5.95 | 84.91 ± 5.51 | 92.79 ± 2.51 | 89.34 ± 3.63 | 84.28 ± 6.32 | 94.17 ± 4.96 |
| 6 | 98.33 ± 0.84 | 93.32 ± 3.33 | 91.68 ± 2.98 | 95.81 ± 1.42 | 96.14 ± 1.24 | 96.89 ± 2.14 | 95.89 ± 2.17 |
| 7 | 47.73 ± 9.86 | 35.83 ± 10.61 | 98.92 ± 0.99 | 76.85 ± 8.83 | 90.01 ± 3.07 | 54.80 ± 8.58 | 82.35 ± 5.69 |
| 8 | 100.00 ± 0.00 | 98.86 ± 0.74 | 92.37 ± 1.95 | 100.00 ± 0.00 | 99.23 ± 0.45 | 99.89 ± 0.07 | 100.00 ± 0.00 |
| 9 | 29.19 ± 7.60 | 30.62 ± 8.97 | 100.00 ± 0.00 | 47.76 ± 9.97 | 100.00 ± 0.00 | 62.05 ± 9.45 | 32.26 ± 9.78 |
| 10 | 59.38 ± 8.59 | 56.14 ± 7.62 | 74.67 ± 6.66 | 69.09 ± 4.91 | 74.02 ± 5.62 | 79.94 ± 4.32 | 79.40 ± 3.42 |
| 11 | 83.47 ± 3.99 | 70.08 ± 8.82 | 55.50 ± 9.92 | 87.46 ± 3.84 | 83.42 ± 4.21 | 91.21 ± 2.19 | 97.10 ± 1.63 |
| 12 | 85.22 ± 5.54 | 36.36 ± 10.72 | 54.92 ± 9.21 | 54.58 ± 7.73 | 78.70 ± 4.06 | 62.54 ± 6.06 | 96.89 ± 1.20 |
| 13 | 95.26 ± 2.86 | 82.91 ± 6.63 | 98.82 ± 0.82 | 98.97 ± 0.79 | 99.51 ± 0.32 | 92.43 ± 1.48 | 91.89 ± 2.12 |
| 14 | 97.89 ± 2.10 | 96.92 ± 2.95 | 83.60 ± 6.74 | 96.71 ± 1.41 | 95.53 ± 1.14 | 99.21 ± 0.64 | 99.60 ± 0.38 |
| 15 | 70.78 ± 4.53 | 65.92 ± 7.62 | 67.45 ± 8.54 | 79.98 ± 6.79 | 95.80 ± 1.77 | 94.88 ± 1.71 | 97.89 ± 0.98 |
| 16 | 69.55 ± 8.94 | 50.49 ± 11.64 | 99.89 ± 0.49 | 90.05 ± 3.42 | 98.92 ± 0.45 | 96.48 ± 0.98 | 86.79 ± 0.37 |
| OA | 75.72 ± 2.08 | 66.32 ± 3.91 | 69.89 ± 2.94 | 80.35 ± 2.04 | 83.78 ± 2.49 | 81.54 ± 2.56 | 90.75 ± 2.04 |
| AA | 71.87 ± 1.75 | 63.80 ± 3.45 | 78.52 ± 2.78 | 80.11 ± 2.43 | 81.77 ± 2.73 | 79.72 ± 2.29 | 87.21 ± 1.98 |
| κ × 100 | 72.74 ± 2.29 | 62.24 ± 3.21 | 65.49 ± 2.59 | 77.89 ± 3.75 | 80.30 ± 2.61 | 80.11 ± 2.83 | 89.53 ± 2.33 |

| SA | Supervised | | | | Unsupervised | | |
|---|---|---|---|---|---|---|---|
| Class | DFSL | RN-FSC | CDPM | Gia-CFSL | UML | SMF-UL | RULBP-MGCN |
| 1 | 100.00 ± 0.00 | 98.14 ± 1.64 | 97.49 ± 1.12 | 99.02 ± 0.12 | 99.99 ± 0.01 | 98.24 ± 0.70 | 99.85 ± 0.12 |
| 2 | 98.90 ± 1.10 | 99.44 ± 0.02 | 99.57 ± 0.12 | 99.67 ± 0.23 | 98.60 ± 0.24 | 98.97 ± 0.88 | 100.00 ± 0.00 |
| 3 | 94.17 ± 3.29 | 92.91 ± 2.63 | 98.09 ± 0.66 | 98.92 ± 0.45 | 96.59 ± 0.92 | 95.04 ± 1.63 | 91.46 ± 1.53 |
| 4 | 97.77 ± 1.98 | 97.95 ± 1.62 | 98.73 ± 1.42 | 91.87 ± 2.42 | 98.05 ± 0.93 | 96.24 ± 1.21 | 84.19 ± 4.86 |
| 5 | 97.81 ± 0.89 | 99.87 ± 0.04 | 98.82 ± 1.01 | 99.99 ± 0.01 | 98.67 ± 1.01 | 98.93 ± 0.62 | 100.00 ± 0.00 |
| 6 | 99.52 ± 0.42 | 99.23 ± 0.42 | 98.86 ± 0.61 | 99.99 ± 0.01 | 98.72 ± 0.62 | 98.14 ± 0.58 | 100.00 ± 0.00 |
| 7 | 99.68 ± 0.64 | 96.52 ± 1.45 | 98.61 ± 0.78 | 99.40 ± 0.26 | 99.04 ± 0.27 | 97.96 ± 1.07 | 100.00 ± 0.00 |
| 8 | 85.93 ± 3.32 | 81.82 ± 2.39 | 68.06 ± 5.94 | 87.22 ± 1.45 | 87.36 ± 2.38 | 93.59 ± 1.24 | 95.20 ± 0.79 |
| 9 | 99.57 ± 3.30 | 99.55 ± 0.04 | 98.58 ± 1.14 | 99.51 ± 0.23 | 98.55 ± 0.93 | 98.55 ± 0.69 | 99.56 ± 0.74 |
| 10 | 85.72 ± 0.25 | 88.04 ± 3.42 | 92.41 ± 2.12 | 96.05 ± 1.14 | 95.58 ± 1.12 | 94.22 ± 1.01 | 99.48 ± 0.89 |
| 11 | 69.79 ± 3.63 | 63.09 ± 5.63 | 95.28 ± 0.42 | 92.36 ± 1.74 | 96.13 ± 1.45 | 94.22 ± 1.62 | 85.05 ± 1.12 |
| 12 | 88.41 ± 10.13 | 93.75 ± 1.24 | 99.93 ± 0.02 | 99.74 ± 0.22 | 99.15 ± 0.21 | 94.68 ± 0.95 | 95.76 ± 1.03 |
| 13 | 94.76 ± 3.93 | 86.80 ± 3.42 | 99.65 ± 0.12 | 95.26 ± 1.45 | 99.15 ± 0.23 | 87.81 ± 2.18 | 81.78 ± 1.21 |
| 14 | 88.07 ± 3.77 | 95.17 ± 2.14 | 96.23 ± 1.14 | 98.19 ± 0.24 | 96.63 ± 1.45 | 82.26 ± 3.17 | 95.17 ± 2.06 |
| 15 | 71.88 ± 6.27 | 65.48 ± 3.45 | 71.59 ± 1.42 | 72.96 ± 2.45 | 92.36 ± 2.44 | 97.45 ± 0.46 | 99.59 ± 0.24 |
| 16 | 99.97 ± 0.04 | 95.01 ± 1.04 | 97.22 ± 2.45 | 98.27 ± 0.45 | 99.42 ± 0.07 | 99.15 ± 0.12 | 99.76 ± 0.06 |
| OA | 89.93 ± 0.54 | 87.88 ± 2.34 | 89.15 ± 1.45 | 92.30 ± 1.96 | 94.35 ± 1.01 | 95.84 ± 0.42 | 97.06 ± 0.38 |
| AA | 92.01 ± 0.74 | 90.67 ± 1.04 | 93.37 ± 1.15 | 95.36 ± 2.40 | 95.06 ± 1.23 | 95.27 ± 0.79 | 95.41 ± 0.66 |
| κ × 100 | 88.83 ± 0.59 | 86.57 ± 1.45 | 88.27 ± 2.40 | 91.44 ± 2.03 | 94.94 ± 1.12 | 95.37 ± 0.88 | 96.73 ± 0.74 |
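
Each results table ends with overall accuracy (OA), average accuracy (AA), and a third summary row that is presumably the kappa coefficient scaled by 100. As a hedged illustration of how these three scores are conventionally derived from a confusion matrix, the sketch below uses the standard textbook definitions. The function name and the convention that rows are true classes and columns are predicted classes are assumptions for this example, not details confirmed by the source.

```python
import numpy as np


def classification_scores(conf):
    """Return (OA, AA, kappa) for a confusion matrix.

    Assumed layout: conf[i, j] counts samples of true class i predicted as class j.
    """
    conf = np.asarray(conf, dtype=float)
    total = conf.sum()
    # Overall accuracy: fraction of all samples that were classified correctly.
    oa = np.trace(conf) / total
    # Average accuracy: mean of the per-class recall values.
    per_class = np.diag(conf) / conf.sum(axis=1)
    aa = per_class.mean()
    # Expected chance agreement from the row and column marginals.
    pe = (conf.sum(axis=0) * conf.sum(axis=1)).sum() / total ** 2
    kappa = (oa - pe) / (1.0 - pe)
    return oa, aa, kappa


if __name__ == "__main__":
    # Two-class toy example: 50 samples of class 0, 50 of class 1.
    oa, aa, kappa = classification_scores([[50, 0], [10, 40]])
    print(oa, aa, kappa)
```

For the toy matrix, 90 of 100 samples lie on the diagonal, the per-class accuracies are 1.0 and 0.8, and the chance agreement is 0.5, so OA is 0.9, AA is 0.9, and kappa is 0.8. AA weights every class equally, which is why it can diverge from OA on datasets with very uneven class sizes.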

| Dataset | PCA | EMP | LBP | RULBP |
|---|---|---|---|---|
| UP | 80.33 | 89.12 | 90.31 | 92.29 |
| IP | 81.36 | 87.69 | 89.06 | 90.75 |
| SA | 88.09 | 95.34 | 96.17 | 97.06 |

| Dataset | 11 | 165 | 330 | 495 |
|---|---|---|---|---|
| UP | 74.47 | 92.29 | 89.60 | 87.11 |
| IP | 72.97 | 84.95 | 90.75 | 90.87 |
| SA | 77.90 | 94.70 | 97.06 | 96.93 |

| Phases | DFSL + SVM | RN-FSC | UML | RULBP-MGCN |
|---|---|---|---|---|
| Pre-training | 118.23 min | 319.84 min | 316.87 min | 23.89 min |
| Training | 9.15 s | 80.47 s | 363.32 s | 113.74 s |
| Classification | 1.88 s | 19.12 s | 141.83 s | 9.73 s |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, W.; Liu, B.; He, P.; Hu, Q.; Gao, K.; Li, H. Masked Graph Convolutional Network for Small Sample Classification of Hyperspectral Images. Remote Sens. 2023, 15, 1869. https://doi.org/10.3390/rs15071869
