Few-Shot PolSAR Ship Detection Based on Polarimetric Features Selection and Improved Contrastive Self-Supervised Learning

Abstract

1. Introduction

- (1) To the best of our knowledge, this is the first study to introduce self-supervised learning into PolSAR ship detection. Contrastive self-supervised learning (CSSL) yields a clear performance gain, especially when training samples are few.

- (2) We propose an improved CSSL method with two new modules. The multi-scale feature fusion module (MFFM) enhances representation capability by fusing features at multiple scales, and the mix-up auxiliary pathway (MUAP) improves feature robustness through a mix-up regularization strategy.

- (3)

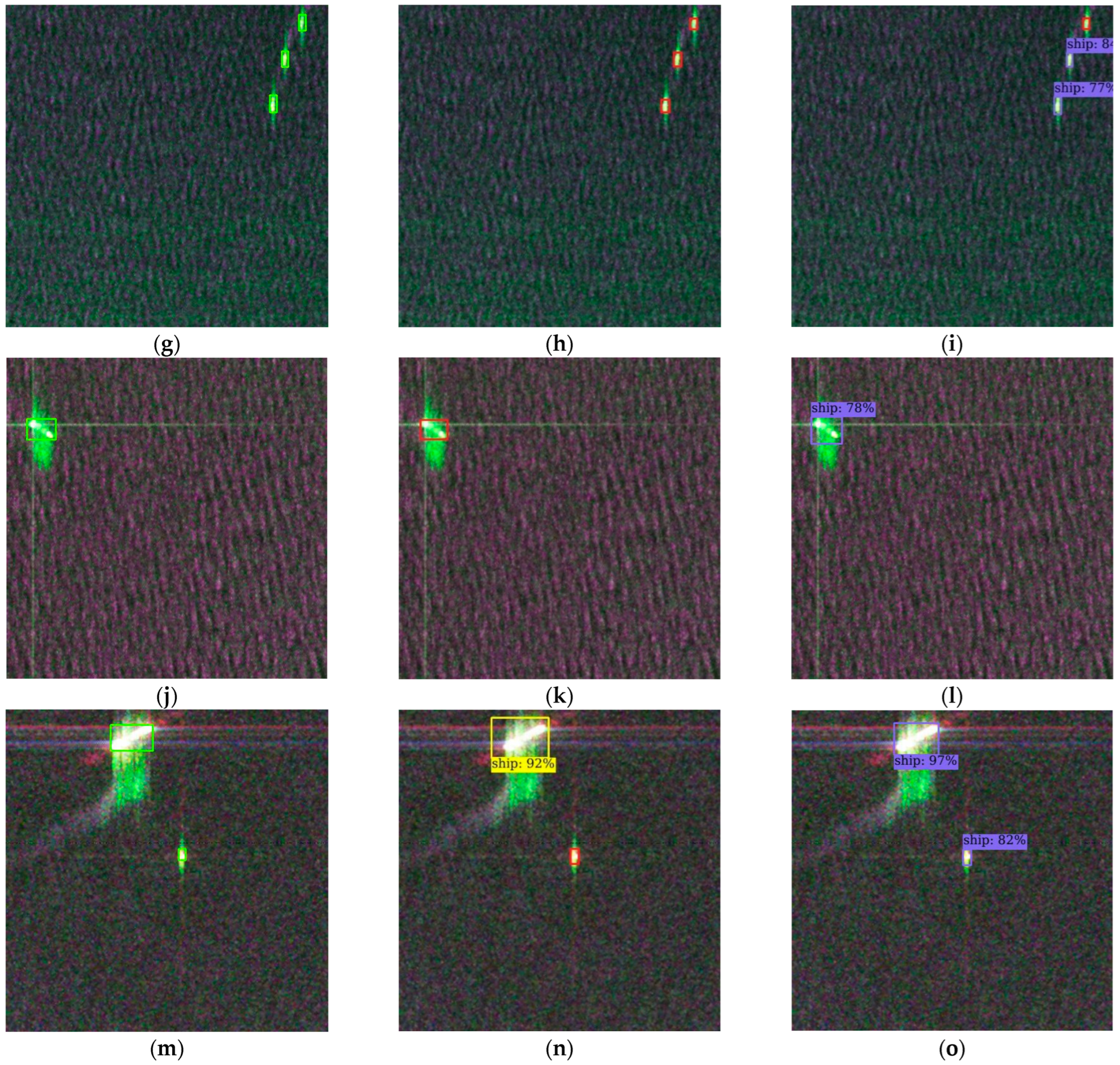

- Eight various polarimetric features are extracted by different polarization decomposition, polarimetric coherence, and speckle filtering, and the effect of them with the proposed improved CSSL method is compared to explore which polarimetric feature is more suitable to our contrastive learning framework.

- (4) Comprehensive experiments validate the effectiveness of the proposed method. The results indicate that our method achieves state-of-the-art PolSAR ship detection performance compared with recent studies, especially in the few-shot setting. In addition, our method mitigates the shortcomings of other few-shot learning methods.

2. Methods

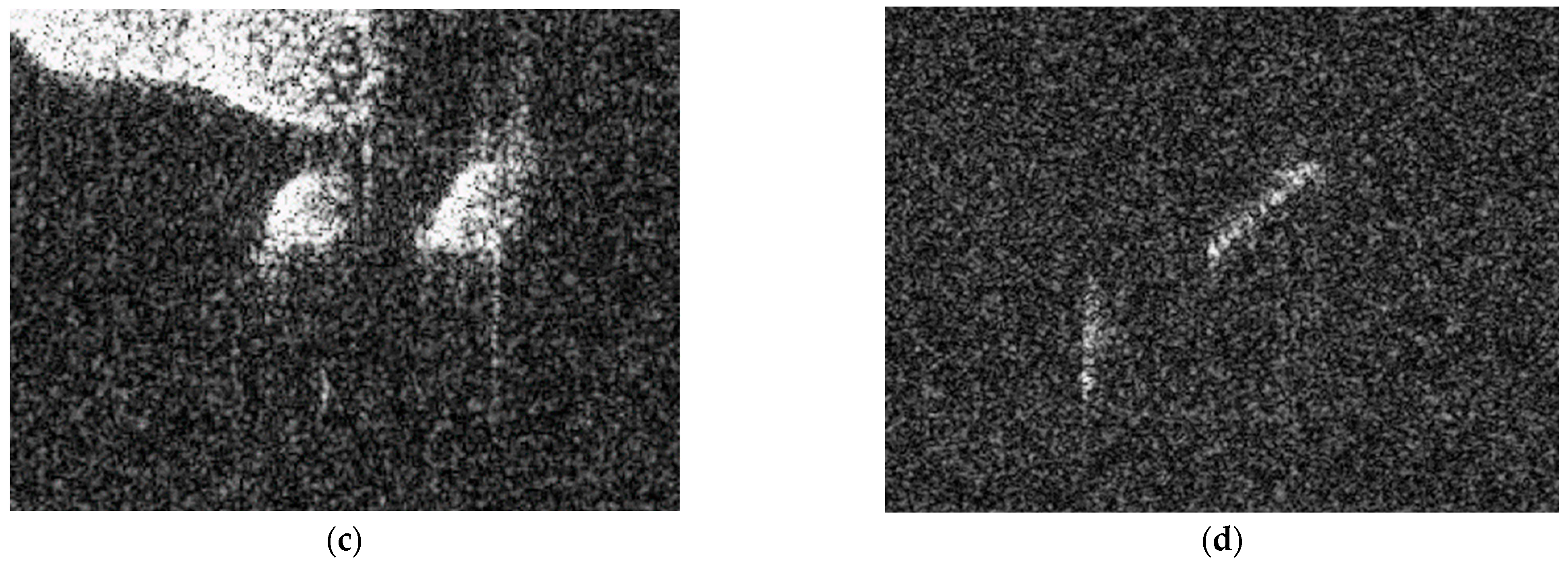

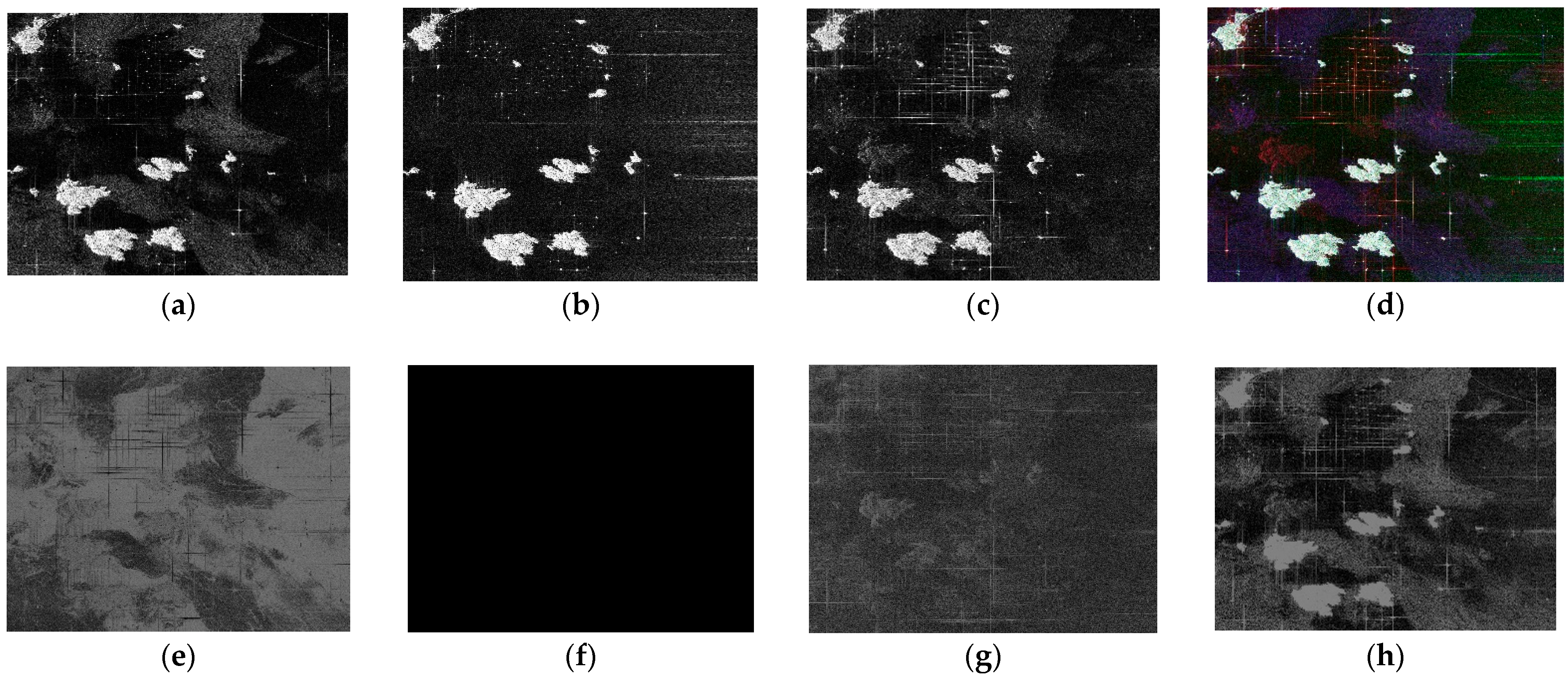

2.1. Input Data Construction by Polarimetric Feature Extraction

2.1.1. Polarization Decomposition
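
As a minimal illustration of one of the eight inputs compared later (the Pauli feature), the sketch below computes the three Pauli channel powers from the complex scattering-matrix elements. It assumes reciprocal scattering ($S_{HV} = S_{VH}$). The function name `pauli_features` and the choice to return channel powers rather than amplitudes or dB values are illustrative assumptions, not the authors' stated preprocessing.

```python
import numpy as np


def pauli_features(s_hh, s_hv, s_vv):
    """Pauli channel powers for reciprocal scattering (assumes S_HV == S_VH).

    Inputs are complex arrays of identical shape; the output appends a
    trailing axis of size 3 holding |k1|^2, |k2|^2, |k3|^2.
    """
    k1 = (s_hh + s_vv) / np.sqrt(2)  # odd-bounce (surface-like) component
    k2 = (s_hh - s_vv) / np.sqrt(2)  # even-bounce (double-bounce-like) component
    k3 = np.sqrt(2) * s_hv           # cross-polarized component, 2*S_HV/sqrt(2)
    return np.stack([np.abs(k1) ** 2, np.abs(k2) ** 2, np.abs(k3) ** 2], axis=-1)
```

The three powers sum to the total backscattered power (span), which is a convenient sanity check when preparing inputs.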

2.1.2. Polarimetric Coherence

2.1.3. Speckle Filtering

2.2. Pre-Training of Feature Extraction Network Based on Improved Contrastive Learning

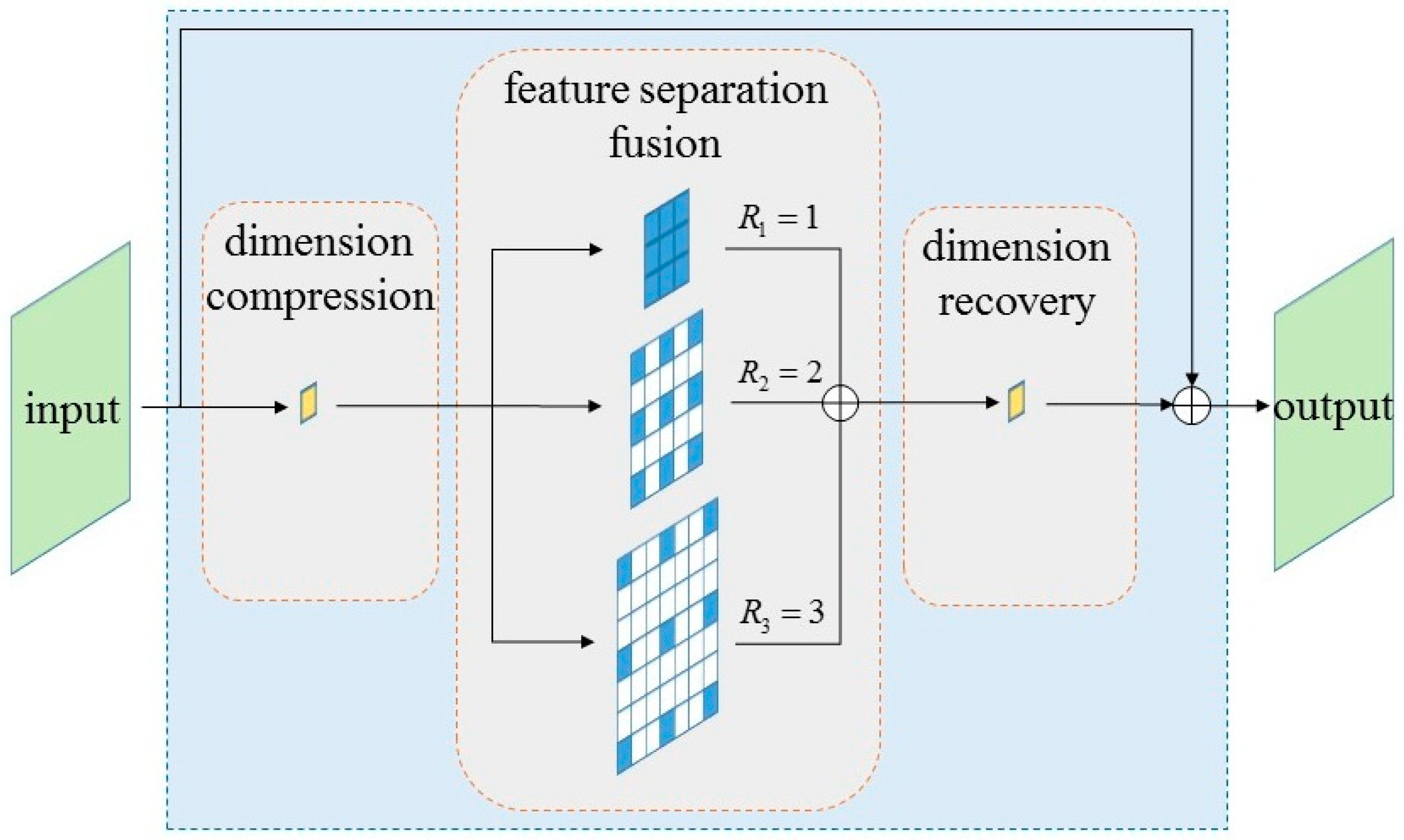

2.2.1. Multi-Scale Feature Fusion Module

2.2.2. Contrastive Learning Framework
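
The ablation results list SimSiam as the baseline contrastive framework. As a rough sketch of that baseline's objective, assuming the standard symmetric negative cosine similarity between predictor outputs (`p1`, `p2`) and projector outputs (`z1`, `z2`) of two augmented views, the loss can be written as follows. The function names are hypothetical, and NumPy only evaluates the forward value: in a real training loop the `z` branch would be wrapped in a stop-gradient.

```python
import numpy as np


def neg_cosine(p, z):
    """Mean negative cosine similarity between rows of p and z.

    In training, z would be treated as a constant (stop-gradient);
    NumPy has no autograd, so only the forward value is computed here.
    """
    p = p / np.linalg.norm(p, axis=1, keepdims=True)
    z = z / np.linalg.norm(z, axis=1, keepdims=True)
    return -np.mean(np.sum(p * z, axis=1))


def simsiam_loss(p1, z1, p2, z2):
    """Symmetric loss: each view's prediction is matched to the other view's projection."""
    return 0.5 * neg_cosine(p1, z2) + 0.5 * neg_cosine(p2, z1)
```

The loss is bounded between -1 (predictions perfectly aligned with the other view's projections) and 1.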

2.2.3. Mix-Up Auxiliary Pathway

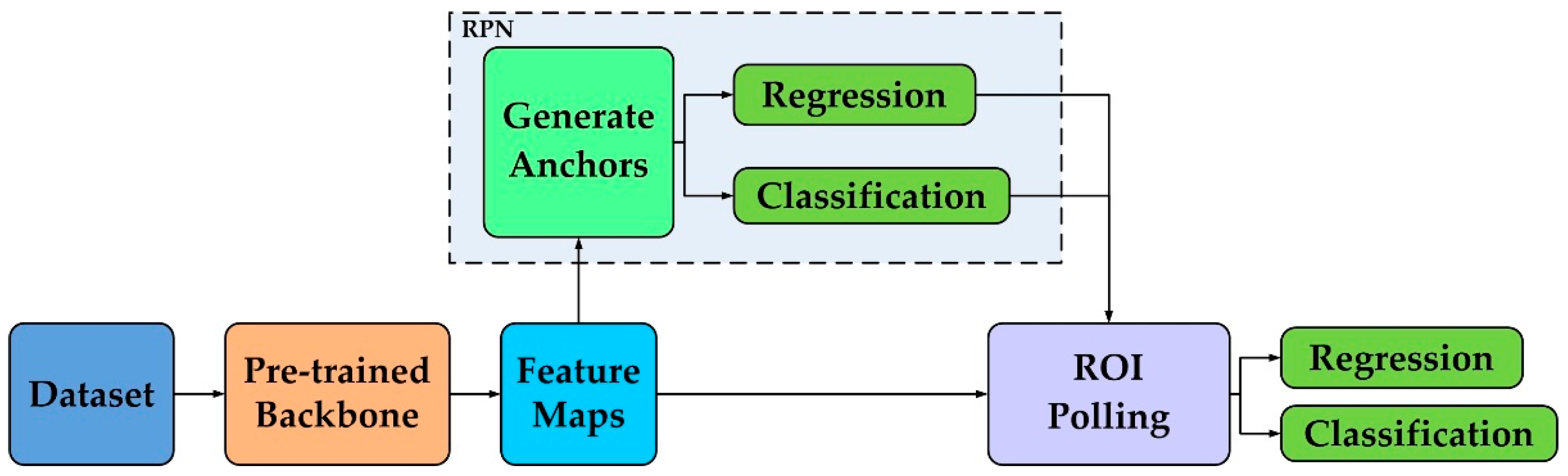
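
The mix-up regularization that this pathway builds on can be sketched in its generic form: two samples are blended with a coefficient drawn from a Beta distribution. The snippet assumes the original mixup recipe with a symmetric Beta(α, α). The function name `mixup`, the default `alpha`, and how the auxiliary pathway consumes the mixed sample are assumptions that this text does not specify.

```python
import numpy as np


def mixup(x_a, x_b, alpha=1.0, rng=None):
    """Blend two equally shaped samples with a Beta(alpha, alpha) coefficient.

    Returns the mixed sample and the mixing coefficient lam in [0, 1].
    """
    rng = np.random.default_rng() if rng is None else rng
    lam = rng.beta(alpha, alpha)
    mixed = lam * x_a + (1.0 - lam) * x_b
    return mixed, lam
```

Returning `lam` alongside the mixed sample lets a downstream loss weight the two source views by the same coefficient.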

2.3. Few-Shot PolSAR Ship Detection

- 1. The parameters in the above two equations are defined as follows:

- $p_i$ is the predicted probability that the $i$th anchor is a target, and $p_i^*$ is the corresponding ground truth;

- $t_i$ is the offset of the predicted box, and $t_i^*$ is the corresponding ground truth;

- $N_{cls}$ is the mini-batch size, $N_{reg}$ is the number of anchors, and $\lambda$ balances the two losses.

- 2. The parameters of anchor regression are defined as follows: $x_a$, $y_a$, $w_a$, and $h_a$ are the coordinates of the anchor’s center point, its width, and its height, and $t_x$, $t_y$, $t_w$, and $t_h$ are the offsets predicted by the regression. The modified anchor coordinates are calculated by the above equation.
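
For reference, the two parameter lists above match the standard Faster R-CNN formulation of Ren et al. The block below is a sketch under that assumption, using the conventional symbols. The smooth-$L_1$ regression loss and the exponential form of the width and height updates are taken from that formulation rather than from this text.

```latex
% Multi-task loss over anchors i (standard Faster R-CNN form, assumed)
L(\{p_i\}, \{t_i\}) = \frac{1}{N_{cls}} \sum_i L_{cls}(p_i, p_i^*)
                    + \lambda \frac{1}{N_{reg}} \sum_i p_i^* \, L_{reg}(t_i, t_i^*)

% Regression term, active only for positive anchors (p_i^* = 1)
L_{reg}(t_i, t_i^*) = \mathrm{smooth}_{L_1}(t_i - t_i^*)

% Anchor regression: predicted offsets (t_x, t_y, t_w, t_h) applied to anchor (x_a, y_a, w_a, h_a)
x = x_a + w_a t_x, \qquad y = y_a + h_a t_y, \qquad
w = w_a \exp(t_w), \qquad h = h_a \exp(t_h)
```

Here $(x, y, w, h)$ denotes the center, width, and height of the modified anchor.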

3. Experimental Results

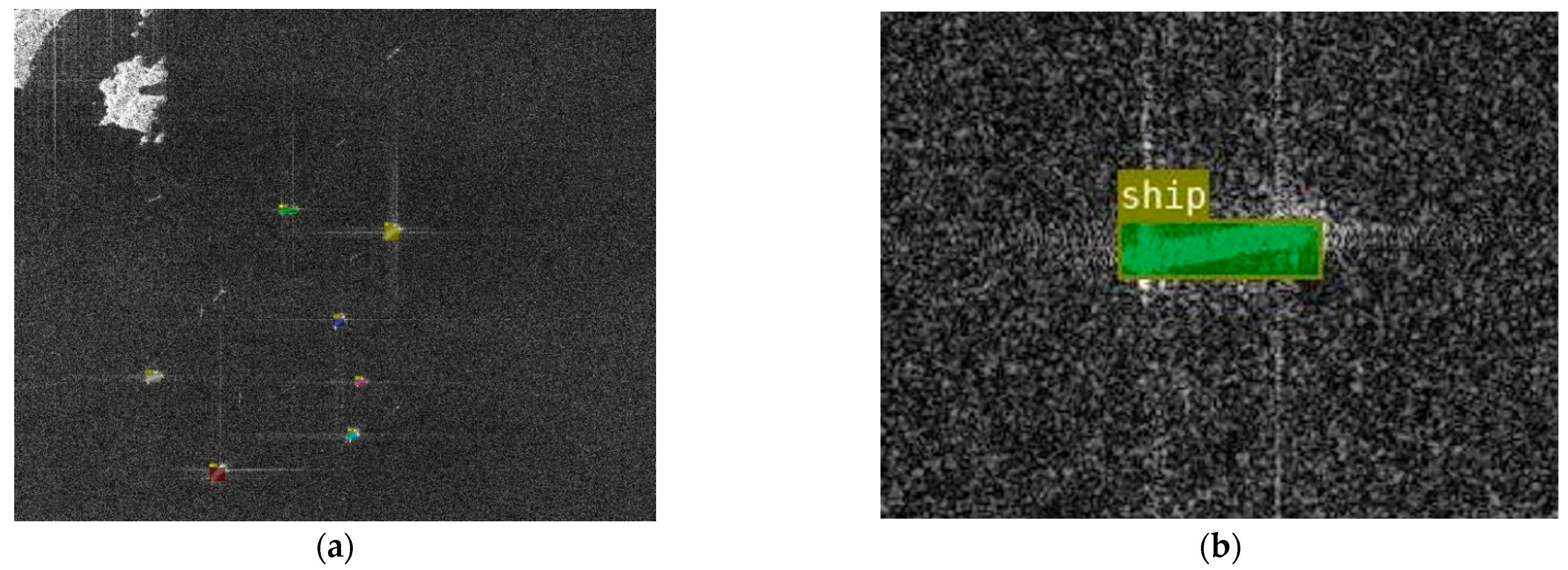

3.1. Data Description

3.2. Experimental Setup and Evaluation Index

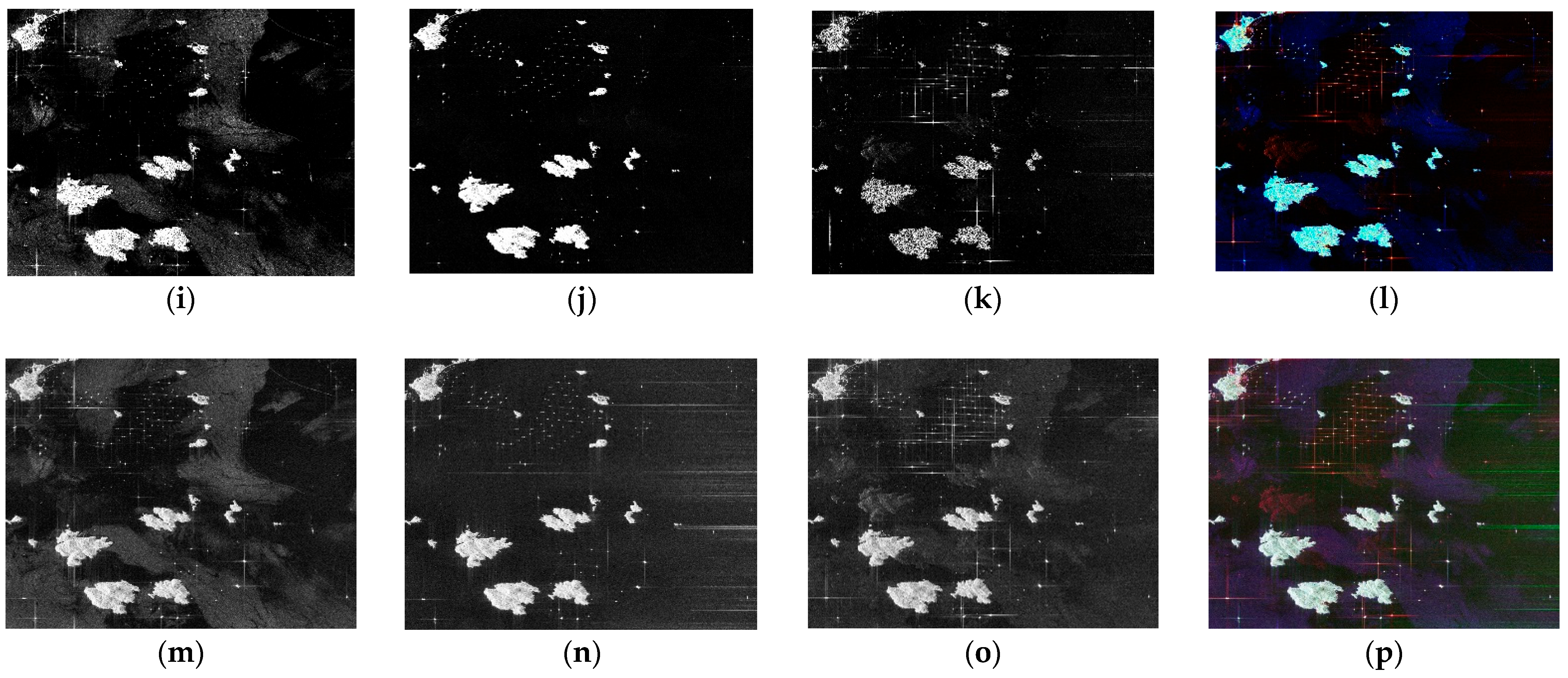

3.3. PolSAR Ship Detection Experiments

3.3.1. Ablation Experiments

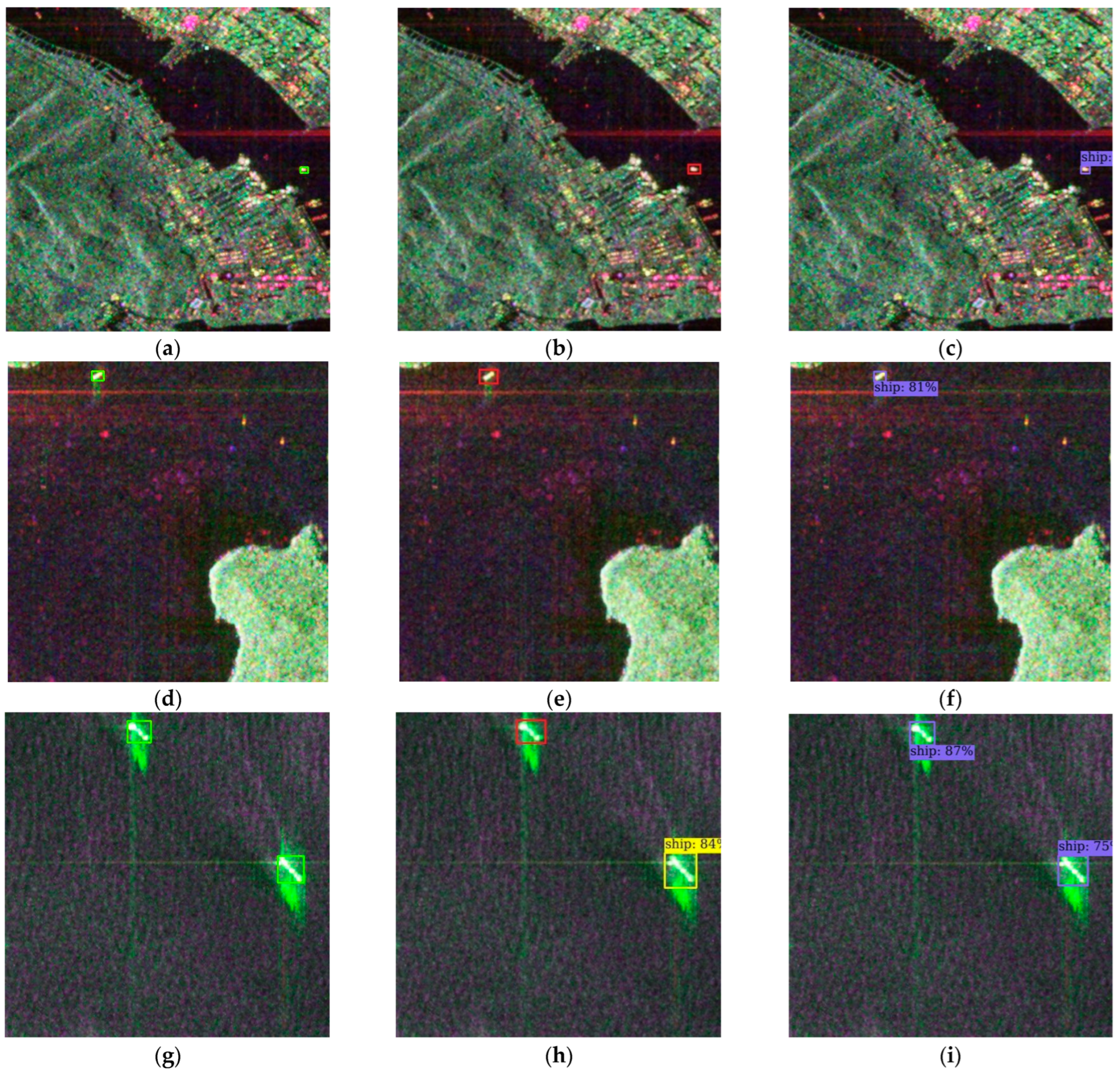

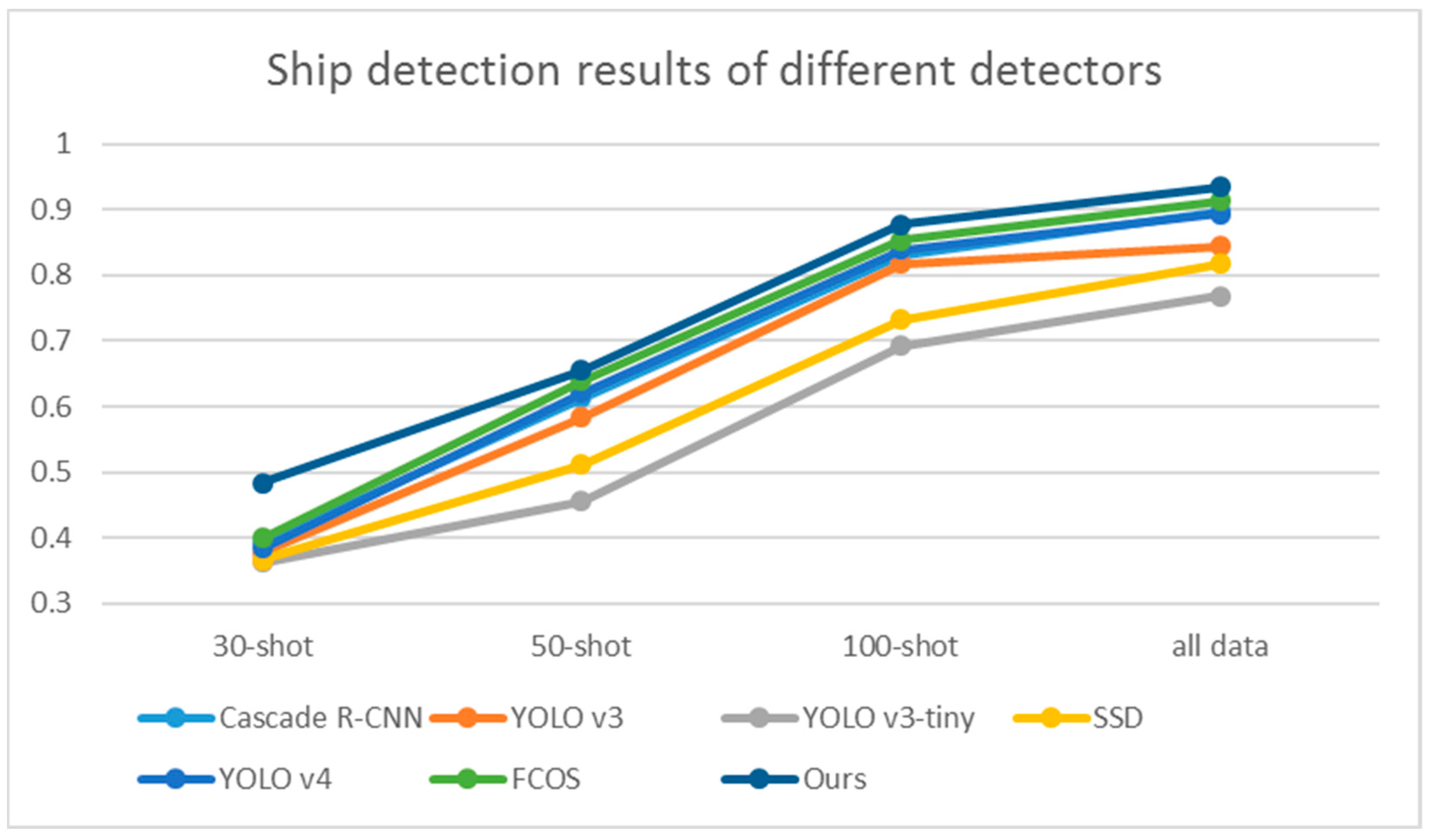

3.3.2. Comparison Experiments

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Polarimetric Feature | Train with Our Method (All Data) | Train with Our Method (100-Shot) | Train with Our Method (50-Shot) | Train with Our Method (30-Shot) | Train from Scratch (All Data) | Train from Scratch (100-Shot) | Train from Scratch (50-Shot) | Train from Scratch (30-Shot) |
|---|---|---|---|---|---|---|---|---|
| Pauli | 0.93 | 0.859 | 0.594 | 0.127 | 0.887 | 0.801 | 0.505 | 0.013 |
| Cloude | 0.756 | 0.594 | 0.192 | 0.034 | 0.634 | 0.423 | 0.078 | 0.01 |
| Freeman | 0.83 | 0.772 | 0.468 | 0.035 | 0.781 | 0.586 | 0.382 | 0.006 |
| Yamaguchi | 0.861 | 0.667 | 0.296 | 0.089 | 0.785 | 0.631 | 0.073 | 0.01 |
| Cui | 0.921 | 0.792 | 0.673 | 0.333 | 0.836 | 0.679 | 0.514 | 0.167 |
| Coherence | 0.325 | 0.142 | 0.03 | 0.029 | 0.093 | 0.057 | 0.005 | 0 |
| Refined Lee | 0.931 | 0.897 | 0.696 | 0.267 | 0.898 | 0.842 | 0.634 | 0.118 |
| Adaptive | 0.935 | 0.877 | 0.655 | 0.483 | 0.901 | 0.816 | 0.556 | 0.11 |

| Method | Average Precision (AP) |
|---|---|
| Original SimSiam | 0.919 |
| SimSiam and MFFM | 0.923 |
| SimSiam and MUAP | 0.929 |
| Our method | 0.935 |

| Backbone | Train with Our Method | Train from Scratch |
|---|---|---|
| ResNet-18 | 0.935 | 0.901 |
| ResNet-34 | 0.942 | 0.923 |
| ResNet-50 | 0.902 | 0.854 |
| ResNet-101 | 0.87 | 0.739 |

| Method | All Data | 100-Shot | 50-Shot | 30-Shot |
|---|---|---|---|---|
| Cascade R-CNN | 0.898 | 0.831 | 0.612 | 0.392 |
| YOLO v3 | 0.844 | 0.817 | 0.583 | 0.377 |
| YOLO v3-tiny | 0.769 | 0.693 | 0.456 | 0.362 |
| SSD | 0.818 | 0.733 | 0.511 | 0.366 |
| YOLO v4 | 0.894 | 0.839 | 0.62 | 0.384 |
| FCOS | 0.914 | 0.853 | 0.639 | 0.4 |
| Ours | 0.935 | 0.877 | 0.655 | 0.483 |

| Method | All Data | 100-Shot | 50-Shot | 30-Shot |
|---|---|---|---|---|
| Faster R-CNN | 0.901 | 0.816 | 0.556 | 0.11 |
| Faster R-CNN and pre-training | 0.935 | 0.877 | 0.655 | 0.483 |
| YOLO v5 | 0.887 | 0.838 | 0.612 | 0.389 |
| YOLO v5 and pre-training | 0.939 | 0.894 | 0.696 | 0.552 |
| FCOS | 0.914 | 0.853 | 0.639 | 0.4 |
| FCOS and pre-training | 0.942 | 0.903 | 0.717 | 0.583 |

| Method | All Data | 100-Shot | 50-Shot | 30-Shot |
|---|---|---|---|---|
| SAMBFS-FSDet | 0.893 | 0.805 | 0.551 | 0.433 |
| G-FSDet | 0.904 | 0.824 | 0.577 | 0.456 |
| Ours | 0.935 | 0.877 | 0.655 | 0.483 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Qiu, W.; Pan, Z.; Yang, J. Few-Shot PolSAR Ship Detection Based on Polarimetric Features Selection and Improved Contrastive Self-Supervised Learning. Remote Sens. 2023, 15, 1874. https://doi.org/10.3390/rs15071874
