Visual Detection and Association Tracking of Dim Small Ship Targets from Optical Image Sequences of Geostationary Satellite Using Multispectral Radiation Characteristics

Abstract

1. Introduction

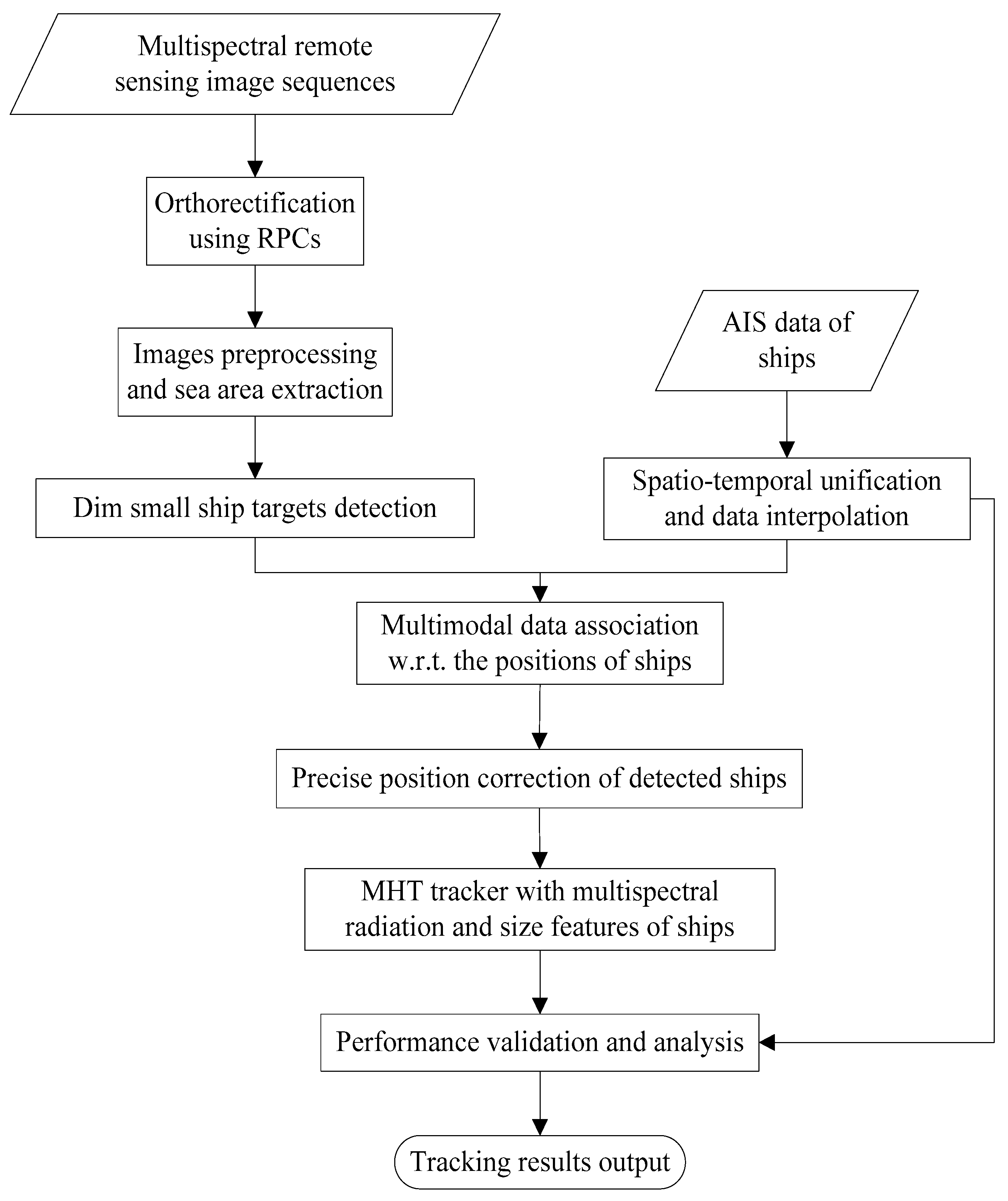

2. Methodological Framework

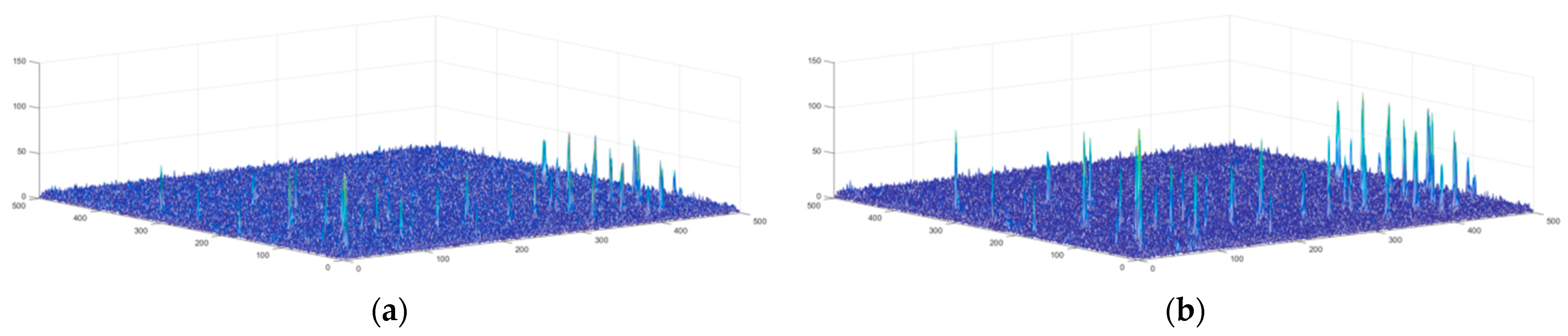

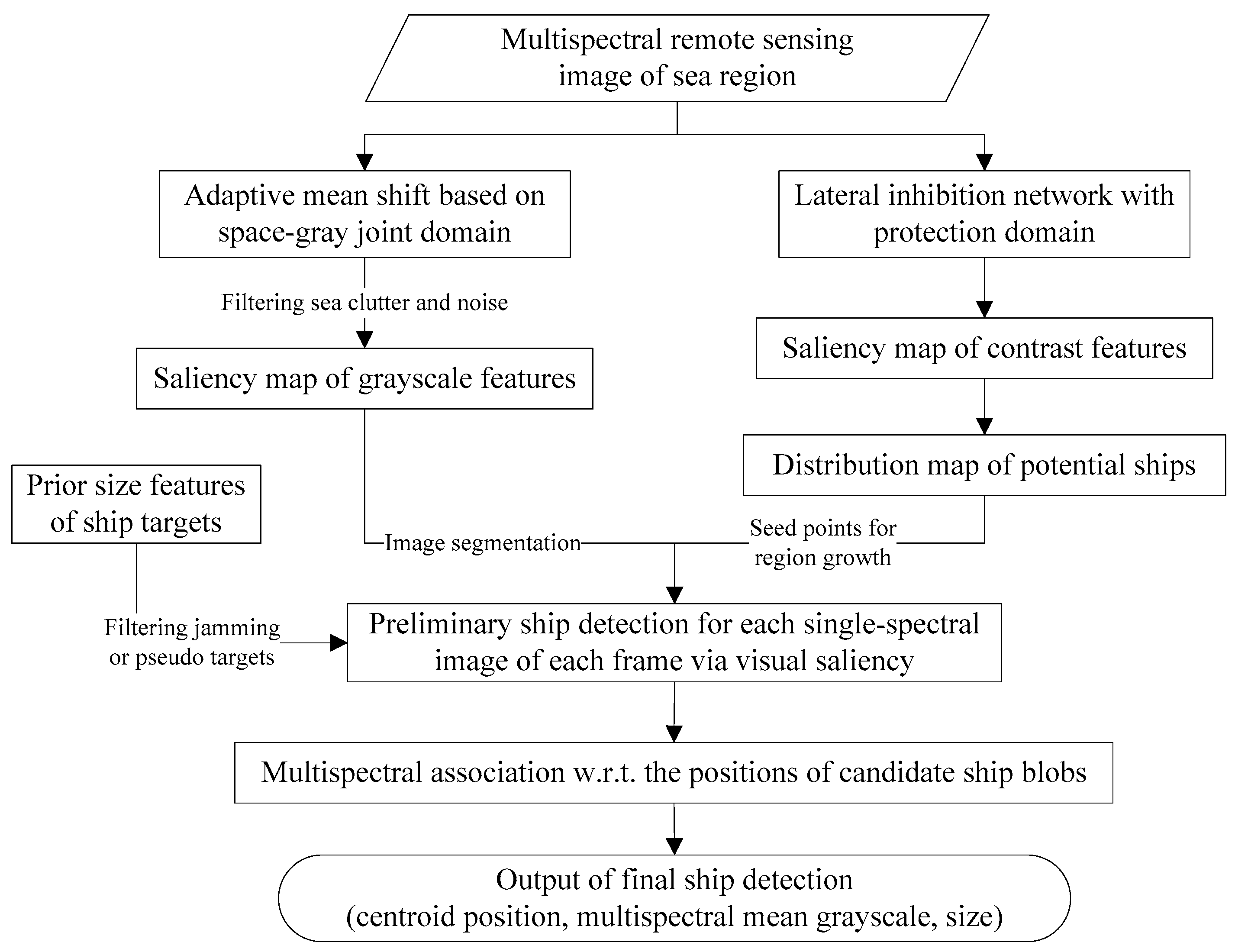

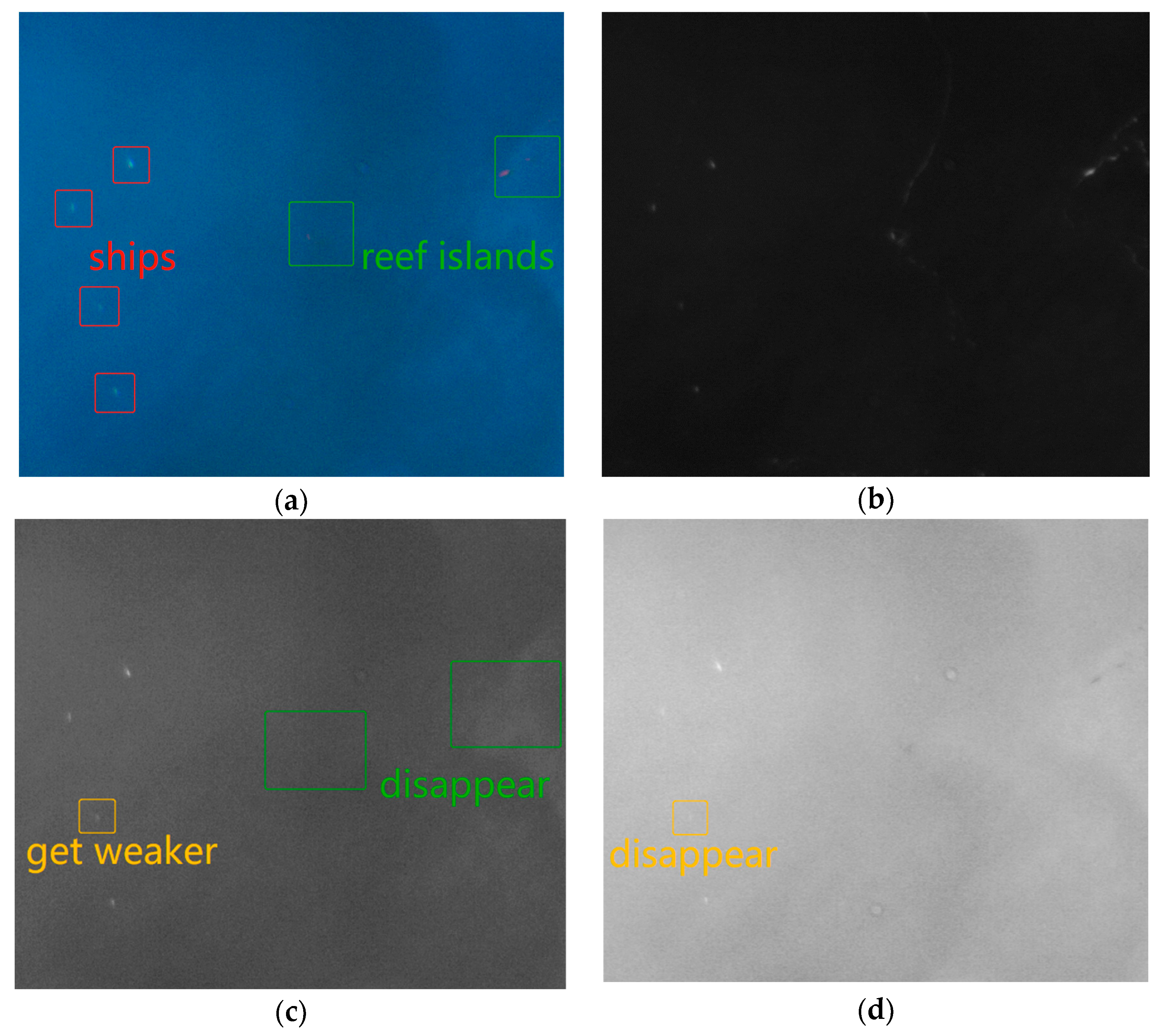

2.1. Preliminary Ship Detection in Single-Spectral Image of Each Frame

2.1.1. Adaptive Mean Shift with Space-Gray Joint Domain

2.1.2. Lateral Inhibition Network with Protection Domain

2.2. Ship Positions Association across Multispectral Detections and across Multimodal Data

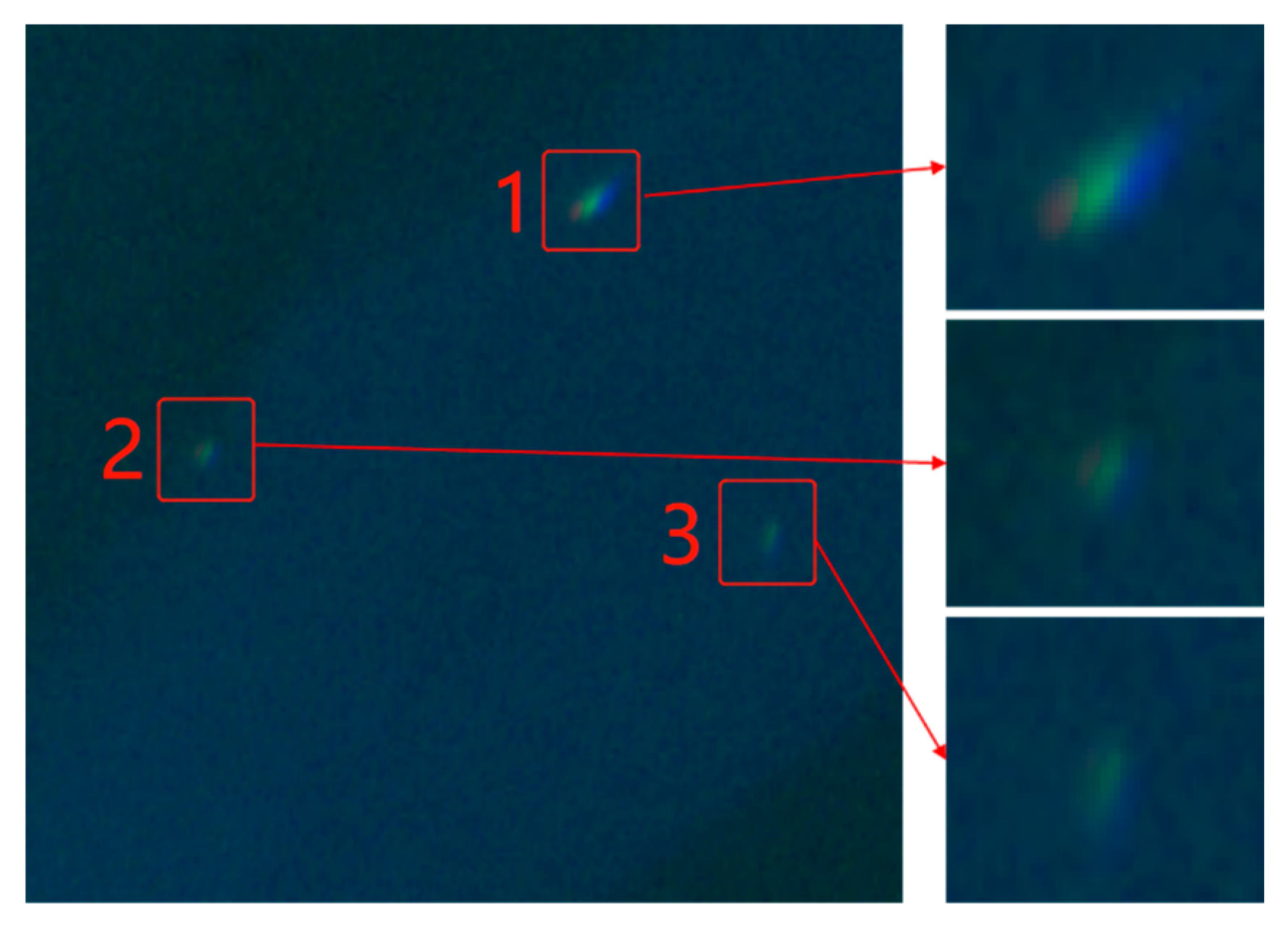

2.2.1. Multispectral Association for Final Ship Detection

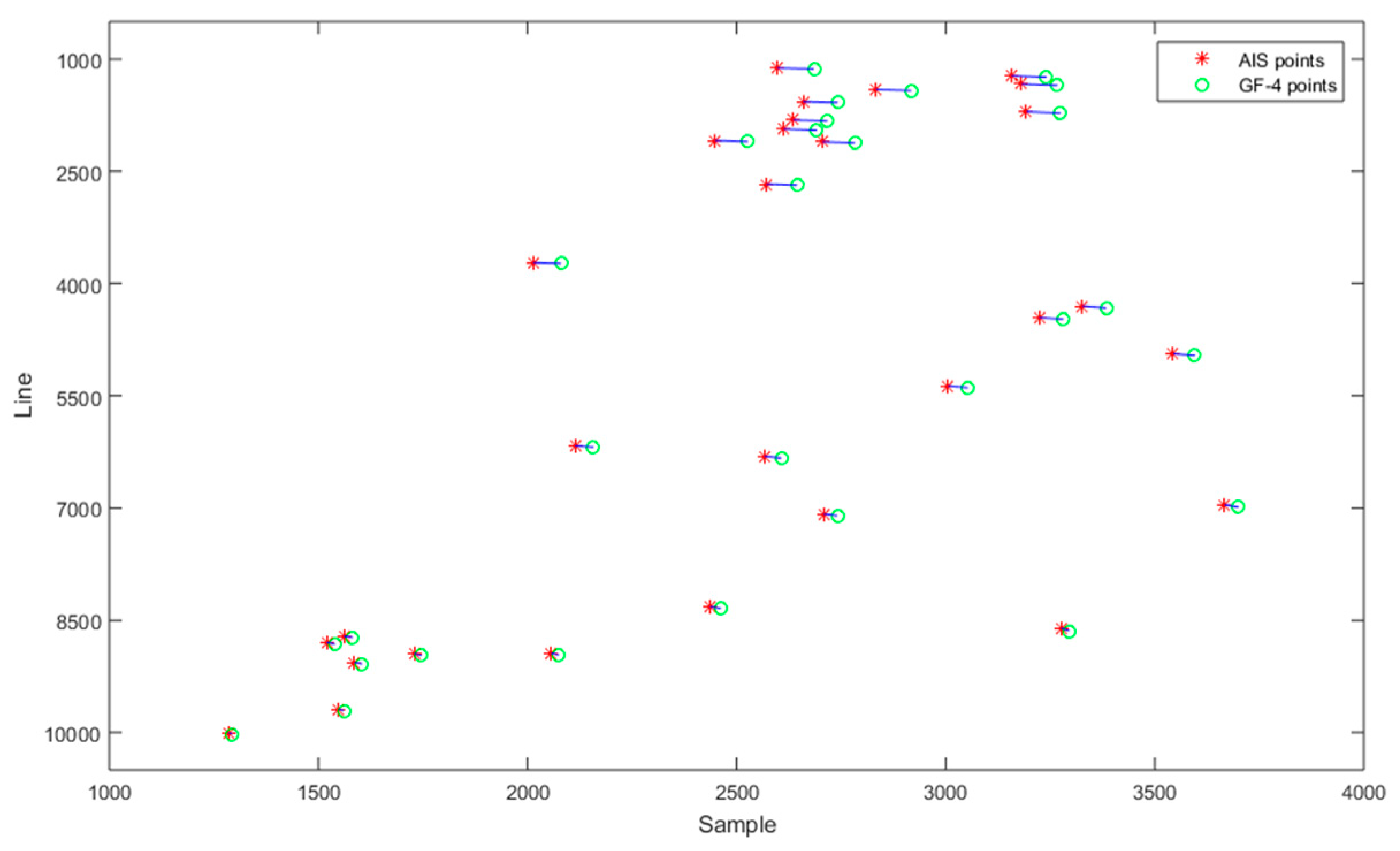

2.2.2. Multimodal Data Association for Ship Positions Correction

2.3. Multi-Frame Association Tracking

2.3.1. Motion Modeling of Ships

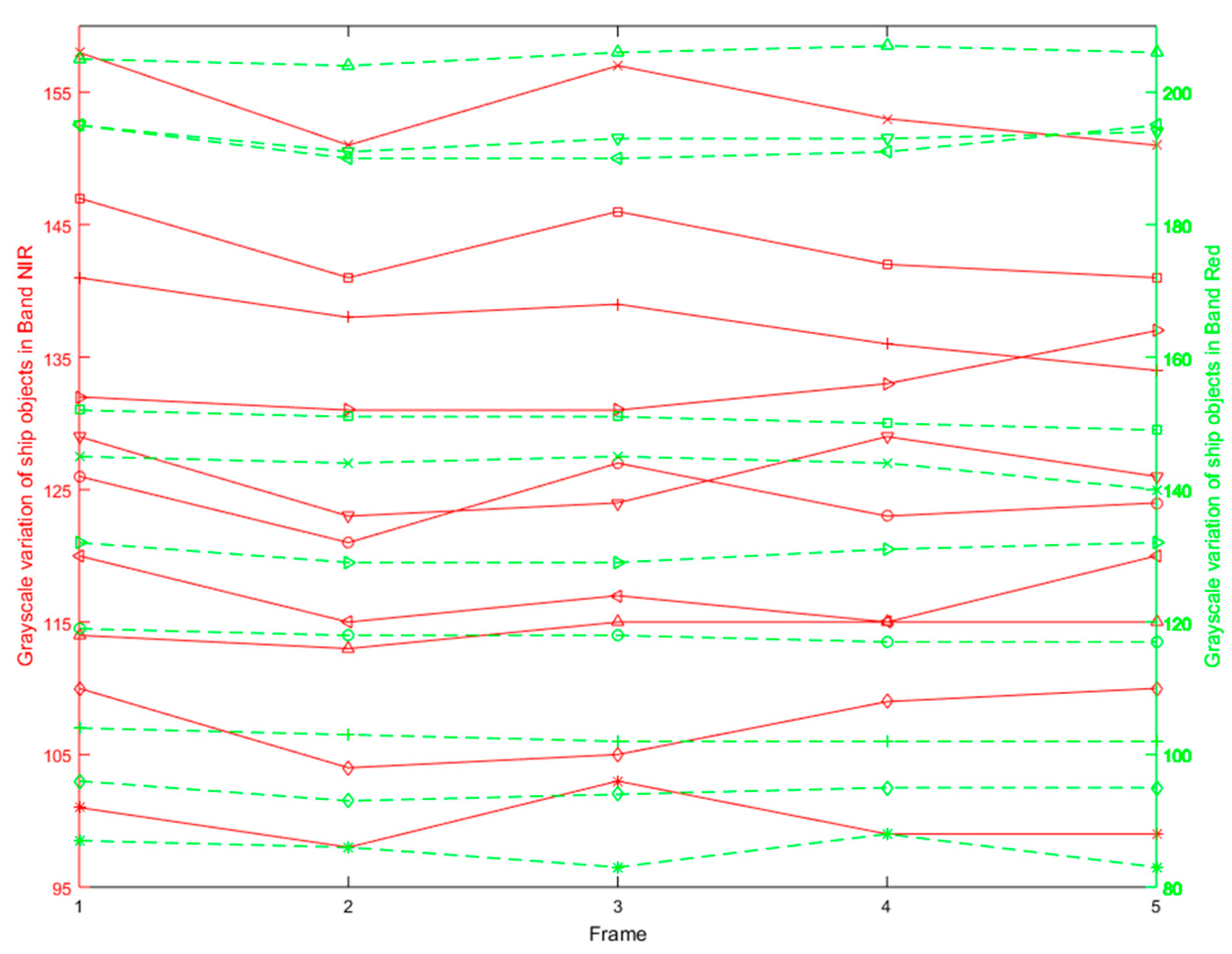

2.3.2. MHT with Multispectral Saliency Characteristics

3. Experiments and Analysis

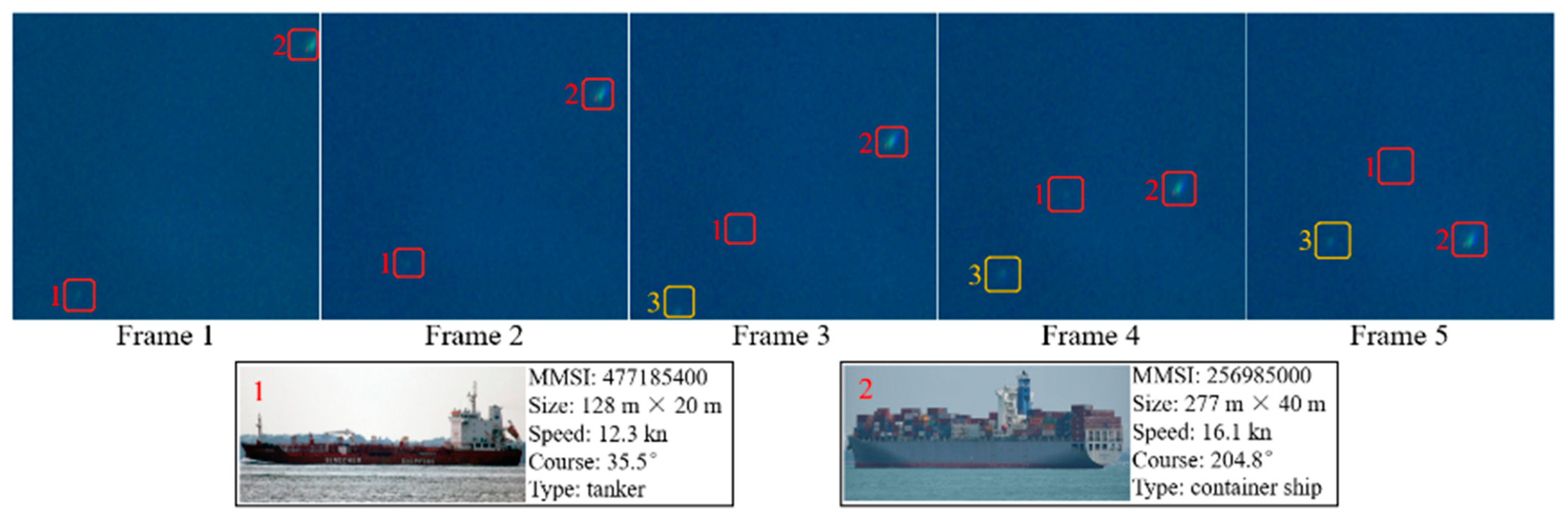

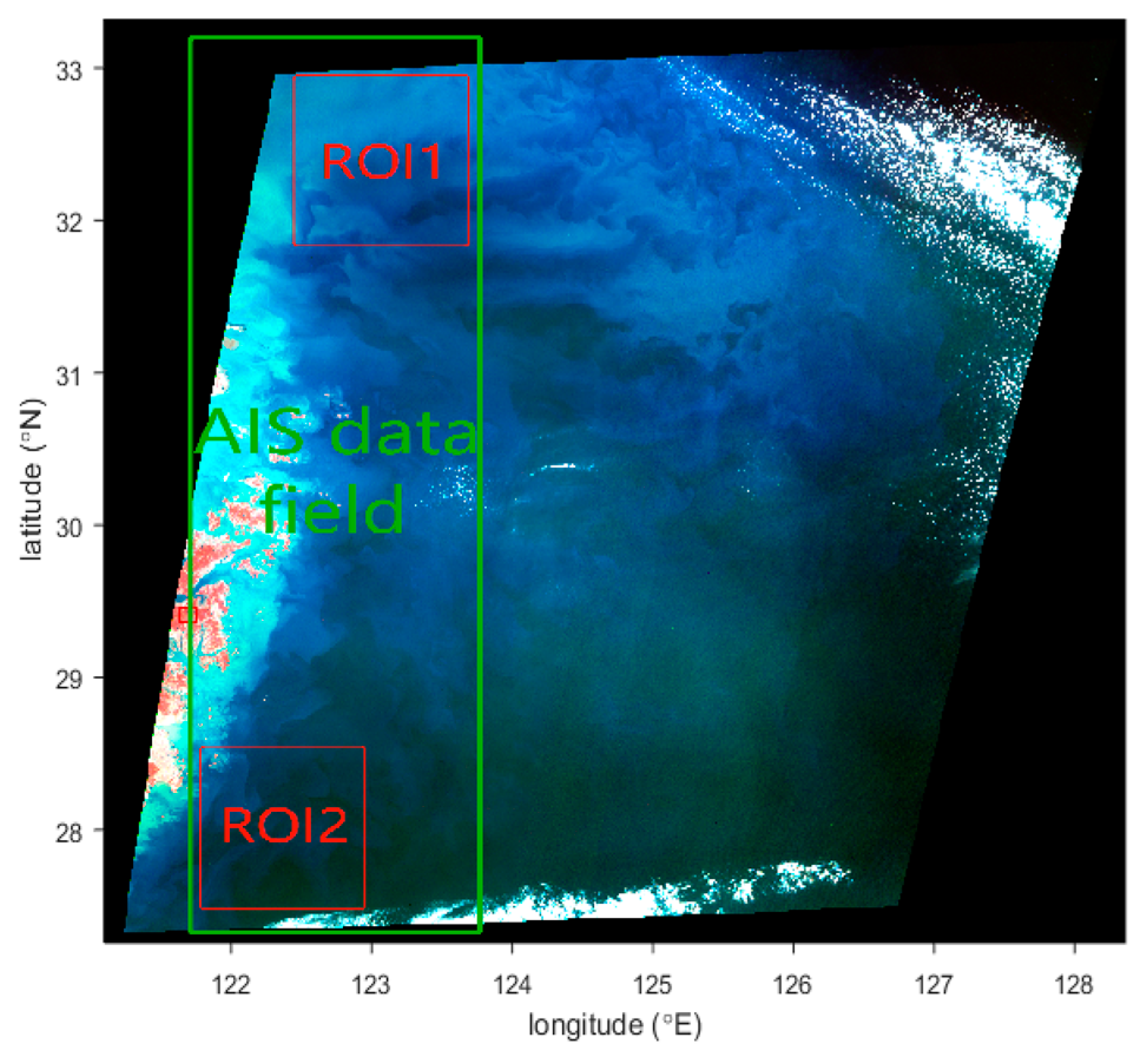

3.1. Research Area and Dataset

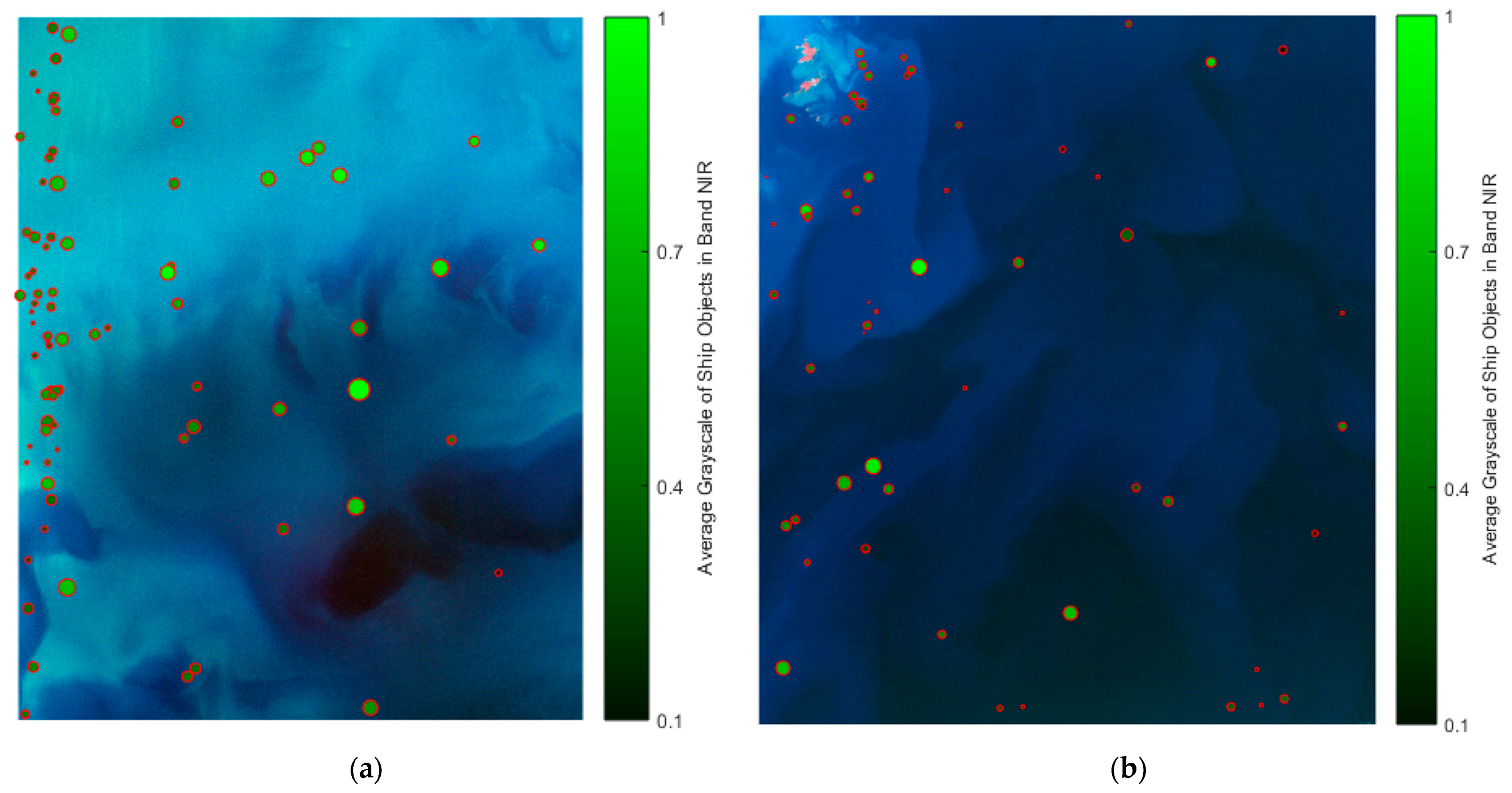

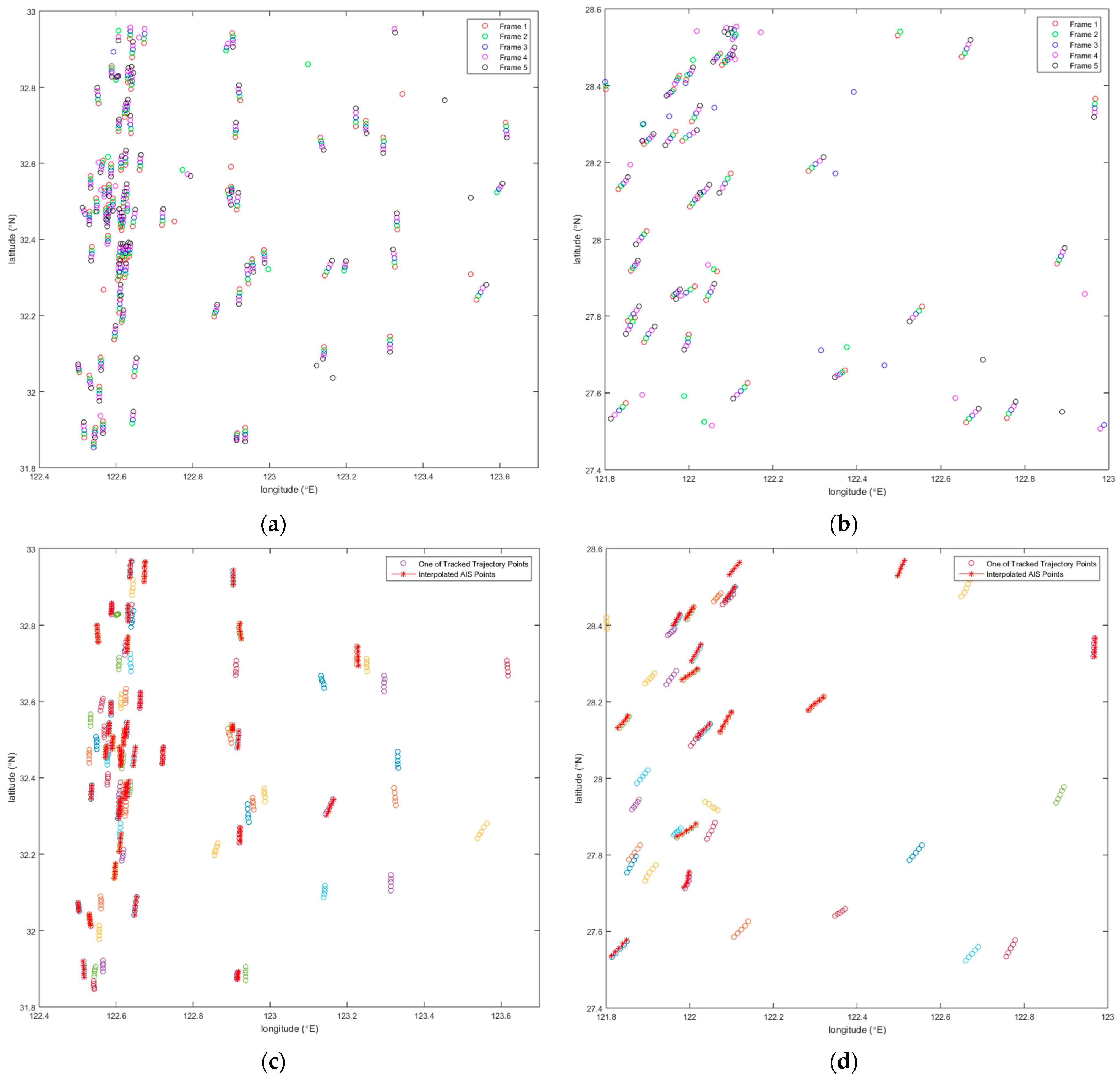

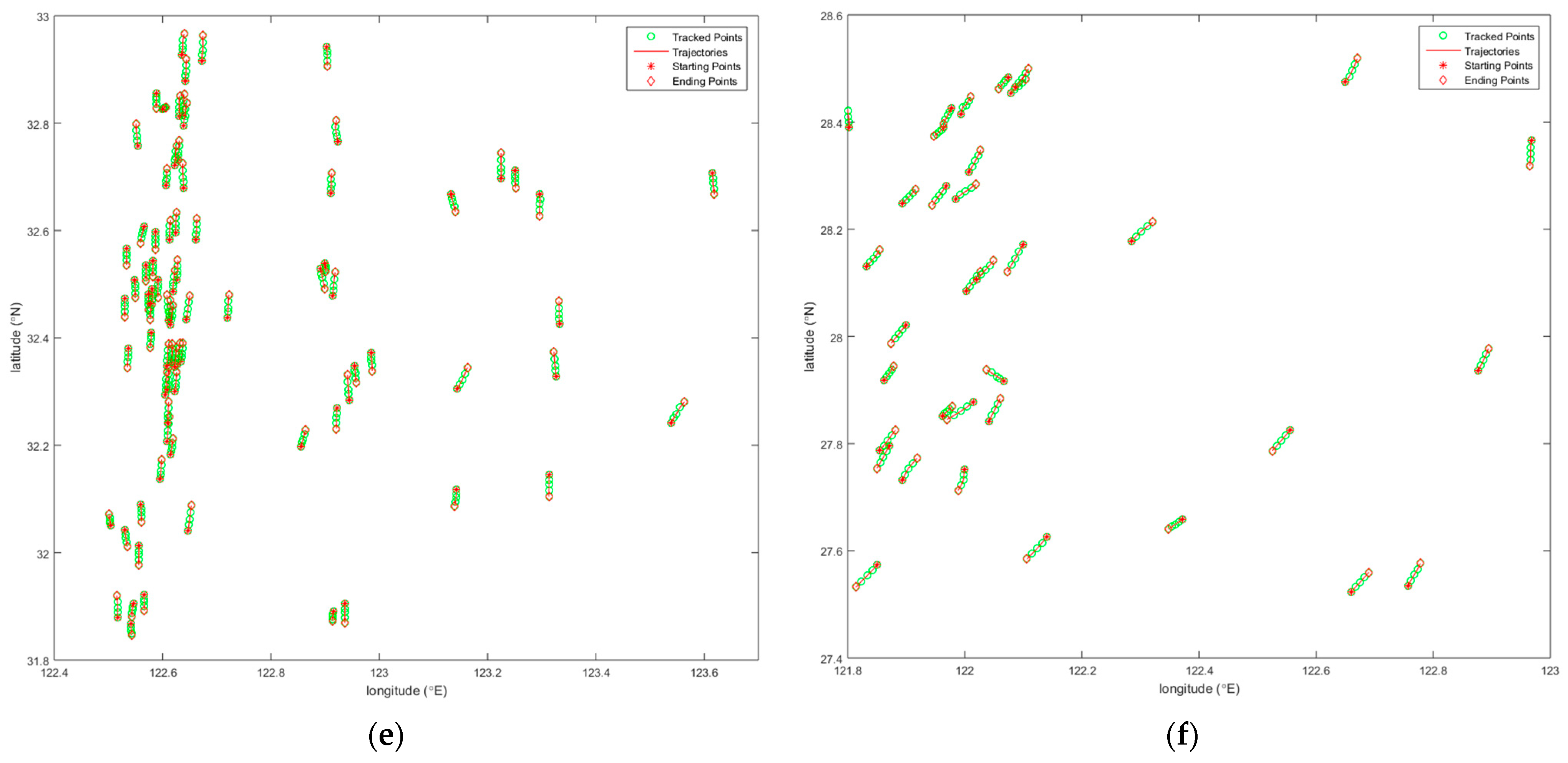

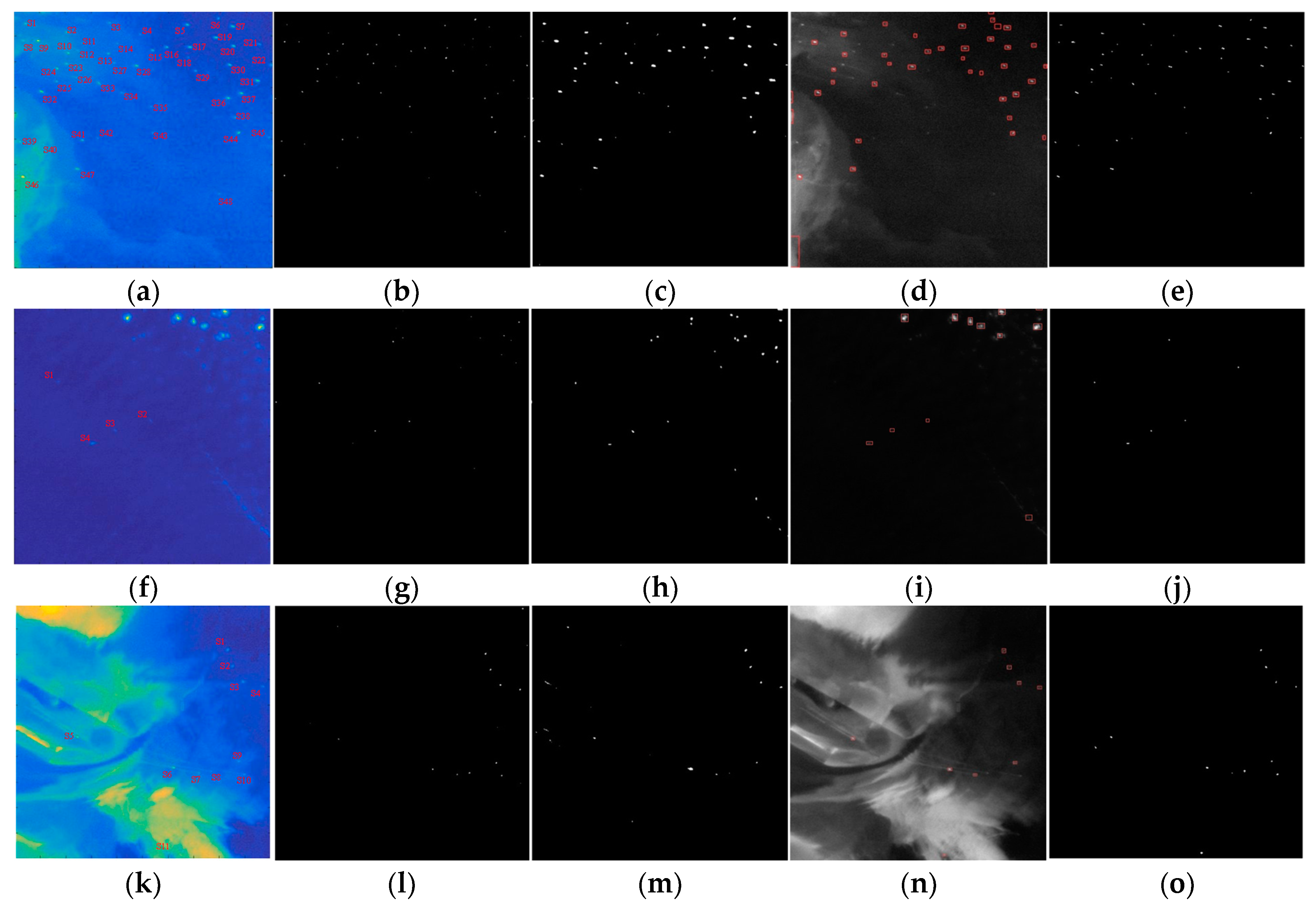

3.2. Results and Analysis

3.3. Comparison and Evaluation

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Kanjir, U.; Greidanus, H.; Oštir, K. Vessel Detection and Classification from Spaceborne Optical Images: A Literature Survey. Remote Sens. Environ. 2018, 207, 1–26.

- Soldi, G.; Gaglione, D.; Forti, N.; Di Simone, A.; Daffina, F.C.; Bottini, G.; Quattrociocchi, D.; Millefiori, L.M.; Braca, P.; Carniel, S.; et al. Space-Based Global Maritime Surveillance. Part I: Satellite Technologies. IEEE Aerosp. Electron. Syst. Mag. 2021, 36, 8–28.

- Yu, W.; You, H.; Lv, P.; Hu, Y.; Han, B. A Moving Ship Detection and Tracking Method Based on Optical Remote Sensing Images from the Geostationary Satellite. Sensors 2021, 21, 7547.

- Zhang, Z.; Shao, Y.; Tian, W.; Wei, Q.; Zhang, Y.; Zhang, Q. Application Potential of GF-4 Images for Dynamic Ship Monitoring. IEEE Geosci. Remote Sens. Lett. 2017, 14, 911–915.

- Yao, L.; Liu, Y.; He, Y. A Novel Ship-Tracking Method for GF-4 Satellite Sequential Images. Sensors 2018, 18, 2007.

- Liu, Y.; Yao, L.; Xiong, W.; Jing, T.; Zhou, Z. Ship Target Tracking Based on a Low-resolution Optical Satellite in Geostationary Orbit. Int. J. Remote Sens. 2018, 39, 2991–3009.

- Wang, Q.; Hu, Y.; Pan, Z.; Liu, F.; Han, B. Spatiotemporal Data Fusion and CNN Based Ship Tracking Method for Sequential Optical Remote Sensing Images from the Geostationary Satellite. IEEE Geosci. Remote Sens. Lett. 2022, 19, 6518305.

- Yang, G.; Li, B.; Ji, S.; Gao, F.; Xu, Q. Ship Detection from Optical Satellite Images Based on Sea Surface Analysis. IEEE Geosci. Remote Sens. Lett. 2014, 11, 641–645.

- Li, T.; Liu, Z.; Xie, R.; Ran, L. An Improved Superpixel-Level CFAR Detection Method for Ship Targets in High-Resolution SAR Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 184–194.

- Liu, Y.; Yao, L.; Xiong, W.; Zhou, Z. GF-4 Satellite and Automatic Identification System Data Fusion for Ship Tracking. IEEE Geosci. Remote Sens. Lett. 2019, 16, 281–285.

- Lin, L.; Wang, S.; Tang, Z. Using Deep Learning to Detect Small Targets in Infrared Oversampling Images. J. Syst. Eng. Electron. 2018, 29, 71–76.

- Li, Q.; Mou, L.; Liu, Q.; Wang, Y.; Zhu, X. HSF-Net: Multiscale Deep Feature Embedding for Ship Detection in Optical Remote Sensing Imagery. IEEE Trans. Geosci. Remote Sens. 2018, 56, 7147–7161.

- Wang, N.; Li, B.; Xu, Q.; Wang, Y. Automatic Ship Detection in Optical Remote Sensing Images Based on Anomaly Detection and SPP-PCANet. Remote Sens. 2019, 11, 47.

- Wu, J.; Pan, Z.; Lei, B.; Hu, Y. LR-TSDet: Towards Tiny Ship Detection in Low-Resolution Remote Sensing Images. Remote Sens. 2021, 13, 3890.

- Cheng, M.M.; Mitra, N.J.; Huang, X.; Torr, P.H.; Hu, S.M. Global Contrast Based Salient Region Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 37, 569–582.

- Wei, Y.; You, X.; Li, H. Multiscale Patch-based Contrast Measure for Small Infrared Target Detection. Pattern Recognit. 2016, 58, 216–226.

- Bai, X.; Bi, Y. Derivative Entropy-Based Contrast Measure for Infrared Small-Target Detection. IEEE Trans. Geosci. Remote Sens. 2018, 56, 2452–2466.

- Li, H.C.; Chen, L.; Li, F.; Huang, M.Y. Ship Detection and Tracking Method for Satellite Video Based on Multiscale Saliency and Surrounding Contrast Analysis. J. Appl. Remote Sens. 2019, 13, 026511.

- Li, C.; Luo, B.; Hong, H.; Su, X.; Wang, Y.; Liu, J.; Wang, C.; Zhang, J.; Wei, L. Object Detection Based on Global-Local Saliency Constraint in Aerial Images. Remote Sens. 2020, 12, 1435.

- Dong, L.; Wang, B.; Zhao, M.; Xu, W. Robust Infrared Maritime Target Detection Based on Visual Attention and Spatiotemporal Filtering. IEEE Trans. Geosci. Remote Sens. 2017, 55, 3037–3050.

- Du, P.; Hamdulla, A. Infrared Small Target Detection Using Homogeneity-Weighted Local Contrast Measure. IEEE Geosci. Remote Sens. Lett. 2020, 17, 514–518.

- Mazzarella, F.; Vespe, M.; Santamaria, C. SAR Ship Detection and Self-reporting Data Fusion Based on Traffic Knowledge. IEEE Geosci. Remote Sens. Lett. 2015, 12, 1685–1689.

- Granstrom, K.; Lundquist, C.; Orguner, O. Extended Target Tracking Using a Gaussian-Mixture PHD Filter. IEEE Trans. Aerosp. Electron. Syst. 2012, 48, 3268–3286.

- Fortmann, T.; Bar-Shalom, Y.; Scheffe, M. Sonar Tracking of Multiple Targets Using Joint Probabilistic Data Association. IEEE J. Ocean. Eng. 1983, 8, 173–184.

- Reid, D. An Algorithm for Tracking Multiple Targets. IEEE Trans. Autom. Control 1979, 24, 843–854.

- Ren, X.; Huang, Z.; Sun, S.; Liu, D.; Wu, J. An Efficient MHT Implementation Using GRASP. IEEE Trans. Aerosp. Electron. Syst. 2014, 50, 86–101.

- Sheng, H.; Chen, J.; Zhang, Y.; Ke, W.; Xiong, Z.; Yu, J. Iterative Multiple Hypothesis Tracking with Tracklet-level Association. IEEE Trans. Circuits Syst. Video Technol. 2019, 29, 3660–3672.

- Xiao, F.; Yuan, F.; Cheng, E. Detection and Tracking Method of Maritime Moving Targets Based on Geosynchronous Orbit Satellite Optical Images. Electronics 2020, 9, 1092.

- Lukezic, A.; Vojir, T.; Cehovin Zajc, L.; Matas, J.; Kristan, M. Discriminative Correlation Filter with Channel and Spatial Reliability. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6309–6318.

- Yang, T.; Zhou, S.; Xu, A.; Yin, J. A Method for Tree Image Segmentation Combined Adaptive Mean Shifting with Image Abstraction. J. Inf. Process. Syst. 2020, 16, 1424–1436.

- Hong, Y.; Yi, J.; Zhao, D. Improved Mean Shift Segmentation Approach for Natural Images. Appl. Math. Comput. 2007, 185, 940–952.

- Zhao, X.; Hu, X.; Liao, Y.; He, T.; Zhang, T.; Zou, X.; Tian, J. Accurate MR Image Super-resolution via Lightweight Lateral Inhibition Network. Comput. Vis. Image Underst. 2020, 201, 103075.

| Region | Frame / Method | Detection N_TP | Detection N_FP | Detection N_FN | Detection Precision (%) | Detection Recall (%) | Tracking N_TP | Tracking N_FP | Tracking N_FN | Tracking Precision (%) | Tracking Recall (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| ROI1 | 1 | 82 | 10 | 5 | 89.13 | 94.25 | 83 | 1 | 3 | 98.81 | 96.51 |
| ROI1 | 2 | 85 | 12 | 3 | 87.63 | 96.59 | | | | | |
| ROI1 | 3 | 84 | 9 | 3 | 90.32 | 96.55 | | | | | |
| ROI1 | 4 | 83 | 15 | 3 | 84.69 | 96.51 | | | | | |
| ROI1 | 5 | 82 | 9 | 4 | 90.11 | 95.35 | | | | | |
| ROI2 | 1 | 37 | 4 | 2 | 90.24 | 94.87 | 37 | 0 | 2 | 100.00 | 94.87 |
| ROI2 | 2 | 37 | 8 | 2 | 82.22 | 94.87 | | | | | |
| ROI2 | 3 | 36 | 15 | 3 | 70.59 | 92.31 | | | | | |
| ROI2 | 4 | 38 | 10 | 2 | 79.17 | 95.00 | | | | | |
| ROI2 | 5 | 35 | 7 | 4 | 83.33 | 89.74 | | | | | |
| Total Performance | Our Method | 599 | 99 | 31 | 85.82 | 95.08 | 120 | 1 | 5 | 99.17 | 96.00 |
| Total Performance | Liu et al. [6] | - | - | - | 37.68 | 81.36 | - | - | - | 91.56 | 79.66 |
| Total Performance | Yao et al. [5] | - | - | - | 47.60 | 89.00 | - | - | - | 98.50 | 87.40 |
| Total Performance | Yu et al. [3] | - | - | - | 70.70 | 94.80 | - | - | - | 98.20 | 93.10 |
| Total Performance | Wang et al. [7] | - | - | - | 78.60 | 93.20 | - | - | - | 95.70 | 94.90 |

Tracking values are reported once per region and are listed on the Frame 1 row. A dash marks a value that is not reported.
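The percentages in the table are consistent with the standard definitions Precision = N_TP / (N_TP + N_FP) and Recall = N_TP / (N_TP + N_FN). The following is a minimal sketch, assuming those definitions, that pools the per-frame detection counts and recomputes the "Our Method" totals. The names `precision_recall` and `pooled_counts` are illustrative and do not come from the paper.

```python
def precision_recall(n_tp, n_fp, n_fn):
    """Return (precision, recall) in percent from raw counts.

    Assumes the standard definitions; the paper's own formulas are not
    shown in this section.
    """
    precision = 100.0 * n_tp / (n_tp + n_fp)
    recall = 100.0 * n_tp / (n_tp + n_fn)
    return precision, recall


def pooled_counts(rows):
    """Sum (N_TP, N_FP, N_FN) tuples column by column."""
    return tuple(sum(col) for col in zip(*rows))


# Per-frame detection counts (N_TP, N_FP, N_FN) copied from the table.
ROI1 = [(82, 10, 5), (85, 12, 3), (84, 9, 3), (83, 15, 3), (82, 9, 4)]
ROI2 = [(37, 4, 2), (37, 8, 2), (36, 15, 3), (38, 10, 2), (35, 7, 4)]

total = pooled_counts(ROI1 + ROI2)
p, r = precision_recall(*total)
print(total, round(p, 2), round(r, 2))  # (599, 99, 31) 85.82 95.08
```

Pooling the counts before dividing (rather than averaging the per-frame percentages) is what reproduces the totals row, so frames with more targets carry proportionally more weight.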

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Meng, F.; Zhao, G.; Zhang, G.; Li, Z.; Ding, K. Visual Detection and Association Tracking of Dim Small Ship Targets from Optical Image Sequences of Geostationary Satellite Using Multispectral Radiation Characteristics. Remote Sens. 2023, 15, 2069. https://doi.org/10.3390/rs15082069
