Uncertainty Modelling of Laser Scanning Point Clouds Using Machine-Learning Methods

Abstract

:1. Introduction

1.1. Contribution

1.2. Outline

2. Data Preparation

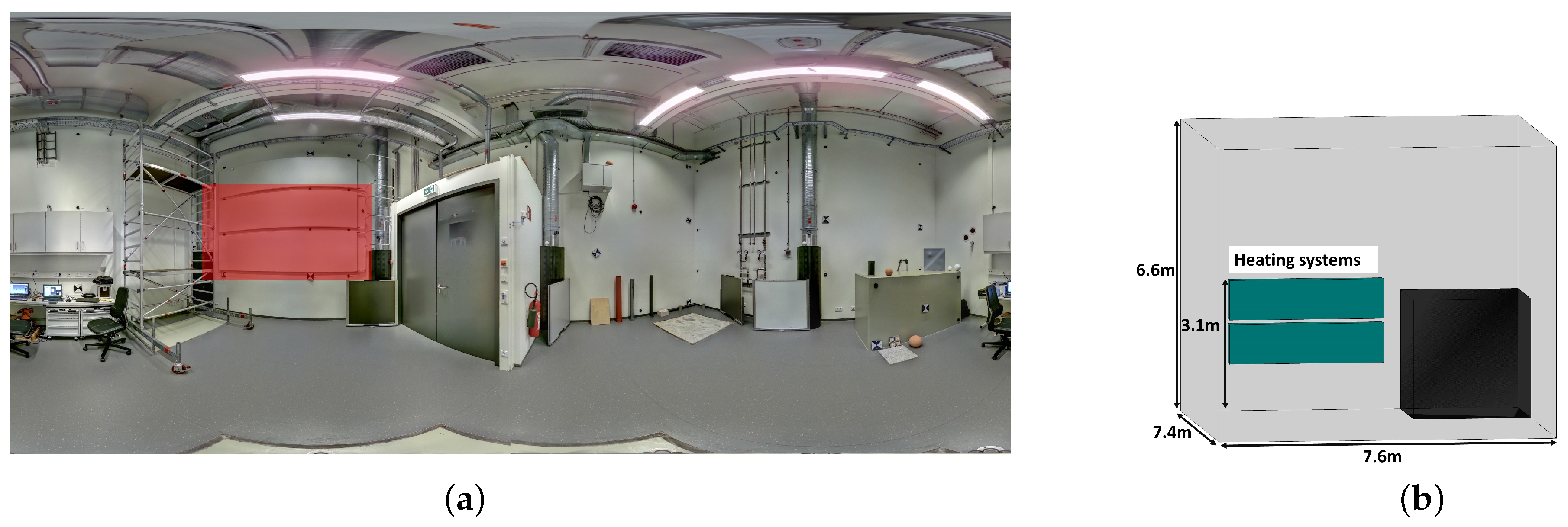

2.1. Environment

2.2. Sensors

2.2.1. Reference: Leica AT960 and Leica LAS XL

2.2.2. Z+F Imager 5016

2.3. Data Acquisition and Registration

- 1.

- The reference point cloud, as shown in Figure 3, was acquired using a combination of the Leica AT 960 laser tracker and the handheld Leica LAS-XL scanner. The handheld scanner was moved both horizontally and vertically to achieve high point density. Additionally, 28 targets were measured with the laser tracker, in combination with corner cube reflectors placed on target mounts, to assist with the registration of the TLS point cloud.

- 2.

- To capture variations in distance and angle of impact, 49 scans were recorded with the Z+F Imager 5016 at a constant quality level (Quality+). The viewpoints for the scans were distributed throughout the entire room, as illustrated in Figure 4a. Furthermore, TLS targets were attached to the target mounts.

2.4. Comparison of TLS and Reference Point Clouds

- Meshing of the point cloud.A mesh is created from the reference point cloud using Poisson surface reconstruction [29]. This approach is preferred over other methods, such as ball pivoting, alpha shapes, and marching cubes, because it produces better results for closed geometries and ensures the topological correctness. Additionally, Poisson surface reconstruction is more robust to sensor noise compared to other methods [30]. As a result, the noise is effectively filtered out and a smooth surface is generated, which accurately represents the geometry. Furthermore, the high point density of the reference point cloud, obtained through multiple measurements, further improves the accuracy of the reconstructed geometry.

- Raycasting. Raycasting is used to determine the intersection point with the reference mesh for each TLS point, which is achieved by employing the Open3D library in Python [31] (Figure 5b).

- 1.

- If an intersection exists, calculate the distance between the intersection point and TLS sensor , as well as the distance between the TLS sensor and TLS point .

- 2.

- Calculate the distance residuals as .

- 3.

- Save the following information: (1) the object ID, indicating to which object the TLS point belongs, and (2) the distance residuals.

2.5. Feature Engineering

- Intensity: The intensity of the reflected laser beam provides information about the reflectivity of the object and the scan geometry (distance and angle of impact). The raw intensity values can range from 0 to 5 mio. increments for the Z+F Imager 5016. For the regression, the raw intensity values are scaled between 0 and 1 using the formula:

- Distance: As the distance increases, the spot size increases, affecting the distance measurement. Distance calculations are performed between TLS points and TLS viewpoint.

- Angle of impact (): The angle between the direction vector from the sensor to the point and the point’s surface affects the spot size and distance measurement [12]. The angle of impact is calculated in Equation (3) using the point’s normal vector N and is determined through principle component analysis:

- Spot size (major axis): Depending on the angle of impact, the emitted round laser spot deforms into an ellipse. The spot size’s major axis of the ellipse depends on the divergence angle , the spot size at the beginning , and the distance d [32]. It can be calculated using the following formula:

- Curvature: The curvature provides information about the object’s shape and is calculated from the eigenvalues resulting from the principal component analysis of the k-nearest neighbourhood of a point [33]. With and , the curvature can be calculated as follows:

3. Uncertainty Modelling

3.1. Data Analysis

3.2. Regression

3.3. Multiple Linear Regression

3.4. Multiple Nonlinear Regression

3.5. XGBoost Regressor

Hyperparameter

- Max_depth: The max_depth parameter determines the depth limit of the trees within a model. Increasing the max_depth value creates more intricate models. Search space = [2–8].

- N_estimators: The number of trees within a model is controlled by the n_estimators parameter. Raising this value generally enhances the performance of the model, but it can also increase the risk of overfitting. Search space = [20–150].

- eta: The learning rate governs the size of the optimizer’s steps when updating weights in the model. A smaller eta value results in more precise but slower updates, whereas a larger eta value leads to quicker but less precise updates. Search space = [0–1].

- reg_lambda: The lambda parameter, also known as the L2 regularization term on weights, regulates the weight values in the model by adding a penalty term to the loss function. Search space = [0–20].

- alpha: The alpha parameter is responsible for the L1 regularization term on the weight values of the model by adding a penalty term to the loss function. Search space = [0–20].

- subsample: The subsample parameter determines the proportion of observations used for each tree in the model. Search space = [0–1].

- colsample_by_tree: The colsample_by_tree parameter regulates the fraction of features used for constructing each tree in the model. Setting a lower colsample_by_tree value results in smaller and less intricate models that can assist in avoiding overfitting. Search space = [0–1].

- min_child_weight: The min_child_weight parameter specifies the minimum sum of instance weights needed in a node before the node will be split. Setting a larger min_child_weight value results in a more conservative tree as it requires more samples to consider splitting a node. Search space = [0–20].

3.6. Training of the Models Using Cross Validation

- Input point cloud: This consisted of 49 TLS scans from two different objects (two heating systems), resulting in 98 independent objects.

- Validation data (1): A validation data set was created by randomly selecting objects from the 98 scanned objects until its size was greater than 20% of the entire input point cloud. The validation data set was independent from the training data set as no temporal correlation was present.

- Training data (2) and Test data (3): The remaining point cloud was randomly split into training (80%) and test (20%) data. The test data set was not independent from the training data set as correlations existed due to data from the same scans.

4. Analysis of Regression Results

4.1. Feature Importance

Quality of the Regression

4.2. Distance Calibration Using XGBoost Regressor

4.3. Real Case Application

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| TLS | terrestrial laser scanner |

| ML | machine learning |

| GUM | Guide to the Expression of Uncertainty in Measurement |

| RMSE | root-mean-square error |

| CART | classification and regression trees |

References

- Joint Committee for Guides in Metrology. Evaluation of Measurement Data—Guide to the Expression of Uncertainty in Measurement. 2008. Available online: https://www.iso.org/sites/JCGM/GUM-JCGM100.htm (accessed on 9 March 2023).

- Alkhatib, H.; Neumann, I.; Kutterer, H. Uncertainty modeling of random and systematic errors by means of Monte Carlo and fuzzy techniques. J. Appl. Geod. 2009, 3, 67–79. [Google Scholar] [CrossRef]

- Alkhatib, H.; Kutterer, H. Estimation of Measurement Uncertainty of kinematic TLS Observation Process by means of Monte-Carlo Methods. J. Appl. Geod. 2013, 7, 125–134. [Google Scholar] [CrossRef]

- Neitzel, F. Untersuchung des Achssystems und des Taumelfehlers terrestrischer Laserscanner mit tachymetrischem Messprinzip. In Terrestrisches Laser-Scanning (TLS 2006), Schriftenreihe des DVW, Band 51; Wißner-Verlag: Augsburg, Germany, 2006; pp. 15–34. [Google Scholar]

- Neitzel, F. Gemeinsame Bestimmung von Ziel-, Kippachsenfehler und Exzentrizität der Zielachse am Beispiel des Laserscanners Zoller+ Fröhlich Imager 5003. In Photogrammetrie-Laserscanning-Optische 3D-Messtechnik, Beiträge der Oldenburger 3D-Tage; Herbert Wichmann Verlag: Heidelberg, Germany, 2006; pp. 174–183. [Google Scholar]

- Holst, C.; Kuhlmann, H. Challenges and present fields of action at laser scanner based deformation analyses. J. Appl. Geod. 2016, 2016, 17–25. [Google Scholar] [CrossRef]

- Medić, T.; Holst, C.; Janßen, J.; Kuhlmann, H. Empirical stochastic model of detected target centroids: Influence on registration and calibration of terrestrial laser scanners. J. Appl. Geod. 2019, 13, 179–197. [Google Scholar] [CrossRef]

- Medić, T.; Holst, C.; Kuhlmann, H. Optimizing the Target-based Calibration Procedure of Terrestrial Laser Scanners. In Allgemeine Vermessungs-Nachrichten: AVN; Zeitschrift für alle Bereiche der Geodäsie und Geoinformation; VDE Verlag: Berlin, Germany, 2020; pp. 27–36. [Google Scholar]

- Muralikrishnan, B.; Ferrucci, M.; Sawyer, D.; Gerner, G.; Lee, V.; Blackburn, C.; Phillips, S.; Petrov, P.; Yakovlev, Y.; Astrelin, A.; et al. Volumetric performance evaluation of a laser scanner based on geometric error model. Precis. Eng.-J. Int. Soc. Precis. Eng. Nanotechnol. 2015, 40, 139–150. [Google Scholar] [CrossRef]

- Gordon, B. Zur Bestimmung von Messunsicherheiten Terrestrischer Laserscanner. Ph.D. Thesis, Technische Universität Darmstadt, Darmstadt, Germany, 2008. [Google Scholar]

- Juretzko, M. Reflektorlose Video-Tachymetrie: Ein Integrales Verfahren zur Erfassung Geometrischer und Visueller Informationen. Ph.D. Thesis, Ruhr-Universität Bochum, Bochum, Germany, 2004. [Google Scholar]

- Soudarissanane, S.; Lindenbergh, R.; Menenti, M.; Teunissen, P. Scanning geometry: Influencing factor on the quality of terrestrial laser scanning points. ISPRS J. Photogramm. Remote Sens. 2011, 66, 389–399. [Google Scholar] [CrossRef]

- Zámevcníková, M. Towards the Influence of the Angle of Incidence and the Surface Roughness on Distances in Terrestrial Laser Scanning. In FIG Working Week 2017; FIG: Helsinki, Finland, 2017. [Google Scholar]

- Linzer, F.; Papčová, M.; Neuner, H. Quantification of Systematic Distance Deviations for Scanning Total Stations Using Robotic Applications. In Contributions to International Conferences on Engineering Surveying; Kopáčik, A., Kyrinovič, P., Erdélyi, J., Paar, R., Marendić, A., Eds.; Springer Proceedings in Earth and Environmental Sciences; Springer: Cham, Switzerland, 2021; pp. 98–108. [Google Scholar] [CrossRef]

- Wujanz, D.; Burger, M.; Mettenleiter, M.; Neitzel, F. An intensity-based stochastic model for terrestrial laser scanners. ISPRS J. Photogramm. Remote Sens. 2017, 125, 146–155. [Google Scholar] [CrossRef]

- Kauker, S.; Schwieger, V. A synthetic covariance matrix for monitoring by terrestrial laser scanning. J. Appl. Geod. 2017, 11, 77–87. [Google Scholar] [CrossRef]

- Zhao, X.; Kermarrec, G.; Kargoll, B.; Alkhatib, H.; Neumann, I. Influence of the simplified stochastic model of TLS measurements on geometry-based deformation analysis. J. Appl. Geod. 2019, 13, 199–214. [Google Scholar] [CrossRef]

- Schmitz, B.; Holst, C.; Medic, T.; Lichti, D.D.; Kuhlmann, H. How to Efficiently Determine the Range Precision of 3D Terrestrial Laser Scanners. Sensors 2019, 19, 1466. [Google Scholar] [CrossRef] [PubMed]

- Kermarrec, G.; Alkhatib, H.; Neumann, I. On the Sensitivity of the Parameters of the Intensity-Based Stochastic Model for Terrestrial Laser Scanner. Case Study: B-Spline Approximation. Sensors 2018, 18, 2964. [Google Scholar] [CrossRef] [PubMed]

- Stenz, U.; Hartmann, J.; Paffenholz, J.A.; Neumann, I. High-Precision 3D Object Capturing with Static and Kinematic Terrestrial Laser Scanning in Industrial Applications—Approaches of Quality Assessment. Remote Sens. 2020, 12, 290. [Google Scholar] [CrossRef]

- Stenz, U.; Hartmann, J.; Paffenholz, J.A.; Neumann, I. A Framework Based on Reference Data with Superordinate Accuracy for the Quality Analysis of Terrestrial Laser Scanning-Based Multi-Sensor-Systems. Sensors 2017, 17, 1886. [Google Scholar] [CrossRef] [PubMed]

- Hastie, T.J.; Friedman, J.H.; Tibshirani, R. The Elements of Statistical Learning: Data Mining, Inference, and Prediction, 2nd ed.; Springer: New York, NY, USA, 2017. [Google Scholar]

- Hartmann, J.; Heiken, M.; Alkhatib, H.; Neumann, I. Automatic quality assessment of terrestrial laser scans. J. Appl. Geod. 2023. [Google Scholar] [CrossRef]

- Urbas, U.; Vlah, D.; Vukašinović, N. Machine learning method for predicting the influence of scanning parameters on random measurement error. Meas. Sci. Technol. 2021, 32, 065201. [Google Scholar] [CrossRef]

- Hexagon Manufacturing Intelligence. Leica Absolute Tracker AT960 Datasheet 2023. Available online: https://hexagon.com/de/products/leica-absolute-tracker-at960?accordId=E4BF01077B2743729F2C0E768C0BC7AB (accessed on 17 March 2023).

- Hexagon Manufacturing Intelligence. Leica-Laser Tracker Systems. 2023. Available online: https://www.hexagonmi.com/de-de/products/laser-tracker-systems (accessed on 9 March 2023).

- Zoller + Fröhlich GmbH. Z+F IMAGER® Z+F IMAGER 5016: Data Sheet. 2022. Available online: https://scandric.de/wp-content/uploads/ZF-IMAGER-5016_Datenblatt-D_kompr.pdf (accessed on 9 March 2023).

- technet GmbH. Scantra, Version 3.0.1. 2023. Available online: https://www.technet-gmbh.com/produkte/scantra/ (accessed on 9 March 2023).

- Kazhdan, M.; Chuang, M.; Rusinkiewicz, S.; Hoppe, H. Poisson Surface Reconstruction with Envelope Constraints. Comput. Graph. Forum 2020, 39, 173–182. [Google Scholar] [CrossRef]

- Wiemann, T.; Annuth, H.; Lingemann, K.; Hertzberg, J. An Extended Evaluation of Open Source Surface Reconstruction Software for Robotic Applications. J. Intell. Robot. Syst. 2015, 77, 149–170. [Google Scholar] [CrossRef]

- Zhou, Q.Y.; Park, J.; Koltun, V. Open3D: A Modern Library for 3D Data Processing. arXiv 2018, arXiv:1801.09847. [Google Scholar]

- Sheng, Y. Quantifying the Size of a Lidar Footprint: A Set of Generalized Equations. IEEE Geosci. Remote Sens. Lett. 2008, 5, 419–422. [Google Scholar] [CrossRef]

- Hackel, T.; Wegner, J.; Schindler, K. Contour Detection in Unstructured 3D Point Clouds. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2016), Las Vegas, NV, USA, 27–30 June 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1610–1618. [Google Scholar] [CrossRef]

- Koch, K.R. Parameterschätzung und Hypothesentests in Linearen Modellen; Dümmler: Bonn, Germany, 1997; Volume 7892. [Google Scholar]

- Chen, T.; Guestrin, C. XGBoost. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; Krishnapuram, B., Shah, M., Smola, A., Aggarwal, C., Shen, D., Rastogi, R., Eds.; ACM: New York, NY, USA, 2016; pp. 785–794. [Google Scholar] [CrossRef]

- Xgboost developers. XGboost Parameter Documentation. 2023. Available online: https://xgboost.readthedocs.io/en/stable/parameter.html (accessed on 9 March 2023).

- Bergstra, J.; Yamins, D.; Cox, D. Making a Science of Model Search: Hyperparameter Optimization in Hundreds of Dimensions for Vision Architectures. In Proceedings of the International Conference on Machine Learning, Atlanta, GA, USA, 16–21 June 2013; pp. 115–123. [Google Scholar]

| Sensor | Specifications |

|---|---|

| Leica AT960 LR | Angle accuracy: ±15 m + 6 m/m (Maximum permissible error) |

| Range accuracy: ±0.5 m/m (Maximum permissible error) | |

| Measurement range (6 DoF): 40 m | |

| Leica LAS XL | Measurement uncertainty plane surface: ±0.225 mm (one standard deviation) |

| Measurement range: 30 cm | |

| Data acquisition rate: 143,000 pts/s | |

| Z+F Imager 5016 | Vertical accuracy: mm/m (one standard deviation) |

| Horizontal accuracy: mm/m (one standard deviation) | |

| Range noise: 0.16–0.2 mm (one standard deviation) | |

| Measurement range: 0.3–365 m | |

| Data acquisition rate: 1 mio. pts/s | |

| Vertical resolution: | |

| Horiontal resolution: |

| Variable | Feature | Unit | Min | Max | Mean | std |

|---|---|---|---|---|---|---|

| Intensity | - | 0.0131 | 1.019 | 0.2933 | 0.1044 | |

| Angle of impact | rad | 0.174 | 1.570 | 0.952 | 0.323 | |

| Distance | m | 0.5516 | 8.2893 | 2.0918 | 1.6516 | |

| Spot size | m | 0.0035 | 0.0102 | 0.0038 | 0.0003 | |

| Curvature | - | 0.00000 | 0.00086 | 0.00033 | 0.00018 | |

| y | Distance residuals | m | −0.0198 | 0.0195 | 0.00056 | 0.00073 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hartmann, J.; Alkhatib, H. Uncertainty Modelling of Laser Scanning Point Clouds Using Machine-Learning Methods. Remote Sens. 2023, 15, 2349. https://doi.org/10.3390/rs15092349

Hartmann J, Alkhatib H. Uncertainty Modelling of Laser Scanning Point Clouds Using Machine-Learning Methods. Remote Sensing. 2023; 15(9):2349. https://doi.org/10.3390/rs15092349

Chicago/Turabian StyleHartmann, Jan, and Hamza Alkhatib. 2023. "Uncertainty Modelling of Laser Scanning Point Clouds Using Machine-Learning Methods" Remote Sensing 15, no. 9: 2349. https://doi.org/10.3390/rs15092349