Improving Land Use and Land Cover Information of Wunbaik Mangrove Area in Myanmar Using U-Net Model with Multisource Remote Sensing Datasets

Abstract

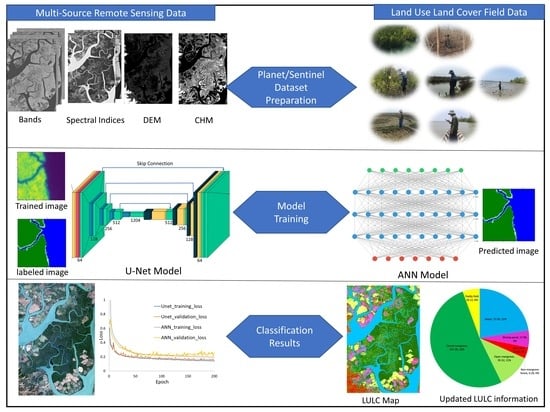

1. Introduction

2. Materials and Methods

2.1. Study Area

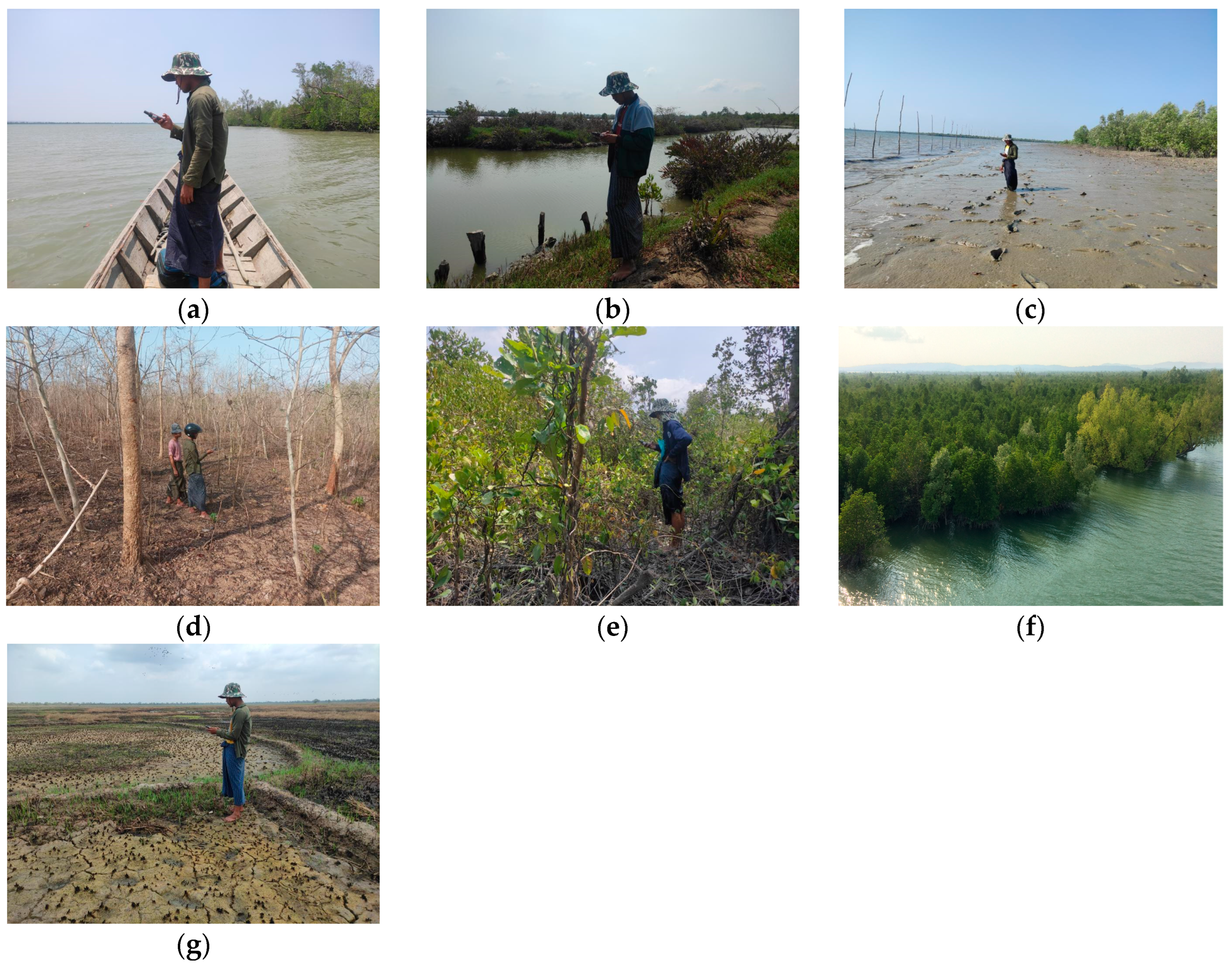

2.2. Ground Truth Data Collection and Creating a Labeled Image

2.3. Earth Observation Data

2.3.1. Satellite Band and Spectral Indices

2.3.2. Topographic and Canopy Height Information

2.4. Deep Learning Models for LULC Classification

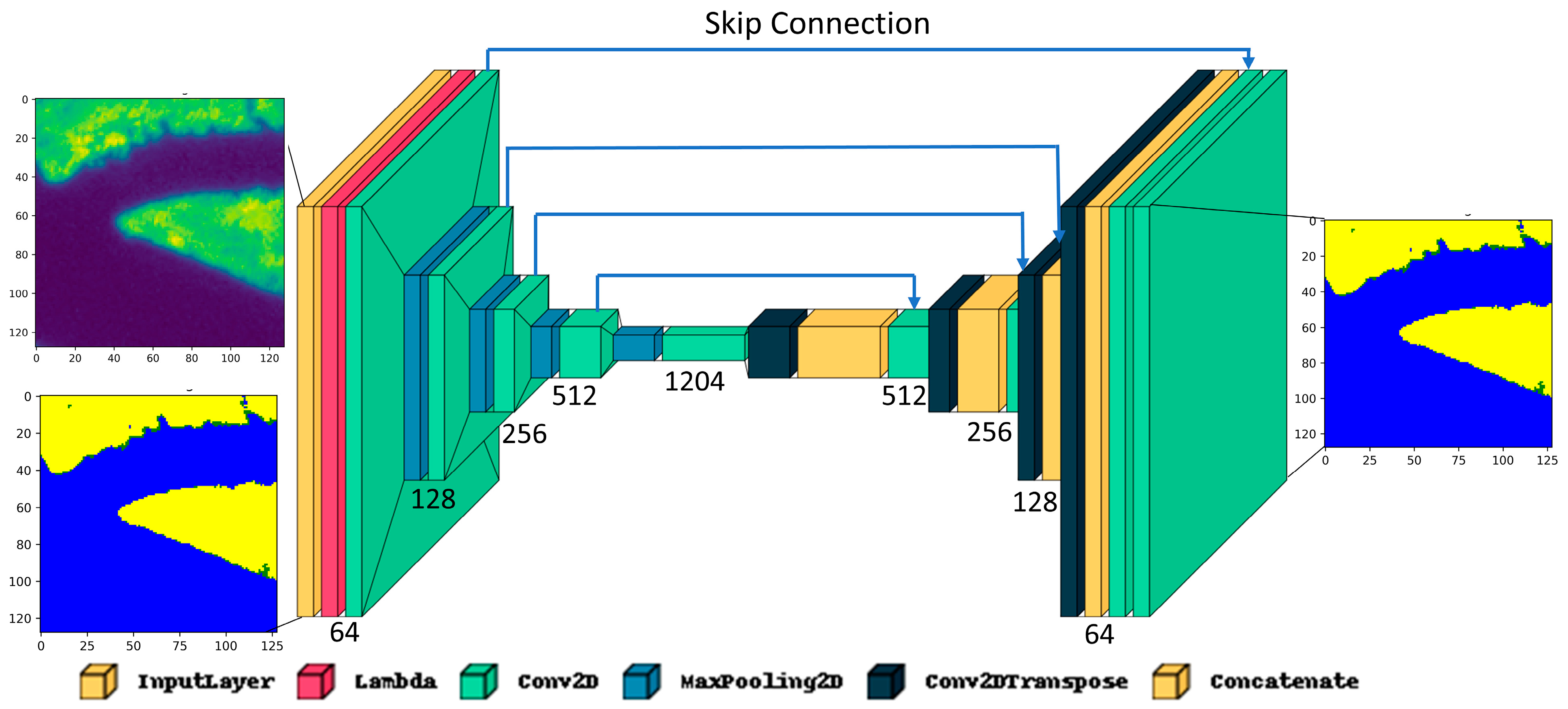

2.4.1. U-Net Model

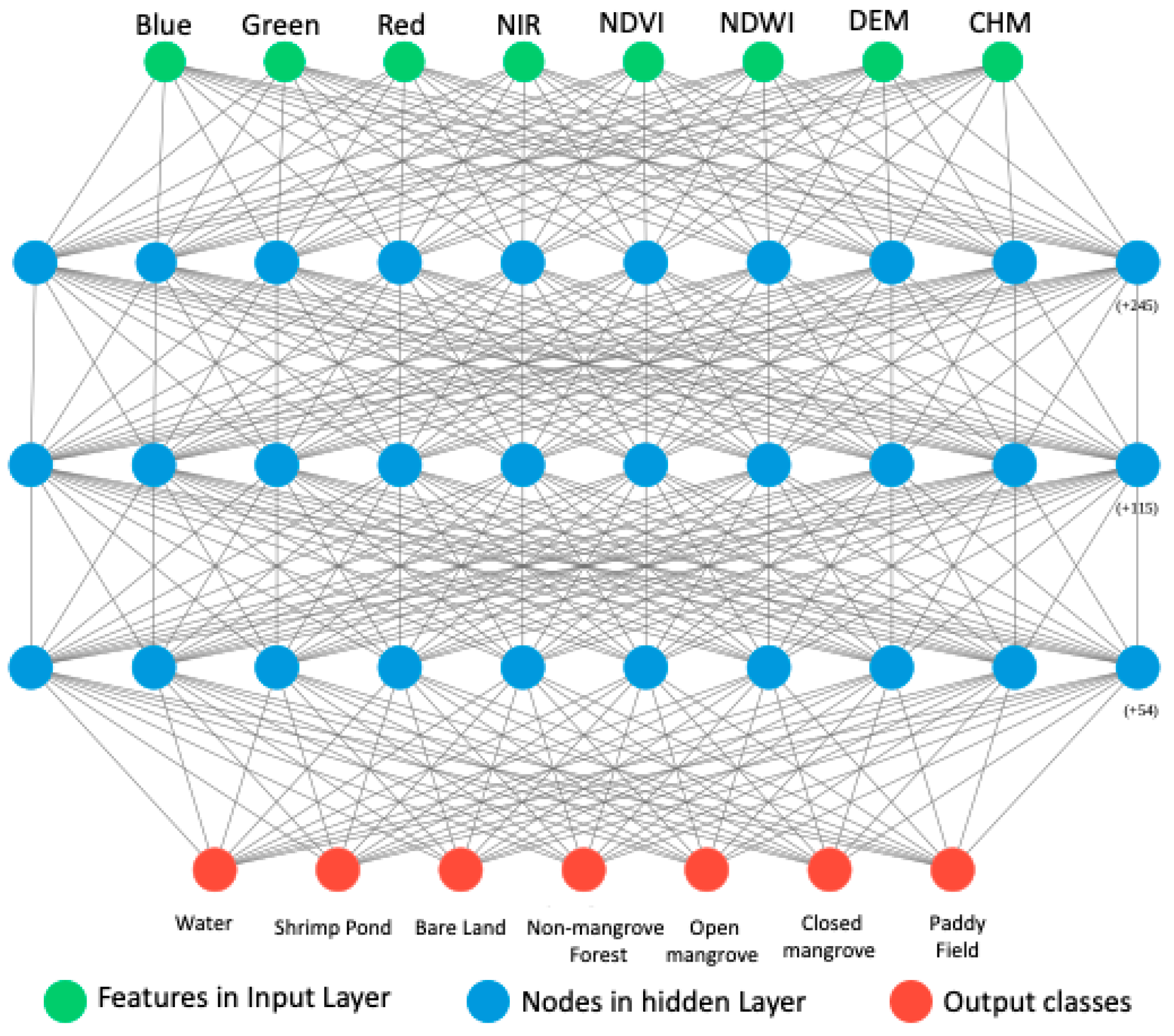

2.4.2. ANN Model

2.4.3. Assessment of Model Performance

2.5. LULC Classification Workflow

3. Results

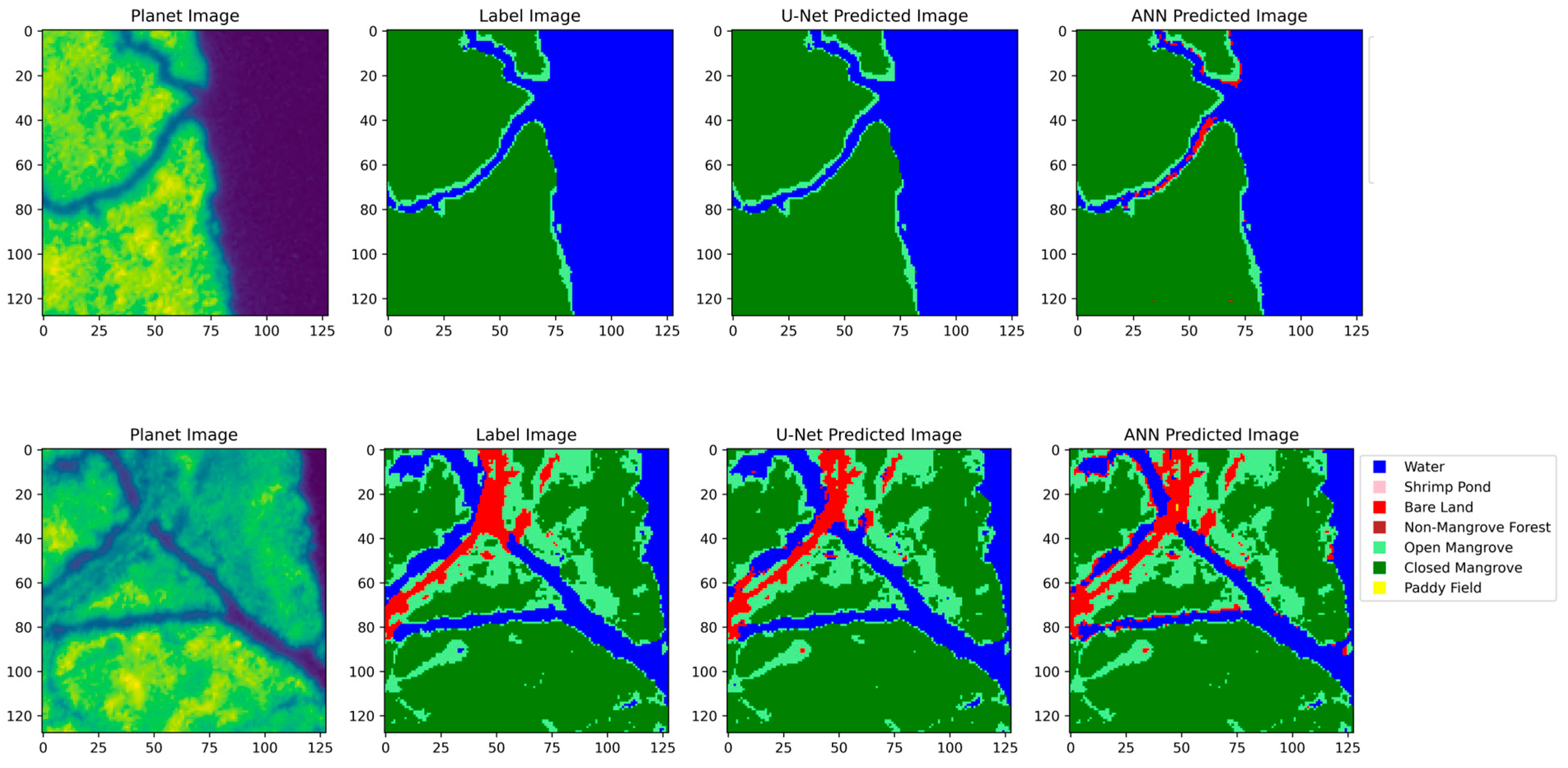

3.1. Model Performance with PlanetScope Imagery

3.2. Model Performance with Sentinel-2 Imagery

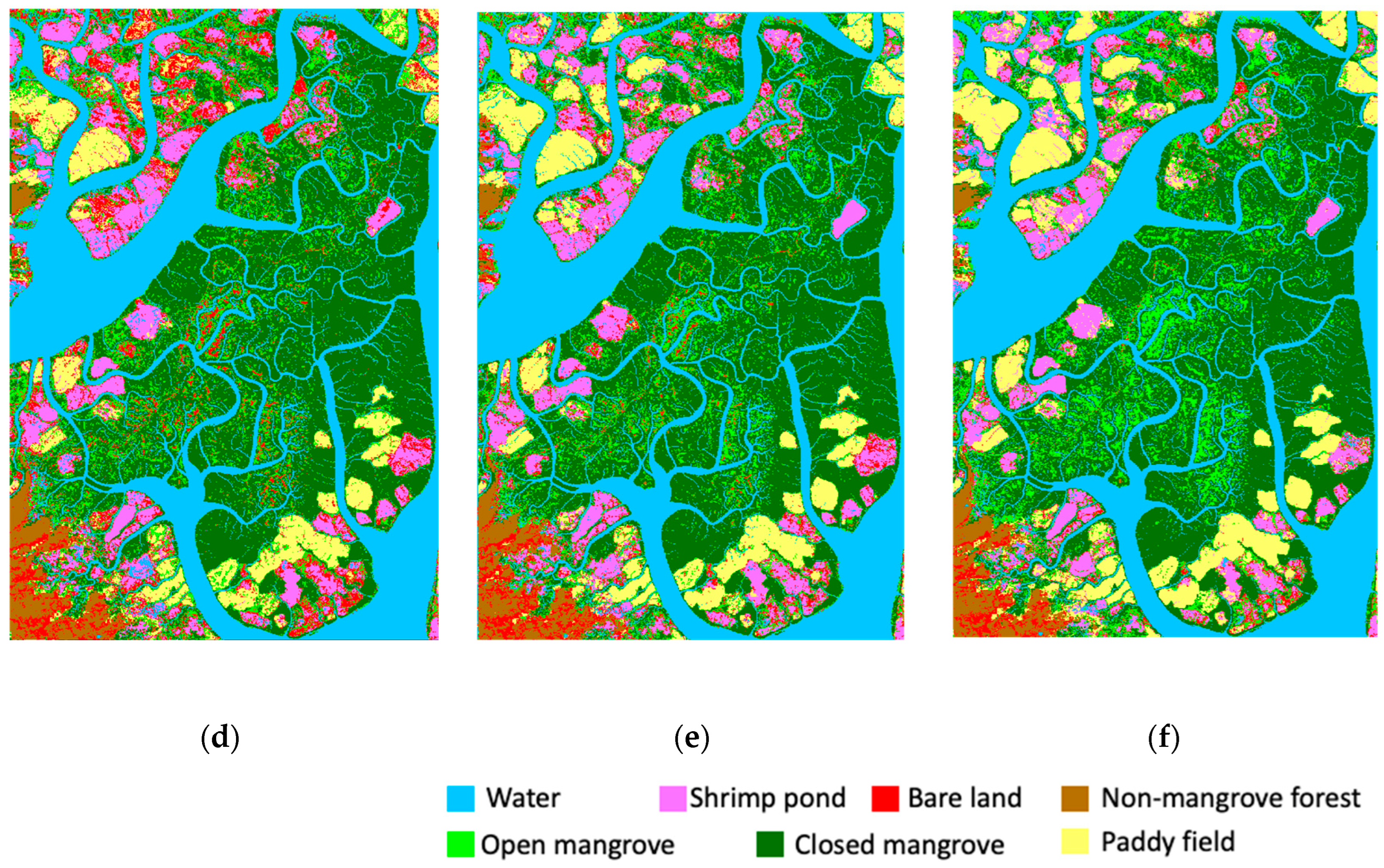

3.3. LULC Classification Map for the Whole Study Area

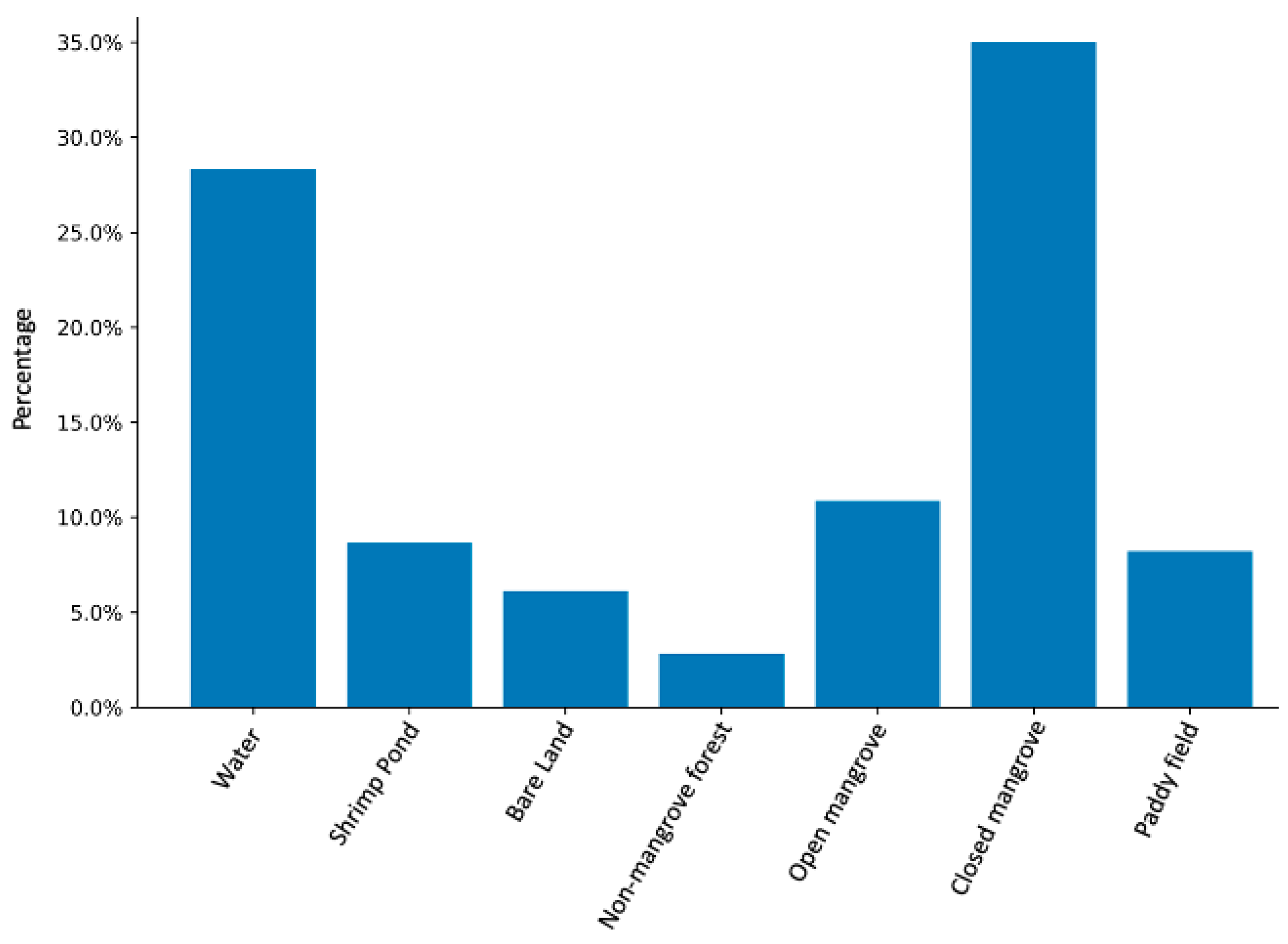

3.4. Estimating LULC Area for Wunbaik Mangrove Forest Management

4. Discussion

4.1. Complexity of Land Use Compared to Land Cover

4.2. Integration of DEM and CHM

4.3. Model Performance and Labeled Data Requirement

4.4. Challenges and Opportunities of Sustainable Wunbaik Mangrove Management

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Annotation Code | Description of LULC Classes | Number of GPS Points |
|---|---|---|
| 0 | Water: areas mainly covered by marine water. | 20 |
| 1 | Shrimp pond: man-made ponds used for shrimp farming, characterized by the presence of water and often geometric shapes. | 31 |
| 2 | Bare land: unvegetated areas such as tidal flats in mangrove areas or exposed soil in terrestrial areas. | 22 |
| 3 | Non-mangrove forest: forested areas consisting of tree cover that is not dominated by mangrove species. | 25 |
| 4 | Open mangrove: mangrove areas with a relatively sparse canopy, allowing more sunlight to reach the ground. | 38 |
| 5 | Closed mangrove: dense mangrove areas with a thick canopy, where mangrove trees form a dense cover. | 58 |
| 6 | Paddy field: agricultural fields used for cultivating rice, typically similar in shape to shrimp ponds. | 30 |
| Total | | 224 |

| Satellite Imagery | Acquisition Date | Cloud Coverage (%) | Bands Used | Spatial Resolution (m) | Processing Level |
|---|---|---|---|---|---|
| PlanetScope | 21 January 2023 | 0 | RGB, NIR | 3 | - |
| Sentinel-2 | 14 February 2023 | 0 | RGB, NIR | 10 | Level-2A |

| LULC Class | IoU (U-Net) | IoU (ANN) | F1 Score (U-Net) | F1 Score (ANN) | Precision (U-Net) | Precision (ANN) | Recall (U-Net) | Recall (ANN) |
|---|---|---|---|---|---|---|---|---|
| Water | 0.97 | 0.96 | 0.97 | 0.97 | 0.97 | 0.98 | 0.97 | 0.96 |
| Shrimp pond | 0.82 | 0.72 | 0.84 | 0.79 | 0.85 | 0.88 | 0.82 | 0.72 |
| Bare land | 0.76 | 0.84 | 0.73 | 0.70 | 0.70 | 0.60 | 0.76 | 0.84 |
| Non-mangrove forest | 0.92 | 0.83 | 0.91 | 0.85 | 0.89 | 0.87 | 0.92 | 0.83 |
| Open mangrove | 0.91 | 0.95 | 0.93 | 0.94 | 0.95 | 0.94 | 0.91 | 0.94 |
| Closed mangrove | 0.99 | 0.99 | 0.99 | 0.99 | 0.99 | 0.98 | 0.99 | 0.98 |
| Paddy field | 0.92 | 0.89 | 0.92 | 0.90 | 0.92 | 0.92 | 0.92 | 0.92 |
| Average Score | 0.82 | 0.79 | 0.94 | 0.93 | 0.94 | 0.94 | 0.94 | 0.93 |
| Overall accuracy on testing dataset (%) | 94.05 (U-Net) | 92.82 (ANN) | | | | | | |

| LULC Class | IoU (U-Net) | IoU (ANN) | F1 Score (U-Net) | F1 Score (ANN) | Precision (U-Net) | Precision (ANN) | Recall (U-Net) | Recall (ANN) |
|---|---|---|---|---|---|---|---|---|
| Water | 0.94 | 0.89 | 0.94 | 0.91 | 0.94 | 0.94 | 0.94 | 0.89 |
| Shrimp pond | 0.83 | 0.91 | 0.82 | 0.77 | 0.81 | 0.67 | 0.83 | 0.91 |
| Bare land | 0.53 | 0.22 | 0.54 | 0.34 | 0.55 | 0.55 | 0.53 | 0.22 |
| Non-mangrove forest | 0.89 | 0.85 | 0.91 | 0.87 | 0.93 | 0.89 | 0.91 | 0.85 |
| Open mangrove | 0.60 | 0.43 | 0.64 | 0.49 | 0.68 | 0.57 | 0.64 | 0.43 |
| Closed mangrove | 0.96 | 0.94 | 0.93 | 0.89 | 0.91 | 0.84 | 0.93 | 0.94 |
| Paddy field | 0.92 | 0.91 | 0.92 | 0.91 | 0.92 | 0.91 | 0.92 | 0.91 |
| Average Score | 0.71 | 0.63 | 0.87 | 0.81 | 0.87 | 0.81 | 0.87 | 0.82 |
| Overall accuracy on testing dataset (%) | 86.94 (U-Net) | 82.08 (ANN) | | | | | | |
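The IoU, F1 score, precision, recall, and overall accuracy reported in the tables above are standard quantities that can be derived from a confusion matrix. The following is a minimal sketch of that calculation, not the authors' evaluation code. The function name `per_class_metrics` and the three-class toy matrix are hypothetical and serve only as an illustration.

```python
def per_class_metrics(confusion):
    """Derive per-class metrics from a square confusion matrix.

    confusion[i][j] is the number of pixels whose true class is i
    and whose predicted class is j (a layout assumed for this sketch).
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[k][k] for k in range(n))
    metrics = []
    for k in range(n):
        tp = confusion[k][k]                              # true positives
        fn = sum(confusion[k]) - tp                       # missed pixels of class k
        fp = sum(confusion[i][k] for i in range(n)) - tp  # pixels wrongly assigned to k
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
        metrics.append({"iou": iou, "f1": f1,
                        "precision": precision, "recall": recall})
    overall_accuracy = correct / total if total else 0.0
    return metrics, overall_accuracy


# Hypothetical toy matrix with three classes (not data from the study).
toy_confusion = [
    [50, 2, 0],
    [3, 40, 5],
    [0, 4, 46],
]
toy_metrics, toy_accuracy = per_class_metrics(toy_confusion)
for class_id, m in enumerate(toy_metrics):
    print(class_id, {name: round(value, 2) for name, value in m.items()})
print("overall accuracy (%):", round(100 * toy_accuracy, 2))
```

For a single class, IoU and F1 are linked by F1 = 2·IoU / (1 + IoU), so IoU is never larger than F1 for the same class. This gives a quick consistency check when reading per-class scores.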

| LULC Class | Whole Study Area: Area (km²) | Whole Study Area: Portion (%) | Wunbaik Reserved Mangrove Forest: Area (km²) | Wunbaik Reserved Mangrove Forest: Portion (%) |
|---|---|---|---|---|
| Water | 149.96 | 28.14 | 72.94 | 22.51 |
| Shrimp pond | 44.89 | 8.42 | 17.99 | 5.55 |
| Bare land | 32.98 | 6.19 | 11.84 | 3.65 |
| Non-mangrove forest | 15.15 | 2.84 | 0.29 | 0.09 |
| Open mangrove | 57.00 | 10.70 | 35.52 | 10.96 |
| Closed mangrove | 187.50 | 35.19 | 167.38 | 51.65 |
| Paddy field | 45.36 | 8.51 | 18.12 | 5.59 |
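The portion column in the table above is each class area divided by the summed area of all classes. The sketch below reproduces that arithmetic for the whole study area using the areas from the table. The helper `pixels_to_km2` shows one common way to turn a classified pixel count into an area, assuming square pixels of a known ground resolution. Both function names are hypothetical, and the pixel-count route is an assumption rather than a documented step of the study.

```python
def area_portions(areas_km2):
    """Return each class area as a percentage of the summed area."""
    total = sum(areas_km2.values())
    return {name: 100.0 * area / total for name, area in areas_km2.items()}


def pixels_to_km2(pixel_count, resolution_m):
    """Convert a pixel count to km², assuming square pixels (illustrative)."""
    return pixel_count * resolution_m ** 2 / 1_000_000


# Class areas for the whole study area, copied from the table above.
whole_study_area = {
    "Water": 149.96,
    "Shrimp pond": 44.89,
    "Bare land": 32.98,
    "Non-mangrove forest": 15.15,
    "Open mangrove": 57.00,
    "Closed mangrove": 187.50,
    "Paddy field": 45.36,
}

portions = area_portions(whole_study_area)
for name, portion in portions.items():
    print(f"{name}: {portion:.2f} %")
```

Under the same assumption, one million pixels at 10 m resolution cover `pixels_to_km2(1_000_000, 10.0)`, i.e. 100 km².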

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Maung, W.S.; Tsuyuki, S.; Guo, Z. Improving Land Use and Land Cover Information of Wunbaik Mangrove Area in Myanmar Using U-Net Model with Multisource Remote Sensing Datasets. Remote Sens. 2024, 16, 76. https://doi.org/10.3390/rs16010076
