High-Speed Spatial–Temporal Saliency Model: A Novel Detection Method for Infrared Small Moving Targets Based on a Vectorized Guided Filter

Abstract

1. Introduction

1.1. Related Works

1.2. Motivation

- (1)

- A novel high-speed spatial–temporal saliency model (HS-STSM) is proposed, which simultaneously extracts the temporal saliency of the target from the inter-frame information of IR image sequences and the local anisotropy saliency in the spatial domain.

- (2)

- To enhance the extraction of spatial saliency of the target, this paper proposes a novel fast spatial filtering algorithm via a guided filter. This approach is combined with edge suppression using local prior weights, which serves to further reduce background residuals and highlight the target.

- (3)

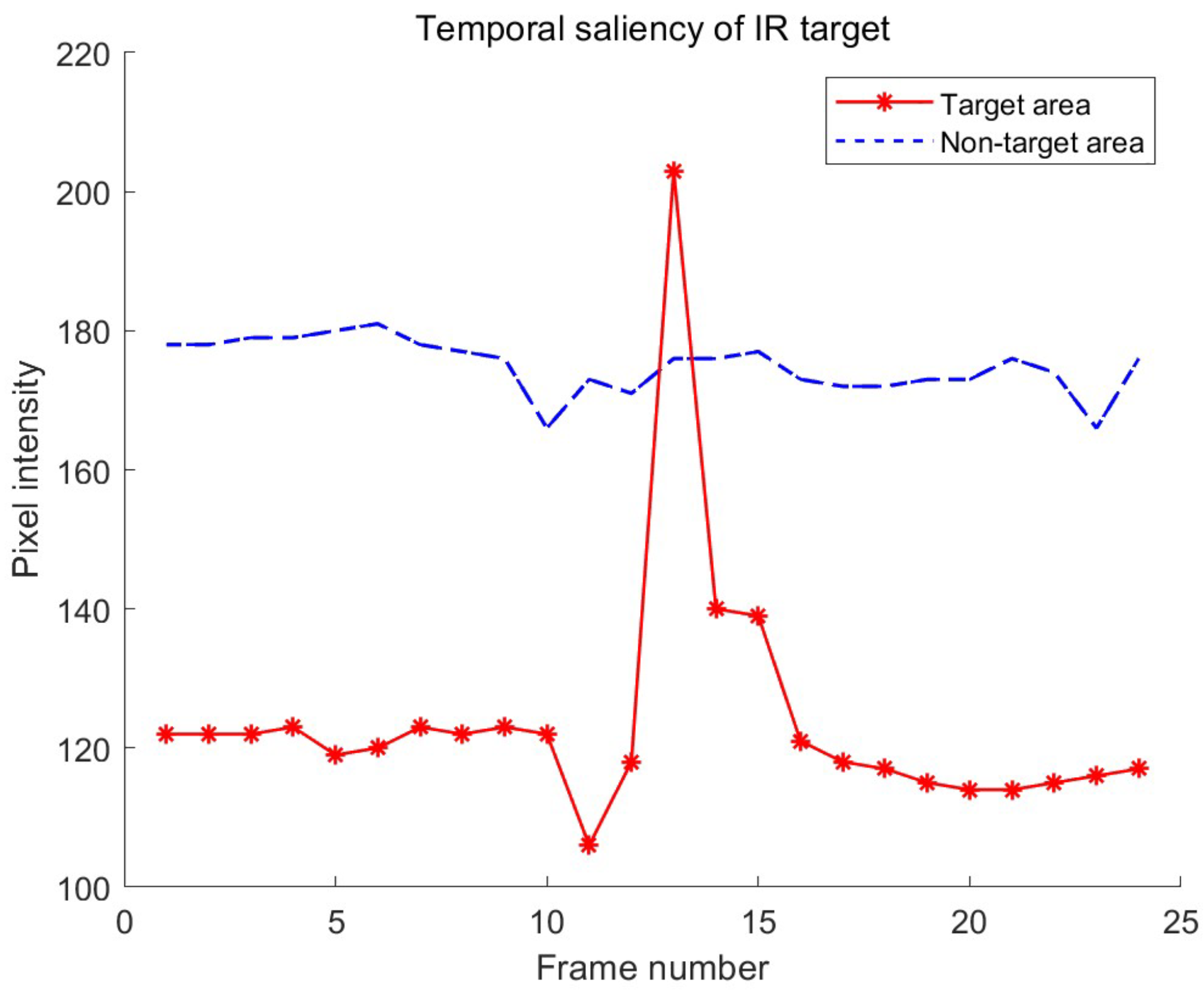

- Achieving real-time performance in IR target detection is crucial. To address this, the vectorization of IR image sequences is introduced into the filtering process. This method significantly improves the speed of multi-frame detection and the extraction of the temporal saliency of the IR target, resulting in superior detection efficiency while maintaining high levels of detection accuracy.

- (4)

- Both qualitative and quantitative experimental results on five real sequences demonstrate that our model performs advanced, fast, and robust detection of IR small moving targets.

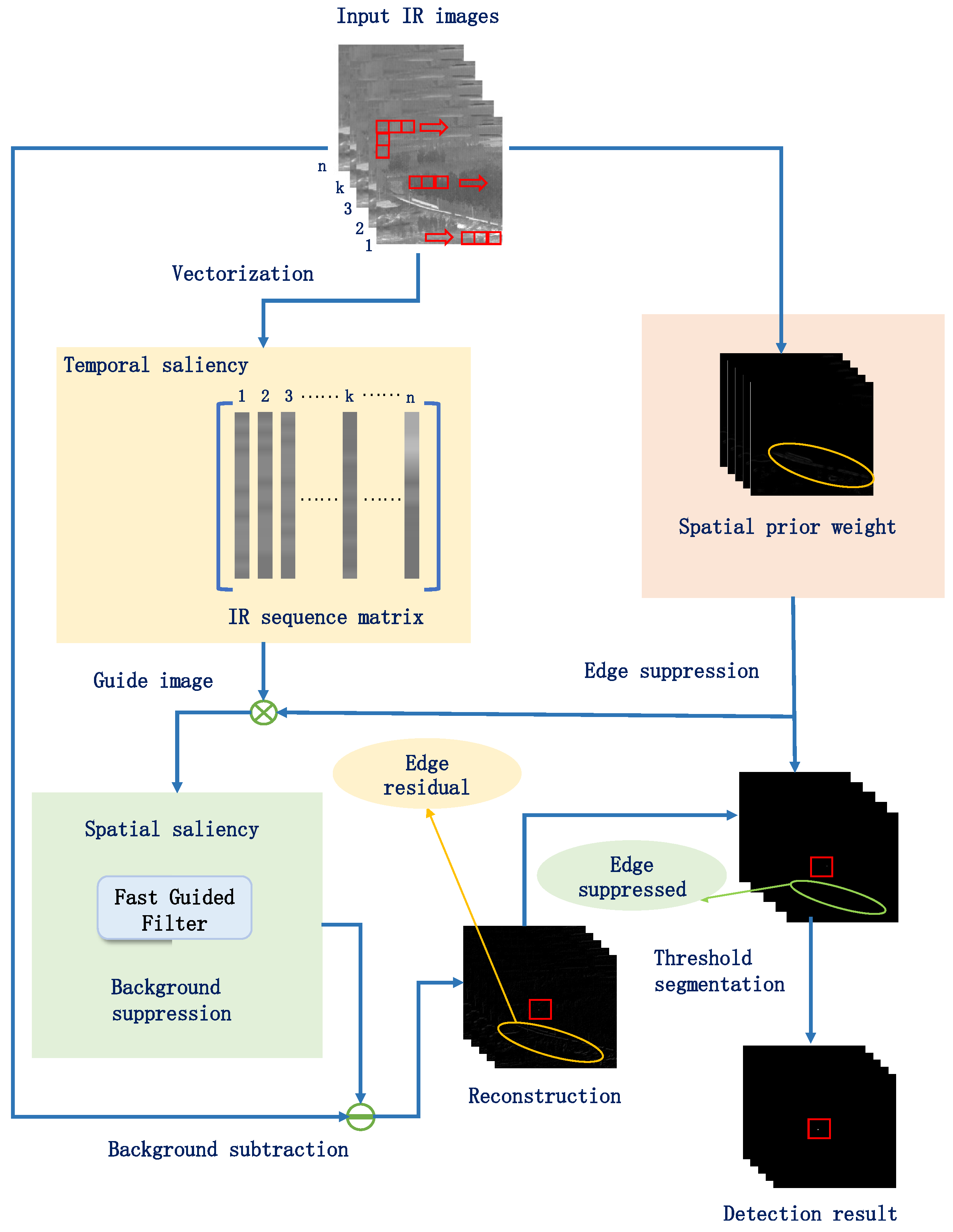

2. Proposed Model

2.1. Spatial–Temporal Saliency

2.2. Vectorization of IR Image Sequence

2.3. Filtering Process Based on Vectorized Guided Filter

2.4. Edge Suppression Based on Prior Weights

2.5. Adaptive Threshold Segmentation

2.6. The Flowchart of the Proposed Model

- The input consists of an IR image sequence;

- The spatial prior weight map is constructed by Formulas (9)–(12);

- Each pixel of the current image is mapped into a column vector in left-to-right, top-to-bottom order to construct the input matrix for the filtering process, as shown in Figure 3;

- The fast guided filter is utilized for the extraction of spatial saliency and background suppression in the filtering process;

- The filtered image is subtracted from the original image to perform background suppression;

- The reconstruction process places each pixel of the IR sequence matrix back at its original position in the IR images;

- Spatial prior weights are integrated into the reconstructed infrared image to suppress edge residuals in the background;

- Finally, the adaptive threshold segmentation, as shown in Formula (13), is performed on the recovered target detection result map to obtain the final target image.
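The steps above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the filter is the standard self-guided form of the guided filter of He et al., the prior weights of Formulas (9)–(12) are not reproduced in this text and are therefore passed in as a hypothetical `prior_weight` map (all ones by default), and the mean-plus-`k`-standard-deviations threshold stands in for Formula (13). The names `box_mean`, `self_guided_filter`, `detect`, and the parameter values `r`, `eps`, and `k` are illustrative choices.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def box_mean(a, r):
    """Mean over a (2r+1) x (2r+1) window, replicating the matrix edges."""
    padded = np.pad(a, r, mode="edge")
    return sliding_window_view(padded, (2 * r + 1, 2 * r + 1)).mean(axis=(-2, -1))


def self_guided_filter(m, r, eps):
    """Guided filter with the input used as its own guide (He et al.)."""
    mean = box_mean(m, r)
    var = box_mean(m * m, r) - mean * mean
    a = var / (var + eps)      # close to 1 on strong structure, close to 0 on flat areas
    b = (1.0 - a) * mean
    return box_mean(a, r) * m + box_mean(b, r)


def detect(frames, r=2, eps=1e-2, k=5.0, prior_weight=None):
    """frames: float array of shape (T, H, W). Returns (saliency, mask)."""
    t, h, w = frames.shape
    if prior_weight is None:
        # Placeholder only: the paper builds this map with Formulas (9)-(12).
        prior_weight = np.ones((h, w))
    # Vectorization: each frame becomes one column, pixels in row-major
    # (left-to-right, top-to-bottom) order, giving an (H*W, T) matrix.
    matrix = frames.reshape(t, h * w).T
    # Filtering: the window spans neighbouring pixels and neighbouring frames.
    background = self_guided_filter(matrix, r, eps)
    # Background suppression: original minus filtered, keeping positive residue.
    residual = np.clip(matrix - background, 0.0, None)
    # Reconstruction: put every pixel back at its position in its frame.
    saliency = residual.T.reshape(t, h, w) * prior_weight
    # Assumed adaptive threshold (per frame): mean + k * standard deviation.
    thr = saliency.mean(axis=(1, 2), keepdims=True) \
        + k * saliency.std(axis=(1, 2), keepdims=True)
    return saliency, saliency > thr


# Synthetic check: flat background with a one-pixel target moving down one row per frame.
frames = np.full((5, 32, 32), 0.2)
for i in range(5):
    frames[i, 10 + i, 12] = 1.0
saliency, mask = detect(frames)
print(np.argwhere(mask[2]))  # detected pixel coordinates in the middle frame
```

Stacking the frames as columns lets a single two-dimensional filtering pass act on spatial neighbours and on adjacent frames at once, which is the motivation the text gives for vectorization. Note that the guided filter is edge-preserving, so the choice of `eps` controls how much of a small target survives in the filtered background and hence how strong the residual is.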

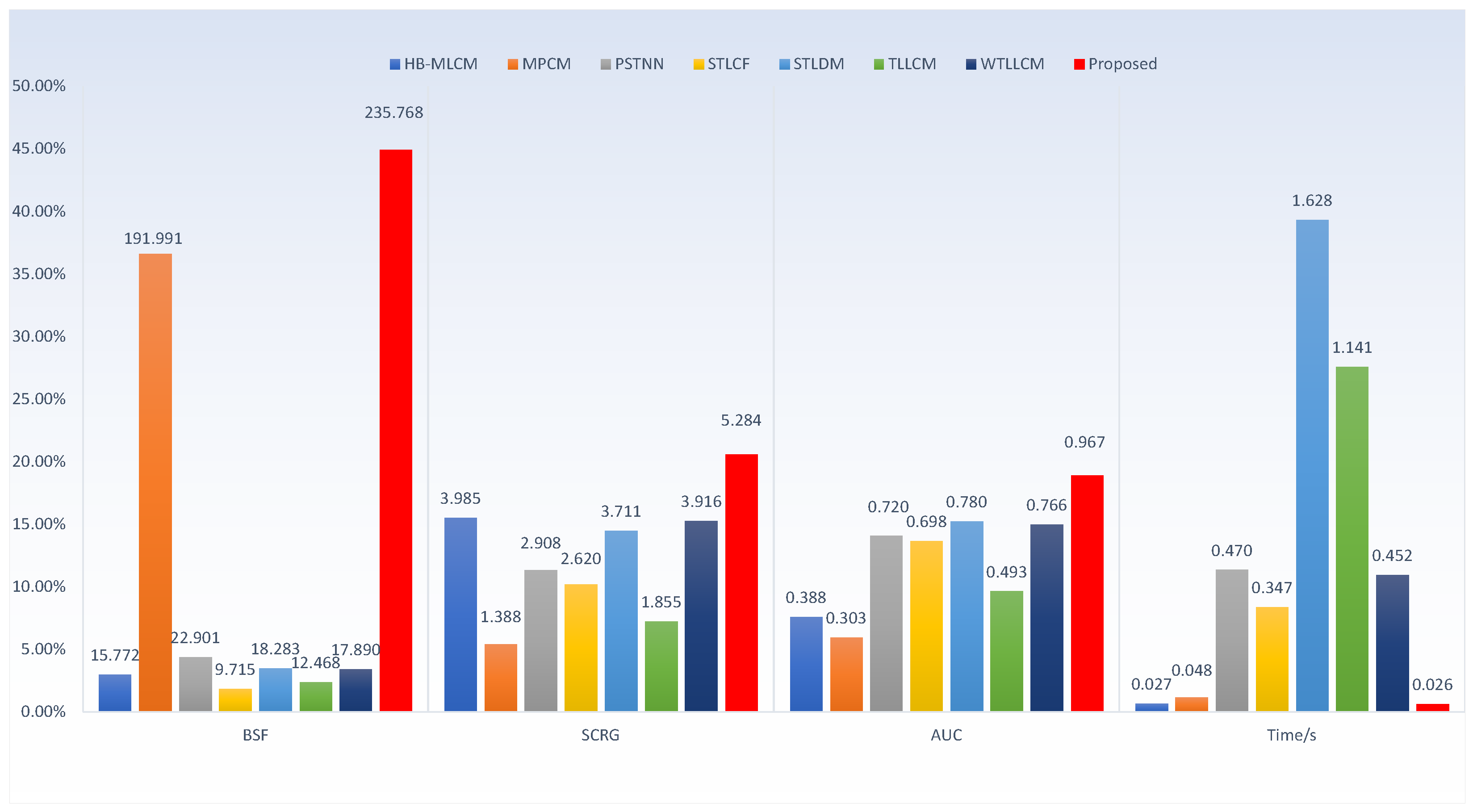

3. Experimental Results and Analysis

3.1. Evaluation Metrics

- First, the signal-to-clutter ratio (SCR) is defined as follows: $$\mathrm{SCR} = \frac{|\mu_t - \mu_b|}{\sigma_b}$$ where $\mu_t$ and $\mu_b$ denote the grayscale mean of the target region and the surrounding neighborhood region, respectively, and $\sigma_b$ represents the grayscale standard deviation of the neighborhood region. The signal-to-clutter ratio gain (SCRG) is frequently used to assess the effectiveness of clutter suppression and target enhancement. From the SCR, the SCRG is calculated as follows: $$\mathrm{SCRG} = \frac{\mathrm{SCR}_{out}}{\mathrm{SCR}_{in}}$$ where $\mathrm{SCR}_{out}$ and $\mathrm{SCR}_{in}$ represent the SCR value of the detection result and the input IR image, respectively.

- To measure the effect of the method on background suppression, the background suppression factor (BSF) is calculated as follows: $$\mathrm{BSF} = \frac{\sigma_{in}}{\sigma_{out}}$$ where $\sigma_{in}$ and $\sigma_{out}$ represent the standard deviation of the background region in the original input image and the detection result, respectively.

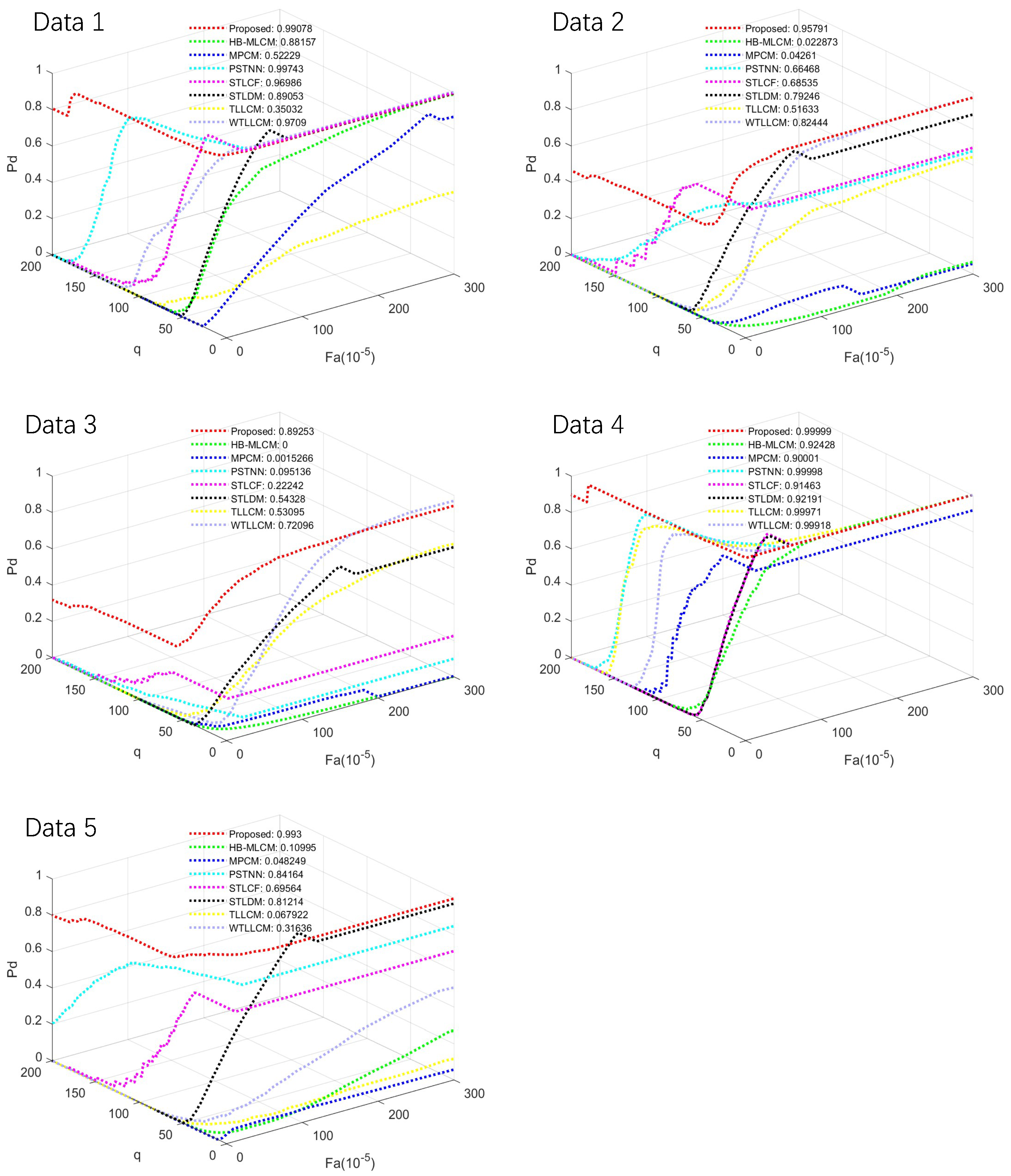

- The detection rate and false alarm rate are used to evaluate the detection performance of the method comprehensively across the entire image sequence. The detection rate is calculated as follows: $$P_d = \frac{N_d}{N_t}$$ where $N_d$ is the number of successfully detected targets and $N_t$ is the number of targets to be detected in the IR sequence. The false alarm rate is calculated as follows: $$F_a = \frac{N_f}{N_p}$$ where $N_f$ is the number of non-target pixels detected as targets and $N_p$ is the total number of pixels in the IR sequence. Further, the receiver operating characteristic (ROC) curve can be plotted by varying the threshold, with the false alarm rate on the horizontal axis and the detection rate on the vertical axis. The AUC value, i.e., the area enclosed by the ROC curve and the axes, is a direct indicator of the target detection performance.
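The sequence-level metrics can be computed along the following lines. This is a hedged sketch: the function names `roc_points` and `roc_auc` are hypothetical, one target per frame is assumed, a target counts as detected when any pixel inside its ground-truth mask exceeds the threshold, and the ROC follows the usual convention of false alarm rate on the horizontal axis and detection rate on the vertical axis.

```python
import numpy as np


def roc_points(saliency, target_masks, thresholds):
    """Return (false_alarm_rate, detection_rate) pairs, one per threshold.

    saliency, target_masks: arrays of shape (T, H, W); one target per frame assumed.
    """
    saliency = np.asarray(saliency, dtype=float)
    target_masks = np.asarray(target_masks, dtype=bool)
    points = []
    for thr in thresholds:
        detected = saliency > thr
        # Detection rate: frames whose target is hit / number of targets.
        hits = np.sum((detected & target_masks).any(axis=(1, 2)))
        # False alarm rate: falsely detected pixels / all pixels in the sequence.
        false_px = np.sum(detected & ~target_masks)
        points.append((false_px / saliency.size, hits / saliency.shape[0]))
    return points


def roc_auc(false_alarm, detection):
    """Trapezoidal area under the ROC curve, points sorted by false alarm rate."""
    fa = np.asarray(false_alarm, dtype=float)
    pd = np.asarray(detection, dtype=float)
    order = np.argsort(fa, kind="stable")
    fa, pd = fa[order], pd[order]
    return float(np.sum(np.diff(fa) * (pd[1:] + pd[:-1]) / 2.0))


# Tiny example: two 4 x 4 frames, one target each, one false response in frame 0.
sal = np.zeros((2, 4, 4))
gt = np.zeros((2, 4, 4), dtype=bool)
gt[0, 1, 1] = gt[1, 2, 2] = True
sal[0, 1, 1] = 0.9   # strong target response
sal[1, 2, 2] = 0.4   # weak target response
sal[0, 0, 0] = 0.6   # background residual
pts = roc_points(sal, gt, [0.5])
auc = roc_auc([0.0, 0.1, 1.0], [0.0, 0.9, 1.0])
```

At the single threshold 0.5 the weak target is missed and one background pixel is flagged, so the example yields a detection rate of 0.5 and a false alarm rate of 1/32. Sweeping many thresholds and passing the resulting pairs to `roc_auc` gives the AUC.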

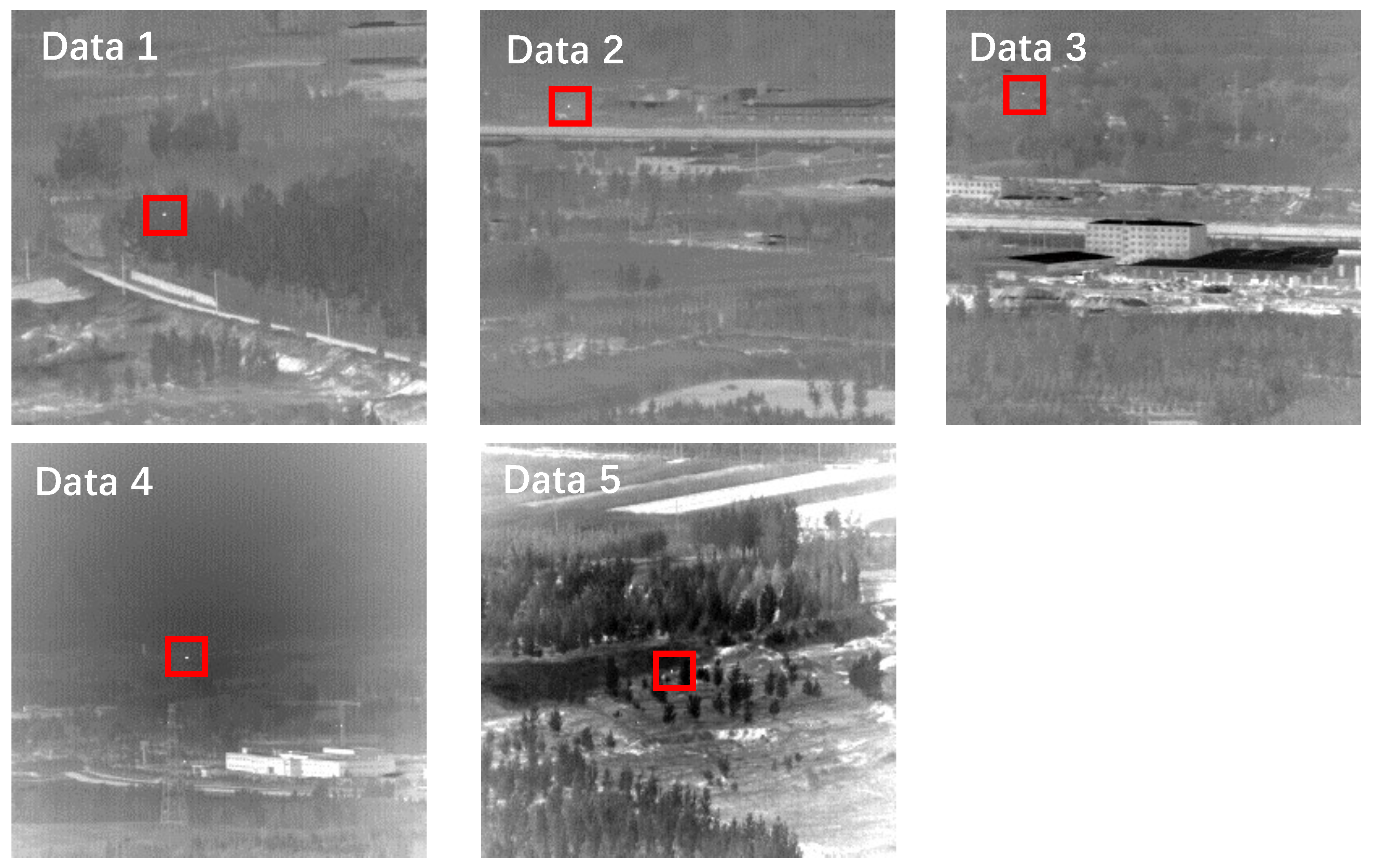

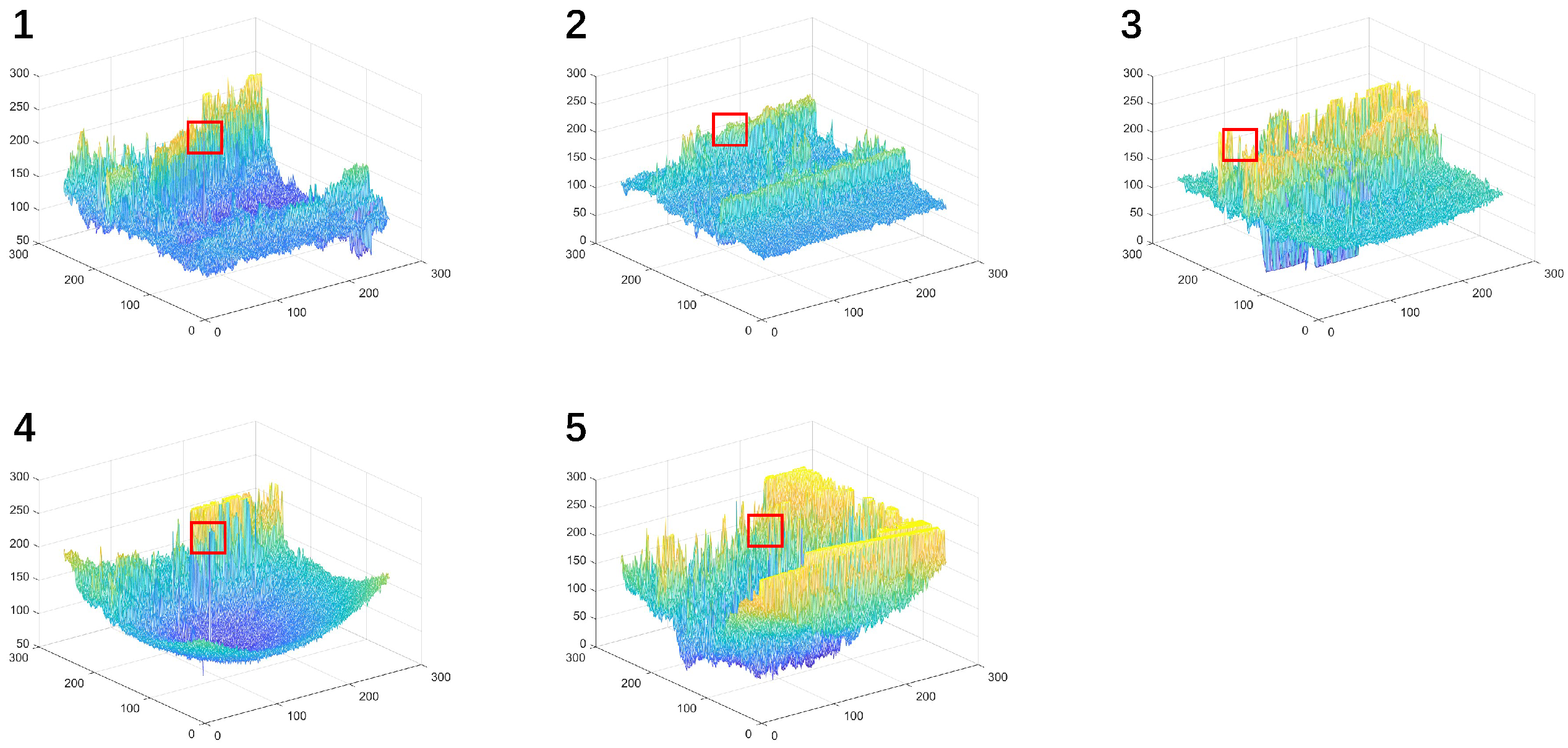
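The single-frame metrics of this section (SCR, SCRG, and BSF) can be sketched as below. The function names and the boolean-mask interface are assumptions made for illustration; how the target, neighborhood, and background regions are delimited is not specified in this text and is left to the caller.

```python
import numpy as np


def scr(image, target_mask, neighborhood_mask):
    """Signal-to-clutter ratio: |mean(target) - mean(neighborhood)| / std(neighborhood)."""
    mu_t = image[target_mask].mean()
    mu_b = image[neighborhood_mask].mean()
    sigma_b = image[neighborhood_mask].std()
    return abs(mu_t - mu_b) / sigma_b


def scrg(scr_out, scr_in):
    """SCR gain: SCR of the detection result over SCR of the input image."""
    return scr_out / scr_in


def bsf(input_image, output_image, background_mask):
    """Background suppression factor: std of input background / std of output background."""
    return input_image[background_mask].std() / output_image[background_mask].std()


# Toy example: checkerboard clutter (values 0 and 2) with a bright centre pixel.
ii, jj = np.indices((5, 5))
img = 2.0 * ((ii + jj) % 2)
img[2, 2] = 5.0
target = np.zeros((5, 5), dtype=bool)
target[2, 2] = True
neighborhood = ~target

# A hypothetical "detection result" that halves the clutter and keeps the target.
out = img * 0.5
out[2, 2] = 5.0

scr_in = scr(img, target, neighborhood)
scr_out = scr(out, target, neighborhood)
gain = scrg(scr_out, scr_in)
suppression = bsf(img, out, neighborhood)
```

In this toy case the neighborhood has mean 1 and standard deviation 1 before processing and mean 0.5 and standard deviation 0.5 afterwards, so the SCR rises from 4 to 9, the SCRG is 2.25, and the BSF is 2. A perfectly flat output background makes the BSF denominator zero, so the result is then not a finite number.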

3.2. Description of the Dataset

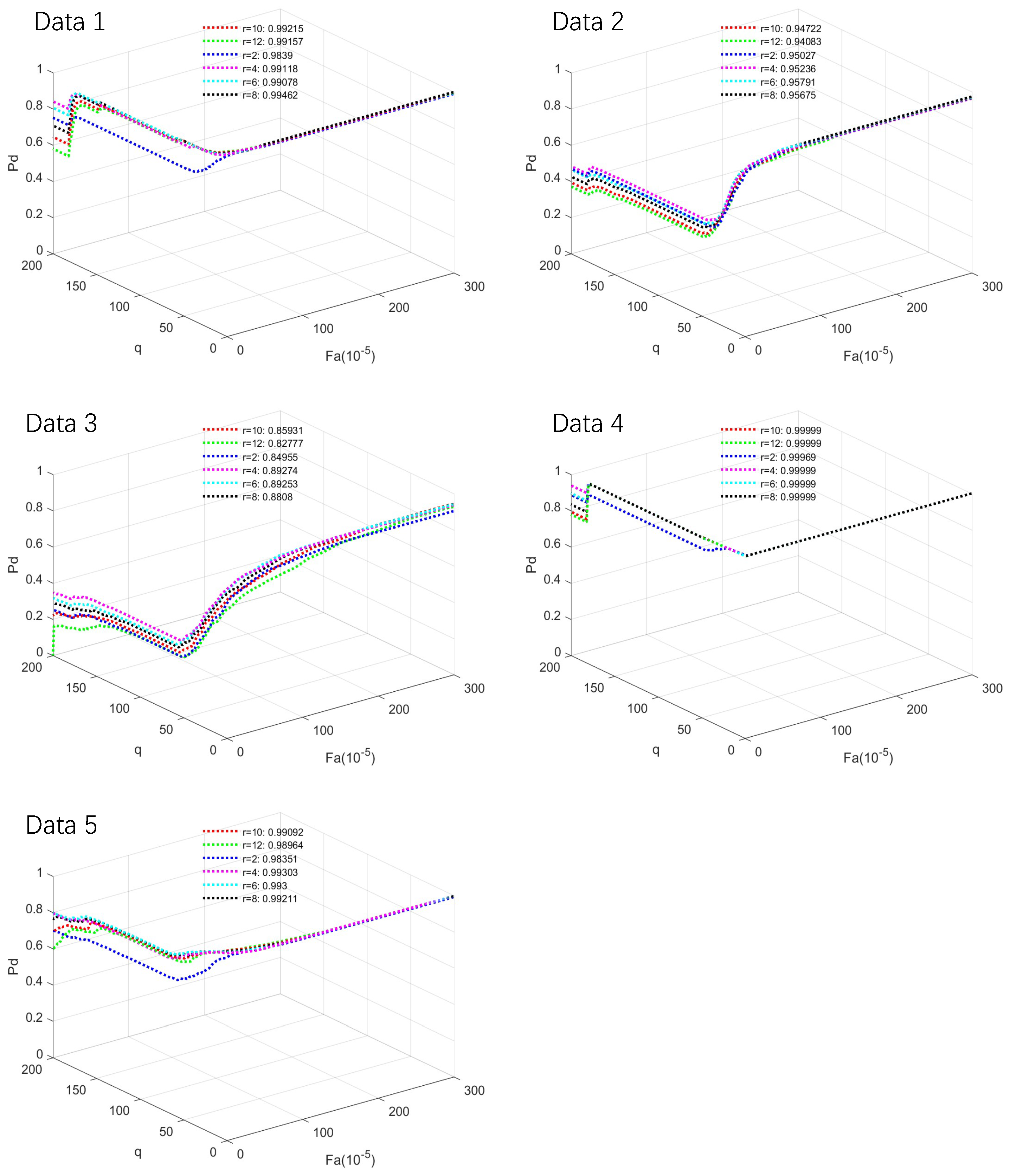

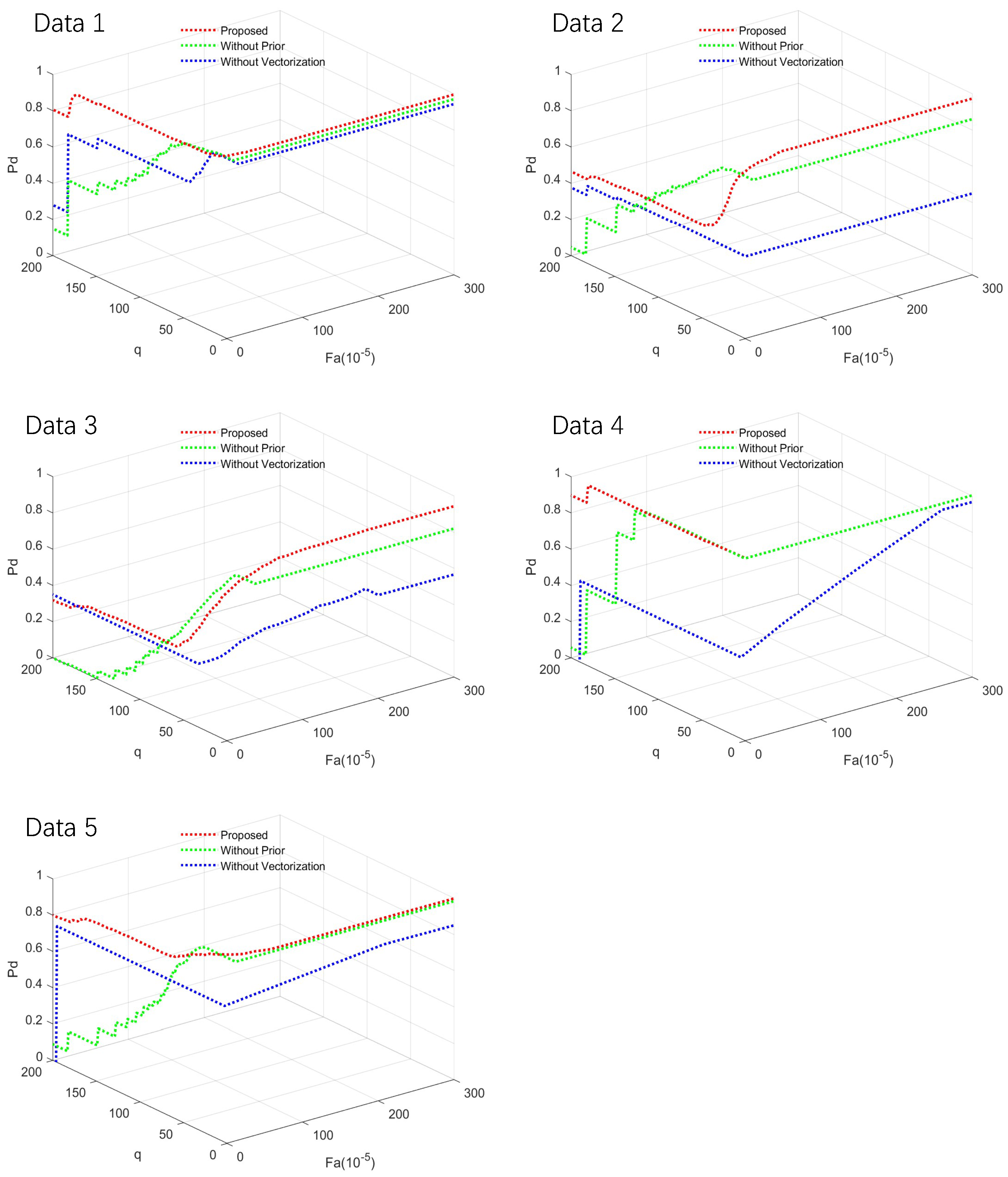

3.3. Parameter Analysis

3.4. Ablation Experiments

3.5. Qualitative Experiments

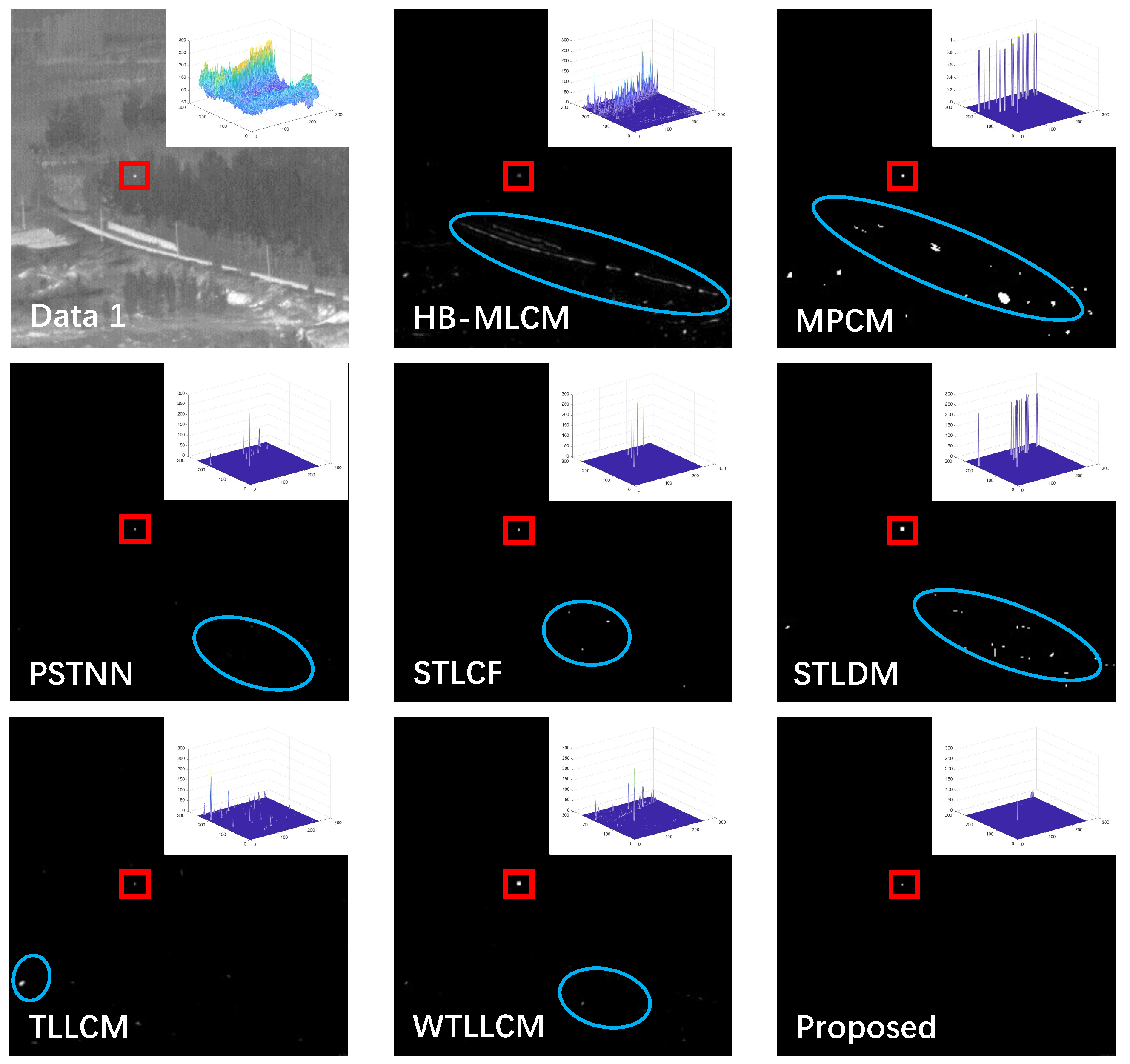

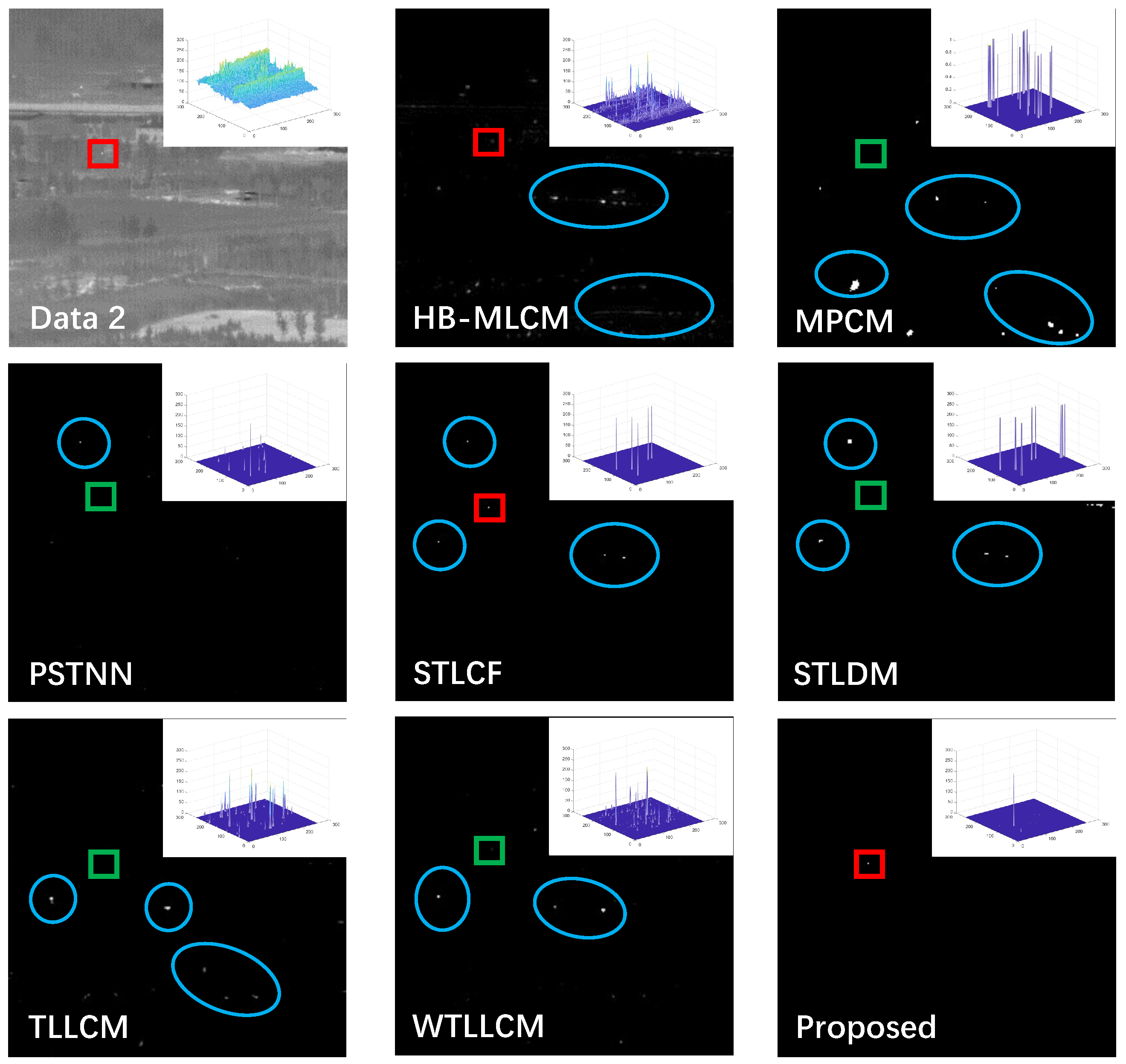

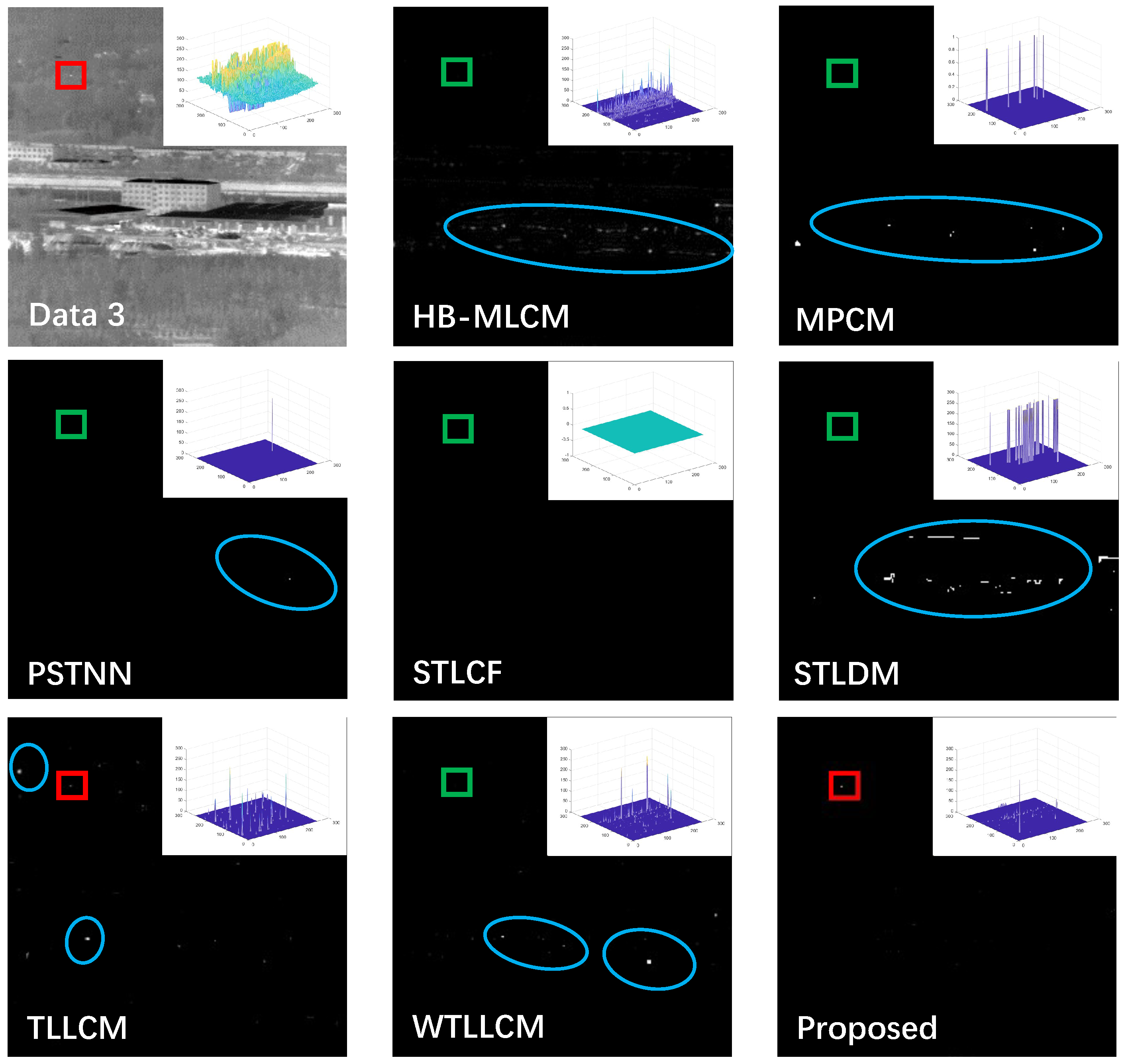

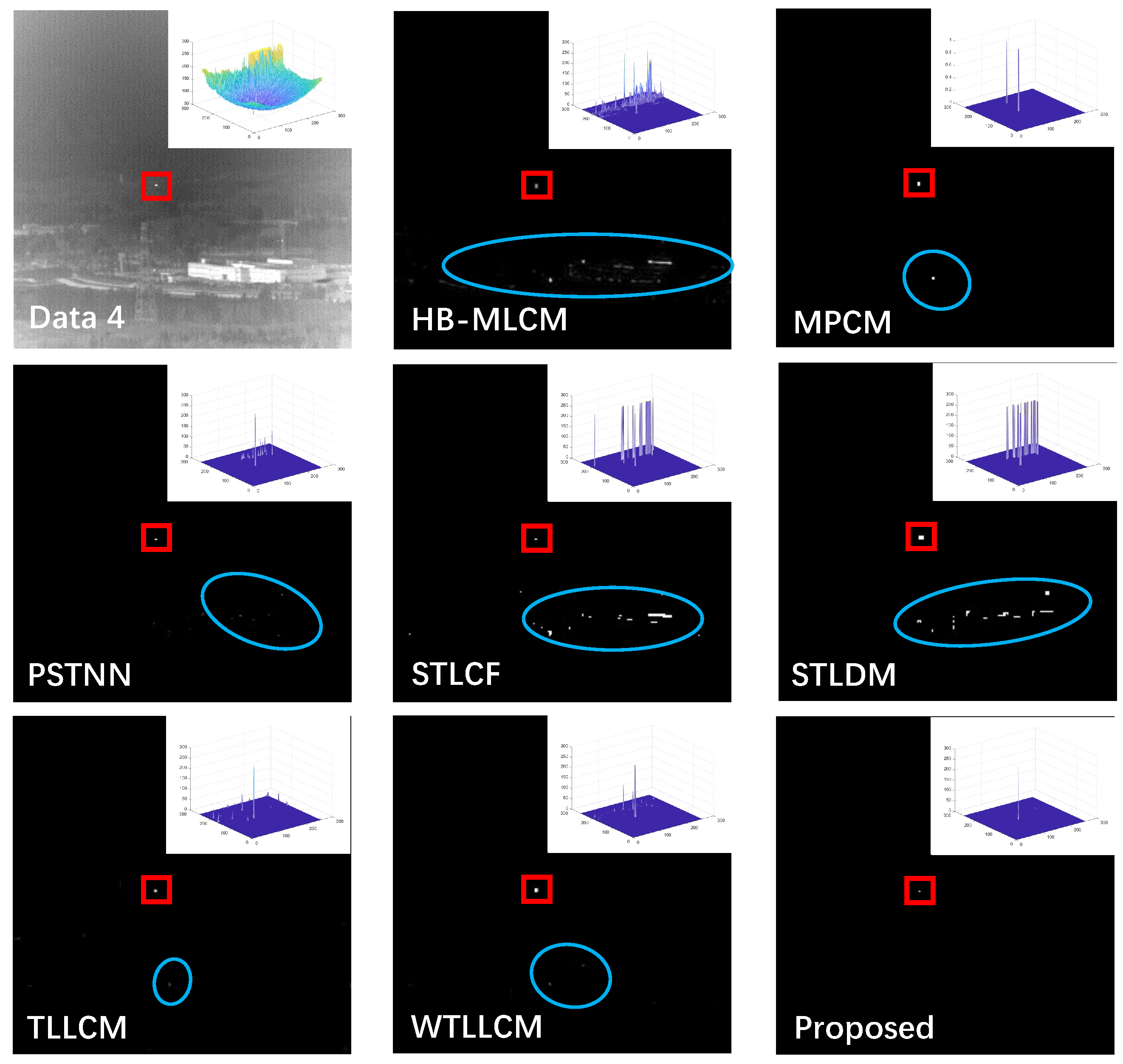

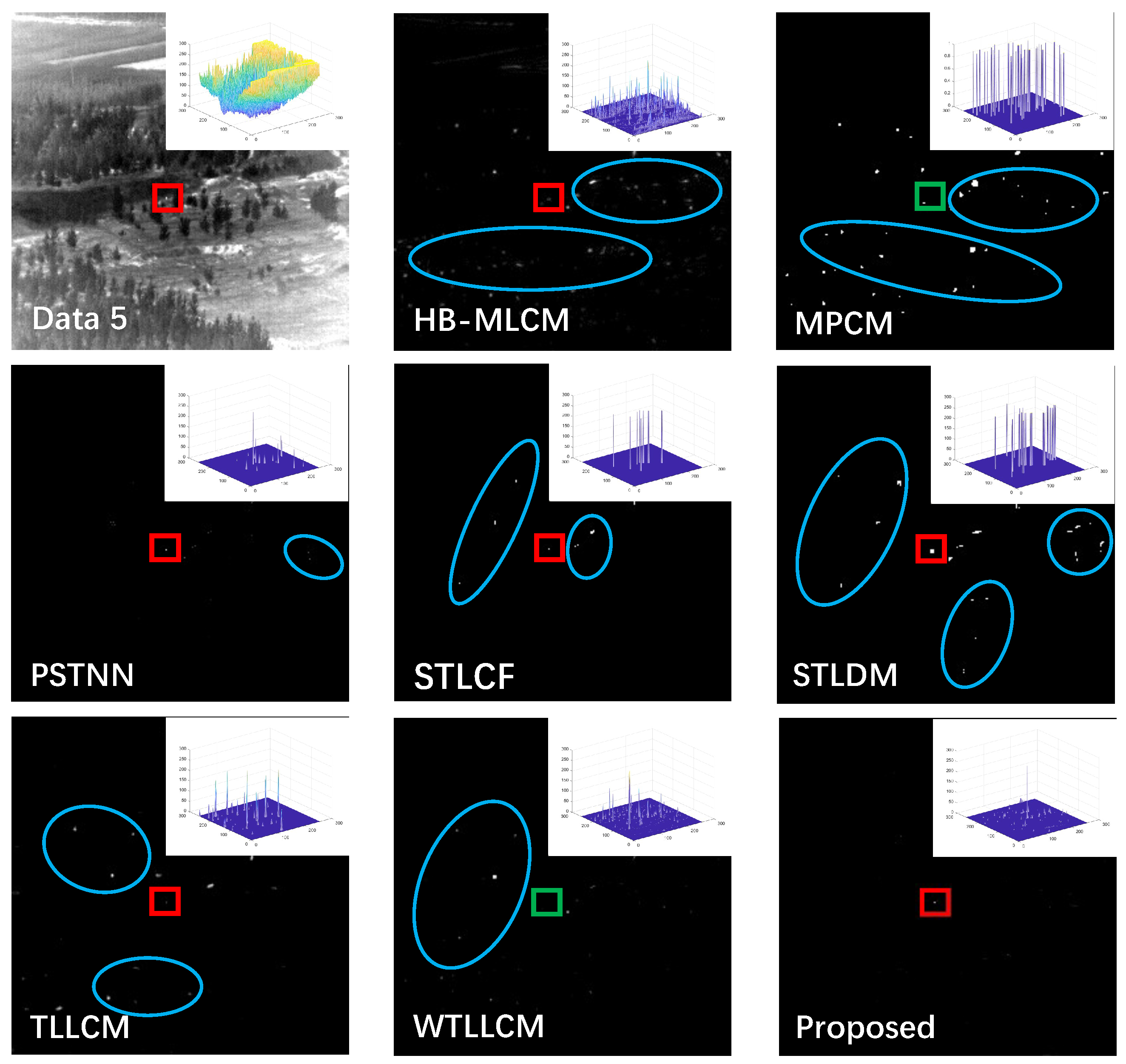

Qualitative Comparison with State-of-the-Art Methods

3.6. Quantitative Analysis

3.7. Detection Efficiency

3.8. Intuitive Effect

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Sequence | Frames | Image Size | Average SCR | Target Size |
|---|---|---|---|---|
| Data 1 | 399 | 256 × 256 | 6.07 | 3 × 3 to 4 × 4 |
| Data 2 | 1500 | 256 × 256 | 5.20 | 1 × 1 to 2 × 2 |
| Data 3 | 750 | 256 × 256 | 3.42 | 1 × 1 to 3 × 3 |
| Data 4 | 1599 | 256 × 256 | 3.84 | 2 × 2 to 4 × 4 |
| Data 5 | 499 | 256 × 256 | 2.20 | 3 × 3 to 5 × 5 |

| Method | Data 1 | Data 2 | Data 3 | Data 4 | Data 5 |
|---|---|---|---|---|---|
| Proposed | 0.9908 | 0.9579 | 0.8925 | 1.0000 | 0.9930 |
| Without prior | 0.9641 | 0.8502 | 0.7919 | 0.9979 | 0.9698 |
| Without vectorization | 0.9351 | 0.4458 | 0.5037 | 0.7500 | 0.8129 |

| Methods | Parameters |
|---|---|
| HB-MLCM | Window size: , , |
| MPCM | Window size: , , , mean filter size: |
| PSTNN | Patch size: , step: 40, , |
| STLCF | Window size: , frames |
| STLDM | Frames |
| TLLCM | Gaussian filter kernel |
| WTLLCM | Window size: , |
| Proposed | Window radius: , regularization parameter: |

| Methods | Data 1 BSF | Data 1 SCRG | Data 1 AUC | Data 2 BSF | Data 2 SCRG | Data 2 AUC | Data 3 BSF | Data 3 SCRG | Data 3 AUC |
|---|---|---|---|---|---|---|---|---|---|
| HB-MLCM | 17.320 | 4.465 | 0.8816 | 16.343 | 3.107 | 0.0229 | 5.642 | 4.741 | 0 |
| MPCM | 402.936 | 2.907 | 0.5223 | 185.529 | 0.359 | 0.0426 | NaN | 0.032 | 0.0015 |
| PSTNN | 18.799 | 4.941 | 0.9974 | 8.690 | 2.156 | 0.6647 | 14.545 | 0.205 | 0.0951 |
| STLCF | 6.314 | 4.189 | 0.9699 | 7.160 | 2.245 | 0.6854 | 11.349 | 0.494 | 0.2224 |
| STLDM | 10.127 | 3.241 | 0.8905 | 8.500 | 2.443 | 0.7925 | 10.884 | 2.606 | 0.5433 |
| TLLCM | 6.144 | 1.407 | 0.3503 | 3.735 | 2.484 | 0.5163 | 8.173 | 1.647 | 0.5310 |
| WTLLCM | 12.210 | 5.564 | 0.9709 | 5.157 | 5.014 | 0.8244 | 10.245 | 2.845 | 0.7210 |
| Proposed | 396.504 | 5.762 | 0.9908 | 199.205 | 3.176 | 0.9579 | 32.117 | 4.965 | 0.8925 |

| Methods | Data 4 BSF | Data 4 SCRG | Data 4 AUC | Data 5 BSF | Data 5 SCRG | Data 5 AUC |
|---|---|---|---|---|---|---|
| HB-MLCM | 8.800 | 5.428 | 0.9243 | 30.757 | 2.184 | 0.1100 |
| MPCM | 242.064 | 2.236 | 0.9000 | 129.428 | 1.407 | 0.0482 |
| PSTNN | 24.889 | 2.030 | 1.0000 | 47.581 | 5.210 | 0.8416 |
| STLCF | 5.185 | 1.967 | 0.9146 | 18.567 | 4.203 | 0.6956 |
| STLDM | 5.653 | 3.717 | 0.9219 | 56.253 | 6.547 | 0.8121 |
| TLLCM | 30.354 | 2.812 | 0.9997 | 13.933 | 0.923 | 0.0679 |
| WTLLCM | 45.199 | 2.944 | 0.9992 | 16.641 | 3.214 | 0.3164 |
| Proposed | 64.105 | 4.757 | 1.0000 | 650.755 | 7.758 | 0.9930 |

| Method | Time, Data 1 | Time, Data 2 | Time, Data 3 | Time, Data 4 | Time, Data 5 |
|---|---|---|---|---|---|
| HB-MLCM | 0.026 | 0.030 | 0.026 | 0.031 | 0.022 |
| MPCM | 0.032 | 0.053 | 0.062 | 0.041 | 0.050 |
| PSTNN | 0.210 | 0.527 | 0.891 | 0.428 | 0.293 |
| STLCF | 0.315 | 0.327 | 0.375 | 0.333 | 0.383 |
| STLDM | 1.571 | 1.650 | 1.634 | 1.582 | 1.702 |
| TLLCM | 1.078 | 1.143 | 1.165 | 1.132 | 1.186 |
| WTLLCM | 0.054 | 0.805 | 1.145 | 0.216 | 0.038 |
| Proposed | 0.030 | 0.025 | 0.024 | 0.028 | 0.021 |
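To compare the running times in the table at a glance, the per-method averages and their ratio to the proposed model can be computed as in the short sketch below. The values are copied from the table; the time unit is not stated in this text, so the averages are in whatever unit the table uses, and the dictionary layout and variable names are illustrative only.

```python
# Running times per method on Data 1 to Data 5, copied from the table above.
times = {
    "HB-MLCM": [0.026, 0.030, 0.026, 0.031, 0.022],
    "MPCM": [0.032, 0.053, 0.062, 0.041, 0.050],
    "PSTNN": [0.210, 0.527, 0.891, 0.428, 0.293],
    "STLCF": [0.315, 0.327, 0.375, 0.333, 0.383],
    "STLDM": [1.571, 1.650, 1.634, 1.582, 1.702],
    "TLLCM": [1.078, 1.143, 1.165, 1.132, 1.186],
    "WTLLCM": [0.054, 0.805, 1.145, 0.216, 0.038],
    "Proposed": [0.030, 0.025, 0.024, 0.028, 0.021],
}

# Average time over the five sequences for each method.
mean_time = {name: sum(vals) / len(vals) for name, vals in times.items()}

# How many times slower each method is than the proposed model, on average.
ratio = {name: mean_time[name] / mean_time["Proposed"] for name in times}

fastest = min(mean_time, key=mean_time.get)
for name in sorted(ratio, key=ratio.get):
    print(f"{name:10s} mean={mean_time[name]:.4f} ratio={ratio[name]:.2f}")
```

Averaged over the five sequences the proposed model has the lowest time, although on Data 1 alone HB-MLCM is slightly faster (0.026 versus 0.030).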


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Aliha, A.; Liu, Y.; Zhou, G.; Hu, Y. High-Speed Spatial–Temporal Saliency Model: A Novel Detection Method for Infrared Small Moving Targets Based on a Vectorized Guided Filter. Remote Sens. 2024, 16, 1685. https://doi.org/10.3390/rs16101685
