A Systematic Literature Review and Bibliometric Analysis of Semantic Segmentation Models in Land Cover Mapping

Abstract

1. Introduction

2. Materials and Methods

2.1. Research Questions (RQs)

- RQ1. What are the emerging patterns in land cover mapping?

- RQ2. What are the domain studies of semantic segmentation models in land cover mapping?

- RQ3. What are the data used in semantic segmentation models for land cover mapping?

- RQ4. What are the architecture and performances of semantic segmentation methodologies used in land cover mapping?

2.2. Search Strategy

2.3. Study Selection Criteria

2.4. Eligibility and Data Analysis

2.5. Data Synthesis

3. Results and Discussion

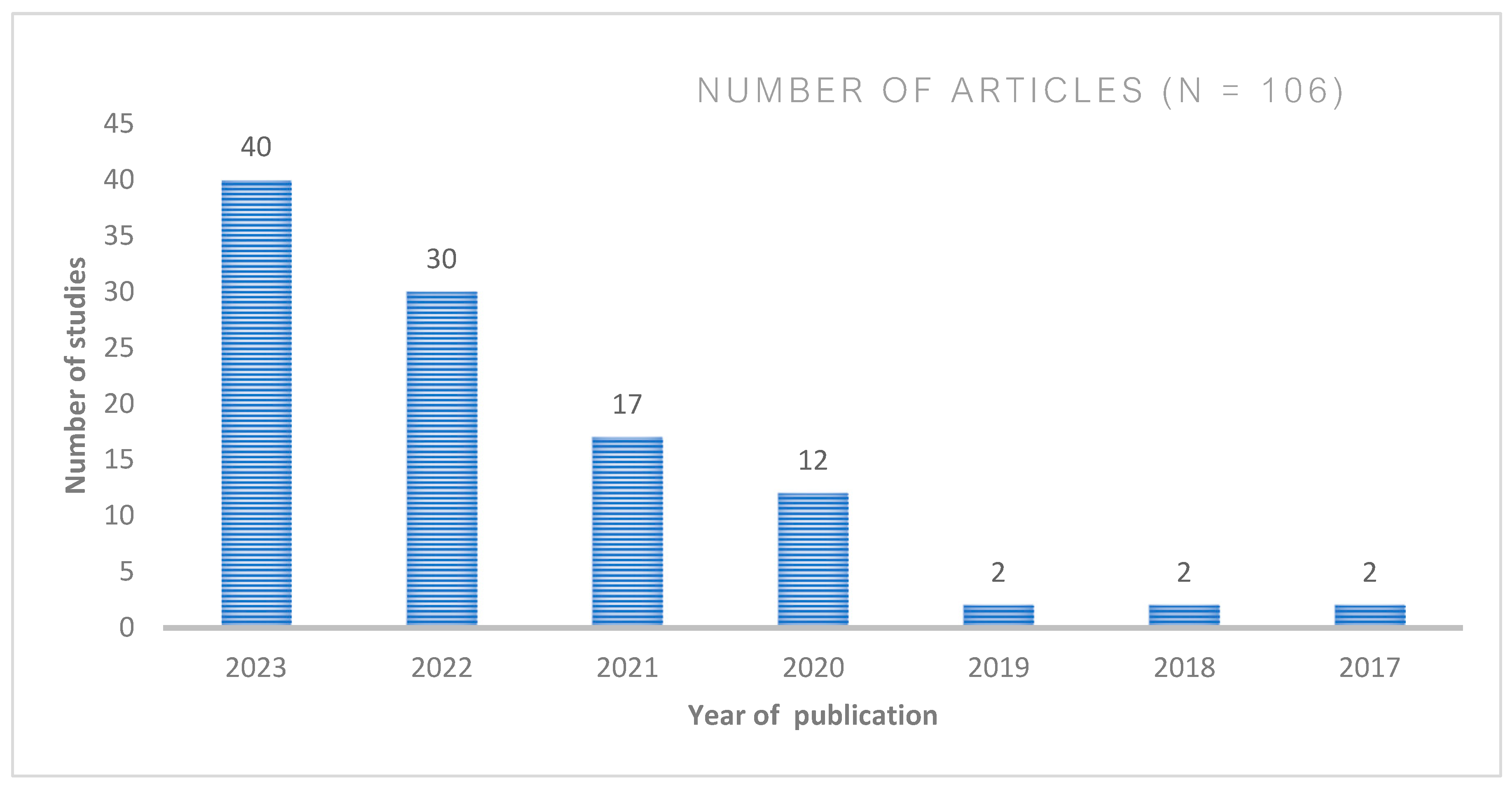

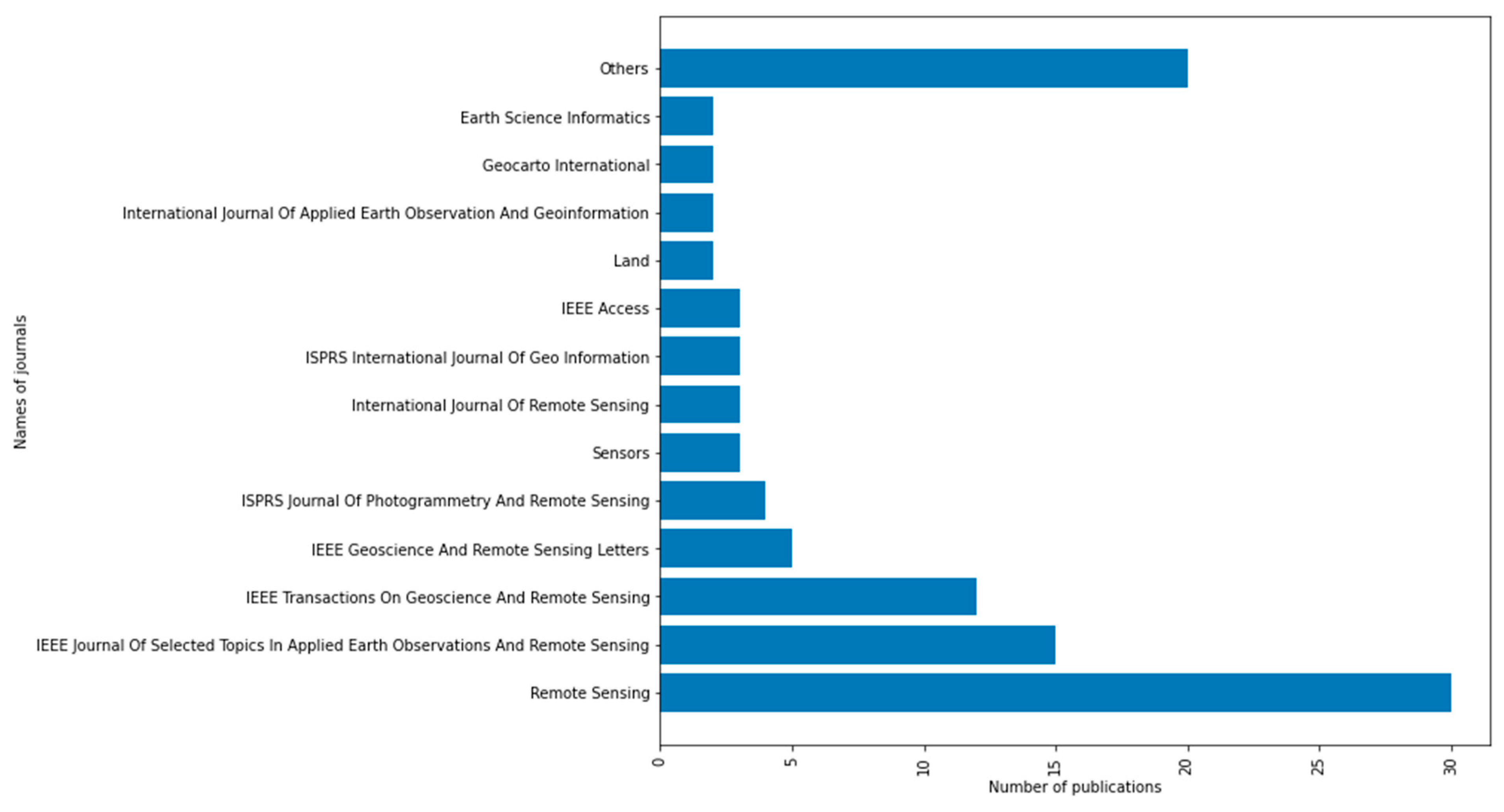

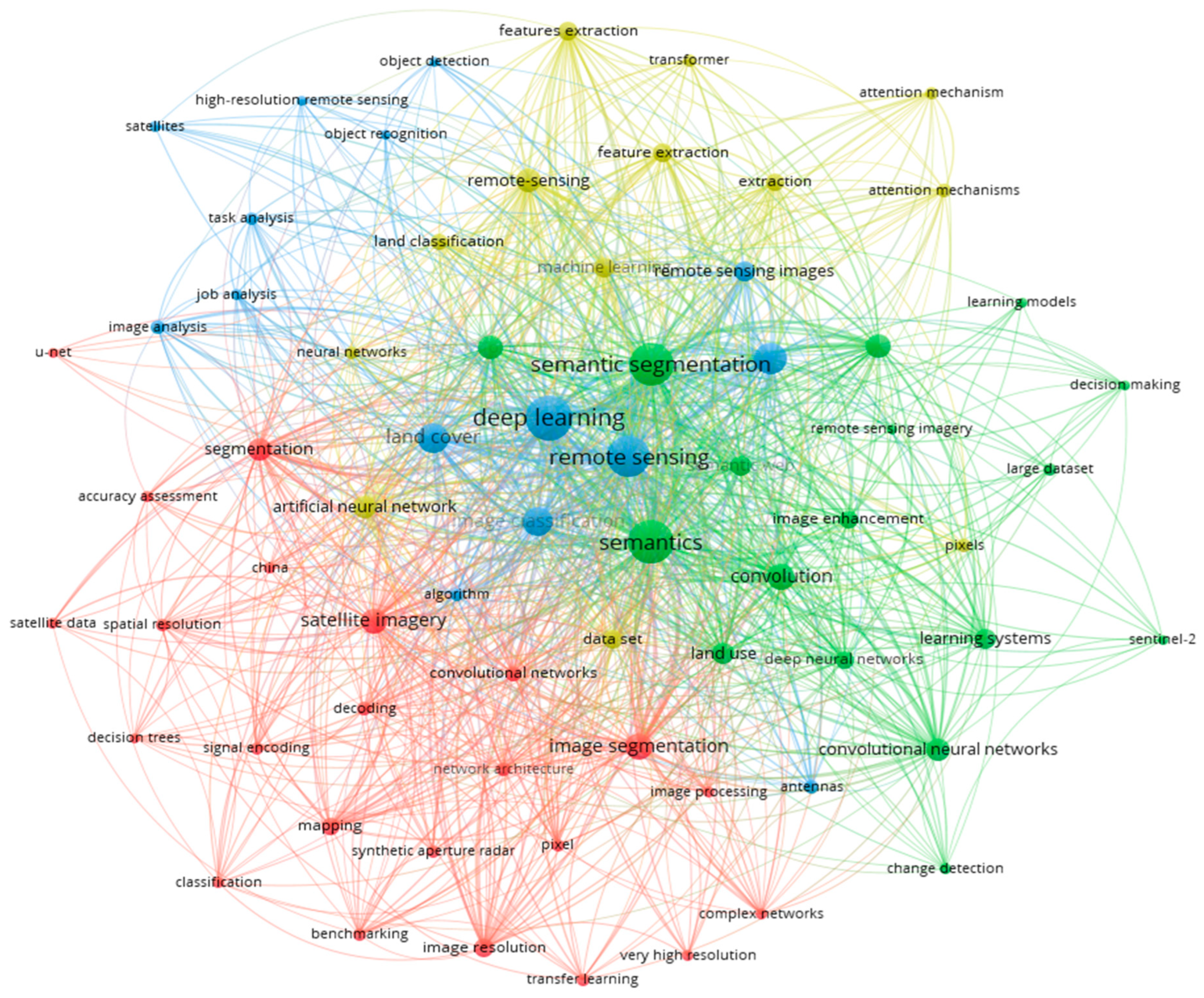

3.1. RQ1. What Are the Emerging Patterns in Land Cover Mapping?

- Annual distribution of research studies

- Leading Journals

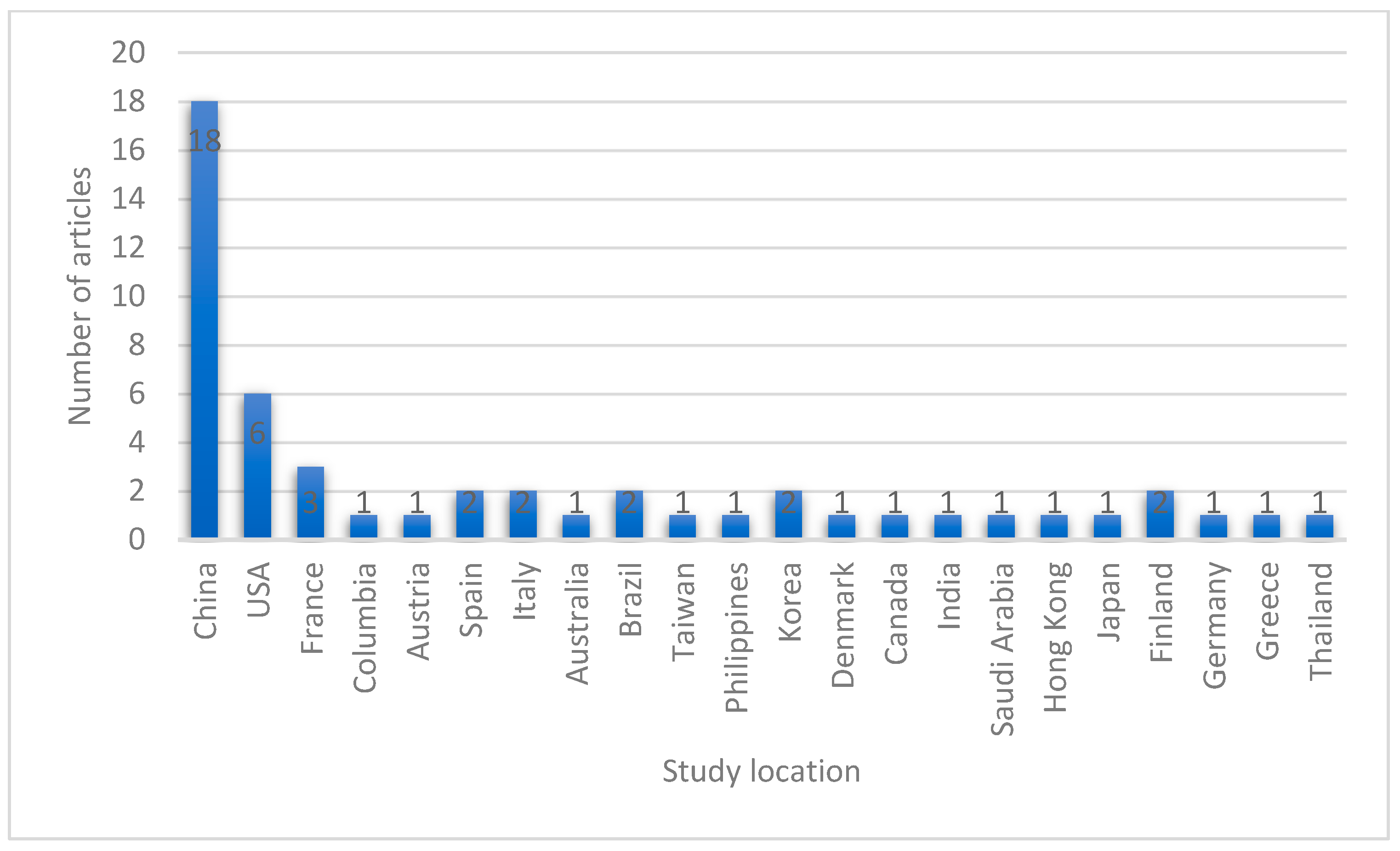

- Geographic distribution of studies

- Leading Themes and Timelines

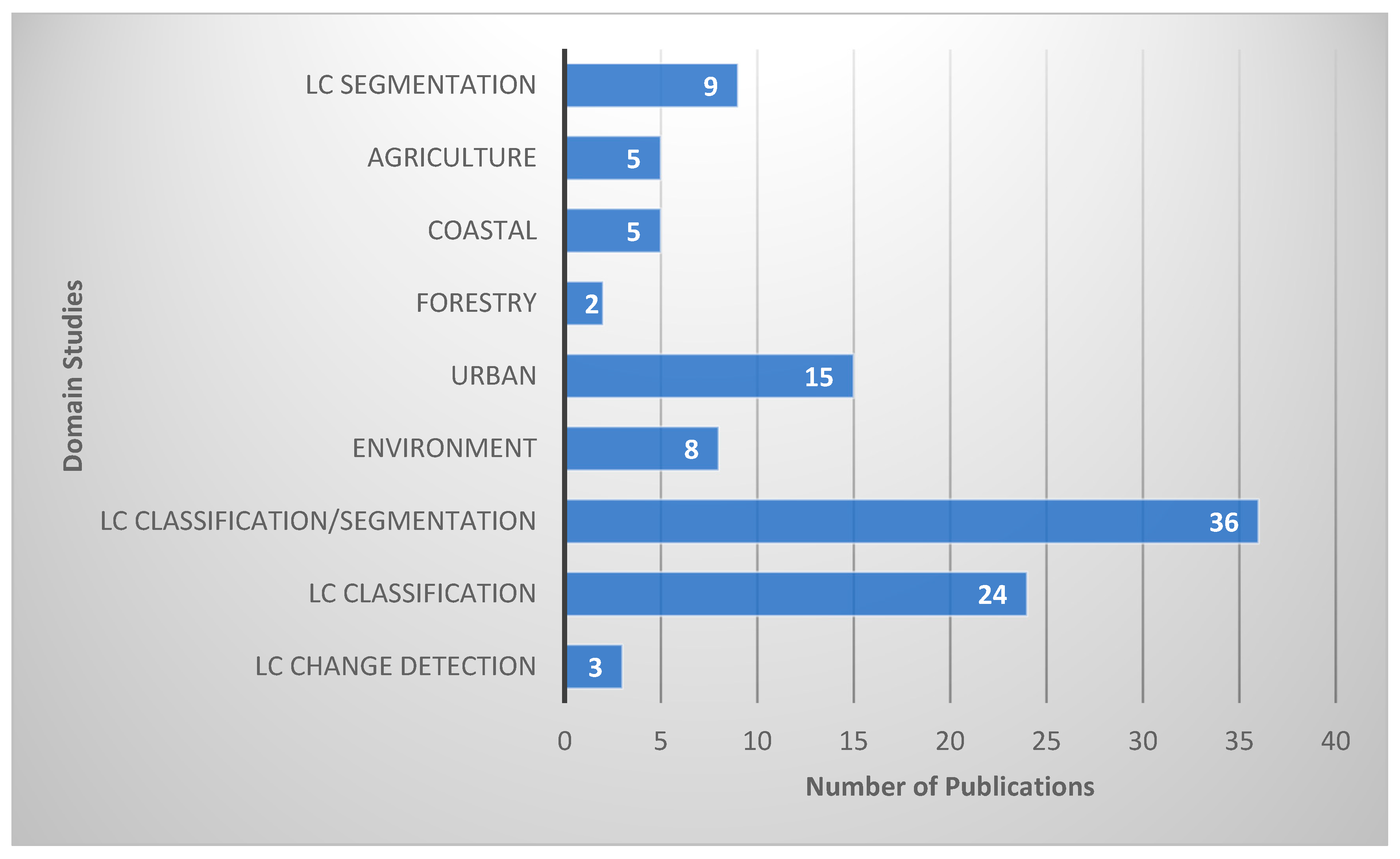

3.2. RQ2. What Are Domain Studies of Semantic Segmentation Models in Land Cover Mapping?

- Land Cover Studies

- Urban

- Precision Agriculture

- Environment

- Forest

- Coastal Areas

3.3. RQ3. What Are the Data Used in Semantic Segmentation Models for Land Cover Mapping?

- Study Locations

- Data Sources

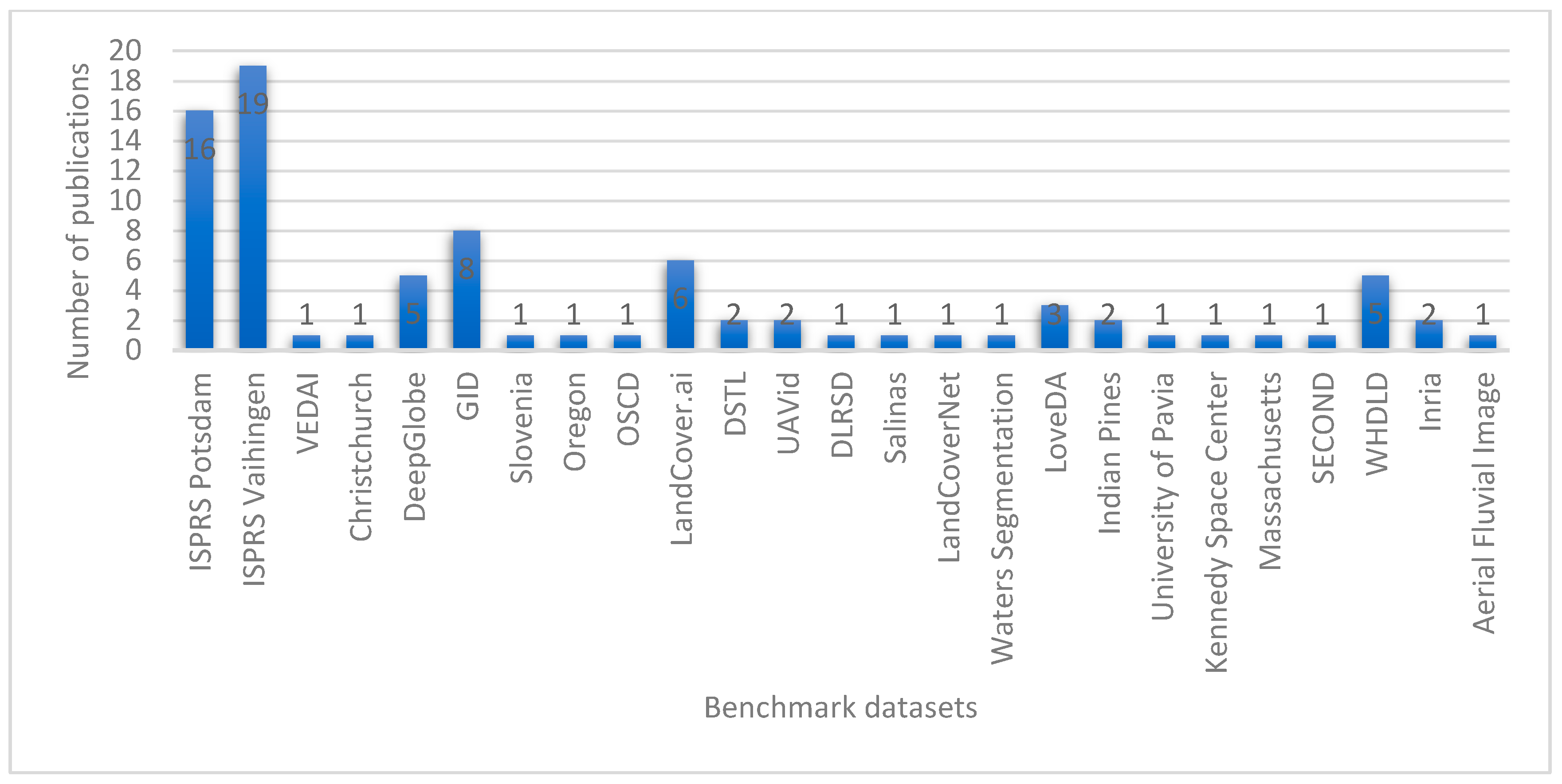

- Benchmark datasets

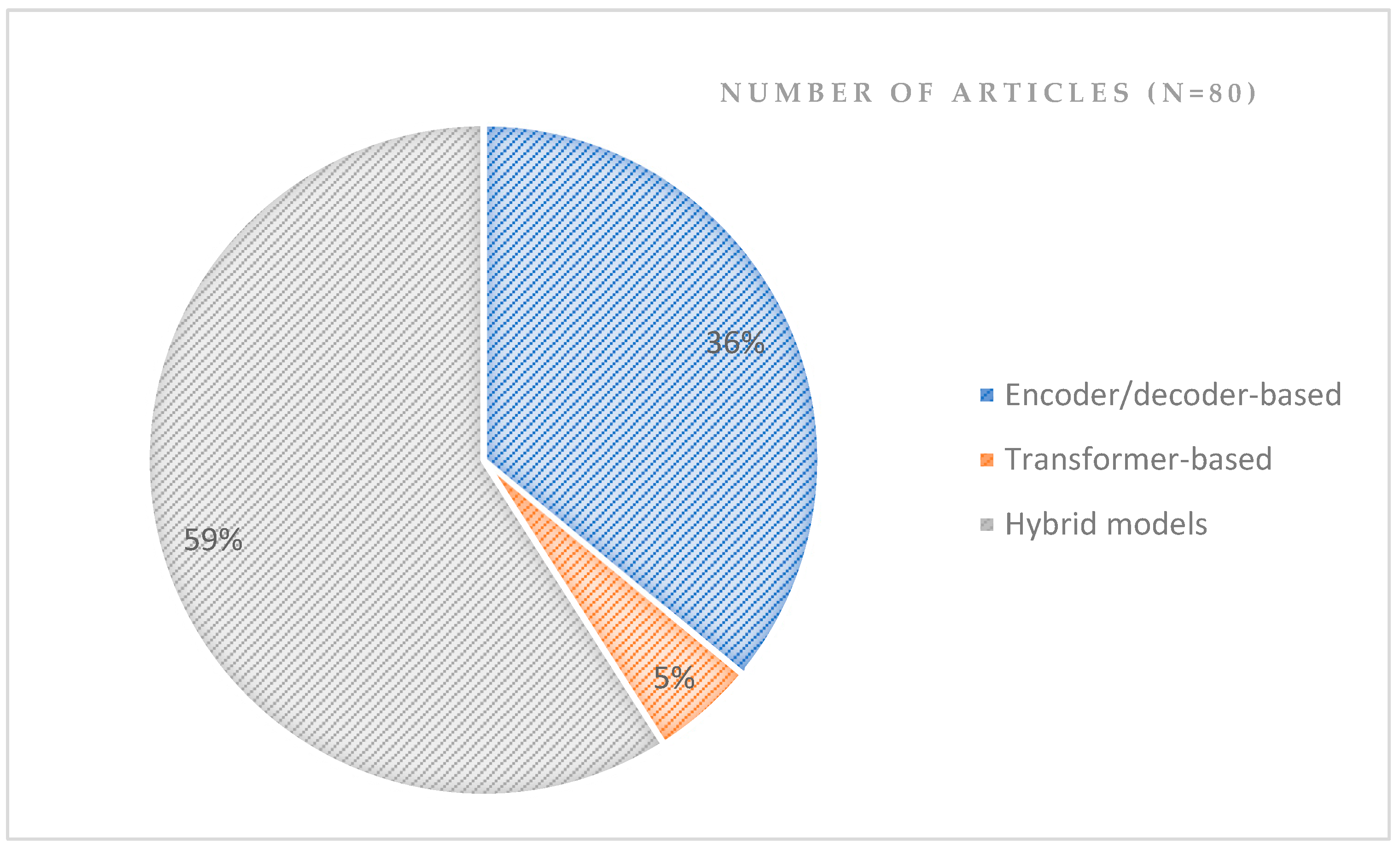

3.4. RQ4. What Are the Architecture and Performances of Semantic Segmentation Methodologies Used in Land Cover Mapping?

- Encoder-Decoder based structure

- Transformer-based structure

- Hybrid-based structure

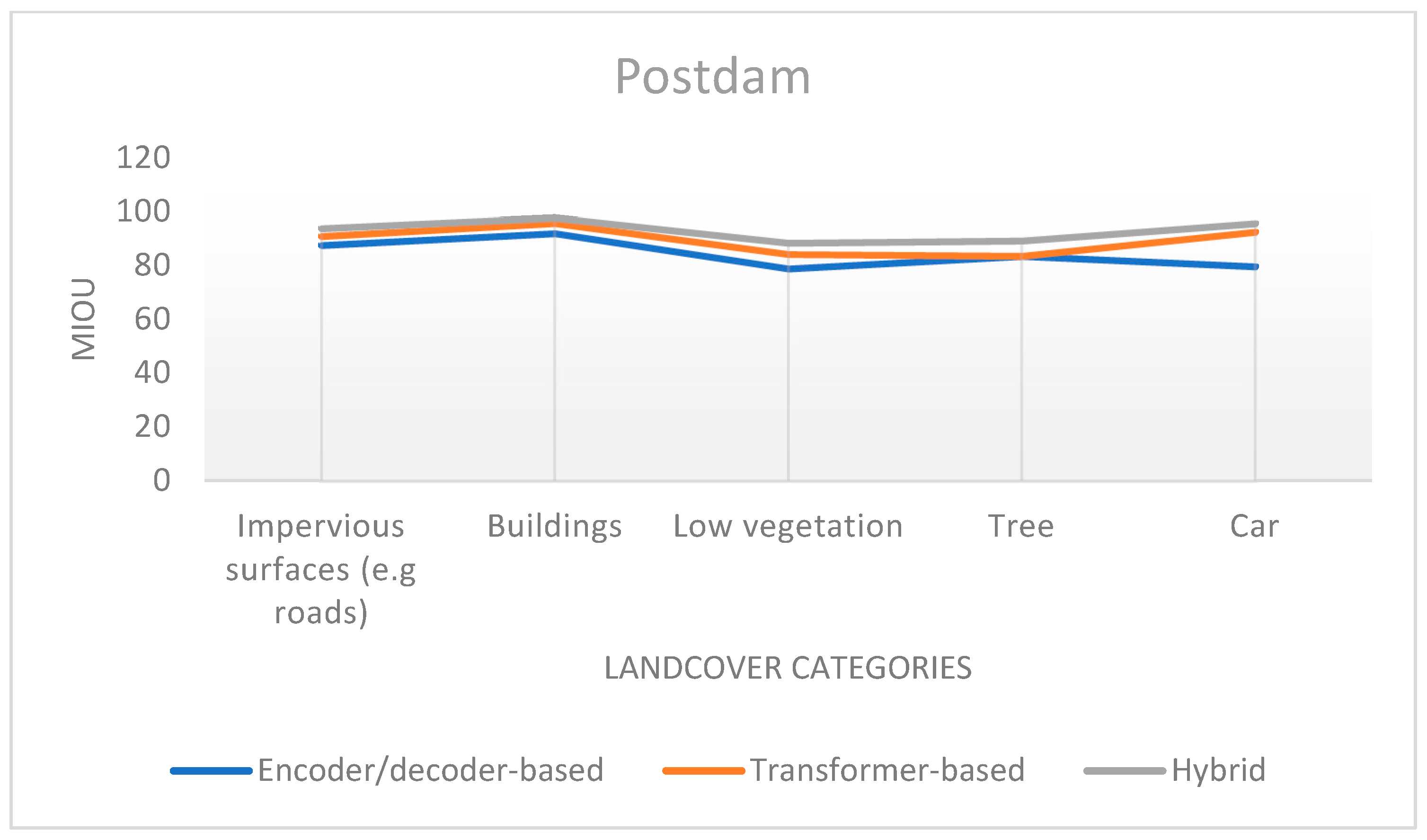

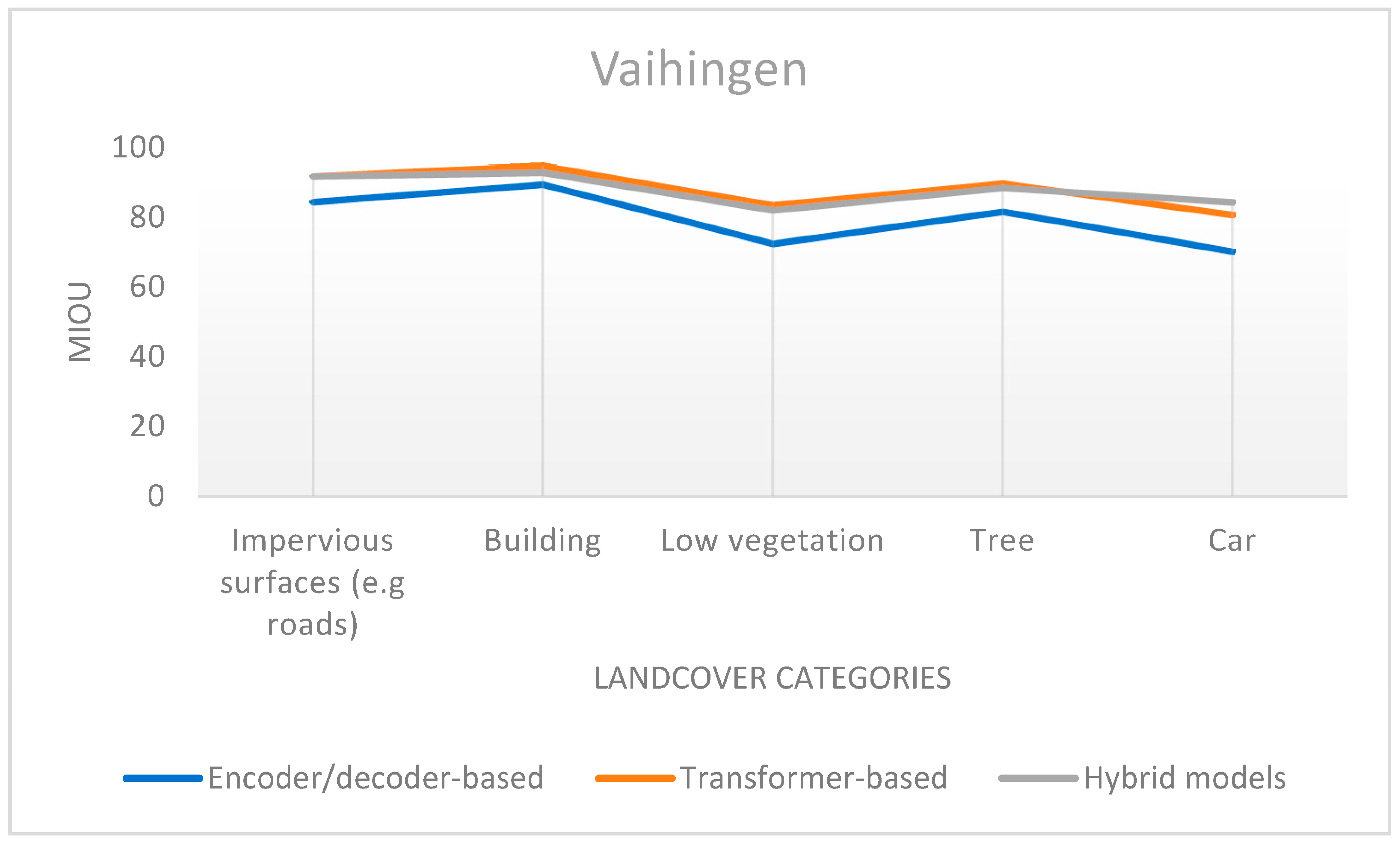

- Performance analysis of semantic segmentation model structures on ISPRS 2-D labelling Potsdam and Vaihingen datasets

- Common experimental training settings

4. Challenges, Future Insights and Directions

4.1. Land Cover Mapping

- Extracting boundary information

- Generating Precise Land Cover Maps

4.2. Semantic Segmentation Methodologies

- Enhancing deep learning model performance

- Analysis of RS images

- Unlabeled and Imbalanced RS data

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| BANet | Bilateral Awareness Network |

| CNN | Convolutional Neural Networks |

| DCNN | Deep Convolutional Neural Network |

| DEANET | Dual Encoder with Attention Network |

| DGFNET | Dual-Gate Fusion Network |

| DL | Deep Learning |

| DSM | Digital Surface Model |

| FCN | Fully Convolutional Networks |

| GF-2 | GaoFen-2 |

| GF-3 | GaoFen-3 |

| GID | GaoFen Image Data |

| HFENet | Hierarchical Feature Extraction Network |

| HMRT | Hybrid Multi-resolution and Transformer semantic extraction Network |

| IEEE | Institute of Electrical and Electronics Engineers |

| IoU | Intersection over Union |

| ISPRS | International Society for Photogrammetry and Remote Sensing |

| LC | Land Cover |

| LiDAR | Light Detection and Ranging |

| LoveDA | Land-cOVEr Domain Adaptive |

| LULC | Land Use and Land Cover |

| MARE | Multi-Attention REsu-Net |

| MDPI | Multidisciplinary Digital Publishing Institute |

| MIoU | Mean Intersection over Union |

| NLP | Natural Language Processing |

| OA | Overall Accuracy |

| PolSAR | Polarimetric Synthetic Aperture Radar |

| RAANET | Residual ASPP with Attention Net |

| RQ | Research Question |

| RS | Remote Sensing |

| RSI | Remote Sensing Imagery |

| SAR | Synthetic Aperture Radar |

| SBANet | Semantic Boundary Awareness Network |

| SEG-ESRGAN | Segmentation Enhanced Super-Resolution Generative Adversarial Network |

| SOCNN | Superpixel-Optimized Convolutional Neural Network |

| SOTA | State-Of-The-Art |

| UAS | Unmanned Aircraft System |

| UAV | Unmanned Aerial Vehicle |

| VEDAI | VEhicle Detection in Aerial Imagery |

| WHDLD | Wuhan Dense Labeling Dataset |

References

- Vali, A.; Comai, S.; Matteucci, M. Deep Learning for Land Use and Land Cover Classification Based on Hyperspectral and Multispectral Earth Observation Data: A Review. Remote Sens. 2020, 12, 2495. [Google Scholar] [CrossRef]

- Ma, J.; Wu, L.; Tang, X.; Liu, F.; Zhang, X.; Jiao, L. Building Extraction of Aerial Images by a Global and Multi-Scale Encoder-Decoder Network. Remote Sens. 2020, 12, 2350. [Google Scholar] [CrossRef]

- Pourmohammadi, P.; Adjeroh, D.A.; Strager, M.P.; Farid, Y.Z. Predicting Developed Land Expansion Using Deep Convolutional Neural Networks. Environ. Model. Softw. 2020, 134, 104751. [Google Scholar] [CrossRef]

- Di Pilato, A.; Taggio, N.; Pompili, A.; Iacobellis, M.; Di Florio, A.; Passarelli, D.; Samarelli, S. Deep Learning Approaches to Earth Observation Change Detection. Remote Sens. 2021, 13, 4083. [Google Scholar] [CrossRef]

- Wei, P.; Chai, D.; Lin, T.; Tang, C.; Du, M.; Huang, J. Large-Scale Rice Mapping under Different Years Based on Time-Series Sentinel-1 Images Using Deep Semantic Segmentation Model. ISPRS J. Photogramm. Remote Sens. 2021, 174, 198–214. [Google Scholar] [CrossRef]

- Dal Molin Jr., R.; Rizzoli, P. Potential of Convolutional Neural Networks for Forest Mapping Using Sentinel-1 Interferometric Short Time Series. Remote Sens. 2022, 14, 1381. [Google Scholar] [CrossRef]

- Sun, Y.; Zhang, X.; Huang, J.; Wang, H.; Xin, Q. Fine-Grained Building Change Detection from Very High-Spatial-Resolution Remote Sensing Images Based on Deep Multitask Learning. IEEE Geosci. Remote Sens. Lett. 2022, 19, 8000605. [Google Scholar] [CrossRef]

- Trenčanová, B.; Proença, V.; Bernardino, A. Development of Semantic Maps of Vegetation Cover from UAV Images to Support Planning and Management in Fine-Grained Fire-Prone Landscapes. Remote Sens. 2022, 14, 1262. [Google Scholar] [CrossRef]

- Zhang, X.; Wang, Z.; Zhang, J.; Wei, A. MSANet: An Improved Semantic Segmentation Method Using Multi-Scale Attention for Remote Sensing Images. Remote Sens. Lett. 2022, 13, 1249–1259. [Google Scholar] [CrossRef]

- Scepanovic, S.; Antropov, O.; Laurila, P.; Rauste, Y.; Ignatenko, V.; Praks, J. Wide-Area Land Cover Mapping with Sentinel-1 Imagery Using Deep Learning Semantic Segmentation Models. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 10357–10374. [Google Scholar] [CrossRef]

- Guo, Y.; Liu, Y.; Oerlemans, A.; Lao, S.; Wu, S.; Lew, M.S. Deep Learning for Visual Understanding: A Review. Neurocomputing 2016, 187, 27–48. [Google Scholar] [CrossRef]

- Huang, J.; Weng, L.; Chen, B.; Xia, M. DFFAN: Dual Function Feature Aggregation Network for Semantic Segmentation of Land Cover. ISPRS Int. J. Geoinf. 2021, 10, 125. [Google Scholar] [CrossRef]

- Chen, S.; Wu, C.; Mukherjee, M.; Zheng, Y. Ha-Mppnet: Height Aware-Multi Path Parallel Network for High Spatial Resolution Remote Sensing Image Semantic Seg-Mentation. ISPRS Int. J. Geoinf. 2021, 10, 672. [Google Scholar] [CrossRef]

- Hao, S.; Zhou, Y.; Guo, Y. A Brief Survey on Semantic Segmentation with Deep Learning. Neurocomputing 2020, 406, 302–321. [Google Scholar] [CrossRef]

- Chen, G.; Zhang, X.; Wang, Q.; Dai, F.; Gong, Y.; Zhu, K. Symmetrical Dense-Shortcut Deep Fully Convolutional Networks for Semantic Segmentation of Very-High-Resolution Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 1633–1644. [Google Scholar] [CrossRef]

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), Munich, Germany, 5–9 October 2015; Volume 9351. [Google Scholar]

- Chen, B.; Xia, M.; Huang, J. Mfanet: A Multi-Level Feature Aggregation Network for Semantic Segmentation of Land Cover. Remote Sens. 2021, 13, 731. [Google Scholar] [CrossRef]

- Weng, L.; Pang, K.; Xia, M.; Lin, H.; Qian, M.; Zhu, C. Sgformer: A Local and Global Features Coupling Network for Semantic Segmentation of Land Cover. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 6812–6824. [Google Scholar] [CrossRef]

- Wang, L.; Li, R.; Zhang, C.; Fang, S.; Duan, C.; Meng, X.; Atkinson, P.M. UNetFormer: A UNet-like Transformer for Efficient Semantic Segmentation of Remote Sensing Urban Scene Imagery. ISPRS J. Photogramm. Remote Sens. 2022, 190, 196–214. [Google Scholar] [CrossRef]

- Xiao, D.; Kang, Z.; Fu, Y.; Li, Z.; Ran, M. Csswin-Unet: A Swin-Unet Network for Semantic Segmentation of Remote Sensing Images by Aggregating Contextual Information and Extracting Spatial Information. Int. J. Remote Sens. 2023, 44, 7598–7625. [Google Scholar] [CrossRef]

- Garcia-Garcia, A.; Orts-Escolano, S.; Oprea, S.; Villena-Martinez, V.; Martinez-Gonzalez, P.; Garcia-Rodriguez, J. A Survey on Deep Learning Techniques for Image and Video Semantic Segmentation. Appl. Soft Comput. J. 2018, 70, 41–65. [Google Scholar] [CrossRef]

- Lateef, F.; Ruichek, Y. Survey on Semantic Segmentation Using Deep Learning Techniques. Neurocomputing 2019, 338, 321–348. [Google Scholar] [CrossRef]

- Yuan, X.; Shi, J.; Gu, L. A Review of Deep Learning Methods for Semantic Segmentation of Remote Sensing Imagery. Expert. Syst. Appl. 2021, 169, 114417. [Google Scholar] [CrossRef]

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 Statement: An Updated Guideline for Reporting Systematic Reviews. BMJ 2021, 372, n71. [Google Scholar] [CrossRef] [PubMed]

- Manley, K.; Nyelele, C.; Egoh, B.N. A Review of Machine Learning and Big Data Applications in Addressing Ecosystem Service Research Gaps. Ecosyst. Serv. 2022, 57, 101478. [Google Scholar] [CrossRef]

- Tian, T.; Chu, Z.; Hu, Q.; Ma, L. Class-Wise Fully Convolutional Network for Semantic Segmentation of Remote Sensing Images. Remote Sens. 2021, 13, 3211. [Google Scholar] [CrossRef]

- Wan, L.; Tian, Y.; Kang, W.; Ma, L. D-TNet: Category-Awareness Based Difference-Threshold Alternative Learning Network for Remote Sensing Image Change Detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5633316. [Google Scholar] [CrossRef]

- Picon, A.; Bereciartua-Perez, A.; Eguskiza, I.; Romero-Rodriguez, J.; Jimenez-Ruiz, C.J.; Eggers, T.; Klukas, C.; Navarra-Mestre, R. Deep Convolutional Neural Network for Damaged Vegetation Segmentation from RGB Images Based on Virtual NIR-Channel Estimation. Artif. Intell. Agric. 2022, 6, 199–210. [Google Scholar] [CrossRef]

- Zhang, Z.; Huang, X.; Li, J. DWin-HRFormer: A High-Resolution Transformer Model With Directional Windows for Semantic Segmentation of Urban Construction Land. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5400714. [Google Scholar] [CrossRef]

- Wang, L.; Li, R.; Wang, D.; Duan, C.; Wang, T.; Meng, X. Transformer Meets Convolution: A Bilateral Awareness Network for Semantic Segmentation of Very Fine Resolution Urban Scene Images. Remote Sens. 2021, 13, 3065. [Google Scholar] [CrossRef]

- Akcay, O.; Kinaci, A.C.; Avsar, E.O.; Aydar, U. Semantic Segmentation of High-Resolution Airborne Images with Dual-Stream DeepLabV3+. ISPRS Int. J. Geoinf. 2022, 11, 23. [Google Scholar] [CrossRef]

- Sun, Z.; Zhou, W.; Ding, C.; Xia, M. Multi-Resolution Transformer Network for Building and Road Segmentation of Remote Sensing Image. ISPRS Int. J. Geoinf. 2022, 11, 165. [Google Scholar] [CrossRef]

- Chen, T.-H.K.; Qiu, C.; Schmitt, M.; Zhu, X.X.; Sabel, C.E.; Prishchepov, A.V. Mapping Horizontal and Vertical Urban Densification in Denmark with Landsat Time-Series from 1985 to 2018: A Semantic Segmentation Solution. Remote Sens. Environ. 2020, 251, 112096. [Google Scholar] [CrossRef]

- Wu, F.; Wang, C.; Zhang, H.; Li, J.; Li, L.; Chen, W.; Zhang, B. Built-up Area Mapping in China from GF-3 SAR Imagery Based on the Framework of Deep Learning. Remote Sens. Environ. 2021, 262, 112515. [Google Scholar] [CrossRef]

- Xu, S.; Zhang, S.; Zeng, J.; Li, T.; Guo, Q.; Jin, S. A Framework for Land Use Scenes Classification Based on Landscape Photos. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 6124–6141. [Google Scholar] [CrossRef]

- Xu, L.; Shi, S.; Liu, Y.; Zhang, H.; Wang, D.; Zhang, L.; Liang, W.; Chen, H. A Large-Scale Remote Sensing Scene Dataset Construction for Semantic Segmentation. Int. J. Image Data Fusion 2023, 14, 299–323. [Google Scholar] [CrossRef]

- Sirous, A.; Satari, M.; Shahraki, M.M.; Pashayi, M. A Conditional Generative Adversarial Network for Urban Area Classification Using Multi-Source Data. Earth Sci. Inf. 2023, 16, 2529–2543. [Google Scholar] [CrossRef]

- Vasavi, S.; Sri Somagani, H.; Sai, Y. Classification of Buildings from VHR Satellite Images Using Ensemble of U-Net and ResNet. Egypt. J. Remote Sens. Space Sci. 2023, 26, 937–953. [Google Scholar] [CrossRef]

- Kang, J.; Fernandez-Beltran, R.; Sun, X.; Ni, J.; Plaza, A. Deep Learning-Based Building Footprint Extraction with Missing Annotations. IEEE Geosci. Remote Sens. Lett. 2022, 19, 3002805. [Google Scholar] [CrossRef]

- Yu, J.; Zeng, P.; Yu, Y.; Yu, H.; Huang, L.; Zhou, D. A Combined Convolutional Neural Network for Urban Land-Use Classification with GIS Data. Remote Sens. 2022, 14, 1128. [Google Scholar] [CrossRef]

- Wei, P.; Chai, D.; Huang, R.; Peng, D.; Lin, T.; Sha, J.; Sun, W.; Huang, J. Rice Mapping Based on Sentinel-1 Images Using the Coupling of Prior Knowledge and Deep Semantic Segmentation Network: A Case Study in Northeast China from 2019 to 2021. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102948. [Google Scholar] [CrossRef]

- Liu, S.; Peng, D.; Zhang, B.; Chen, Z.; Yu, L.; Chen, J.; Pan, Y.; Zheng, S.; Hu, J.; Lou, Z.; et al. The Accuracy of Winter Wheat Identification at Different Growth Stages Using Remote Sensing. Remote Sens. 2022, 14, 893. [Google Scholar] [CrossRef]

- Bem, P.P.D.; de Carvalho Júnior, O.A.; Carvalho, O.L.F.D.; Gomes, R.A.T.; Guimarāes, R.F.; Pimentel, C.M.M. Irrigated Rice Crop Identification in Southern Brazil Using Convolutional Neural Networks and Sentinel-1 Time Series. Remote Sens. Appl. 2021, 24, 100627. [Google Scholar] [CrossRef]

- Niu, B.; Feng, Q.; Su, S.; Yang, Z.; Zhang, S.; Liu, S.; Wang, J.; Yang, J.; Gong, J. Semantic Segmentation for Plastic-Covered Greenhouses and Plastic-Mulched Farmlands from VHR Imagery. Int. J. Digit. Earth 2023, 16, 4553–4572. [Google Scholar] [CrossRef]

- Sykas, D.; Sdraka, M.; Zografakis, D.; Papoutsis, I. A Sentinel-2 Multiyear, Multicountry Benchmark Dataset for Crop Classification and Segmentation With Deep Learning. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 3323–3339. [Google Scholar] [CrossRef]

- Descals, A.; Wich, S.; Meijaard, E.; Gaveau, D.L.A.; Peedell, S.; Szantoi, Z. High-Resolution Global Map of Smallholder and Industrial Closed-Canopy Oil Palm Plantations. Earth Syst. Sci. Data 2021, 13, 1211–1231. [Google Scholar] [CrossRef]

- He, J.; Lyu, D.; He, L.; Zhang, Y.; Xu, X.; Yi, H.; Tian, Q.; Liu, B.; Zhang, X. Combining Object-Oriented and Deep Learning Methods to Estimate Photosynthetic and Non-Photosynthetic Vegetation Cover in the Desert from Unmanned Aerial Vehicle Images with Consideration of Shadows. Remote Sens. 2023, 15, 105. [Google Scholar] [CrossRef]

- Wan, L.; Li, S.; Chen, Y.; He, Z.; Shi, Y. Application of Deep Learning in Land Use Classification for Soil Erosion Using Remote Sensing. Front. Earth Sci. 2022, 10, 849531. [Google Scholar] [CrossRef]

- Cho, A.Y.; Park, S.-E.; Kim, D.-J.; Kim, J.; Li, C.; Song, J. Burned Area Mapping Using Unitemporal PlanetScope Imagery With a Deep Learning Based Approach. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 242–253. [Google Scholar] [CrossRef]

- Bergado, J.R.; Persello, C.; Reinke, K.; Stein, A. Predicting Wildfire Burns from Big Geodata Using Deep Learning. Saf. Sci. 2021, 140, 105276. [Google Scholar] [CrossRef]

- Wang, Z.; Yang, P.; Liang, H.; Zheng, C.; Yin, J.; Tian, Y.; Cui, W. Semantic Segmentation and Analysis on Sensitive Parameters of Forest Fire Smoke Using Smoke-Unet and Landsat-8 Imagery. Remote Sens. 2022, 14, 45. [Google Scholar] [CrossRef]

- Liu, C.-C.; Zhang, Y.-C.; Chen, P.-Y.; Lai, C.-C.; Chen, Y.-H.; Cheng, J.-H.; Ko, M.-H. Clouds Classification from Sentinel-2 Imagery with Deep Residual Learning and Semantic Image Segmentation. Remote Sens. 2019, 11, 119. [Google Scholar] [CrossRef]

- Ji, W.; Chen, Y.; Li, K.; Dai, X. Multicascaded Feature Fusion-Based Deep Learning Network for Local Climate Zone Classification Based on the So2Sat LCZ42 Benchmark Dataset. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 449–467. [Google Scholar] [CrossRef]

- Ayhan, B.; Kwan, C. Tree, Shrub, and Grass Classification Using Only RGB Images. Remote Sens. 2020, 12, 1333. [Google Scholar] [CrossRef]

- Maxwell, A.E.; Bester, M.S.; Guillen, L.A.; Ramezan, C.A.; Carpinello, D.J.; Fan, Y.; Hartley, F.M.; Maynard, S.M.; Pyron, J.L. Semantic Segmentation Deep Learning for Extracting Surface Mine Extents from Historic Topographic Maps. Remote Sens. 2020, 12, 4145. [Google Scholar] [CrossRef]

- Zhou, G.; Xu, J.; Chen, W.; Li, X.; Li, J.; Wang, L. Deep Feature Enhancement Method for Land Cover With Irregular and Sparse Spatial Distribution Features: A Case Study on Open-Pit Mining. IEEE Trans. Geosci. Remote Sens. 2023, 61, 4401220. [Google Scholar] [CrossRef]

- Lee, S.-H.; Han, K.-J.; Lee, K.; Lee, K.-J.; Oh, K.-Y.; Lee, M.-J. Classification of Landscape Affected by Deforestation Using High-resolution Remote Sensing Data and Deep-learning Techniques. Remote Sens. 2020, 12, 3372. [Google Scholar] [CrossRef]

- Yu, T.; Wu, W.; Gong, C.; Li, X. Residual Multi-Attention Classification Network for a Forest Dominated Tropical Landscape Using High-Resolution Remote Sensing Imagery. ISPRS Int. J. Geoinf. 2021, 10, 22. [Google Scholar] [CrossRef]

- Pashaei, M.; Kamangir, H.; Starek, M.J.; Tissot, P. Review and Evaluation of Deep Learning Architectures for Efficient Land Cover Mapping with UAS Hyper-Spatial Imagery: A Case Study over a Wetland. Remote Sens. 2020, 12, 959. [Google Scholar] [CrossRef]

- Fang, B.; Chen, G.; Chen, J.; Ouyang, G.; Kou, R.; Wang, L. Cct: Conditional Co-Training for Truly Unsupervised Remote Sensing Image Segmentation in Coastal Areas. Remote Sens. 2021, 13, 3521. [Google Scholar] [CrossRef]

- Buchsteiner, C.; Baur, P.A.; Glatzel, S. Spatial Analysis of Intra-Annual Reed Ecosystem Dynamics at Lake Neusiedl Using RGB Drone Imagery and Deep Learning. Remote Sens. 2023, 15, 3961. [Google Scholar] [CrossRef]

- Wang, Z.; Mahmoudian, N. Aerial Fluvial Image Dataset for Deep Semantic Segmentation Neural Networks and Its Benchmarks. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 4755–4766. [Google Scholar] [CrossRef]

- Chen, J.; Chen, G.; Wang, L.; Fang, B.; Zhou, P.; Zhu, M. Coastal Land Cover Classification of High-Resolution Remote Sensing Images Using Attention-Driven Context Encoding Network. Sensors 2020, 20, 7032. [Google Scholar] [CrossRef] [PubMed]

- Li, Y.; Zhou, Y.; Zhang, Y.; Zhong, L.; Wang, J.; Chen, J. DKDFN: Domain Knowledge-Guided Deep Collaborative Fusion Network for Multimodal Unitemporal Remote Sensing Land Cover Classification. ISPRS J. Photogramm. Remote Sens. 2022, 186, 170–189. [Google Scholar] [CrossRef]

- Tzepkenlis, A.; Marthoglou, K.; Grammalidis, N. Efficient Deep Semantic Segmentation for Land Cover Classification Using Sentinel Imagery. Remote Sens. 2023, 15, 2027. [Google Scholar] [CrossRef]

- Billson, J.; Islam, M.D.S.; Sun, X.; Cheng, I. Water Body Extraction from Sentinel-2 Imagery with Deep Convolutional Networks and Pixelwise Category Transplantation. Remote Sens. 2023, 15, 1253. [Google Scholar] [CrossRef]

- Bergamasco, L.; Bovolo, F.; Bruzzone, L. A Dual-Branch Deep Learning Architecture for Multisensor and Multitemporal Remote Sensing Semantic Segmentation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 2147–2162. [Google Scholar] [CrossRef]

- Yang, X.; Zhang, B.; Chen, Z.; Bai, Y.; Chen, P. A Multi-Temporal Network for Improving Semantic Segmentation of Large-Scale Landsat Imagery. Remote Sens. 2022, 14, 5062. [Google Scholar] [CrossRef]

- Yang, X.; Chen, Z.; Zhang, B.; Li, B.; Bai, Y.; Chen, P. A Block Shuffle Network with Superpixel Optimization for Landsat Image Semantic Segmentation. Remote Sens. 2022, 14, 1432. [Google Scholar] [CrossRef]

- Boonpook, W.; Tan, Y.; Nardkulpat, A.; Torsri, K.; Torteeka, P.; Kamsing, P.; Sawangwit, U.; Pena, J.; Jainaen, M. Deep Learning Semantic Segmentation for Land Use and Land Cover Types Using Landsat 8 Imagery. ISPRS Int. J. Geoinf. 2023, 12, 14. [Google Scholar] [CrossRef]

- Bergado, J.R.; Persello, C.; Stein, A. Recurrent Multiresolution Convolutional Networks for VHR Image Classification. IEEE Trans. Geosci. Remote Sens. 2018, 56, 6361–6374. [Google Scholar] [CrossRef]

- Karila, K.; Matikainen, L.; Karjalainen, M.; Puttonen, E.; Chen, Y.; Hyyppä, J. Automatic Labelling for Semantic Segmentation of VHR Satellite Images: Application of Airborne Laser Scanner Data and Object-Based Image Analysis. ISPRS Open J. Photogramm. Remote Sens. 2023, 9, 100046. [Google Scholar] [CrossRef]

- Zhang, X.; Du, L.; Tan, S.; Wu, F.; Zhu, L.; Zeng, Y.; Wu, B. Land Use and Land Cover Mapping Using Rapideye Imagery Based on a Novel Band Attention Deep Learning Method in the Three Gorges Reservoir Area. Remote Sens. 2021, 13, 1225. [Google Scholar] [CrossRef]

- Zhu, Y.; Geis, C.; So, E.; Jin, Y. Multitemporal Relearning with Convolutional LSTM Models for Land Use Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 3251–3265. [Google Scholar] [CrossRef]

- Fan, Z.; Zhan, T.; Gao, Z.; Li, R.; Liu, Y.; Zhang, L.; Jin, Z.; Xu, S. Land Cover Classification of Resources Survey Remote Sensing Images Based on Segmentation Model. IEEE Access 2022, 10, 56267–56281. [Google Scholar] [CrossRef]

- Clark, A.; Phinn, S.; Scarth, P. Pre-Processing Training Data Improves Accuracy and Generalisability of Convolutional Neural Network Based Landscape Semantic Segmentation. Land 2023, 12, 1268. [Google Scholar] [CrossRef]

- Mohammadimanesh, F.; Salehi, B.; Mahdianpari, M.; Gill, E.; Molinier, M. A New Fully Convolutional Neural Network for Semantic Segmentation of Polarimetric SAR Imagery in Complex Land Cover Ecosystem. ISPRS J. Photogramm. Remote Sens. 2019, 151, 223–236. [Google Scholar] [CrossRef]

- Wenger, R.; Puissant, A.; Weber, J.; Idoumghar, L.; Forestier, G. Multimodal and Multitemporal Land Use/Land Cover Semantic Segmentation on Sentinel-1 and Sentinel-2 Imagery: An Application on a MultiSenGE Dataset. Remote Sens. 2023, 15, 151. [Google Scholar] [CrossRef]

- Xia, J.; Yokoya, N.; Adriano, B.; Zhang, L.; Li, G.; Wang, Z. A Benchmark High-Resolution GaoFen-3 SAR Dataset for Building Semantic Segmentation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 5950–5963. [Google Scholar] [CrossRef]

- Kotru, R.; Turkar, V.; Simu, S.; De, S.; Shaikh, M.; Banerjee, S.; Singh, G.; Das, A. Development of a Generalized Model to Classify Various Land Covers for ALOS-2 L-Band Images Using Semantic Segmentation. Adv. Space Res. 2022, 70, 3811–3821. [Google Scholar] [CrossRef]

- Mehra, A.; Jain, N.; Srivastava, H.S. A Novel Approach to Use Semantic Segmentation Based Deep Learning Networks to Classify Multi-Temporal SAR Data. Geocarto Int. 2022, 37, 163–178. [Google Scholar] [CrossRef]

- Pešek, O.; Segal-Rozenhaimer, M.; Karnieli, A. Using Convolutional Neural Networks for Cloud Detection on VENμS Images over Multiple Land-Cover Types. Remote Sens. 2022, 14, 5210. [Google Scholar] [CrossRef]

- Jing, H.; Wang, Z.; Sun, X.; Xiao, D.; Fu, K. PSRN: Polarimetric Space Reconstruction Network for PolSAR Image Semantic Segmentation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 10716–10732. [Google Scholar] [CrossRef]

- Zhang, R.; Chen, J.; Feng, L.; Li, S.; Yang, W.; Guo, D. A Refined Pyramid Scene Parsing Network for Polarimetric SAR Image Semantic Segmentation in Agricultural Areas. IEEE Geosci. Remote Sens. Lett. 2022, 19, 4014805. [Google Scholar] [CrossRef]

- Garg, R.; Kumar, A.; Bansal, N.; Prateek, M.; Kumar, S. Semantic Segmentation of PolSAR Image Data Using Advanced Deep Learning Model. Sci. Rep. 2021, 11, 15365. [Google Scholar] [CrossRef]

- Zheng, N.-R.; Yang, Z.-A.; Shi, X.-Z.; Zhou, R.-Y.; Wang, F. Land Cover Classification of Synthetic Aperture Radar Images Based on Encoder—Decoder Network with an Attention Mechanism. J. Appl. Remote Sens. 2022, 16, 014520. [Google Scholar] [CrossRef]

- Shi, X.; Fu, S.; Chen, J.; Wang, F.; Xu, F. Object-Level Semantic Segmentation on the High-Resolution Gaofen-3 FUSAR-Map Dataset. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 3107–3119. [Google Scholar] [CrossRef]

- Yoshida, K.; Pan, S.; Taniguchi, J.; Nishiyama, S.; Kojima, T.; Islam, M.T. Airborne LiDAR-Assisted Deep Learning Methodology for Riparian Land Cover Classification Using Aerial Photographs and Its Application for Flood Modelling. J. Hydroinformatics 2022, 24, 179–201. [Google Scholar] [CrossRef]

- Arief, H.A.; Strand, G.-H.; Tveite, H.; Indahl, U.G. Land Cover Segmentation of Airborne LiDAR Data Using Stochastic Atrous Network. Remote Sens. 2018, 10, 973. [Google Scholar] [CrossRef]

- Xu, Z.; Su, C.; Zhang, X. A Semantic Segmentation Method with Category Boundary for Land Use and Land Cover (LULC) Mapping of Very-High Resolution (VHR) Remote Sensing Image. Int. J. Remote Sens. 2021, 42, 3146–3165. [Google Scholar] [CrossRef]

- Liu, M.; Zhang, P.; Shi, Q.; Liu, M. An Adversarial Domain Adaptation Framework with KL-Constraint for Remote Sensing Land Cover Classification. IEEE Geosci. Remote Sens. Lett. 2022, 19, 3002305. [Google Scholar] [CrossRef]

- Lee, D.G.; Shin, Y.H.; Lee, D.C. Land Cover Classification Using SegNet with Slope, Aspect, and Multidirectional Shaded Relief Images Derived from Digital Surface Model. J. Sens. 2020, 2020, 8825509. [Google Scholar] [CrossRef]

- Wang, Y.; Shi, H.; Zhuang, Y.; Sang, Q.; Chen, L. Bidirectional Grid Fusion Network for Accurate Land Cover Classification of High-Resolution Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 5508–5517. [Google Scholar] [CrossRef]

- Shi, H.; Fan, J.; Wang, Y.; Chen, L. Dual Attention Feature Fusion and Adaptive Context for Accurate Segmentation of Very High-Resolution Remote Sensing Images. Remote Sens. 2021, 13, 3715. [Google Scholar] [CrossRef]

- He, S.; Lu, X.; Gu, J.; Tang, H.; Yu, Q.; Liu, K.; Ding, H.; Chang, C.; Wang, N. RSI-Net: Two-Stream Deep Neural Network for Remote Sensing Images-Based Semantic Segmentation. IEEE Access 2022, 10, 34858–34871. [Google Scholar] [CrossRef]

- Yang, N.; Tang, H. Semantic Segmentation of Satellite Images: A Deep Learning Approach Integrated with Geospatial Hash Codes. Remote Sens. 2021, 13, 2723. [Google Scholar] [CrossRef]

- Boguszewski, A.; Batorski, D.; Ziemba-Jankowska, N.; Dziedzic, T.; Zambrzycka, A. LandCover.Ai: Dataset for Automatic Mapping of Buildings, Woodlands, Water and Roads from Aerial Imagery. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, Nashville, TN, USA, 20–25 June 2021. [Google Scholar]

- Gao, J.; Weng, L.; Xia, M.; Lin, H. MLNet: Multichannel Feature Fusion Lozenge Network for Land Segmentation. J. Appl. Remote Sens. 2022, 16, 016513. [Google Scholar] [CrossRef]

- Demir, I.; Koperski, K.; Lindenbaum, D.; Pang, G.; Huang, J.; Basu, S.; Hughes, F.; Tuia, D.; Raska, R. DeepGlobe 2018: A Challenge to Parse the Earth through Satellite Images. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–23 June 2018; Volume 2018. [Google Scholar]

- Wei, H.; Xu, X.; Ou, N.; Zhang, X.; Dai, Y. Deanet: Dual Encoder with Attention Network for Semantic Segmentation of Remote Sensing Imagery. Remote Sens. 2021, 13, 3900. [Google Scholar] [CrossRef]

- Maggiori, E.; Tarabalka, Y.; Charpiat, G.; Alliez, P. Can Semantic Labeling Methods Generalize to Any City? The Inria Aerial Image Labeling Benchmark. In Proceedings of the International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017; Volume 2017. [Google Scholar]

- Li, W.; He, C.; Fang, J.; Zheng, J.; Fu, H.; Yu, L. Semantic Segmentation-Based Building Footprint Extraction Using Very High-Resolution Satellite Images and Multi-Source GIS Data. Remote Sens. 2019, 11, 403. [Google Scholar] [CrossRef]

- Ji, S.; Wang, D.; Luo, M. Generative Adversarial Network-Based Full-Space Domain Adaptation for Land Cover Classification from Multiple-Source Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2021, 59, 3816–3828. [Google Scholar] [CrossRef]

- Chen, Q.; Wang, L.; Wu, Y.; Wu, G.; Guo, Z.; Waslander, S.L. Aerial Imagery for Roof Segmentation: A Large-Scale Dataset towards Automatic Mapping of Buildings. ISPRS J. Photogramm. Remote Sens. 2019, 147, 42–55. [Google Scholar] [CrossRef]

- Audebert, N.; Le Saux, B.; Lefèvre, S. Segment-before-Detect: Vehicle Detection and Classification through Semantic Segmentation of Aerial Images. Remote Sens. 2017, 9, 368. [Google Scholar] [CrossRef]

- Abdollahi, A.; Pradhan, B.; Shukla, N.; Chakraborty, S.; Alamri, A. Multi-Object Segmentation in Complex Urban Scenes from High-Resolution Remote Sensing Data. Remote Sens. 2021, 13, 3710. [Google Scholar] [CrossRef]

- Khan, S.D.; Alarabi, L.; Basalamah, S. Deep Hybrid Network for Land Cover Semantic Segmentation in High-Spatial Resolution Satellite Images. Information 2021, 12, 230. [Google Scholar] [CrossRef]

- Liu, R.; Tao, F.; Liu, X.; Na, J.; Leng, H.; Wu, J.; Zhou, T. RAANet: A Residual ASPP with Attention Framework for Semantic Segmentation of High-Resolution Remote Sensing Images. Remote Sens. 2022, 14, 3109. [Google Scholar] [CrossRef]

- Sang, Q.; Zhuang, Y.; Dong, S.; Wang, G.; Chen, H. FRF-Net: Land Cover Classification from Large-Scale VHR Optical Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2020, 17, 1057–1061. [Google Scholar] [CrossRef]

- Guo, Y.; Wang, F.; Xiang, Y.; You, H. Article Dgfnet: Dual Gate Fusion Network for Land Cover Classification in Very High-Resolution Images. Remote Sens. 2021, 13, 3755. [Google Scholar] [CrossRef]

- Niu, X.; Zeng, Q.; Luo, X.; Chen, L. FCAU-Net for the Semantic Segmentation of Fine-Resolution Remotely Sensed Images. Remote Sens. 2022, 14, 215. [Google Scholar] [CrossRef]

- Wu, M.; Zhang, C.; Liu, J.; Zhou, L.; Li, X. Towards Accurate High Resolution Satellite Image Semantic Segmentation. IEEE Access 2019, 7, 55609–55619. [Google Scholar] [CrossRef]

- Li, J.; Wang, H.; Zhang, A.; Liu, Y. Semantic Segmentation of Hyperspectral Remote Sensing Images Based on PSE-UNet Model. Sensors 2022, 22, 9678. [Google Scholar] [CrossRef]

- Salgueiro, L.; Marcello, J.; Vilaplana, V. SEG-ESRGAN: A Multi-Task Network for Super-Resolution and Semantic Segmentation of Remote Sensing Images. Remote Sens. 2022, 14, 5862. [Google Scholar] [CrossRef]

- Marsocci, V.; Scardapane, S.; Komodakis, N. MARE: Self-Supervised Multi-Attention REsu-Net for Semantic Segmentation in Remote Sensing. Remote Sens. 2021, 13, 3275. [Google Scholar] [CrossRef]

- Wang, S.; Mu, X.; Yang, D.; He, H.; Zhao, P. Attention Guided Encoder-Decoder Network with Multi-Scale Context Aggregation for Land Cover Segmentation. IEEE Access 2020, 8, 215299–215309. [Google Scholar] [CrossRef]

- Feng, D.; Zhang, Z.; Yan, K. A Semantic Segmentation Method for Remote Sensing Images Based on the Swin Transformer Fusion Gabor Filter. IEEE Access 2022, 10, 77432–77451. [Google Scholar] [CrossRef]

- Bai, J.; Wen, Z.; Xiao, Z.; Ye, F.; Zhu, Y.; Alazab, M.; Jiao, L. Hyperspectral Image Classification Based on Multibranch Attention Transformer Networks. IEEE Trans. Geosci. Remote Sens. 2022, 60, 3196661. [Google Scholar] [CrossRef]

- Meng, X.; Yang, Y.; Wang, L.; Wang, T.; Li, R.; Zhang, C. Class-Guided Swin Transformer for Semantic Segmentation of Remote Sensing Imagery. IEEE Geosci. Remote Sens. Lett. 2022, 19, 6517505. [Google Scholar] [CrossRef]

- Wang, D.; Yang, R.; Zhang, Z.; Liu, H.; Tan, J.; Li, S.; Yang, X.; Wang, X.; Tang, K.; Qiao, Y.; et al. P-Swin: Parallel Swin Transformer Multi-Scale Semantic Segmentation Network for Land Cover Classification. Comput. Geosci. 2023, 175, 105340.

- Dong, R.; Fang, W.; Fu, H.; Gan, L.; Wang, J.; Gong, P. High-Resolution Land Cover Mapping through Learning with Noise Correction. IEEE Trans. Geosci. Remote Sens. 2022, 60, 4402013.

- Shen, X.; Weng, L.; Xia, M.; Lin, H. Multi-Scale Feature Aggregation Network for Semantic Segmentation of Land Cover. Remote Sens. 2022, 14, 6156.

- Luo, Y.; Wang, J.; Yang, X.; Yu, Z.; Tan, Z. Pixel Representation Augmented through Cross-Attention for High-Resolution Remote Sensing Imagery Segmentation. Remote Sens. 2022, 14, 5415.

- Yuan, X.; Chen, Z.; Chen, N.; Gong, J. Land Cover Classification Based on the PSPNet and Superpixel Segmentation Methods with High Spatial Resolution Multispectral Remote Sensing Imagery. J. Appl. Remote Sens. 2021, 15, 034511.

- Zhang, C.; Yue, P.; Tapete, D.; Shangguan, B.; Wang, M.; Wu, Z. A Multi-Level Context-Guided Classification Method with Object-Based Convolutional Neural Network for Land Cover Classification Using Very High Resolution Remote Sensing Images. Int. J. Appl. Earth Obs. Geoinf. 2020, 88, 102086.

- Van den Broeck, W.A.J.; Goedemé, T.; Loopmans, M. Multiclass Land Cover Mapping from Historical Orthophotos Using Domain Adaptation and Spatio-Temporal Transfer Learning. Remote Sens. 2022, 14, 5911.

- Zhang, B.; Chen, T.; Wang, B. Curriculum-Style Local-to-Global Adaptation for Cross-Domain Remote Sensing Image Segmentation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5611412.

- Li, A.; Jiao, L.; Zhu, H.; Li, L.; Liu, F. Multitask Semantic Boundary Awareness Network for Remote Sensing Image Segmentation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5400314.

- Shan, L.; Wang, W. DenseNet-Based Land Cover Classification Network with Deep Fusion. IEEE Geosci. Remote Sens. Lett. 2022, 19.

- Safarov, F.; Temurbek, K.; Jamoljon, D.; Temur, O.; Chedjou, J.C.; Abdusalomov, A.B.; Cho, Y.-I. Improved Agricultural Field Segmentation in Satellite Imagery Using TL-ResUNet Architecture. Sensors 2022, 22, 9784.

- Liu, Z.-Q.; Tang, P.; Zhang, W.; Zhang, Z. CNN-Enhanced Heterogeneous Graph Convolutional Network: Inferring Land Use from Land Cover with a Case Study of Park Segmentation. Remote Sens. 2022, 14, 5027.

- Wang, D.; Yang, R.; Liu, H.; He, H.; Tan, J.; Li, S.; Qiao, Y.; Tang, K.; Wang, X. HFENet: Hierarchical Feature Extraction Network for Accurate Landcover Classification. Remote Sens. 2022, 14, 4244.

- Zhang, H.; Liu, M.; Wang, Y.; Shang, J.; Liu, X.; Li, B.; Song, A.; Li, Q. Automated Delineation of Agricultural Field Boundaries from Sentinel-2 Images Using Recurrent Residual U-Net. Int. J. Appl. Earth Obs. Geoinf. 2021, 105, 102557.

- Maggiolo, L.; Marcos, D.; Moser, G.; Serpico, S.B.; Tuia, D. A Semisupervised CRF Model for CNN-Based Semantic Segmentation with Sparse Ground Truth. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5606315.

- Barthakur, M.; Sarma, K.K.; Mastorakis, N. Modified Semi-Supervised Adversarial Deep Network and Classifier Combination for Segmentation of Satellite Images. IEEE Access 2020, 8, 117972–117985.

- Wang, Q.; Luo, X.; Feng, J.; Li, S.; Yin, J. CCENet: Cascade Class-Aware Enhanced Network for High-Resolution Aerial Imagery Semantic Segmentation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 6943–6956.

- Zhang, C.; Zhang, L.; Zhang, B.Y.J.; Sun, J.; Dong, S.; Wang, X.; Li, Y.; Xu, J.; Chu, W.; Dong, Y.; et al. Land Cover Classification in a Mixed Forest-Grassland Ecosystem Using LResU-Net and UAV Imagery. J. For. Res. 2022, 33, 923–936.

- Xu, Y.; Wu, L.; Xie, Z.; Chen, Z. Building Extraction in Very High Resolution Remote Sensing Imagery Using Deep Learning and Guided Filters. Remote Sens. 2018, 10, 144.

- Li, L.; Yao, J.; Liu, Y.; Yuan, W.; Shi, S.; Yuan, S. Optimal Seamline Detection for Orthoimage Mosaicking by Combining Deep Convolutional Neural Network and Graph Cuts. Remote Sens. 2017, 9, 701.

- Cecili, G.; De Fioravante, P.; Congedo, L.; Marchetti, M.; Munafò, M. Land Consumption Mapping with Convolutional Neural Network: Case Study in Italy. Land 2022, 11, 1919.

- Abadal, S.; Salgueiro, L.; Marcello, J.; Vilaplana, V. A Dual Network for Super-Resolution and Semantic Segmentation of Sentinel-2 Imagery. Remote Sens. 2021, 13, 4547.

- Henry, C.J.; Storie, C.D.; Palaniappan, M.; Alhassan, V.; Swamy, M.; Aleshinloye, D.; Curtis, A.; Kim, D. Automated LULC Map Production Using Deep Neural Networks. Int. J. Remote Sens. 2019, 40, 4416–4440.

- Shojaei, H.; Nadi, S.; Shafizadeh-Moghadam, H.; Tayyebi, A.; Van Genderen, J. An Efficient Built-up Land Expansion Model Using a Modified U-Net. Int. J. Digit. Earth 2022, 15, 148–163.

- Dong, X.; Zhang, C.; Fang, L.; Yan, Y. A Deep Learning Based Framework for Remote Sensing Image Ground Object Segmentation. Appl. Soft Comput. 2022, 130, 109695.

- Guo, X.; Chen, Z.; Wang, C. Fully Convolutional Densenet with Adversarial Training for Semantic Segmentation of High-Resolution Remote Sensing Images. J. Appl. Remote Sens. 2021, 15, 016520.

- Zhang, B.; Wan, Y.; Zhang, Y.; Li, Y. JSH-Net: Joint Semantic Segmentation and Height Estimation Using Deep Convolutional Networks from Single High-Resolution Remote Sensing Imagery. Int. J. Remote Sens. 2022, 43, 6307–6332.

- Li, W.; Chen, K.; Shi, Z. Geographical Supervision Correction for Remote Sensing Representation Learning. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5411520.

- Shi, W.; Qin, W.; Chen, A. Towards Robust Semantic Segmentation of Land Covers in Foggy Conditions. Remote Sens. 2022, 14, 4551.

- Zhang, W.; Tang, P.; Zhao, L. Fast and Accurate Land Cover Classification on Medium Resolution Remote Sensing Images Using Segmentation Models. Int. J. Remote Sens. 2021, 42, 3277–3301.

- Dechesne, C.; Mallet, C.; Le Bris, A.; Gouet-Brunet, V. Semantic Segmentation of Forest Stands of Pure Species Combining Airborne Lidar Data and Very High Resolution Multispectral Imagery. ISPRS J. Photogramm. Remote Sens. 2017, 126, 129–145.

- Zhang, Z.; Lu, W.; Cao, J.; Xie, G. MKANet: An Efficient Network with Sobel Boundary Loss for Land-Cover Classification of Satellite Remote Sensing Imagery. Remote Sens. 2022, 14, 4514.

- Li, W.; Chen, K.; Chen, H.; Shi, Z. Geographical Knowledge-Driven Representation Learning for Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5405516.

- Liu, W.; Liu, J.; Luo, Z.; Zhang, H.; Gao, K.; Li, J. Weakly Supervised High Spatial Resolution Land Cover Mapping Based on Self-Training with Weighted Pseudo-Labels. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102931.

- Liu, Q.; Kampffmeyer, M.; Jenssen, R.; Salberg, A.-B. Dense Dilated Convolutions Merging Network for Land Cover Classification. IEEE Trans. Geosci. Remote Sens. 2020, 58, 6309–6320.

- Li, Z.; Zhang, H.; Lu, F.; Xue, R.; Yang, G.; Zhang, L. Breaking the Resolution Barrier: A Low-to-High Network for Large-Scale High-Resolution Land-Cover Mapping Using Low-Resolution Labels. ISPRS J. Photogramm. Remote Sens. 2022, 192, 244–267.

- Yuan, Q.; Mohd Shafri, H.Z. Multi-Modal Feature Fusion Network with Adaptive Center Point Detector for Building Instance Extraction. Remote Sens. 2022, 14, 4920.

- Mboga, N.; D’Aronco, S.; Grippa, T.; Pelletier, C.; Georganos, S.; Vanhuysse, S.; Wolff, E.; Smets, B.; Dewitte, O.; Lennert, M.; et al. Domain Adaptation for Semantic Segmentation of Historical Panchromatic Orthomosaics in Central Africa. ISPRS Int. J. Geo-Inf. 2021, 10, 523.

- Zhang, Z.; Doi, K.; Iwasaki, A.; Xu, G. Unsupervised Domain Adaptation of High-Resolution Aerial Images via Correlation Alignment and Self Training. IEEE Geosci. Remote Sens. Lett. 2021, 18, 746–750.

- Simms, D.M. Fully Convolutional Neural Nets In-the-Wild. Remote Sens. Lett. 2020, 11, 1080–1089.

- Liu, C.; Du, S.; Lu, H.; Li, D.; Cao, Z. Multispectral Semantic Land Cover Segmentation from Aerial Imagery with Deep Encoder-Decoder Network. IEEE Geosci. Remote Sens. Lett. 2022, 19, 5000105.

- Sun, P.; Lu, Y.; Zhai, J. Mapping Land Cover Using a Developed U-Net Model with Weighted Cross Entropy. Geocarto Int. 2022, 37, 9355–9368.

- Chen, J.; Sun, B.; Wang, L.; Fang, B.; Chang, Y.; Li, Y.; Zhang, J.; Lyu, X.; Chen, G. Semi-Supervised Semantic Segmentation Framework with Pseudo Supervisions for Land-Use/Land-Cover Mapping in Coastal Areas. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102881.

| Data Sources | Number of Articles | References |
|---|---|---|
| RS Satellites | | |
| Sentinel-2 | 7 | [45,64,65,66,67] |
| Landsat | 5 | [33,68,69,70] |
| WorldView-3 | 2 | [71,72] |
| RapidEye | 1 | [73] |
| WorldView-2 | 1 | [74] |
| QuickBird | 1 | [74] |
| ZY-3 | 1 | [48] |
| PlanetScope | 1 | [49] |
| GF-2 | 2 | [48,75] |
| Aerial images | | |
| Phantom m multi-rotor AUS | 1 | [59] |
| Quadcopter drone | 1 | [61] |
| Vexcel UltraCam Eagle camera | 1 | [76] |
| DJI Phantom 4 Pro UAV | 1 | [47] |
| SAR SAT | | |
| RADARSAT-2 | 1 | [77] |
| Sentinel-1 | 6 | [10,41,43,64,65,78] |
| GF-3 | 1 | [79] |
| ALOS-2 | 1 | [80] |
| Others | | |
| Earth digitalglobe | 2 | [44,60] |
| Mobile phone | 1 | [35] |
| Lidar sources | 1 | [37] |

| Models | Datasets | Performance Metrics | Limitation/Future Work |
|---|---|---|---|
| RAANet [108] | LoveDA, ISPRS Vaihingen | MIoU = 77.28, MIoU = 73.47 | Accuracy can be improved with optimization. |
| PSE-UNet [113] | Salinas dataset | MIoU = 88.50 | Inaccurate segmentation of low-frequency land cover features, parameter redundancy, and unvalidated generalization capability. |
| SEG-ESRGAN [114] | Sentinel-2 and WorldView-2 image pairs | MIoU = 62.78 | Use with medium-resolution images has not been assessed. |
| Class-wise FCN [26] | Vaihingen, Potsdam | MIoU = 72.35, MIoU = 76.88 | Performance can be improved through class-wise handling of multiple classes and more efficient implementations. |
| MARE [115] | Vaihingen | MIoU = 81.76 | Improve performance through parameter optimization and extend the approach by incorporating other self-supervised algorithms. |
| Feature fusion with dual attention and flexible contextual adaptation [94] | Vaihingen, GaoFen-2 | MIoU = 70.51, MIoU = 56.98 | Computational complexity. |
| DEANet [100] | LandCover.ai, DSTL dataset, DeepGlobe | MIoU = 90.28, MIoU = 52.70, MIoU = 71.80 | Suboptimal performance. Future efforts involve incorporating the spatial attention module into a single unified backbone network. |
| Encoder-decoder framework with attention-guided multi-scale context integration [116] | GF-2 images | MIoU = 62.3% | Reduced accuracy on imbalanced data. |

| Models | Data | Performance | Limitation |
|---|---|---|---|
| Swin-S-GF [117] | GID | OA = 89.15, MIoU = 80.14 | Computational complexity and slow convergence. |
| CG-Swin [119] | Vaihingen, Potsdam | OA = 91.68, MIoU = 83.39; OA = 91.93, MIoU = 87.61 | Could be extended to multi-modal data sources for more comprehensive and robust classification. |
| BANet [30] | Vaihingen, Potsdam, UAVid dataset | MIoU = 81.35; MIoU = 86.25; MIoU = 64.6 | Combining convolution and Transformer in a hybrid structure could improve performance. |
| Spectral spatial transformer [118] | Indian dataset | OA = 0.94 | Computational complexity. |
| Sgformer [18] | Landcover dataset | MIoU = 0.85 | Computational complexity and slow convergence. |
| Parallel Swin Transformer [120] | Potsdam, GID, WHDLD | OA = 89.44; OA = 84.67; OA = 84.86 | Performance can be improved. |

| Models | Datasets | Performance Metrics | Limitation |
|---|---|---|---|
| RSI-Net [95] | Vaihingen, Potsdam, GID | OA = 91.83; OA = 93.31; OA = 93.67 | Limited pixel-wise semantic segmentation. Enhanced feature map fusion decoders could improve performance. |
| HMRT [32] | Potsdam | OA = 85.99, MIoU = 74.14 | Parameter complexity; segmentation accuracy decreases under heavy noise. Optimization is required. |
| UNetFormer [19] | UAVid, Vaihingen, Potsdam, LoveDA | MIoU = 67.8; OA = 91.0, MIoU = 82.7; OA = 91.3, MIoU = 86.8; MIoU = 52.4 | The Transformer’s potential and practicality for geospatial vision tasks remain open for research. |
| TL-ResUNet [130] | DeepGlobe | IoU = 0.81 | Improving classification performance and developing real-time, automated solutions for land use/land cover mapping remain open for research. |
| CNN-enhanced heterogeneous GCN [131] | Beijing dataset, Shenzhen dataset | MIoU = 70.48; MIoU = 62.45 | Future work is to optimize the use of pretrained deep CNN features and GCN features across segmentation scales. |
| HFENet [132] | MZData, LandCover Dataset, WHU Building Dataset | MIoU = 87.19; MIoU = 89.69; MIoU = 92.12 | Time and space complexity. Future work could automatically fine-tune the parameters to attain optimal model performance. |

| Model Structure | Batch Size | Epochs | Learning Rate | Data Augmentation | Backbone | Popular Optimizer | Parameters | Evaluation Metrics |
|---|---|---|---|---|---|---|---|---|
| Encoder/decoder-based | 4, 8, 16, 64 | 100–500 | 0.01 | Yes | ResNet | SGD | Low–High | MIoU, OA, F1 |
| Transformer-based | 6, 8 | 100–200 | 0.0006 | Yes | ResNet/Swin-Tiny | Adam | High | MIoU, OA, F1 |
| Hybrid models | 8, 16 | 40–100 | 0.0006 | Yes | ResNet | Adam | Low–High | MIoU, OA, F1 |
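The evaluation metrics listed in the tables above (OA, MIoU, F1) can all be derived from a per-class confusion matrix. The following is a minimal sketch using their standard definitions, not the evaluation code of any reviewed study; the function names `confusion_matrix` and `segmentation_metrics` are hypothetical, and classes absent from both reference and prediction are assumed to be skipped when averaging.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, num_classes):
    """Count pixels; rows are reference classes, columns are predicted classes."""
    y_true = np.asarray(y_true).ravel()
    y_pred = np.asarray(y_pred).ravel()
    # Encode each (reference, prediction) pair as a single index, then count.
    idx = y_true * num_classes + y_pred
    counts = np.bincount(idx, minlength=num_classes ** 2)
    return counts.reshape(num_classes, num_classes)


def segmentation_metrics(cm):
    """Return overall accuracy, mean IoU and mean F1 from a confusion matrix."""
    cm = cm.astype(float)
    tp = np.diag(cm)              # correctly labelled pixels per class
    fp = cm.sum(axis=0) - tp      # predicted as the class but belonging elsewhere
    fn = cm.sum(axis=1) - tp      # belonging to the class but predicted otherwise
    oa = tp.sum() / cm.sum()
    with np.errstate(divide="ignore", invalid="ignore"):
        iou = tp / (tp + fp + fn)
        f1 = 2 * tp / (2 * tp + fp + fn)
    # Assumption: classes with no pixels (0/0 -> NaN) are excluded from the means.
    return {"OA": oa, "MIoU": np.nanmean(iou), "mF1": np.nanmean(f1)}


# Toy example with two classes and four pixels (illustrative values only).
cm = confusion_matrix([0, 0, 1, 1], [0, 1, 1, 1], num_classes=2)
metrics = segmentation_metrics(cm)
```

Studies report these values either as fractions (e.g., 0.85) or as percentages (e.g., 85.0), which explains the mixed scales in the tables; multiplying the fractions by 100 gives the percentage form.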

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ajibola, S.; Cabral, P. A Systematic Literature Review and Bibliometric Analysis of Semantic Segmentation Models in Land Cover Mapping. Remote Sens. 2024, 16, 2222. https://doi.org/10.3390/rs16122222
