Precise Motion Compensation of Multi-Rotor UAV-Borne SAR Based on Improved PTA

Abstract

1. Introduction

2. Materials and Methods

2.1. Principle of Motion Compensation

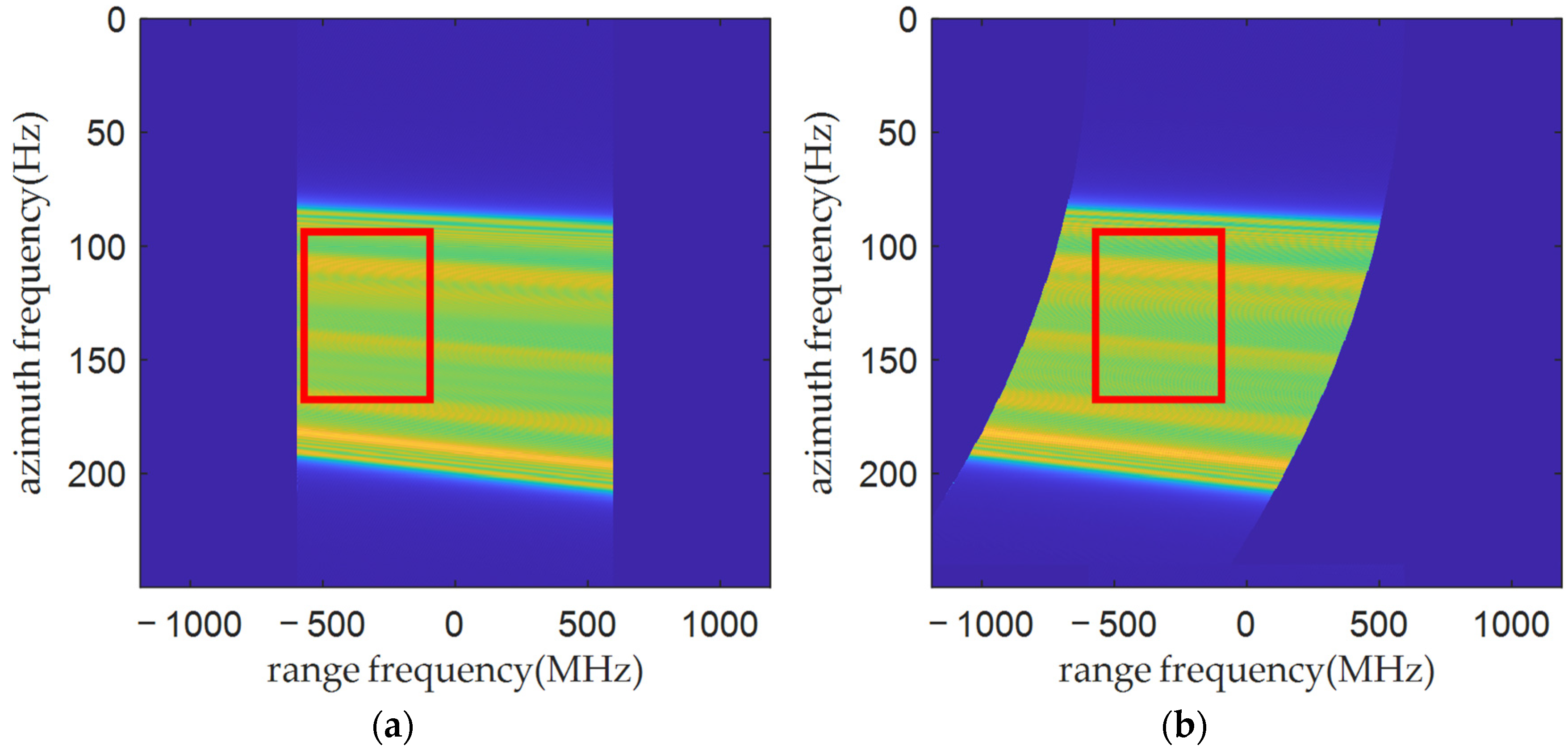

2.2. Spectrum of Signal with Errors

2.3. The Calculations of Accurate Errors in the Two-Dimensional Frequency Domain

- (1)

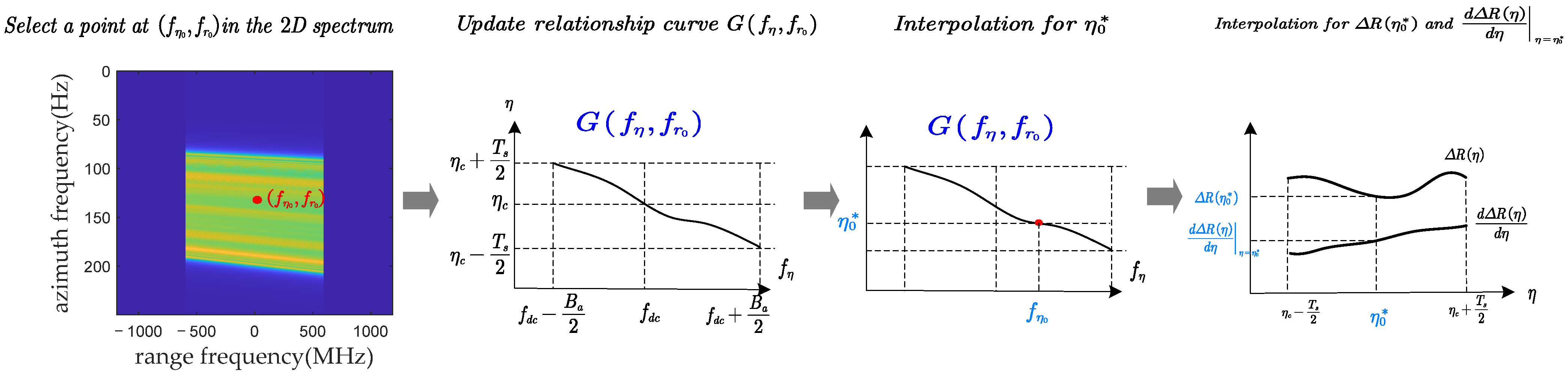

- Select a point at the two-dimensional spectrum: We select a point on the two-dimensional spectrum, assuming it is located at the azimuth frequency and range frequency .

- (2)

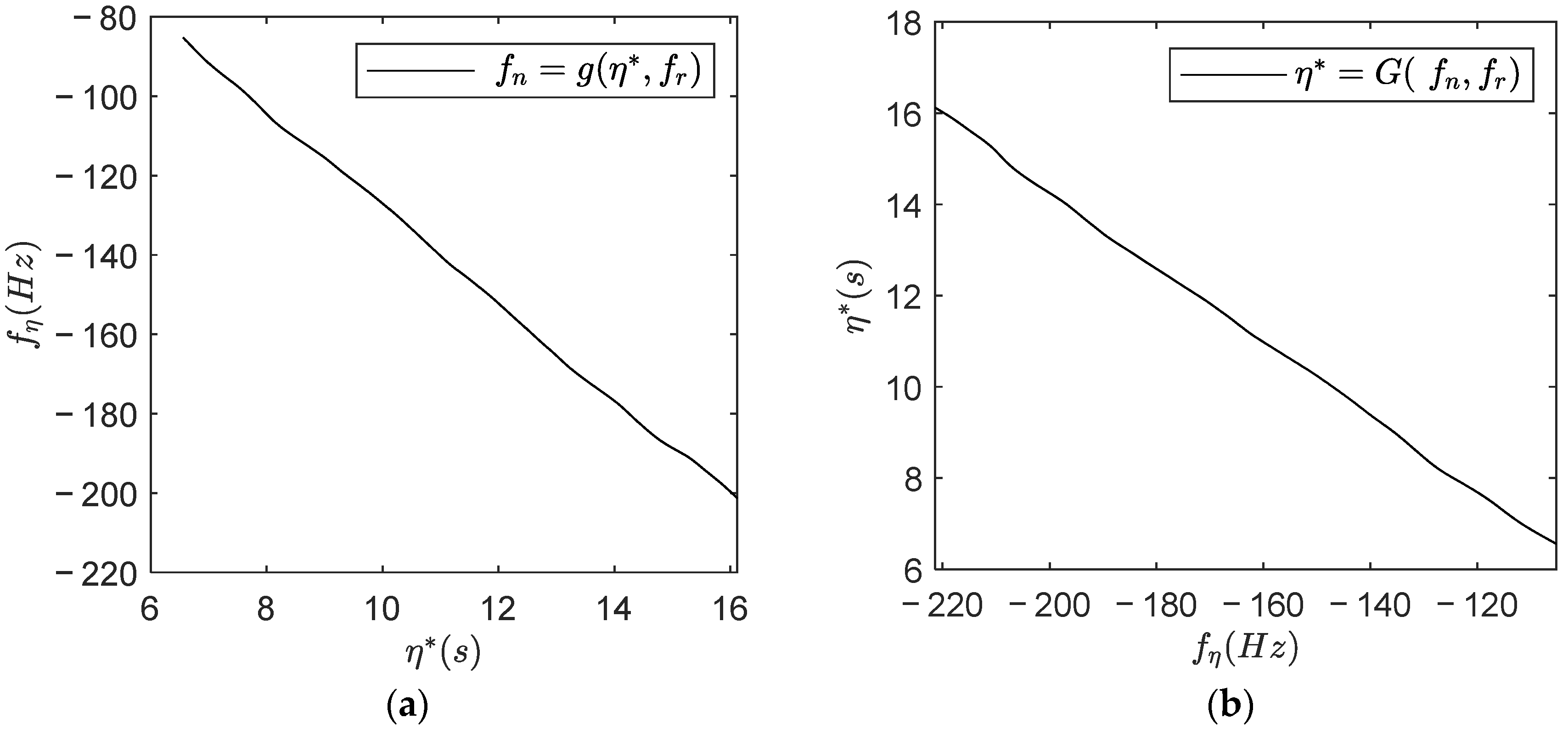

- Update the relationship between and at = : For range frequency , a curve illustrating the relationship between and can be obtained based on Equation (6). This curve is similar to the one shown in Figure 2b, corresponding to the function = G(, ).

- (3) Interpolate the azimuth stationary phase time: For the selected point, the azimuth stationary phase time is the azimuth time that corresponds to the selected azimuth frequency. It can be obtained by interpolation on the curve of the function G from Step 2.

- (4) Calculate the precise error in Equation (11): Once the stationary phase time is known, the remaining quantities required by Equation (11) can be obtained at that time by interpolation, and the precise error at the selected frequency point then follows.

- (5) Calculate the complete error in the two-dimensional frequency domain: Repeat Steps 1–4 to calculate the error at every frequency point in the two-dimensional frequency domain.

- (6) Perform Stolt interpolation on the error: The error obtained in Step 5 is the actual error in the two-dimensional frequency domain before Stolt interpolation. To obtain the actual error after imaging, Stolt interpolation must also be applied to this error.
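
The interpolation at the heart of Steps 2–4 can be sketched in Python. This is a minimal illustration under stated assumptions, not the authors' implementation: Equations (6) and (11) are not reproduced in this section, so the sketch assumes a point-target signal with phase −4π(f_c + f_r)R(t)/c, for which the stationary-phase condition gives f_a = −2(f_c + f_r)R′(t)/c, and it takes the phase error at a frequency point to be −4π(f_c + f_r)ΔR(t*)/c, where ΔR is the residual range error and t* the stationary phase time. The functions `interp`, `stationary_time` and `phase_error` are hypothetical names. Steps 5 and 6 (looping over all frequency points and applying the Stolt mapping) are omitted.

```python
import math
from bisect import bisect_left

C = 299_792_458.0  # speed of light (m/s)


def interp(x, xs, ys):
    """Piecewise-linear interpolation of ys(xs) at x; xs must be strictly increasing."""
    if not xs[0] <= x <= xs[-1]:
        raise ValueError("x lies outside the tabulated range")
    i = bisect_left(xs, x)
    if i == 0:
        return ys[0]
    w = (x - xs[i - 1]) / (xs[i] - xs[i - 1])
    return ys[i - 1] + w * (ys[i] - ys[i - 1])


def stationary_time(fa0, fr, t, rng, fc):
    """Steps 2-3 (sketch, assumed model): tabulate fa = G(t, fr) from the
    range history rng(t), then invert it at fa0 by interpolation."""
    scale = -2.0 * (fc + fr) / C
    fa = []
    for k in range(len(t)):
        # Central difference for dR/dt (one-sided at the two ends).
        lo, hi = max(k - 1, 0), min(k + 1, len(t) - 1)
        fa.append(scale * (rng[hi] - rng[lo]) / (t[hi] - t[lo]))
    increasing = all(b > a for a, b in zip(fa, fa[1:]))
    decreasing = all(b < a for a, b in zip(fa, fa[1:]))
    if not (increasing or decreasing):
        # Inversion requires a one-to-one azimuth time/frequency mapping.
        raise ValueError("fa(t) is not one-to-one; cannot interpolate")
    if decreasing:
        fa, t = fa[::-1], t[::-1]
    return interp(fa0, fa, t)


def phase_error(fa0, fr, t, rng, err, fc):
    """Step 4 (sketch, assumed model): phase error at the point (fa0, fr)."""
    ts = stationary_time(fa0, fr, t, rng, fc)
    d_r = interp(ts, t, err)  # residual range error at the stationary time
    return -4.0 * math.pi * (fc + fr) * d_r / C


# Example: hyperbolic range history (650 m, 8 m/s, 15.2 GHz) plus a constant 5 mm error.
t = [-2.0 + 0.01 * k for k in range(401)]
rng = [math.hypot(650.0, 8.0 * tk) + 0.005 for tk in t]
err = [0.005] * len(t)
phi = phase_error(-5.0, 0.0, t, rng, err, 15.2e9)
```

The explicit monotonicity check mirrors the requirement, noted in Section 2.4, that the residual error after MOCO must not destroy the one-to-one relationship on which the inversion relies.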

2.4. An Improved PTA for Refocusing Building Surfaces

- (1)

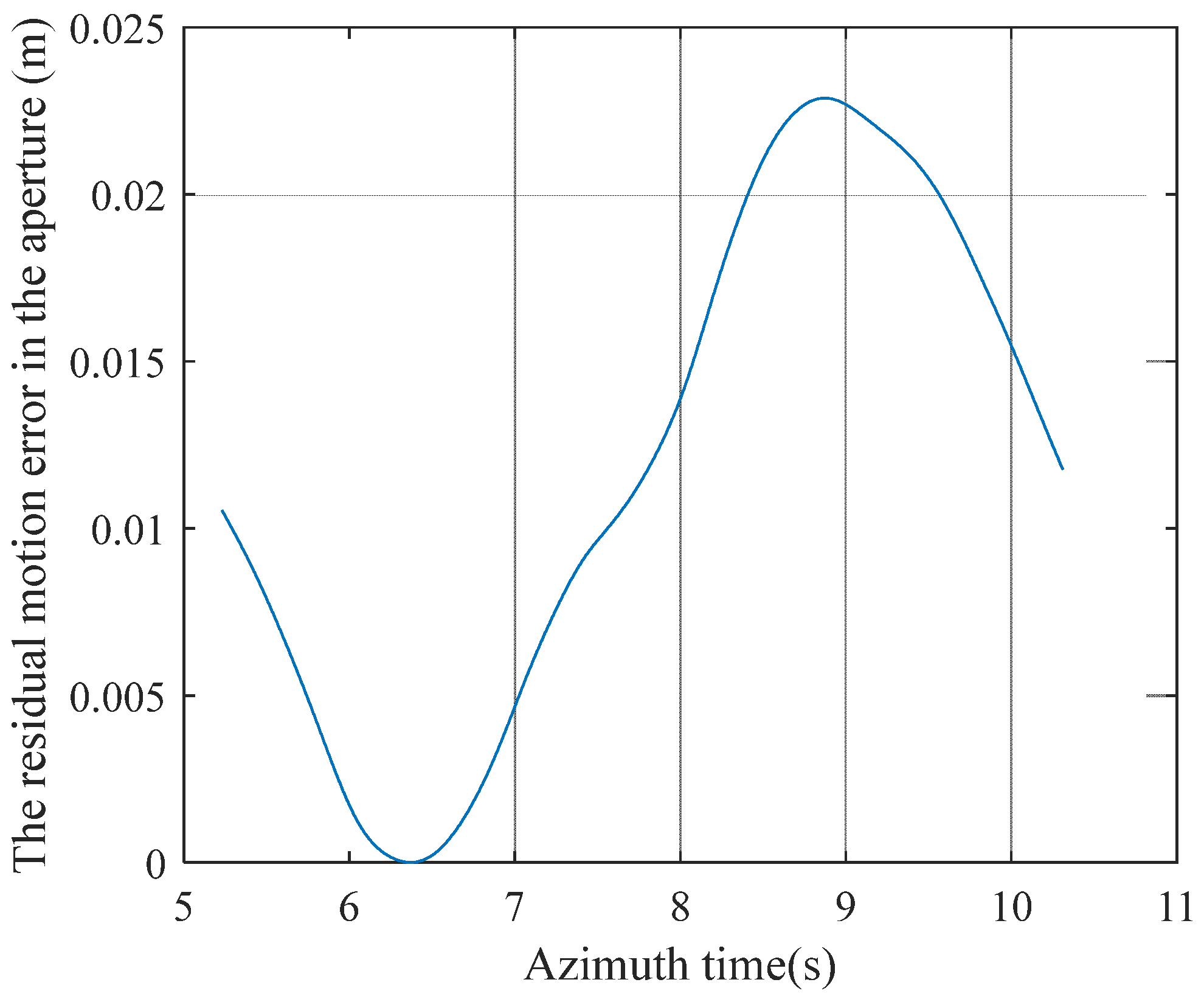

- Motion compensation: OSA is performed on the RAW data to compensate for range-varying motion errors. After performing MOCO using the OSA, the residual error is generally small and therefore does not destroy the one-to-one relationship between and .

- (2) Azimuth resampling is performed to eliminate errors caused by non-uniform azimuth sampling.

- (3) The ω-k algorithm is used to form the SAR image. At this stage, ground targets in the SAR image are well focused, whereas building targets are severely defocused.

- (4) A pixel is selected, and N pixels are taken along both the range and azimuth directions, centered on this pixel, to obtain an N × N local SAR image. Based on practical experience, N is typically set to 64 or 128.

- (5) A two-dimensional fast Fourier transform (FFT) is performed on the selected local SAR image to obtain the signal in the two-dimensional frequency domain.

- (6) Based on the position of the pixel selected in Step 4 and the elevation data, the exact error described in Equation (11) is calculated using the method proposed in Section 2.3.

- (7) Phase compensation is performed in the two-dimensional frequency domain, and a two-dimensional inverse fast Fourier transform (IFFT) is applied to obtain the focused local SAR image. At this stage, the center of the local image is well focused.

- (8) The pixel selected in Step 4 is replaced with the center pixel of the focused local image obtained in Step 7.

- (9) The next pixel is selected, and the above process is repeated until all pixels have been processed.
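
Steps 4–9 amount to a sliding-window correction in the local two-dimensional frequency domain. The Python sketch below shows only that loop structure and is not the authors' implementation. Assumptions: Steps 1–3 have already produced the complex ω-k image; the phase error of Step 6 is supplied by a caller-provided function, here the hypothetical `phase_err(r, c, u, v)`; a naive DFT stands in for the FFT/IFFT so that only the standard library is needed; pixels outside the image are treated as zero; and every patch is cut from the unmodified input image (the text does not state whether already-refocused pixels are reused).

```python
import cmath


def dft2(a, inverse=False):
    """Naive 2-D DFT of an n x n list of lists (O(n^4); illustration only)."""
    n = len(a)
    sign = 1 if inverse else -1
    out = [[0j] * n for _ in range(n)]
    for u in range(n):
        for v in range(n):
            acc = 0j
            for x in range(n):
                for y in range(n):
                    acc += a[x][y] * cmath.exp(sign * 2j * cmath.pi * (u * x + v * y) / n)
            out[u][v] = acc / (n * n) if inverse else acc
    return out


def refocus(image, n, phase_err):
    """Refocus every pixel of `image` (list of lists of complex values).

    phase_err(r, c, u, v) is a hypothetical callback returning the phase (rad)
    to remove at DFT bin (u, v) of the n x n patch centred on pixel (r, c).
    """
    rows, cols = len(image), len(image[0])
    h = n // 2
    out = [[0j] * cols for _ in range(rows)]
    for r in range(rows):          # Step 9: visit every pixel
        for c in range(cols):
            # Step 4: n x n local image centred on (r, c), zero outside the image.
            patch = [[image[r + i - h][c + j - h]
                      if 0 <= r + i - h < rows and 0 <= c + j - h < cols else 0j
                      for j in range(n)] for i in range(n)]
            spec = dft2(patch)     # Step 5: to the 2-D frequency domain
            for u in range(n):     # Steps 6-7: phase compensation
                for v in range(n):
                    spec[u][v] *= cmath.exp(-1j * phase_err(r, c, u, v))
            focused = dft2(spec, inverse=True)  # Step 7: back to the image domain
            out[r][c] = focused[h][h]           # Step 8: keep only the centre pixel
    return out
```

With a zero phase error the output equals the input; with a linear phase ramp the centre pixel is taken from a shifted position, which is the simplest check that the compensation acts as intended. In practice the naive DFT would be replaced by an FFT library.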

3. Experimental Results and Analysis

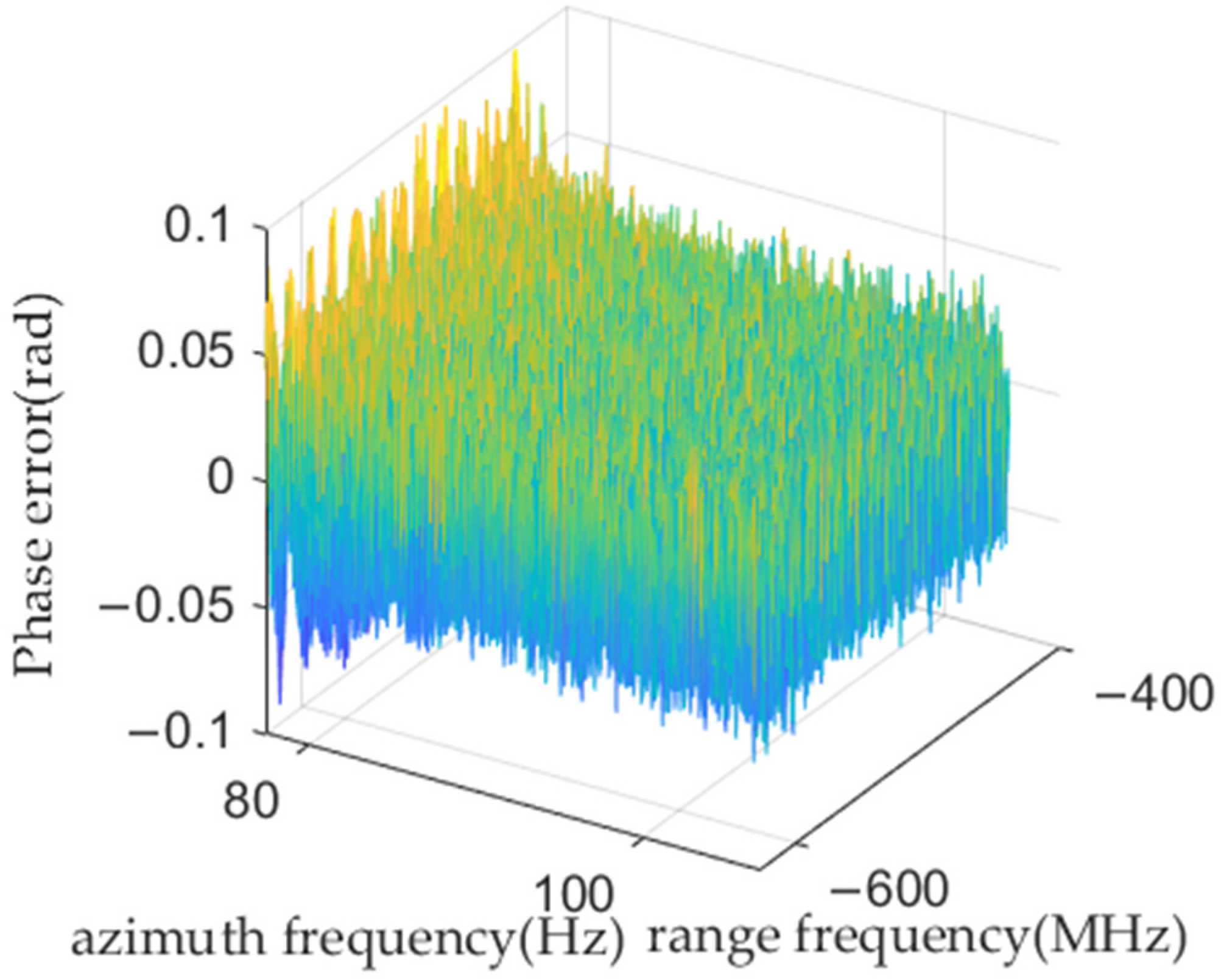

3.1. Calculation Accuracy Analysis

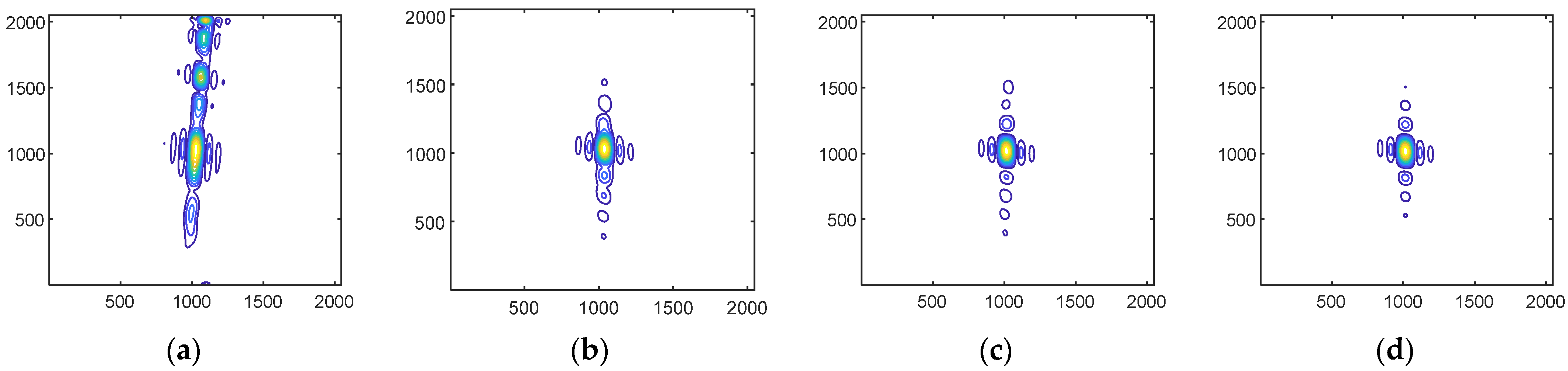

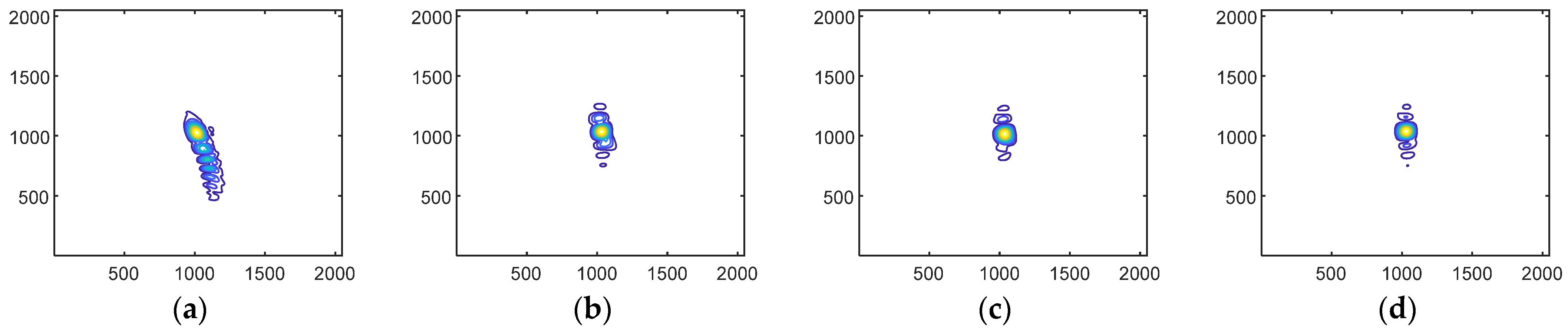

3.2. Simulation Experiments for Improved PTA

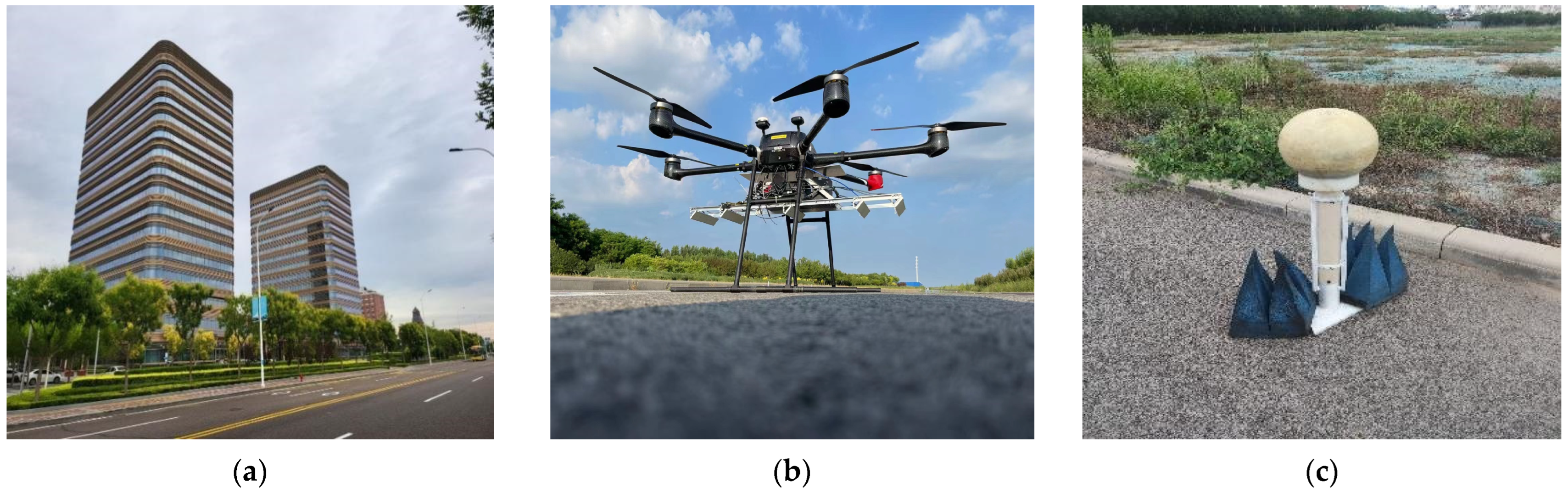

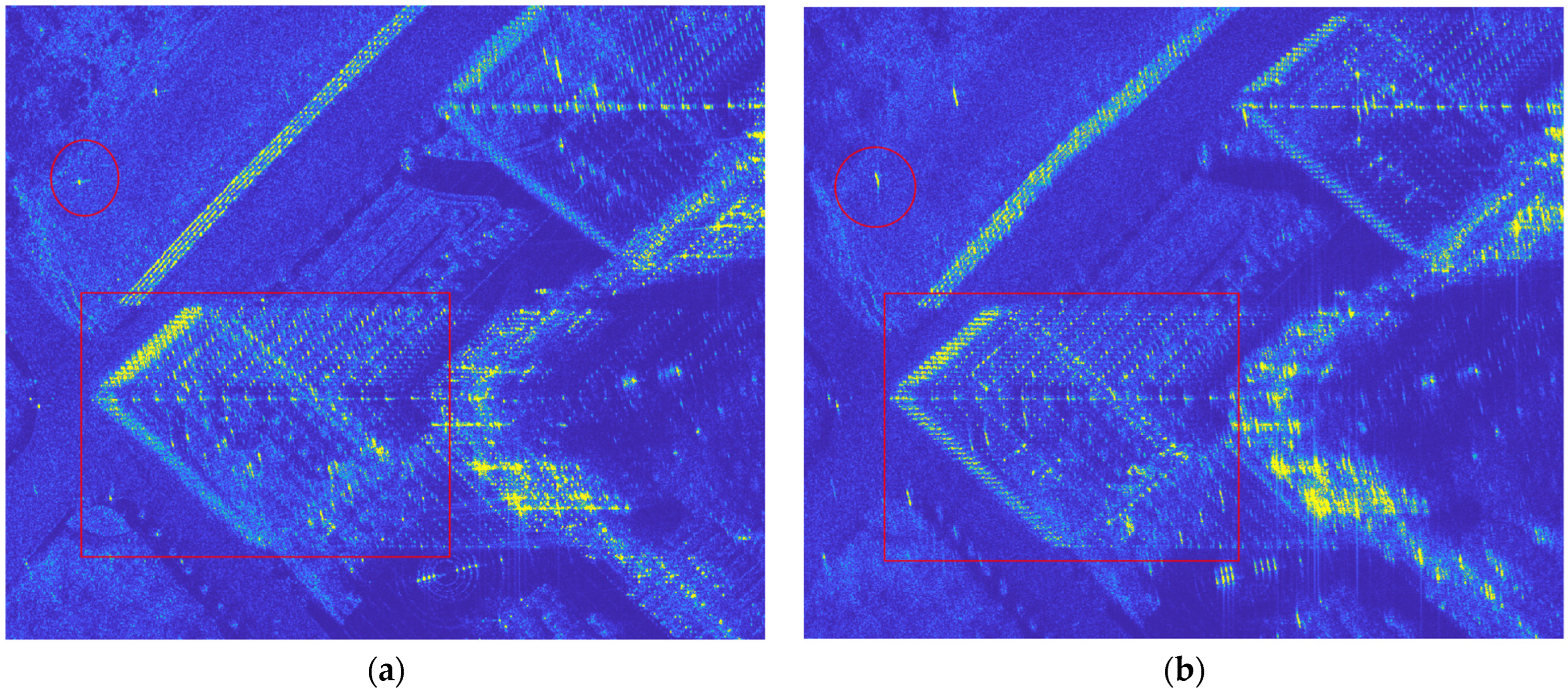

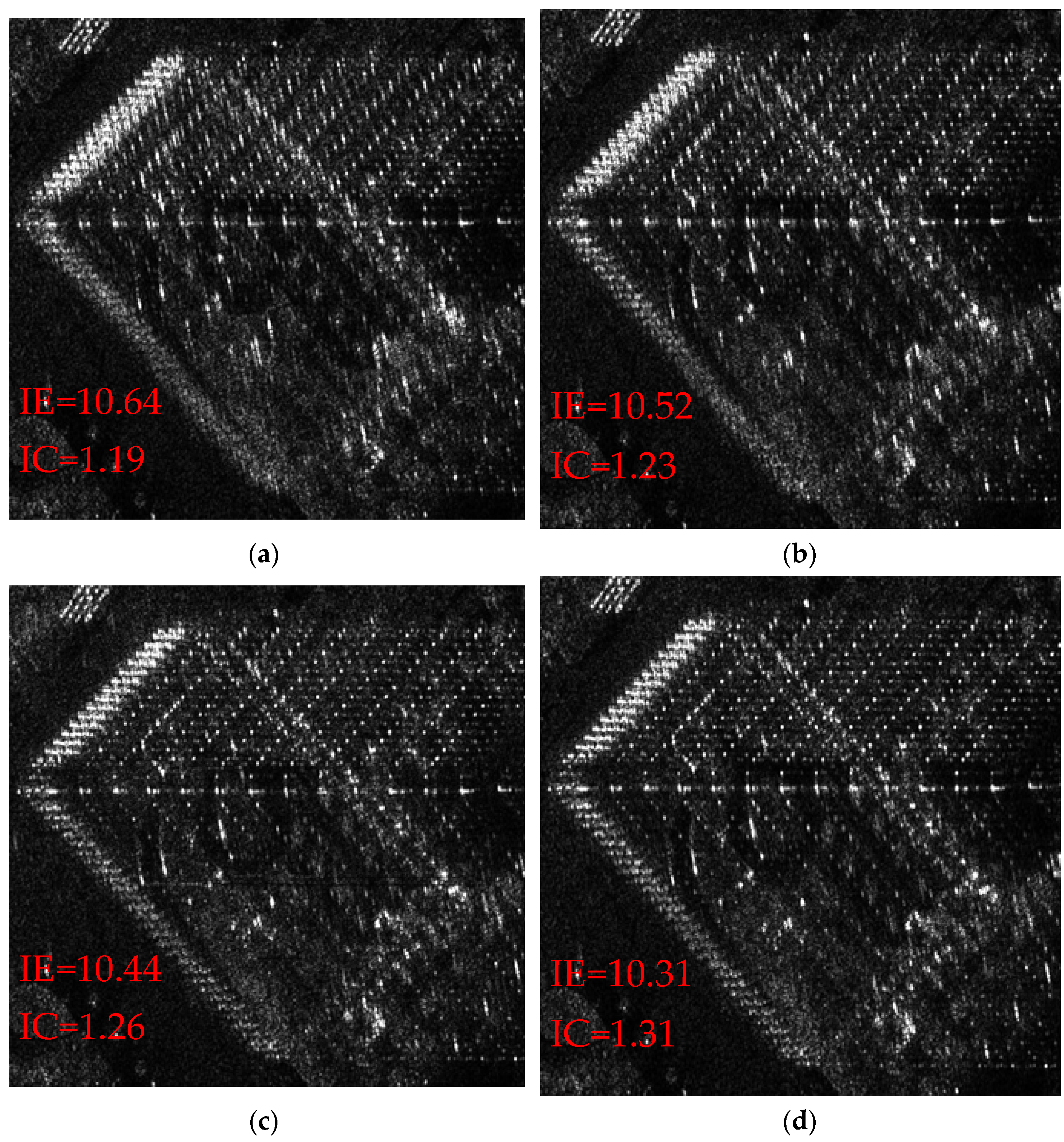

3.3. Actual SAR Data Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

| Parameter | Value |
|---|---|
| Carrier frequency | 15.2 GHz |
| Bandwidth | 1200 MHz |
| Reference range | 650 m |
| Pulse repetition frequency | 250 Hz |
| Azimuth beamwidth | 3° |
| Speed of flight | 8 m/s |
| Squint angle | −5.2° |
| Flight height | 400 m |

| Parameter | Value |
|---|---|
| Carrier frequency | 15.2 GHz |
| Bandwidth | 1200 MHz |
| Reference range | 650 m |
| Pulse repetition frequency | 250 Hz |
| Azimuth beamwidth | 3° |
| Speed of flight | 7.89 m/s |
| Squint angle | −7.86° |
| Flight height | 402 m |
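
As a quick consistency check on the parameter tables, standard first-order stripmap relations can be evaluated from the listed values. The sketch is illustrative only: the relations used (slant-range resolution c/2B, synthetic aperture length ≈ R·θ, Doppler bandwidth ≈ 2v·cos(squint)·θ/λ, azimuth resolution ≈ v divided by the Doppler bandwidth) are textbook approximations that ignore weighting and geometry details, and the function name `system_quantities` is hypothetical. For the first table they give a slant-range resolution of about 0.125 m and a Doppler bandwidth of about 42 Hz, well below the 250 Hz PRF.

```python
import math

C = 299_792_458.0  # speed of light (m/s)


def system_quantities(fc, bw, r_ref, prf, beam_deg, v, squint_deg):
    """First-order stripmap relations (unweighted, flat-geometry approximations)."""
    lam = C / fc                        # wavelength (m)
    theta = math.radians(beam_deg)      # azimuth beamwidth (rad)
    l_syn = r_ref * theta               # synthetic aperture length (m)
    b_dop = 2.0 * v * math.cos(math.radians(squint_deg)) * theta / lam  # Doppler bandwidth (Hz)
    return {
        "wavelength_m": lam,
        "range_res_m": C / (2.0 * bw),
        "aperture_len_m": l_syn,
        "integration_time_s": l_syn / v,
        "doppler_bw_hz": b_dop,
        "azimuth_res_m": v / b_dop,
        "prf_over_doppler": prf / b_dop,
    }


# Values from the first and second parameter tables, respectively.
q1 = system_quantities(15.2e9, 1.2e9, 650.0, 250.0, 3.0, 8.0, -5.2)
q2 = system_quantities(15.2e9, 1.2e9, 650.0, 250.0, 3.0, 7.89, -7.86)
for label, q in (("first table", q1), ("second table", q2)):
    print(label, {k: round(x, 4) for k, x in q.items()})
```

The ratio of PRF to Doppler bandwidth indicates how strongly the azimuth signal is oversampled, which leaves margin for the azimuth resampling step applied to the non-uniform samples of a multi-rotor platform.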

| Method | IRW (m) | PSLR (dB) | ISLR (dB) |
|---|---|---|---|
| Traditional improved PTA | 0.303 | −9.752 | −7.416 |
| CMBP algorithm | 0.301 | −11.524 | −11.460 |
| Proposed improved PTA | 0.295 | −13.515 | −10.773 |
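
The point-target metrics in the table above can be computed from a one-dimensional cut through the impulse response. The Python sketch below uses common definitions, which may differ in detail from those used in the paper: the impulse response width (IRW) is the −3 dB width, the peak sidelobe ratio is the highest sidelobe relative to the peak, and the integrated sidelobe ratio is the sidelobe energy relative to the main-lobe energy, with the main lobe taken between the first minima on either side of the peak (some authors use a fixed multiple of the IRW instead). The function name `point_target_metrics` is hypothetical. For an unweighted sinc sampled every 0.01 resolution cells, the sketch reproduces the textbook values of about 0.886 cells for the IRW and about −13.26 dB for the peak sidelobe ratio.

```python
import math


def point_target_metrics(profile, spacing):
    """Return (IRW, PSLR in dB, ISLR in dB) for a 1-D cut through a point
    target. `spacing` is the sample spacing; IRW is returned in its unit."""
    p = [abs(s) for s in profile]
    k = max(range(len(p)), key=p.__getitem__)  # index of the peak
    peak = p[k]
    half = peak / math.sqrt(2.0)               # -3 dB level in amplitude

    def crossing(step):
        # Walk away from the peak, then linearly interpolate the -3 dB crossing.
        i = k
        while p[i + step] >= half:
            i += step
        frac = (p[i] - half) / (p[i] - p[i + step])
        return i + step * frac

    irw = (crossing(1) - crossing(-1)) * spacing

    # Main lobe: from the peak down to the first minimum on each side.
    lo, hi = k, k
    while lo > 0 and p[lo - 1] < p[lo]:
        lo -= 1
    while hi < len(p) - 1 and p[hi + 1] < p[hi]:
        hi += 1
    side = p[:lo] + p[hi + 1:]
    main_energy = sum(x * x for x in p[lo:hi + 1])
    side_energy = sum(x * x for x in side)
    pslr = 20.0 * math.log10(max(side) / peak)
    islr = 10.0 * math.log10(side_energy / main_energy)
    return irw, pslr, islr


def sinc(x):
    return 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)


# Unweighted sinc over +/-20 resolution cells, sampled every 0.01 cells.
cut = [sinc((i - 2000) / 100.0) for i in range(4001)]
irw, pslr, islr = point_target_metrics(cut, 0.01)
```

In practice the cut would be taken through an interpolated (upsampled) image chip along range or azimuth, so that the −3 dB crossings and the first minima are resolved finely enough.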

| Method | Image Entropy (IE) | Image Contrast (IC) |
|---|---|---|
| Result of ω-k | 10.64 | 1.19 |
| Result of ω-k + traditional improved PTA | 10.52 | 1.23 |
| Result of ω-k + CMBP | 10.44 | 1.26 |
| Result of ω-k + proposed improved PTA | 10.31 | 1.31 |
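
Image entropy and image contrast are common focus measures: a better-focused image usually has lower entropy and higher contrast. The exact definitions behind the table are not given in this excerpt, so the Python sketch below assumes the commonly used forms, with intensity I = |g|²: entropy IE = −Σ p·ln p with p = I/ΣI, and contrast IC = std(I)/mean(I). The logarithm base and the choice between amplitude and intensity vary between papers, so values from this sketch need not match the table. The function names are hypothetical.

```python
import math


def image_entropy(img):
    """IE = -sum(p * ln p) with p = |g|^2 / sum(|g|^2); zero pixels contribute 0."""
    inten = [abs(g) ** 2 for row in img for g in row]
    total = sum(inten)
    return -sum((x / total) * math.log(x / total) for x in inten if x > 0)


def image_contrast(img):
    """IC = std(I) / mean(I) with intensity I = |g|^2."""
    inten = [abs(g) ** 2 for row in img for g in row]
    mean = sum(inten) / len(inten)
    var = sum((x - mean) ** 2 for x in inten) / len(inten)
    return math.sqrt(var) / mean


flat = [[1 + 0j, 1 + 0j], [1 + 0j, 1 + 0j]]  # energy spread evenly
point = [[2 + 0j, 0j], [0j, 0j]]              # energy in a single pixel
```

The two extremes bracket the behaviour: the uniform image has the maximum entropy ln(N) and zero contrast, while the single bright pixel has zero entropy and the largest contrast for its size.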

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cheng, Y.; Qiu, X.; Meng, D. Precise Motion Compensation of Multi-Rotor UAV-Borne SAR Based on Improved PTA. Remote Sens. 2024, 16, 2678. https://doi.org/10.3390/rs16142678
