Radar Emitter Recognition Based on Spiking Neural Networks

Abstract

1. Introduction

- (1)

- A radar emitter recognition framework based on SNNs with higher computational efficiency is proposed. Theoretical analysis and simulation experiments show that the proposed framework has lower computational complexity and energy consumption than traditional methods.

- (2)

- A direct coding method of radar pulse timing features is proposed to apply SNNs with stronger time series data processing ability to radar emitter recognition. Different from existing fixed coding methods, the proposed coding method can adaptively adjust the weight, thereby reducing the information loss in the coding process. Simulation results show that the proposed method has stronger data noise adaptability than the method based on traditional ANNs under the same input data form. This also proves that SNNs are more suitable for radar pulse train recognition than traditional ANNs.

- (3)

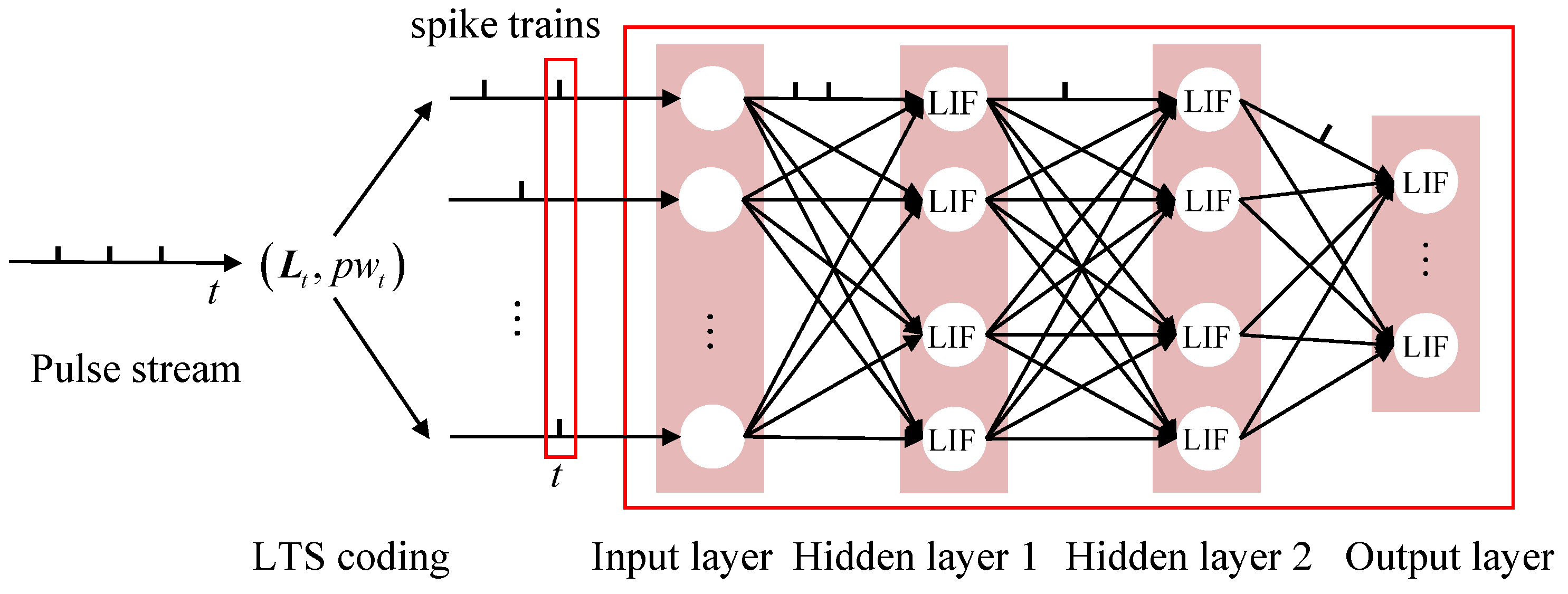

- A radar pulse timing feature coding method based on local timing structure is proposed, which significantly enhances the data noise adaptability of the proposed method. By analyzing the essence of data noise splitting PRI features, the local DTOA sequence of radar pulses is proposed as PRI features of radar pulses, and the SNN is further improved. Experimental results show that the proposed radar emitter recognition method based on improved SNNs has much higher adaptability to data noise than other methods.
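The idea of using a local DTOA sequence as the PRI feature of each pulse can be illustrated with a minimal sketch. The exact feature definition is not given in this text, so the following assumes one plausible reading: for each pulse, the time offsets to its next `k` pulses. The function name `local_dtoa_features` and the neighbourhood size `k` are hypothetical.

```python
import numpy as np

def local_dtoa_features(toa, k=3):
    """For each pulse, return the time offsets to its next k pulses.

    toa : 1-D sequence of sorted times of arrival.
    k   : hypothetical neighbourhood size (an assumption, not from the paper).
    Pulses without enough successors are padded with zeros.
    """
    toa = np.asarray(toa, dtype=float)
    n = toa.size
    feats = np.zeros((n, k))
    for j in range(1, k + 1):
        m = max(n - j, 0)  # number of pulses that have a j-th successor
        # Offset from pulse i to pulse i + j
        feats[:m, j - 1] = toa[j:] - toa[:m]
    return feats

# Constant PRI of 200 with one spurious pulse inserted at t = 450.
clean = np.array([0.0, 200.0, 400.0, 600.0, 800.0])
noisy = np.sort(np.append(clean, 450.0))

plain_dtoa = np.diff(noisy)                  # adjacent differences only
features = local_dtoa_features(noisy, k=3)   # local structure per pulse
```

In this toy case the spurious pulse splits the adjacent DTOA of 200 into 50 and 150, while the local feature of the pulse at t = 400 still contains the true interval of 200 in its second slot. This is only meant to show why a local structure can be less sensitive to splitting than a single adjacent difference.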

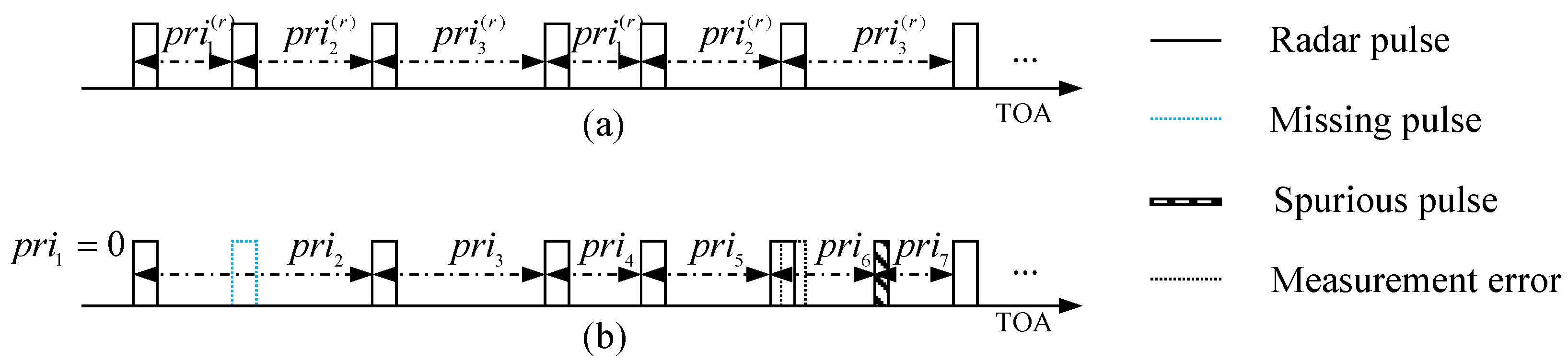

2. Research Background

2.1. Problem Formulation

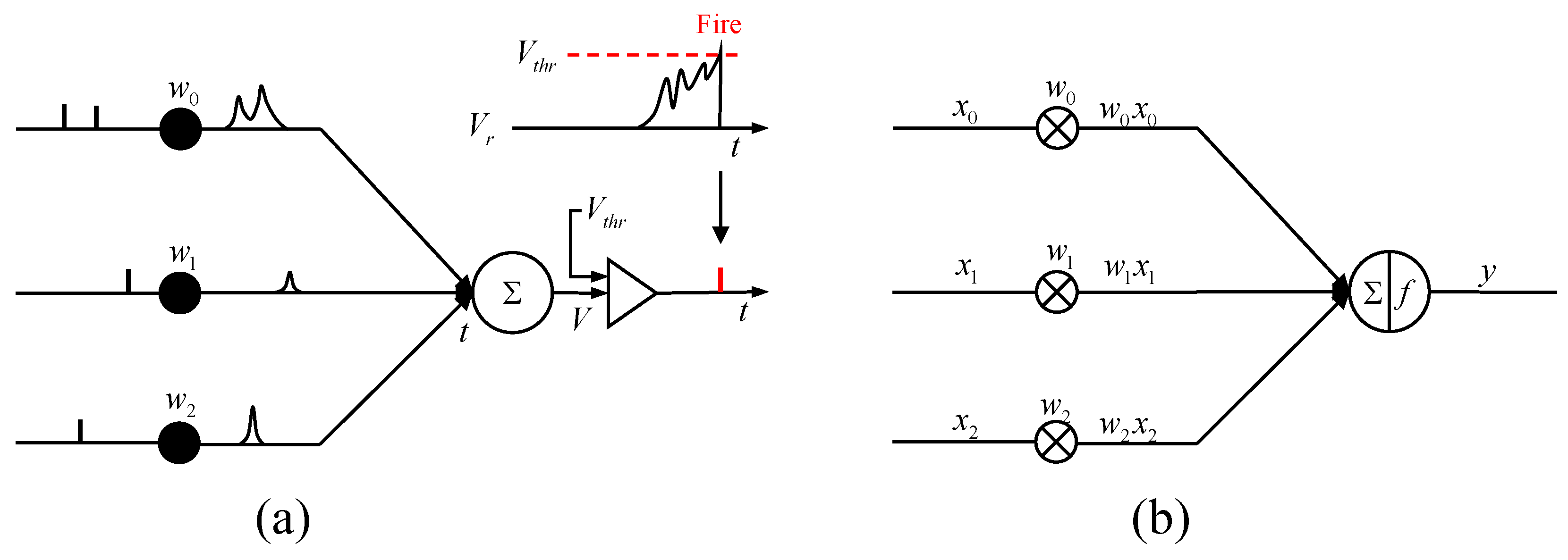

2.2. Spiking Neural Networks

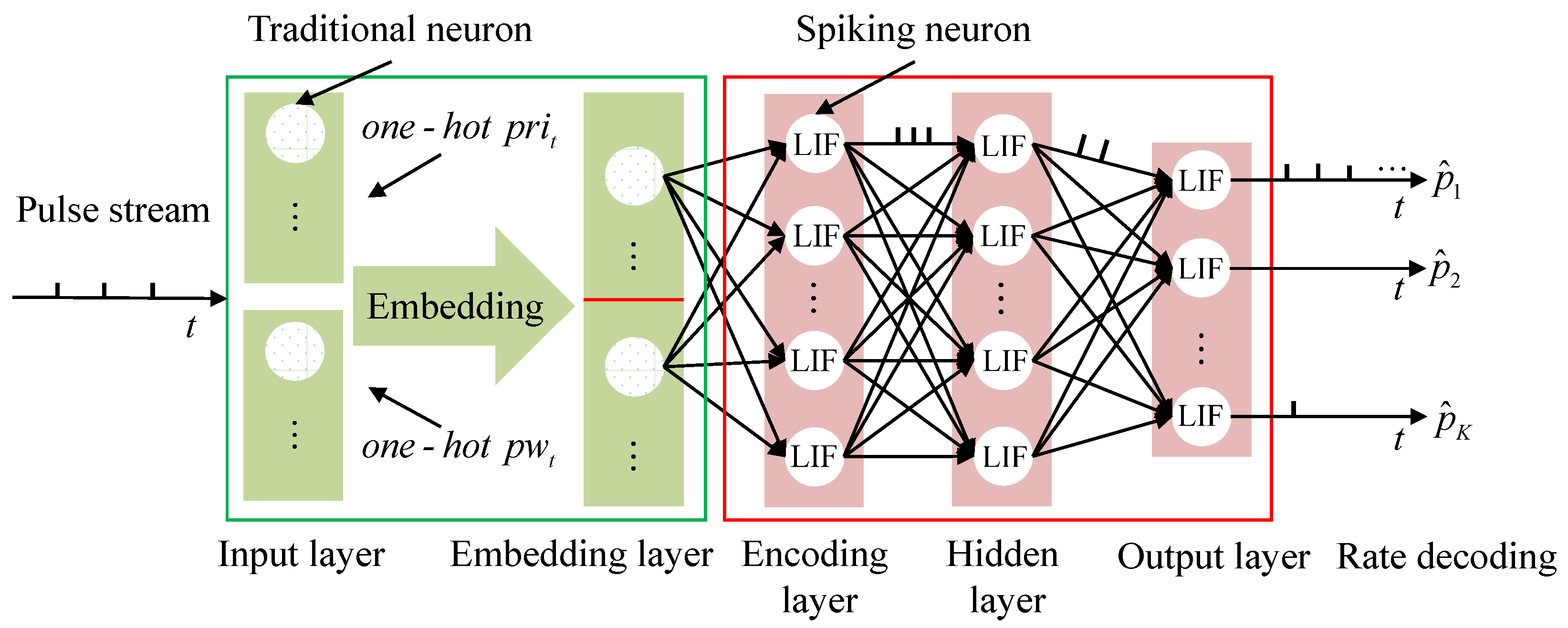

3. Methodology

3.1. Timing Feature Coding of Radar Pulses

3.2. Spiking Neural Network Model for Recognition

3.3. Radar Emitter Recognition and Model Optimization

3.4. Improved Spiking Neural Networks Based on Local Timing Structure Coding

4. Experimental Part

4.1. Simulation Settings

- (1) True positive (TP): the number of correctly classified radar pulse trains in the current class;

- (2) False positive (FP): the number of radar pulse trains of other classes classified as the current class;

- (3) False negative (FN): the number of radar pulse trains of the current class classified as other classes.
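The three counts above can be turned into per-class recall and precision as in the following sketch. It assumes the standard definitions, precision = TP / (TP + FP) and recall = TP / (TP + FN); the function name and the toy labels are illustrative only.

```python
import numpy as np

def per_class_precision_recall(y_true, y_pred, num_classes):
    """Compute precision and recall for each class from label arrays."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    precision = np.zeros(num_classes)
    recall = np.zeros(num_classes)
    for c in range(num_classes):
        tp = np.sum((y_pred == c) & (y_true == c))  # correctly assigned to c
        fp = np.sum((y_pred == c) & (y_true != c))  # other classes assigned to c
        fn = np.sum((y_pred != c) & (y_true == c))  # class c assigned elsewhere
        precision[c] = tp / (tp + fp) if tp + fp > 0 else 0.0
        recall[c] = tp / (tp + fn) if tp + fn > 0 else 0.0
    return precision, recall

# Toy example with three classes of pulse trains.
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
precision, recall = per_class_precision_recall(y_true, y_pred, 3)
```

Classes with no predictions or no samples are given a score of 0 here to avoid division by zero; that convention is a choice of this sketch.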

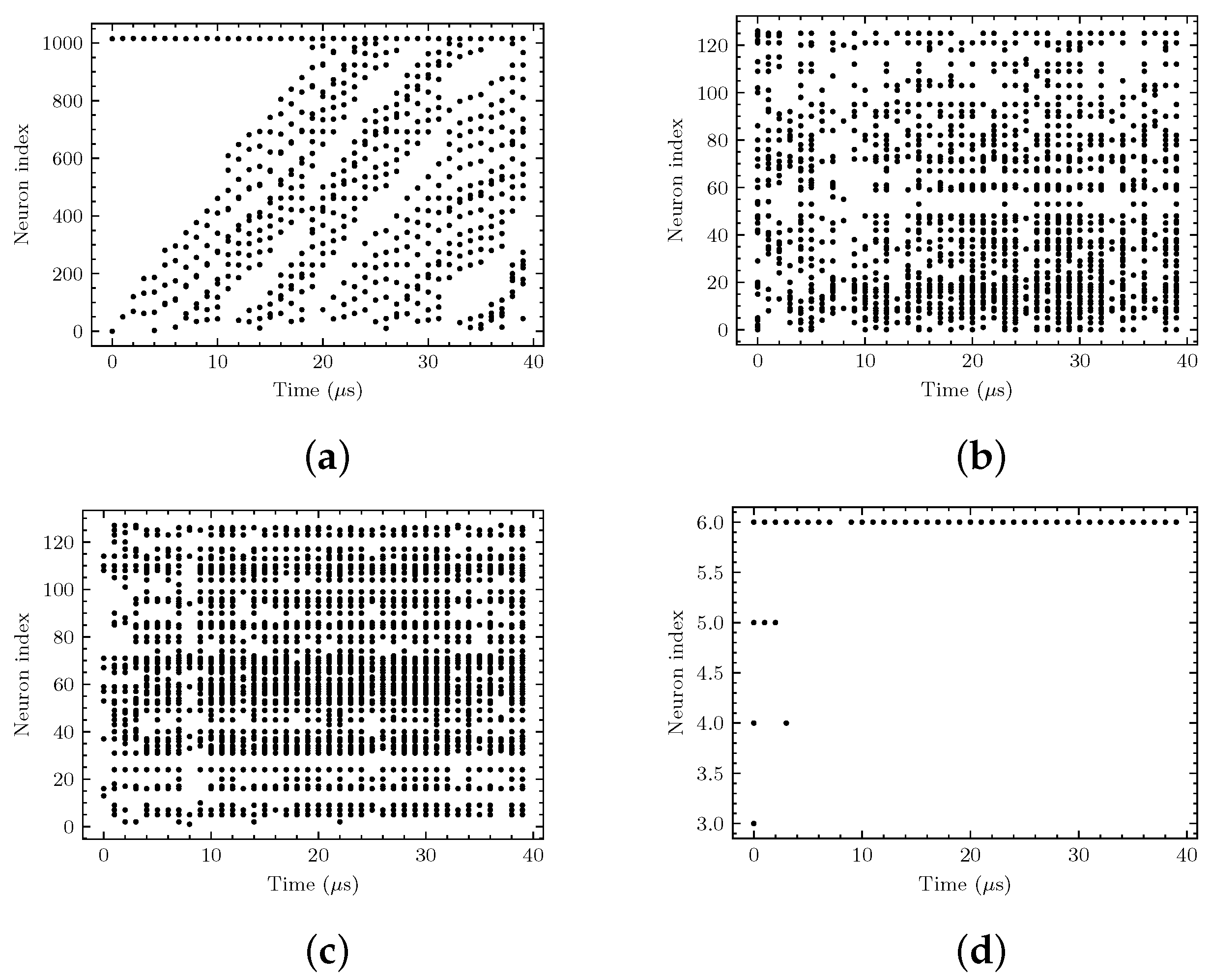

4.2. Recognition Effect Display

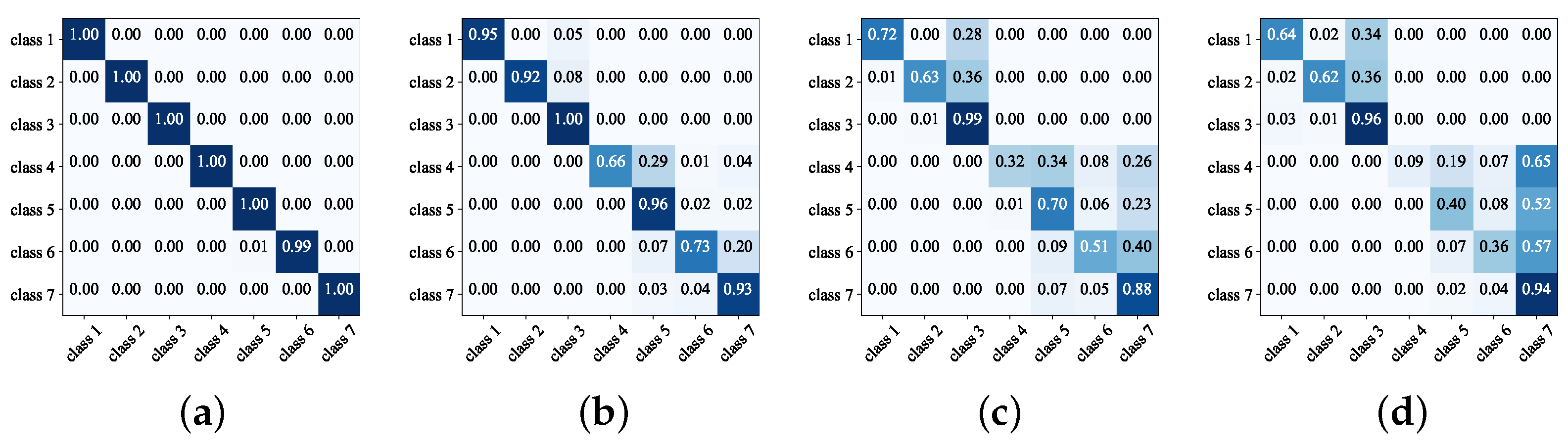

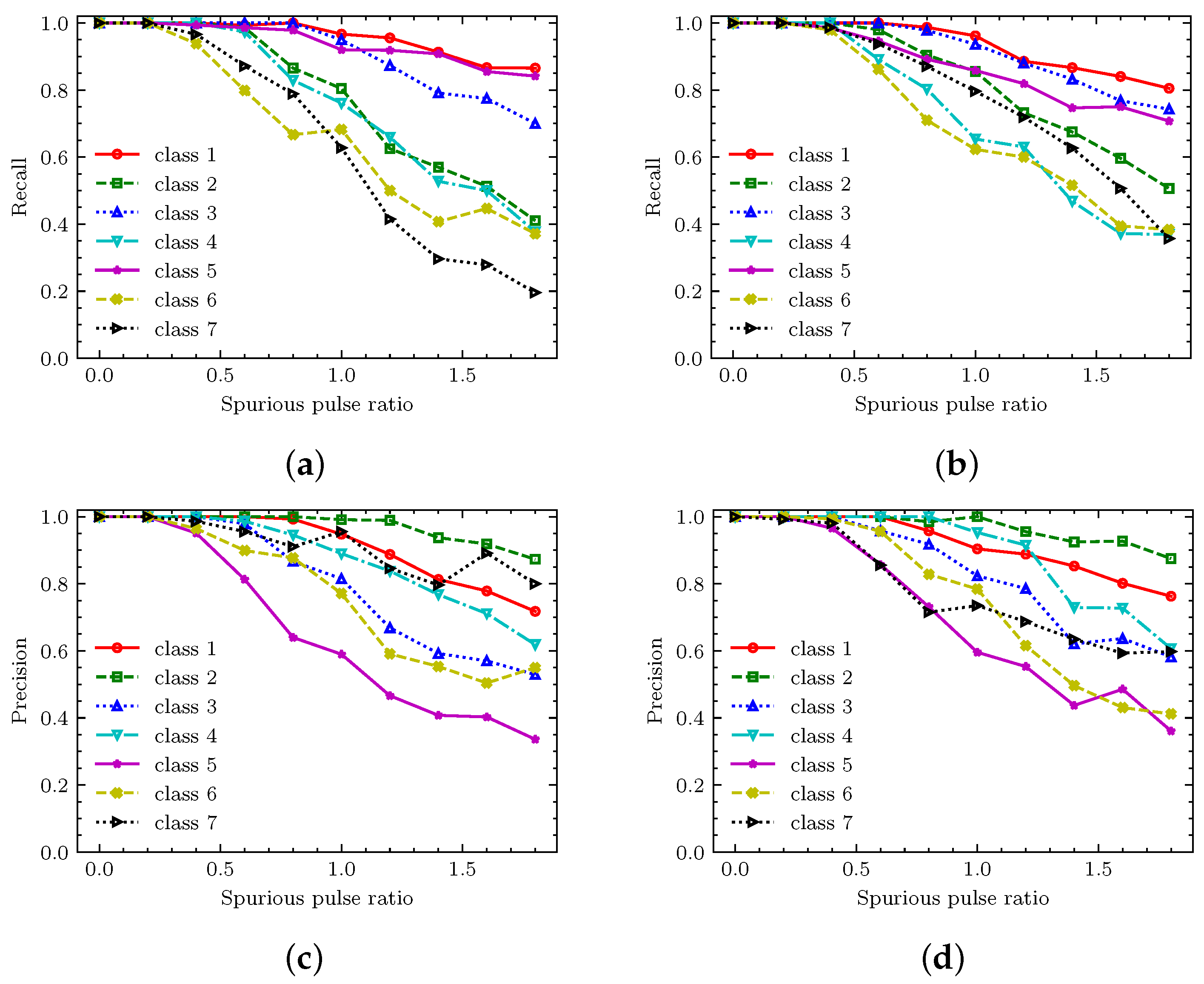

4.3. Recognition Performance Test of SNNs

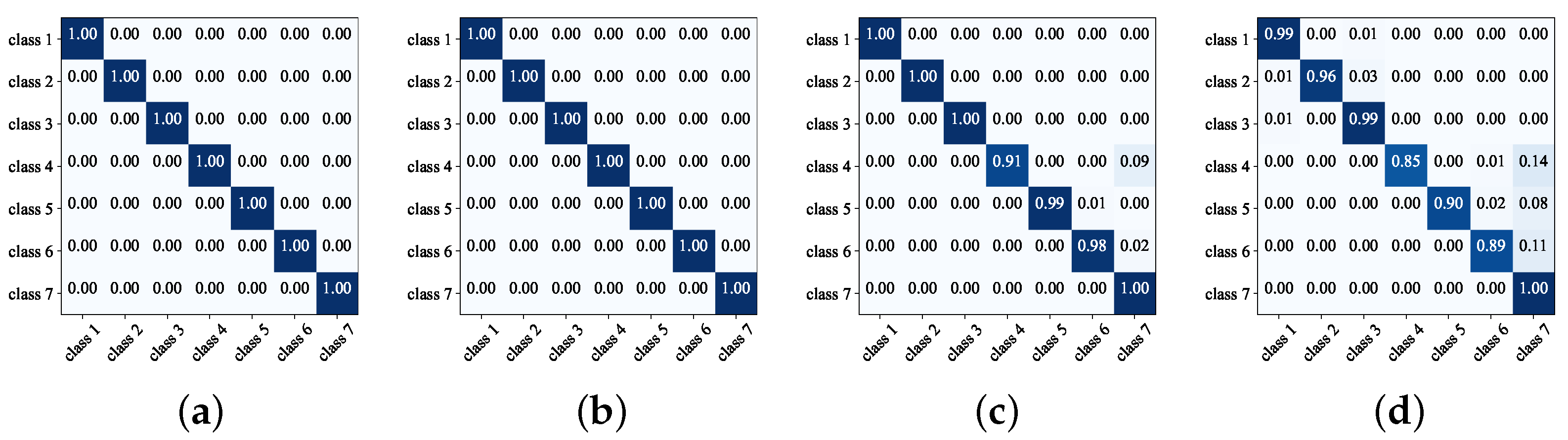

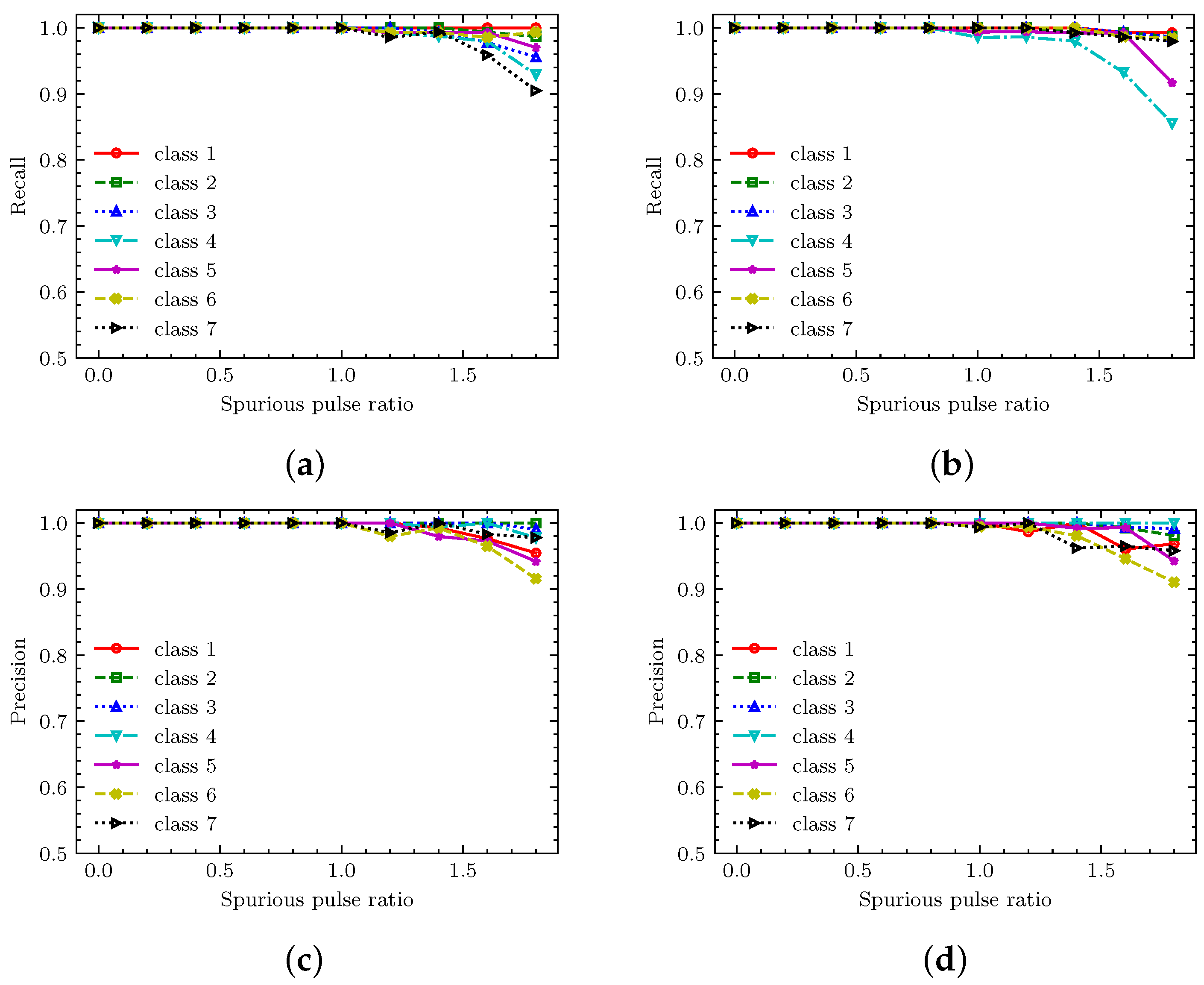

4.4. Recognition Performance Test of Improved SNNs

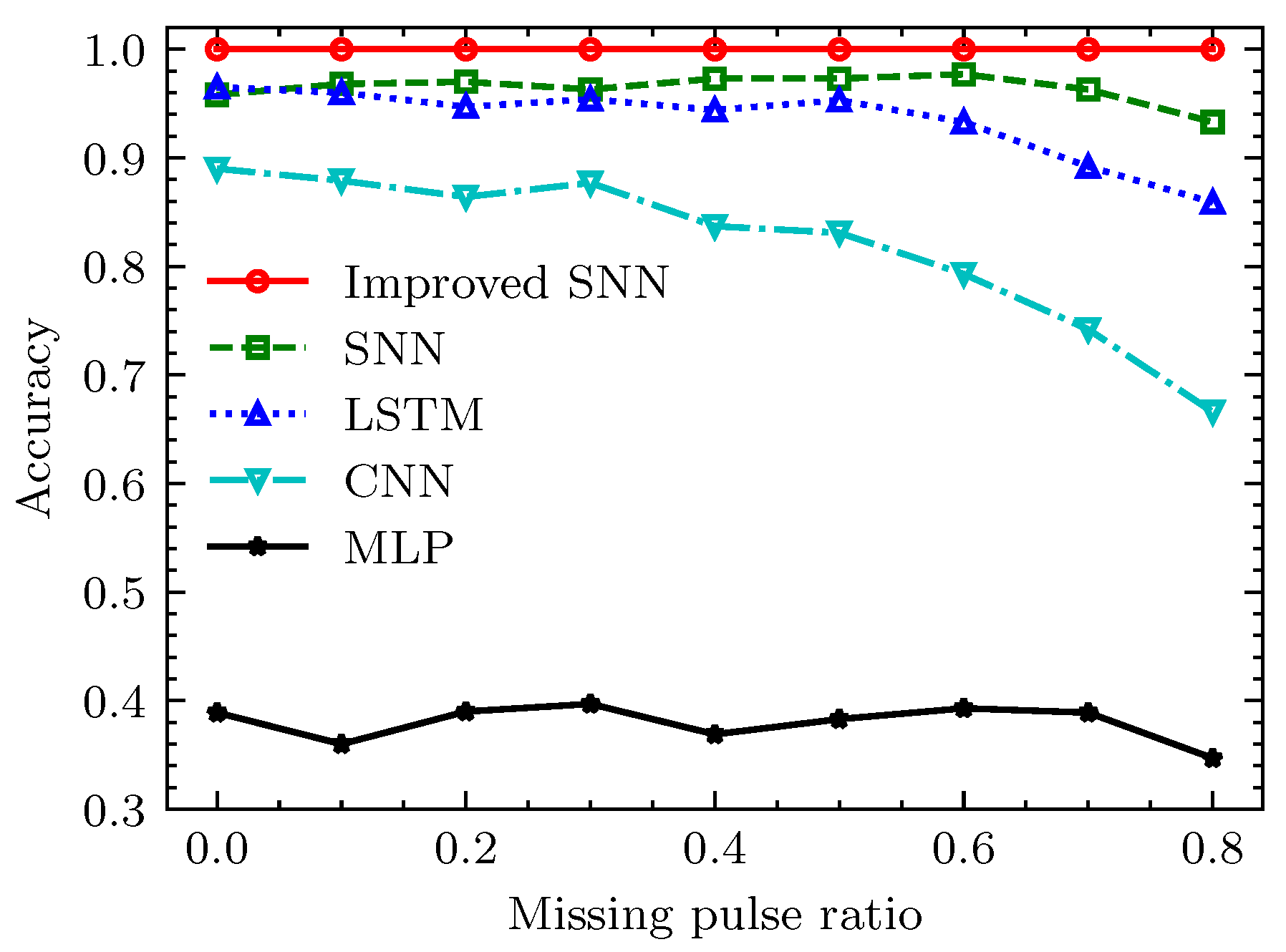

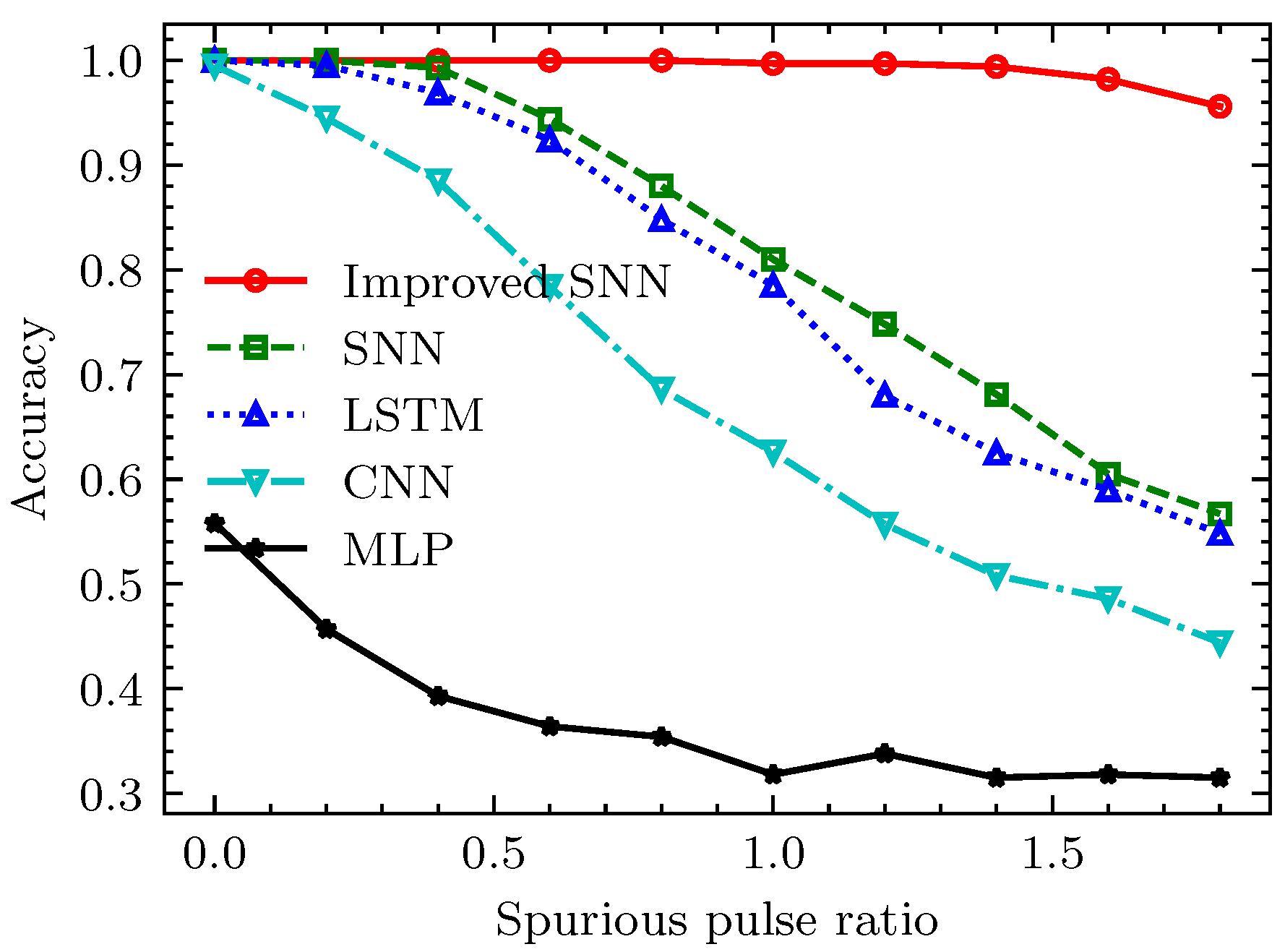

4.5. Performance Comparison of Different Methods

- (1) The voltage attenuation and threshold firing characteristics of SNNs effectively suppress data noise within the pulse stream.

- (2) The voltage accumulation characteristics of the SNN enable it to generate a continuously increasing response to the specific timing features in the pulse stream.

- (3) The proposed local timing structure of each pulse as a PRI feature is less affected by the splitting effect of data noise.
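The first two points can be illustrated with a single discrete-time LIF neuron. This is a generic textbook-style sketch, not the network used in the paper; the values of `weight`, `decay`, and `threshold`, and the hard reset to zero, are assumptions chosen for the example.

```python
import numpy as np

def lif_response(spikes_in, weight=0.6, decay=0.8, threshold=1.0):
    """Simulate one leaky integrate-and-fire neuron over a binary input train."""
    v = 0.0
    out = np.zeros(len(spikes_in), dtype=int)
    for t, s in enumerate(spikes_in):
        v = decay * v + weight * s  # voltage attenuation, then accumulation
        if v >= threshold:          # threshold firing
            out[t] = 1
            v = 0.0                 # hard reset after a spike (assumed)
    return out

# Isolated inputs decay away before the next one arrives.
isolated = np.array([1, 0, 0, 0, 0, 1, 0, 0, 0, 0])
# Closely spaced inputs accumulate and cross the threshold.
burst = np.array([1, 1, 1, 0, 0, 0, 0, 0, 0, 0])

out_isolated = lif_response(isolated)
out_burst = lif_response(burst)
```

With these parameters the isolated inputs never trigger an output spike, whereas the burst fires at the second time step, which mirrors the suppression and accumulation behaviour described above.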

5. Discussion

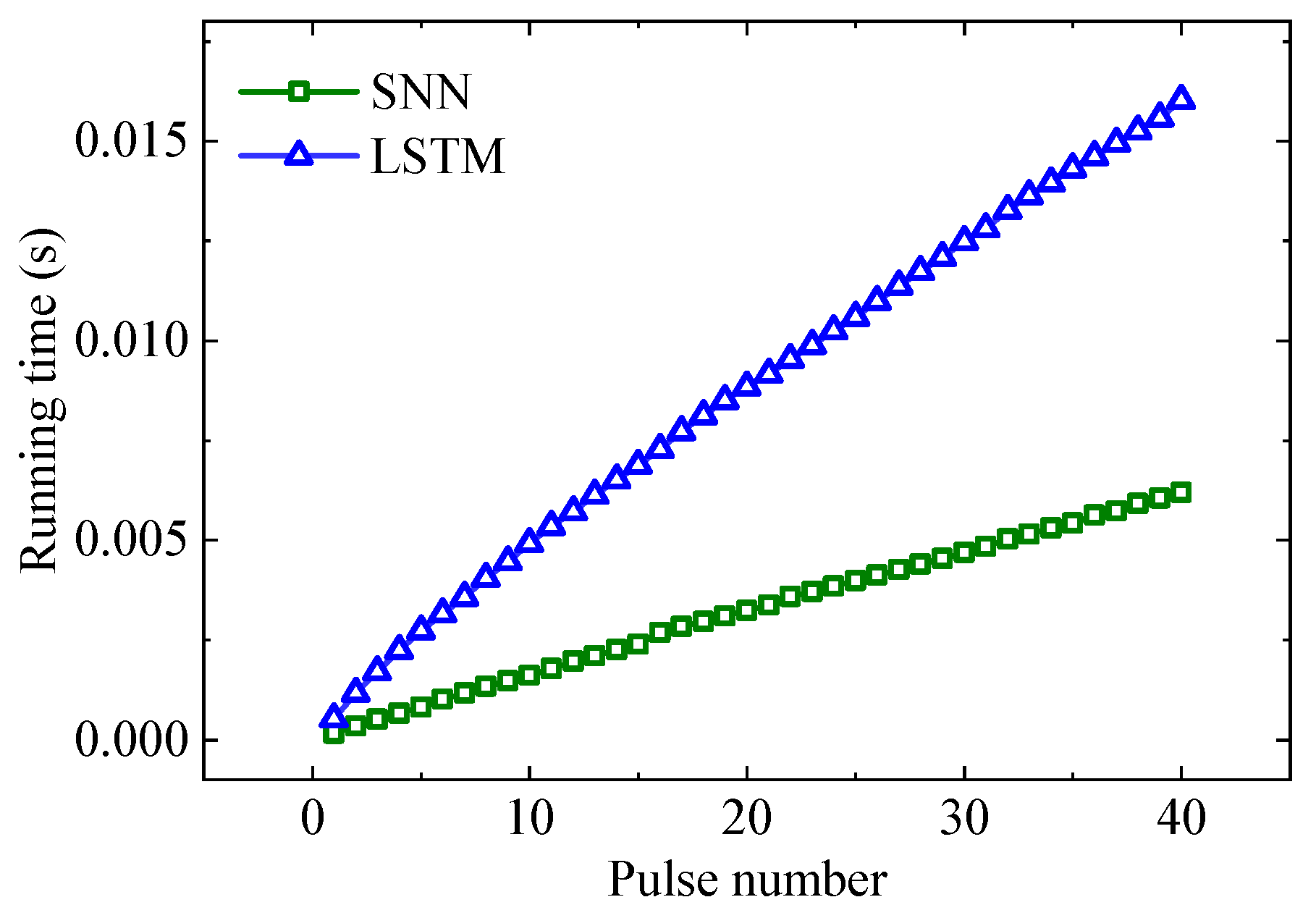

5.1. Computational Efficiency Analysis

5.2. Recognition of Multiple Radar Emitters in Interleaved Pulse Streams

- (1) PRI features that can more effectively distinguish the pulses from different radar emitters should be adopted. For example, the hidden state of the temporal feature self-supervised learning network can be used as the PRI feature of each pulse [13], and the direct coding method in this paper can then encode it into spike trains that SNNs can process.

- (2) The TOA of each pulse, rather than the time step, should be directly used as the time dimension of each pulse. By changing the proposed method to an event-driven form, the network can directly use the TOA of each pulse as the time dimension, thereby generating a more stable response to the pulse stream from a specific radar.

- (3) The proposed network should be changed to a multilabel classification form. This includes replacing the original cross-entropy loss function with a binary cross-entropy loss function. Moreover, the training process needs to be optimized so that the model can handle the correlation and overlap between data belonging to different labels, which involves more complex training and evaluation processes.
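The change of loss function in point (3) can be sketched as follows. The example assumes one output logit per radar class, a sigmoid on each logit, and a decision threshold of 0.5; these choices and the function names are illustrative and are not taken from the paper.

```python
import numpy as np

def binary_cross_entropy(logits, targets):
    """Mean binary cross-entropy over independent per-class logits."""
    x = np.asarray(logits, dtype=float)
    y = np.asarray(targets, dtype=float)
    # Numerically stable form of -[y*log(s) + (1-y)*log(1-s)], s = sigmoid(x)
    loss = np.maximum(x, 0.0) - x * y + np.log1p(np.exp(-np.abs(x)))
    return loss.mean()

def predict_labels(logits, threshold=0.5):
    """Mark every class whose sigmoid probability reaches the threshold."""
    probs = 1.0 / (1.0 + np.exp(-np.asarray(logits, dtype=float)))
    return (probs >= threshold).astype(int)

# Interleaved stream containing emitters of class 0 and class 2.
logits = np.array([2.0, -1.0, 1.5])
targets = np.array([1, 0, 1])

loss = binary_cross_entropy(logits, targets)
present = predict_labels(logits)
```

Unlike a softmax with cross-entropy, each class is scored independently here, so several emitters can be reported as present in the same pulse stream.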

5.3. Recognition of More Complex Aliasing Radar Signals

6. Conclusions

- (1) The proposed SNN-based method has a recall and precision of more than 90% for all classes of radars when the spurious pulse rate and missing pulse rate do not exceed 0.5.

- (2) Compared with missing pulses, spurious pulses have a greater impact on the recognition accuracy of the SNN-based method. This is because when there is a spurious pulse, the PRI feature of its adjacent pulses is randomly split, resulting in SNNs being unable to generate an effective response.

- (3) Compared with other methods, the proposed SNN-based method has stronger data noise adaptability. The reason is that the voltage attenuation, spike-firing, and voltage accumulation characteristics of SNNs can effectively suppress the data noise in the pulse stream while retaining the effective features of the radar pulse stream.

- (4) Recall and precision for each class in the improved method are still more than 90% when the missing pulse rate is 0.5 and the spurious pulse rate is 1.8. Moreover, the improved SNNs have significantly higher adaptability to data noise than other methods. This is because the local timing features are less affected by data noise, especially spurious pulses, so they can generate a more stable response to specific timing patterns in the pulse stream.

- (5) The computational complexity analysis and simulation experiments of the proposed method show that the proposed method also has higher computational efficiency and provides the possibility for real-time recognition of radar pulse streams under complex signal conditions.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Full Name | Description |
|---|---|---|
| ESM | Electronic support measurement | It is used to detect, locate, and identify radar to provide knowledge for an electronic countermeasure system. |
| SNN | Spiking neural network | A third-generation neural network which can more realistically simulate the biological brain neurons. |
| ANN | Artificial neural network | The second generation of neural networks using traditional neurons, such as RNN and CNN. |
| LSTM | Long short-term memory | A deep learning model commonly used to process and predict time series data. |
| CNN | Convolutional neural network | A deep learning model for processing and analyzing data with spatial structure. |
| MLP | Multilayer perceptron | A basic feedforward neural network model, usually composed of multiple fully connected layers. |
| LIF | Leaky integrate-and-fire | A low-computational-complexity spiking neuron model. |
| PDW | Pulse description word | A digital descriptor composed of all parameters of a single radar pulse. |
| TOA | Time of arrival | |
| PW | Pulse width | |
| RF | Radio frequency | |
| DOA | Direction of arrival | |
| DTOA | Differential time of arrival | The time interval between two adjacent pulses of an intercepted pulse stream. |
| PRI | Pulse repetition interval | The time interval between two adjacent pulses emitted by the radar. |
| MFR | Multifunction radar | A radar system capable of performing multiple radar tasks. |
| LPI | Low probability of intercept radar | A radar system designed to reduce the likelihood of detection by electronic reconnaissance systems. |
| FMCW | Frequency modulated continuous wave radar | A radar technology based on continuously transmitting and receiving frequency-modulated continuous wave signals. |
| MSE | Mean square error | |
| LTS | Local timing structure | Noise-insensitive PRI feature proposed in this paper. |
| MACs | Multiply-and-accumulate operations | |
| ACs | Accumulate operations | |

References

- Wiley, R.G. ELINT: The Interception and Analysis of Radar Signals; Artech House Radar Library, Artech House: Boston, MA, USA, 2006.

- Luo, Z.; Yuan, S.; Shang, W.; Liu, Z. Automatic Reconstruction of Radar Pulse Repetition Pattern Based on Model Learning. Digit. Signal Process. 2024, 152, 104596.

- Jing, Z.; Li, P.; Wu, B.; Yan, E.; Chen, Y.; Gao, Y. Attention-Enhanced Dual-Branch Residual Network with Adaptive L-Softmax Loss for Specific Emitter Identification under Low-Signal-to-Noise Ratio Conditions. Remote Sens. 2024, 16, 1332.

- Yuan, S.; Li, P.; Wu, B. Radar Emitter Signal Intra-Pulse Modulation Open Set Recognition Based on Deep Neural Network. Remote Sens. 2023, 16, 108.

- Dash, D.; Valarmathi, J. Radar Emitter Identification in Multistatic Radar System: A Review. In Advances in Automation, Signal Processing, Instrumentation, and Control; Komanapalli, V.L.N., Sivakumaran, N., Hampannavar, S., Eds.; Springer Nature: Singapore, 2021; Volume 700, pp. 2655–2664.

- Xu, T.; Yuan, S.; Liu, Z.; Guo, F. Radar Emitter Recognition Based on Parameter Set Clustering and Classification. Remote Sens. 2022, 14, 4468.

- Liangliang, G.; Shilong, W.; Tao, L. A Radar Emitter Identification Method Based on Pulse Match Template Sequence. In Proceedings of the 2010 2nd International Conference on Signal Processing Systems, Dalian, China, 5–7 July 2010; pp. V3-153–V3-156.

- Kvasnov, A.V.; Shkodyrev, V.P.; Arsenyev, D.G. Method of Recognition the Radar Emitting Sources Based on the Naive Bayesian Classifier. WSEAS Trans. Syst. Control 2019, 14, 112–120.

- Revillon, G.; Mohammad-Djafari, A.; Enderli, C. Radar Emitters Classification and Clustering with a Scale Mixture of Normal Distributions. IET Radar Sonar Navig. 2019, 13, 128–138.

- Chen, W.; Fu, K.; Zuo, J.; Zheng, X.; Huang, T.; Ren, W. Radar Emitter Classification for Large Data Set Based on Weighted-xgboost. IET Radar Sonar Navig. 2017, 11, 1203–1207.

- Shieh, C.S.; Lin, C.T. A Vector Neural Network for Emitter Identification. IEEE Trans. Antennas Propag. 2002, 50, 1120–1127.

- Chen, Y.; Li, P.; Yan, E.; Jing, Z.; Liu, G.; Wang, Z. A Knowledge Graph-Driven CNN for Radar Emitter Identification. Remote Sens. 2023, 15, 3289.

- Yuan, S.; Liu, Z.M. Temporal Feature Learning and Pulse Prediction for Radars with Variable Parameters. Remote Sens. 2022, 14, 5439.

- Wang, J.; Wang, H.; Xu, K.; Mao, Y.; Xuan, Z.; Tang, B.; Wang, X.; Mu, X. Visualization and Classification of Radar Emitter Pulse Sequences Based on 2D Feature Map. Phys. Commun. 2023, 61, 102168.

- Liu, Z.M.; Yu, P.S. Classification, Denoising, and Deinterleaving of Pulse Streams with Recurrent Neural Networks. IEEE Trans. Aerosp. Electron. Syst. 2019, 55, 1624–1639.

- Notaro, P.; Paschali, M.; Hopke, C.; Wittmann, D.; Navab, N. Radar Emitter Classification with Attribute-specific Recurrent Neural Networks. arXiv 2019, arXiv:1911.07683.

- Li, X.; Liu, Z.; Huang, Z.; Liu, W. Radar Emitter Classification with Attention-Based Multi-RNNs. IEEE Commun. Lett. 2020, 24, 2000–2004.

- Li, Y.; Zhu, M.; Ma, Y.; Yang, J. Work Modes Recognition and Boundary Identification of MFR Pulse Sequences with a Hierarchical Seq2seq LSTM. IET Radar Sonar Navig. 2020, 14, 1343–1353.

- Zhang, Z.; Zhu, M.; Li, Y.; Li, Y.; Wang, S. Joint Recognition and Parameter Estimation of Cognitive Radar Work Modes with LSTM-transformer. Digit. Signal Process. 2023, 140, 104081.

- Venkataramani, S.; Roy, K.; Raghunathan, A. Efficient Embedded Learning for IoT Devices. In Proceedings of the 2016 21st Asia and South Pacific Design Automation Conference (ASP-DAC), Macao, China, 25–28 January 2016; pp. 308–311.

- Liu, Y.; Tian, M.; Liu, R.; Cao, K.; Wang, R.; Wang, Y.; Zhao, W.; Zhou, Y. Spike-Based Approximate Backpropagation Algorithm of Brain-Inspired Deep SNN for Sonar Target Classification. Comput. Intell. Neurosci. 2022, 2022, 1633946.

- Maass, W. Networks of Spiking Neurons: The Third Generation of Neural Network Models. Neural Netw. 1997, 10, 1659–1671.

- Wu, Y.; Deng, L.; Li, G.; Zhu, J.; Shi, L. Spatio-Temporal Backpropagation for Training High-Performance Spiking Neural Networks. Front. Neurosci. 2018, 12, 331.

- He, W.; Wu, Y.; Deng, L.; Li, G.; Wang, H.; Tian, Y.; Ding, W.; Wang, W.; Xie, Y. Comparing SNNs and RNNs on Neuromorphic Vision Datasets: Similarities and Differences. Neural Netw. 2020, 132, 108–120.

- Bittar, A.; Garner, P.N. A Surrogate Gradient Spiking Baseline for Speech Command Recognition. Front. Neurosci. 2022, 16, 865897.

- Henderson, A.; Harbour, S.; Yakopcic, C.; Taha, T.; Brown, D.; Tieman, J.; Hall, G. Spiking Neural Networks for LPI Radar Waveform Recognition with Neuromorphic Computing. In Proceedings of the 2023 IEEE Radar Conference (RadarConf23), San Antonio, TX, USA, 1–5 May 2023; pp. 1–6.

- Roy, K.; Jaiswal, A.; Panda, P. Towards Spike-Based Machine Intelligence with Neuromorphic Computing. Nature 2019, 575, 607–617.

- Yi, Z.; Lian, J.; Liu, Q.; Zhu, H.; Liang, D.; Liu, J. Learning Rules in Spiking Neural Networks: A Survey. Neurocomputing 2023, 531, 163–179.

- Wu, Y.; Deng, L.; Li, G.; Zhu, J.; Shi, L. Direct Training for Spiking Neural Networks: Faster, Larger, Better. arXiv 2018, arXiv:1809.05793.

- Kim, Y.; Park, H.; Moitra, A.; Bhattacharjee, A.; Venkatesha, Y.; Panda, P. Rate Coding Or Direct Coding: Which One Is Better For Accurate, Robust, And Energy-Efficient Spiking Neural Networks? In Proceedings of the ICASSP 2022—2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 23–27 May 2022; pp. 71–75.

- Li, X.; Huang, Z.; Wang, F.; Wang, X.; Liu, T. Toward Convolutional Neural Networks on Pulse Repetition Interval Modulation Recognition. IEEE Commun. Lett. 2018, 22, 2286–2289.

- Al-Malahi, A.; Farhan, A.; Feng, H.; Almaqtari, O.; Tang, B. An Intelligent Radar Signal Classification and Deinterleaving Method with Unified Residual Recurrent Neural Network. IET Radar Sonar Navig. 2023, 17, 1259–1276.

- Petrov, N.; Jordanov, I.; Roe, J. Radar Emitter Signals Recognition and Classification with Feedforward Networks. Procedia Comput. Sci. 2013, 22, 1192–1200.

- Yin, B.; Corradi, F.; Bohté, S.M. Accurate and Efficient Time-Domain Classification with Adaptive Spiking Recurrent Neural Networks. Nat. Mach. Intell. 2021, 3, 905–913.

- Pan, Z.; Wang, S.; Li, Y. Residual Attention-Aided U-Net GAN and Multi-Instance Multilabel Classifier for Automatic Waveform Recognition of Overlapping LPI Radar Signals. IEEE Trans. Aerosp. Electron. Syst. 2022, 58, 4377–4395.

- Chen, K.; Zhang, J.; Chen, S.; Zhang, S.; Zhao, H. Recognition and Estimation for Frequency-Modulated Continuous-Wave Radars in Unknown and Complex Spectrum Environments. IEEE Trans. Aerosp. Electron. Syst. 2023, 59, 6098–6111.

- Wei, L.; Wei-gang, Z.; Hong-feng, P.; Hong-yu, Z. Radar Emitter Identification Based on Fully Connected Spiking Neural Network. J. Phys. Conf. Ser. 2021, 1914, 012036.

- Xiao, R.; Tang, H.; Gu, P.; Xu, X. Spike-Based Encoding and Learning of Spectrum Features for Robust Sound Recognition. Neurocomputing 2018, 313, 65–73.

- Saeed, M.; Wang, Q.; Martens, O.; Larras, B.; Frappe, A.; Cardiff, B.; John, D. Evaluation of Level-Crossing ADCs for Event-Driven ECG Classification. IEEE Trans. Biomed. Circuits Syst. 2021, 15, 1129–1139.

- Chu, H.; Yan, Y.; Gan, L.; Jia, H.; Qian, L.; Huan, Y.; Zheng, L.; Zou, Z. A Neuromorphic Processing System with Spike-Driven SNN Processor for Wearable ECG Classification. IEEE Trans. Biomed. Circuits Syst. 2022, 16, 511–523.

| Class | PW Mean (μs) | PRI Type | PRI Mean (μs) |
|---|---|---|---|
| 1 | 2 | constant | 175 |
| 2 | 2 | constant | 200 |
| 3 | 2 | stagger | [175, 200] |
| 4 | 3 | stagger | [175, 200] |
| 5 | 3 | stagger | [175, 200, 220] |
| 6 | 3 | stagger | [175, 200, 220, 250] |
| 7 | 3 | stagger | [175, 200, 220, 250, 320] |
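A noisy pulse train for one class in the table could be generated along the following lines. This is a simplified sketch under several assumptions that are not stated in this text: the stagger pattern is repeated cyclically without jitter, each pulse is dropped independently with probability `missing_rate`, and `spurious_rate` is the ratio of spurious pulses to true pulses, with spurious TOAs drawn uniformly over the observation window. The function name is hypothetical.

```python
import numpy as np

def simulate_pulse_train(pri_pattern, n_pulses, missing_rate, spurious_rate, rng):
    """Return sorted TOAs of a staggered pulse train with data noise."""
    # Repeat the PRI pattern cyclically until n_pulses intervals are available.
    pris = np.resize(np.asarray(pri_pattern, dtype=float), n_pulses)
    toa = np.cumsum(pris)

    # Missing pulses: drop each true pulse independently.
    keep = rng.random(n_pulses) >= missing_rate
    kept = toa[keep]

    # Spurious pulses: uniformly distributed over the observation window.
    n_spurious = int(round(spurious_rate * n_pulses))
    spurious = rng.uniform(0.0, toa[-1], size=n_spurious)

    return np.sort(np.concatenate([kept, spurious]))

rng = np.random.default_rng(0)
# Class 5 pattern from the table, with heavy data noise.
train = simulate_pulse_train([175, 200, 220], 100, 0.5, 1.8, rng)
dtoa = np.diff(train)  # the DTOA sequence an interceptor would observe

# Noise-free reference for a two-level stagger.
reference = simulate_pulse_train([175, 200], 4, 0.0, 0.0, rng)
```

Comparing `dtoa` with the clean pattern shows how missing pulses merge intervals and spurious pulses split them.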

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Luo, Z.; Wang, X.; Yuan, S.; Liu, Z. Radar Emitter Recognition Based on Spiking Neural Networks. Remote Sens. 2024, 16, 2680. https://doi.org/10.3390/rs16142680
