Remote Sensing for Mapping Natura 2000 Habitats in the Brière Marshes: Setting Up a Long-Term Monitoring Strategy to Understand Changes

Abstract

1. Introduction

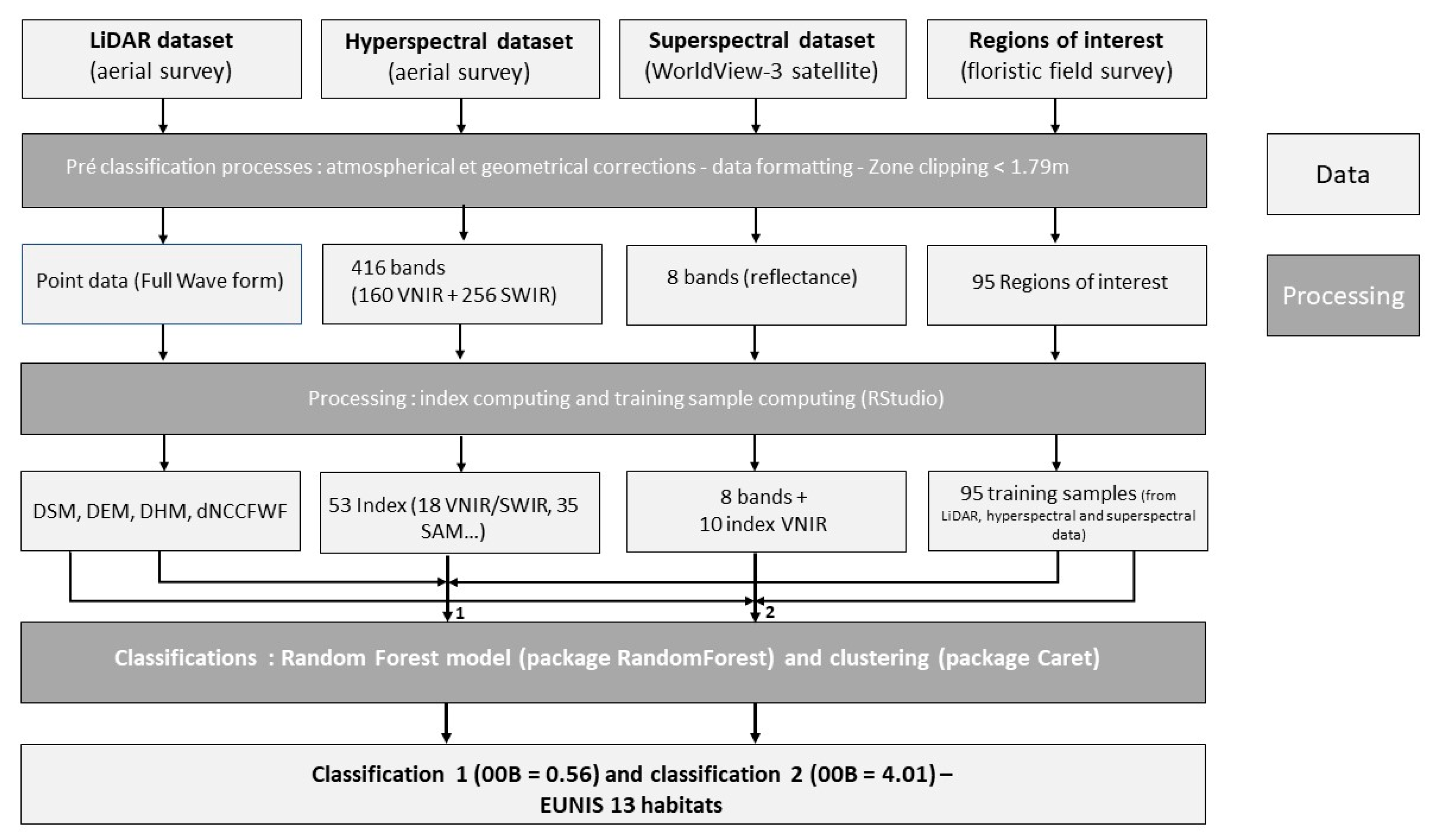

2. Materials and Methods

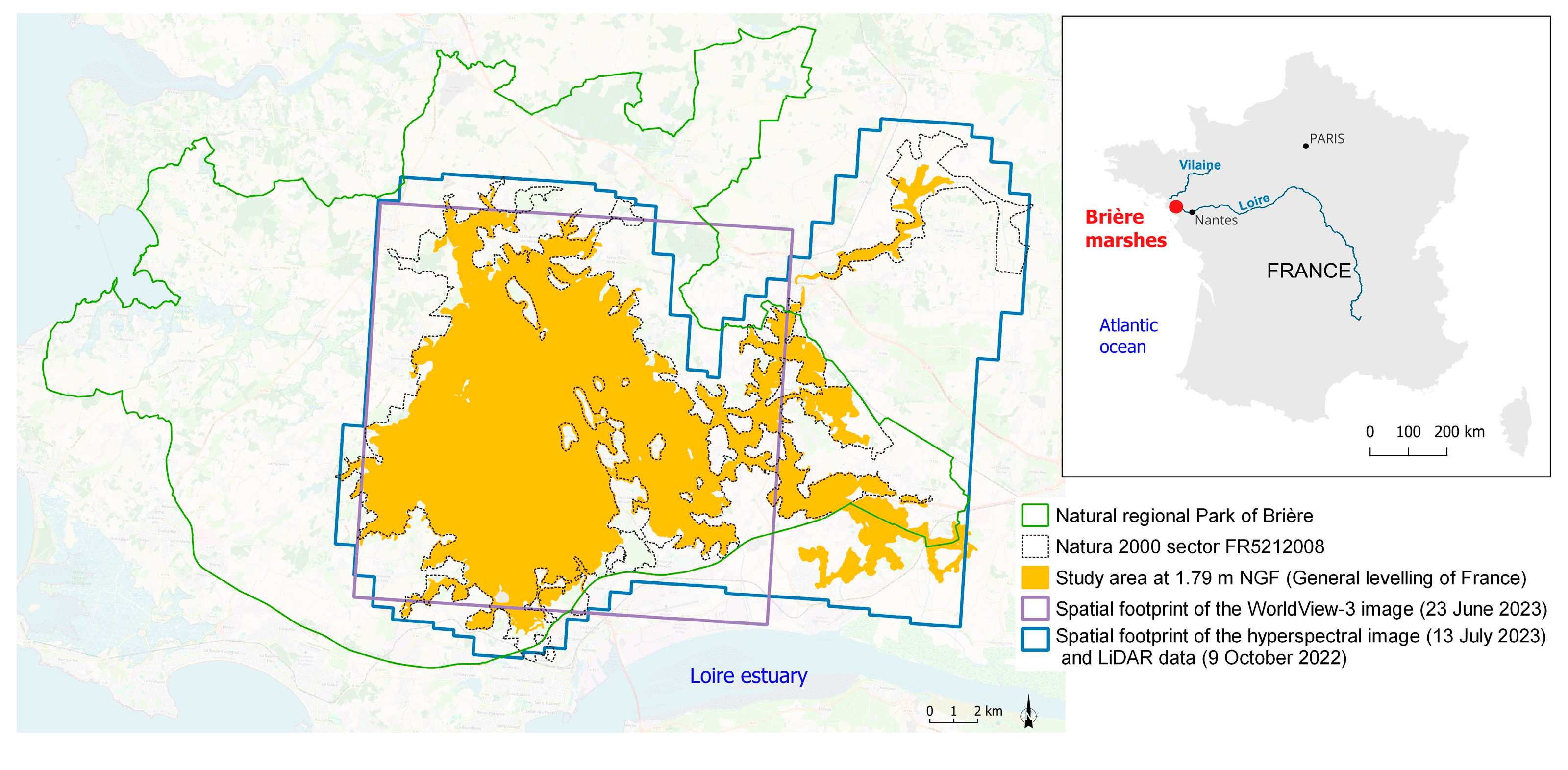

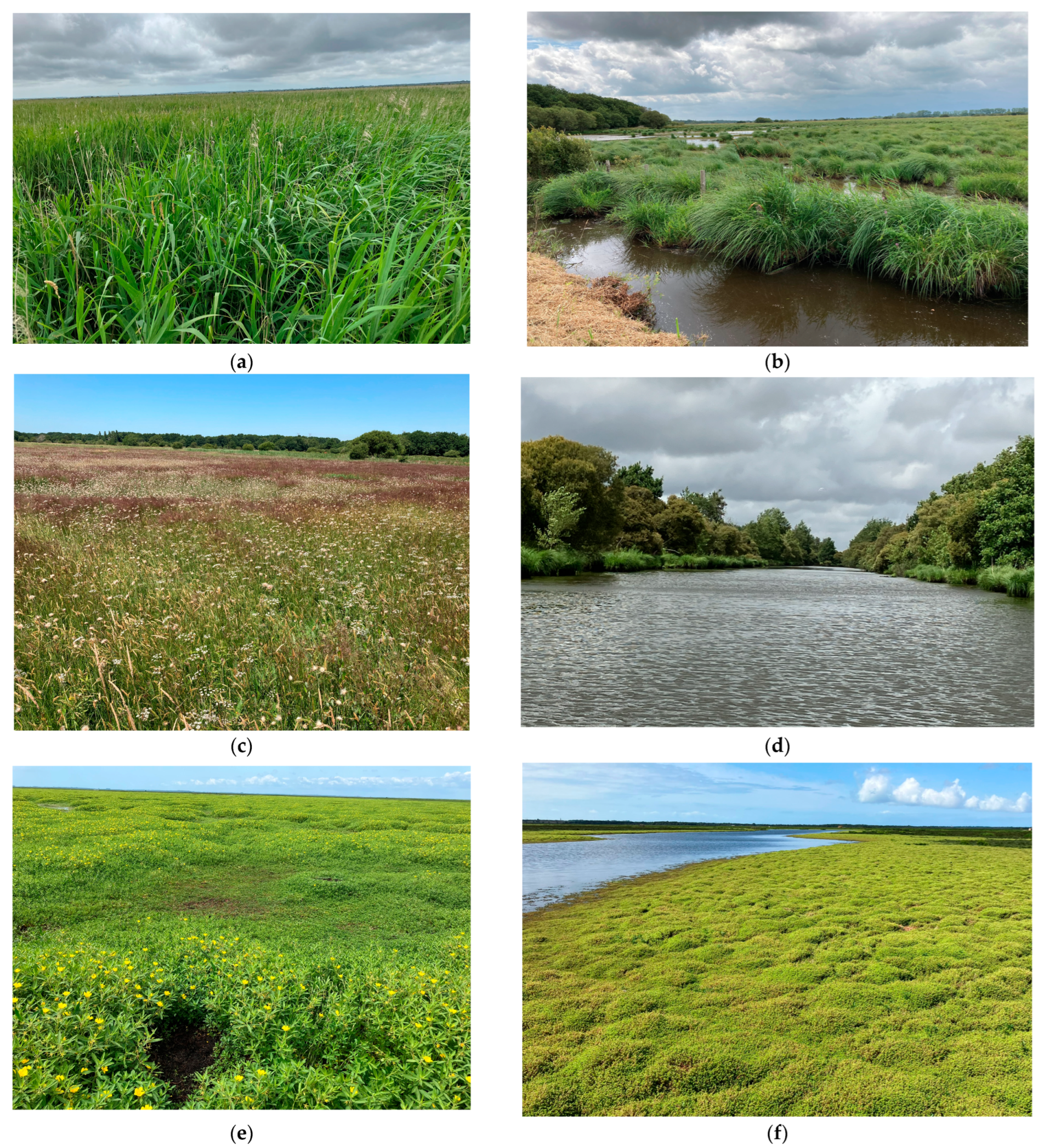

2.1. Study Area

2.2. Data Acquisition

2.3. Image Pre-Processing

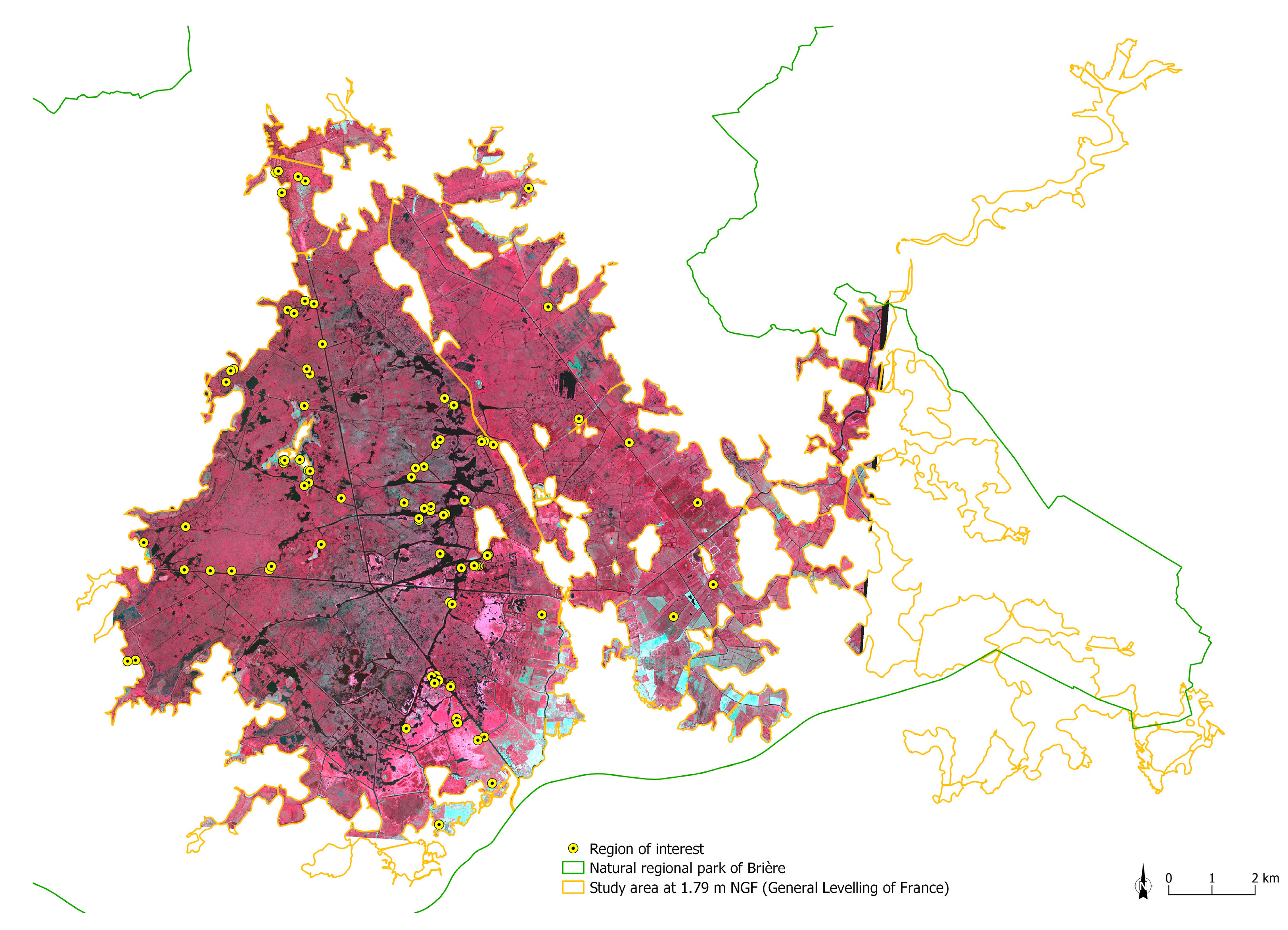

2.4. Field Data Sampling

2.5. Classification Method

2.5.1. Variables’ Calculation

2.5.2. Classification Algorithm

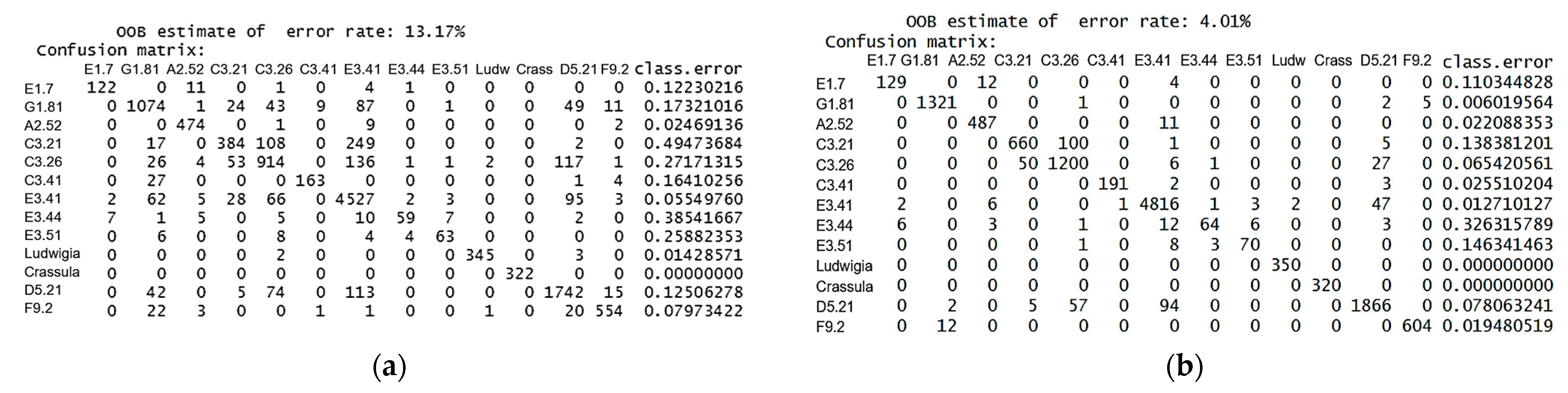

3. Results

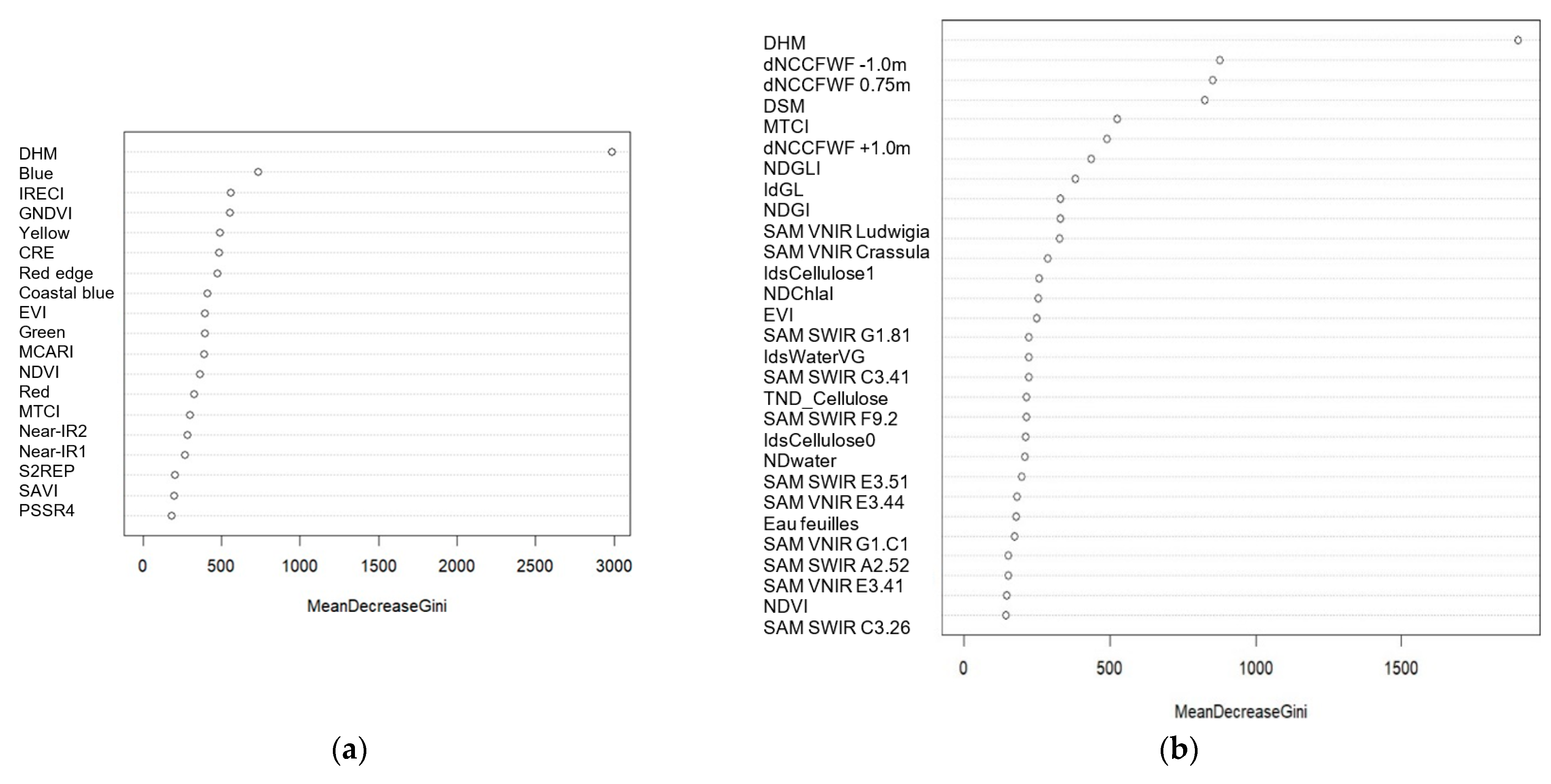

3.1. Variables Importance

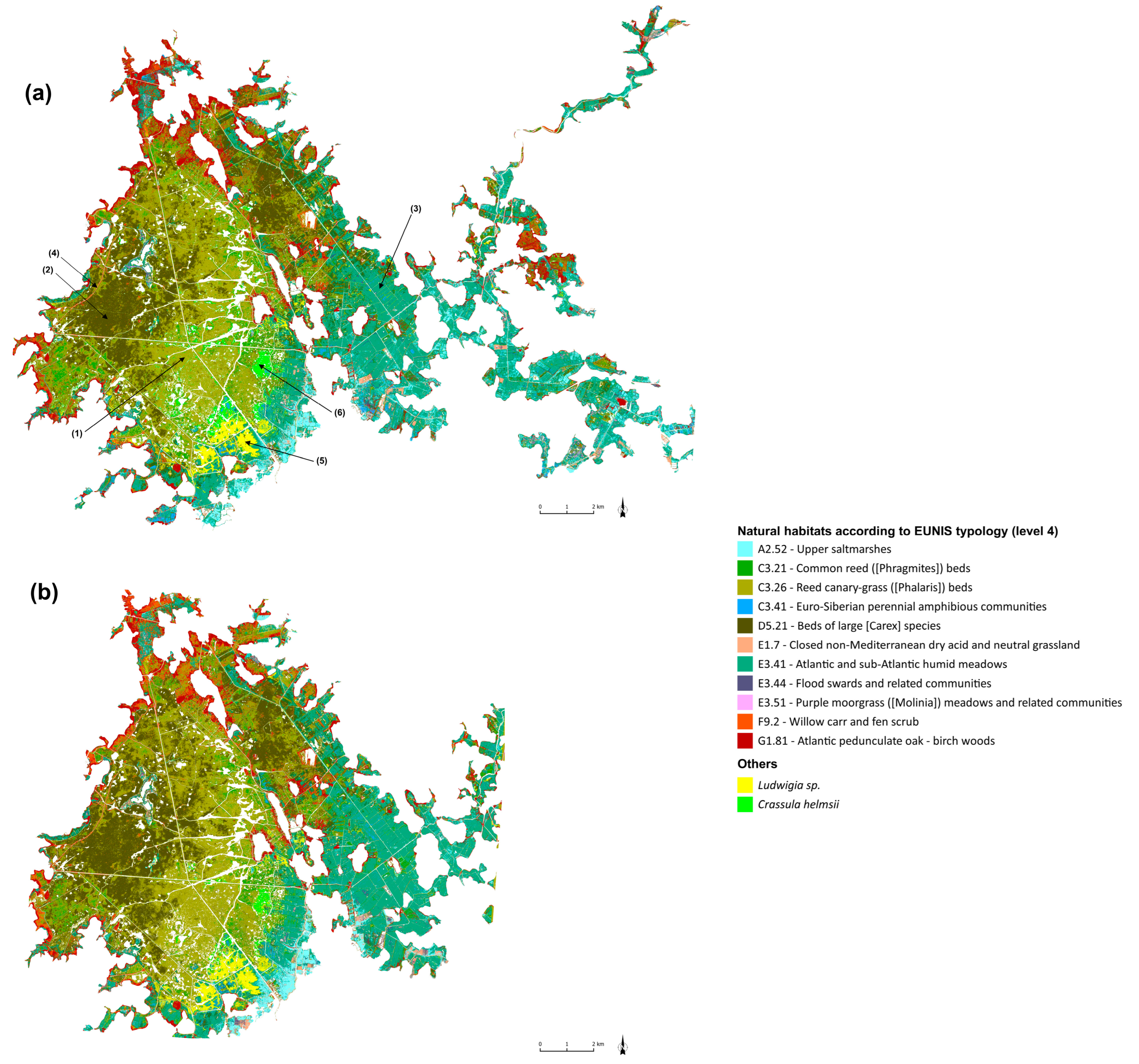

3.2. Up-to-Date Mapping of Habitats

4. Discussion

4.1. Mapping the Distribution of the Habitats

4.2. Long-Term Monitoring Strategy

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Fustec, E.; Lefeuvre, J. Fonctions et Valeurs Des Zones Humides; Dunod: Paris, France, 2000.

- Gallant, A.L. The Challenges of Remote Monitoring of Wetlands. Remote Sens. 2015, 7, 10938–10950.

- Acreman, M.; Holden, J. How Wetlands Affect Floods. Wetlands 2013, 33, 773–786.

- Dordio, A.; Palace, A.J.; Pinto, A.P. Wetlands: Water Living Filters? Available online: https://dspace.uevora.pt/rdpc/handle/10174/6485 (accessed on 1 May 2024).

- Kingsford, R.T.; Basset, A.; Jackson, L. Wetlands: Conservation’s Poor Cousins. Aquat. Conserv. Mar. Freshw. Ecosyst. 2016, 26, 892–916.

- Fluet-Chouinard, E.; Stocker, B.D.; Zhang, Z.; Malhotra, A.; Melton, J.R.; Poulter, B.; Kaplan, J.O.; Goldewijk, K.K.; Siebert, S.; Minayeva, T.; et al. Extensive Global Wetland Loss over the Past Three Centuries. Nature 2023, 614, 281–286.

- IPCC. Climate Change 2021—The Physical Science Basis: Working Group I Contribution to the Sixth Assessment Report of the Intergovernmental Panel on Climate Change; Cambridge University Press: Cambridge, UK, 2023.

- Belluco, E.; Camuffo, M.; Ferrari, S.; Modenese, L.; Silvestri, S.; Marani, A.; Marani, M. Mapping Salt-Marsh Vegetation by Multispectral and Hyperspectral Remote Sensing. Remote Sens. Environ. 2006, 105, 54–67.

- Bunce, R.G.H.; Metzger, M.J.; Jongman, R.H.G.; Brandt, J.; de Blust, G.; Elena-Rossello, R.; Groom, G.B.; Halada, L.; Hofer, G.; Howard, D.C.; et al. A Standardized Procedure for Surveillance and Monitoring European Habitats and Provision of Spatial Data. Landsc. Ecol. 2008, 23, 11–25.

- Adam, E.; Mutanga, O.; Rugege, D. Multispectral and Hyperspectral Remote Sensing for Identification and Mapping of Wetland Vegetation: A Review. Wetl. Ecol. Manag. 2010, 18, 281–296.

- Mirmazloumi, S.M.; Moghimi, A.; Ranjgar, B.; Mohseni, F.; Ghorbanian, A.; Ahmadi, S.A.; Amani, M.; Brisco, B. Status and Trends of Wetland Studies in Canada Using Remote Sensing Technology with a Focus on Wetland Classification: A Bibliographic Analysis. Remote Sens. 2021, 13, 4025.

- Ozesmi, S.L.; Bauer, M.E. Satellite Remote Sensing of Wetlands. Wetl. Ecol. Manag. 2002, 10, 381–402.

- Shaikh, M.; Green, D.; Cross, H. A Remote Sensing Approach to Determine Environmental Flows for Wetlands of the Lower Darling River, New South Wales, Australia. Int. J. Remote Sens. 2001, 22, 1737–1751.

- Rosso, P.H.; Ustin, S.L.; Hastings, A. Mapping Marshland Vegetation of San Francisco Bay, California, Using Hyperspectral Data. Int. J. Remote Sens. 2005, 26, 5169–5191.

- Pandey, P.C.; Balzter, H.; Srivastava, P.K.; Petropoulos, G.P.; Bhattacharya, B. 21—Future Perspectives and Challenges in Hyperspectral Remote Sensing. In Hyperspectral Remote Sensing; Pandey, P.C., Srivastava, P.K., Balzter, H., Bhattacharya, B., Petropoulos, G.P., Eds.; Earth Observation; Elsevier: Amsterdam, The Netherlands, 2020; pp. 429–439. ISBN 978-0-08-102894-0.

- Carle, M.V.; Wang, L.; Sasser, C.E. Mapping Freshwater Marsh Species Distributions Using WorldView-2 High-Resolution Multispectral Satellite Imagery. Int. J. Remote Sens. 2014, 35, 4698–4716.

- Laba, M.; Downs, R.; Smith, S.; Welsh, S.; Neider, C.; White, S.; Richmond, M.; Philpot, W.; Baveye, P. Mapping Invasive Wetland Plants in the Hudson River National Estuarine Research Reserve Using Quickbird Satellite Imagery. Remote Sens. Environ. 2008, 112, 286–300.

- Norris, G.S.; LaRocque, A.; Leblon, B.; Barbeau, M.A.; Hanson, A.R. Comparing Pixel and Object-Based Approaches for Classifying Multispectral Drone Imagery of a Salt Marsh Restoration and Reference Site. Remote Sens. 2024, 16, 1049.

- Frati, G.; Launeau, P.; Robin, M.; Giraud, M.; Juigner, M.; Debaine, F.; Michon, C. Coastal Sand Dunes Monitoring by Low Vegetation Cover Classification and Digital Elevation Model Improvement Using Synchronized Hyperspectral and Full-Waveform LiDAR Remote Sensing. Remote Sens. 2021, 13, 29.

- Launeau, P.; Giraud, M.; Ba, A.; Moussaoui, S.; Robin, M.; Debaine, F.; Lague, D.; Le Menn, E. Full-Waveform LiDAR Pixel Analysis for Low-Growing Vegetation Mapping of Coastal Foredunes in Western France. Remote Sens. 2018, 10, 669.

- Gramond, D. Requalifier les zones humides continentales: Logiques et paradoxes. Géocarrefour 2013, 88, 247–256.

- Rapinel, S.; Clément, B.; Hubert-Moy, L. Cartographie des zones humides par télédétection: Approche multi-scalaire pour une planification environnementale. Cybergeo Eur. J. Geogr. 2019.

- Massard, O.; Mesnage, C.; Marquet, M. Plan d’actions En Faveur de La Flore Remarquable Du Parc Naturel Régional de Brière; Parc naturel régional de Brière, Conservatoire botanique national de Brest: Brest, France, 2017; p. 244.

- Wilson, K.L.; Wong, M.C.; Devred, E. Comparing Sentinel-2 and WorldView-3 Imagery for Coastal Bottom Habitat Mapping in Atlantic Canada. Remote Sens. 2022, 14, 1254.

- Richter, R.; Schläpfer, D. Geo-Atmospheric Processing of Airborne Imaging Spectrometry Data. Part 2: Atmospheric/Topographic Correction. Int. J. Remote Sens. 2002, 23, 2631–2649.

- Schläpfer, D.; Schaepman, M.; Itten, K. PARGE: Parametric Geocoding Based on GCP-Calibrated Auxiliary Data. Proc. SPIE Int. Soc. Opt. Eng. 1998, 3438, 334–344.

- Harris, A.; Charnock, R.; Lucas, R.M. Hyperspectral Remote Sensing of Peatland Floristic Gradients. Remote Sens. Environ. 2015, 162, 99–111.

- Jarocińska, A.; Niedzielko, J.; Kopeć, D.; Wylazłowska, J.; Omelianska, B.; Charyton, J. Testing Textural Information Base on LiDAR and Hyperspectral Data for Mapping Wetland Vegetation: A Case Study of Warta River Mouth National Park (Poland). Remote Sens. 2023, 15, 3055.

- Gayet, G.; Baptist, F.; Maciejewski, L.; Poncet, R.; Bensettiti, F. Guide de Détermination Des Habitats Terrestres et Marins de La Typologie EUNIS—Version 1.0.; Guides et Protocoles; Agence française pour la biodiversité—AFB: Vincennes, France, 2018; p. 230.

- De Keersmaecker, M.-L. Stratégie d’échantillonnage des données de terrain intégrées dans l’analyse des images satellitaires. Espace Géographique 1987, 16, 195–205.

- Lutra Consulting Limited, MerginMaps Mobile App. 2023. Available online: https://merginmaps.com/docs/misc/licensing/#mergin-maps-mobile-app (accessed on 18 July 2024).

- Lutra Consulting Limited, MerginMaps QGis plugin. 2023. Available online: https://merginmaps.com/docs/misc/licensing/#mergin-maps-qgis-plugin (accessed on 18 July 2024).

- QGIS.org, QGIS Geographic Information System. QGIS Association. 2024. Available online: http://www.qgis.org (accessed on 18 July 2024).

- Trimble TerraSync 2014. Available online: https://www.d3e.fr/gps/Trimble_Terrasync_2016a.html (accessed on 18 July 2024).

- Huete, A.; Didan, K.; Miura, T.; Rodriguez, E.P.; Gao, X.; Ferreira, L.G. Overview of the Radiometric and Biophysical Performance of the MODIS Vegetation Indices. Remote Sens. Environ. 2002, 83, 195–213.

- Rouse, J.W.; Haas, R.H.; Deering, D.W.; Schell, J.A.; Harlan, J.C. Monitoring the Vernal Advancement and Retrogradation (Green Wave Effect) of Natural Vegetation; NASA: Washington, DC, USA, 1974.

- Dash, J.; Curran, P.J. The MERIS Terrestrial Chlorophyll Index. Int. J. Remote Sens. 2004, 25, 5403–5413.

- Gitelson, A.; Viña, A.; Ciganda, V.; Rundquist, D.; Arkebauer, T. Remote Estimation of Canopy Chlorophyll in Crops. Geophys. Res. Lett. 2005, 32, L08403.

- Daughtry, C.; Walthall, C.; Kim, M.; Colstoun, E.B.; McMurtrey, J.E. Estimating Corn Leaf Chlorophyll Concentration from Leaf and Canopy Reflectance. Remote Sens. Environ. 2000, 74, 229–239.

- Gitelson, A.A.; Kaufman, Y.J.; Merzlyak, M.N. Use of a Green Channel in Remote Sensing of Global Vegetation from EOS-MODIS. Remote Sens. Environ. 1996, 58, 289–298.

- Blackburn, G.A. Spectral Indices for Estimating Photosynthetic Pigment Concentrations: A Test Using Senescent Tree Leaves. Int. J. Remote Sens. 1998, 19, 657–675.

- Frampton, W.J.; Dash, J.; Watmough, G.; Milton, E.J. Evaluating the Capabilities of Sentinel-2 for Quantitative Estimation of Biophysical Variables in Vegetation. ISPRS J. Photogramm. Remote Sens. 2013, 82, 83–92.

- Huete, A.R. A Soil-Adjusted Vegetation Index (SAVI). Remote Sens. Environ. 1988, 25, 295–309.

- Launeau, P.; Kassouk, Z.; Debaine, F.; Roy, R.; Mestayer, P.G.; Boulet, C.; Rouaud, J.-M.; Giraud, M. Airborne Hyperspectral Mapping of Trees in an Urban Area. Int. J. Remote Sens. 2017, 38, 1277–1311.

- Gao, B. NDWI—A Normalized Difference Water Index for Remote Sensing of Vegetation Liquid Water from Space. Remote Sens. Environ. 1996, 58, 257–266.

- Launeau, P.; Giraud, M.; Robin, M.; Baltzer, A. Full-Waveform LIDAR Fast Analysis of a Moderately Turbid Bay in Western France. Remote Sens. 2019, 11, 117.

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

- Liaw, A.; Wiener, M. Classification and Regression by RandomForest. R News 2002, 2, 18–22.

- Kuhn, M. Building Predictive Models in R Using the Caret Package. J. Stat. Softw. 2008, 28, 1–26.

- Maxwell, A.; Warner, T.; Fang, F. Implementation of Machine-Learning Classification in Remote Sensing: An Applied Review. Int. J. Remote Sens. 2018, 39, 2784–2817.

- Mohammadpour, P.; Viegas, D.X.; Viegas, C. Vegetation Mapping with Random Forest Using Sentinel 2 and GLCM Texture Feature—A Case Study for Lousã Region, Portugal. Remote Sens. 2022, 14, 4585.

- Wang, X.; Gao, X.; Zhang, Y.; Fei, X.; Chen, Z.; Wang, J.; Zhang, Y.; Lu, X.; Zhao, H. Land-Cover Classification of Coastal Wetlands Using the RF Algorithm for Worldview-2 and Landsat 8 Images. Remote Sens. 2019, 11, 1927.

- Belgiu, M.; Drǎguţ, L. Random Forest in Remote Sensing: A Review of Applications and Future Directions. ISPRS J. Photogramm. Remote Sens. 2016, 114, 24–31.

- Halls, J.; Costin, K. Submerged and Emergent Land Cover and Bathymetric Mapping of Estuarine Habitats Using WorldView-2 and LiDAR Imagery. Remote Sens. 2016, 8, 718.

- van Deventer, H.; Linström, A.; Naidoo, L.; Job, N.; Sieben, E.J.J.; Cho, M.A. Comparison between Sentinel-2 and WorldView-3 Sensors in Mapping Wetland Vegetation Communities of the Grassland Biome of South Africa, for Monitoring under Climate Change. Remote Sens. Appl. Soc. Environ. 2022, 28, 100875.

- Schmitz, O.J.; Buchkowski, R.W.; Burghardt, K.T.; Donihue, C.M. Functional Traits and Trait-Mediated Interactions. Connecting Community-Level Interactions with Ecosystem Functioning. In Advances in Ecological Research; Pawar, S., Woodward, G., Dell, A.I., Eds.; Trait-Based Ecology—From Structure to Function; Academic Press: Cambridge, MA, USA, 2015; Volume 52, pp. 319–343.

- Avolio, M.L.; Forrestel, E.J.; Chang, C.C.; La Pierre, K.J.; Burghardt, K.T.; Smith, M.D. Demystifying Dominant Species. New Phytol. 2019, 223, 1106–1126.

- Lindenmayer, D.; Pierson, J.; Barton, P.; Beger, M.; Branquinho, C.; Calhoun, A.; Caro, T.; Greig, H.; Gross, J.; Heino, J.; et al. A New Framework for Selecting Environmental Surrogates. Sci. Total Environ. 2015, 538, 1029–1038.

- Marcinkowska-Ochtyra, A.; Ochtyra, A.; Raczko, E.; Kopeć, D. Natura 2000 Grassland Habitats Mapping Based on Spectro-Temporal Dimension of Sentinel-2 Images with Machine Learning. Remote Sens. 2023, 15, 1388.

- Mwita, E.; Menz, G.; Misana, S.; Becker, M.; Kisanga, D.; Boehme, B. Mapping Small Wetlands of Kenya and Tanzania Using Remote Sensing Techniques. Int. J. Appl. Earth Obs. Geoinf. 2013, 21, 173–183.

- Poff, N.; Brinson, M.; Day, J. Aquatic Ecosystems & Global Climate Change—Potential Impacts on Inland Freshwater and Coastal Wetland Ecosystems in the United States of America (USA). The Pew Center on Global Climate Change; 1 January 2002; p. 57. Available online: https://www.pewtrusts.org/en/research-and-analysis/reports/2002/01/01/aquatic-ecosystems-and-climate-change-potential-impacts-on-inland-freshwater-and-coastal-wetland-ecosystems-in-the-united-states (accessed on 18 July 2024).

| Parameter | HySpex Mjolnir V-1240 | HySpex Mjolnir S-620 | WorldView-110 Camera | Titan DW 600 Parameter | Titan DW 600 Value |
|---|---|---|---|---|---|
| Sensor | CCD Si | MCT (Hg Cd Te) | / | Channel (nm) | C2: 1064; C3: 535 |
| Pixels | 1240 | 620 | / | Laser aperture (mrad) | C2: 0.35; C3: 0.7 |
| Channels | 160 | 256 | 8 | Operational altitude (m) | 1300 |
| Spectral range (nm) | 410–990 | 970–2500 | 400–1040 | Laser shot frequency (kHz) | 100 |
| Spectral resolution (nm) | 3.0 | 5.1 | / | Scan frequency (Hz) | 70 |
| Sampling per channel (nm) | 3.6 | 6.0 | / | Field of view (°) | 20 |
| Field of view (°) | 20 | 20 | / | Vertical accuracy (cm) | 5–10 |
| Altitude above ground (m) | 3500 | 3500 | 617,000 | Waveform feedback recording (Go/s) | 1 per nanosecond |
| Spatial resolution (m) | 0.94 | 1.89 | 1.24 | Roll compensation | on |
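
The hyperspectral indices listed later in the article are written in terms of reflectance at specific wavelengths (for example R555), so each wavelength has to be matched to a sensor channel. The Python sketch below shows one possible way to do that lookup. It assumes band centres evenly spaced across the spectral range given in the table, which is a simplification: actual band centres should be read from the sensor's header file. The function names `band_centres` and `nearest_band` are hypothetical and do not come from the article.

```python
def band_centres(start_nm, stop_nm, n_channels):
    """Evenly spaced band centres from start_nm to stop_nm (assumed layout)."""
    step = (stop_nm - start_nm) / (n_channels - 1)
    return [start_nm + i * step for i in range(n_channels)]


def nearest_band(wavelength_nm, centres):
    """Index of the band whose centre is closest to wavelength_nm."""
    return min(range(len(centres)), key=lambda i: abs(centres[i] - wavelength_nm))


# VNIR range and channel count taken from the sensor table (410-990 nm, 160 channels).
vnir = band_centres(410.0, 990.0, 160)

# Wavelengths used by the NGLI and IdGL indices.
for target in (501, 555, 602):
    i = nearest_band(target, vnir)
    print(f"R{target} -> band {i}, centred at {vnir[i]:.1f} nm")
```

With about 3.6 nm between channels, the selected band centre lies at most roughly 1.8 nm from the requested wavelength under this evenly spaced assumption.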

| Surveyor | GPS Point Number | Date | Species/Type of Habitat | EUNIS Code | Picture Numbers | Height/Comments |
|---|---|---|---|---|---|---|
| Thomas Lafitte | 7 | 26 June 2023 | Mixed sedge meadow vegetation: Carex elata dominant, with reed canary-grass, Lysimachia and Iris | D5.21 | 292-293-294 | Late flooding, 50 cm, water between the Carex |
| Thomas Lafitte | 15 | 4 July 2023 | Pure reedbed with Phragmites australis | C3.21 | 469-470-471 | 2.30–2.50 m |

| Class of Habitats | Number of ROIs |
|---|---|
| Upper saltmarshes | 5 |
| Common reed (Phragmites) beds | 11 |
| Reed canary-grass (Phalaris) beds | 15 |
| Euro-Siberian perennial amphibious communities | 3 |
| Beds of large Carex species | 10 |
| Closed non-Mediterranean dry acid and neutral grassland | 5 |
| Atlantic and sub-Atlantic humid meadows | 7 |
| Flood swards and related communities | 6 |
| Purple moorgrass (Molinia) meadows and related communities | 4 |
| Willow carr and fen scrub | 4 |
| Atlantic pedunculate oak-birch woods | 5 |
| Crassula | 10 |
| Ludwigia | 10 |

| Dataset | Index | Description | Formula | Reference |
|---|---|---|---|---|
| WV-3, HS | EVI | Enhanced Vegetation Index | 2.5 × (NIR − R)/((NIR + 6 × R − 7.5 × B) + 1) | [35] |
| WV-3, HS | NDVI | Normalised Difference Vegetation Index | (NIR − R)/(NIR + R) | [36] |
| WV-3, HS | MTCI | MERIS Terrestrial Chlorophyll Index | (RE2 − RE1)/(RE1 − R) | [37] |
| WV-3 | CRE | Chlorophyll Red-Edge Index | (NIR/RE1) − 1 | [38] |
| WV-3 | MCARI | Modified Chlorophyll Absorption in Reflectance Index | [(RE1 − R) − 0.2 × (RE1 − G)] × (RE1 − R) | [39] |
| WV-3 | GNDVI | Green Normalised Difference Vegetation Index | (NIR − G)/(NIR + G) | [40] |
| WV-3 | PSSRa | Pigment Specific Simple Ratio | NIR/R | [41] |
| WV-3 | S2REP | Sentinel-2 Red-Edge Position | 705 + 35 × ((((NIR + R)/2) − RE1)/(RE2 − RE1)) | [42] |
| WV-3 | IReCI | Inverted Red-Edge Chlorophyll Index | (NIR − R)/(RE1/RE2) | [42] |
| WV-3 | SAVI | Soil Adjusted Vegetation Index | ((NIR − R)/(NIR + R + 0.428)) × (1 + 0.428) | [43] |
| HS | NGLI | Normalised Green Leaves Index | (R555 − R501)/(R555 + R501) | [44] |
| HS | IdGL | Index Green Leaves | (2 × R555)/(R501 + R602) − 1 | [44] |
| HS | NDGL | Normalised Difference Green Leaves Index | (R922 − R773)/(R922 + R773) | [44] |
| HS | ND ChlaI | Normalised Difference Chl-a Index | (R642 − R675)/(R642 + R675) | [44] |
| HS | Leaves water | / | (R921 − R976)/(R921 + R976) | [20] |
| HS | NDWI | Normalised Difference Water Index | (NIR − SWIR1)/(NIR + SWIR1) | [45] |
| HS | TND Cellulose | Triple Normalised Difference (2 bands) of Cellulose | (R1082 − R1214 + R1274 − R1334 + R1695 − R1773) | Personal communication |
| HS | Ids Water VG | Indices with 3 vegetation water bands | (−R1003 + 2 × R1082 − R121) | Personal communication |
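
As an illustration of how the formulas in the table translate into code, the sketch below implements a few of the broadband indices in Python. It is a minimal example under stated assumptions, not the processing chain used in the article: the function names and the sample reflectance values are hypothetical, inputs are assumed to be surface reflectance between 0 and 1, and no guard against a zero denominator is included.

```python
def ndvi(nir, r):
    """Normalised Difference Vegetation Index: (NIR - R)/(NIR + R)."""
    return (nir - r) / (nir + r)


def gndvi(nir, g):
    """Green NDVI: (NIR - G)/(NIR + G)."""
    return (nir - g) / (nir + g)


def evi(nir, r, b):
    """Enhanced Vegetation Index, with the coefficients given in the table."""
    return 2.5 * (nir - r) / ((nir + 6 * r - 7.5 * b) + 1)


def savi(nir, r, soil_factor=0.428):
    """Soil Adjusted Vegetation Index, with the 0.428 factor given in the table."""
    return ((nir - r) / (nir + r + soil_factor)) * (1 + soil_factor)


def mtci(re2, re1, r):
    """MERIS Terrestrial Chlorophyll Index: (RE2 - RE1)/(RE1 - R)."""
    return (re2 - re1) / (re1 - r)


# Hypothetical reflectance values for a single vegetated pixel.
pixel = {"b": 0.03, "g": 0.08, "r": 0.05, "re1": 0.15, "re2": 0.35, "nir": 0.45}

print("NDVI ", round(ndvi(pixel["nir"], pixel["r"]), 3))
print("GNDVI", round(gndvi(pixel["nir"], pixel["g"]), 3))
print("EVI  ", round(evi(pixel["nir"], pixel["r"], pixel["b"]), 3))
print("SAVI ", round(savi(pixel["nir"], pixel["r"]), 3))
print("MTCI ", round(mtci(pixel["re2"], pixel["re1"], pixel["r"]), 3))
```

Because the functions use only arithmetic operators, they should also work element-wise on NumPy arrays holding whole image bands, provided the arrays share the same shape.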

| Dataset | Spectral Bands | Spectral Indices | Additional Variables | LiDAR Dataset | Total Variables |
|---|---|---|---|---|---|
| WorldView-3 | Coastal Blue, Blue, Green, Yellow, Red, Red edge, Near-IR1, Near-IR2 | EVI; NDVI; MTCI; CRE; MCARI; GNDVI; PSSRa; S2REP; IReCI; SAVI | / | DHM | 19 variables |
| Hyperspectral | / (none are used as is) | EVI; NDVI; MTCI; NGLI; IdGL; NDGL; IdsCellulose; NDChlaI; Ids Water VG; TND Cellulose; Ids Cellulose0; NDWI; Leaves water | Spectral angle mapping in VNIR and SWIR | DHM; DTM; dNCCFWF | 58 variables |

| EUNIS Code (Level 4) | EUNIS Name |
|---|---|
| A2.52 | Upper saltmarshes |
| C3.21 | Common reed (Phragmites) beds |
| C3.26 | Reed canary-grass (Phalaris) beds |
| C3.41 | Euro-Siberian perennial amphibious communities |
| D5.21 | Beds of large Carex species |
| E1.7 | Closed non-Mediterranean dry acid and neutral grassland |
| E3.41 | Atlantic and sub-Atlantic humid meadows |
| E3.44 | Flood swards and related communities |
| E3.51 | Purple moorgrass (Molinia) meadows and related communities |
| F9.2 | Willow carr and fen scrub |
| G1.81 | Atlantic pedunculate oak-birch woods |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lafitte, T.; Robin, M.; Launeau, P.; Debaine, F. Remote Sensing for Mapping Natura 2000 Habitats in the Brière Marshes: Setting Up a Long-Term Monitoring Strategy to Understand Changes. Remote Sens. 2024, 16, 2708. https://doi.org/10.3390/rs16152708