Estimating Maize Crop Height and Aboveground Biomass Using Multi-Source Unmanned Aerial Vehicle Remote Sensing and Optuna-Optimized Ensemble Learning Algorithms

Abstract

1. Introduction

2. Materials and Methods

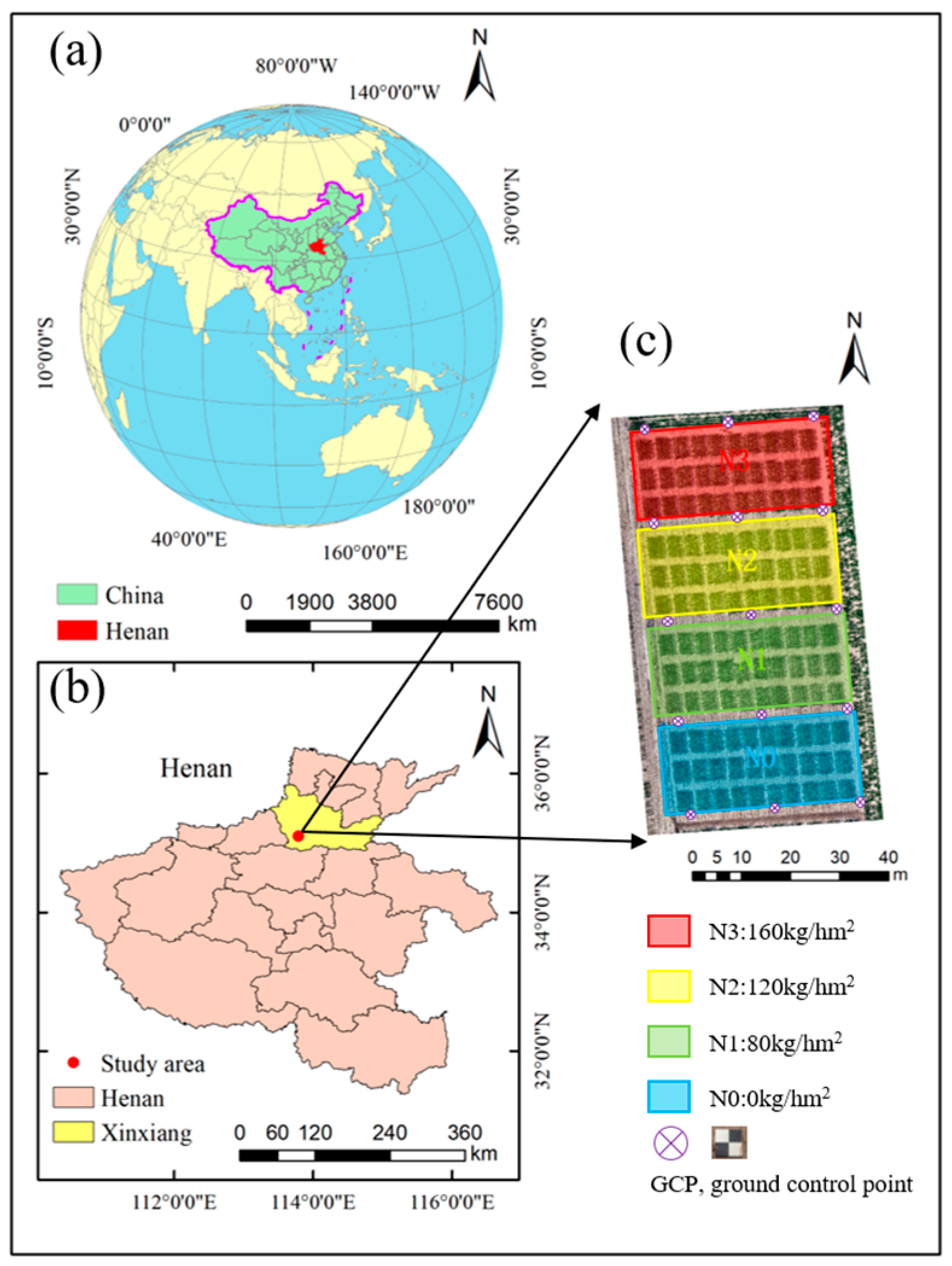

2.1. Study Area

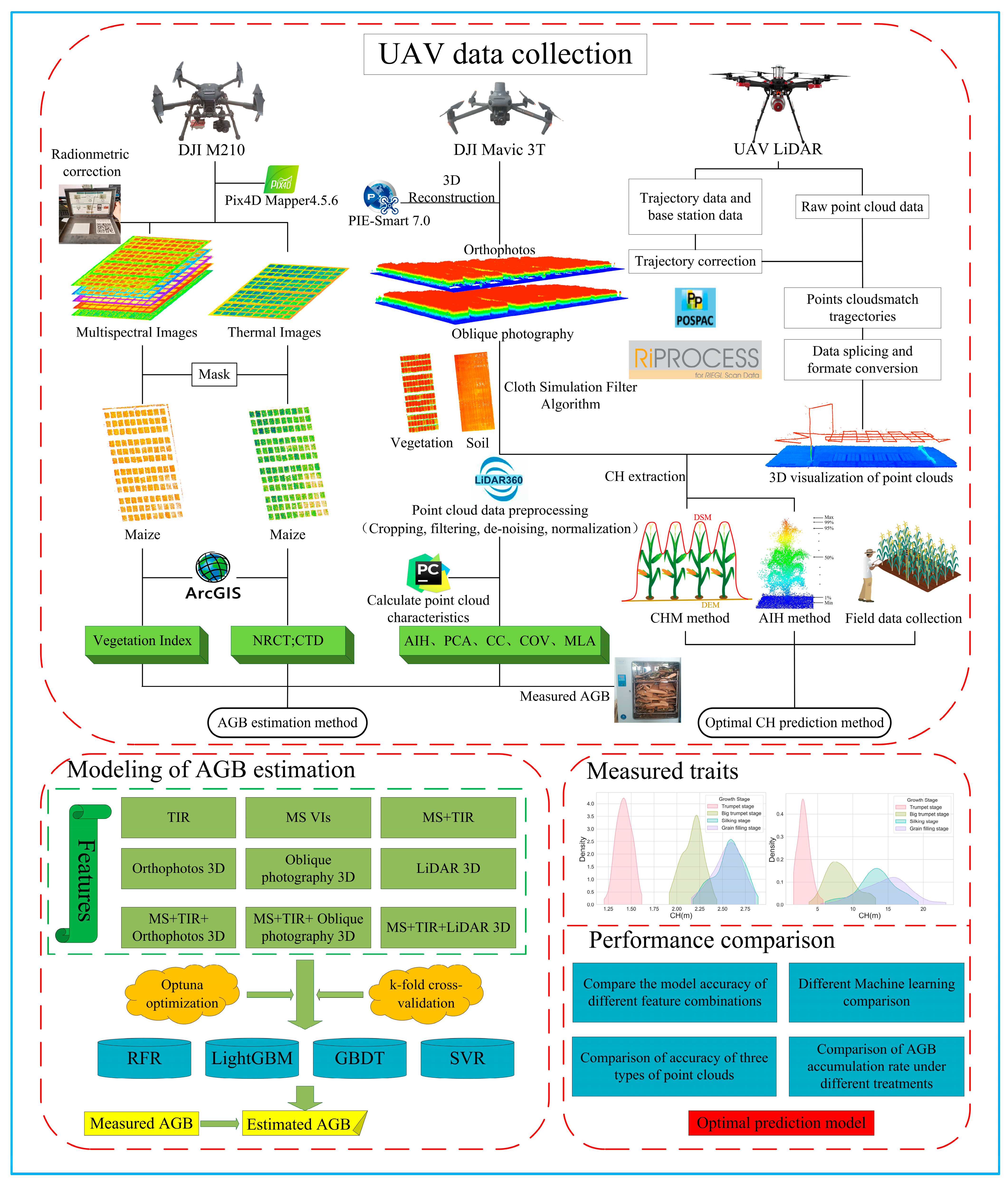

2.2. Data Collection and Feature Extraction

2.2.1. Ground Data Acquisition

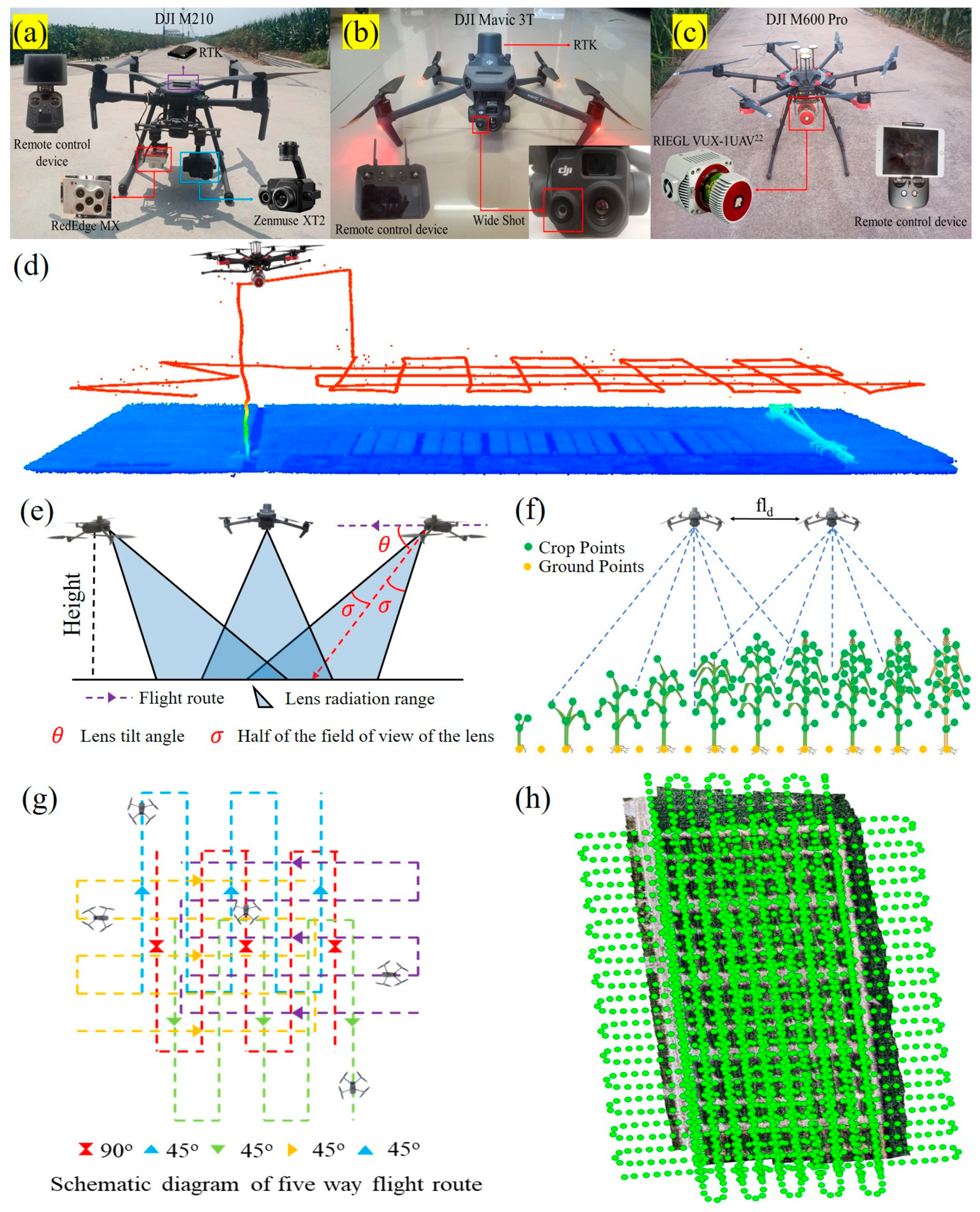

2.2.2. UAV Data Acquisition

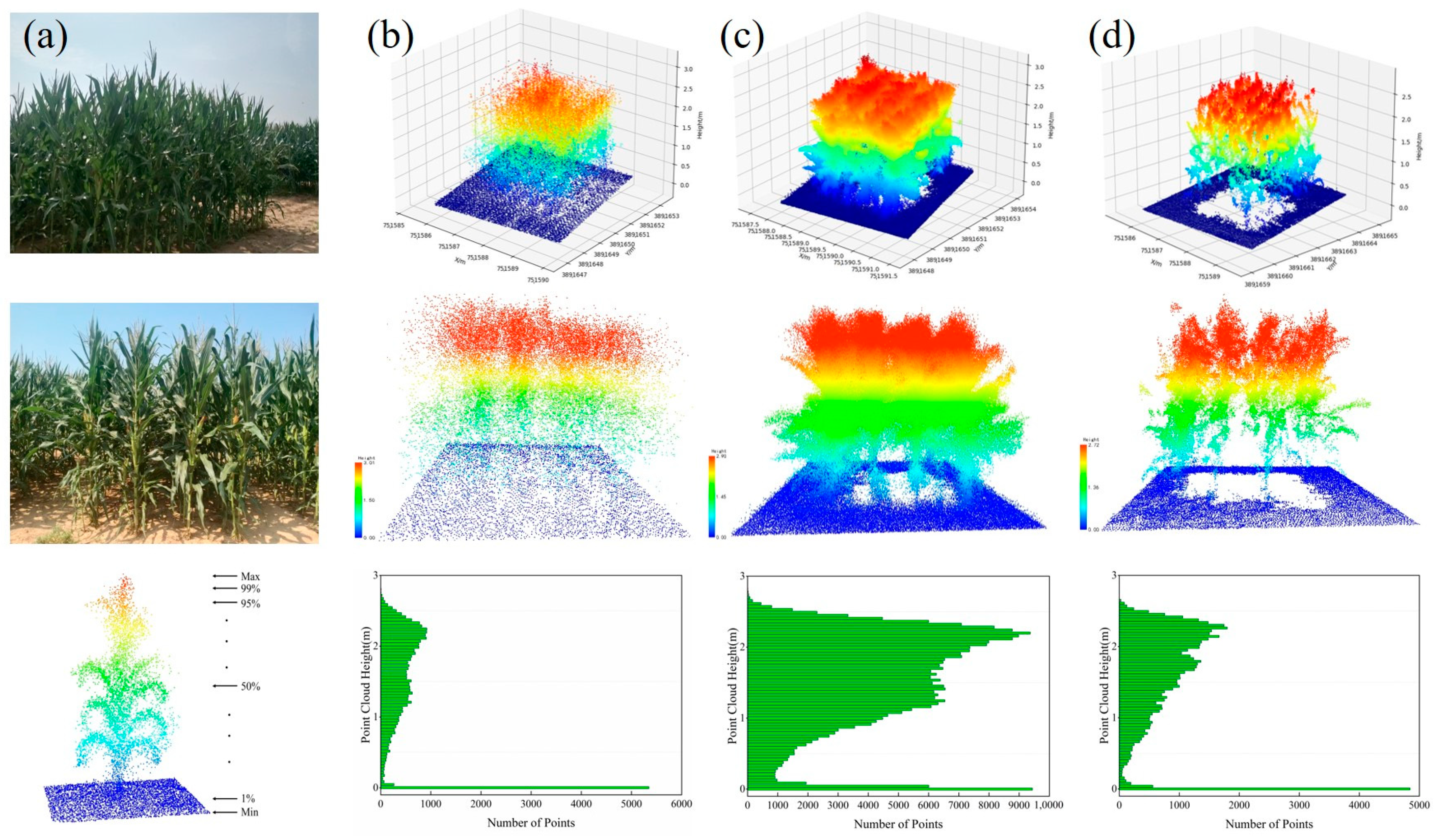

2.2.3. Image Processing and Data Extraction

2.2.4. Canopy Temperature Information

2.3. AGB Model Construction

2.4. Evaluation Metrics
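The accuracy tables in this article report R² and nRMSE. The sketch below shows the standard definitions. It assumes that R² is computed as 1 − SS_res/SS_tot on the validation samples and that nRMSE is the RMSE divided by the mean observed value, in percent. Both are assumptions: some studies instead normalize by the observed range, or report the squared Pearson correlation as R². The function names are illustrative.

```python
import math
from typing import Sequence


def _check(observed: Sequence[float], predicted: Sequence[float]) -> None:
    # Observations and predictions must pair up one-to-one
    if len(observed) != len(predicted) or len(observed) < 2:
        raise ValueError("need two equal-length sequences with at least two values")


def r_squared(observed: Sequence[float], predicted: Sequence[float]) -> float:
    """Coefficient of determination, 1 - SS_res / SS_tot."""
    _check(observed, predicted)
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    if ss_tot == 0:
        raise ValueError("observed values have zero variance")
    return 1.0 - ss_res / ss_tot


def rmse(observed: Sequence[float], predicted: Sequence[float]) -> float:
    """Root-mean-square error in the units of the observations."""
    _check(observed, predicted)
    return math.sqrt(sum((o - p) ** 2 for o, p in zip(observed, predicted)) / len(observed))


def nrmse_percent(observed: Sequence[float], predicted: Sequence[float]) -> float:
    """RMSE as a percentage of the mean observed value (assumed normalization)."""
    error = rmse(observed, predicted)
    mean_obs = sum(observed) / len(observed)
    return 100.0 * error / mean_obs


if __name__ == "__main__":
    obs = [2.0, 4.0, 6.0, 8.0]   # e.g. measured AGB; illustrative values only
    pred = [2.5, 3.5, 6.5, 7.5]  # model predictions; illustrative values only
    print(round(r_squared(obs, pred), 3), round(nrmse_percent(obs, pred), 2))  # 0.95 10.0
```

In this example every residual is ±0.5, so RMSE is 0.5, and dividing by the observed mean of 5 gives an nRMSE of 10%.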

3. Results

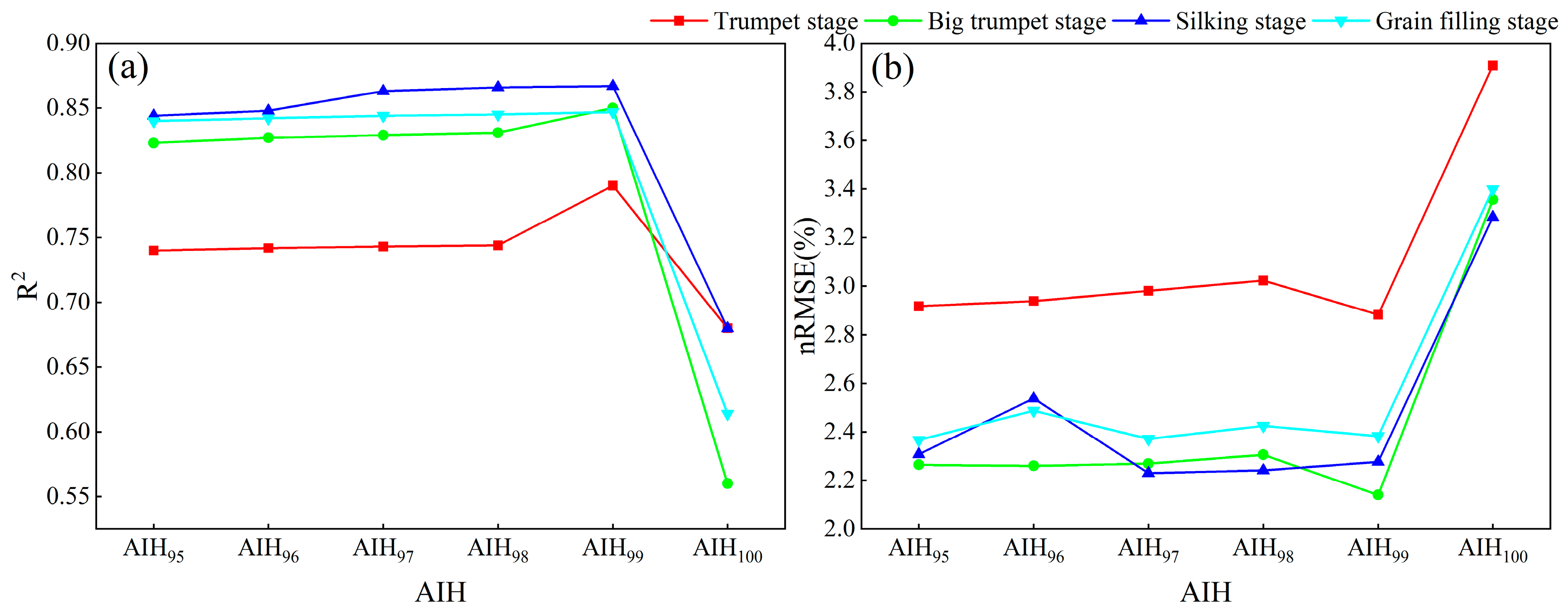

3.1. Extraction of Maize Crop Height at Various Growth Stages
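A common way to derive plot-level crop height from a point cloud is to normalize point elevations to heights above ground and take an upper percentile of those heights. An upper percentile is less sensitive to isolated noise points than the maximum. The sketch below illustrates this general technique and is not presented as the study's procedure. It assumes a single ground elevation per plot, a 0.1 m cutoff that discards ground and near-ground returns, and the 99th percentile by default. These parameters and the helper names are hypothetical.

```python
from typing import Iterable, Sequence


def percentile(values: Sequence[float], q: float) -> float:
    """q-th percentile, interpolating linearly between the closest ranks."""
    if not values:
        raise ValueError("no values to summarize")
    if not 0.0 <= q <= 100.0:
        raise ValueError("q must lie in [0, 100]")
    data = sorted(values)
    pos = (len(data) - 1) * q / 100.0
    lower = int(pos)
    upper = min(lower + 1, len(data) - 1)
    return data[lower] + (data[upper] - data[lower]) * (pos - lower)


def plot_crop_height(point_z: Iterable[float], ground_z: float,
                     q: float = 99.0, min_height: float = 0.1) -> float:
    """Hypothetical plot-level crop height (m) from point elevations (m)."""
    # Normalize elevations to heights above ground and drop near-ground returns
    heights = [z - ground_z for z in point_z if z - ground_z > min_height]
    return percentile(heights, q)


if __name__ == "__main__":
    points = [100.0, 100.05, 100.5, 101.0, 101.5, 102.0]  # illustrative elevations
    print(round(plot_crop_height(points, ground_z=100.0, q=50.0), 3))  # 1.25
```

In practice the ground elevation usually comes from a terrain model sampled under each point, not from a single constant. The percentile level trades robustness to noise against underestimating the tallest plants.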

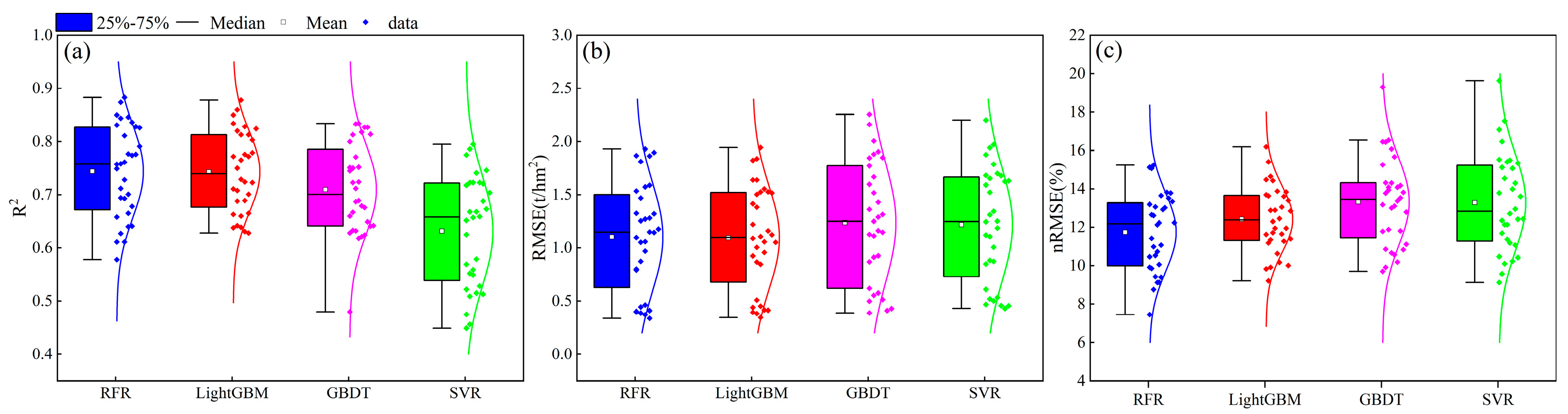

3.2. AGB Estimation for Entire Growth Cycle

3.3. AGB Estimation for Different Growth Stages

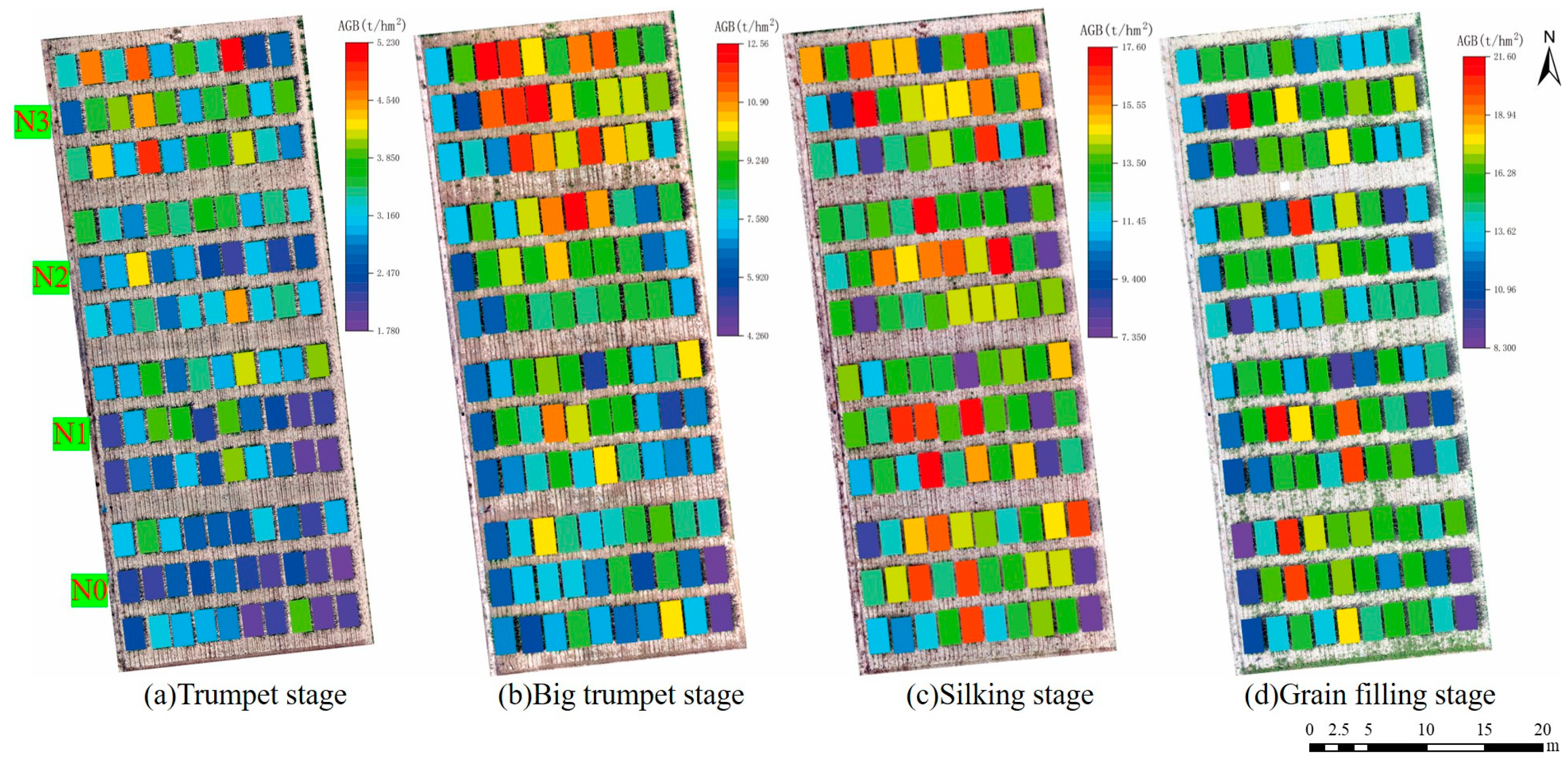

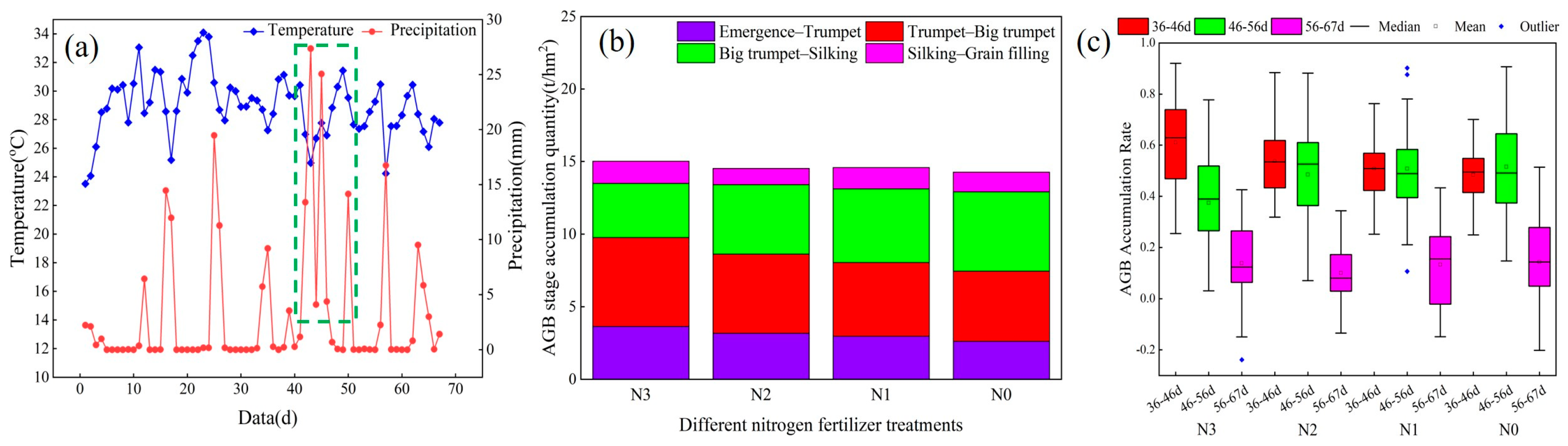

3.4. Spatiotemporal Distribution and Accumulation of AGB of Maize

4. Discussion

4.1. Comparison of Methods for Estimating Maize Crop Height

4.2. Impact of Data Sources and Modeling Algorithms on Biomass Estimation

4.3. AGB Accumulation Rate at Different Growth Stages

4.4. Significance and Constraints of the Study

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Growth Stage | Features | RFR R² | RFR nRMSE (%) | LightGBM R² | LightGBM nRMSE (%) | GBDT R² | GBDT nRMSE (%) | SVR R² | SVR nRMSE (%) |
|---|---|---|---|---|---|---|---|---|---|
| Trumpet stage | MS | 0.757 | 13.19 | 0.711 | 13.69 | 0.652 | 15.52 | 0.628 | 16.45 |
|  | MS+TIR | 0.761 | 13.06 | 0.765 | 12.88 | 0.667 | 15.25 | 0.643 | 16.26 |
|  | Nadir photography 3D | 0.611 | 15.12 | 0.638 | 15.40 | 0.633 | 14.32 | 0.509 | 19.64 |
|  | Oblique photography 3D | 0.694 | 12.92 | 0.724 | 12.45 | 0.689 | 11.79 | 0.659 | 16.46 |
|  | LiDAR 3D | 0.578 | 15.23 | 0.660 | 13.61 | 0.480 | 19.30 | 0.515 | 17.54 |
|  | MS+TIR+Nadir photography 3D | 0.776 | 13.34 | 0.779 | 11.95 | 0.712 | 14.17 | 0.688 | 15.07 |
|  | MS+TIR+Oblique photography 3D | 0.811 | 12.24 | 0.820 | 11.27 | 0.771 | 12.79 | 0.723 | 14.30 |
|  | MS+TIR+LiDAR 3D | 0.828 | 11.07 | 0.813 | 11.39 | 0.752 | 11.81 | 0.721 | 15.33 |
| Big trumpet stage | MS | 0.758 | 12.65 | 0.772 | 14.48 | 0.745 | 13.17 | 0.668 | 13.79 |
|  | MS+TIR | 0.777 | 12.13 | 0.776 | 12.30 | 0.718 | 10.47 | 0.751 | 13.00 |
|  | Nadir photography 3D | 0.627 | 15.07 | 0.642 | 14.65 | 0.632 | 14.09 | 0.475 | 15.12 |
|  | Oblique photography 3D | 0.693 | 13.02 | 0.701 | 13.05 | 0.679 | 13.53 | 0.549 | 13.99 |
|  | LiDAR 3D | 0.701 | 13.79 | 0.689 | 11.72 | 0.676 | 15.67 | 0.579 | 15.49 |
|  | MS+TIR+Nadir photography 3D | 0.831 | 9.85 | 0.834 | 10.62 | 0.800 | 9.70 | 0.723 | 12.44 |
|  | MS+TIR+Oblique photography 3D | 0.846 | 10.06 | 0.813 | 11.95 | 0.833 | 10.66 | 0.741 | 11.18 |
|  | MS+TIR+LiDAR 3D | 0.874 | 9.13 | 0.803 | 12.85 | 0.827 | 10.83 | 0.746 | 11.07 |
| Silking stage | MS | 0.749 | 10.46 | 0.750 | 11.62 | 0.723 | 13.78 | 0.657 | 12.35 |
|  | MS+TIR | 0.774 | 9.914 | 0.772 | 11.18 | 0.750 | 13.10 | 0.668 | 12.14 |
|  | Nadir photography 3D | 0.658 | 11.42 | 0.639 | 12.89 | 0.618 | 16.44 | 0.456 | 14.55 |
|  | Oblique photography 3D | 0.640 | 13.82 | 0.631 | 14.43 | 0.624 | 16.08 | 0.552 | 12.95 |
|  | LiDAR 3D | 0.665 | 10.98 | 0.665 | 13.84 | 0.641 | 14.09 | 0.528 | 12.42 |
|  | MS+TIR+Nadir photography 3D | 0.844 | 8.75 | 0.850 | 10.17 | 0.813 | 10.85 | 0.775 | 10.10 |
|  | MS+TIR+Oblique photography 3D | 0.850 | 9.43 | 0.860 | 9.83 | 0.834 | 10.56 | 0.786 | 9.12 |
|  | MS+TIR+LiDAR 3D | 0.883 | 7.45 | 0.878 | 9.20 | 0.827 | 11.11 | 0.795 | 9.57 |
| Grain-filling stage | MS | 0.712 | 12.61 | 0.708 | 13.63 | 0.660 | 13.94 | 0.522 | 12.14 |
|  | MS+TIR | 0.728 | 12.23 | 0.729 | 11.35 | 0.687 | 13.37 | 0.569 | 12.71 |
|  | Nadir photography 3D | 0.611 | 13.65 | 0.663 | 13.88 | 0.621 | 16.54 | 0.449 | 15.40 |
|  | Oblique photography 3D | 0.641 | 13.51 | 0.688 | 16.19 | 0.649 | 14.31 | 0.559 | 11.69 |
|  | LiDAR 3D | 0.678 | 10.53 | 0.628 | 13.39 | 0.642 | 13.82 | 0.513 | 13.61 |
|  | MS+TIR+Nadir photography 3D | 0.791 | 10.73 | 0.723 | 11.65 | 0.724 | 11.88 | 0.673 | 11.37 |
|  | MS+TIR+Oblique photography 3D | 0.836 | 9.117 | 0.828 | 10.01 | 0.818 | 10.19 | 0.723 | 10.23 |
|  | MS+TIR+LiDAR 3D | 0.826 | 9.40 | 0.824 | 9.91 | 0.814 | 9.91 | 0.704 | 10.42 |

References

1. Holman, F.; Riche, A.; Michalski, A.; Castle, M.; Wooster, M.; Hawkesford, M. High Throughput Field Phenotyping of Wheat Plant Height and Growth Rate in Field Plot Trials Using UAV Based Remote Sensing. Remote Sens. 2016, 8, 1031.
2. Shu, M.; Shen, M.; Dong, Q.; Yang, X.; Li, B.; Ma, Y. Estimating the Maize Above-Ground Biomass by Constructing the Tridimensional Concept Model Based on UAV-Based Digital and Multi-Spectral Images. Field Crops Res. 2022, 282, 108491.
3. Che, Y.; Wang, Q.; Xie, Z.; Li, S.; Zhu, J.; Li, B.; Ma, Y. High-Quality Images and Data Augmentation Based on Inverse Projection Transformation Significantly Improve the Estimation Accuracy of Biomass and Leaf Area Index. Comput. Electron. Agric. 2023, 212, 108144.
4. Jiang, J.; Atkinson, P.M.; Chen, C.; Cao, Q.; Tian, Y.; Zhu, Y.; Liu, X.; Cao, W. Combining UAV and Sentinel-2 Satellite Multi-Spectral Images to Diagnose Crop Growth and N Status in Winter Wheat at the County Scale. Field Crops Res. 2023, 294, 108860.
5. Yue, J.; Yang, G.; Tian, Q.; Feng, H.; Xu, K.; Zhou, C. Estimate of Winter-Wheat Above-Ground Biomass Based on UAV Ultrahigh-Ground-Resolution Image Textures and Vegetation Indices. ISPRS J. Photogramm. Remote Sens. 2019, 150, 226–244.
6. Li, Z.; Zhao, Y.; Taylor, J.; Gaulton, R.; Jin, X.; Song, X.; Li, Z.; Meng, Y.; Chen, P.; Feng, H.; et al. Comparison and Transferability of Thermal, Temporal and Phenological-Based In-Season Predictions of Above-Ground Biomass in Wheat Crops from Proximal Crop Reflectance Data. Remote Sens. Environ. 2022, 273, 112967.
7. Jiang, F.; Kutia, M.; Ma, K.; Chen, S.; Long, J.; Sun, H. Estimating the Aboveground Biomass of Coniferous Forest in Northeast China Using Spectral Variables, Land Surface Temperature and Soil Moisture. Sci. Total Environ. 2021, 785, 147335.
8. Pena, J.M.; de Castro, A.; Torres-Sanchez, J.; Andujar, D.; San Martin, C.; Dorado, J.; Fernandez-Quintanilla, C.; Lopez-Granados, F. Estimating Tree Height and Biomass of a Poplar Plantation with Image-Based UAV Technology. AIMS Agric. Food 2018, 3, 313–326.
9. Weiss, M.; Jacob, F.; Duveiller, G. Remote Sensing for Agricultural Applications: A Meta-Review. Remote Sens. Environ. 2020, 236, 111402.
10. Maimaitijiang, M.; Sagan, V.; Sidike, P.; Maimaitiyiming, M.; Hartling, S.; Peterson, K.T.; Maw, M.J.W.; Shakoor, N.; Mockler, T.; Fritschi, F.B. Vegetation Index Weighted Canopy Volume Model (CVMVI) for Soybean Biomass Estimation from Unmanned Aerial System-Based RGB Imagery. ISPRS J. Photogramm. Remote Sens. 2019, 151, 27–41.
11. Itakura, K.; Hosoi, F. Estimation of Leaf Inclination Angle in Three-Dimensional Plant Images Obtained from Lidar. Remote Sens. 2019, 11, 344.
12. Oehmcke, S.; Li, L.; Trepekli, K.; Revenga, J.C.; Nord-Larsen, T.; Gieseke, F.; Igel, C. Deep Point Cloud Regression for Above-Ground Forest Biomass Estimation from Airborne LiDAR. Remote Sens. Environ. 2024, 302, 113968.
13. Wang, Y.; Wang, J.; Niu, L.; Chang, S.; Sun, L. Comparative Analysis of Extraction Algorithms for Crown Volume and Surface Area Using UAV Tilt Photogrammetry. J. For. Eng. 2022, 7, 166–173.
14. Kachamba, D.J.; Orka, H.O.; Gobakken, T.; Eid, T.; Mwase, W. Biomass Estimation Using 3D Data from Unmanned Aerial Vehicle Imagery in a Tropical Woodland. Remote Sens. 2016, 8, 968.
15. Hassan, M.A.; Yang, M.; Fu, L.; Rasheed, A.; Zheng, B.; Xia, X.; Xiao, Y.; He, Z. Accuracy Assessment of Plant Height Using an Unmanned Aerial Vehicle for Quantitative Genomic Analysis in Bread Wheat. Plant Methods 2019, 15, 37.
16. Gu, Y.; Wang, Y.; Guo, T.; Guo, C.; Wang, X.; Jiang, C.; Cheng, T.; Zhu, Y.; Cao, W.; Chen, Q.; et al. Assessment of the Influence of UAV-Borne LiDAR Scan Angle and Flight Altitude on the Estimation of Wheat Structural Metrics with Different Leaf Angle Distributions. Comput. Electron. Agric. 2024, 220, 108858.
17. Li, B.; Xu, X.; Zhang, L.; Han, J.; Bian, C.; Li, G.; Liu, J.; Jin, L. Above-Ground Biomass Estimation and Yield Prediction in Potato by Using UAV-Based RGB and Hyperspectral Imaging. ISPRS J. Photogramm. Remote Sens. 2020, 162, 161–172.
18. Chang, A.; Jung, J.; Maeda, M.M.; Landivar, J. Crop Height Monitoring with Digital Imagery from Unmanned Aerial System (UAS). Comput. Electron. Agric. 2017, 141, 232–237.
19. Wang, D.; Li, R.; Zhu, B.; Liu, T.; Sun, C.; Guo, W. Estimation of Wheat Plant Height and Biomass by Combining UAV Imagery and Elevation Data. Agriculture 2023, 13, 9.
20. Hu, P.; Chapman, S.C.; Wang, X.; Potgieter, A.; Duan, T.; Jordan, D.; Guo, Y.; Zheng, B. Estimation of Plant Height Using a High Throughput Phenotyping Platform Based on Unmanned Aerial Vehicle and Self-Calibration: Example for Sorghum Breeding. Eur. J. Agron. 2018, 95, 24–32.
21. Lu, J.; Cheng, D.; Geng, C.; Zhang, Z.; Xiang, Y.; Hu, T. Combining Plant Height, Canopy Coverage and Vegetation Index from UAV-Based RGB Images to Estimate Leaf Nitrogen Concentration of Summer Maize. Biosyst. Eng. 2021, 202, 42–54.
22. Jimenez-Berni, J.A.; Deery, D.M.; Rozas-Larraondo, P.; Condon, A.T.G.; Rebetzke, G.J.; James, R.A.; Bovill, W.D.; Furbank, R.T.; Sirault, X.R. High Throughput Determination of Plant Height, Ground Cover, and Above-Ground Biomass in Wheat with LiDAR. Front. Plant Sci. 2018, 9, 237.
23. Sudu, B.; Rong, G.; Guga, S.; Li, K.; Zhi, F.; Guo, Y.; Zhang, J.; Bao, Y. Retrieving SPAD Values of Summer Maize Using UAV Hyperspectral Data Based on Multiple Machine Learning Algorithm. Remote Sens. 2022, 14, 5407.
24. Chen, L.; He, A.; Xu, Z.; Li, B.; Zhang, H.; Li, G.; Guo, X.; Li, Z. Mapping Aboveground Biomass of Moso Bamboo (Phyllostachys pubescens) Forests under Pantana phyllostachysae Chao-Induced Stress Using Sentinel-2 Imagery. Ecol. Indic. 2024, 158, 111564.
25. Zhang, Y.; Wang, L.; Chen, X.; Liu, Y.; Wang, S.; Wang, L. Prediction of Winter Wheat Yield at County Level in China Using Ensemble Learning. Prog. Phys. Geogr. Earth Environ. 2022, 46, 676–696.
26. Zhang, S.-H.; He, L.; Duan, J.-Z.; Zang, S.-L.; Yang, T.-C.; Schulthess, U.R.S.; Guo, T.-C.; Wang, C.-Y.; Feng, W. Aboveground Wheat Biomass Estimation from a Low-Altitude UAV Platform Based on Multimodal Remote Sensing Data Fusion with the Introduction of Terrain Factors. Precis. Agric. 2024, 25, 119–145.
27. Yan, X.; Li, J.; Smith, A.R.; Yang, D.; Ma, T.; Su, Y.; Shao, J. Evaluation of Machine Learning Methods and Multi-Source Remote Sensing Data Combinations to Construct Forest Above-Ground Biomass Models. Int. J. Digit. Earth 2023, 16, 4471–4491.
28. Zhang, L.; Zhang, Z.; Luo, Y.; Cao, J.; Xie, R.; Li, S. Integrating Satellite-Derived Climatic and Vegetation Indices to Predict Smallholder Maize Yield Using Deep Learning. Agric. For. Meteorol. 2021, 311, 108666.
29. Guo, Y.; Xiao, Y.; Hao, F.; Zhang, X.; Chen, J.; de Beurs, K.; He, Y.; Fu, Y.H. Comparison of Different Machine Learning Algorithms for Predicting Maize Grain Yield Using UAV-Based Hyperspectral Images. Int. J. Appl. Earth Obs. Geoinf. 2023, 124, 103528.
30. Camps-Valls, G.; Campos-Taberner, M.; Moreno-Martinez, A.; Walther, S.; Duveiller, G.; Cescatti, A.; Mahecha, M.D.; Munoz-Mari, J.; Javier Garcia-Haro, F.; Guanter, L.; et al. A Unified Vegetation Index for Quantifying the Terrestrial Biosphere. Sci. Adv. 2021, 7, eabc7447.
31. Gitelson, A.A.; Kaufman, Y.J.; Stark, R.; Rundquist, D. Novel Algorithms for Remote Estimation of Vegetation Fraction. Remote Sens. Environ. 2002, 80, 76–87.
32. Xu, R.; Zhao, S.; Ke, Y. A Simple Phenology-Based Vegetation Index for Mapping Invasive Spartina Alterniflora Using Google Earth Engine. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 190–201.
33. Zhao, D.; Zhen, J.; Zhang, Y.; Miao, J.; Shen, Z.; Jiang, X.; Wang, J.; Jiang, J.; Tang, Y.; Wu, G. Mapping Mangrove Leaf Area Index (LAI) by Combining Remote Sensing Images with PROSAIL-D and XGBoost Methods. Remote Sens. Ecol. Conserv. 2023, 9, 370–389.
34. Jelowicki, L.; Sosnowicz, K.; Ostrowski, W.; Osinska-Skotak, K.; Bakula, K. Evaluation of Rapeseed Winter Crop Damage Using UAV-Based Multispectral Imagery. Remote Sens. 2020, 12, 2618.
35. Zhang, X.; Zhang, F.; Qi, Y.; Deng, L.; Wang, X.; Yang, S. New Research Methods for Vegetation Information Extraction Based on Visible Light Remote Sensing Images from an Unmanned Aerial Vehicle (UAV). Int. J. Appl. Earth Obs. Geoinf. 2019, 78, 215–226.
36. Resende, E.L.; Bruzi, A.T.; Cardoso, E.d.S.; Carneiro, V.Q.; Pereira de Souza, V.A.; Frois Correa Barros, P.H.; Pereira, R.R. High-Throughput Phenotyping: Application in Maize Breeding. AgriEngineering 2024, 6, 1078–1092.
37. Maimaitijiang, M.; Sagan, V.; Sidike, P.; Hartling, S.; Esposito, F.; Fritschi, F.B. Soybean Yield Prediction from UAV Using Multimodal Data Fusion and Deep Learning. Remote Sens. Environ. 2020, 237, 111599.
38. Jiang, Z.; Yang, S.; Dong, S.; Pang, Q.; Smith, P.; Abdalla, M.; Zhang, J.; Wang, G.; Xu, Y. Simulating Soil Salinity Dynamics, Cotton Yield and Evapotranspiration under Drip Irrigation by Ensemble Machine Learning. Front. Plant Sci. 2023, 14, 1143462.
39. Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.
40. Jian, Z.; Tianjin, X.I.E.; Wanneng, Y.; Guangsheng, Z. Research Status and Prospect on Height Estimation of Field Crop Using Near-Field Remote Sensing Technology. Smart Agric. 2021, 3, 1.
41. Liu, T.; Zhu, S.; Yang, T.; Zhang, W.; Xu, Y.; Zhou, K.; Wu, W.; Zhao, Y.; Yao, Z.; Yang, G.; et al. Maize Height Estimation Using Combined Unmanned Aerial Vehicle Oblique Photography and LIDAR Canopy Dynamic Characteristics. Comput. Electron. Agric. 2024, 218, 108685.
42. Zhou, J.; Zhang, R.; Guo, J.; Dai, J.; Zhang, J.; Zhang, L.; Miao, Y. Estimation of Aboveground Biomass of Senescence Grassland in China’s Arid Region Using Multi-Source Data. Sci. Total Environ. 2024, 918, 170602.
43. Zhang, C.; Zhu, X.; Li, M.; Xue, Y.; Qin, A.; Gao, G.; Wang, M.; Jiang, Y. Utilization of the Fusion of Ground-Space Remote Sensing Data for Canopy Nitrogen Content Inversion in Apple Orchards. Horticulturae 2023, 9, 1085.
44. Fei, S.; Hassan, M.A.; Xiao, Y.; Su, X.; Chen, Z.; Cheng, Q.; Duan, F.; Chen, R.; Ma, Y. UAV-Based Multi-Sensor Data Fusion and Machine Learning Algorithm for Yield Prediction in Wheat. Precis. Agric. 2023, 24, 187–212.
45. Fei, S.; Xiao, S.; Li, Q.; Shu, M.; Zhai, W.; Xiao, Y.; Chen, Z.; Yu, H.; Ma, Y. Enhancing Leaf Area Index and Biomass Estimation in Maize with Feature Augmentation from Unmanned Aerial Vehicle-Based Nadir and Cross-Circling Oblique Photography. Comput. Electron. Agric. 2023, 215, 108462.
46. Yu, H.; Li, S.; Ding, J.; Yang, T.; Wang, Y. Water Use Efficiency and Its Drivers of Two Typical Cash Crops in an Arid Area of Northwest China. Agric. Water Manag. 2023, 287, 108433.
47. Varga, I.; Radocaj, D.; Jurisic, M.; Kulundzic, A.M.; Antunovic, M. Prediction of Sugar Beet Yield and Quality Parameters with Varying Nitrogen Fertilization Using Ensemble Decision Trees and Artificial Neural Networks. Comput. Electron. Agric. 2023, 212, 108076.
48. Shang, W.; Zhang, Z.; Zheng, E.; Liu, M. Nitrogen-Water Coupling Affects Nitrogen Utilization and Yield of Film-Mulched Maize under Drip Irrigation. J. Irrig. Drain. 2019, 38, 49–55.
49. Yang, W.; Parsons, D.; Mao, X. Exploring Limiting Factors for Maize Growth in Northeast China and Potential Coping Strategies. Irrig. Sci. 2023, 41, 321–335.
50. Feng, G.Z.; Wang, Y.; Yan, L.; Zhou, X.; Wang, S.J.; Gao, Q.; Mi, G.H.; Yu, H.; Cui, Z.L. Effects of Nitrogen and Three Soil Types on Maize (Zea mays L.) Grain Yield in Northeast China. Appl. Ecol. Environ. Res. 2019, 17, 4229–4243.
51. Li, L.; Zhang, J.; Dong, S.; Liu, P.; Zhao, B.; Yang, J. Characteristics of Accumulation, Transition and Distribution of Assimilate in Summer Maize Varieties with Different Plant Height. Acta Agron. Sin. 2012, 38, 1080–1087.

| Data | Growth Stage | Number of Images | Number of Points | Maximum Density (points/m²) | Average Density (points/m²) |
|---|---|---|---|---|---|
| Nadir photography | Trumpet | 279 | 14,145,676 | 16,152 | 5206.36 |
|  | Big trumpet | 306 | 11,727,280 | 10,404 | 4278.47 |
|  | Silking | 308 | 5,767,643 | 7006 | 2133.79 |
|  | Grain-filling | 305 | 9,870,493 | 9411 | 3705.14 |
| Oblique photography | Trumpet | 1534 | 50,155,628 | 44,924 | 18,365.3 |
|  | Big trumpet | 1526 | 45,023,633 | 48,450 | 16,207.2 |
|  | Silking | 1774 | 46,439,446 | 69,036 | 17,060.8 |
|  | Grain-filling | 1772 | 43,336,920 | 48,793 | 15,770.3 |
| LiDAR | Trumpet | / | 3,471,345 | 3266 | 1260.93 |
|  | Big trumpet | / | 2,877,150 | 3510 | 1037.93 |
|  | Silking | / | 3,488,299 | 4224 | 1366.89 |
|  | Grain-filling | / | 4,522,743 | 4579 | 1636.9 |

| Features | Formula | Reference |
|---|---|---|
| Kernel normalized difference vegetation index (kNDVI) |  | [30] |
| Vegetation index green (VIG) |  | [31] |
| Ratio vegetation index (RVI) |  | [6] |
| Green-red normalized difference vegetation index (GRNDVI) |  | [32] |
| Red-edge renormalized difference vegetation index (RDVI-REG) |  | [33] |
| Optimized soil-adjusted vegetation index (OSAVI) |  | [34] |
| Red green blue vegetation index (RGBVI) |  | [35] |
| Visible-band-difference vegetation index (VARI) |  | [36] |
| Wide dynamic range vegetation index (WDRVI) |  | [28] |
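The feature table lists the vegetation indices by name and reference but gives no formulas. For orientation, the sketch below computes them from per-plot mean reflectances using their commonly published forms:

- kNDVI = tanh(NDVI²), which follows when the kernel length scale is set to the mean of NIR and red.
- OSAVI uses a 0.16 soil term.
- WDRVI uses a weighting coefficient of 0.1.
- RDVI-REG is taken as the renormalized difference with the red-edge band substituted for red.

These forms are assumptions. The study's exact variants and band definitions may differ, and the function name is illustrative.

```python
import math


def vegetation_indices(blue: float, green: float, red: float,
                       red_edge: float, nir: float, wdrvi_alpha: float = 0.1) -> dict:
    """Commonly published forms of the indices in the feature table (assumed variants)."""
    ndvi = (nir - red) / (nir + red)
    return {
        # kNDVI with sigma = 0.5 * (NIR + red) simplifies to tanh(NDVI^2)
        "kNDVI": math.tanh(ndvi ** 2),
        "VIG": (green - red) / (green + red),
        "RVI": nir / red,
        "GRNDVI": (nir - (green + red)) / (nir + (green + red)),
        # Renormalized difference with the red-edge band in place of red (assumption)
        "RDVI-REG": (nir - red_edge) / math.sqrt(nir + red_edge),
        "OSAVI": (nir - red) / (nir + red + 0.16),
        "RGBVI": (green ** 2 - blue * red) / (green ** 2 + blue * red),
        "VARI": (green - red) / (green + red - blue),
        "WDRVI": (wdrvi_alpha * nir - red) / (wdrvi_alpha * nir + red),
    }


if __name__ == "__main__":
    # Illustrative reflectances for a green canopy, not values from the study
    vi = vegetation_indices(blue=0.05, green=0.2, red=0.1, red_edge=0.3, nir=0.5)
    for name, value in vi.items():
        print(f"{name}: {value:.3f}")
```

Computing all indices from the same band means keeps them comparable across plots. The band arguments would typically be plot-averaged reflectances from the calibrated multispectral orthomosaic.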

| Growth Stage | dx (m) | dy (m) | dz (m) | 3D Error (m) | Vertical Error (m) | Horizontal Error (m) |
|---|---|---|---|---|---|---|
| Trumpet stage | 0.057 | 0.056 | 0.084 | 0.116 | 0.084 | 0.080 |
| Big trumpet stage | 0.059 | 0.058 | 0.057 | 0.101 | 0.057 | 0.083 |
| Silking stage | 0.037 | 0.047 | 0.034 | 0.069 | 0.034 | 0.06 |
| Grain-filling stage | 0.062 | 0.053 | 0.070 | 0.108 | 0.070 | 0.082 |
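The horizontal and 3D errors in the table are consistent with combining the per-axis errors in quadrature: horizontal = √(dx² + dy²) and 3D = √(dx² + dy² + dz²), with the vertical error equal to dz. The sketch below recomputes these summaries, assuming dx, dy, and dz are per-axis RMSEs at the check points. The per-axis values are already rounded, so the recomputed figures agree with the reported ones only to within about 0.001 m. The function and constant names are illustrative.

```python
import math


def gcp_error_summary(dx: float, dy: float, dz: float) -> dict:
    """Combine per-axis errors (m) into horizontal, vertical, and 3D errors."""
    horizontal = math.hypot(dx, dy)        # planimetric error
    three_d = math.hypot(horizontal, dz)   # equals sqrt(dx^2 + dy^2 + dz^2)
    return {"horizontal": horizontal, "vertical": abs(dz), "3d": three_d}


# Per-axis errors and reported (horizontal, 3D) errors copied from the table
REPORTED = {
    "Trumpet": ((0.057, 0.056, 0.084), (0.080, 0.116)),
    "Big trumpet": ((0.059, 0.058, 0.057), (0.083, 0.101)),
    "Silking": ((0.037, 0.047, 0.034), (0.060, 0.069)),
    "Grain-filling": ((0.062, 0.053, 0.070), (0.082, 0.108)),
}

if __name__ == "__main__":
    for stage, ((dx, dy, dz), (h_rep, d3_rep)) in REPORTED.items():
        s = gcp_error_summary(dx, dy, dz)
        print(f"{stage}: horizontal {s['horizontal']:.3f} (reported {h_rep:.3f}), "
              f"3D {s['3d']:.3f} (reported {d3_rep:.3f})")
```

For example, the big trumpet stage gives a recomputed 3D error of about 0.1005 m against the reported 0.101 m. That gap is consistent with rounding of the per-axis inputs rather than a different formula.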

| Data Type | RFR R² | RFR nRMSE (%) | LightGBM R² | LightGBM nRMSE (%) | GBDT R² | GBDT nRMSE (%) | SVR R² | SVR nRMSE (%) |
|---|---|---|---|---|---|---|---|---|
| TIR | 0.797 | 24.17 | 0.809 | 12.66 | 0.780 | 13.57 | 0.734 | 28.53 |
| MS | 0.850 | 19.50 | 0.857 | 10.76 | 0.852 | 9.299 | 0.836 | 20.98 |
| MS+TIR | 0.884 | 18.42 | 0.881 | 9.78 | 0.866 | 10.51 | 0.841 | 21.96 |
| Nadir photography 3D | 0.853 | 20.48 | 0.863 | 10.47 | 0.846 | 10.71 | 0.829 | 21.02 |
| Oblique photography 3D | 0.889 | 19.38 | 0.900 | 8.22 | 0.880 | 8.990 | 0.858 | 21.18 |
| LiDAR 3D | 0.879 | 17.79 | 0.899 | 8.14 | 0.873 | 9.194 | 0.860 | 19.11 |
| MS+TIR+Nadir photography 3D | 0.905 | 15.75 | 0.915 | 7.707 | 0.884 | 10.38 | 0.863 | 21.49 |
| MS+TIR+Oblique photography 3D | 0.929 | 15.39 | 0.939 | 6.477 | 0.898 | 8.514 | 0.880 | 18.88 |
| MS+TIR+LiDAR 3D | 0.912 | 15.14 | 0.916 | 7.595 | 0.902 | 8.169 | 0.895 | 17.83 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, Y.; Li, C.; Cheng, Q.; Duan, F.; Zhai, W.; Li, Z.; Mao, B.; Ding, F.; Kuang, X.; Chen, Z. Estimating Maize Crop Height and Aboveground Biomass Using Multi-Source Unmanned Aerial Vehicle Remote Sensing and Optuna-Optimized Ensemble Learning Algorithms. Remote Sens. 2024, 16, 3176. https://doi.org/10.3390/rs16173176
