SAR Image Despeckling Based on Denoising Diffusion Probabilistic Model and Swin Transformer

Abstract

1. Introduction

- (1)

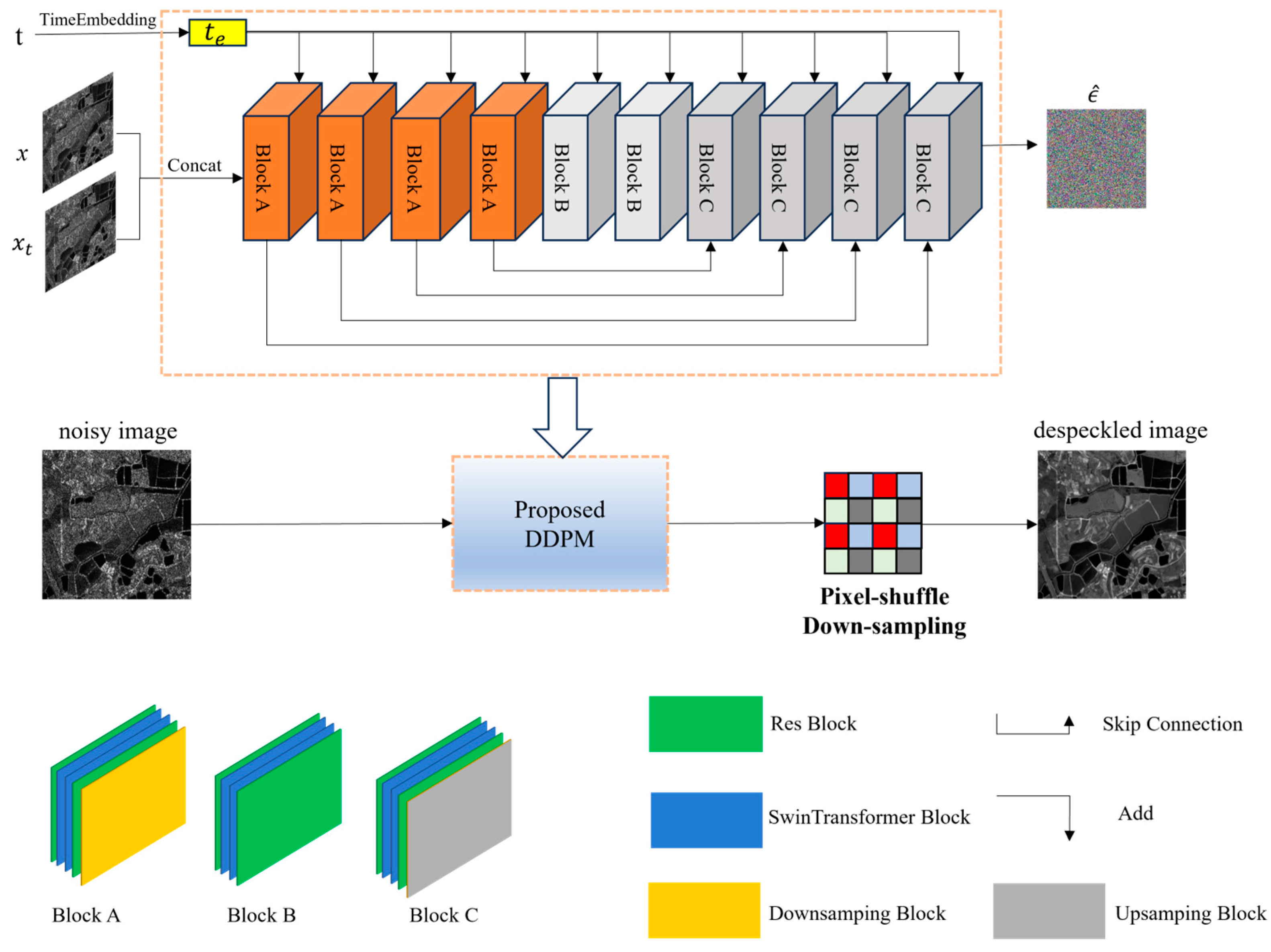

- We propose a SAR denoising algorithm based on the DDPM framework, which enhances the authenticity of the results with its powerful generation ability. The prediction network in the DDPM reverse diffusion process has been customized to better suit SAR despeckling tasks. Additionally, the network adopts a mixed-loss function aimed at effectively suppressing speckles while preserving the texture and details of the image.

- (2)

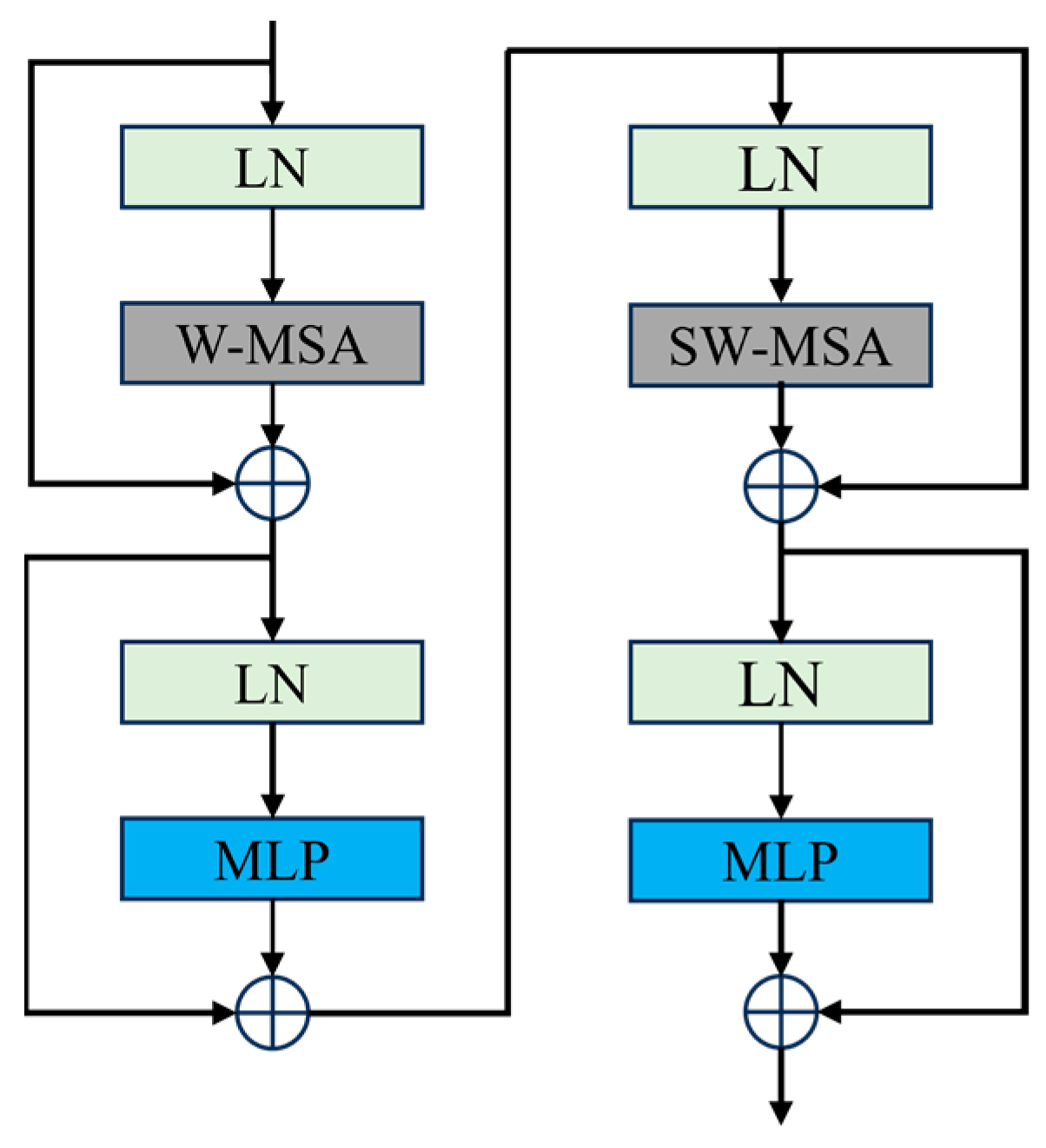

- We integrated the Swin Transformer Block into the U-net network used in DDPM, harnessing the Swin Transformer’s strong capability to extract contextual information. This integration enhances our network’s capacity to extract local and global features effectively.

- (3)

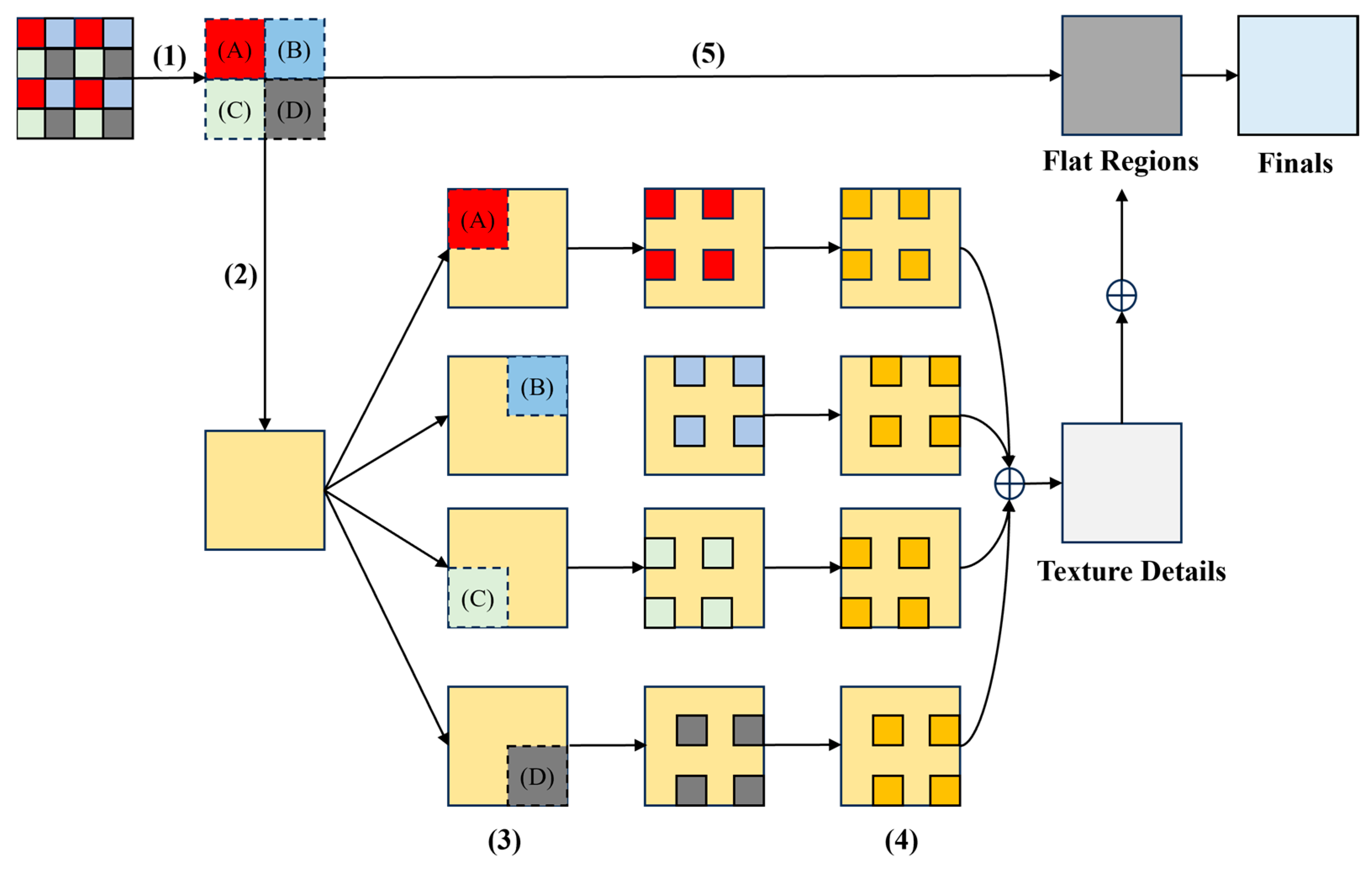

- We implemented a strategy known as PD refinement during the post-processing stage to mitigate the adverse effects of Gaussian white noise, which is typically used in simulated images and DDPM training. This method aims to enhance the algorithm’s capacity to adapt to SAR speckle noise in actual scenarios.

2. Related Works

2.1. SAR Speckle Model

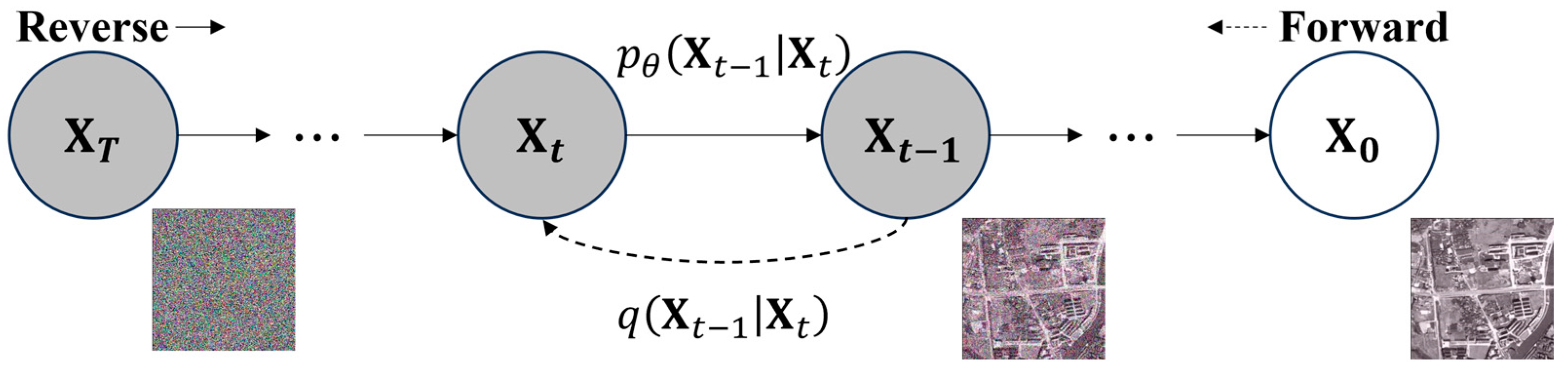
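As a hedged illustration of the speckle model, the sketch below simulates multiplicative speckle on an intensity image and estimates the equivalent number of looks (ENL). It assumes the commonly used fully developed speckle model, in which L-look intensity speckle follows a unit-mean Gamma distribution with shape L. The function names `add_speckle` and `enl` are illustrative and not taken from the paper.

```python
import numpy as np

def add_speckle(clean, looks, rng):
    """Multiply a clean intensity image by unit-mean Gamma speckle.

    Assumption: L-look intensity speckle ~ Gamma(shape=L, scale=1/L),
    so the speckle has mean 1 and variance 1/L.
    """
    speckle = rng.gamma(shape=looks, scale=1.0 / looks, size=clean.shape)
    return clean * speckle

def enl(region):
    """Equivalent number of looks of a homogeneous region: mean^2 / variance."""
    return float(region.mean() ** 2 / region.var())

rng = np.random.default_rng(0)
flat = np.full((200, 200), 5.0)          # homogeneous clean intensity
noisy = add_speckle(flat, looks=4, rng=rng)
estimated_looks = enl(noisy)             # close to 4 for a homogeneous region
```

On a homogeneous region the ENL of the speckled image approximates the number of looks, which is why a higher ENL after despeckling indicates stronger smoothing.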

2.2. Denoising Diffusion Probabilistic Model

3. Methodology

3.1. General Architecture

| Algorithm 1 Training a denoising model |

| 1: repeat |

| 2: |

| 3: |

| 4: |

| 5: Take gradient descent step on |

| 6: until converged |

| Algorithm 2 Sampling |

| 1: |

| 2: for t = T, …, 1 do |

| 3: if t > 1, else |

| 4: |

| 5: end for |

| 6: return |
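The training and sampling loops can be sketched as follows, assuming the standard DDPM noise-prediction formulation with a linear variance schedule. The schedule values, the function names, and the placeholder `eps_model` are illustrative assumptions, not the authors' network, which may additionally be conditioned on the speckled image.

```python
import numpy as np

T = 1000
betas = np.linspace(1e-4, 0.02, T)   # assumed linear variance schedule
alphas = 1.0 - betas
alpha_bars = np.cumprod(alphas)      # cumulative products of alpha_t

def q_sample(x0, t, eps):
    """Forward diffusion to step t (1-based): sqrt(abar_t)*x0 + sqrt(1-abar_t)*eps."""
    ab = alpha_bars[t - 1]
    return np.sqrt(ab) * x0 + np.sqrt(1.0 - ab) * eps

def training_loss(eps_model, x0, rng):
    """Noise-prediction loss for one random step t; a real trainer would
    take a gradient descent step on this value and repeat until converged."""
    t = int(rng.integers(1, T + 1))          # t uniform in {1, ..., T}
    eps = rng.standard_normal(x0.shape)      # target noise
    x_t = q_sample(x0, t, eps)
    return float(np.mean((eps - eps_model(x_t, t)) ** 2))

def sample(eps_model, shape, rng):
    """Reverse diffusion from pure Gaussian noise down to t = 1."""
    x = rng.standard_normal(shape)
    for t in range(T, 0, -1):
        # Fresh noise is added at every step except the last one (t = 1).
        z = rng.standard_normal(shape) if t > 1 else np.zeros(shape)
        a, ab, b = alphas[t - 1], alpha_bars[t - 1], betas[t - 1]
        mean = (x - b / np.sqrt(1.0 - ab) * eps_model(x, t)) / np.sqrt(a)
        x = mean + np.sqrt(b) * z            # assumes sigma_t^2 = beta_t
    return x
```

Here `eps_model(x_t, t)` stands in for the prediction network; any callable that returns an array with the same shape as `x_t` can be used to exercise the loops.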

3.2. Swin Transformer Block

3.3. Pixel-Shuffle Down-Sampling

- Use a stride s, which is 2 in our method, to pixel-shuffle the image into a mosaic. Dividing adjacent pixels into different sub-images eliminates the noise correlation between them. A high down-sampling rate may cause spectral aliasing and edge loss, so we choose a small s;

- Despeckle the mosaic;

- Refill each sub-image with speckled image blocks separately, and pixel-shuffle up-sample them;

- Despeckle each refilled image again and average the results;

- Combine the detail and texture information from the speckled image with the over-smoothed regions.
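The first four steps above can be sketched as follows. This is a minimal illustration under stated assumptions: the mosaic is represented as a stack of s × s sub-images that are despeckled one by one, the 3 × 3 mean filter `box_despeckle` is only a placeholder for the trained network, and the final detail-combination step is omitted because its exact form is not specified here. All function names are hypothetical.

```python
import numpy as np

def pd_down(img, s=2):
    """Pixel-shuffle down-sampling: split (H, W) into s*s sub-images."""
    h, w = img.shape
    assert h % s == 0 and w % s == 0, "image size must be divisible by s"
    return np.stack([img[i::s, j::s] for i in range(s) for j in range(s)])

def pd_up(subs, s=2):
    """Inverse of pd_down: interleave the sub-images back into one image."""
    _, hs, ws = subs.shape
    img = np.empty((hs * s, ws * s), dtype=subs.dtype)
    for k in range(s * s):
        i, j = divmod(k, s)
        img[i::s, j::s] = subs[k]
    return img

def box_despeckle(img):
    """Placeholder despeckler (assumption): 3x3 mean filter, edge padding."""
    p = np.pad(img, 1, mode="edge")
    h, w = img.shape
    return sum(p[i:i + h, j:j + w] for i in range(3) for j in range(3)) / 9.0

def pd_refine(img, despeckle=box_despeckle, s=2):
    noisy_subs = pd_down(img, s)                                # step 1
    clean_subs = np.stack([despeckle(x) for x in noisy_subs])   # step 2
    outputs = []
    for k in range(s * s):
        refilled = noisy_subs.copy()      # step 3: keep the speckled pixels,
        refilled[k] = clean_subs[k]       # insert one despeckled sub-image
        outputs.append(despeckle(pd_up(refilled, s)))           # step 4
    return np.mean(outputs, axis=0)       # average the s*s refined images
```

With s = 2, each sub-image keeps every second pixel in both directions, so pixels that were neighbors in the original image land in different sub-images.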

3.4. Loss Function

3.4.1. L1 Loss

3.4.2. KL Loss

3.4.3. TV Loss
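A mixed loss built from the three terms named above can be sketched as a weighted sum. The specific forms are assumptions for illustration: the KL term compares the two images after normalizing them to sum to 1 (which requires non-negative intensities), the TV term is the anisotropic variant, and the weights `w_l1`, `w_kl`, and `w_tv` are hypothetical values, not the ones used in the paper.

```python
import numpy as np

def l1_loss(pred, target):
    """Mean absolute error between the prediction and the reference."""
    return float(np.mean(np.abs(pred - target)))

def kl_loss(pred, target, eps=1e-8):
    """KL(target || pred) with both non-negative images normalized to sum to 1
    (assumed form of the KL term)."""
    p = (target + eps) / np.sum(target + eps)
    q = (pred + eps) / np.sum(pred + eps)
    return float(np.sum(p * np.log(p / q)))

def tv_loss(pred):
    """Anisotropic total variation: mean absolute neighbor difference."""
    dh = np.abs(pred[1:, :] - pred[:-1, :])
    dw = np.abs(pred[:, 1:] - pred[:, :-1])
    return float(dh.mean() + dw.mean())

def mixed_loss(pred, target, w_l1=1.0, w_kl=0.1, w_tv=0.01):
    """Weighted sum of the three terms; the weights are hypothetical."""
    return (w_l1 * l1_loss(pred, target)
            + w_kl * kl_loss(pred, target)
            + w_tv * tv_loss(pred))
```

The L1 and KL terms pull the prediction toward the reference, while the TV term penalizes residual speckle in the prediction itself, so its weight trades smoothness against detail preservation.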

4. Experiments and Results

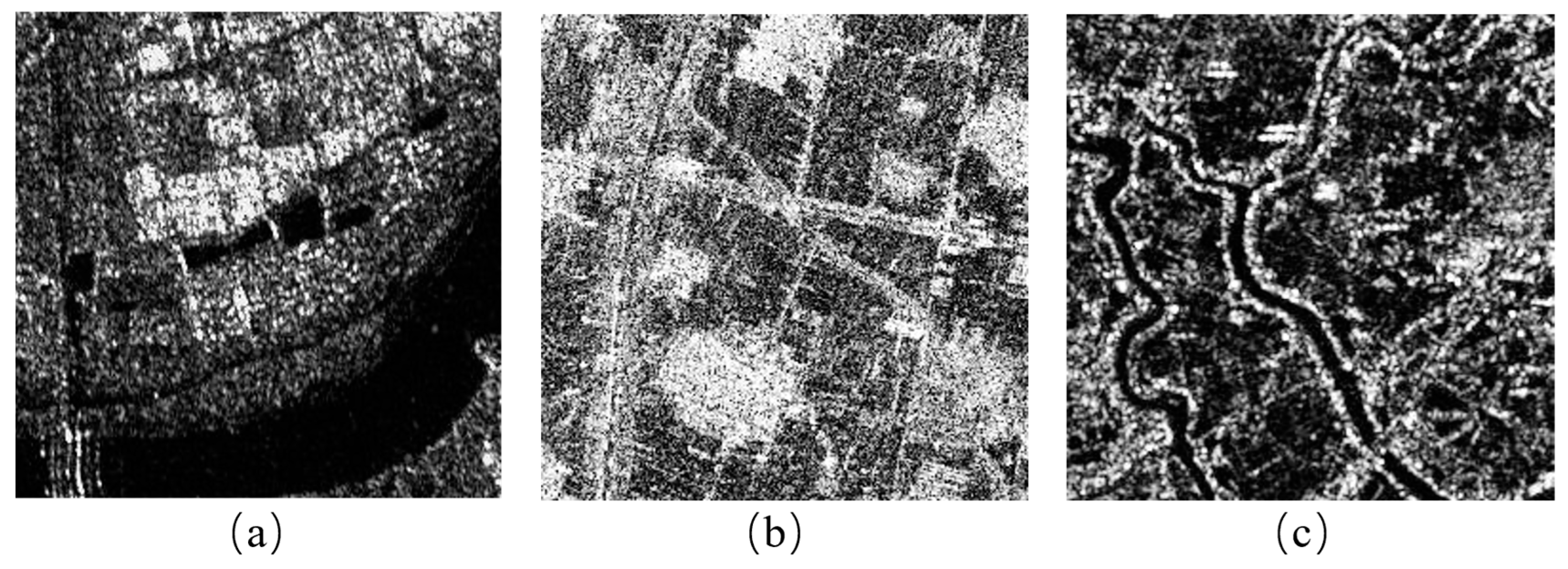

4.1. Datasets

4.2. Experiments Preparation

4.3. Comparative Methods

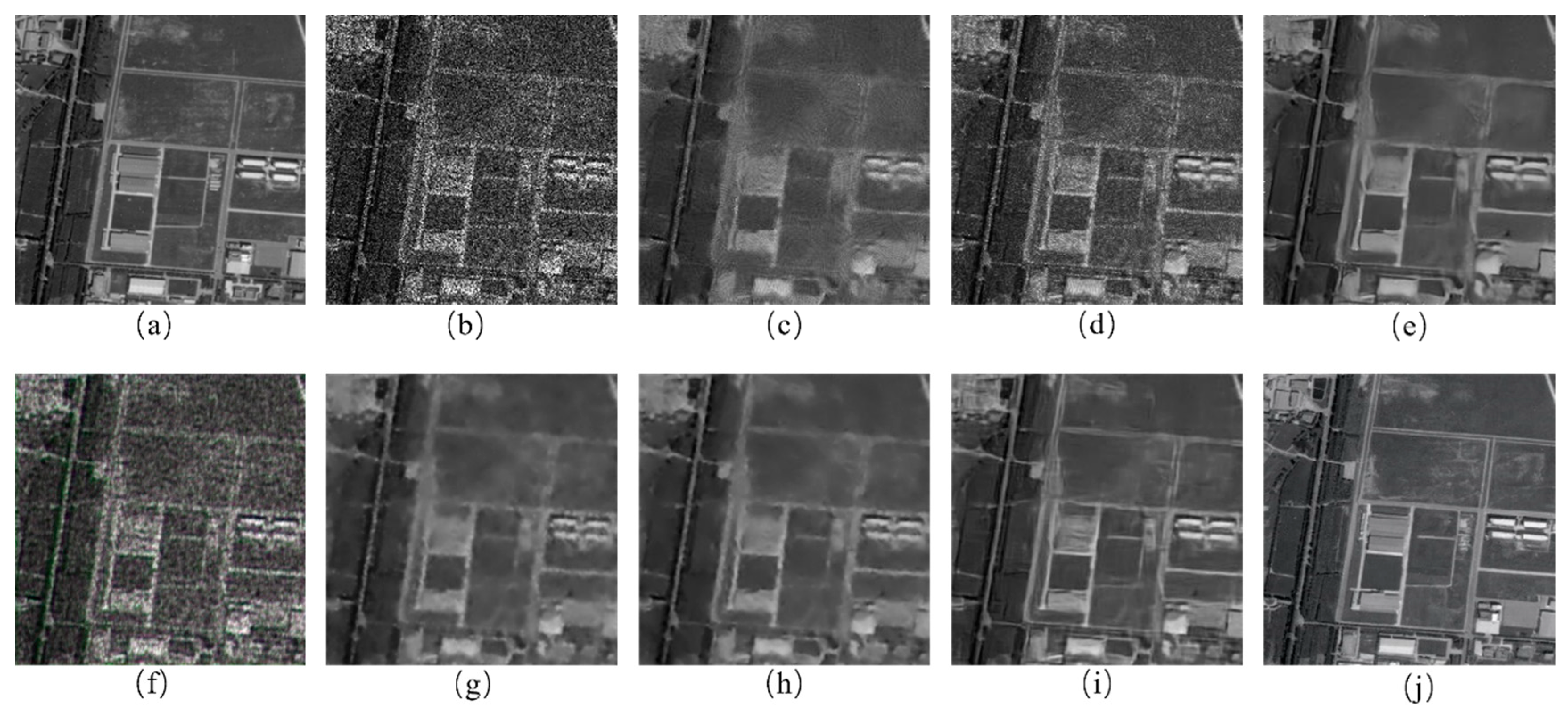

4.4. Synthetic Image Analysis

4.5. No Reference Evaluation Indicators

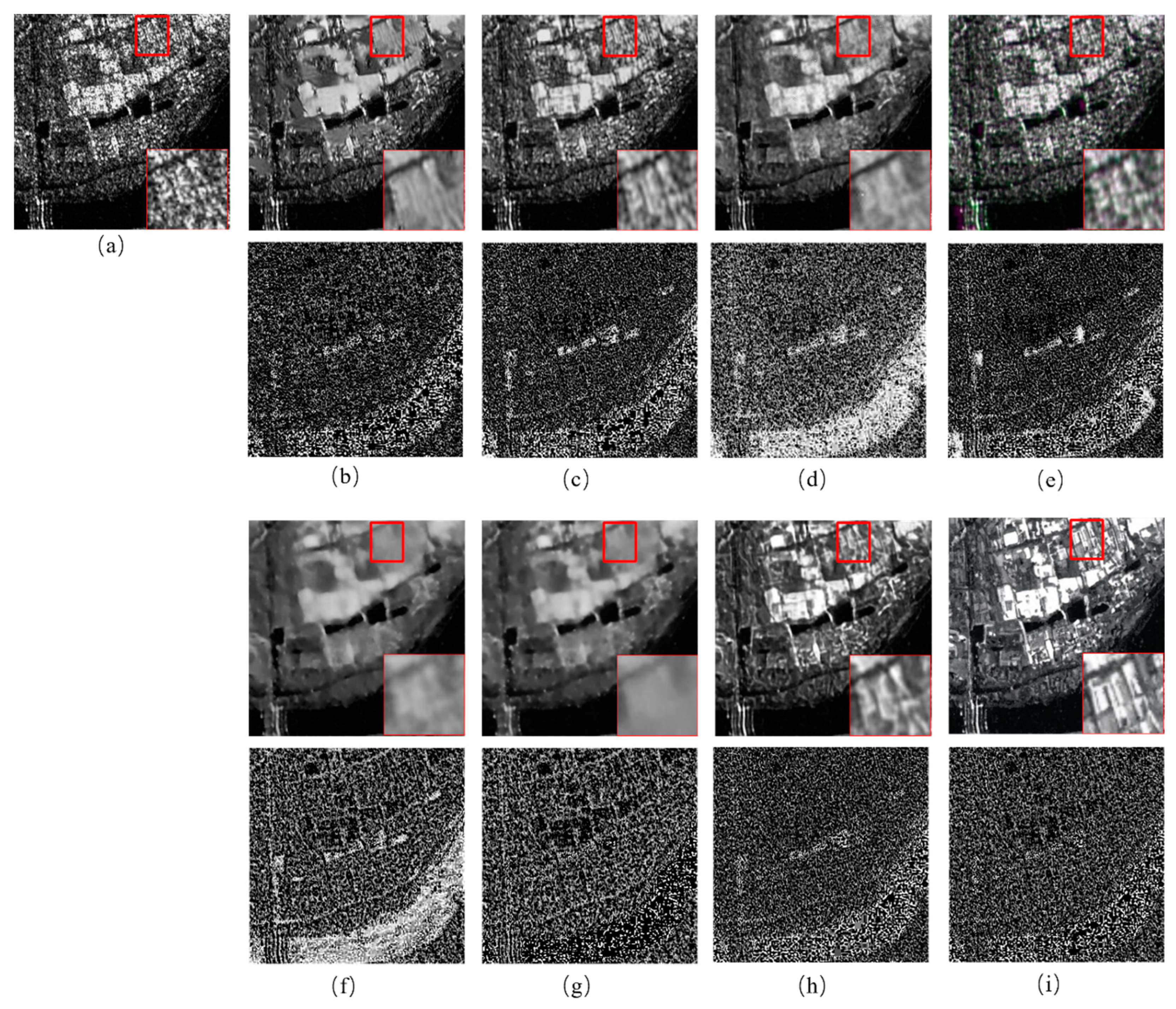

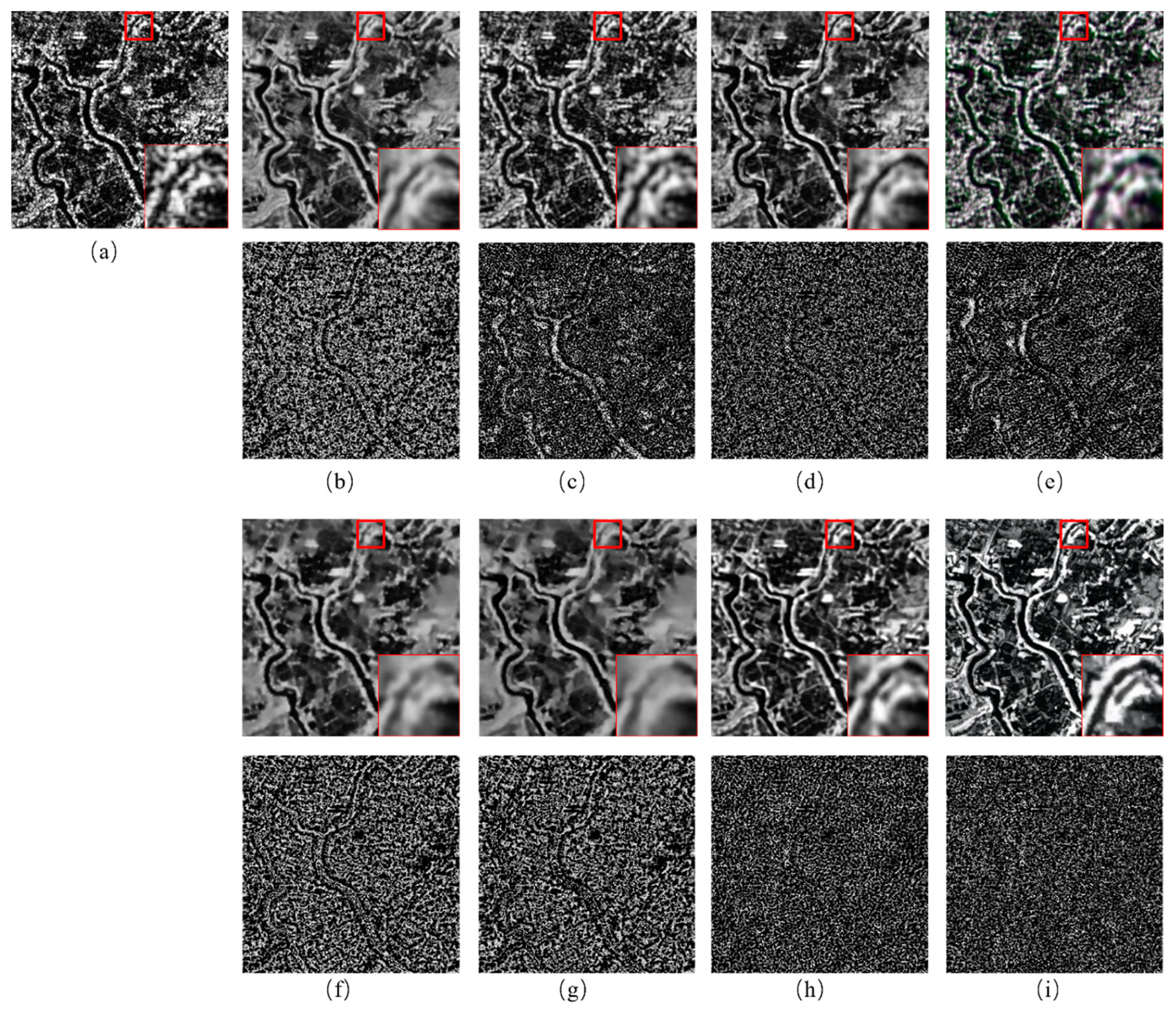

4.6. Real Images Analysis

4.7. Ablation Study

4.7.1. Swin Transformer Block Effect

4.7.2. PD Refinement Effect

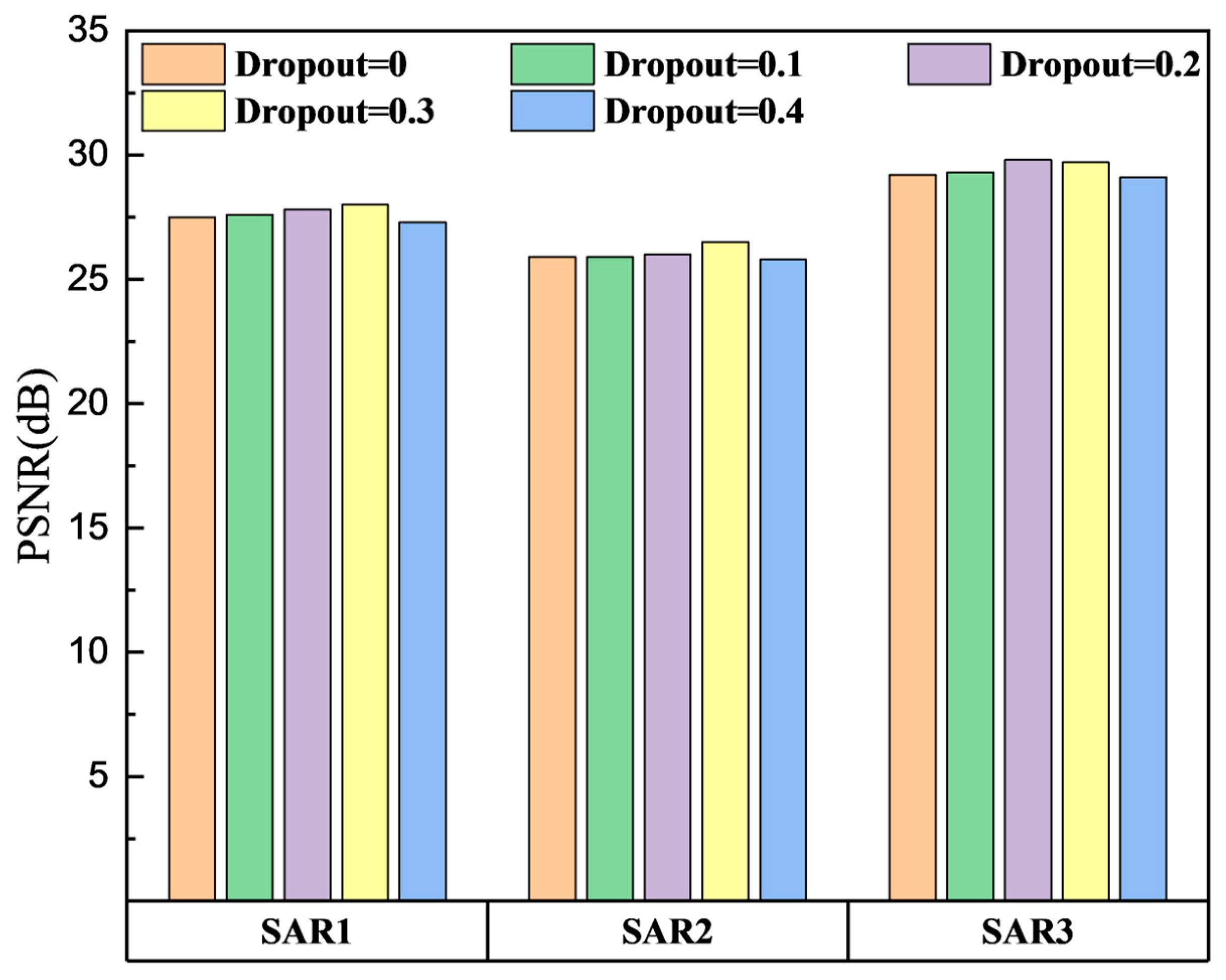

4.7.3. Dropout Rate

4.7.4. Time Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SAR | Synthetic Aperture Radar |
| PDF | Probability Density Function |
| PPB | Probabilistic Patch-Based |
| SARBM3D | SAR Block-Matching Three-Dimensional |
| DDPM | Denoising Diffusion Probabilistic Model |
| CNN | Convolutional Neural Network |
| SAR-DRN | SAR Dilated Residual Network |
| SAR-Transformer | Transformer-Based SAR |
| SAR-ON | SAR Overcomplete Convolutional Networks |
| SAR-CAM | SAR Continuous Attention Module |
| AWGN | Additive White Gaussian Noise |
| ReLU | Rectified Linear Unit |
| BN | Batch Normalization |
| ST | Swin Transformer |
| PD | Pixel-Shuffle Down-Sampling |
| GAN | Generative Adversarial Network |
| TV | Total Variation |
| KL | Kullback-Leibler |


| Datasets | L | PPB | SAR-BM3D | SAR-CNN | SAR-DRN | SAR-Transformer | SAR-ON | SAR-CAM | Proposed |
|---|---|---|---|---|---|---|---|---|---|
| Set12 | 1 | 24.84 | 25.24 | 25.75 | 25.64 | 25.53 | 25.87 | 26.34 | 26.86 |
| Set12 | 2 | 25.65 | 26.78 | 27.15 | 26.11 | 27.22 | 27.46 | 27.76 | 28.04 |
| Set12 | 4 | 27.32 | 28.14 | 28.55 | 27.84 | 28.12 | 29.01 | 28.92 | 29.90 |
| Set12 | 8 | 28.19 | 29.77 | 29.96 | 29.13 | 29.78 | 29.94 | 30.49 | 31.62 |
| Set12 | 10 | 28.49 | 30.23 | 30.46 | 29.64 | 30.39 | 30.72 | 31.08 | 32.04 |
| Classic5 | 1 | 23.81 | 25.78 | 25.99 | 25.88 | 25.50 | 26.25 | 27.30 | 27.86 |
| Classic5 | 2 | 24.87 | 26.78 | 26.87 | 26.11 | 27.22 | 27.46 | 27.56 | 28.04 |
| Classic5 | 4 | 26.04 | 28.14 | 28.22 | 27.84 | 28.12 | 29.01 | 28.99 | 29.90 |
| Classic5 | 8 | 27.13 | 29.77 | 29.74 | 29.13 | 29.78 | 29.94 | 30.38 | 31.62 |
| Classic5 | 10 | 28.01 | 30.23 | 30.29 | 29.64 | 30.39 | 30.72 | 31.26 | 32.04 |
| McMaster | 1 | 24.97 | 25.56 | 25.52 | 25.82 | 26.68 | 26.09 | 27.80 | 28.12 |
| McMaster | 2 | 26.51 | 27.34 | 27.15 | 27.22 | 28.82 | 27.93 | 29.58 | 29.83 |
| McMaster | 4 | 27.09 | 29.01 | 28.96 | 29.14 | 29.35 | 29.01 | 31.36 | 31.54 |
| McMaster | 8 | 27.82 | 30.28 | 30.03 | 30.56 | 31.34 | 30.84 | 32.67 | 32.99 |
| McMaster | 10 | 29.34 | 31.42 | 31.27 | 31.39 | 31.72 | 31.59 | 33.55 | 33.73 |
| Kodak24 | 1 | 23.52 | 24.63 | 24.79 | 24.57 | 24.87 | 24.73 | 25.04 | 26.56 |
| Kodak24 | 2 | 24.77 | 26.12 | 26.25 | 25.93 | 26.33 | 26.12 | 27.08 | 27.93 |
| Kodak24 | 4 | 25.58 | 28.59 | 28.80 | 27.24 | 28.78 | 28.69 | 29.13 | 29.72 |
| Kodak24 | 8 | 26.64 | 29.14 | 30.64 | 29.33 | 30.51 | 29.98 | 30.26 | 31.23 |
| Kodak24 | 10 | 27.35 | 30.03 | 30.82 | 30.18 | 30.78 | 30.57 | 31.15 | 32.07 |

| Datasets | L | PPB | SAR-BM3D | SAR-CNN | SAR-DRN | SAR-Transformer | SAR-ON | SAR-CAM | Proposed |
|---|---|---|---|---|---|---|---|---|---|
| Set12 | 1 | 0.64 | 0.72 | 0.71 | 0.66 | 0.64 | 0.70 | 0.73 | 0.75 |
| Set12 | 2 | 0.72 | 0.76 | 0.74 | 0.70 | 0.69 | 0.73 | 0.79 | 0.80 |
| Set12 | 4 | 0.75 | 0.79 | 0.76 | 0.72 | 0.73 | 0.73 | 0.82 | 0.87 |
| Set12 | 8 | 0.80 | 0.82 | 0.76 | 0.74 | 0.73 | 0.77 | 0.87 | 0.89 |
| Set12 | 10 | 0.80 | 0.82 | 0.77 | 0.75 | 0.76 | 0.81 | 0.88 | 0.91 |
| Classic5 | 1 | 0.61 | 0.72 | 0.71 | 0.65 | 0.67 | 0.74 | 0.77 | 0.79 |
| Classic5 | 2 | 0.66 | 0.74 | 0.75 | 0.70 | 0.71 | 0.77 | 0.80 | 0.81 |
| Classic5 | 4 | 0.69 | 0.77 | 0.77 | 0.72 | 0.74 | 0.81 | 0.82 | 0.85 |
| Classic5 | 8 | 0.72 | 0.83 | 0.78 | 0.75 | 0.78 | 0.81 | 0.85 | 0.88 |
| Classic5 | 10 | 0.73 | 0.85 | 0.80 | 0.75 | 0.82 | 0.85 | 0.87 | 0.88 |
| McMaster | 1 | 0.65 | 0.74 | 0.70 | 0.70 | 0.72 | 0.77 | 0.75 | 0.80 |
| McMaster | 2 | 0.70 | 0.79 | 0.74 | 0.75 | 0.74 | 0.81 | 0.80 | 0.83 |
| McMaster | 4 | 0.74 | 0.82 | 0.78 | 0.74 | 0.78 | 0.81 | 0.83 | 0.87 |
| McMaster | 8 | 0.78 | 0.86 | 0.78 | 0.78 | 0.83 | 0.85 | 0.85 | 0.90 |
| McMaster | 10 | 0.81 | 0.88 | 0.84 | 0.82 | 0.85 | 0.90 | 0.88 | 0.93 |
| Kodak24 | 1 | 0.61 | 0.76 | 0.69 | 0.62 | 0.69 | 0.75 | 0.75 | 0.77 |
| Kodak24 | 2 | 0.65 | 0.80 | 0.73 | 0.67 | 0.69 | 0.76 | 0.80 | 0.80 |
| Kodak24 | 4 | 0.67 | 0.82 | 0.78 | 0.72 | 0.75 | 0.77 | 0.82 | 0.82 |
| Kodak24 | 8 | 0.70 | 0.84 | 0.80 | 0.74 | 0.77 | 0.83 | 0.83 | 0.88 |
| Kodak24 | 10 | 0.72 | 0.87 | 0.82 | 0.74 | 0.83 | 0.85 | 0.86 | 0.89 |

| Datasets | Method | ENL | MoI | MoR | M-Index | EPD-ROA (VD) | EPD-ROA (HD) |
|---|---|---|---|---|---|---|---|
| SAR1 | PPB | 153 | 0.9239 | 0.8922 | 10.813 | 0.8208 | 0.8031 |
| SAR1 | SAR-BM3D | 157 | 0.9268 | 0.8710 | 14.458 | 0.7965 | 0.7408 |
| SAR1 | SAR-CNN | 167 | 0.9033 | 0.8944 | 14.311 | 0.8025 | 0.7417 |
| SAR1 | SAR-DRN | 152 | 0.9480 | 0.8978 | 18.733 | 0.7802 | 0.7174 |
| SAR1 | SAR-Transformer | 215 | 0.8887 | 0.8427 | 11.930 | 0.7765 | 0.7119 |
| SAR1 | SAR-ON | 171 | 0.9001 | 0.8573 | 11.266 | 0.7965 | 0.7298 |
| SAR1 | SAR-CAM | 145 | 0.8997 | 0.8796 | 9.581 | 0.7832 | 0.7263 |
| SAR1 | Proposed | 203 | 0.9484 | 0.8994 | 8.438 | 0.8480 | 0.8367 |
| SAR2 | PPB | 577 | 0.8586 | 0.8129 | 24.031 | 0.7219 | 0.7155 |
| SAR2 | SAR-BM3D | 287 | 0.8999 | 0.8842 | 28.830 | 0.7485 | 0.7384 |
| SAR2 | SAR-CNN | 515 | 0.9209 | 0.8704 | 19.832 | 0.7417 | 0.7356 |
| SAR2 | SAR-DRN | 588 | 0.9331 | 0.9064 | 15.761 | 0.7124 | 0.7021 |
| SAR2 | SAR-Transformer | 513 | 0.9349 | 0.9080 | 22.620 | 0.7210 | 0.7136 |
| SAR2 | SAR-ON | 703 | 0.9010 | 0.8697 | 23.409 | 0.7211 | 0.7137 |
| SAR2 | SAR-CAM | 289 | 0.8582 | 0.8380 | 12.076 | 0.7169 | 0.7079 |
| SAR2 | Proposed | 937 | 0.9639 | 0.9314 | 10.663 | 0.7882 | 0.7924 |
| SAR3 | PPB | 315 | 0.8493 | 0.7579 | 13.466 | 0.7599 | 0.7702 |
| SAR3 | SAR-BM3D | 204 | 0.9290 | 0.8938 | 7.003 | 0.7966 | 0.8081 |
| SAR3 | SAR-CNN | 212 | 0.9305 | 0.8791 | 8.863 | 0.7848 | 0.7942 |
| SAR3 | SAR-DRN | 186 | 0.8831 | 0.9045 | 4.425 | 0.7955 | 0.7970 |
| SAR3 | SAR-Transformer | 316 | 0.8945 | 0.8105 | 14.232 | 0.7580 | 0.7753 |
| SAR3 | SAR-ON | 327 | 0.9147 | 0.9007 | 12.146 | 0.7720 | 0.7855 |
| SAR3 | SAR-CAM | 291 | 0.9290 | 0.9793 | 7.135 | 0.7907 | 0.7912 |
| SAR3 | Proposed | 351 | 0.9672 | 0.9872 | 5.087 | 0.8799 | 0.8949 |

| Experiment Name | ST Blocks | Channels | Number of Res Blocks |
|---|---|---|---|
| Experiment 1 | ✓ | 768 | 2 |
| Experiment 2 | ✓ | 768 | 3 |
| Experiment 3 | ✓ | 512 | 2 |
| Experiment 4 | ✕ | 768 | 2 |

| Experiment Name | L = 1 PSNR (dB)/SSIM | L = 2 PSNR (dB)/SSIM | L = 4 PSNR (dB)/SSIM | L = 8 PSNR (dB)/SSIM | L = 10 PSNR (dB)/SSIM |
|---|---|---|---|---|---|
| Experiment 1 | 26.85/0.80 | 29.41/0.82 | 31.00/0.86 | 32.13/0.87 | 33.03/0.90 |
| Experiment 2 | 26.11/0.75 | 29.23/0.80 | 29.94/0.85 | 30.82/0.86 | 31.79/0.88 |
| Experiment 3 | 26.38/0.72 | 29.02/0.79 | 29.38/0.82 | 30.46/0.86 | 31.62/0.85 |
| Experiment 4 | 23.53/0.72 | 27.55/0.79 | 28.79/0.83 | 29.67/0.85 | 30.15/0.85 |

| Method | Training Time | Inference Time |
|---|---|---|
| DDPM | 4228 s | 84 s |
| Proposed method | 4704 s | 86 s |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Pan, Y.; Zhong, L.; Chen, J.; Li, H.; Zhang, X.; Pan, B. SAR Image Despeckling Based on Denoising Diffusion Probabilistic Model and Swin Transformer. Remote Sens. 2024, 16, 3222. https://doi.org/10.3390/rs16173222