Near-Real-Time Long-Strip Geometric Processing without GCPs for Agile Push-Frame Imaging of LuoJia3-01 Satellite

Abstract

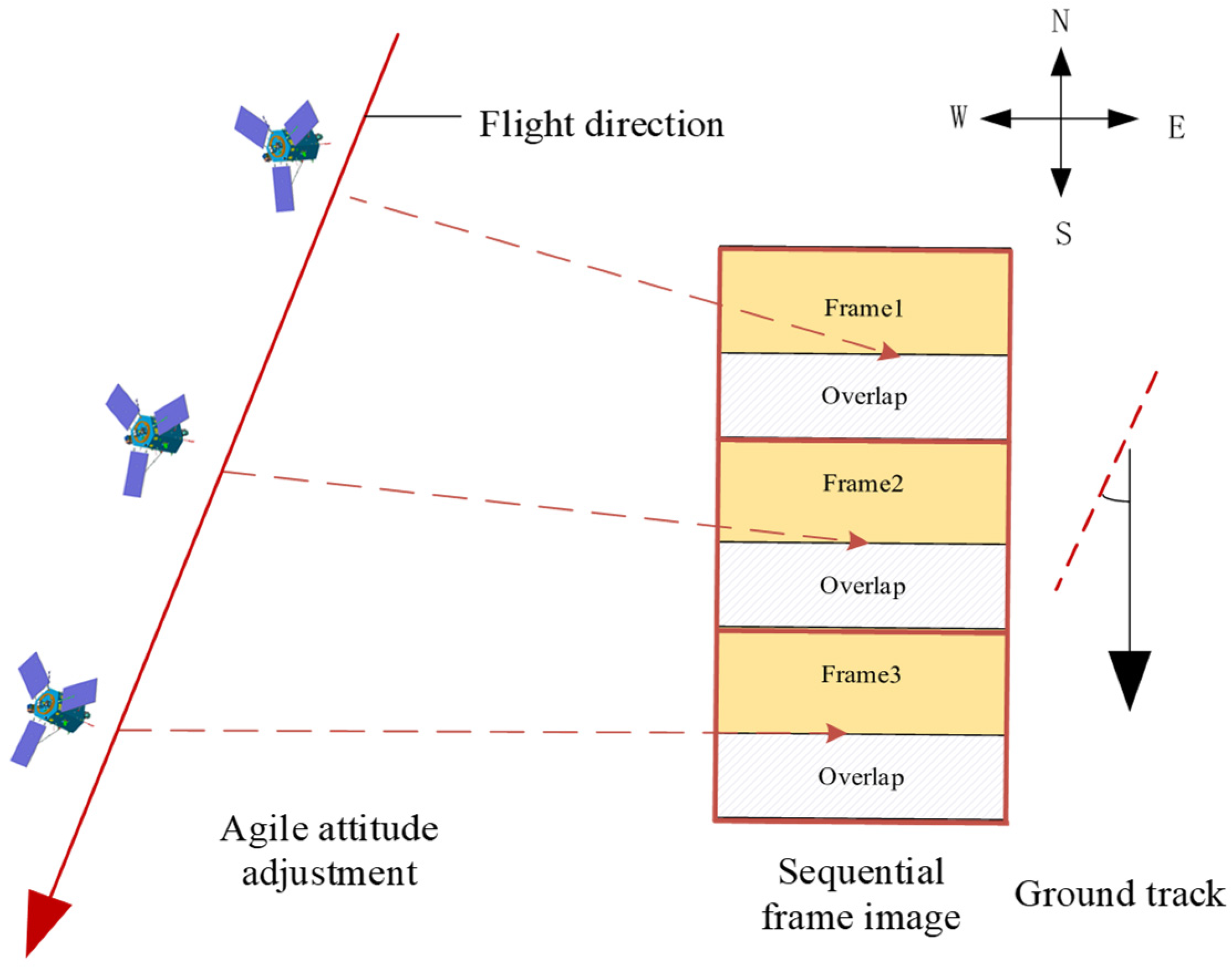

1. Introduction

2. Methods

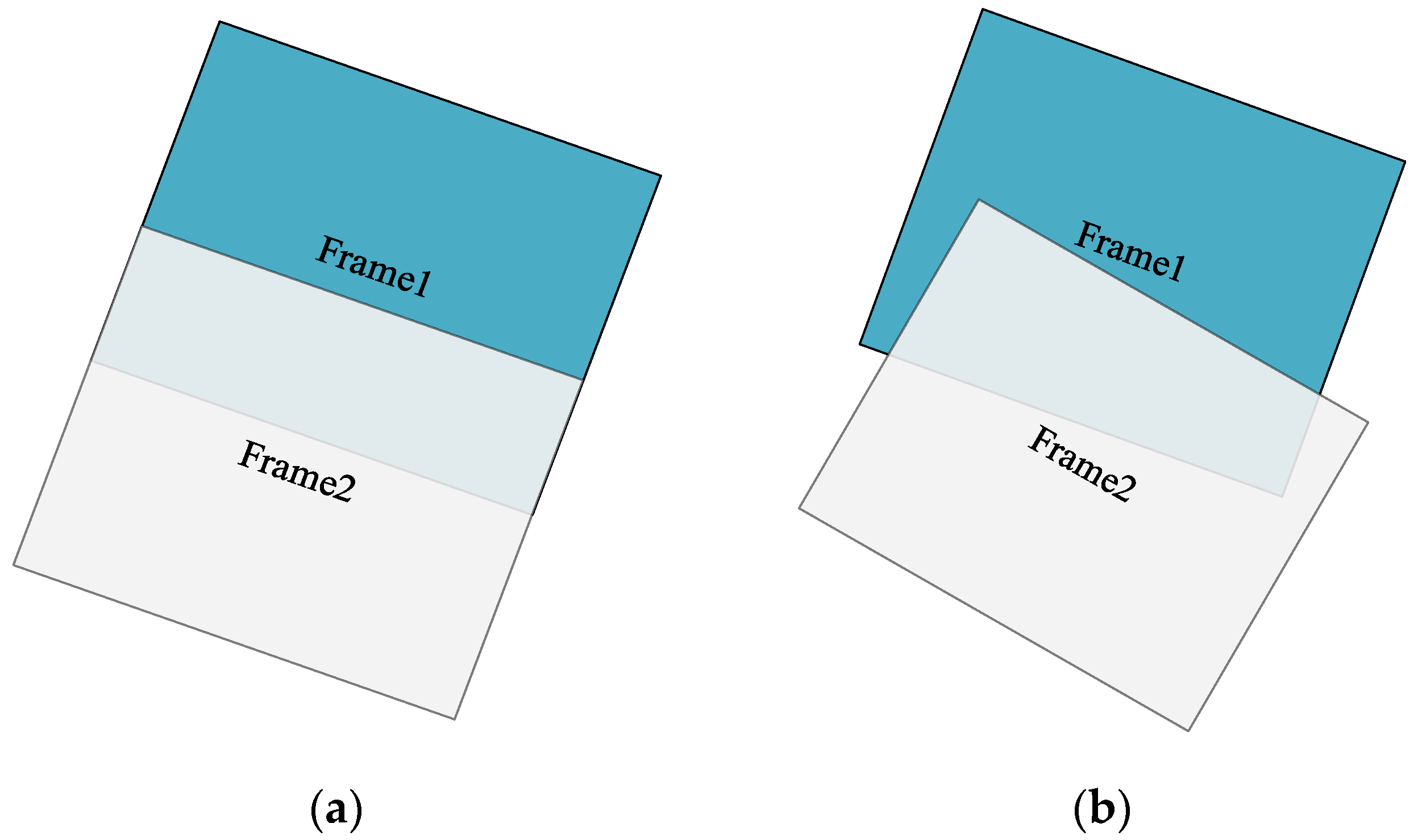

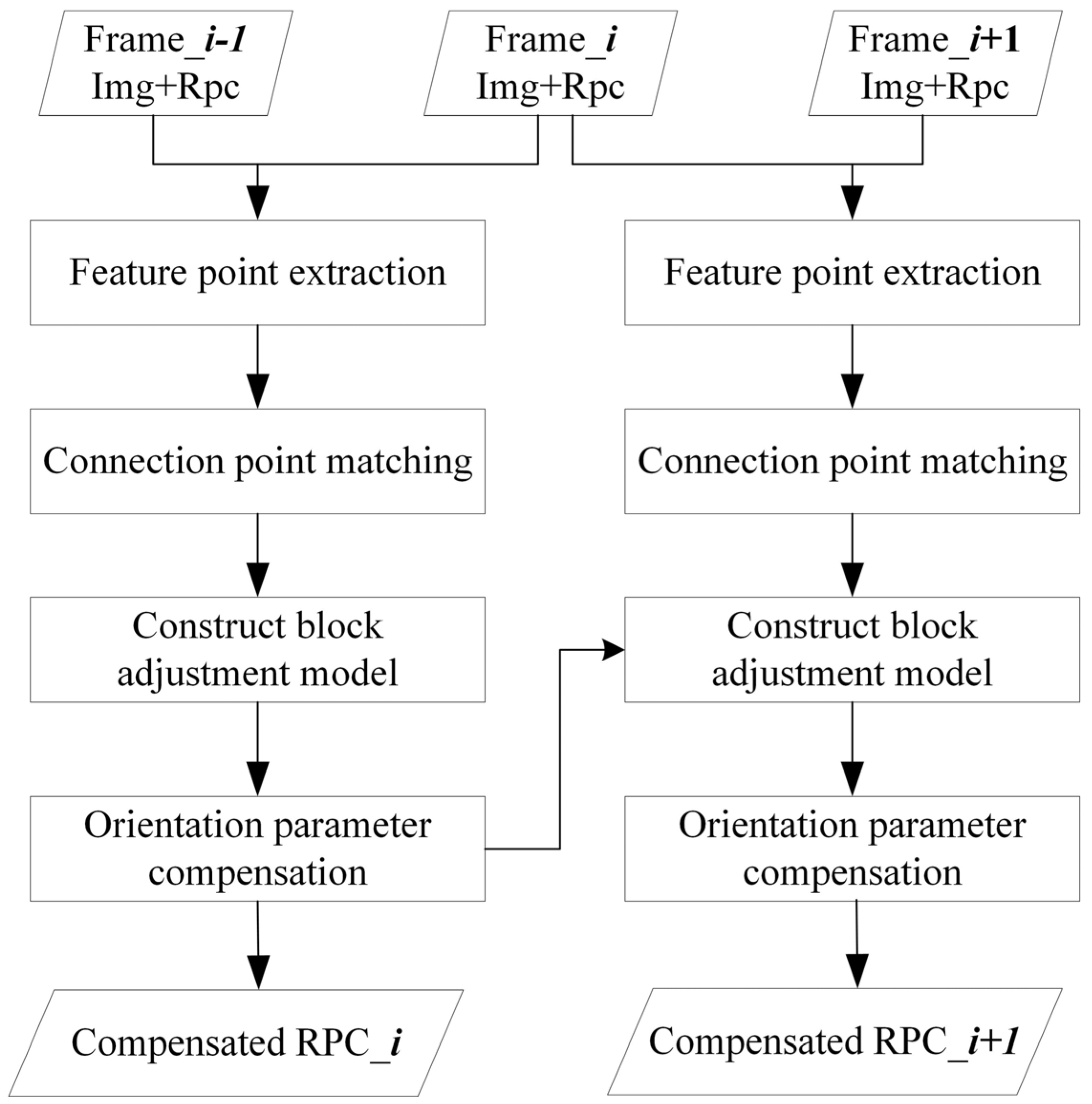

2.1. Sequential Frame Relative Orientation

2.1.1. Connection Point Matching and Gross Error Elimination

2.1.2. Constructed Orientation Model Based on the RFM

2.1.3. Re-Calculation of RFM for the Compensation Frame

- In Section 2.1.1, after eliminating gross errors, several homologous points are obtained. Using Equation (4) and the least squares algorithm, the motion compensation parameters between frames, namely (a0, a1, a2, b0, b1, b2), can be determined.

- Based on the inter-frame motion parameters and the reference frame’s RPC, the inter-frame orientation model is constructed according to Equation (5).

- The compensation frame image is divided into a grid with a certain interval, resulting in uniformly distributed grid points. These points serve as virtual control points. Each virtual control point in the compensation frame is mapped to the reference frame and then projected onto the DEM to obtain the object–space point (lon, lat, h).

- This allows us to determine the image–space coordinates (s, l) and the object–space coordinates (lon, lat, h) for each virtual control point in the compensation frame. Subsequently, the terrain-independent method [16] can be utilized to calculate the high-precision RPC for the compensation frame.
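The first and third steps above can be sketched in a few lines of plain Python. This is a minimal illustration, not the authors' implementation: Equation (4) is not reproduced in this section, so the affine form s' = a0 + a1·s + a2·l, l' = b0 + b1·s + b2·l is an assumption, and the helper names (`solve3`, `fit_affine`, `apply_affine`, `grid_points`) are hypothetical. Projection onto the DEM and the terrain-independent RPC fit are not shown.

```python
def solve3(m, v):
    """Solve a 3x3 linear system m x = v by Gaussian elimination with partial pivoting."""
    a = [row[:] + [rhs] for row, rhs in zip(m, v)]  # augmented copy, inputs untouched
    n = 3
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, n):
            f = a[r][col] / a[col][col]
            for c in range(col, n + 1):
                a[r][c] -= f * a[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (a[i][n] - sum(a[i][j] * x[j] for j in range(i + 1, n))) / a[i][i]
    return x


def fit_affine(src_pts, dst_pts):
    """Least-squares estimate of (a0, a1, a2, b0, b1, b2) from homologous points.

    Assumed model (not taken from the paper's Equation (4)):
        s' = a0 + a1*s + a2*l,   l' = b0 + b1*s + b2*l
    """
    # Normal equations (A^T A) x = A^T y with design rows [1, s, l].
    ata = [[0.0] * 3 for _ in range(3)]
    at_s = [0.0] * 3
    at_l = [0.0] * 3
    for (s, l), (s_dst, l_dst) in zip(src_pts, dst_pts):
        row = (1.0, s, l)
        for i in range(3):
            for j in range(3):
                ata[i][j] += row[i] * row[j]
            at_s[i] += row[i] * s_dst
            at_l[i] += row[i] * l_dst
    return solve3(ata, at_s) + solve3(ata, at_l)


def apply_affine(params, s, l):
    """Map an image point (s, l) with the six affine parameters."""
    a0, a1, a2, b0, b1, b2 = params
    return a0 + a1 * s + a2 * l, b0 + b1 * s + b2 * l


def grid_points(width, height, step):
    """Uniformly spaced virtual control points (s, l) over a frame."""
    return [(s, l) for l in range(0, height, step) for s in range(0, width, step)]


# Synthetic check: recover known parameters from noise-free homologous points.
true_params = (1.5, 1.001, 0.002, -2.0, -0.003, 0.999)
src = [(0.0, 0.0), (100.0, 0.0), (0.0, 100.0), (100.0, 100.0), (50.0, 20.0)]
dst = [apply_affine(true_params, s, l) for s, l in src]
estimated = fit_affine(src, dst)
```

With real tie points, at least three non-collinear pairs are needed, and normalizing pixel coordinates before forming the normal equations would likely improve numerical conditioning.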

2.2. Long-Strip Image Geometric Correction

2.2.1. Construction of Virtual Object Plane

- Determination of Pixel Resolution for the Virtual Object Image.

- Determination of the Projection Model for Object–Space Coordinates.

2.2.2. Building a Block Perspective Transformation Model

2.2.3. Image Point Densified Filling (IPDF) and Coordinate Mapping

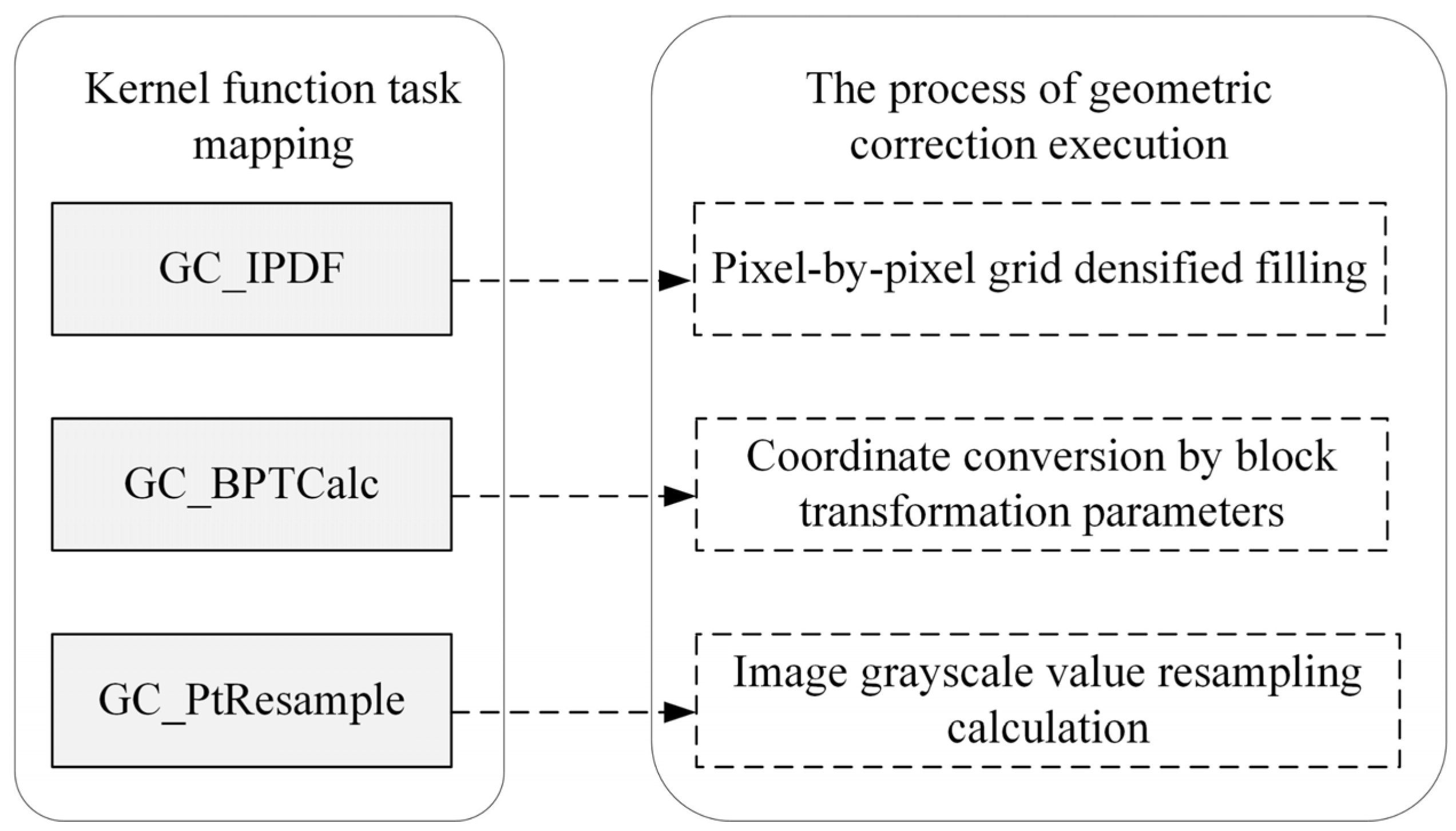

2.3. GPU Parallel Acceleration Processing

2.3.1. Kernel Function Task Mapping

2.3.2. GPU Parameter Optimization Settings

- Kernel function instruction optimization: (1) Define as few variables as possible and reuse them where possible to save register resources. (2) Inline sub-functions to avoid function call overhead. (3) Define variables only at the point of use, which follows the principle of locality and helps the compiler optimize register usage. (4) Avoid branches and loops where possible.

- Thread block size: (1) A thread block should contain at least 64 threads. (2) A thread block cannot contain more than 1024 threads. (3) The block size should be a multiple of the warp size (32 threads) to avoid wasted work in partially filled warps. In the kernel function, we set the block size to 256 threads (16 pixels × 16 pixels), with each thread responsible for processing 1 pixel. Therefore, an image of width × height pixels requires ⌈width/16⌉ × ⌈height/16⌉ thread blocks, where rounding up ensures that all pixels are covered.

- Optimization of hierarchical memory access: The CUDA architecture provides multiple levels of memory to each executing thread. Arranged from top to bottom, they are registers, shared memory (L1 cache), L2 cache, constant memory, and global memory. Capacity increases and speed decreases at each lower level. In IPDF correction, the basic processing unit of a single thread is several adjacent pixels, and the threads do not need to interact. Because shared memory is not needed for inter-thread communication, the cache preference “cudaFuncCachePreferL1” (a setting passed to cudaFuncSetCacheConfig) can be used to assign more of the on-chip memory to the L1 cache, improving thread performance. Quantities that are effectively constant, such as RPC parameters and polynomial fitting parameters, are read concurrently many times during execution and are never written. They can be placed in constant memory, whose broadcast mechanism improves parameter access efficiency.
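The block-size rules and the rounded-up grid size can be checked with a short host-side sketch. It is written in Python for brevity and is only an illustration: the function name `launch_config` and the example image sizes are hypothetical, while the 16 × 16 block, the 64 to 1024 thread limits, and the warp size of 32 come from the text above.

```python
WARP_SIZE = 32
MIN_THREADS_PER_BLOCK = 64
MAX_THREADS_PER_BLOCK = 1024


def launch_config(width, height, block_x=16, block_y=16):
    """Return ((grid_x, grid_y), (block_x, block_y)) for one thread per pixel."""
    threads = block_x * block_y
    if not MIN_THREADS_PER_BLOCK <= threads <= MAX_THREADS_PER_BLOCK:
        raise ValueError("block size must be between 64 and 1024 threads")
    if threads % WARP_SIZE != 0:
        raise ValueError("block size must be a multiple of the warp size (32)")
    # Integer ceiling division: partial tiles at the image edge get their own block.
    grid_x = (width + block_x - 1) // block_x
    grid_y = (height + block_y - 1) // block_y
    return (grid_x, grid_y), (block_x, block_y)


# Hypothetical image size that is not a multiple of 16 in either dimension.
grid, block = launch_config(1000, 600)
```

Because the grid is rounded up, threads in edge blocks can fall outside the image, so a kernel launched this way would need a bounds check on its pixel coordinates before reading or writing.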

3. Results

3.1. Study Area and Data Source

3.2. Algorithm Accuracy Analysis

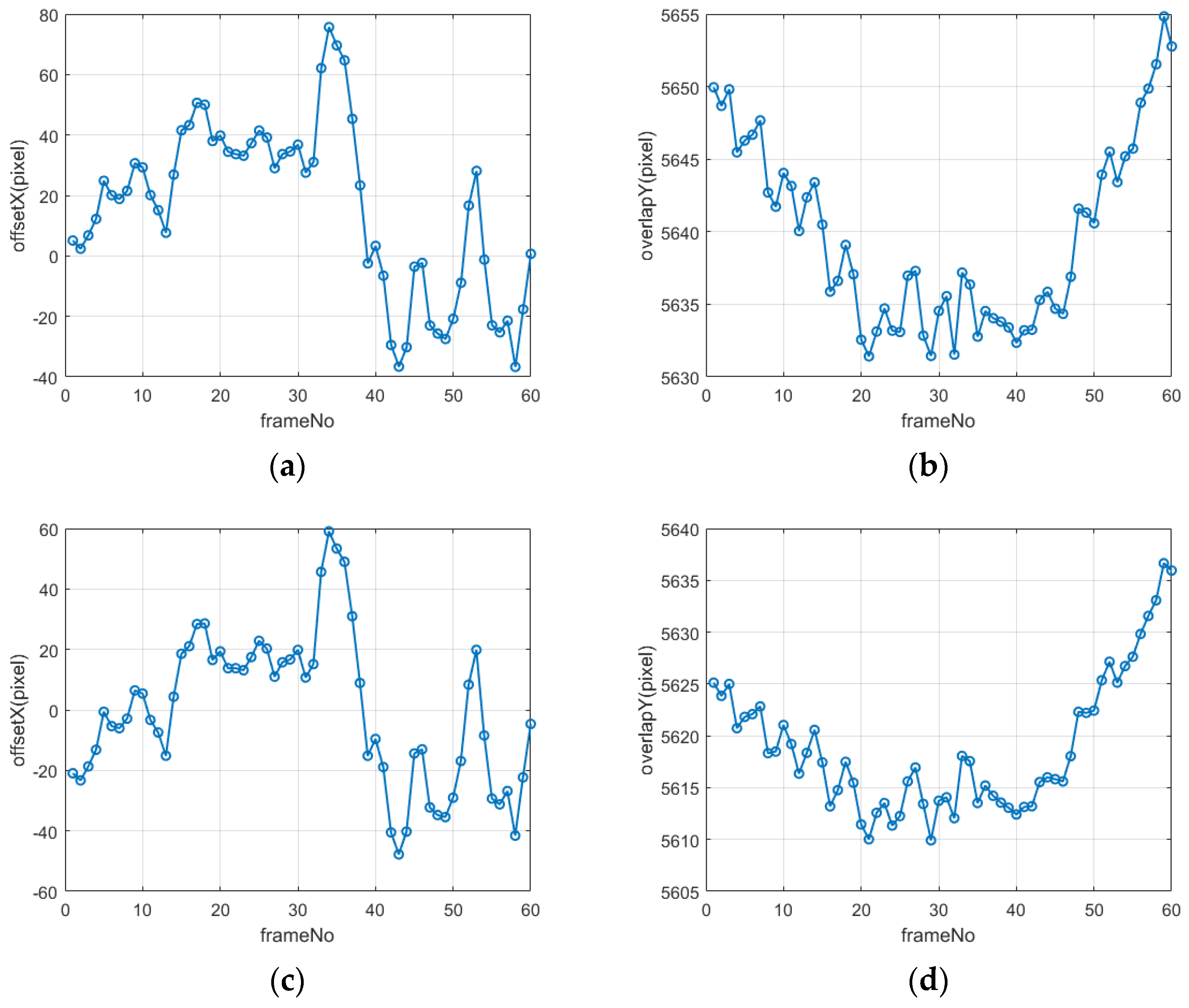

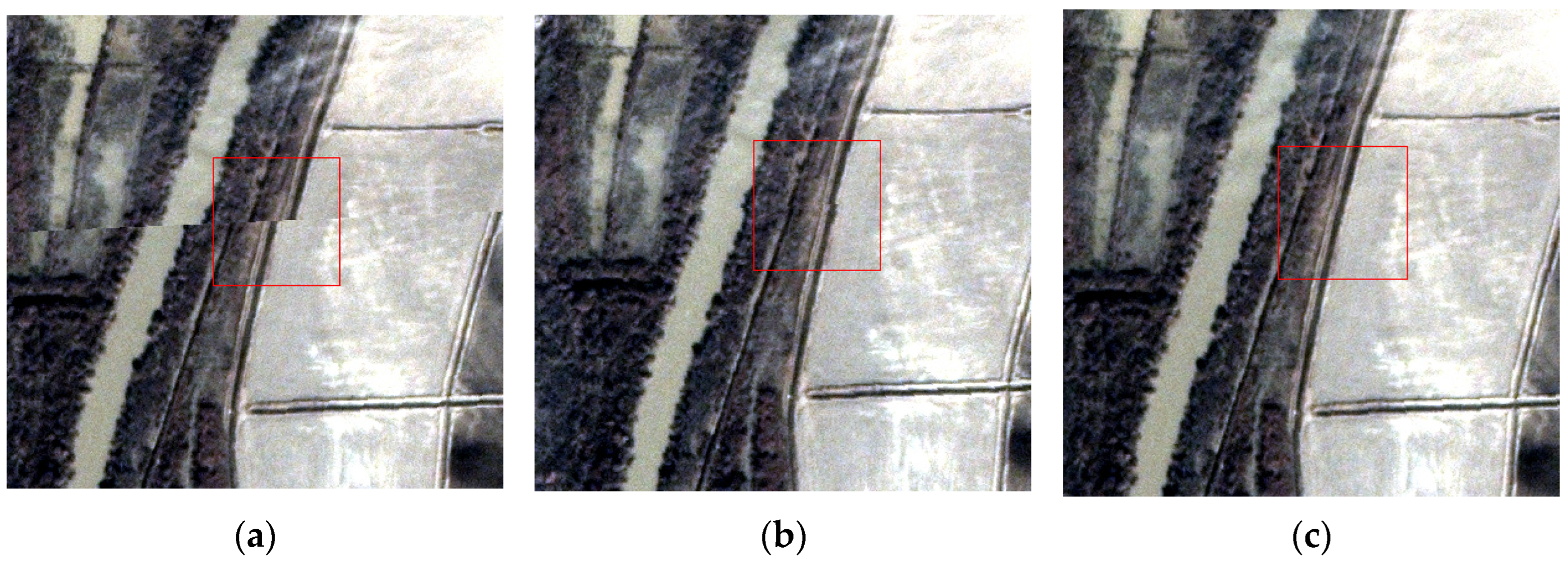

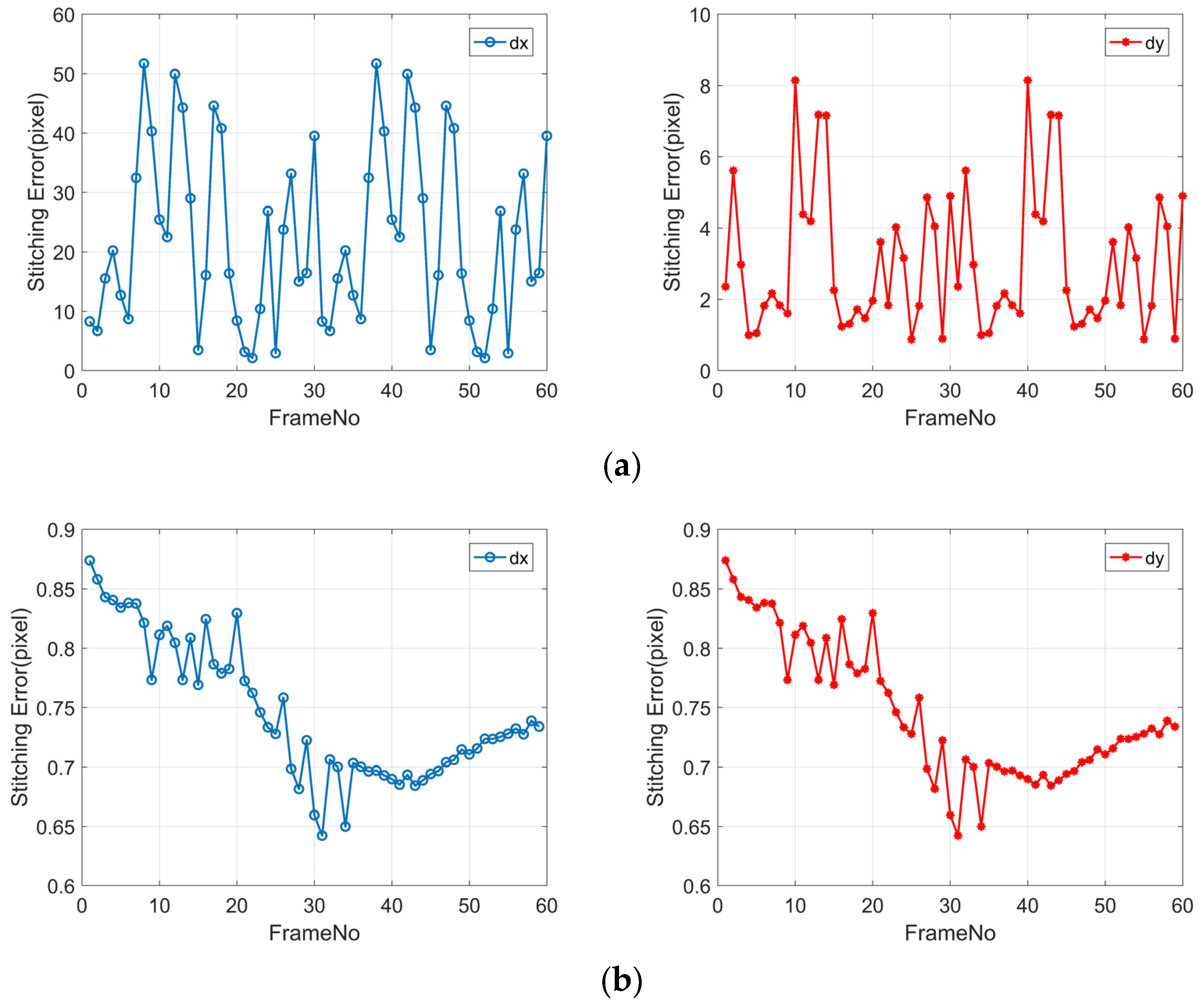

3.2.1. Analysis of Inter-Frame Image Misalignment

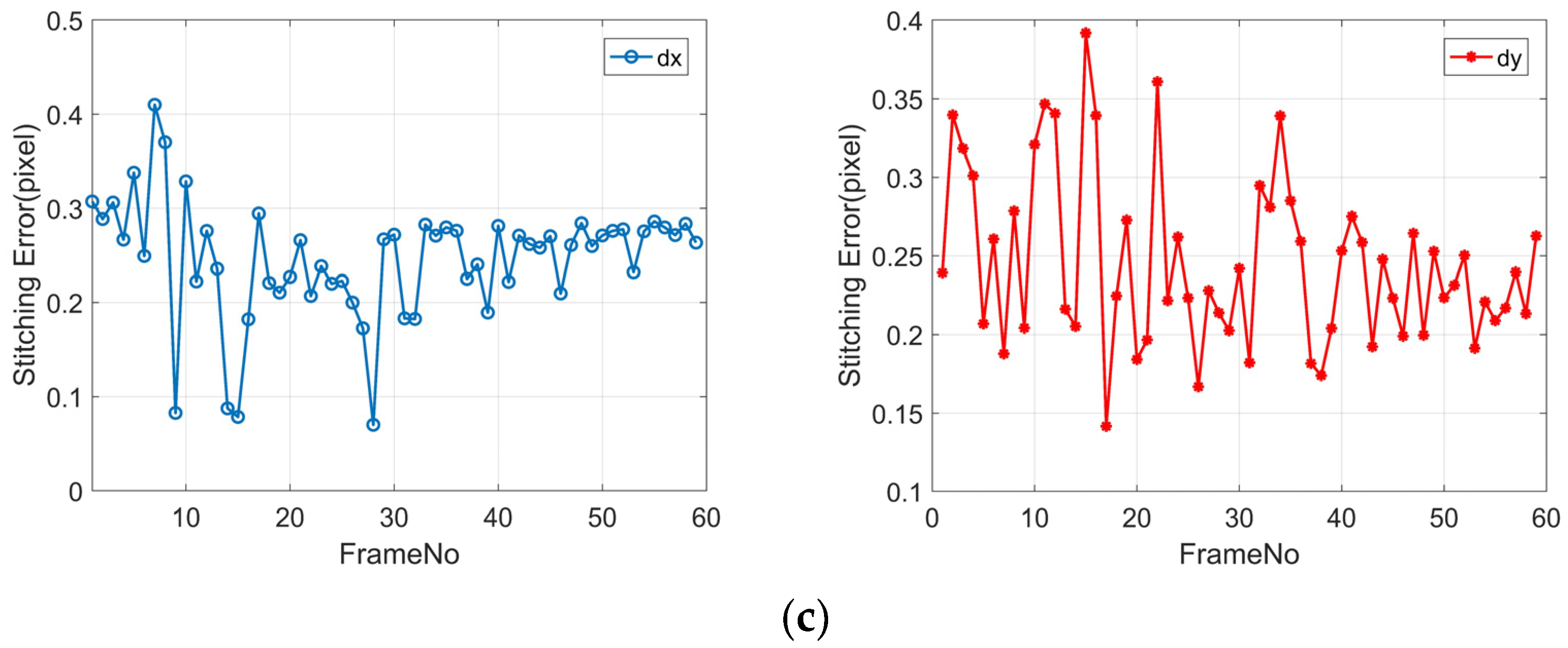

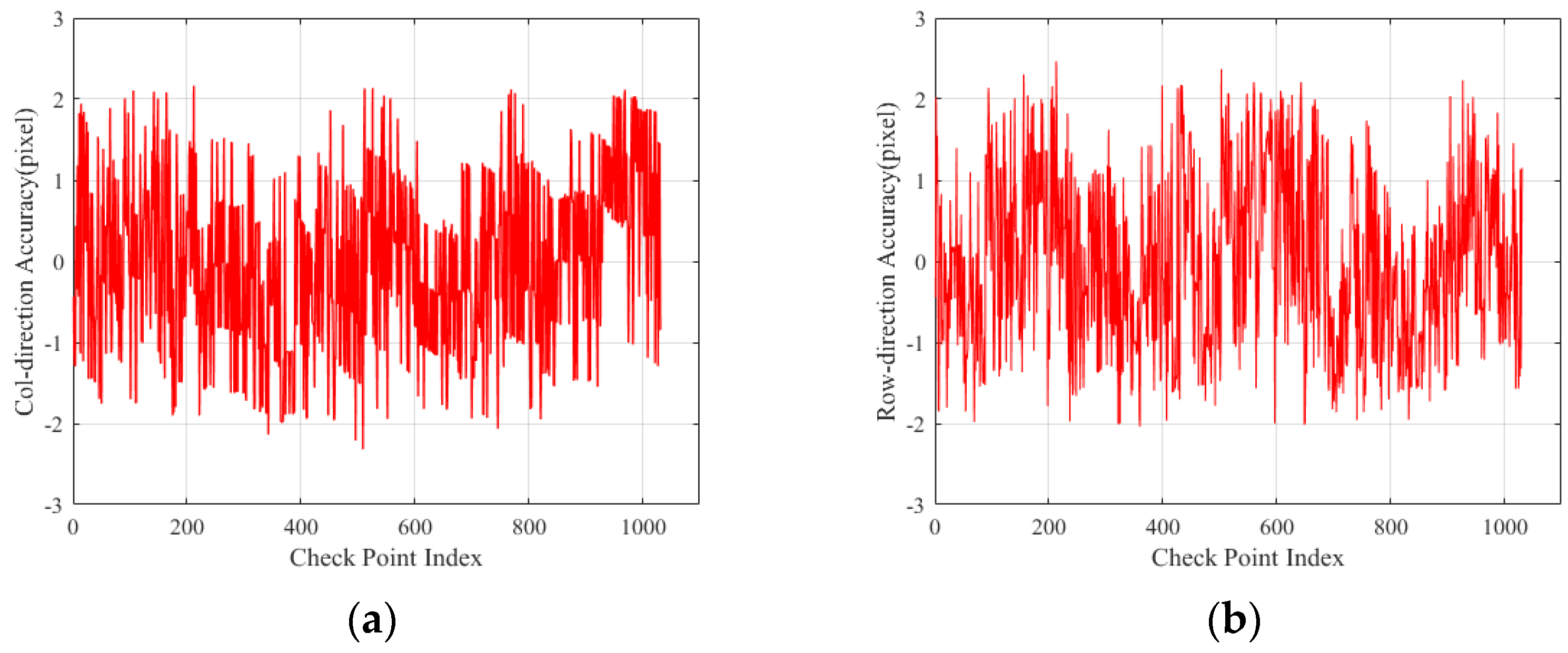

3.2.2. Analysis of Inter-Frame Registration Accuracy

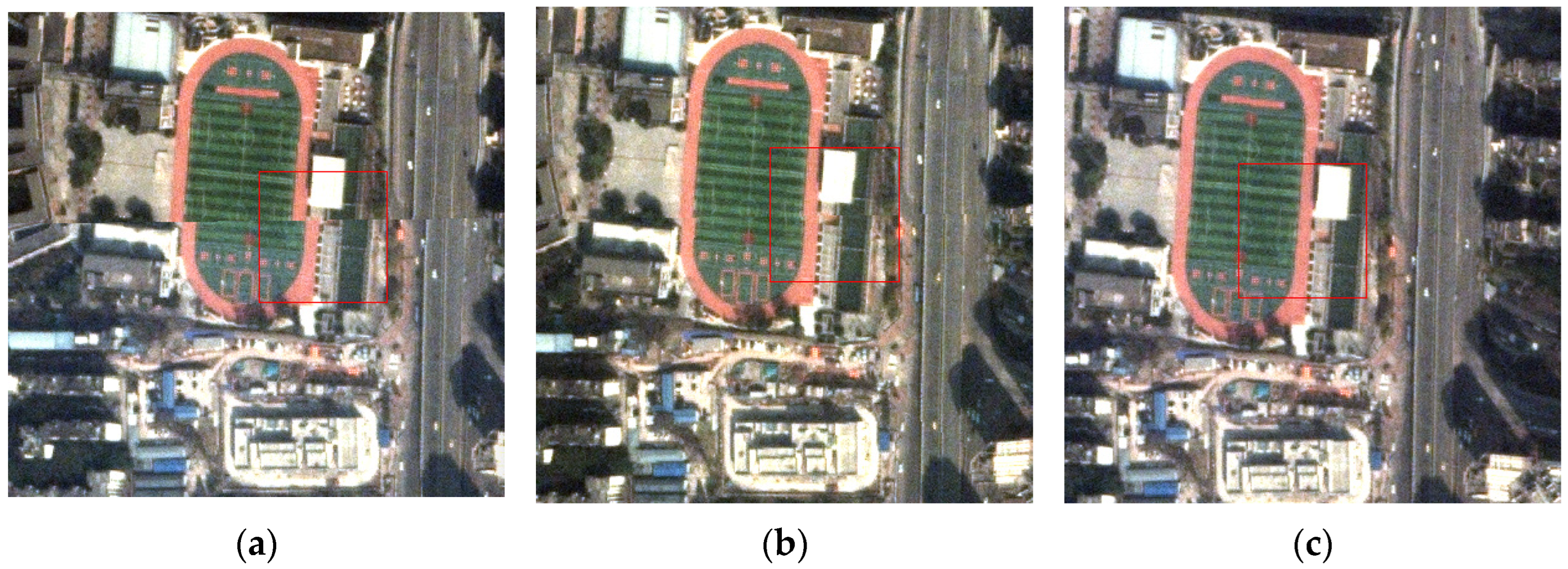

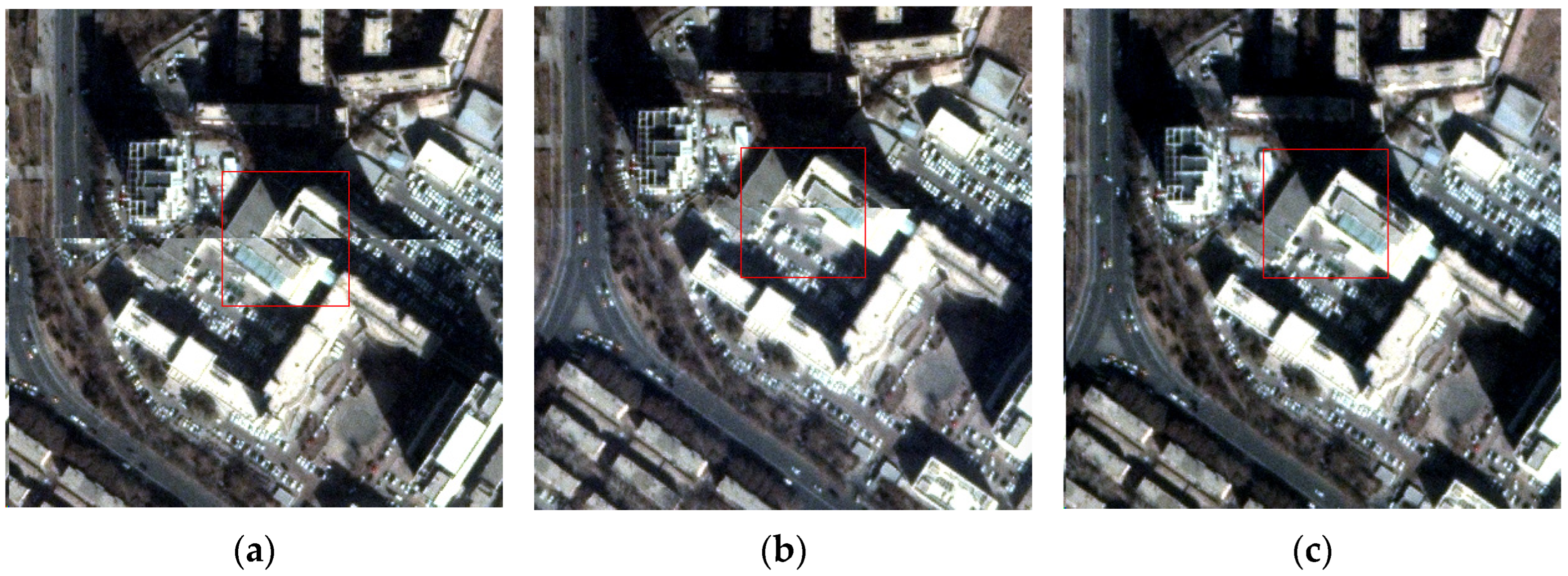

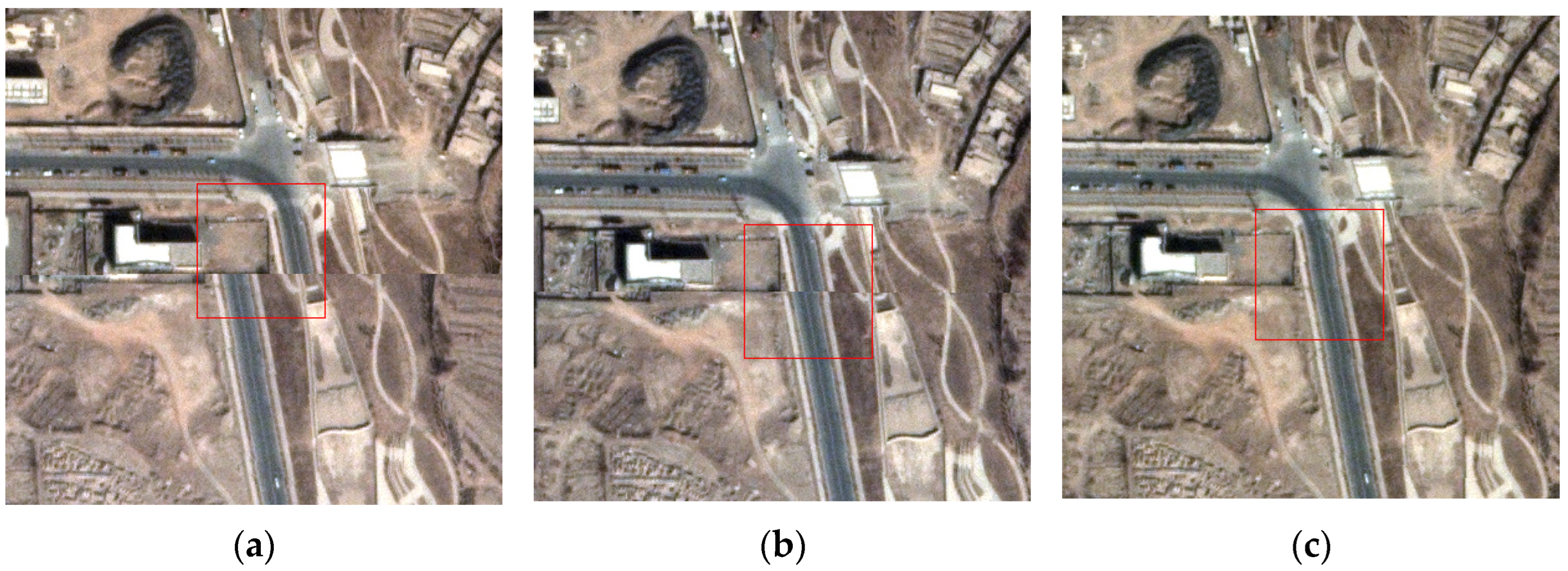

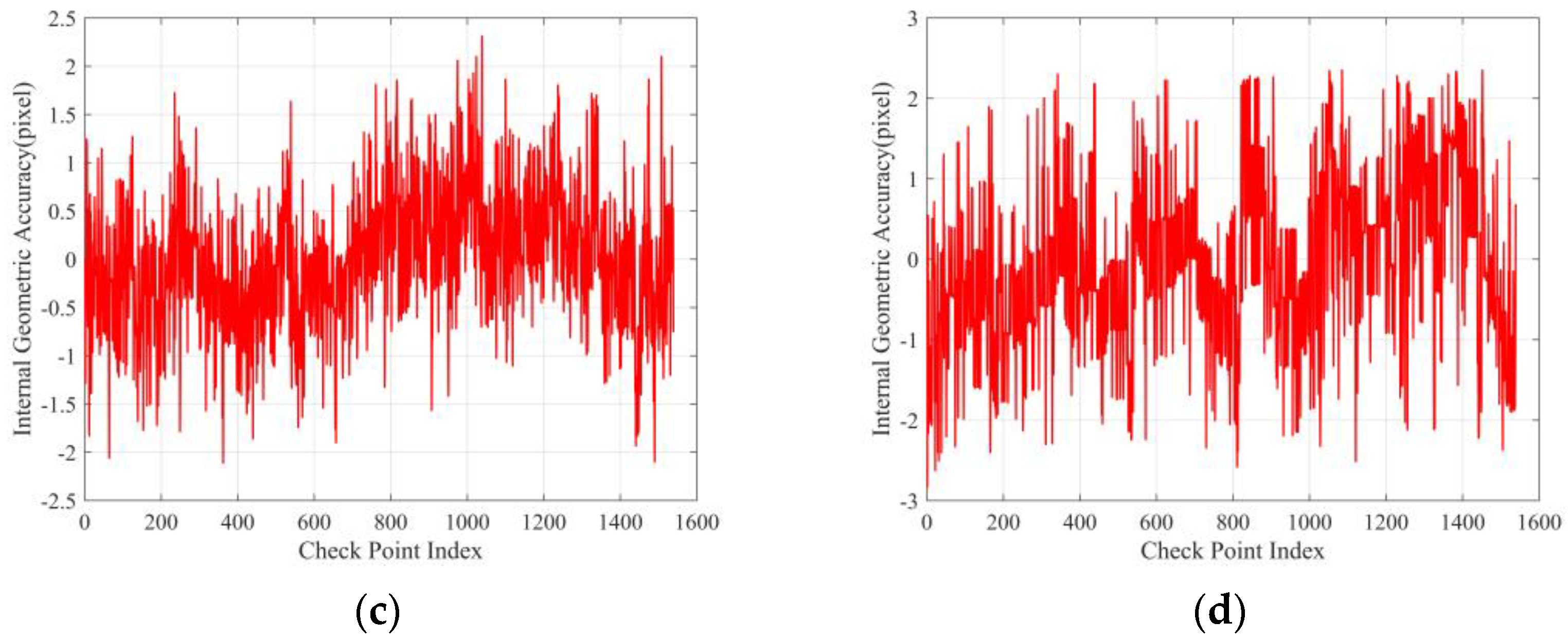

3.2.3. Analysis of Strip Geometric Correction Images’ Internal Geometric Accuracy

3.3. Algorithm Performance Analysis

3.3.1. GPU Parallel Acceleration Performance Analysis

3.3.2. Analysis of Time Consumption for Strip Geometric Correction

- Method 1: Geometric correction of the sequence frame images in the CPU environment, followed by the geometric stitching of the sub-frame images;

- Method 2: Geometric correction of the sequence frame images into long-strip images in the CPU environment;

- Method 3: Geometric correction of the sequence frame images into long-strip images in the GPU environment.

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Li, D.; Wang, M.; Yang, F.; Dai, R. Internet intelligent remote sensing scientific experimental satellite LuoJia3-01. Geo-Spat. Inf. Sci. 2023, 26, 257–261.

- Wang, M.; Guo, H.; Jin, W. On China’s earth observation system: Mission, vision and application. Geo-Spat. Inf. Sci. 2024, 1, 1–19.

- Li, D. On space-air-ground integrated earth observation network. Geo-Inf. Sci. 2012, 14, 419–425.

- Dial, G.; Bowen, H.; Gerlach, F.; Grodecki, J.; Oleszczuk, R. IKONOS satellite, imagery, and products. Remote Sens. Environ. 2003, 88, 23–36.

- Marí, R.; Ehret, T.; Anger, J.; de Franchis, C.; Facciolo, G. L1B+: A perfect sensor localization model for simple satellite stereo reconstruction from push-frame image strips. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2022, V-1-2022, 137–143.

- Wei, J.; Wang, X.; Huamg, C.; Zhuang, X. A Sequence Inter-frame Registration Technique Applying the NAR Motion Estimation Method. Spacecr. Recovery Remote Sens. 2017, 38, 86–93.

- Sun, Y.; Liao, N.; Liang, M. Image motion analysis and compensation algorithm for the frame-push-broom imaging spectrometer. In Proceedings of the 2012 International Conference on Systems and Informatics (ICSAI2012), Yantai, China, 19–20 May 2012.

- Nguyen, N.L.; Anger, J.; Davy, A.; Arias, P.; Facciolo, G. Self-supervised super-resolution for multi-exposure push-frame satellites. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022.

- Anger, J.; Ehret, T.; Facciolo, G. Parallax estimation for push-frame satellite imagery: Application to Super-Resolution and 3D surface modeling from Skysat products. In Proceedings of the IGARSS 2021—2021 IEEE International Geoscience and Remote Sensing Symposium, Brussels, Belgium, 11–16 July 2021.

- Ma, L.; Zhan, D.; Jiang, G.; Fu, S.; Jia, H.; Wang, X.; Huang, Z.; Zheng, J.; Hu, F.; Wu, W.; et al. Attitude-correlated frames approach for a star sensor to improve attitude accuracy under highly dynamic conditions. Appl. Opt. 2015, 54, 7559–7566.

- Yan, J.; Jiang, J.; Zhang, G. Dynamic imaging model and parameter optimization for a star tracker. Opt. Express 2016, 24, 5961–5983.

- Wang, Z.; Jiang, J.; Zhang, G. Global field-of-view imaging model and parameter optimization for high dynamic star tracker. Opt. Express 2018, 26, 33314–33332.

- Wang, J.; Xiong, K.; Zhou, H. Low-frequency periodic error identification and compensation for Star Tracker Attitude Measurement. Chin. J. Aeronaut. 2012, 25, 615–621.

- Wang, Y.; Dong, Z.; Wang, M. Attitude low-frequency error spatiotemporal compensation method for VIMS imagery of gaofen-5b satellite. IEEE Geosci. Remote Sens. Lett. 2024, 21, 1–5.

- Li, X.; Yang, L.; Su, X.; Hu, Z.; Chen, F. A correction method for thermal deformation positioning error of geostationary optical payloads. IEEE Trans. Geosci. Remote Sens. 2019, 57, 7986–7994.

- Radhadevi, P.V.; Solanki, S.S. In-flight geometric calibration of different cameras of IRS-P6 using a physical sensor model. Photogramm. Rec. 2008, 23, 69–89.

- Yang, B.; Pi, Y.; Li, X.; Yang, Y. Integrated geometric self-calibration of stereo cameras onboard the ziyuan-3 satellite. ISPRS J. Photogramm. Remote Sens. 2020, 162, 173–183.

- Cao, J.; Shang, H.; Zhou, N.; Xu, S. In-orbit geometric calibration of multi-linear array optical remote sensing satellites with TIE Constraints. Opt. Express 2022, 30, 28091–28111.

- Guo, B.; Pi, Y.; Wang, M. Sensor Correction method based on image space consistency for planar array sensors of optical satellite. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–15.

- Devaraj, C.; Shah, C.A. Automated geometric correction of multispectral images from high resolution CCD camera (HRCC) on-board CBERS-2 and cbers-2b. ISPRS J. Photogramm. Remote Sens. 2014, 89, 13–24.

- Toutin, T. Geometric processing of remote sensing images: Models, algorithms and methods. Int. J. Remote Sens. 2004, 25, 1893–1924.

- Xie, G.; Wang, M.; Zhang, Z.; Xiang, S.; He, L. Near real-time automatic sub-pixel registration of Panchromatic and multispectral images for Pan-Sharpening. Remote Sens. 2021, 13, 3674.

- Zhang, L.; Zhang, J.; Chen, X. Block-adjustment with sparse GCPS and spot-5 hrs imagery for the project of West China topographic mapping at 1:50,000 scale. In Proceedings of the 2008 International Workshop on Earth Observation and Remote Sensing Applications (EORSA), Beijing, China, 30 June–2 July 2008.

- Cao, J.; Zhang, Z.; Jin, S.; Chang, X. Geometric stitching of a haiyang-1c Ultra Violet Imager with a distorted virtual camera. Opt. Express 2020, 28, 14109–14126.

- Grodecki, J.; Dial, G. Block adjustment of high-resolution satellite images described by rational polynomials. Photogramm. Eng. Remote Sens. 2003, 69, 59–68.

- Wang, M.; Cheng, Y.; Chang, X.; Jin, S.; Zhu, Y. On-orbit geometric calibration and geometric quality assessment for the high-resolution geostationary optical satellite gaofen4. ISPRS J. Photogramm. Remote Sens. 2017, 125, 63–77.

- Yang, B.; Wang, M.; Xu, W.; Li, D.; Gong, J.; Pi, Y. Large-scale block adjustment without use of ground control points based on the compensation of geometric calibration for ZY-3 Images. ISPRS J. Photogramm. Remote Sens. 2017, 134, 1–14.

- Pi, Y.; Yang, B.; Li, X.; Wang, M.; Cheng, Y. Large-scale planar block adjustment of GAOFEN1 WFV images covering most of mainland China. IEEE Trans. Geosci. Remote Sens. 2019, 57, 1368–1379.

- Christophe, E.; Michel, J.; Inglada, J. Remote Sensing Processing: From multicore to GPU. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2011, 4, 643–652.

- Fu, Q.; Tong, X.; Liu, S.; Ye, Z.; Jin, Y.; Wang, H.; Hong, Z. GPU-Accelerated PCG method for the block adjustment of large-scale high-resolution optical satellite imagery without GCPS. Photogramm. Eng. Remote Sens. 2023, 89, 211–220.

- Fang, L.; Wang, M.; Li, D.; Pan, J. CPU/GPU near real-time preprocessing for ZY-3 satellite images: Relative radiometric correction, MTF compensation, and geocorrection. ISPRS J. Photogramm. Remote Sens. 2014, 87, 229–240.

- Tong, X.; Liu, S.; Weng, Q. Bias-corrected rational polynomial coefficients for high accuracy geo-positioning of QuickBird stereo imagery. ISPRS J. Photogramm. Remote Sens. 2010, 65, 218–226.

- Zhang, Z.; Qu, Z.; Liu, S.; Li, D.; Cao, J.; Xie, G. Expandable on-board real-time edge computing architecture for Luojia3 Intelligent Remote Sensing Satellite. Remote Sens. 2022, 14, 3596.

- Oi, L.; Liu, W.; Liu, D. Orb-based fast anti-viewing image feature matching algorithm. In Proceedings of the 2018 Chinese Automation Congress (CAC), Xi’an, China, 30 November–2 December 2018.

- Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the IEEE International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011.

- Kim, C.-W.; Sung, H.S.; Yoon, H.-J. Camera Image Stitching using Feature Points and Geometrical Features. Int. J. Multimedia Ubiquitous Eng. 2015, 10, 289–296.

| Satellite Platform Type | Indicator | Camera Image Type | Indicator |
|---|---|---|---|
| overall weight | 245 kg | resolution | 0.7 m |
| orbit altitude | 500 km | frame rate | 2 Hz |
| orbit type | sun-sync | imaging width | 5 km |
| attitude accuracy | 0.05° (3σ) | imaging time | 30–60 s |
| attitude stability | 0.005° (3σ) | imaging length | 20–40 km |

| DataId | Image Time | Center Position | Roll Angle (°) | Pitch Angle (°) | Yaw Angle (°) |
|---|---|---|---|---|---|
| Data1 | 26 Feb 2023 | E106.52_N29.54 | −22.7~−16.2 | 13.4~−20.8 | −7.6~−6.7 |
| Data2 | 27 Feb 2023 | E93.51_N42.780 | −8.8~0.4 | 13.1~−24.9 | −9.6~−9.3 |
| Data3 | 10 Mar 2023 | E89.20_N42.91 | 9.0~15.8 | 11.7~14.7 | −10.6~−10.9 |
| Data4 | 18 Mar 2023 | E65.52_N44.61 | −6.8~3.4 | 24.9~−15.0 | −10.0~−9.95 |

| DataId | Control Points | Maximum Error, Across-Track (Pixel) | Maximum Error, Along-Track (Pixel) | Maximum Error, Plane (Pixel) | Mean Squares Error, Across-Track (Pixel) | Mean Squares Error, Along-Track (Pixel) | Mean Squares Error, Plane (Pixel) |
|---|---|---|---|---|---|---|---|
| Data1 | 1031 | 2.47 | 2.16 | 3.28 | 1.05 | 1.01 | 1.45 |
| Data2 | 1538 | 2.63 | 2.43 | 3.58 | 0.71 | 1.05 | 1.26 |
| Data3 | 1003 | 2.69 | 2.68 | 3.79 | 0.89 | 1.14 | 1.45 |
| Data4 | 7306 | 2.96 | 3.29 | 4.43 | 0.95 | 0.91 | 1.32 |
| Mean value | | | | | 0.90 | 1.03 | 1.37 |

| Processing Steps | Sub-Steps | CPU Time/s | GPU Time/s | Speed-Up Ratio |
|---|---|---|---|---|
| Orientation | image matching; solving RPCs | 3.318 | 0.135 | 24.57 |
| Rectification | point mapping; pixel resampling | 35.214 | 0.255 | 138.09 |
| CUDA management | | — | 1.128 | — |
| Total time | | 38.532 | 1.518 | 25.383 |
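The totals and ratios in the timing table above can be reproduced with a few lines of arithmetic. This sketch only restates the table's numbers and assumes that the speed-up ratio is CPU time divided by GPU time; the variable and function names are illustrative.

```python
# Timings copied from the table above, in seconds.
cpu = {"orientation": 3.318, "rectification": 35.214}
gpu = {"orientation": 0.135, "rectification": 0.255, "cuda_management": 1.128}


def speed_up(cpu_seconds, gpu_seconds):
    """Speed-up ratio, assumed here to be CPU time over GPU time."""
    return cpu_seconds / gpu_seconds


cpu_total = sum(cpu.values())
gpu_total = sum(gpu.values())
total_speed_up = speed_up(cpu_total, gpu_total)

# Share of the GPU run spent on CUDA management rather than on the two kernels.
overhead_share = gpu["cuda_management"] / gpu_total
```

On these numbers, CUDA management accounts for roughly three quarters of the GPU total, which is why the overall ratio is far lower than the rectification step's ratio on its own.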

| Frames Count | Method 1 Total Time/s | Method 1 Average Time/s | Method 2 Total Time/s | Method 2 Average Time/s | Method 3 Total Time/s | Method 3 Average Time/s | Speed-Up Ratio |
|---|---|---|---|---|---|---|---|
| 1 | 75.38 | 75.38 | 39.53 | 39.53 | 2.13 | 2.13 | 35.47 |
| 10 | 714.68 | 71.47 | 366.57 | 36.66 | 13.69 | 1.37 | 52.22 |
| 20 | 1423.66 | 71.18 | 735.91 | 36.80 | 25.92 | 1.30 | 54.94 |
| 30 | 2159.91 | 72.00 | 1090.84 | 36.36 | 37.08 | 1.24 | 58.26 |
| 40 | 2889.49 | 72.24 | 1473.24 | 36.83 | 51.96 | 1.30 | 55.62 |
| 50 | 3564.01 | 71.28 | 1811.80 | 36.24 | 63.53 | 1.27 | 56.10 |
| 60 | 4349.01 | 72.48 | 2187.23 | 36.45 | 76.19 | 1.27 | 57.08 |

| DataId | Model | Time/s | Across-Track (Pixel) | Along-Track (Pixel) | Plane (Pixel) |
|---|---|---|---|---|---|
| Data1 | RFM | 457.818 | 0.965 | 1.083 | 1.451 |
| Data1 | BATM | 35.132 | 0.991 | 1.126 | 1.500 |
| Data1 | BPTM | 35.999 | 0.987 | 1.105 | 1.482 |
| Data2 | RFM | 461.705 | 0.667 | 0.834 | 1.068 |
| Data2 | BATM | 36.408 | 0.703 | 1.001 | 1.223 |
| Data2 | BPTM | 36.244 | 0.686 | 0.982 | 1.198 |
| Data3 | RFM | 462.010 | 0.868 | 1.026 | 1.344 |
| Data3 | BATM | 33.227 | 0.913 | 1.185 | 1.496 |
| Data3 | BPTM | 33.219 | 0.889 | 1.156 | 1.458 |
| Data4 | RFM | 462.432 | 0.884 | 0.888 | 1.253 |
| Data4 | BATM | 35.390 | 1.034 | 0.965 | 1.414 |
| Data4 | BPTM | 35.013 | 0.986 | 0.892 | 1.330 |
| Average | RFM | 460.991 | 0.846 | 0.958 | 1.279 |
| Average | BATM | 35.039 | 0.910 | 1.069 | 1.408 |
| Average | BPTM | 35.119 | 0.887 | 1.034 | 1.367 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Dai, R.; Wang, M.; Ye, Z. Near-Real-Time Long-Strip Geometric Processing without GCPs for Agile Push-Frame Imaging of LuoJia3-01 Satellite. Remote Sens. 2024, 16, 3281. https://doi.org/10.3390/rs16173281
