Target Motion Parameters Estimation by Full-Plane Hyperbola-Warping Transform with a Single Hydrophone

Abstract

1. Introduction

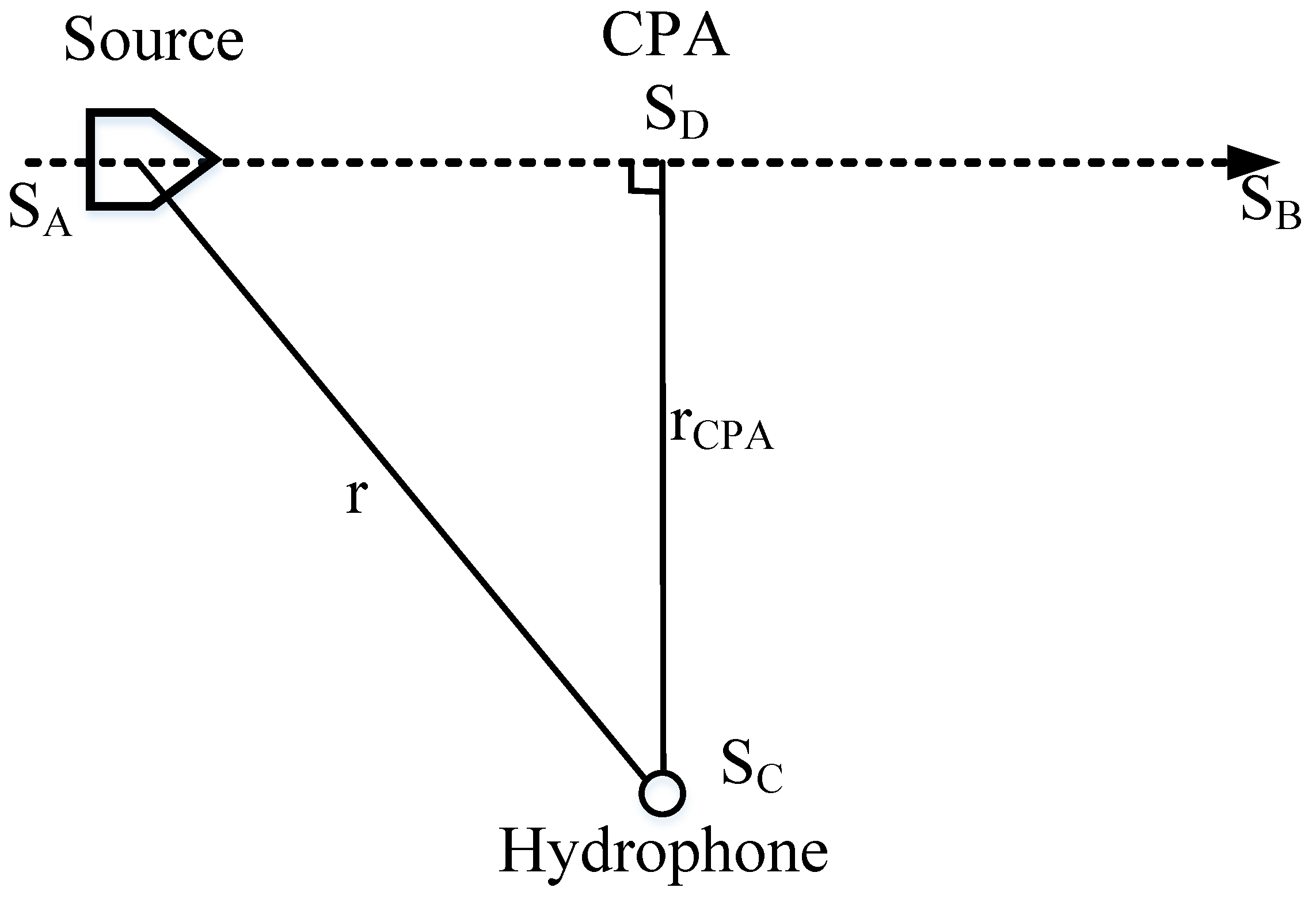

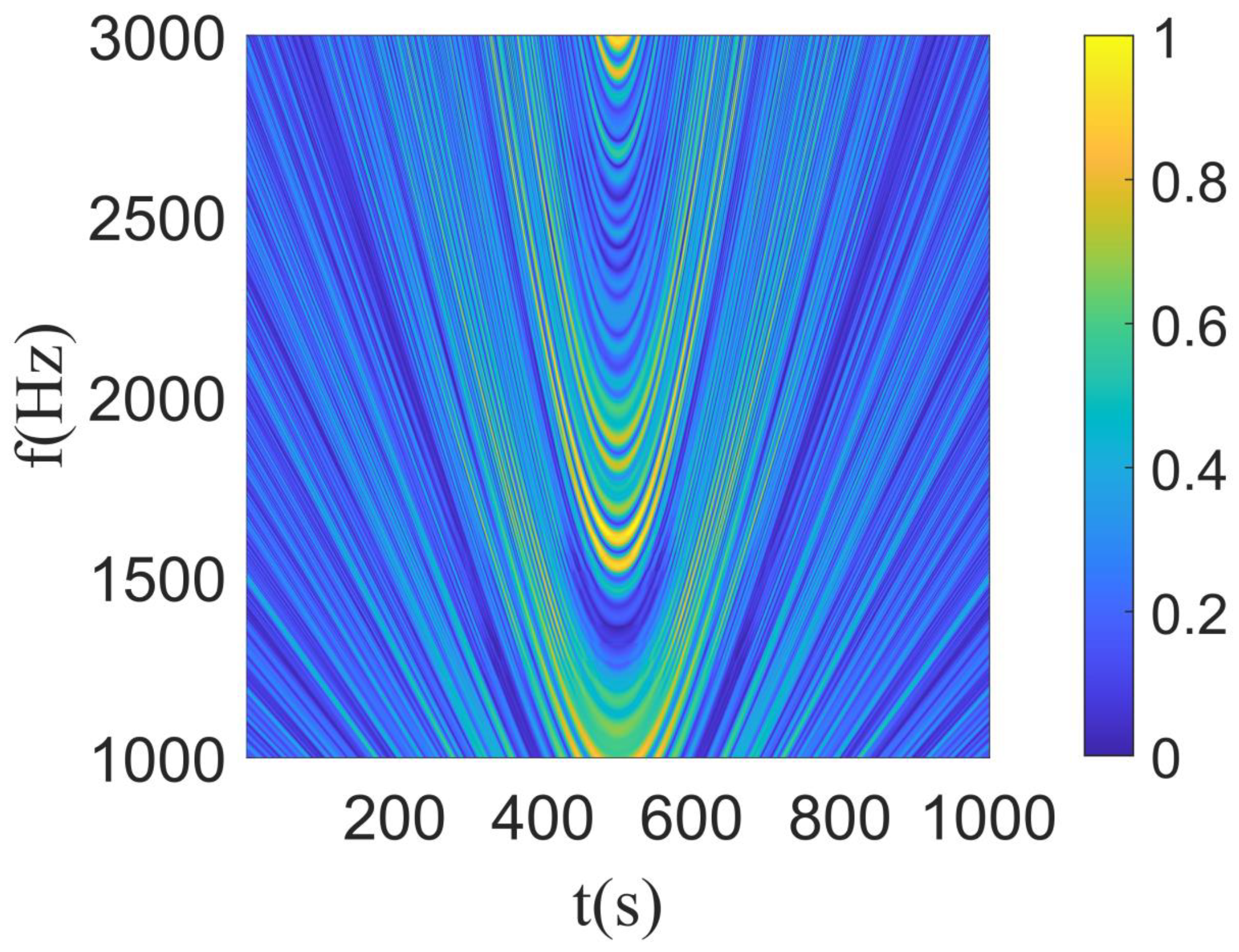

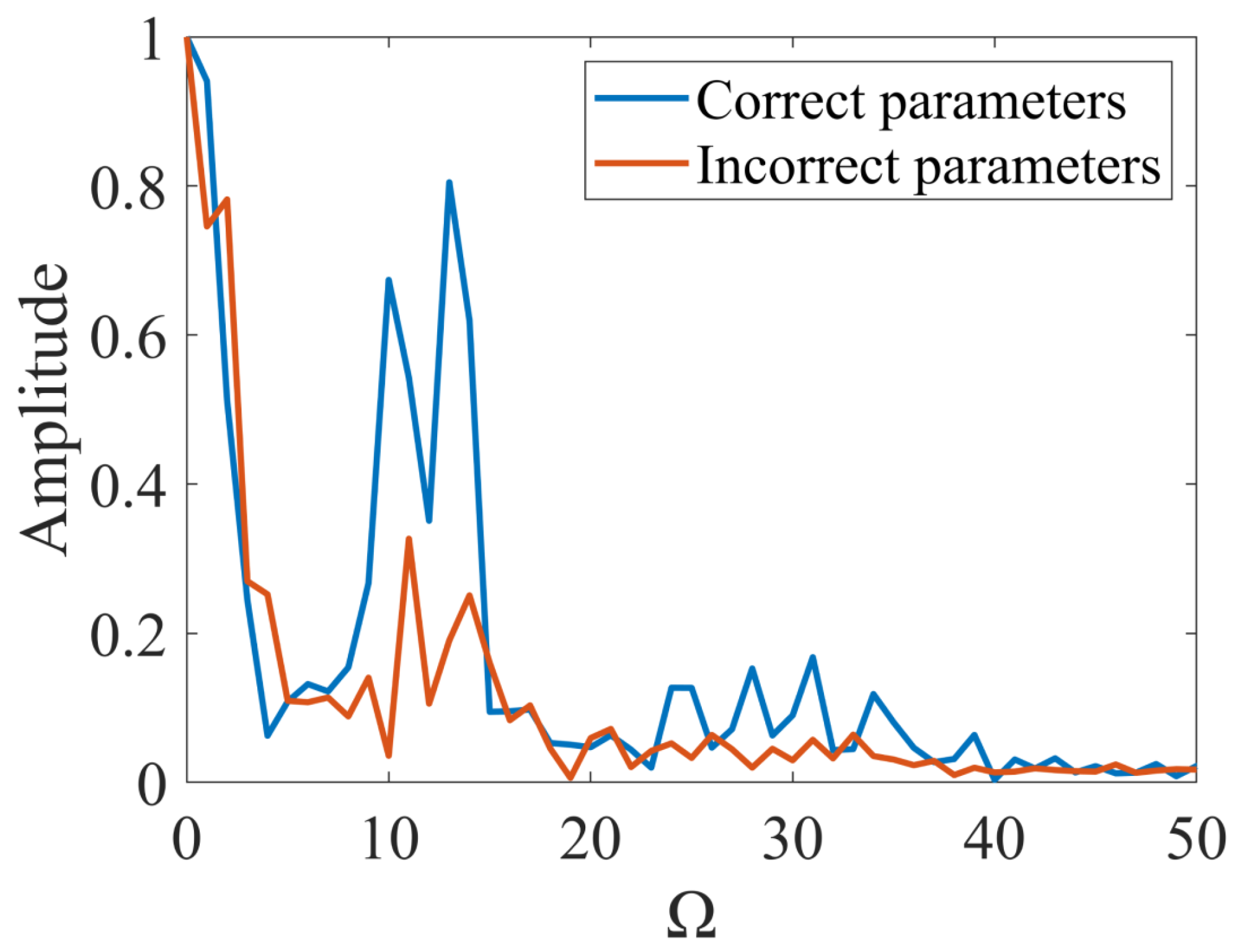

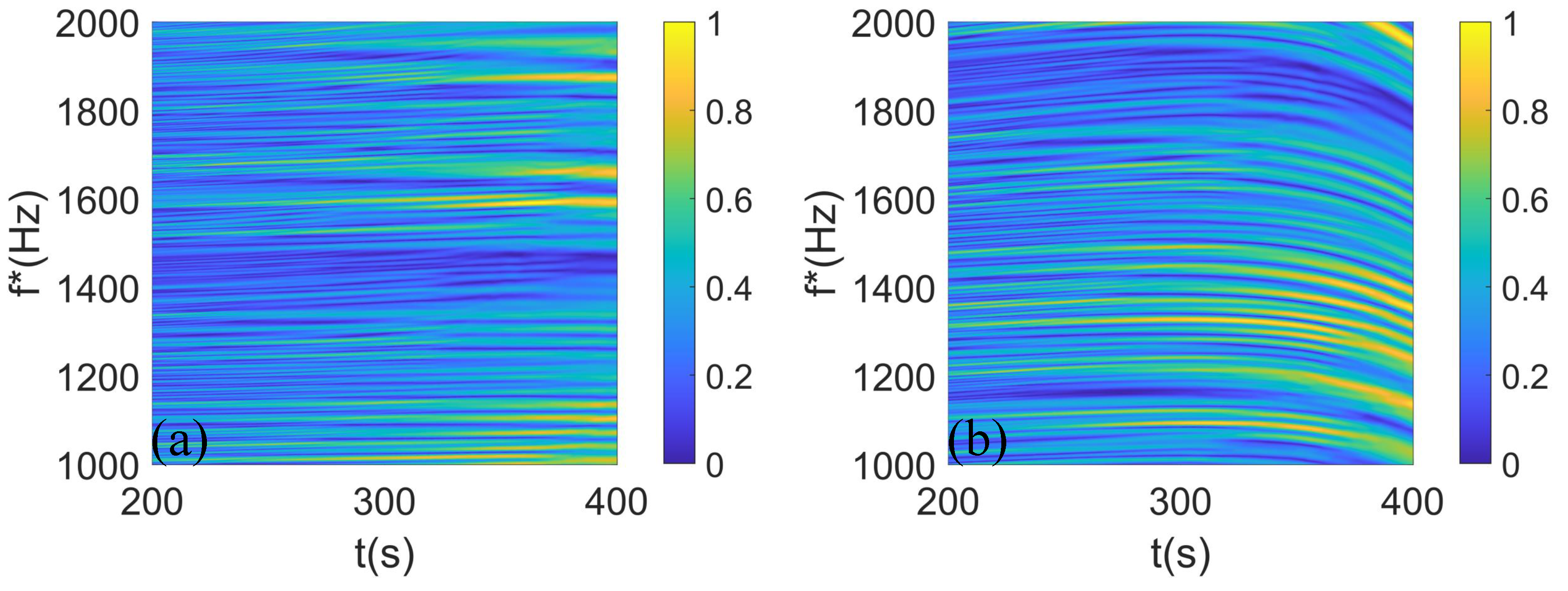

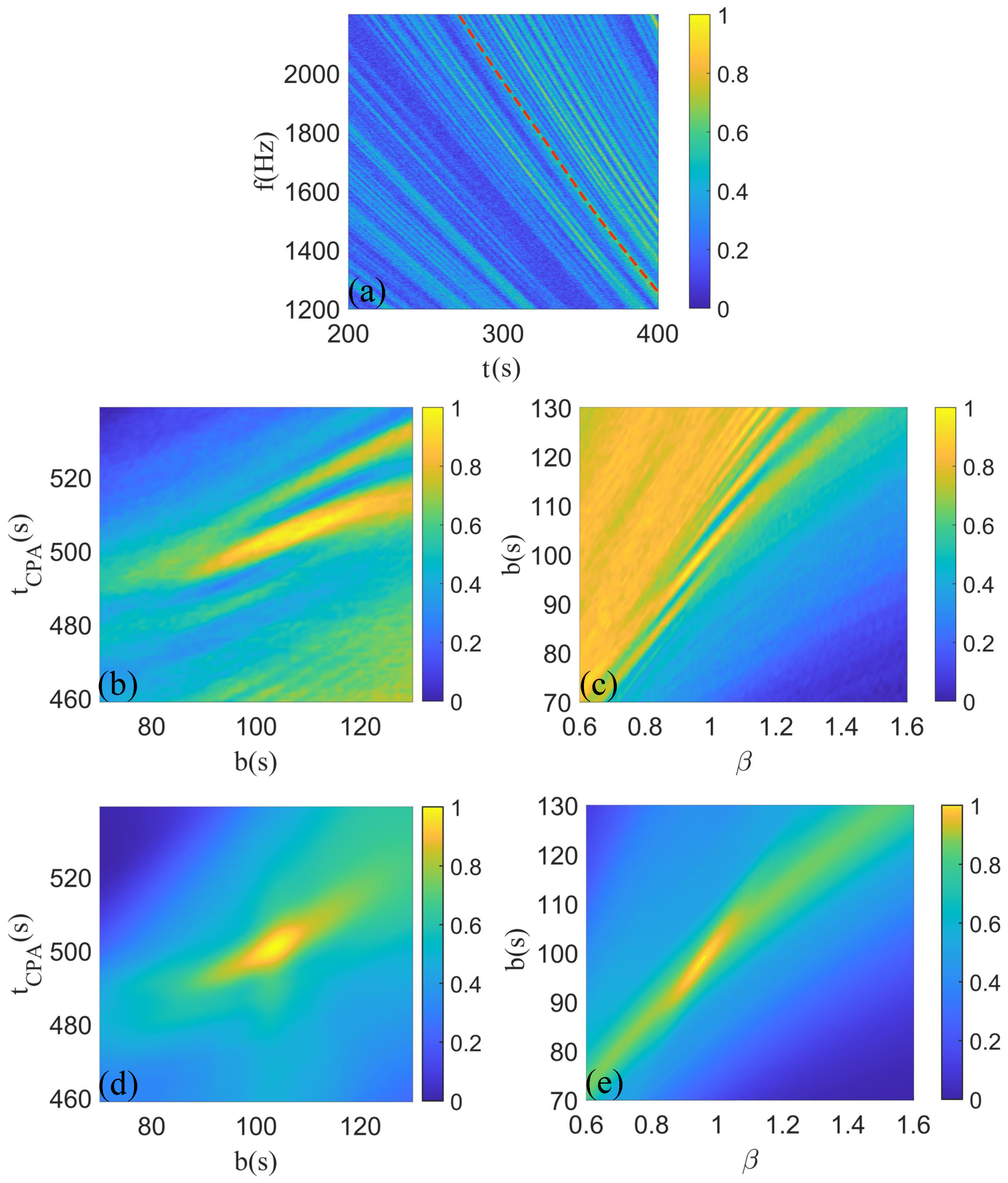

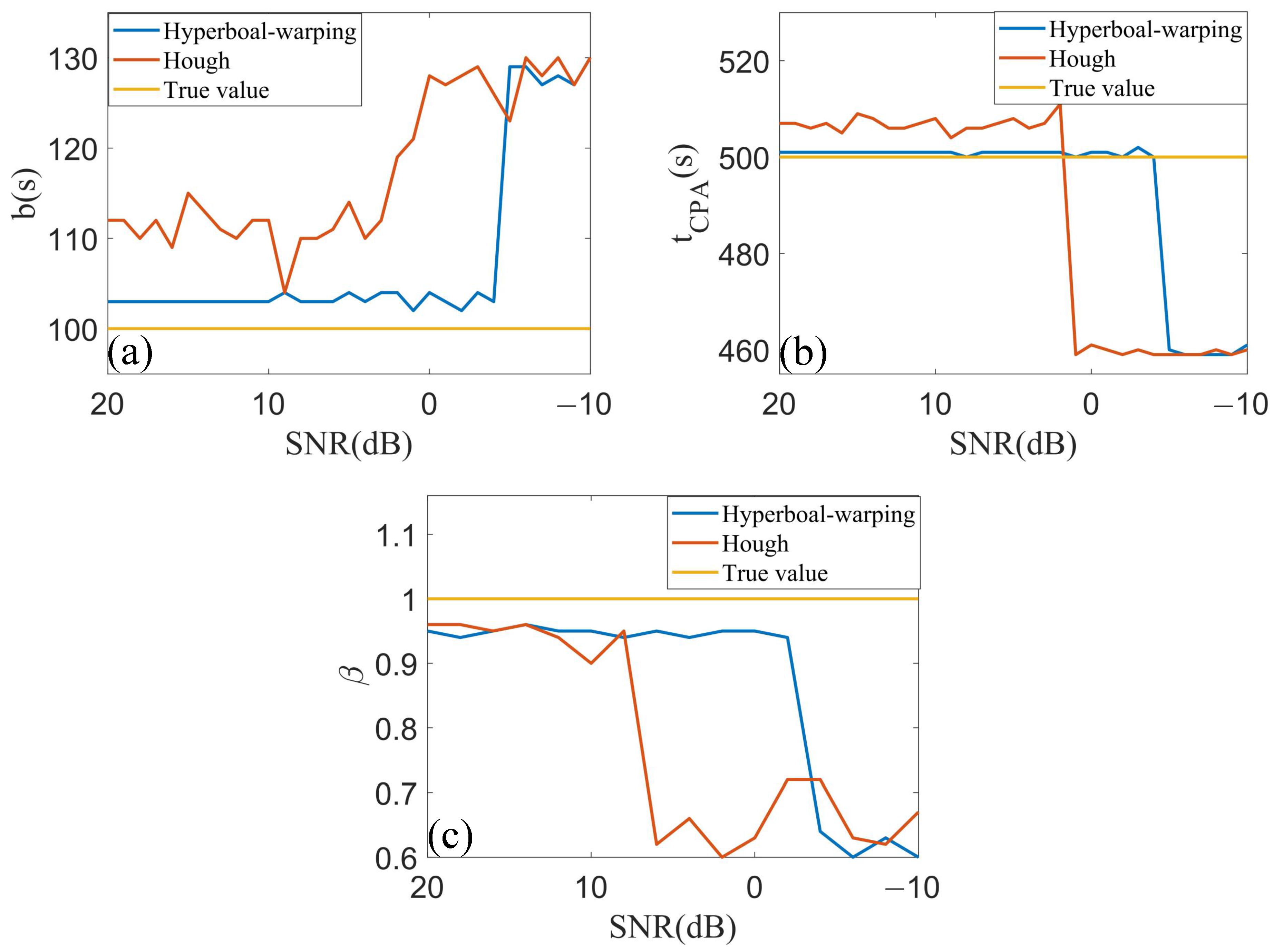

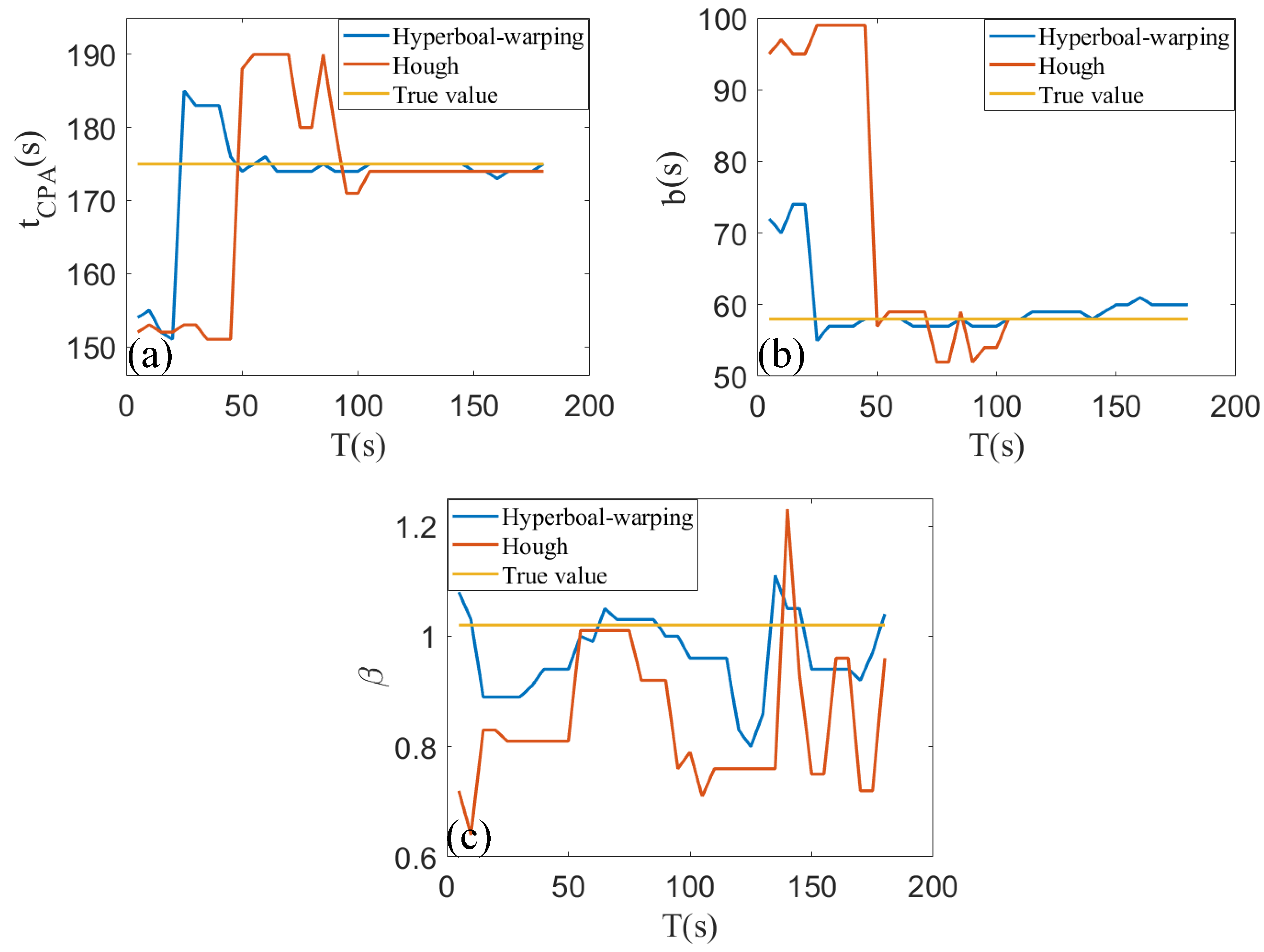

2. Motion Parameter Estimation

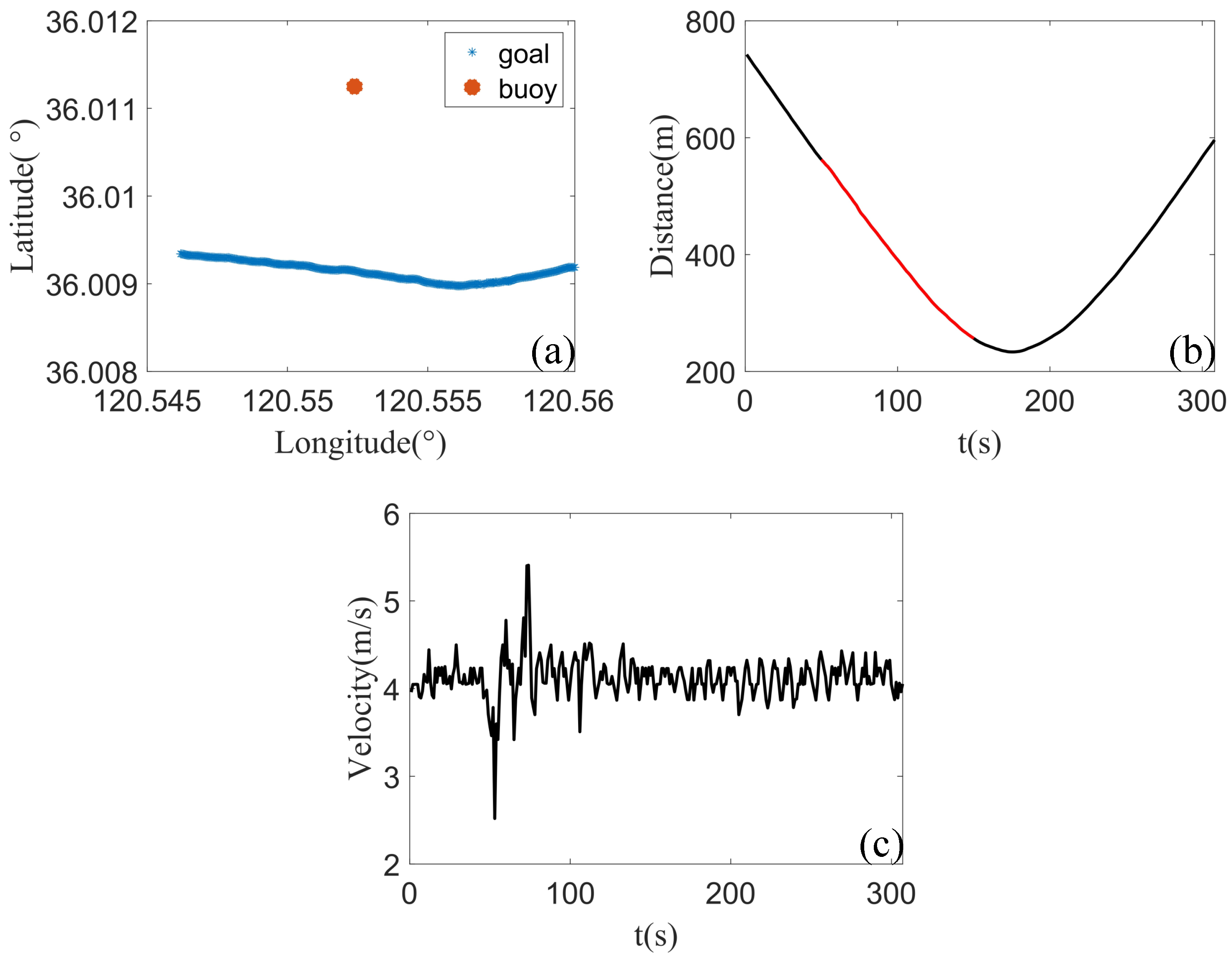

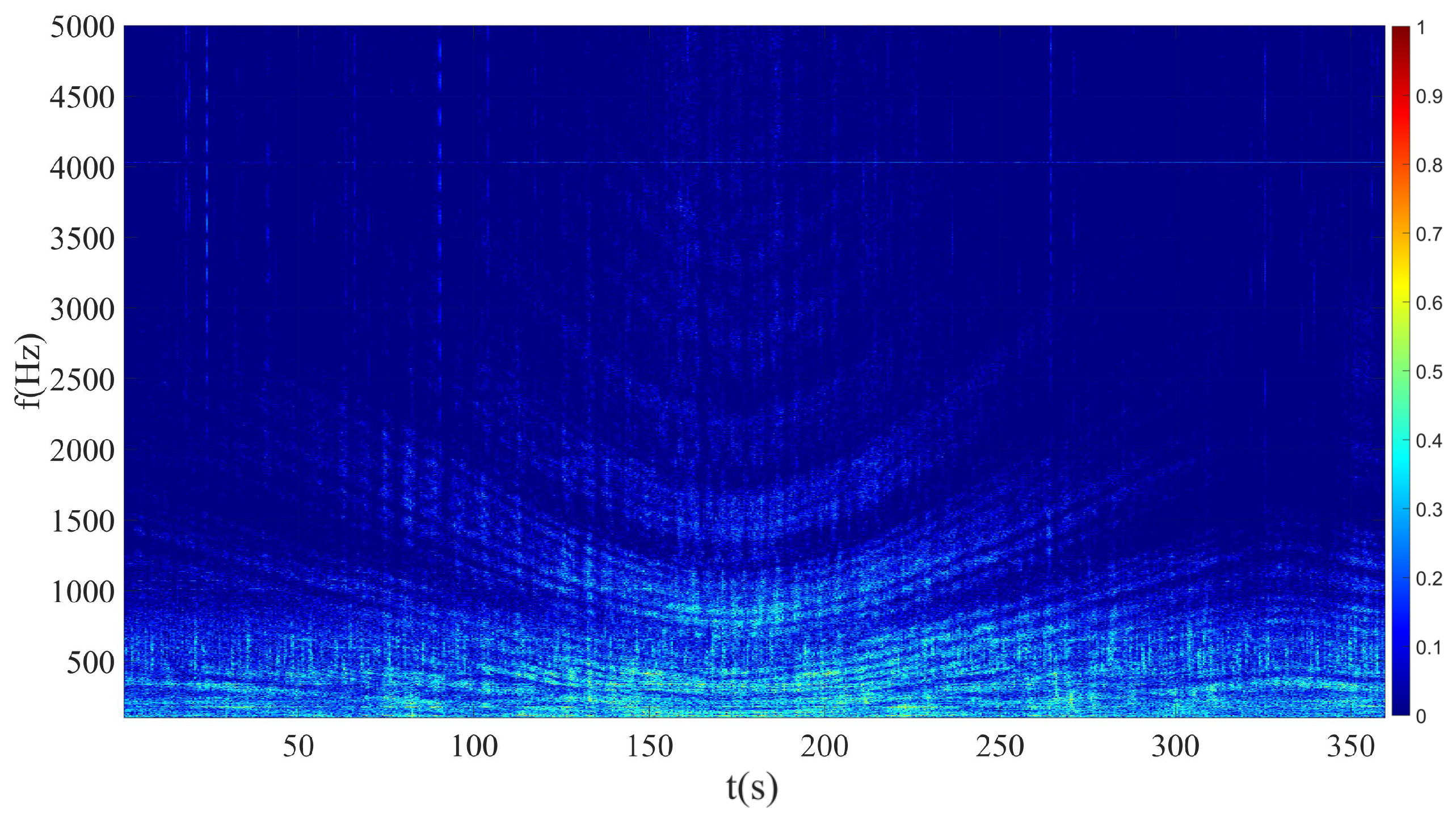

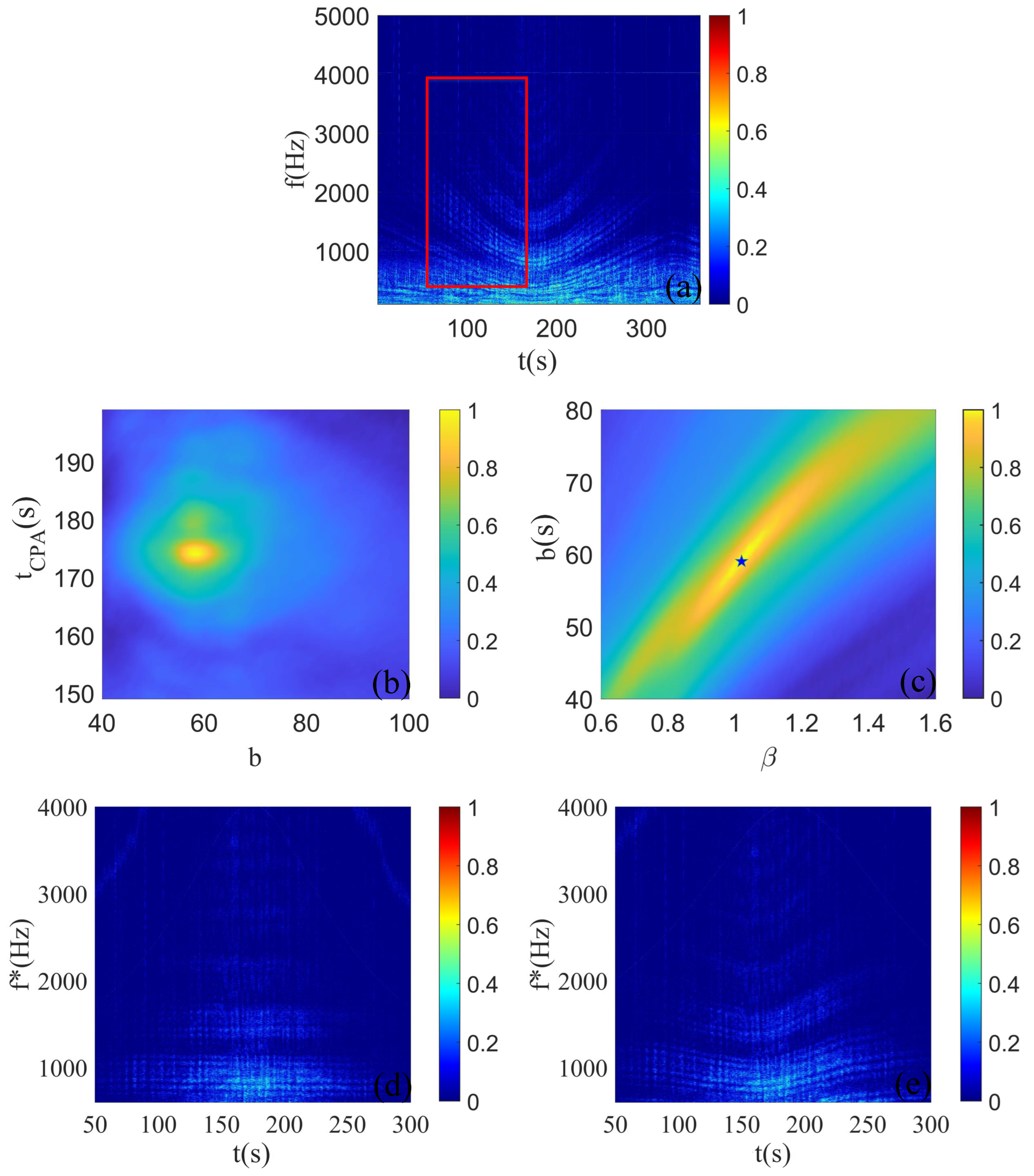

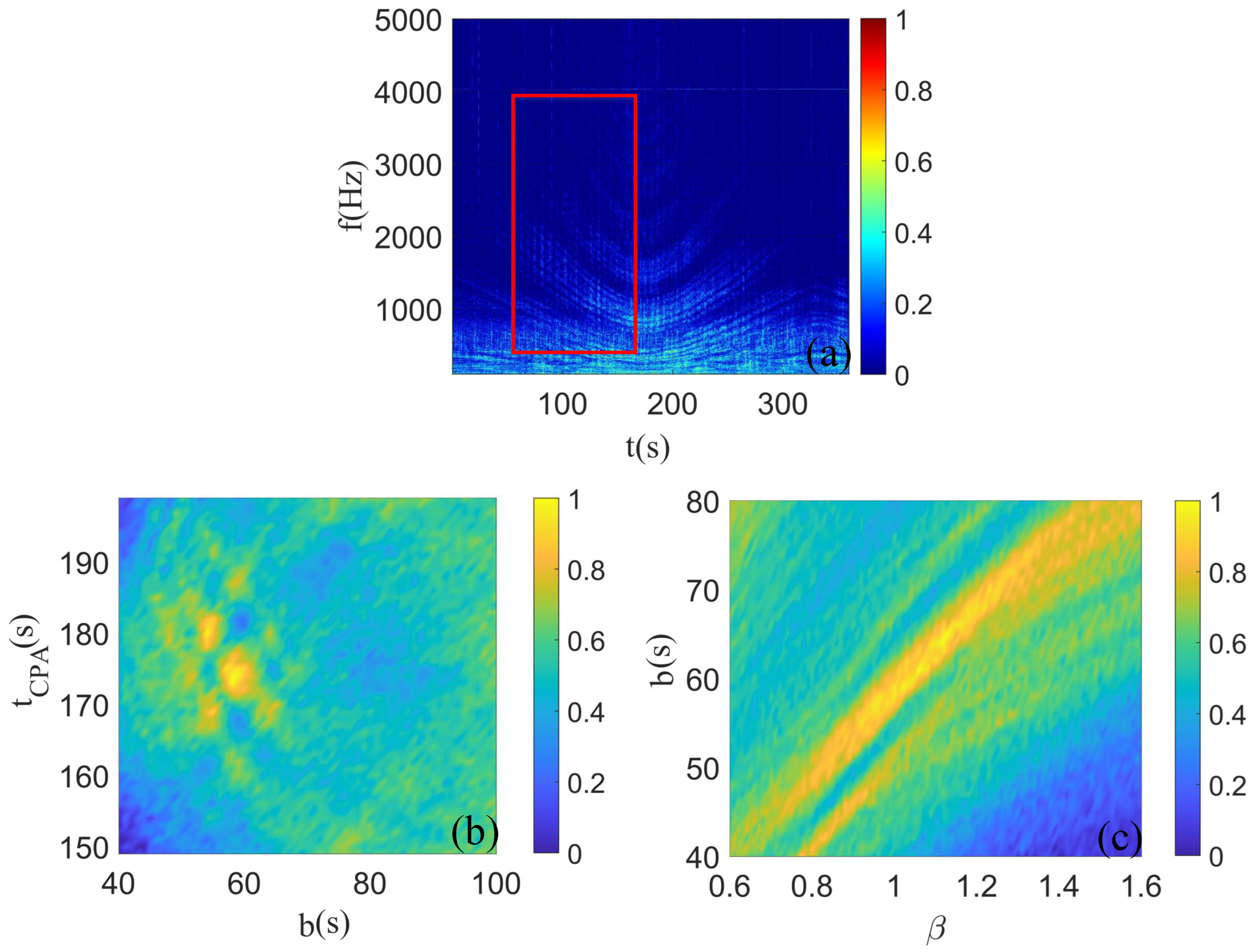

2.1. Theoretical Analysis

2.2. Algorithm Description

1. Apply a short-time Fourier transform (STFT) to the received signal to obtain its time–frequency spectrum , and select the time window and frequency window for parameter estimation within this spectrum.

2. Define the search grids for the parameters b, and , denoted by , and .

3. Select a parameter combination , from the search grids, and apply the hyperbola-warping transform to the sound intensity in the range to obtain .

4. Calculate the average of over the time window, denoted by .

5. Calculate the spectrum of , denoted by .

6. Define the cost function as the standard deviation of , denoted by .

7. Repeat steps 3–6 until every parameter combination in the search grids has been evaluated. The combination at which the cost function reaches its maximum is taken as the estimate of the motion parameters, denoted by .
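The grid search in the steps above can be sketched in Python as follows. This is a toy sketch under stated assumptions, not the authors' implementation. Because the warping operator and the parameter symbols are missing from the text, `toy_hyperbola_warp` is a hypothetical stand-in. It assumes a straight, constant-speed pass with normalized range ρ(t) = sqrt(1 + ((t − t_cpa)/tau)²), where t_cpa is the closest-approach time and tau = r0/v (closest-approach range divided by speed). It also assumes striations that follow f ∝ ρ(t), which corresponds to a waveguide invariant of 1. The names `t_cpa`, `tau`, `synthetic_intensity` and the other helpers are illustrative only. Step 1 (the STFT) is taken as already done. Interpolation is linear with clamping at the band edges, and the mean of the time-averaged curve is removed before the DFT. These are our choices, not details from the source.

```python
import cmath
import math
import statistics
from itertools import product


def interp_row(row, freqs, f):
    """Linearly interpolate one time slice at frequency f.

    Assumes a uniform, increasing frequency grid; queries outside the band
    are clamped to the nearest edge bin.
    """
    if f <= freqs[0]:
        return row[0]
    if f >= freqs[-1]:
        return row[-1]
    df = freqs[1] - freqs[0]
    k = min(int((f - freqs[0]) / df), len(freqs) - 2)
    w = (f - freqs[k]) / df
    return (1.0 - w) * row[k] + w * row[k + 1]


def normalized_range(t, t_cpa, tau):
    """r(t) / r0 for a straight, constant-speed track (tau = r0 / v)."""
    return math.sqrt(1.0 + ((t - t_cpa) / tau) ** 2)


def toy_hyperbola_warp(intensity, times, freqs, t_cpa, tau):
    """HYPOTHETICAL stand-in for the paper's warping operator (step 3).

    Reads each time slice at f * rho(t), so striations that follow
    f proportional to rho(t) become time-invariant when (t_cpa, tau) are right.
    """
    warped = []
    for row, t in zip(intensity, times):
        rho = normalized_range(t, t_cpa, tau)
        warped.append([interp_row(row, freqs, f * rho) for f in freqs])
    return warped


def cost(warped):
    """Steps 4-6: time average, magnitude spectrum, standard deviation."""
    n_t = len(warped)
    avg = [sum(col) / n_t for col in zip(*warped)]  # one value per frequency bin
    mu = sum(avg) / len(avg)
    avg = [x - mu for x in avg]  # assumption: drop the DC term before the DFT
    n = len(avg)
    spec = [  # plain O(N^2) DFT magnitude, to stay dependency-free
        abs(sum(x * cmath.exp(-2j * math.pi * k * m / n) for m, x in enumerate(avg)))
        for k in range(n)
    ]
    return statistics.pstdev(spec)


def grid_search(intensity, times, freqs, t_cpa_grid, tau_grid, warp=toy_hyperbola_warp):
    """Step 7: evaluate every grid point and keep the one with the largest cost."""
    best_params, best_cost = None, -math.inf
    for t_cpa, tau in product(t_cpa_grid, tau_grid):
        c = cost(warp(intensity, times, freqs, t_cpa, tau))
        if c > best_cost:
            best_params, best_cost = (t_cpa, tau), c
    return best_params, best_cost


def synthetic_intensity(times, freqs, t_cpa, tau, cycles=8.0):
    """Toy spectrogram (not sea-trial data) with striations along f ~ rho(t)."""
    f_max = freqs[-1]
    out = []
    for t in times:
        rho = normalized_range(t, t_cpa, tau)
        out.append([1.0 + math.cos(2.0 * math.pi * cycles * f / (f_max * rho)) for f in freqs])
    return out


if __name__ == "__main__":
    times = [-1.0 + 0.1 * i for i in range(21)]  # time window, arbitrary units
    freqs = [float(j) for j in range(128)]       # frequency window, arbitrary units
    data = synthetic_intensity(times, freqs, t_cpa=0.0, tau=1.0)
    params, best = grid_search(data, times, freqs, [-0.5, 0.0, 0.5], [0.5, 1.0, 2.0])
    print("estimated (t_cpa, tau):", params, "cost:", round(best, 3))
```

With the correct (t_cpa, tau), most of the band in every warped time slice shows the same striation pattern. The time average therefore keeps a strong periodic component in frequency, and its spectrum is peaked, which raises the standard deviation. A wrong combination smears the striations across the average. The O(N²) DFT only keeps the sketch free of dependencies; a practical implementation would use an FFT.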

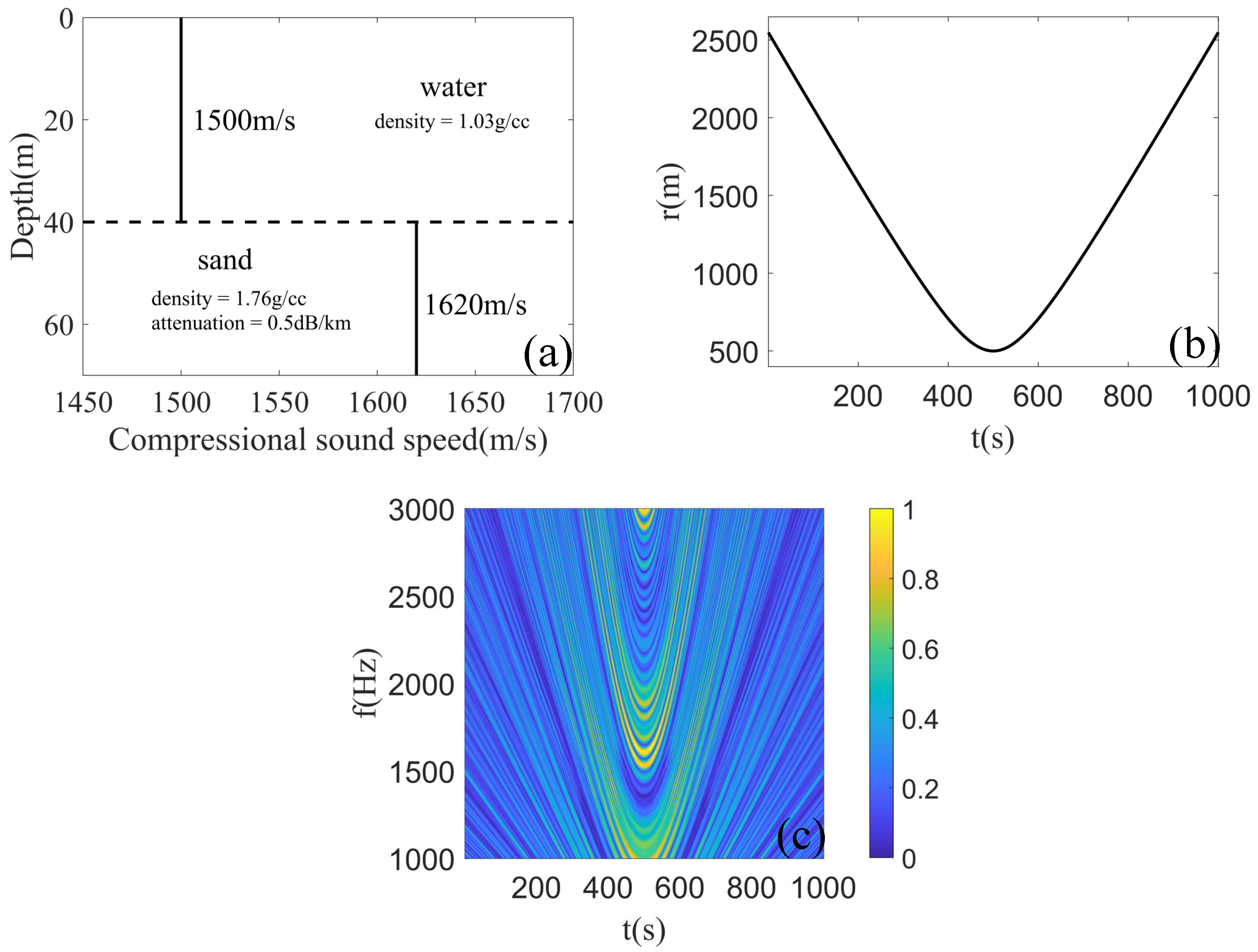

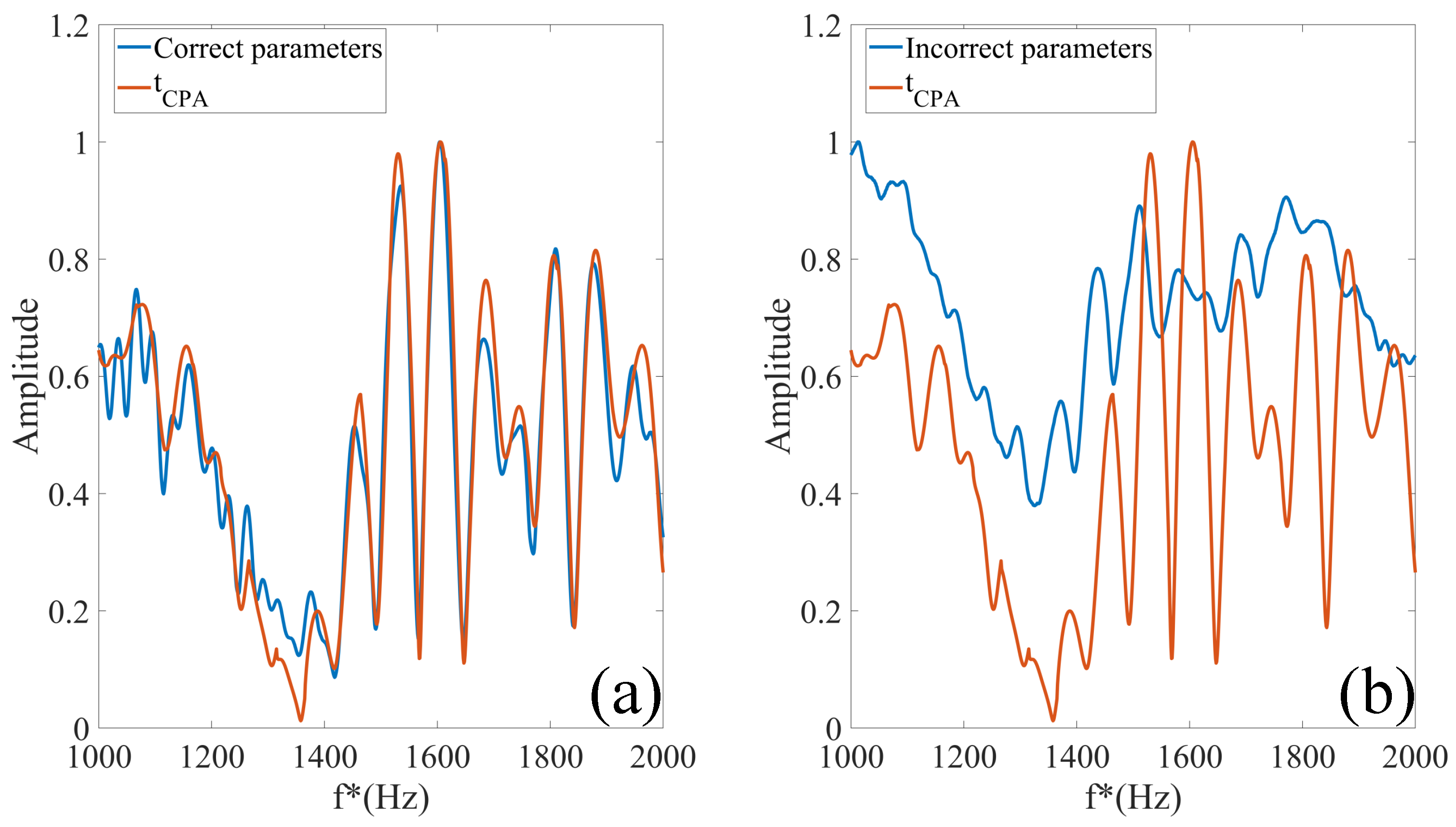

3. Simulation

4. Results of Sea Trial Experiments

5. Discussion and Concluding Remarks

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest



© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, Y.; Gao, B.; Chen, Z.; Yu, Y.; Wang, Z.; Gao, D. Target Motion Parameters Estimation by Full-Plane Hyperbola-Warping Transform with a Single Hydrophone. Remote Sens. 2024, 16, 3307. https://doi.org/10.3390/rs16173307
